docker

1, Consul

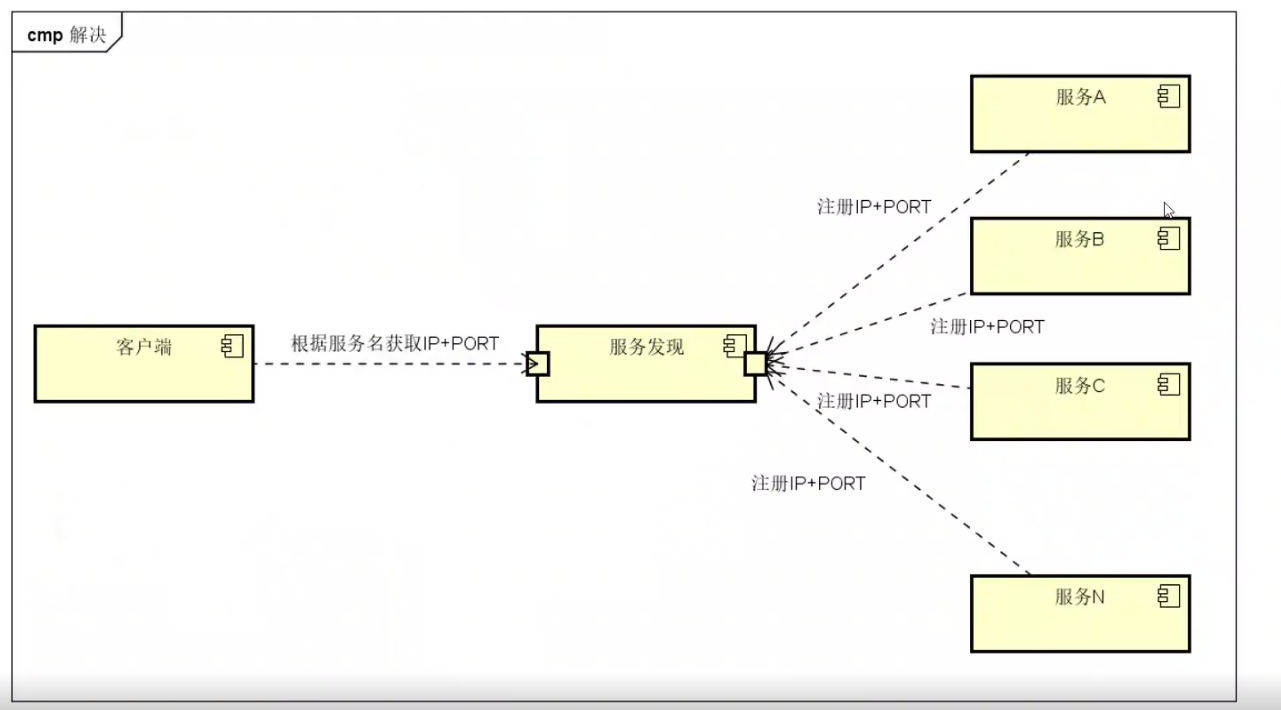

1. What is service registration and discovery

Service registration and discovery is an indispensable component of a microservice architecture. In the beginning, each service ran on a single node, which guaranteed neither high availability nor the capacity to handle load, and services called each other directly through their interfaces. When multi-node distributed architectures appeared, the first solution was to load balance in front of the services. With that approach, the front end must know the network location of every back-end service and record it in a configuration file. This raises a few problems:

● If you need to call back-end services A-N, you must configure the network locations of all N services, which is tedious.

● Whenever the network location of a back-end service changes, the configuration of every caller has to be changed.

Service registration and discovery exists to solve these problems. Back-end services A-N register their current network locations with the service discovery module, which records them as key-value pairs: the key is generally the service name and the value is IP:PORT. The service discovery module polls the back-end services at regular intervals to check that they are healthy and reachable. When the front end wants to call back-end services A-N, it asks the service discovery module for their network locations and then calls them. The front end no longer needs to record where the back-end services live, so the front end and back end are completely decoupled.
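The register-then-look-up flow described above can be sketched against Consul's agent and catalog HTTP endpoints. This is only a minimal sketch: it assumes a Consul agent reachable at 127.0.0.1:8500, and the function names, the `CONSUL_ADDR` variable, and the sample service `web` are hypothetical names chosen for illustration.

```shell
#!/bin/sh
# Sketch: register a service with a Consul agent, then look it up.
# CONSUL_ADDR, the function names, and the sample service are assumptions.
CONSUL_ADDR="${CONSUL_ADDR:-127.0.0.1:8500}"

# Build the JSON body for PUT /v1/agent/service/register.
# $1 = service name (the key), $2 = IP and $3 = port (the value)
service_payload() {
    printf '{"Name": "%s", "Address": "%s", "Port": %s}\n' "$1" "$2" "$3"
}

# Register the service with the agent (needs a running agent).
register_service() {
    service_payload "$1" "$2" "$3" |
        curl -s -X PUT --data @- "http://${CONSUL_ADDR}/v1/agent/service/register"
}

# Ask the catalog where the instances of a service live (needs a running agent).
lookup_service() {
    curl -s "http://${CONSUL_ADDR}/v1/catalog/service/$1"
}

# Offline demonstration: print the payload that would be sent.
service_payload web 192.168.100.130 83
```

With an agent running, `register_service web 192.168.100.130 83` followed by `lookup_service web` would perform the round trip; the caller only ever needs the service name.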

2. What is Consul

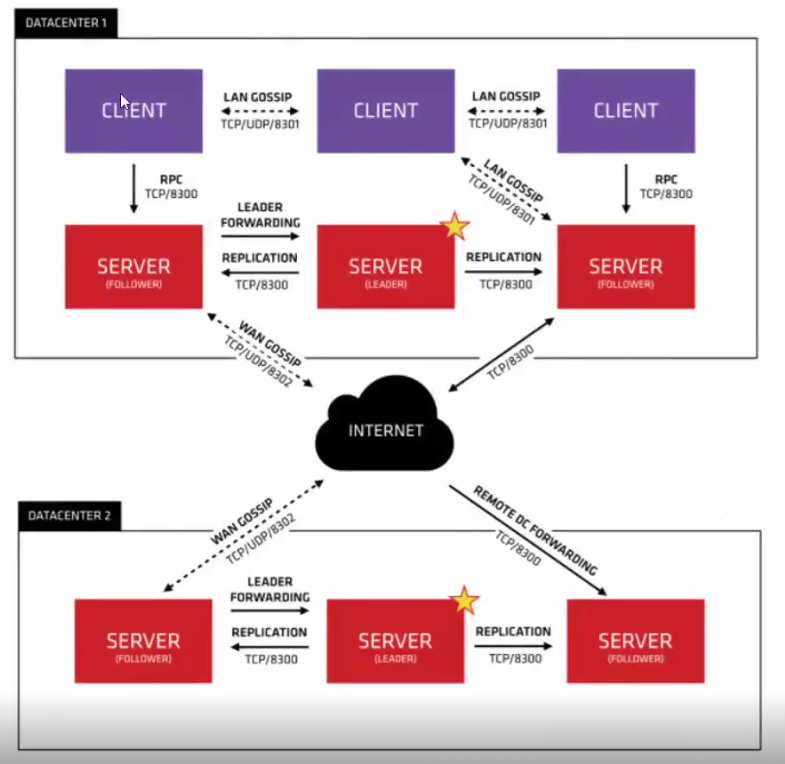

Consul is an open-source service management tool developed by HashiCorp in Go. It supports multiple data centers, distributed high availability, service discovery, and configuration sharing, and uses the Raft algorithm to keep the service highly available. It has a built-in service registration and discovery framework, an implementation of a distributed consensus protocol, health checks, Key/Value storage, and a multi-data-center scheme, so it no longer depends on other tools such as ZooKeeper. Deployment is simple: there is a single runnable binary. Every node runs an agent, which has two modes: server and client. The official recommendation is 3 or 5 server nodes per data center, to keep the data safe and to ensure that server leader election can proceed correctly.

In client mode, all services registered with the current node are forwarded to a server node, and the agent itself does not persist this information.

In server mode, the agent works much like a client, except that it persists all information locally so that it survives a failure. The server leader is the head of all server nodes. Unlike the other servers, it is responsible for synchronizing registration information to the other server nodes and for monitoring the health of each node.
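The recommendation of 3 or 5 servers follows from Raft's majority rule: a change is committed only when more than half of the servers agree. The arithmetic can be sketched as follows; the function names are illustrative.

```shell
#!/bin/sh
# Raft needs a majority (quorum) of servers to agree before committing a change.
# $1 = number of server nodes
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of servers that can fail while a quorum is still reachable.
fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
    echo "servers=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

An even number of servers adds no fault tolerance over the next lower odd number (4 servers tolerate one failure, just like 3), which is why odd cluster sizes are preferred.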

Some key features of Consul:

Service registration and discovery: Consul makes service registration and discovery easy through its DNS or HTTP interfaces. External services, such as those provided by SaaS vendors, can also be registered.

Health check: health checks let Consul quickly alert operators to problems in the cluster. Integrated with service discovery, they prevent requests from being forwarded to failed services.

Key/Value storage: a store for dynamic configuration, with a simple HTTP interface that can be used from anywhere.

Multiple data centers: any number of regions can be supported without complex configuration.
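As an illustration of the DNS interface mentioned in the feature list, Consul answers queries for names of the form `<service>.service[.<datacenter>].consul`. The helper below is a hypothetical sketch that only builds such a name; the service name `nginx` and data center `dc1` are sample values.

```shell
#!/bin/sh
# Build the DNS name under which Consul answers for a service.
# $1 = service name, $2 = optional data center name
service_dns_name() {
    if [ -n "${2:-}" ]; then
        echo "$1.service.$2.consul"
    else
        echo "$1.service.consul"
    fi
}

service_dns_name nginx dc1

# With a running agent, the name can be resolved on Consul's DNS port, e.g.:
#   dig @127.0.0.1 -p 8600 "$(service_dns_name nginx)" SRV
```

An SRV query returns both the address and the port of each healthy instance, which is the same information the HTTP catalog exposes.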

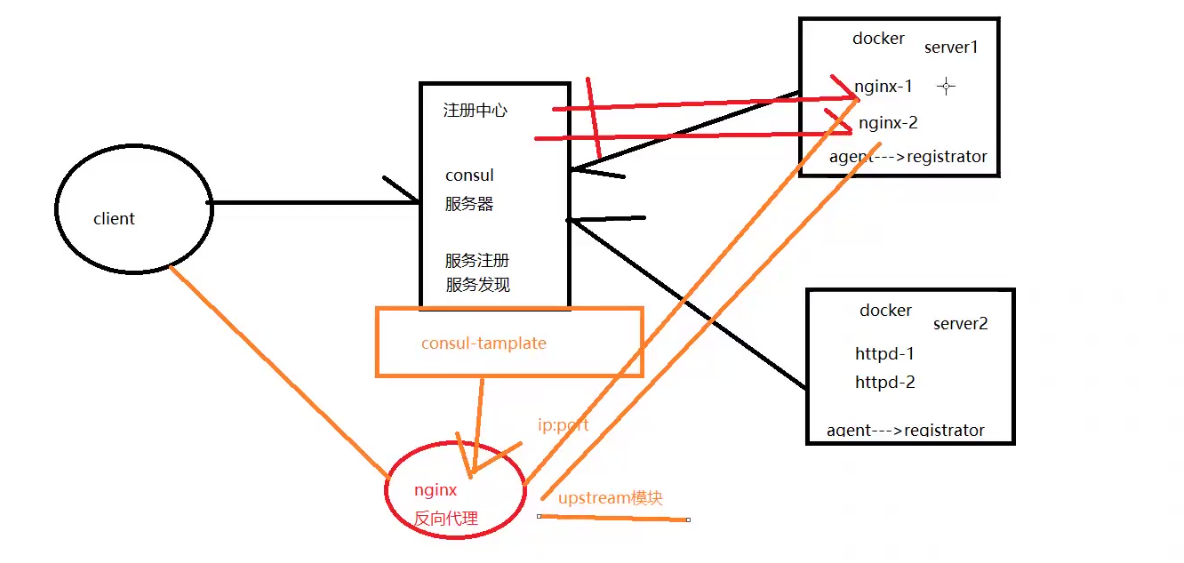

Consul is installed to handle service registration: information about each container is registered in Consul, and other programs obtain the registered service information from Consul. This is service registration and discovery.

consul: service discovery and registration

registrator: installed on each service node as an agent

consul-template: a configuration file template tool; the ip+port obtained from service discovery is rendered in the template format and written to the specified path

nginx/haproxy: reverse proxy and load balancing

client: accesses nginx or another load balancer, which forwards requests to the back-end service nodes

2, Consul deployment

Consul server: 192.168.100.150, running the consul service, the nginx service, and the consul-template daemon

Registrator server: 192.168.100.130, running the registrator container and the nginx containers

systemctl stop firewalld.service

setenforce 0

Consul server

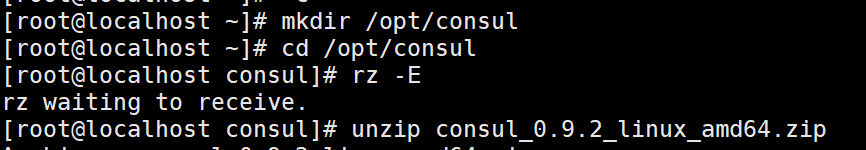

1. Establish Consul service

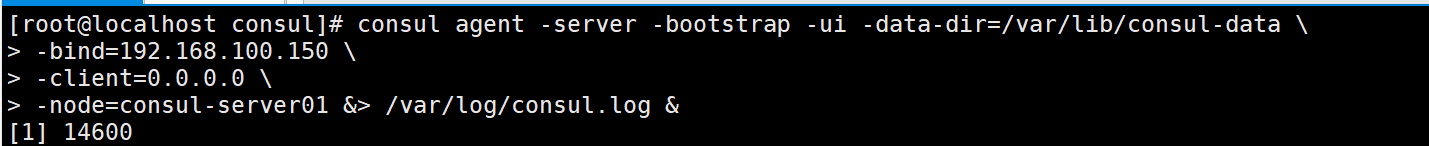

mkdir /opt/consul

cp consul_0.9.2_linux_amd64.zip /opt/consul

cd /opt/consul

unzip consul_0.9.2_linux_amd64.zip

mv consul /usr/local/bin/

Set up the agent and start the Consul server in the background:

consul agent -server -bootstrap -ui -data-dir=/var/lib/consul-data -bind=192.168.100.150 -client=0.0.0.0 -node=consul-server01 &> /var/log/consul.log &

-server: start the agent in server mode. The default is client mode.

-bootstrap: controls whether a server runs in bootstrap mode. Only one server per data center may be in bootstrap mode; a server in bootstrap mode can elect itself as the server leader.

-bootstrap-expect=2: the number of servers expected in the cluster. Consul waits until this many servers have joined before bootstrapping the cluster and electing a leader.

-ui: enable the web UI, which Consul then serves at an address such as http://localhost:8500/ui.

-data-dir: the directory in which data is stored.

-bind: the address used for communication inside the cluster. Every node in the cluster must be able to reach it. The default is 0.0.0.0.

-client: the address to which Consul binds its client interfaces, which provide HTTP, DNS, RPC, and other services. The default is 127.0.0.1.

-node: the name of the node, which must be unique within the cluster. The default is the host name of the node.

-datacenter: the data center name. The default is dc1.

netstat -natp | grep consul

After startup, Consul listens on five ports by default:

8300: server RPC port, used for replication and leader forwarding

8301: LAN gossip port

8302: WAN gossip port

8500: HTTP API and web UI port

8600: DNS port, for looking up node information over the DNS protocol
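Checking the five ports by eye is easy to get wrong, so a small filter can report any that are not listening. This is a sketch under stated assumptions: the function name `missing_ports` is hypothetical, and it expects `netstat -nlt`-style lines on standard input, where the local address column ends in `:PORT`.

```shell
#!/bin/sh
# Default Consul ports, as listed above.
CONSUL_PORTS="8300 8301 8302 8500 8600"

# Read `netstat -nlt`-style output on stdin and print each expected port
# for which no listening socket appears.
missing_ports() {
    listing=$(cat)
    for port in $CONSUL_PORTS; do
        # Match ":PORT" followed by whitespace, i.e. the end of an address column.
        if ! printf '%s\n' "$listing" | grep -Eq ":${port}[[:space:]]"; then
            echo "$port"
        fi
    done
}

# Usage on the Consul server (not executed here):
#   netstat -nlt | missing_ports
```

No output means all five ports were found; any printed port number points at a listener that did not start.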

2. View cluster information

View the member status:

consul members

Node Address Status Type Build Protocol DC

consul-server01 192.168.100.150:8301 alive server 0.9.2 2 dc1

View the cluster status:

consul operator raft list-peers

Node ID Address State Voter RaftProtocol

consul-server01 192.168.100.150:8300 192.168.100.150:8300 leader true 2

consul info | grep leader

leader = true

leader_addr = 192.168.100.150:8300

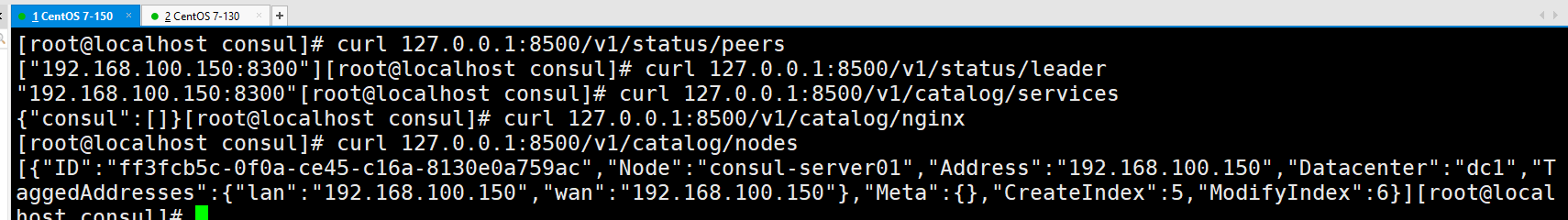

3. Obtain cluster information through the HTTP API

curl 127.0.0.1:8500/v1/status/peers #View cluster server members

curl 127.0.0.1:8500/v1/status/leader #Cluster server leader

curl 127.0.0.1:8500/v1/catalog/services #All registered services

curl 127.0.0.1:8500/v1/catalog/service/nginx #View nginx service information

curl 127.0.0.1:8500/v1/catalog/nodes #Cluster node details

Registrator server

The container services automatically join the Nginx cluster

1. Install Gliderlabs/Registrator

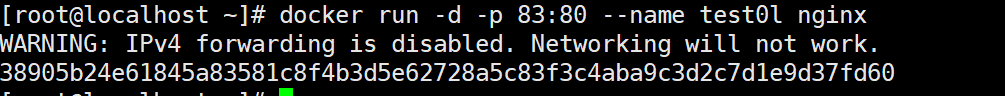

Gliderlabs/Registrator watches the running state of containers and automatically registers and deregisters the services of Docker containers with a service configuration center. It currently supports Consul, Etcd, and SkyDNS2.

docker run -d --name=registrator --net=host -v /var/run/docker.sock:/tmp/docker.sock --restart=always gliderlabs/registrator:latest -ip=192.168.100.130 consul://192.168.100.150:8500

docker run -d -p 83:80 --name test01 nginx

docker run -d -p 84:80 --name test02 nginx

docker run -d -p 88:80 --name test03 httpd

docker run -d -p 89:80 --name test04 httpd

--net=host: run the container in host network mode.

-v /var/run/docker.sock:/tmp/docker.sock: mount the Unix domain socket on which the host's Docker daemon listens by default into the container.

--restart=always: always restart the container when it exits.

-ip: because the network is set to host mode, specify the host's IP address here.

consul://: the IP address and port of the Consul server.
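By default, according to the Registrator documentation, a service is named after the base name of the container image, which is why containers started from the nginx image are registered under the service name nginx. Setting the SERVICE_NAME environment variable on a container overrides that name. The sketch below prints such a command instead of running it; the function name and the container `web01` on port 85 with service name `web` are hypothetical.

```shell
#!/bin/sh
# Print (rather than run) a docker command that publishes an nginx container
# under an explicit Consul service name via Registrator's SERVICE_NAME variable.
# $1 = container name, $2 = host port, $3 = service name
docker_run_cmd() {
    printf 'docker run -d -p %s:80 -e SERVICE_NAME=%s --name %s nginx\n' "$2" "$3" "$1"
}

# Hypothetical example values.
docker_run_cmd web01 85 web
```

Printing the command first makes it easy to review before it is pasted into a shell on the Registrator host.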

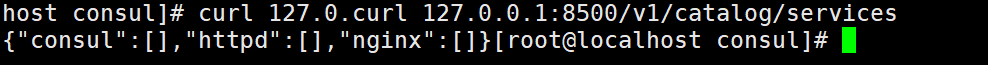

On the Consul server, list the services registered from Docker:

curl 127.0.0.1:8500/v1/catalog/services

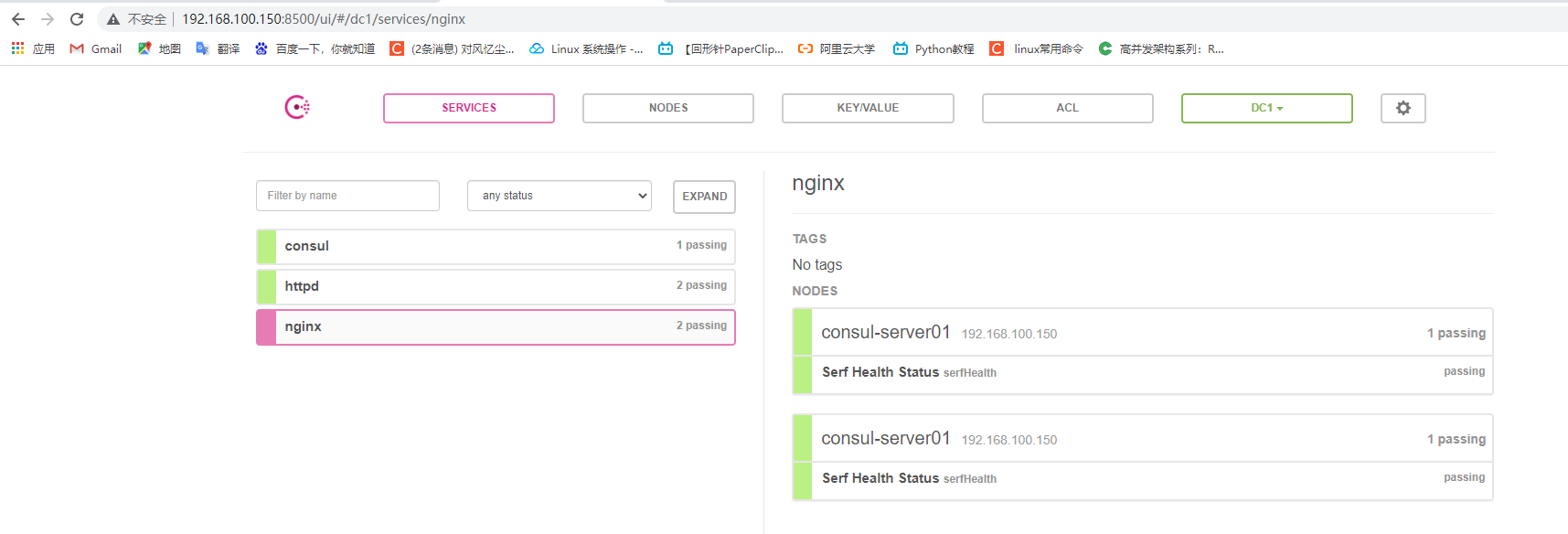

visit http://192.168.100.150:8500

3, Consul-template

Consul-template is a Consul-based application that automatically regenerates configuration files. It is a daemon that queries Consul cluster information in real time, updates any number of specified templates on the file system, and generates configuration files. After an update completes, it can optionally run a shell command to apply the change, for example to reload Nginx.

Consul-template can query the service catalog, keys, key values, and so on in Consul. This abstraction and its query-language templates make Consul-template particularly well suited to creating configuration files dynamically, for example Apache/Nginx proxy balancers or HAProxy backends.

1. Prepare the nginx template file

Perform this step on the Consul server

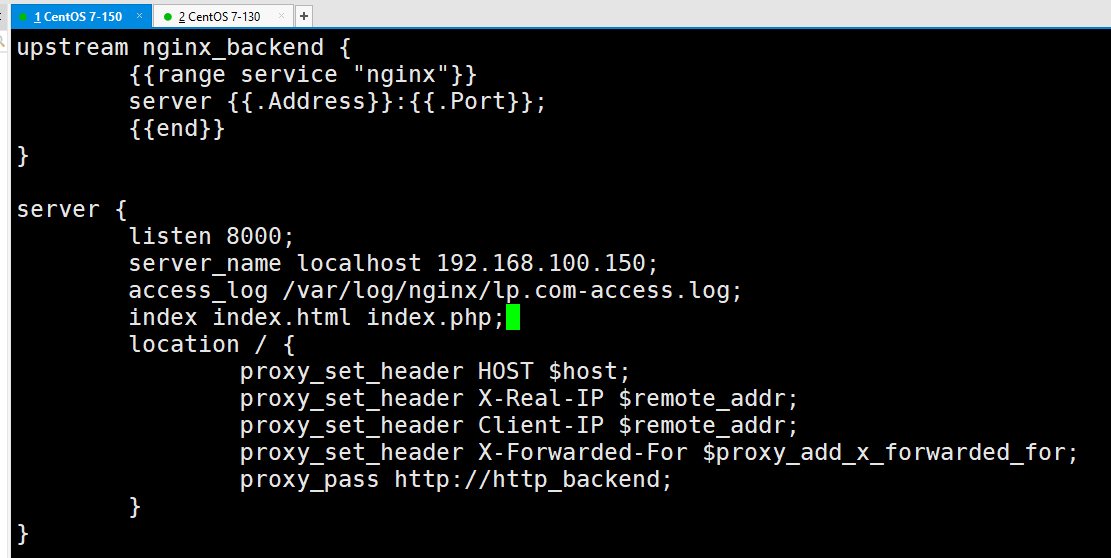

vim /opt/consul/nginx.ctmpl

#Define a simple template for nginx upstream

upstream http_backend {

{{range service "nginx"}}

server {{.Address}}:{{.Port}};

{{end}}

}

#Define a server that listens on port 8000 and reverse proxies to the upstream

server {

listen 8000;

server_name localhost 192.168.100.150;

access_log /var/log/nginx/lp.com-access.log; #Modify log path

index index.html index.php;

location / {

proxy_set_header HOST $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header Client-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_pass http://http_backend;

}

}

2. Compile and install nginx

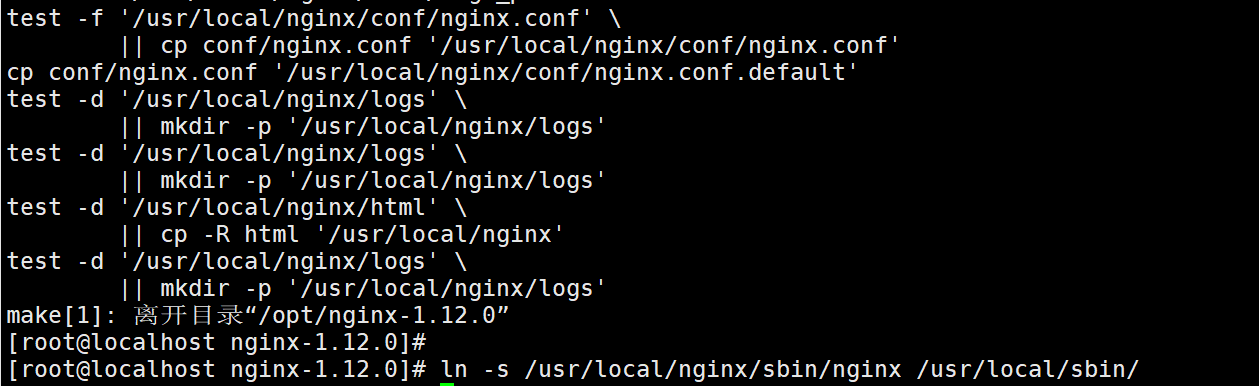

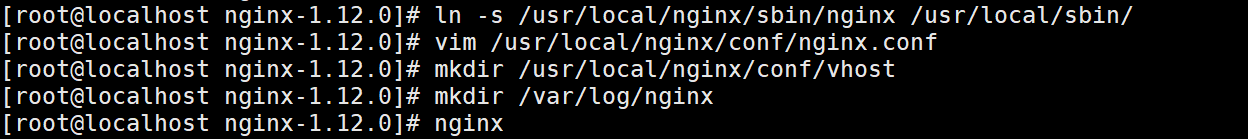

cd /opt

yum -y install pcre-devel zlib-devel gcc gcc-c++ make

useradd -M -s /sbin/nologin nginx

tar zxvf nginx-1.12.0.tar.gz -C /opt/

cd /opt/nginx-1.12.0/

./configure --prefix=/usr/local/nginx --user=nginx --group=nginx && make -j4 && make install

ln -s /usr/local/nginx/sbin/nginx /usr/local/sbin/

3. Configure nginx

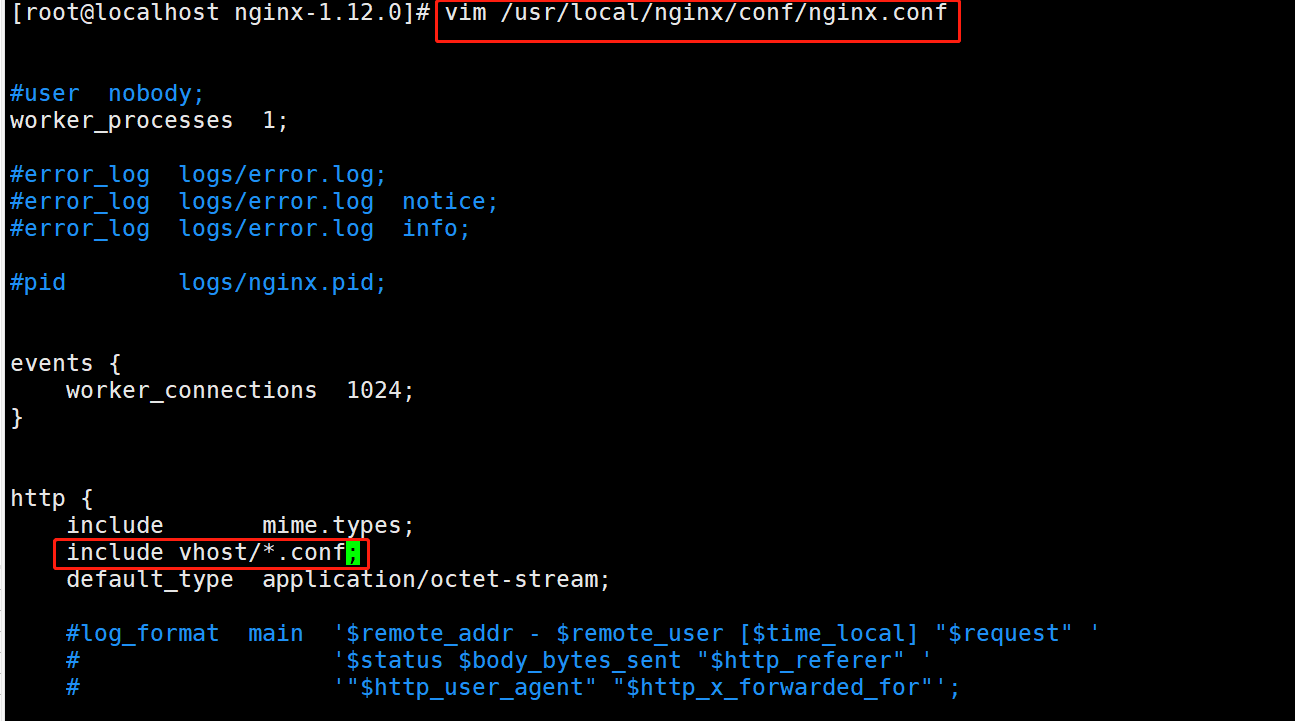

vim /usr/local/nginx/conf/nginx.conf

http {

include mime.types;

include vhost/*.conf; #Add virtual host directory

default_type application/octet-stream;

Create virtual host directory

mkdir /usr/local/nginx/conf/vhost

Create log file directory

mkdir /var/log/nginx

Start nginx

nginx

4. Configure and start consul-template

cd /opt/consul

unzip consul-template_0.19.3_linux_amd64.zip -d /opt/

cd /opt/

mv consul-template /usr/local/bin/

Start the consul-template service in the foreground. Once it is running, do not press Ctrl+C, or the consul-template process will stop.

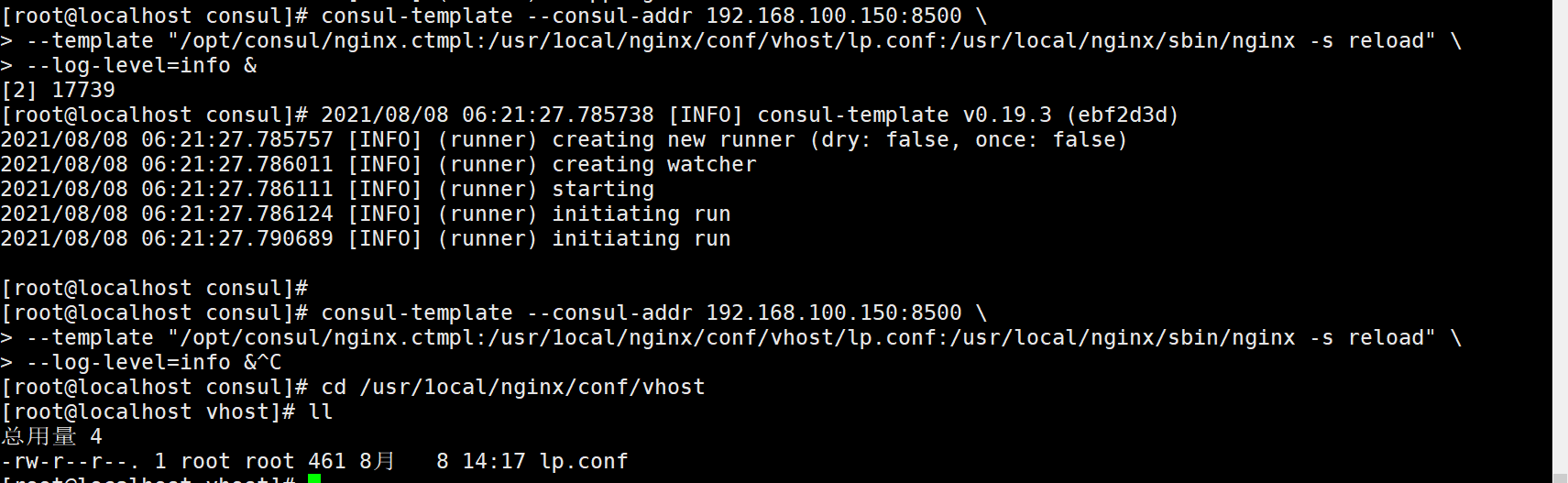

cd /opt/consul

consul-template --consul-addr 192.168.100.150:8500 \

--template "/opt/consul/nginx.ctmpl:/usr/local/nginx/conf/vhost/lp.conf:/usr/local/nginx/sbin/nginx -s reload" \

--log-level=info &

cd /usr/local/nginx/conf/vhost/

ls

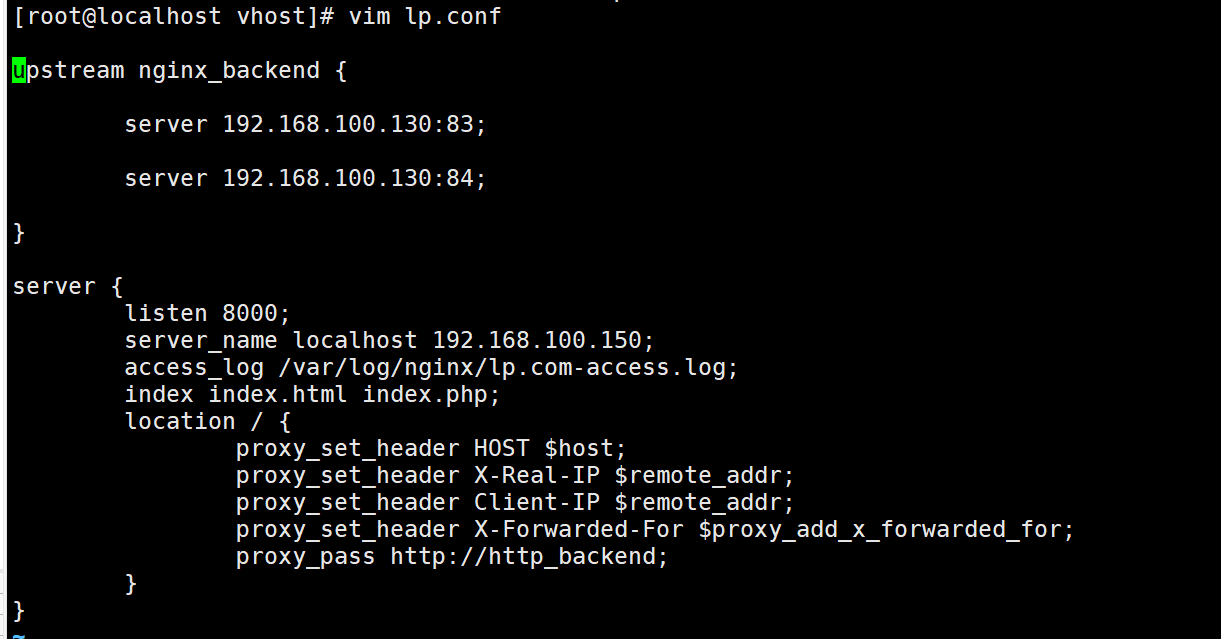

vim lp.conf

upstream http_backend {

server 192.168.100.130:83;

server 192.168.100.130:84;

}

server {

listen 8000;

server_name localhost 192.168.100.150;

access_log /var/log/nginx/lp.com-access.log;

index index.html index.php;

location / {

proxy_set_header HOST $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header Client-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_pass http://http_backend;

}

}
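To compare the rendered file with what Consul reports, it helps to list just the upstream members. The following is a minimal sketch; the function name `upstream_servers` is hypothetical, and it assumes the simple layout shown above, with one `server IP:PORT;` entry per line inside the upstream block.

```shell
#!/bin/sh
# Print the address of every "server IP:PORT;" line found inside an
# upstream block of the given nginx configuration file.
# $1 = path to the rendered configuration file
upstream_servers() {
    awk '
        /^[[:space:]]*upstream[[:space:]]/ { in_upstream = 1; next }
        in_upstream && /}/                 { in_upstream = 0; next }
        in_upstream && $1 == "server"      { sub(/;$/, "", $2); print $2 }
    ' "$1"
}

# Run against the rendered file when it exists on this host.
if [ -f /usr/local/nginx/conf/vhost/lp.conf ]; then
    upstream_servers /usr/local/nginx/conf/vhost/lp.conf
fi
```

For the file shown above this would list 192.168.100.130:83 and 192.168.100.130:84; the `server {` virtual host block is skipped because it lies outside the upstream block.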

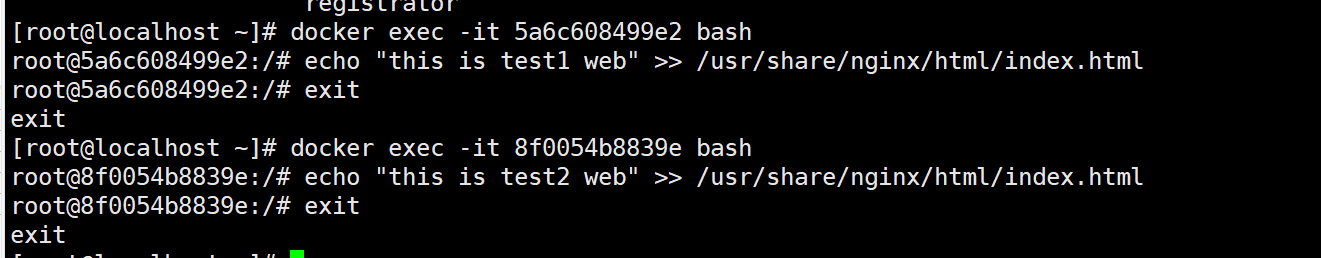

5. Access template nginx

docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

74d66d7673fc httpd "httpd-foreground" 34 minutes ago Up 34 minutes 0.0.0.0:89->80/tcp, :::89->80/tcp test04

5e002b884b8d httpd "httpd-foreground" 34 minutes ago Up 34 minutes 0.0.0.0:88->80/tcp, :::88->80/tcp test03

8f0054b8839e nginx "/docker-entrypoint...." 35 minutes ago Up 35 minutes 0.0.0.0:84->80/tcp, :::84->80/tcp test02

5a6c608499e2 nginx "/docker-entrypoint...." 35 minutes ago Up 35 minutes 0.0.0.0:83->80/tcp, :::83->80/tcp test01

576cfca70afc gliderlabs/registrator:latest "/bin/registrator -i..." 39 minutes ago Up 28 minutes registrator

docker exec -it 5a6c608499e2 bash

echo "this is test1 web" >> /usr/share/nginx/html/index.html

docker exec -it 8f0054b8839e bash

echo "this is test2 web" >> /usr/share/nginx/html/index.html

Browser access: open http://192.168.100.150:8000/ and refresh repeatedly.
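As an alternative to refreshing the browser, the distribution of responses can be counted from the command line. This is a sketch, not part of the original setup: `tally_responses` is a hypothetical helper that counts identical lines on standard input, and the usage comment assumes the marker lines appended to each index.html above are the last line of each page.

```shell
#!/bin/sh
# Count how many times each distinct line appears on stdin,
# printing "COUNT LINE" sorted by the line text.
tally_responses() {
    awk '{ count[$0]++ } END { for (line in count) print count[line], line }' |
        sort -k2
}

# Usage against the proxy (not executed here):
#   for i in 1 2 3 4 5 6; do
#       curl -s http://192.168.100.150:8000/ | tail -n 1
#   done | tally_responses
```

Roughly equal counts for the two markers would indicate that nginx is rotating requests across both containers.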

(2) Check the content of the /usr/local/nginx/conf/vhost/lp.conf file

cat /usr/local/nginx/conf/vhost/lp.conf

upstream http_backend {

server 192.168.100.130:83;

server 192.168.100.130:84;

server 192.168.100.130:85;

}

(3) Check the logs of the three nginx containers; requests are distributed round-robin to each container node

docker logs -f test-01

docker logs -f test-02

docker logs -f test-05

4, Consul multi-node

Add a server that already has a Docker environment, 192.168.100.110/24, to the existing cluster

consul agent -server -ui -data-dir=/var/lib/consul-data -bind=192.168.100.110 -client=0.0.0.0 -node=consul-server02 -enable-script-checks=true -datacenter=dc1 -join 192.168.100.150 &> /var/log/consul.log &

-enable-script-checks=true: enable health checks that execute scripts

-datacenter: the data center name

-join: join an existing cluster

consul members

Node Address Status Type Build Protocol DC

consul-server01 192.168.100.150:8301 alive server 0.9.2 2 dc1

consul-server02 192.168.100.110:8301 alive server 0.9.2 2 dc1

consul operator raft list-peers

Node ID Address State Voter RaftProtocol

consul-server01 192.168.100.150:8300 192.168.100.150:8300 leader true 2

consul-server02 192.168.100.110:8300 192.168.100.110:8300 follower true 2