Docker network

Understanding docker0

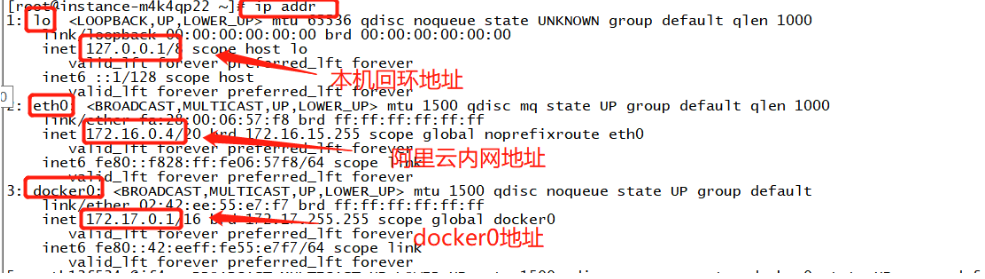

Test: enter a Docker container and view its network environment.

Three networks

Question: how does Docker handle network access for containers?

View the container's internal network addresses with `ip addr`:

    docker exec -it tomcat01 ip addr

The Linux host can ping the container's IP address.

Principle

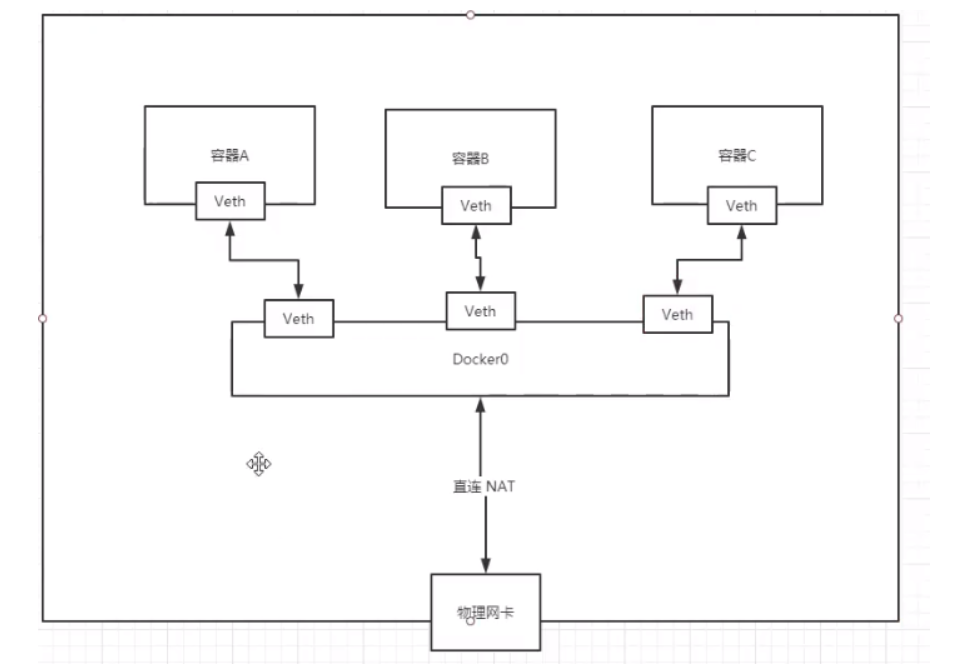

Every time a Docker container is started, Docker assigns it an IP address. Once Docker is installed, the host also gets an extra network card, docker0, which works in bridge mode. The technology used is the veth pair (veth = virtual Ethernet, a virtual network card). A veth pair is two virtual interfaces connected to each other: one end sits inside the container as its eth0, and the other end stays on the host, attached to docker0. In this way, veth pairs act as a bridge between virtual network devices.
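
A toy Python model can make the veth-pair arrangement concrete. It is a sketch, not Docker's implementation: each container gets a pair whose one end becomes the container's `eth0` and whose other end stays on the host, plugged into the `docker0` bridge. Host-side names such as `veth-tomcat01` are hypothetical; Docker generates its own names (`veth` followed by a random suffix).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VethEnd:
    """One end of a veth pair: a frame sent into one end comes out of its peer."""
    name: str
    namespace: str  # "host" or the name of the container that owns this end
    peer: VethEnd | None = field(default=None, repr=False, compare=False)


def create_veth_pair(host_ifname: str, container: str) -> tuple[VethEnd, VethEnd]:
    """One end stays on the host; the other becomes the container's eth0."""
    host_end = VethEnd(host_ifname, "host")
    container_end = VethEnd("eth0", container)
    host_end.peer, container_end.peer = container_end, host_end
    return host_end, container_end


@dataclass
class Bridge:
    """Toy stand-in for docker0: it only tracks the host-side veth ends plugged into it."""
    name: str
    ports: dict[str, VethEnd] = field(default_factory=dict)  # container name -> host-side end

    def connect(self, container: str) -> VethEnd:
        # Hypothetical naming scheme; Docker generates random host-side names.
        host_end, container_end = create_veth_pair(f"veth-{container}", container)
        self.ports[container] = host_end
        return container_end

    def remove(self, container: str) -> None:
        # Deleting the container destroys its veth pair, so its bridge port disappears too.
        self.ports.pop(container, None)

    def path(self, src: str, dst: str) -> list[str] | None:
        """Hops a frame takes from src's eth0 to dst's eth0, or None if either end is gone."""
        if src not in self.ports or dst not in self.ports:
            return None
        src_host, dst_host = self.ports[src], self.ports[dst]
        return [f"{src}:eth0", src_host.name, self.name, dst_host.name, f"{dst}:{dst_host.peer.name}"]


docker0 = Bridge("docker0")
docker0.connect("tomcat01")
docker0.connect("tomcat02")
print(docker0.path("tomcat01", "tomcat02"))
```

Calling `docker0.remove("tomcat02")` drops its host-side end from the bridge, so `path` returns `None`, mirroring the point that deleting a container removes its veth pair.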

Some networking background

When using virtual machines, we can usually choose between bridge mode and NAT mode.

Bridged networking: the virtual machine behaves like an independent host on the LAN and can access any machine on the network. In VMware, this mode requires manually configuring the IP address and subnet mask so that the virtual system is in the same network segment as the host and can communicate with it.

NAT mode: Network Address Translation. In NAT mode, the virtual system reaches the public network through the host's network, using the host's NAT function. The virtual system's TCP/IP configuration is provided by the DHCP server of the VMnet (NAT) virtual network and cannot be modified manually. Because the virtual system sits behind the host's translated address, other real hosts on the LAN cannot communicate with it directly. The biggest advantage of NAT mode is that it makes Internet access easy.
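
The core of NAT mode can be sketched as a translation table. This is a toy model of source NAT under simplified assumptions (it ignores protocols, destinations and timeouts, and the addresses and port range are hypothetical); it is not how VMware's NAT service is implemented. It shows why the virtual system can reach the outside world while other LAN hosts cannot reach it: an incoming packet is only translated back if an outgoing connection created the mapping first.

```python
from __future__ import annotations

import itertools


class NatTable:
    """Toy source NAT: many private (ip, port) pairs share one public IP."""

    def __init__(self, public_ip: str, first_port: int = 40000) -> None:
        self.public_ip = public_ip
        self._next_port = itertools.count(first_port)
        self._out: dict[tuple[str, int], int] = {}   # (private ip, port) -> public port
        self._back: dict[int, tuple[str, int]] = {}  # public port -> (private ip, port)

    def outbound(self, src_ip: str, src_port: int) -> tuple[str, int]:
        """Rewrite the source of an outgoing packet, reusing an existing mapping."""
        key = (src_ip, src_port)
        if key not in self._out:
            public_port = next(self._next_port)
            self._out[key] = public_port
            self._back[public_port] = key
        return self.public_ip, self._out[key]

    def inbound(self, public_port: int) -> tuple[str, int] | None:
        """Translate a reply back; a packet with no existing mapping has nowhere to go."""
        return self._back.get(public_port)


nat = NatTable("203.0.113.10")               # hypothetical host address
print(nat.outbound("192.168.122.5", 51000))  # the VM opens a connection
print(nat.inbound(40000))                    # the reply is translated back to the VM
print(nat.inbound(40001))                    # unsolicited packet: no mapping, dropped
```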

Docker's default networking corresponds to bridge mode, and the networks that Docker Compose creates for inter-container communication also use the bridge driver by default.

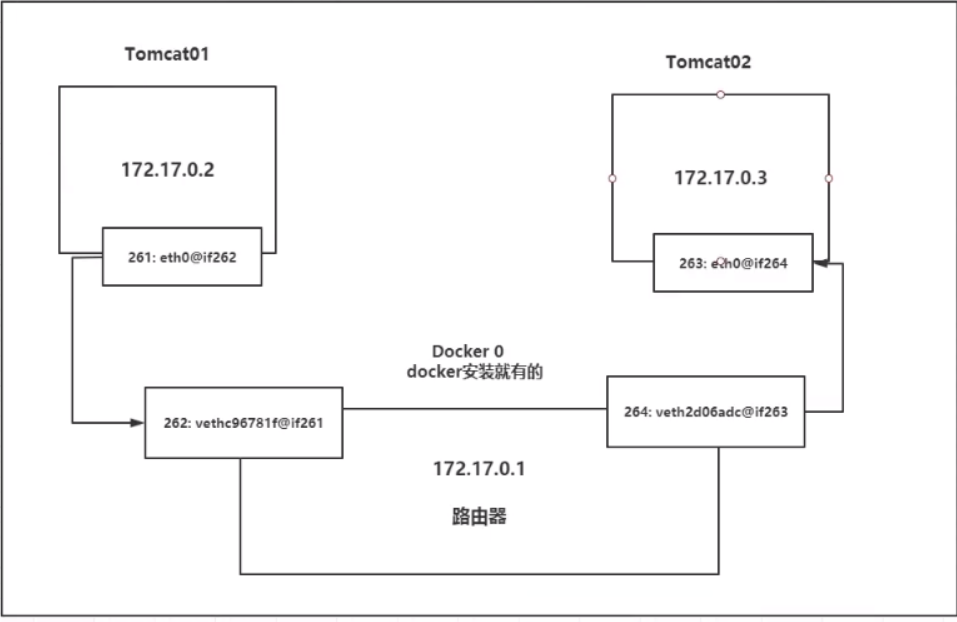

Let's test whether tomcat01 and tomcat02 can reach each other.

Containers can ping each other!

Conclusion: tomcat01 and tomcat02 share the same router, docker0.

When a container is started without specifying a network, it is connected to docker0, and its traffic is routed through docker0. Docker assigns it an available IP address from docker0's subnet.
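
A simplified sketch of that address assignment, using Python's `ipaddress` module. The allocation policy (the gateway takes the first host address, each new container gets the lowest free address) is an assumption for illustration; Docker's own IPAM driver may hand out addresses differently. `172.17.0.0/16` is docker0's usual default subnet.

```python
from __future__ import annotations

import ipaddress


class ToyIpam:
    """Simplified allocator: the gateway takes the first host address,
    containers get the lowest free address after it."""

    def __init__(self, subnet: str) -> None:
        self.network = ipaddress.ip_network(subnet)
        self.gateway = next(iter(self.network.hosts()))  # e.g. 172.17.0.1
        self.assigned: dict[str, ipaddress.IPv4Address] = {}

    def allocate(self, container: str) -> ipaddress.IPv4Address:
        used = set(self.assigned.values()) | {self.gateway}
        for ip in self.network.hosts():
            if ip not in used:
                self.assigned[container] = ip
                return ip
        raise RuntimeError(f"subnet {self.network} is exhausted")

    def release(self, container: str) -> None:
        # Removing a container frees its address for the next container.
        self.assigned.pop(container, None)


ipam = ToyIpam("172.17.0.0/16")  # docker0's usual default subnet
print(ipam.gateway, ipam.allocate("tomcat01"), ipam.allocate("tomcat02"))
```

If `tomcat01` is released and a new container is allocated first, the new container takes `tomcat01`'s old address and `tomcat01` comes back with a different IP. This is why hard-coding container IPs is fragile, which is the problem the next scenario raises.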

--link

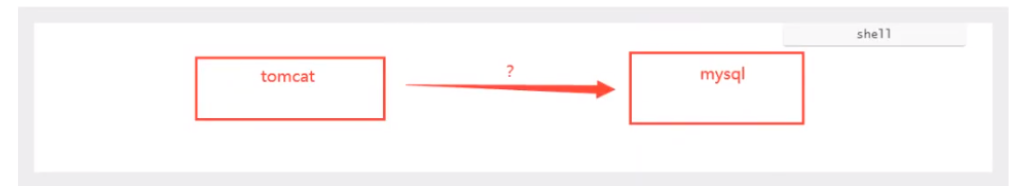

Consider this scenario: we write a microservice whose database URL contains the database's IP address. If the database's IP changes, we want the project to keep working without being modified and restarted. How can we handle this? Can we access a container by its name instead?

If we deploy with docker-compose, containers on the same network can reach each other by name (domain name).

Summary

Docker uses a Linux bridge: on the host, docker0 acts as the bridge for Docker containers.

All network interfaces in Docker are virtual, and virtual interfaces forward traffic efficiently!

As soon as a container is deleted, its veth pair is removed as well!

Docker Compose network rules

Network rules:

View networks:

    [root@VM-16-12-centos louyu_pure]# docker network ls
    NETWORK ID     NAME                  DRIVER    SCOPE
    4428d4d0c09e   bridge                bridge    local
    16ee19ed647f   host                  host      local
    5fd188828ce6   louyu_pure_app_net    bridge    local
    3d93dd81bd1b   none                  null      local
    5064e48e1778   old_louyu_2_app_net   bridge    local

Suppose a project has 10 services, and all of them reach one another through network domain names.

For example, the database is no longer reached through IP:3306; the connection URL uses the database container's name instead:

url: jdbc:mysql://louyu_mysql:3306/louyu?useUnicode=true&characterEncoding=utf8&zeroDateTimeBehavior=convertToNull&useSSL=false&serverTimezone=GMT%2B8

Connecting to the database by domain name means that when the database's IP changes, the connection settings in the program do not need to be modified.
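
To make the point concrete, the sketch below splits the JDBC URL above and resolves its host name at connect time. The dictionary stands in for name resolution; inside a container on a user-defined network, that lookup is actually answered by Docker's embedded DNS server. `parse_jdbc_mysql` and `connect_target` are hypothetical helpers, and the IP `172.19.0.6` is an invented example of the database getting a new address.

```python
from __future__ import annotations

from urllib.parse import parse_qs, urlsplit

JDBC_URL = ("jdbc:mysql://louyu_mysql:3306/louyu?useUnicode=true&characterEncoding=utf8"
            "&zeroDateTimeBehavior=convertToNull&useSSL=false&serverTimezone=GMT%2B8")


def parse_jdbc_mysql(url: str) -> tuple[str, int, str, dict[str, list[str]]]:
    """Split a jdbc:mysql:// URL into host, port, database name and query options."""
    if not url.startswith("jdbc:"):
        raise ValueError(f"not a JDBC URL: {url}")
    parts = urlsplit(url[len("jdbc:"):])  # the remainder parses like an ordinary URL
    return parts.hostname, parts.port, parts.path.lstrip("/"), parse_qs(parts.query)


def connect_target(url: str, dns: dict[str, str]) -> str:
    """Resolve the host name at connect time, as a lookup inside the container would."""
    host, port, _, _ = parse_jdbc_mysql(url)
    return f"{dns[host]}:{port}"


# Hypothetical name records before and after louyu_mysql is recreated with a new IP.
print(connect_target(JDBC_URL, {"louyu_mysql": "172.19.0.3"}))
print(connect_target(JDBC_URL, {"louyu_mysql": "172.19.0.6"}))
```

The URL string never changes; only the name record behind `louyu_mysql` does.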

View network details:

Inspecting the network that Docker Compose created shows that all containers are in the same network segment and each has its own name (domain name).

Therefore, services in a project deployed with Docker Compose can reach each other by network domain name.

`docker network inspect xxx`

    [root@VM-16-12-centos louyu_pure]# docker network inspect louyu_pure_app_net
    [
        {
            "Name": "louyu_pure_app_net",
            "Id": "5fd188828ce6531dcf77e201fc5710986c0c00d1c6426dfd4a7f3b59e8a1e795",
            "Created": "2021-07-31T11:05:15.330757936+08:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": null,
                "Config": [
                    {
                        "Subnet": "172.19.0.0/16",
                        "Gateway": "172.19.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": true,
            "Containers": {
                "17d5dddb14c24f2c18bb283c234da26beea5c1547116f635fff1d6563d051205": {
                    "Name": "louyu_show",
                    "EndpointID": "9c461ce70f814283511d5ecfc170473224e9457539beb9819d8aa5fa40fdca19",
                    "MacAddress": "02:42:ac:13:00:05",
                    "IPv4Address": "172.19.0.5/16",
                    "IPv6Address": ""
                },
                "66375ae1816a7b41bfb59622224fc40360f36faef314bb5eaf9070c97ad5627e": {
                    "Name": "louyu_mysql",
                    "EndpointID": "ea666cf12dbb7b5d01237e32a62febc77432812ecdc438d3eb4b24b15149c3ba",
                    "MacAddress": "02:42:ac:13:00:03",
                    "IPv4Address": "172.19.0.3/16",
                    "IPv6Address": ""
                },
                "d8f1e4fa521fb2661f357d758709a81187739dfd59d73e65fce691d7bac62678": {
                    "Name": "louyu_serv",
                    "EndpointID": "4b158585d7a61f26945369f841d2fc4cc48a4e2cf135bfba39661147139cbfe9",
                    "MacAddress": "02:42:ac:13:00:04",
                    "IPv4Address": "172.19.0.4/16",
                    "IPv6Address": ""
                },
                "f609b22ac59bbf071c8ce73315d40271d832654930f6817c8a30386cdcc30509": {
                    "Name": "louyu_redis",
                    "EndpointID": "0865d8ecef37a9f9450376aadde4cfdee22ebc513cbcb7377809a1af64d06dd1",
                    "MacAddress": "02:42:ac:13:00:02",
                    "IPv4Address": "172.19.0.2/16",
                    "IPv6Address": ""
                }
            },
            "Options": {},
            "Labels": {
                "com.docker.compose.network": "app_net",
                "com.docker.compose.project": "louyu_pure",
                "com.docker.compose.version": "1.21.2"
            }
        }
    ]
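
The same inspect output can be processed programmatically. This sketch assumes you have captured the JSON (for instance by running `docker network inspect louyu_pure_app_net` through `subprocess.run`); here it works on a trimmed copy of the output above so it runs without Docker. `container_addresses` is a hypothetical helper that maps each container name to its IP and checks that every address lies in the network's subnet.

```python
from __future__ import annotations

import ipaddress
import json

# Trimmed copy of the `docker network inspect louyu_pure_app_net` output above
# (container IDs shortened, unrelated fields dropped).
INSPECT_OUTPUT = """
[{"Name": "louyu_pure_app_net",
  "IPAM": {"Config": [{"Subnet": "172.19.0.0/16", "Gateway": "172.19.0.1"}]},
  "Containers": {
    "17d5dddb14c2": {"Name": "louyu_show",  "IPv4Address": "172.19.0.5/16"},
    "66375ae1816a": {"Name": "louyu_mysql", "IPv4Address": "172.19.0.3/16"},
    "d8f1e4fa521f": {"Name": "louyu_serv",  "IPv4Address": "172.19.0.4/16"},
    "f609b22ac59b": {"Name": "louyu_redis", "IPv4Address": "172.19.0.2/16"}}}]
"""


def container_addresses(inspect_json: str) -> dict[str, str]:
    """Map container name -> IPv4 address for the first network in the inspect output."""
    network = json.loads(inspect_json)[0]
    subnet = ipaddress.ip_network(network["IPAM"]["Config"][0]["Subnet"])
    addresses = {}
    for endpoint in network["Containers"].values():
        iface = ipaddress.ip_interface(endpoint["IPv4Address"])  # e.g. "172.19.0.3/16"
        if iface.ip not in subnet:
            raise ValueError(f"{endpoint['Name']} is outside {subnet}")
        addresses[endpoint["Name"]] = str(iface.ip)
    return addresses


for name, ip in sorted(container_addresses(INSPECT_OUTPUT).items()):
    print(f"{name:12} {ip}")
```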