1, Setting up a private image registry

1. Pull the registry image and modify the daemon configuration file

[root@docker ~]# docker pull registry

[root@docker ~]# vim /etc/docker/daemon.json

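{

"insecure-registries": ["192.168.100.21:5000"],

"registry-mirrors": ["https://xxxxx.mirror.aliyuncs.com"]

}

"insecure-registries" lists the local registry address (this host's IP and port 5000), and "registry-mirrors" holds your own Alibaba Cloud image accelerator address. daemon.json must be strict JSON, so it cannot contain # comments.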

[root@docker ~]# systemctl restart docker
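Because daemon.json is parsed as strict JSON, a stray # comment or a trailing space inside the mirror URL can keep the Docker daemon from starting. The following is a minimal sketch, assuming you want to generate the file from a script; `write_daemon_json` is a hypothetical helper, and the xxxxx mirror address remains a placeholder for your own accelerator.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a daemon.json that contains only strict JSON
# (no '#' comments) for a private registry plus a registry mirror.
write_daemon_json() {
  local target="$1"    # e.g. /etc/docker/daemon.json
  local registry="$2"  # host:port of the private registry
  local mirror="$3"    # registry mirror (image accelerator) URL
  cat > "$target" <<EOF
{
  "insecure-registries": ["${registry}"],
  "registry-mirrors": ["${mirror}"]
}
EOF
}

# Example usage on the Docker host, as root:
#   write_daemon_json /etc/docker/daemon.json 192.168.100.21:5000 https://xxxxx.mirror.aliyuncs.com
#   systemctl restart docker
```

This overwrites the whole file, so any other settings already in daemon.json have to be merged in by hand.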

2. Start the registry, tag the image, and push it to the registry

[root@docker ~]# docker run -d -p 5000:5000 -v /data/registry:/var/lib/registry registry

2792c5e1151a50f6a1131ac3b687f5f4314fe85d9f6f7ba2c82f96a964135dc4

# Start the registry and mount the host directory /data/registry as its image storage (/var/lib/registry inside the container)

[root@docker ~]# docker tag nginx:v1 192.168.100.21:5000/nginx

# Tag the image locally with the registry address

[root@docker ~]# docker push 192.168.100.21:5000/nginx

# Push the image to the local registry

Using default tag: latest

The push refers to repository [192.168.100.21:5000/nginx]

[root@docker ~]# curl -XGET http://192.168.100.21:5000/v2/_catalog

# List the repositories in the registry

{"repositories":["nginx"]}
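The tag, push, and catalog steps all revolve around the same registry-qualified name. The following is a small sketch, assuming the registry from this example; `registry_ref` and `registry_tags_url` are hypothetical helpers, and `/v2/<name>/tags/list` is the Docker Registry HTTP API V2 endpoint that sits alongside the `/v2/_catalog` call used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build "<registry>/<image>:<tag>", defaulting the tag to
# "latest", which is also what `docker push` uses when no tag is given.
registry_ref() {
  local registry="$1" image="$2" tag="${3:-latest}"
  printf '%s/%s:%s\n' "$registry" "$image" "$tag"
}

# Hypothetical helper: URL of the Registry API V2 endpoint that lists the tags
# of one repository.
registry_tags_url() {
  local registry="$1" image="$2"
  printf 'http://%s/v2/%s/tags/list\n' "$registry" "$image"
}

# Example usage:
#   docker tag nginx:v1 "$(registry_ref 192.168.100.21:5000 nginx)"
#   docker push "$(registry_ref 192.168.100.21:5000 nginx)"
#   curl -s "$(registry_tags_url 192.168.100.21:5000 nginx)"
```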

[root@docker ~]# docker rmi 192.168.100.21:5000/nginx:latest

# Delete the local copy of the image to test pulling it back

[root@docker ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx v1 8ac85260ea00 2 hours ago 205MB

centos 7 8652b9f0cb4c 9 months ago 204MB

[root@docker ~]# docker pull 192.168.100.21:5000/nginx:latest

# Pull the image back from the registry

latest: Pulling from nginx

Digest: sha256:ccb90b53ff0e11fe0ab9f5347c4442d252de59f900c7252660ff90b6891c3be4

Status: Downloaded newer image for 192.168.100.21:5000/nginx:latest

192.168.100.21:5000/nginx:latest

[root@docker ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

192.168.100.21:5000/nginx latest 8ac85260ea00 2 hours ago 205MB

nginx v1 8ac85260ea00 2 hours ago 205MB

centos 7 8652b9f0cb4c 9 months ago 204MB
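In the listing above, 192.168.100.21:5000/nginx:latest and nginx:v1 share the IMAGE ID 8ac85260ea00, which shows that the pull returned the same image that was pushed. The following sketch checks this from a script, assuming the default `docker images` column layout (REPOSITORY, TAG, IMAGE ID, ...); `image_id` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a `docker images` listing on stdin and print the
# IMAGE ID (third column) of the row matching REPOSITORY and TAG.
image_id() {
  local repo="$1" tag="$2"
  awk -v repo="$repo" -v tag="$tag" '$1 == repo && $2 == tag { print $3 }'
}

# Example usage: compare the pulled image with the original tag.
#   a=$(docker images | image_id 192.168.100.21:5000/nginx latest)
#   b=$(docker images | image_id nginx v1)
#   [ "$a" = "$b" ] && echo "same image"
```

`docker image inspect --format '{{.Id}}' nginx:v1` returns the full ID directly and does not depend on the table layout.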

2, Resource allocation with cgroups

Cgroup overview

Cgroups is short for control groups, a Linux kernel mechanism that can limit, account for, and isolate the physical resources (CPU, memory, disk IO, and so on) used by groups of processes.

Cgroups are used by LXC, Docker, and many other projects to control process resources. Cgroups itself is the infrastructure that provides grouped management of processes and the interface to it; concrete resource management, such as IO or memory allocation control, is built on top of this mechanism.

These concrete resource management functions are called cgroup subsystems. The main subsystems are:

blkio: sets limits on input/output access to and from block devices such as disks, optical drives, and USB devices

cpu: uses the scheduler to give cgroup tasks access to the CPU

cpuacct: generates reports on the CPU resources used by tasks in a cgroup

cpuset: on multi-core systems, assigns individual CPUs and memory nodes to tasks in a cgroup

devices: allows or denies access to devices by tasks in a cgroup

freezer: suspends and resumes tasks in a cgroup

memory: sets memory limits for each cgroup and generates reports on memory usage

net_cls: tags network packets with a class identifier so that the Linux traffic controller can identify packets originating from a particular cgroup

ns: namespace subsystem

perf_event: lets the perf tool monitor and trace each group; it can monitor all threads belonging to a specific group as well as threads running on a specific CPU
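The /sys/fs/cgroup/cpu/docker/... paths used in the rest of this section follow the cgroup v1 layout, where each subsystem above is mounted as its own directory. On hosts that use cgroup v2 (the unified hierarchy) the layout is different. The following sketch tells the two apart based on the filesystem type of /sys/fs/cgroup (`cgroup2fs` on v2, `tmpfs` holding the v1 mounts); `cgroup_version` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "v2" if DIR (default /sys/fs/cgroup) is a cgroup v2
# mount, "v1" if it is the tmpfs that holds the v1 subsystem mounts, and
# "unknown" otherwise (for example when the path does not exist).
cgroup_version() {
  local dir="${1:-/sys/fs/cgroup}" fstype
  fstype=$(stat -fc %T "$dir" 2>/dev/null) || fstype=""
  case "$fstype" in
    cgroup2fs) echo v2 ;;
    tmpfs)     echo v1 ;;
    *)         echo unknown ;;
  esac
}

# Example usage:
#   cgroup_version   # the cpu, cpuset, and memory paths in this article assume v1
```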

1. Docker CPU resource control

Use the stress tool to generate CPU and memory load for testing.

Use a Dockerfile to build a CentOS-based image that contains stress.

[root@docker ~]# mkdir /opt/stress

[root@docker ~]# vim /opt/stress/Dockerfile

FROM centos:7

RUN yum install -y wget

RUN wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

RUN yum install -y stress

[root@docker ~]# cd /opt/stress/

[root@docker stress]# docker build -t centos:stress .

[root@docker stress]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

centos stress cc9f380c2556 About a minute ago 520MB

(1) The --cpu-shares parameter

When creating a container, the value of the --cpu-shares parameter does not guarantee that the container gets one vCPU or any number of GHz of CPU. It is only a flexible relative weight.

[root@docker stress]# docker run -itd --cpu-shares 100 centos:stress

3d423eabad883fa1001c21a506d899a0c5060aca32080a69f2f9a715d9e357c5

Note: by default, each Docker container has a CPU share of 1024. The share of a single container means nothing on its own; the effect of CPU weighting only shows when multiple containers are running at the same time.

For example, if containers A and B have CPU shares of 1000 and 500 respectively, then when the CPU allocates time slices, container A is twice as likely as container B to get one.

However, the result depends on the state of the host and of the other containers at that moment, so container A is not actually guaranteed to get CPU time slices. For example, if the processes in container A are always idle, container B can get more CPU time slices than container A. In the extreme case, if only one container is running on the host, it can monopolize the CPU of the whole host even if its CPU share is only 50.

Cgroups only take effect when the resources allocated to containers are scarce, that is, when the resources used by a container actually need to be limited. Therefore, the CPU a container receives cannot be determined from its CPU share alone; the result depends on the CPU allocation of the other containers running at the same time and on what the processes in the container are doing.

You can set the CPU priority of containers with --cpu-shares. For example, start two containers and compare the percentage of CPU each one uses.

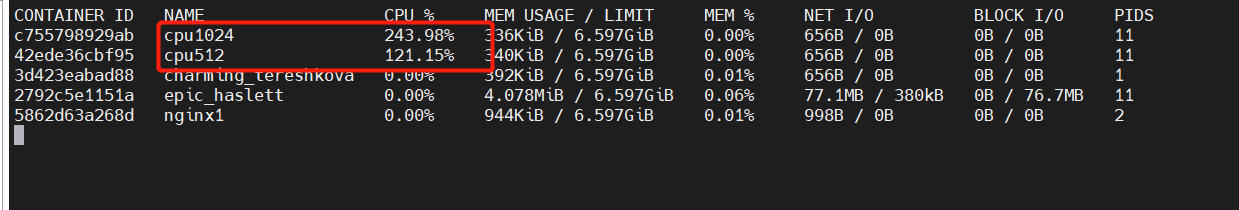

[root@docker stress]# docker run -tid --name cpu512 --cpu-shares 512 centos:stress stress -c 10

# The container starts 10 stress worker processes

# Start another container for comparison

[root@docker stress]# docker run -tid --name cpu1024 --cpu-shares 1024 centos:stress stress -c 10

[root@docker stress]# docker stats

Summary: when there are multiple containers, the --cpu-shares parameter weights their right to use the CPU. In general, the greater the weight, the more CPU a container gets when the CPU is contended.
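The weighting described above can be expressed as a proportion: when every container is busy, a container's slice of the contended CPU time is roughly its share divided by the sum of all shares. The following sketch uses integer arithmetic; `share_percent` is a hypothetical helper, and its result is only an approximation that assumes all listed containers are saturating the same CPUs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: share_percent MINE OTHER...
# Prints the approximate percentage (rounded down) of contended CPU time that a
# container with MINE shares gets when competing with containers holding OTHER shares.
share_percent() {
  local mine="$1" total="$1"
  shift
  local s
  for s in "$@"; do
    total=$((total + s))
  done
  echo $((100 * mine / total))
}

# Example usage for the two containers started above:
#   share_percent 1024 512   # cpu1024
#   share_percent 512 1024   # cpu512
```

With 1024 and 512 this gives roughly 66% and 33%, a 2:1 ratio. `docker stats` reports percentages relative to the host's CPUs, so its absolute numbers differ, but the ratio between the two containers should be close to 2:1.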

(2) CPU period limits

--cpu-quota specifies how much CPU time the container can use within each period set by --cpu-period.

Unlike --cpu-shares, this setting is an absolute value: the container never uses more CPU than the configured value.

--cpu-period and --cpu-quota are in microseconds (µs). The minimum value of --cpu-period is 1000 µs, the maximum is 1 second (10^6 µs), and the default is 0.1 second (100000 µs).

The default value of --cpu-quota is -1, meaning no limit. --cpu-period and --cpu-quota are normally used together.

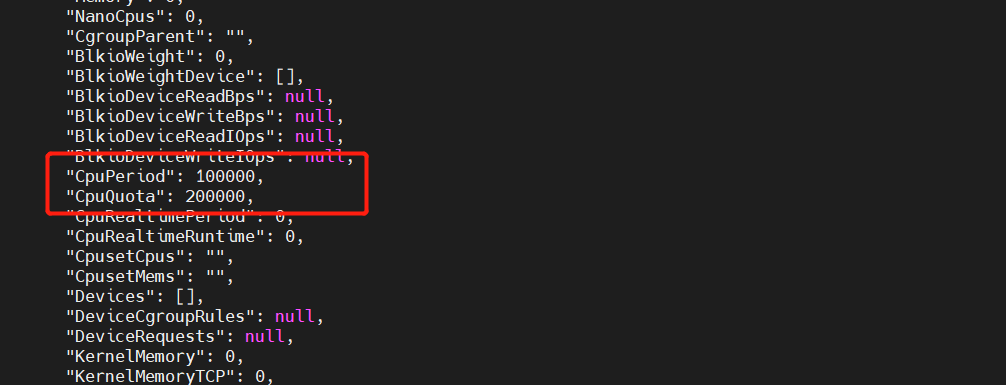

For example, if the container process needs 0.2 seconds of a single CPU every second, set --cpu-period to 1000000 (1 second) and --cpu-quota to 200000 (0.2 seconds).

On a multi-core host, to let the container process fully occupy two CPUs, set --cpu-period to 100000 (0.1 seconds) and --cpu-quota to 200000 (0.2 seconds).
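The examples above follow one rule: quota = period × the number of CPUs the container may use. The following sketch computes --cpu-quota from a period and a percentage of one CPU (100 means one full CPU), rejecting periods outside the 1000 to 1000000 µs range given above; `cpu_quota` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: cpu_quota PERIOD_US PERCENT
# PERCENT is relative to one CPU: 20 = 0.2 CPU, 200 = two full CPUs.
cpu_quota() {
  local period="$1" percent="$2"
  if [ "$period" -lt 1000 ] || [ "$period" -gt 1000000 ]; then
    echo "cpu-period must be between 1000 and 1000000 us" >&2
    return 1
  fi
  echo $((period * percent / 100))
}

# Example usage:
#   cpu_quota 1000000 20   # 0.2 s of one CPU per 1 s period
#   cpu_quota 100000 200   # two full CPUs with the default period
#   docker run -itd --cpu-period 100000 --cpu-quota "$(cpu_quota 100000 20)" centos:stress
```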

The current values can be read from the container's cgroup files on the host:

cat /sys/fs/cgroup/cpu/docker/<container ID>/cpu.cfs_period_us

cat /sys/fs/cgroup/cpu/docker/<container ID>/cpu.cfs_quota_us

A quota of -1 means CPU usage is unlimited; the only upper limit is the hardware.

[root@docker ~]# cd /sys/

[root@docker sys]# ls

block bus class dev devices firmware fs hypervisor kernel module power

[root@docker sys]# cd fs/

[root@docker fs]# ls

bpf cgroup pstore xfs

[root@docker fs]# cd cgroup/

[root@docker cgroup]# ls

blkio cpu,cpuacct freezer net_cls perf_event cpu cpuset hugetlb net_cls,net_prio pids cpuacct devices memory net_prio systemd

[root@docker cgroup]# cd cpu

[root@docker cpu]# ls

cgroup.clone_children cpuacct.usage cpu.rt_runtime_us release_agent cgroup.event_control cpuacct.usage_percpu cpu.shares system.slice cgroup.procs cpu.cfs_period_us cpu.stat tasks cgroup.sane_behavior cpu.cfs_quota_us docker user.slice cpuacct.stat cpu.rt_period_us notify_on_release

[root@docker cpu]# cd docker/

[root@docker docker]# ls

2792c5e1151a50f6a1131ac3b687f5f4314fe85d9f6f7ba2c82f96a964135dc4 cpu.cfs_period_us 5862d63a268dde038555f211afed8bde87f72ccdbf2d7c157737dfd7f1dac9fd cpu.cfs_quota_us cgroup.clone_children cpu.rt_period_us cgroup.event_control cpu.rt_runtime_us cgroup.procs cpu.shares cpuacct.stat cpu.stat cpuacct.usage notify_on_release cpuacct.usage_percpu tasks

[root@docker docker]# cat cpu.cfs_quota_us

-1

[root@docker docker]# cd 2792c5e1151a50f6a1131ac3b687f5f4314fe85d9f6f7ba2c82f96a964135dc4/

[root@docker 2792c5e1151a50f6a1131ac3b687f5f4314fe85d9f6f7ba2c82f96a964135dc4]# ls

cgroup.clone_children cpuacct.stat cpu.cfs_period_us cpu.rt_runtime_us notify_on_release cgroup.event_control cpuacct.usage cpu.cfs_quota_us cpu.shares tasks cgroup.procs cpuacct.usage_percpu cpu.rt_period_us cpu.stat

[root@docker 2792c5e1151a50f6a1131ac3b687f5f4314fe85d9f6f7ba2c82f96a964135dc4]# cat cpu.cfs_period_us

1000000

# CPU period (time slice), in microseconds: 1000000 µs = 1 s

[root@docker 2792c5e1151a50f6a1131ac3b687f5f4314fe85d9f6f7ba2c82f96a964135dc4]# cat cpu.cfs_quota_us

-1

Remarks:

CPU period units: 1 s = 1000000 microseconds; the default CFS period is 100000 µs (0.1 s).

CPU time is allocated per period.

At any given instant, a CPU core can be occupied by only one process.
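The quota and period files can also be turned into an effective CPU count. The following sketch assumes the cgroup v1 file names shown above (cpu.cfs_quota_us and cpu.cfs_period_us) and a cgroup directory passed in as an argument; `cpu_limit` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: cpu_limit DIR
# Reads cpu.cfs_quota_us and cpu.cfs_period_us from a cgroup v1 directory and
# prints "unlimited" for a quota of -1, otherwise quota/period as a CPU count.
cpu_limit() {
  local dir="$1" quota period
  quota=$(cat "$dir/cpu.cfs_quota_us") || return 1
  period=$(cat "$dir/cpu.cfs_period_us") || return 1
  if [ "$quota" -eq -1 ]; then
    echo unlimited
  else
    awk -v q="$quota" -v p="$period" 'BEGIN { printf "%.2f\n", q / p }'
  fi
}

# Example usage on the host:
#   cpu_limit /sys/fs/cgroup/cpu/docker/<container ID>
```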


(2.1) Limiting a container's CPU usage

Take a limit of 20% of one CPU as an example:

[root@docker cpu]# docker run -itd --name centos_quota --cpu-period 100000 --cpu-quota 20000 centos:stress

[root@docker cpu]# docker inspect centos_quota
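To confirm what Docker actually applied, `docker inspect` exposes the values as HostConfig.CpuPeriod and HostConfig.CpuQuota. The following sketch turns those two numbers into a percentage of one CPU; `quota_percent` is a hypothetical helper that reads "PERIOD QUOTA" on stdin and assumes that a period of 0 means Docker left the kernel default of 100000 µs in place.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "PERIOD QUOTA" from stdin and print the limit as a
# percentage of one CPU, or "unlimited" when no quota is set (0 or -1).
quota_percent() {
  local period quota
  read -r period quota
  # A period of 0 means --cpu-period was not set: use the 100000 us default.
  if [ "$period" -le 0 ]; then
    period=100000
  fi
  if [ "$quota" -le 0 ]; then
    echo unlimited
  else
    echo $((100 * quota / period))
  fi
}

# Example usage:
#   docker inspect --format '{{.HostConfig.CpuPeriod}} {{.HostConfig.CpuQuota}}' centos_quota | quota_percent
```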

(3) CPU core control

On servers with multi-core CPUs, Docker can also control which CPU cores a container uses, via the --cpuset-cpus parameter. This is particularly useful on multi-core servers, where containers that need high-performance computing can be pinned to cores for optimal performance.

[root@docker ~]# docker run -itd --name cpu1 --cpuset-cpus 0-1 centos:stress

# This command requires the host to have at least two cores; the container can then only use cores 0 and 1.

7b97035a0750aadd848769f7f5049d5f001eef48d5eb068ec9a6c3774ebece5d

[root@docker ~]# cat /sys/fs/cgroup/cpuset/docker/7b97035a0750aadd848769f7f5049d5f001eef48d5eb068ec9a6c3774ebece5d/cpuset.cpus

0-1
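The cpuset.cpus file uses the kernel's list format, in which ranges such as 0-1 and single CPU numbers are separated by commas. The following sketch counts how many cores such a list grants; `cpuset_count` is a hypothetical helper and assumes a well-formed list.

```shell
#!/usr/bin/env bash
# Hypothetical helper: cpuset_count LIST
# Counts the CPUs in a list such as "0-1", "3", or "0-3,6-7".
cpuset_count() {
  local spec="$1" count=0 part lo hi
  local IFS=','          # split the list on commas only
  for part in $spec; do
    case "$part" in
      *-*) lo=${part%-*}; hi=${part#*-}; count=$((count + hi - lo + 1)) ;;
      *)   count=$((count + 1)) ;;
    esac
  done
  echo "$count"
}

# Example usage on the host:
#   cpuset_count "$(cat /sys/fs/cgroup/cpuset/docker/<container ID>/cpuset.cpus)"
```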

The following command shows which CPU cores a process in the container is bound to (its CPU affinity).

[root@localhost stress]# docker exec <container ID> taskset -c -p 1

# Shows the list of CPUs on which the container's first process (PID 1) is allowed to run

Summary:

There are two ways to limit a container's resources:

1. Specify resource limits with parameters when creating the container.

2. After the container is created, change its resource allocation by modifying the files under /sys/fs/cgroup/ on the host that control that container's resources.

Combining CPU control parameters

Use the --cpuset-cpus parameter so that container A uses only CPU core 0 and container B uses only CPU core 1.

If only these two containers use those cores on the host, each one occupies its whole core, and --cpu-shares has no visible effect.

The --cpuset-cpus and --cpuset-mems parameters are only meaningful on servers with multiple CPU cores and multiple memory nodes, and they must match the actual physical configuration; otherwise resource control cannot work as intended.
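Since --cpuset-cpus must match the real hardware, it can help to check a CPU list against the host's core count before starting a container. The following sketch assumes CPUs are numbered from 0, as in the examples here, and uses `nproc` for the default core count; `cpuset_fits` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: cpuset_fits LIST [NCPUS]
# Succeeds if every CPU number in LIST (e.g. "0-1" or "0,2") is below NCPUS,
# which defaults to the number of processing units reported by nproc.
cpuset_fits() {
  local list="$1" ncpus="${2:-$(nproc)}" highest
  # Split on '-' and ',' and keep the largest CPU number in the list.
  highest=$(printf '%s\n' "$list" |
    awk -F'[-,]' '{ for (i = 1; i <= NF; i++) if ($i + 0 > max) max = $i + 0 } END { print max + 0 }')
  [ -n "$list" ] && [ "$highest" -lt "$ncpus" ]
}

# Example usage:
#   cpuset_fits 0-1 && docker run -itd --cpuset-cpus 0-1 centos:stress
```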

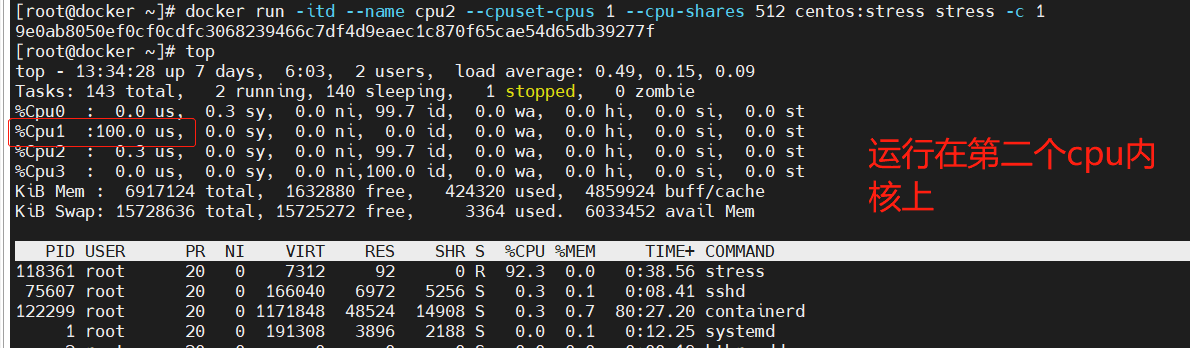

When the host has multiple CPU cores, pin the container to a specific core with --cpuset-cpus to make testing easier.

[root@docker ~]# docker run -itd --name cpu2 --cpuset-cpus 1 --cpu-shares 512 centos:stress stress -c 1

# --cpuset-cpus specifies which CPU the container runs on

9e0ab8050ef0cf0cdfc3068239466c7df4d9eaec1c870f65cae54d65db39277f

[root@docker ~]# top

(4) Memory quota

As with an operating system, the memory available to a container has two parts: physical memory and swap.

Docker controls a container's memory usage with the following two parameters.

-m or --memory: sets the memory usage limit, such as 100M or 1024M.

--memory-swap: sets the usage limit of memory plus swap.

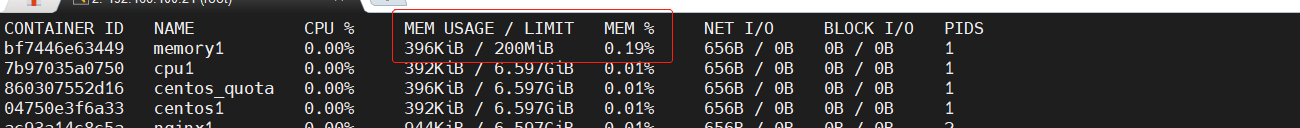

The following command lets the container use up to 200M of memory and up to 300M of memory plus swap, that is, 100M of swap.

[root@docker ~]# docker run -itd --name memory1 -m 200M --memory-swap=300M centos:stress

# By default (without -m), a container can use all free memory on the host.

# As with the CPU settings, Docker creates the corresponding cgroup configuration files for the container under /sys/fs/cgroup/memory/docker/<full long ID of the container>/
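Because --memory-swap counts memory plus swap, the swap a container may use is the difference between the two values. The following sketch handles values given in megabytes with an M suffix, plus -1 for unlimited swap; `swap_allowance` is a hypothetical helper and does not handle other units.

```shell
#!/usr/bin/env bash
# Hypothetical helper: swap_allowance MEMORY MEMORY_SWAP
# Both values use an "M" suffix (e.g. 200M 300M); MEMORY_SWAP may also be -1.
swap_allowance() {
  local mem="${1%M}" total="$2"
  if [ "$total" = "-1" ]; then
    echo unlimited
    return 0
  fi
  total="${total%M}"
  if [ "$total" -lt "$mem" ]; then
    echo "memory-swap must not be smaller than memory" >&2
    return 1
  fi
  echo "$((total - mem))M"
}

# Example usage:
#   swap_allowance 200M 300M   # the memory1 container above
```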

docker stats

(5) Block IO limits

By default, all containers read and write the disk with equal priority. You can change a container's block IO priority with the --blkio-weight parameter. Like --cpu-shares, --blkio-weight sets a relative weight; the default is 500.

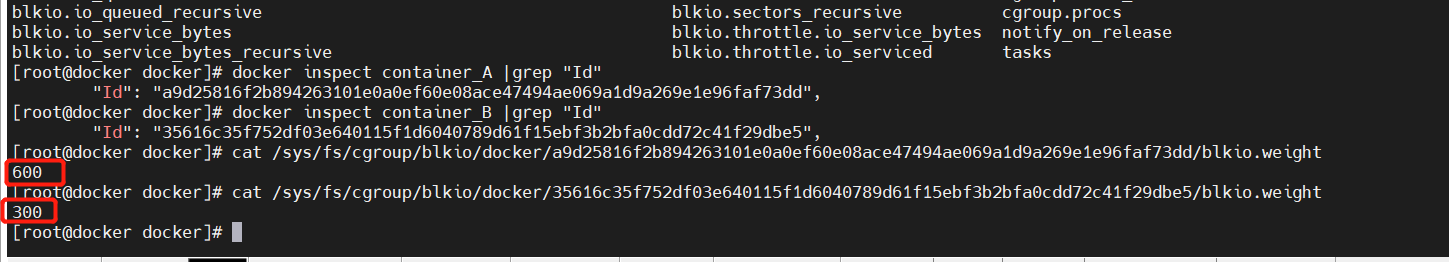

In the following example, when both containers compete for the disk, container A gets twice as much read/write bandwidth as container B.

[root@docker ~]# docker run -itd --name container_A --blkio-weight 600 centos:stress

[root@docker ~]# docker run -itd --name container_B --blkio-weight 300 centos:stress
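Docker accepts --blkio-weight values from 10 to 1000 (0 leaves the weight unset), and the ratio of two weights gives the expected bandwidth ratio when both containers compete for the same disk. The following sketch performs both checks; `blkio_weight_ok` and `weight_ratio` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if WEIGHT is 0 (unset) or within 10..1000.
blkio_weight_ok() {
  local w="$1"
  [ "$w" -eq 0 ] || { [ "$w" -ge 10 ] && [ "$w" -le 1000 ]; }
}

# Hypothetical helper: bandwidth ratio A:B implied by two weights, two decimals.
weight_ratio() {
  awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

# Example usage for container_A and container_B above:
#   blkio_weight_ok 600 && blkio_weight_ok 300 && weight_ratio 600 300
```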

(6) bps and iops limits

bps is bytes per second: the amount of data read or written per second.

iops is IO operations per second: the number of IO operations performed per second. A container's bps and iops can be controlled with the following parameters:

--device-read-bps: limits the read rate from a device, in bytes per second

--device-write-bps: limits the write rate to a device, in bytes per second

--device-read-iops: limits the read rate from a device, in IO operations per second

--device-write-iops: limits the write rate to a device, in IO operations per second

The following example limits the container's writes to /dev/sda to 5 MB/s.

Use dd inside the container to test the disk write speed. Because the container's file system is on the host's /dev/sda, writing files in the container is equivalent to writing to the host's /dev/sda. In addition, oflag=direct makes dd write in direct IO mode, bypassing the page cache, so that --device-write-bps can take effect.

The results with a 5MB/s limit, a 10MB/s limit, and no limit are as follows:

[root@docker ~]# docker run -it --device-write-bps /dev/sda:5MB centos:stress

[root@26fdd492da03 /]# dd if=/dev/zero of=test bs=1M count=10 oflag=direct

10+0 records in

10+0 records out

10485760 bytes (10 MB) copied, 2.00582 s, 5.2 MB/s

[root@26fdd492da03 /]# exit

exit

[root@docker ~]# docker run -it --device-write-bps /dev/sda:10MB centos:stress

[root@da528f6758bb /]# dd if=/dev/zero of=test bs=1M count=10 oflag=direct

10+0 records in

10+0 records out

10485760 bytes (10 MB) copied, 0.95473 s, 11.0 MB/s

[root@da528f6758bb /]# exit

exit

[root@docker ~]# docker run -it centos:stress

[root@02a55b38d0fa /]# dd if=/dev/zero of=test bs=1M count=10 oflag=direct

10+0 records in

10+0 records out

10485760 bytes (10 MB) copied, 0.00934216 s, 1.1 GB/s

[root@02a55b38d0fa /]# exit

exit
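The 5MB run is consistent with Docker reading 5MB as 5 MiB (5 × 1048576 bytes): 10485760 bytes at that rate take exactly 2 seconds, while dd reports its rate in decimal megabytes (5.2 MB/s). The following sketch computes the expected dd duration for a given limit under that binary-unit assumption; `expected_seconds` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: expected_seconds BYTES LIMIT_MIB
# Time needed to write BYTES at LIMIT_MIB mebibytes (1048576 bytes) per second.
expected_seconds() {
  awk -v bytes="$1" -v mib="$2" 'BEGIN { printf "%.2f\n", bytes / (mib * 1048576) }'
}

# Example usage for the dd runs above (bs=1M count=10 writes 10485760 bytes):
#   expected_seconds 10485760 5    # 5MB limit
#   expected_seconds 10485760 10   # 10MB limit
```

The 10MB run finished slightly faster (0.95 s) than this estimate, so for short writes the figure is only an approximation.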

Resource limits can also be specified when building images with docker build. Its options include:

--build-arg=[] : set build-time variables;

--cpu-shares : set the CPU shares (relative weight);

--cpu-period : limit the CPU CFS period;

--cpu-quota : limit the CPU CFS quota;

--cpuset-cpus : specify the IDs of the CPUs that may be used;

--cpuset-mems : specify the IDs of the memory nodes that may be used;

--disable-content-trust : skip image verification (enabled by default);

-f : path of the Dockerfile to use;

--force-rm : always remove intermediate containers during the build;

--isolation : container isolation technology to use;

--label=[] : set metadata for the image;

-m : set the maximum memory;

--memory-swap : set the maximum of memory plus swap; "-1" means unlimited swap;

--no-cache : do not use the cache when building the image;

--pull : always try to pull a newer version of the image;

--quiet, -q : quiet mode; only print the image ID after a successful build;

--rm : remove intermediate containers after a successful build;

--shm-size : set the size of /dev/shm; the default is 64M;

--ulimit : ulimit options;

--squash : squash all the layers produced by the Dockerfile into one layer;

--tag, -t : name and tag of the image, usually in name:tag or name format; multiple tags can be set for an image in a single build;

--network : networking mode for RUN instructions during the build (default "default").