I Broker Load overview

Broker load is an asynchronous import method. The supported data sources depend on the data sources supported by the broker process.

The user needs to create a Broker load import through MySQL protocol and check the import results by viewing the Import command.

Applicable scenarios:

The source data is in a storage system accessible to the Broker, such as HDFS.

The amount of data ranges from tens to hundreds of GB.

Explanation of terms:

- Frontend (FE): metadata and scheduling node of Doris system. In the import process, it is mainly responsible for the generation of import plan s and the scheduling of import tasks.

- Backend (BE): the computing and storage node of Doris system. In the import process, it is mainly responsible for ETL and storage of data.

- Broker: broker is an independent stateless process. It encapsulates the file system interface and provides Doris with the ability to read files in the remote storage system.

- Plan: import the execution plan. BE will execute the import execution plan and import the data into Doris system.

Basic principle:

After the user submits the import task, the FE will generate the corresponding Plan and distribute the Plan to multiple bes for execution according to the current number of BES and file size, and each BE will execute part of the imported data.

BE will pull data from the Broker during execution, and import the data into the system after transform ing the data. All BE are imported, and FE will finally decide whether the import is successful.

+

| 1. user create broker load

v

+----+----+

| |

| FE |

| |

+----+----+

|

| 2. BE etl and load the data

+--------------------------+

| | |

+---v---+ +--v----+ +---v---+

| | | | | |

| BE | | BE | | BE |

| | | | | |

+---+-^-+ +---+-^-+ +--+-^--+

| | | | | |

| | | | | | 3. pull data from broker

+---v-+-+ +---v-+-+ +--v-+--+

| | | | | |

|Broker | |Broker | |Broker |

| | | | | |

+---+-^-+ +---+-^-+ +---+-^-+

| | | | | |

+---v-+-----------v-+----------v-+-+

| HDFS/BOS/AFS cluster |

| |

+----------------------------------+

II basic operation

2.1 create import

Syntax:

LOAD LABEL db_name.label_name

(data_desc, ...)

WITH BROKER broker_name broker_properties

[PROPERTIES (key1=value1, ... )]

* data_desc:

DATA INFILE ('file_path', ...)

[NEGATIVE]

INTO TABLE tbl_name

[PARTITION (p1, p2)]

[COLUMNS TERMINATED BY separator ]

[(col1, ...)]

[PRECEDING FILTER predicate]

[SET (k1=f1(xx), k2=f2(xx))]

[WHERE predicate]

* broker_properties:

(key1=value1, ...)

Example:

LOAD LABEL db1.label1

(

DATA INFILE("hdfs://abc.com:8888/user/palo/test/ml/file1")

INTO TABLE tbl1

COLUMNS TERMINATED BY ","

(tmp_c1,tmp_c2)

SET

(

id=tmp_c2,

name=tmp_c1

),

DATA INFILE("hdfs://abc.com:8888/user/palo/test/ml/file2")

INTO TABLE tbl2

COLUMNS TERMINATED BY ","

(col1, col2)

where col1 > 1

)

WITH BROKER 'broker'

(

"username"="user",

"password"="pass"

)

PROPERTIES

(

"timeout" = "3600"

);

Create the imported detailed syntax, execute HELP BROKER LOAD to view the syntax help. This section mainly introduces the meaning of parameters and precautions in the creation and import syntax of Broker load.

2.1.1 Label

ID of the import task. Each import task has a unique label within a single database. Label is a user-defined name in the Import command. Through this label, users can view the execution of the corresponding import task.

Another function of label is to prevent users from importing the same data repeatedly. It is strongly recommended that users use the same label for the same batch of data. In this way, repeated requests for the same batch of data will only be accepted once, ensuring the at most once semantics

When the status of the import job corresponding to the Label is CANCELLED, you can use the Label to submit the import job again.

2.1.2 data description parameters

The data description class parameter mainly refers to the data in the Broker load creation and import statement_ Parameters of desc section. Data per group_ Desc mainly describes the data source address, ETL function, target table, partition and other information involved in this import.

Some parameters of the data description class are explained in detail below:

-

Multi table import

Broker load supports that one import task involves multiple tables. Each broker load import task can import data in multiple tables_ Desc declares multiple tables to implement multi table import. Each individual data_desc can also specify the data source address belonging to the table. Broker load ensures the success or failure of atomicity between multiple tables imported in a single time. -

negative

data_desc can also set data inversion and import. This function is mainly used when the aggregate columns in the data table are of SUM type. If you want to undo a batch of imported data. You can import the same batch of data through the negative parameter. Doris will automatically reverse the data in the aggregation column for this batch of data to eliminate the same batch of data. -

partition

In data_ The partition information of the table to be imported can be specified in desc. If the data to be imported does not belong to the specified partition, it will not be imported. At the same time, data that is not in the specified partition will be considered as error data. -

set column mapping

In data_ The SET statement in desc is responsible for setting the column function transformation, which supports the equivalent expression transformation of all queries. This attribute is required if the columns of the original data do not correspond to the columns in the table one by one. -

preceding filter predicate

Used to filter raw data. The original data is the data without column mapping and conversion. Users can filter the data before conversion, select the desired data, and then convert. -

where predicate

In data_ The WHERE statement in desc is responsible for filtering the transform ed data, AND the filtered data will not enter the statistics of tolerance rate. If multiple data_ If multiple conditions of the same table are declared in DESC, multiple conditions of the same table will be merged. The merge policy is AND.

2.1.3 import operation parameters

The import job parameter mainly refers to the opt in the Broker load create import statement_ Parameters in the Properties section. Import job parameters are applied to the entire import job.

Some parameters of import job parameters are explained in detail below:

-

timeout

The timeout (in seconds) of the import job, which can be accessed by the user in Opt_ Set the timeout for each import in properties. If the import task is not completed within the set timeout time, it will be CANCELLED by the system and become CANCELLED. The default import timeout for Broker load is 4 hours.

Generally, users do not need to manually set the timeout of import tasks. When the import cannot be completed within the default timeout, you can manually set the timeout of the task. -

max_filter_ratio

The maximum tolerance rate of import task. The default value is 0. The value range is 0 ~ 1. When the import error rate exceeds this value, the import fails.

If you want to ignore the wrong rows, you can set this parameter greater than 0 to ensure that the import can succeed.

The calculation formula is:

max_filter_ratio = (dpp.abnorm.ALL / (dpp.abnorm.ALL + dpp.norm.ALL ) )

dpp.abnorm.ALL indicates the number of rows with unqualified data quality. Such as type mismatch, column number mismatch, length mismatch, etc.

dpp.norm.ALL refers to the number of correct data in the import process. You can query the correct data amount of the import task through the SHOW LOAD command.

Number of lines in the original file = DPP abnorm. ALL + dpp. norm. ALL -

exec_mem_limit

Import memory limit. The default is 2GB. The unit is bytes. -

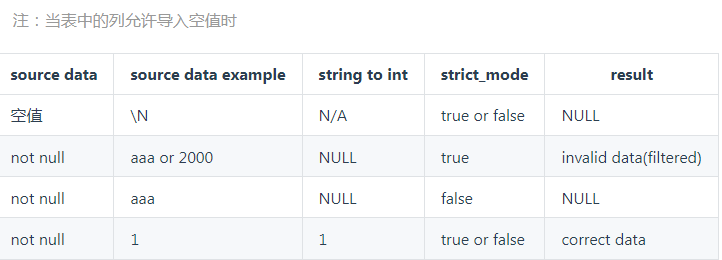

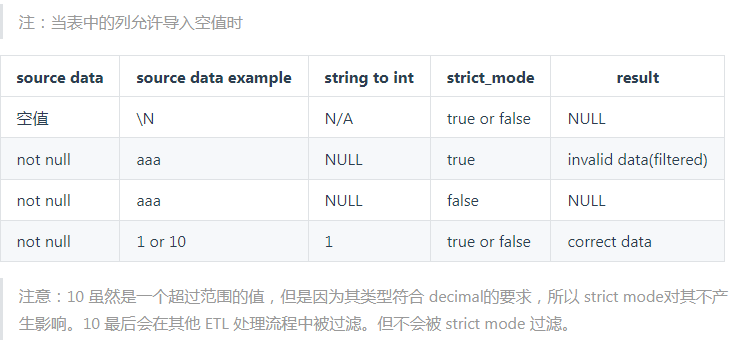

strict_mode

Broker load import enables strict mode. The opening method is properties ("strict_mode" = "true"). The default strict mode is off.

strict mode mode means that column type conversion during import is strictly filtered. The strict filtering strategy is as follows:

4.1) for column type conversion, if strict mode is true, the wrong data will be filter ed. The error data here refers to the data whose original data is not null and whose result is null after participating in column type conversion.

4.2) when an imported column is generated by function transformation, strict mode does not affect it.

4.3) if the imported column type contains range limit, if the original data can pass the type conversion normally, but cannot pass the range limit, strict mode will not affect it. For example, if the type is decimal(1,0) and the original data is 10, it belongs to the scope that can be converted by type but is not within the scope of column declaration. This data strict has no effect on it. -

merge_type

There are three types of data consolidation types: APPEND, DELETE and MERGE. APPEND is the default value, which means that all this batch of data needs to be added to the existing data. DELETE means to DELETE all rows with the same key as this batch of data. MERGE semantics needs to be used in combination with the DELETE condition, The data that meets the DELETE condition is processed according to the DELETE semantics, and the rest are processed according to the APPEND semantics.

2.1.4 import relationship between strict mode and source data

Here, take TinyInt as an example:

Here, take Decimal(1,0) as an example:

2.2 viewing and importing

Because the Broker load import method is asynchronous, the user must create the imported Label record and use the Label in the view Import command to view the import results. The view Import command is common in all import methods. The specific syntax can be viewed by executing HELP SHOW LOAD.

Example:

mysql> show load order by createtime desc limit 1\G

*************************** 1. row ***************************

JobId: 76391

Label: label1

State: FINISHED

Progress: ETL:N/A; LOAD:100%

Type: BROKER

EtlInfo: unselected.rows=4; dpp.abnorm.ALL=15; dpp.norm.ALL=28133376

TaskInfo: cluster:N/A; timeout(s):10800; max_filter_ratio:5.0E-5

ErrorMsg: N/A

CreateTime: 2019-07-27 11:46:42

EtlStartTime: 2019-07-27 11:46:44

EtlFinishTime: 2019-07-27 11:46:44

LoadStartTime: 2019-07-27 11:46:44

LoadFinishTime: 2019-07-27 11:50:16

URL: http://192.168.1.1:8040/api/_load_error_log?file=__shard_4/error_log_insert_stmt_4bb00753932c491a-a6da6e2725415317_4bb00753932c491a_a6da6e2725415317

JobDetails: {"Unfinished backends":{"9c3441027ff948a0-8287923329a2b6a7":[10002]},"ScannedRows":2390016,"TaskNumber":1,"All backends":{"9c3441027ff948a0-8287923329a2b6a7":[10002]},"FileNumber":1,"FileSize":1073741824}

The following mainly describes the meaning of viewing the parameters in the result set returned by the Import command:

-

JobId

The unique ID of the import task. The JobId of each import task is different and is automatically generated by the system. Different from Label, JobId will never be the same, while Label can be reused after the import task fails. -

Label

ID of the import task. -

State

The current phase of the import task. During Broker load import, the status of PENDING and LOADING will appear. If the Broker load is in PENDING status, it indicates that the current import task is waiting to be executed; The LOADING status indicates that it is being executed.

There are two final stages of the import task: canned and FINISHED. When the Load job is in these two stages, the import is completed. Where CANCELLED is import failure and FINISHED is import success. -

Progress

The progress description of the import task. There are two kinds of progress: ETL and LOAD, which correspond to the two stages of the import process: ETL and LOADING. At present, the Broker load has only the LOADING stage, so the ETL will always be displayed as N/A

The progress range of LOAD is 0 ~ 100%.

LOAD progress = number of tables currently imported / total number of tables designed for this import task * 100%

If all import tables are imported, the LOAD progress will be 99% and the import will enter the final effective stage. After the whole import is completed, the LOAD progress will be changed to 100%.

Import progress is not linear. Therefore, if the progress does not change over a period of time, it does not mean that the import is not being executed. -

Type

Type of import task. The type value of Broker load is only BROKER. -

EtlInfo

It mainly displays the imported data volume indicator unselected rows , dpp. norm. All and DPP abnorm. ALL. The user can judge how many rows are filtered by the where condition according to the first value, and the last two indicators verify whether the error rate of the current import task exceeds max_filter_ratio.

The sum of the three indicators is the total number of original data. -

TaskInfo

It mainly displays the current import task parameters, that is, the import task parameters specified by the user when creating the Broker load import task, including cluster, timeout and max_filter_ratio. -

ErrorMsg

When the import task status is CANCELLED, the reason for failure will be displayed. The display is divided into two parts: type and msg. If the import task is successful, N/A will be displayed.

Value meaning of type:

8.1) USER_CANCEL: task cancelled by user

8.2) ETL_RUN_FAIL: failed import task in ETL phase

8.3) ETL_QUALITY_UNSATISFIED: the data quality is unqualified, that is, the error data rate exceeds max_filter_ratio

8.4) LOAD_RUN_FAIL: failed import task in LOADING phase

8.5) TIMEOUT: the import task is not completed within the timeout

8.6) UNKNOWN: unknown import error -

CreateTime/EtlStartTime/EtlFinishTime/LoadStartTime/LoadFinishTime

These values represent the time when the import is created, the time when the ETL phase starts, the time when the ETL phase completes, the time when the Loading phase starts, and the time when the entire import task completes.

The Broker load import has no ETL phase, so its etlstarttime, etlfinishtime and loadstarttime are set to the same value.

If the import task stays at CreateTime for a long time, and LoadStartTime is N/A, it indicates that the current import task accumulation is serious. Users can reduce the frequency of import submission.

LoadFinishTime - CreateTime = Time consumed by the entire import task LoadFinishTime - LoadStartTime = whole Broker load Import task execution time = Time consumed by the entire import task - Waiting time for import task

-

URL

The error data sample of the import task can be obtained by accessing the URL address. When there is no error data in this import, the URL field is N/A. -

JobDetails

Displays the detailed running status of some jobs. Including the number of imported files, total size (bytes), number of subtasks, number of original lines processed, BE node Id of running subtasks, and BE node Id of unfinished tasks.

The number of original rows processed is updated every 5 seconds. This number of lines is only used to show the current progress and does not represent the final actual number of lines processed. The actual number of lines processed shall be subject to that displayed in etlininfo.

2.3 cancel import

When the Broker load job status is not CANCELLED or completed, it can be manually CANCELLED by the user. When canceling, you need to specify the Label of the import task to be CANCELLED. To cancel the Import command syntax, execute HELP CANCEL LOAD to view.

mysql> HELP CANCEL LOAD;

Name: 'CANCEL LOAD'

Description:

This statement is used to undo the specified load label Import job for batch.

This is an asynchronous operation. If the task is submitted successfully, it will be returned. Available after execution SHOW LOAD Command to view progress.

Syntax:

CANCEL LOAD

[FROM db_name]

WHERE LABEL = "load_label";

Examples:

1. Undo database example_db Up, label by example_db_test_load_label Import job for

CANCEL LOAD

FROM example_db

WHERE LABEL = "example_db_test_load_label";

mysql>

III Related system configuration

3.1 FE configuration

The following configurations belong to the system level configuration of Broker load, that is, the configuration used for all Broker load import tasks. Mainly by modifying Fe Conf to adjust the configuration value.

min_bytes_per_broker_scanner/max_bytes_per_broker_scanner/max_broker_concurrency

The first two configurations limit the minimum and maximum amount of data processed by a single BE. The third configuration limits the maximum number of concurrent imports for a job. The minimum amount of data processed, the maximum number of concurrent files, the size of source files and the number of current cluster bes jointly determine the number of concurrent files imported this time.

Concurrent number of this import = Math.min(Source file size/Minimum processing capacity, maximum concurrent number, current BE Number of nodes) Single import this time BE Processing capacity of = Source file size/Concurrent number of this import

Generally, the maximum amount of data supported by an import job is max_bytes_per_broker_scanner * number of be nodes. If you need to import a larger amount of data, you need to adjust Max appropriately_ bytes_ per_ broker_ The size of the scanner parameter.

Default configuration:

Parameter name: min_bytes_per_broker_scanner, Default 64 MB,Company bytes. Parameter name: max_broker_concurrency, The default is 10. Parameter name: max_bytes_per_broker_scanner,Default 3 G,Company bytes.

IV case

4.1 import Hive orc format table

Creating tables on doris side

CREATE TABLE broker_test1

(

id bigint,

sale_date varchar(100),

prod_name varchar(32),

sale_nums BIGINT SUM DEFAULT '0'

) ENGINE=OLAP

AGGREGATE KEY(id,sale_date,prod_name)

DISTRIBUTED BY HASH(id) BUCKETS 10

PROPERTIES("replication_num" = "3");

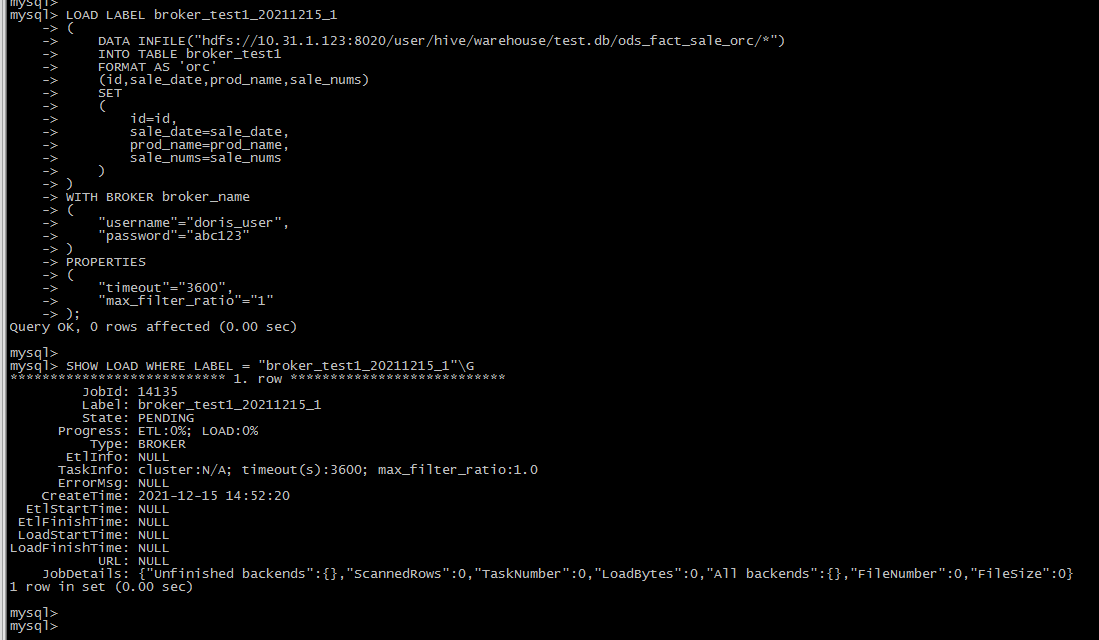

Prepare the load command

LOAD LABEL broker_test1_20211215_1

(

DATA INFILE("hdfs://10.31.1.123:8020/user/hive/warehouse/test.db/ods_fact_sale_orc/*")

INTO TABLE broker_test1

FORMAT AS 'orc'

(id,sale_date,prod_name,sale_nums)

SET

(

id=id,

sale_date=sale_date,

prod_name=prod_name,

sale_nums=sale_nums

)

)

WITH BROKER broker_name

(

"username"="doris_user",

"password"="abc123"

)

PROPERTIES

(

"timeout"="3600",

"max_filter_ratio"="1"

);

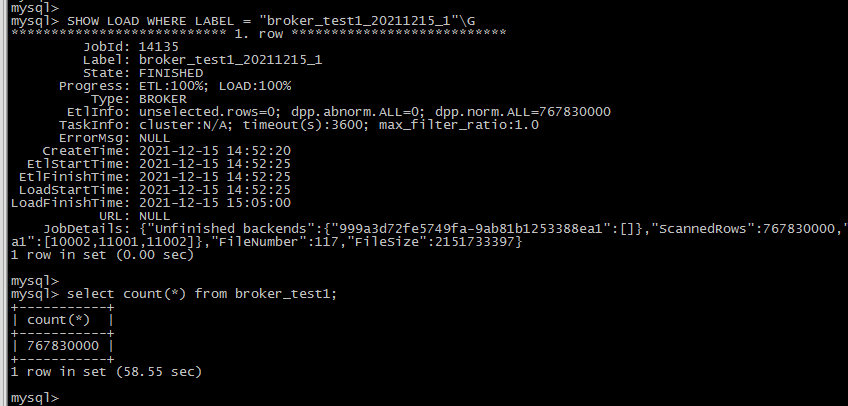

View the progress of the import

SHOW LOAD WHERE LABEL = "broker_test1_20211215_1"\G

Waiting for execution to complete:

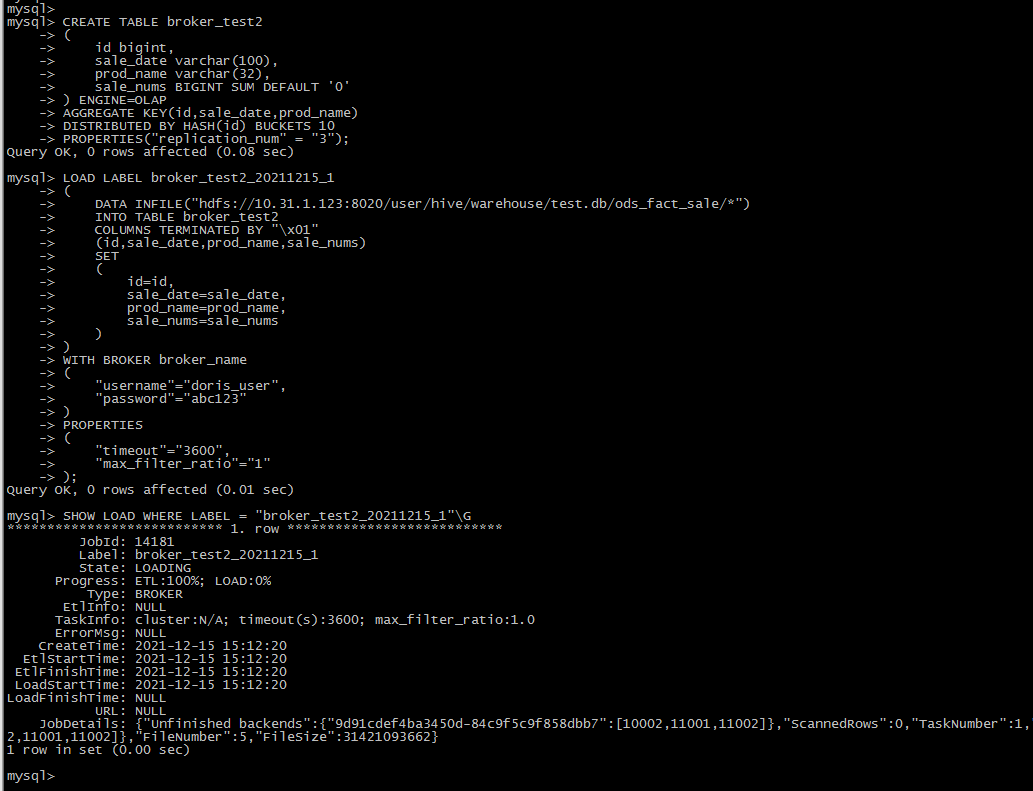

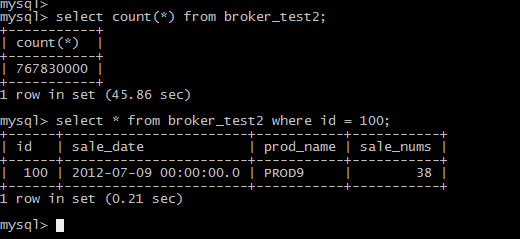

4.2 import Hive text format table

Creating tables on doris side

CREATE TABLE broker_test2

(

id bigint,

sale_date varchar(100),

prod_name varchar(32),

sale_nums BIGINT SUM DEFAULT '0'

) ENGINE=OLAP

AGGREGATE KEY(id,sale_date,prod_name)

DISTRIBUTED BY HASH(id) BUCKETS 10

PROPERTIES("replication_num" = "3");

Prepare the load command

LOAD LABEL broker_test2_20211215_4

(

DATA INFILE("hdfs://10.31.1.123:8020/user/hive/warehouse/ods_fact_sale/*")

INTO TABLE broker_test2

COLUMNS TERMINATED BY "\\x01"

(id,sale_date,prod_name,sale_nums)

SET

(

id=id,

sale_date=sale_date,

prod_name=prod_name,

sale_nums=sale_nums

)

)

WITH BROKER broker_name

(

"username"="doris_user",

"password"="abc123"

)

PROPERTIES

(

"timeout"="3600",

"max_filter_ratio"="1"

);

View the progress of the import

SHOW LOAD WHERE LABEL = "broker_test2_20211215_4"\G

reference resources:

- https://doris.apache.org/master/zh-CN/administrator-guide/load-data/broker-load-manual.html