This article covers the deployment modes and configuration management of DPVS in FullNAT mode on CentOS 7.9, including IPv4-IPv4, bonding, IPv6-IPv6, IPv6-IPv4 (NAT64) and keepalived modes.

The following configurations are all based on two-arm mode, and the TOA module that ships with DPVS has already been installed on the RS machines. We start with a simple IPv4 configuration on a single network card, then the bonding configuration, then a simple IPv6 configuration and the NAT64 configuration, and finally use keepalived to configure active-standby mode.

The DPVS version installed in this article is 1.8-10 and the DPDK version is 18.11.2. The detailed installation process was described in the previous article, "DPVS fullnat mode deployment" (TinyChen's Studio), and is not repeated here.

1. IPv4 simple configuration

1.1 architecture diagram

The first is the simplest configuration method, which directly uses the command line operation of ipvsadm to realize the FullNat mode of an IPv4 network. The architecture diagram is as follows:

![Architecture diagram: two-arm FullNAT, IPv4](https://resource.tinychen.com/20210806163652.svg)

Here, we use the dpdk2 network card as the wan port and the dpdk0 network card as the lan port

1.2 configuration process

# First, we add VIP 10.0.96.204 to the dpdk2 network card (wan)

$ dpip addr add 10.0.96.204/32 dev dpdk2

# Next, we need to add two routes: one for the wan port network segment and one for the RS network segment

$ dpip route add 10.0.96.0/24 dev dpdk2

$ dpip route add 192.168.229.0/24 dev dpdk0

# It is best to also add a default route via the gateway so that replies to ICMP packets can get back

$ dpip route add default via 10.0.96.254 dev dpdk2

# Establish forwarding rules using RR algorithm

# add service <VIP:vport> to forwarding, scheduling mode is RR.

# use ipvsadm --help for more info.

$ ipvsadm -A -t 10.0.96.204:80 -s rr

# Here, for the convenience of testing, we only add one RS

# add an RS for the service, forwarding mode is FNAT (-b)

$ ipvsadm -a -t 10.0.96.204:80 -r 192.168.229.1 -b

# Add a Local IP (LIP), which FNAT mode requires

# add at least one Local-IP (LIP) for FNAT on LAN interface

$ ipvsadm --add-laddr -z 192.168.229.204 -t 10.0.96.204:80 -F dpdk0

# Then let's see the effect

$ dpip route show

inet 192.168.229.204/32 via 0.0.0.0 src 0.0.0.0 dev dpdk0 mtu 1500 tos 0 scope host metric 0 proto auto

inet 10.0.96.204/32 via 0.0.0.0 src 0.0.0.0 dev dpdk2 mtu 1500 tos 0 scope host metric 0 proto auto

inet 10.0.96.0/24 via 0.0.0.0 src 0.0.0.0 dev dpdk2 mtu 1500 tos 0 scope link metric 0 proto auto

inet 192.168.229.0/24 via 0.0.0.0 src 0.0.0.0 dev dpdk0 mtu 1500 tos 0 scope link metric 0 proto auto

inet 0.0.0.0/0 via 10.0.96.254 src 0.0.0.0 dev dpdk2 mtu 1500 tos 0 scope global metric 0 proto auto

$ dpip addr show

inet 10.0.96.204/32 scope global dpdk2

valid_lft forever preferred_lft forever

inet 192.168.229.204/32 scope global dpdk0

valid_lft forever preferred_lft forever

$ ipvsadm -ln

IP Virtual Server version 0.0.0 (size=0)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.0.96.204:80 rr

-> 192.168.229.1:80 FullNat 1 0 0

$ ipvsadm -G

VIP:VPORT TOTAL SNAT_IP CONFLICTS CONNS

10.0.96.204:80 1

192.168.229.204 0 0

Then we start nginx on the RS, configure it to return the client IP and port number, and test the VIP directly with the ping and curl commands:

$ ping -c4 10.0.96.204

PING 10.0.96.204 (10.0.96.204) 56(84) bytes of data.

64 bytes from 10.0.96.204: icmp_seq=1 ttl=54 time=47.2 ms

64 bytes from 10.0.96.204: icmp_seq=2 ttl=54 time=48.10 ms

64 bytes from 10.0.96.204: icmp_seq=3 ttl=54 time=48.5 ms

64 bytes from 10.0.96.204: icmp_seq=4 ttl=54 time=48.5 ms

--- 10.0.96.204 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 8ms

rtt min/avg/max/mdev = 47.235/48.311/48.969/0.684 ms

$ curl 10.0.96.204

Your IP and port is 172.16.0.1:62844

1.3 summary

This mode is very simple and can be configured quickly to check whether DPVS works properly on your machine. However, it is rarely used in practice because it is a single point of failure.
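For repeatable testing, the commands from section 1.2 can be gathered into one helper. The following is a minimal sketch that only prints the commands (a dry run) so they can be reviewed before anything is executed. The function name fnat_v4_cmds and its argument order are assumptions made for this illustration and are not part of DPVS.

```shell
# Dry run: print the dpip/ipvsadm commands from section 1.2 without running them.
# fnat_v4_cmds and its argument order are hypothetical, chosen for this sketch.
# Args: VIP VPORT WAN_DEV WAN_NET GATEWAY LAN_DEV LAN_NET LIP RS
fnat_v4_cmds() (
    vip=$1; vport=$2; wan_dev=$3; wan_net=$4; gw=$5
    lan_dev=$6; lan_net=$7; lip=$8; rs=$9
    echo "dpip addr add ${vip}/32 dev ${wan_dev}"            # VIP on the wan port
    echo "dpip route add ${wan_net} dev ${wan_dev}"          # wan network segment
    echo "dpip route add ${lan_net} dev ${lan_dev}"          # RS network segment
    echo "dpip route add default via ${gw} dev ${wan_dev}"   # default route
    echo "ipvsadm -A -t ${vip}:${vport} -s rr"               # service with RR scheduling
    echo "ipvsadm -a -t ${vip}:${vport} -r ${rs} -b"         # RS in FNAT mode
    echo "ipvsadm --add-laddr -z ${lip} -t ${vip}:${vport} -F ${lan_dev}"  # LIP
)

# Example with the addresses used in section 1.2:
# fnat_v4_cmds 10.0.96.204 80 dpdk2 10.0.96.0/24 10.0.96.254 \
#              dpdk0 192.168.229.0/24 192.168.229.204 192.168.229.1
```

Printing instead of executing keeps the sketch safe to run on a machine without DPVS; the printed lines can then be pasted into a shell on the DPVS host.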

2. Bonding configuration

At present, DPVS supports bonding mode 4 and bonding mode 0, which are configured in basically the same way. For the configuration method, refer to the sample file dpvs/conf/dpvs.conf.single-bond.sample.

When configuring bonding, you do not need to specify kni_name for the slave network cards (such as dpdk0); instead, specify kni_name in the corresponding bonding block. Also note that the MAC address of the network card given by the primary parameter generally becomes the MAC address of the bonding network card.

![Architecture diagram: two-arm FullNAT with bonding mode 4](https://resource.tinychen.com/dpvs-two-arm-fnat-bond4-fakeip.drawio.svg)

! netif config

netif_defs {

<init> pktpool_size 1048575

<init> pktpool_cache 256

<init> device dpdk0 {

rx {

max_burst_size 32

queue_number 16

descriptor_number 1024

rss all

}

tx {

max_burst_size 32

queue_number 16

descriptor_number 1024

}

fdir {

mode perfect

pballoc 64k

status matched

}

! mtu 1500

! promisc_mode

! kni_name dpdk0.kni

}

<init> device dpdk1 {

rx {

max_burst_size 32

queue_number 16

descriptor_number 1024

rss all

}

tx {

max_burst_size 32

queue_number 16

descriptor_number 1024

}

fdir {

mode perfect

pballoc 64k

status matched

}

! mtu 1500

! promisc_mode

! kni_name dpdk1.kni

}

<init> device dpdk2 {

rx {

max_burst_size 32

queue_number 16

descriptor_number 1024

rss all

}

tx {

max_burst_size 32

queue_number 16

descriptor_number 1024

}

fdir {

mode perfect

pballoc 64k

status matched

}

! mtu 1500

! promisc_mode

! kni_name dpdk2.kni

}

<init> device dpdk3 {

rx {

max_burst_size 32

queue_number 16

descriptor_number 1024

rss all

}

tx {

max_burst_size 32

queue_number 16

descriptor_number 1024

}

fdir {

mode perfect

pballoc 64k

status matched

}

! mtu 1500

! promisc_mode

! kni_name dpdk3.kni

}

<init> bonding bond1 {

mode 4

slave dpdk0

slave dpdk1

primary dpdk0

kni_name bond1.kni

}

<init> bonding bond2 {

mode 4

slave dpdk2

slave dpdk3

primary dpdk2

kni_name bond2.kni

}

}

Then, when configuring each worker CPU, note that the port should select the corresponding bond network card

<init> worker cpu1 {

type slave

cpu_id 1

port bond1 {

rx_queue_ids 0

tx_queue_ids 0

}

port bond2 {

rx_queue_ids 0

tx_queue_ids 0

}

}

The bonding configuration in DPVS works the same way as bonding configured directly in Linux: once bonding is set up successfully, you only need to operate on the resulting bonding network card. Use the dpip command to check the working state of each network card. In the example below, the network cards run at full duplex and 20000 Mbps, the MTU is 1500, and 16 transmit/receive queues are configured in DPVS.

$ dpip link show

5: bond1: socket 0 mtu 1500 rx-queue 15 tx-queue 16

UP 20000 Mbps full-duplex auto-nego

addr AA:BB:CC:11:22:33

6: bond2: socket 0 mtu 1500 rx-queue 15 tx-queue 16

UP 20000 Mbps full-duplex auto-nego

addr AA:BB:CC:12:34:56

3. IPv6 simple configuration

3.1 DPVS configuration process

The simple configuration method of IPv6 is the same as that of IPv4, except that the corresponding IPv4 address is replaced by IPv6 address. At the same time, it should be noted that when specifying the port of IPv6 address, you need to use [] to enclose the IP address.
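The bracket rule can be captured in a small helper. This is a minimal sketch, not something DPVS provides: fmt_endpoint is a hypothetical name, and it assumes that any address containing a colon is IPv6.

```shell
# Format ADDRESS PORT for the -t/-r options of ipvsadm.
# Assumption: an address containing ':' is IPv6 and must be wrapped in [].
fmt_endpoint() {
    case $1 in
        *:*) printf '[%s]:%s\n' "$1" "$2" ;;   # IPv6 -> [addr]:port
        *)   printf '%s:%s\n' "$1" "$2" ;;     # IPv4 -> addr:port
    esac
}

# Example:
# ipvsadm -A -t "$(fmt_endpoint 2001::201 80)" -s rr
```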

![Architecture diagram: two-arm FullNAT, IPv6](https://resource.tinychen.com/dpvs-two-arm-fnat-ipv6-fakeip.drawio.svg)

# Add VIP and related routes

$ dpip addr add 2001::201/128 dev bond2

$ dpip route -6 add 2001::/64 dev bond2

$ dpip route -6 add 2407::/64 dev bond1

$ dpip route -6 add default via 2001::1 dev bond2

# Configure ipvsadm and RS

$ ipvsadm -A -t [2001::201]:80 -s rr

$ ipvsadm -a -t [2001::201]:80 -r [2407::1]:80 -b

$ ipvsadm -a -t [2001::201]:80 -r [2407::2]:80 -b

# Add IPv6 LIP

$ ipvsadm --add-laddr -z 2407::201 -t [2001::201]:80 -F bond1

$ dpip addr show

inet6 2407::201/128 scope global bond1

valid_lft forever preferred_lft forever

inet6 2001::201/128 scope global bond2

valid_lft forever preferred_lft forever

$ dpip route -6 show

inet6 2001::201/128 dev bond2 mtu 1500 scope host

inet6 2407::201/128 dev bond1 mtu 1500 scope host

inet6 2407::/64 dev bond1 mtu 1500 scope link

inet6 2001::/64 dev bond2 mtu 1500 scope link

inet6 default via 2001::1 dev bond2 mtu 1500 scope global

$ ipvsadm -Ln

IP Virtual Server version 0.0.0 (size=0)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP [2001::201]:80 rr

-> [2407::1]:80 FullNat 1 0 0

-> [2407::2]:80 FullNat 1 0 0

3.2 effect test

Test the effect and confirm that nginx on RS can normally return to the real IP and port of the client, indicating that the configuration is normal.

$ curl -6 "http://\[2001::201\]"

Your IP and port is [2408::1]:38383

4. NAT64 configuration

4.1 DPVS configuration process

The architecture diagram is not much different from the previous two, but the IP is slightly different

![Architecture diagram: two-arm FullNAT, NAT64](https://resource.tinychen.com/dpvs-two-arm-fnat-nat64-fakeip.drawio.svg)

# Add VIP and related routes

$ dpip addr add 2001::201/128 dev bond2

$ dpip route -6 add 2001::/64 dev bond2

$ dpip route add 192.168.229.0/23 dev bond1

$ dpip route -6 add default via 2001::1 dev bond2

# Configure ipvsadm and RS

$ ipvsadm -A -t [2001::201]:80 -s rr

$ ipvsadm -a -t [2001::201]:80 -r 192.168.229.1:80 -b

$ ipvsadm -a -t [2001::201]:80 -r 192.168.229.2:80 -b

# Add IPv4 LIP (in NAT64 mode the LIP is on the IPv4 RS side)

$ ipvsadm --add-laddr -z 192.168.229.201 -t [2001::201]:80 -F bond1

$ dpip addr show

inet6 2001::201/128 scope global bond2

valid_lft forever preferred_lft forever

inet 192.168.229.201/32 scope global bond1

valid_lft forever preferred_lft forever

$ dpip route show

inet 192.168.229.201/32 via 0.0.0.0 src 0.0.0.0 dev bond1 mtu 1500 tos 0 scope host metric 0 proto auto

inet 192.168.229.0/23 via 0.0.0.0 src 0.0.0.0 dev bond1 mtu 1500 tos 0 scope link metric 0 proto auto

$ dpip route -6 show

inet6 2001::201/128 dev bond2 mtu 1500 scope host

inet6 2001::/64 dev bond2 mtu 1500 scope link

inet6 default via 2001::1 dev bond2 mtu 1500 scope global

$ ipvsadm -ln

IP Virtual Server version 0.0.0 (size=0)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP [2001::201]:80 rr

-> 192.168.229.1:80 FullNat 1 0 0

-> 192.168.229.2:80 FullNat 1 0 0

Test effect

$ curl -6 "http://\[2001::201\]"

Your IP and port is 192.168.229.201:1035

As the test result above shows, in NAT64 mode the client's source IP address cannot be obtained even with the TOA module installed; every client IP and port appears as the LIP and the port used when forwarding. If you do not need the source IP, this can be ignored. If you do, the application running on the RS has to be modified. Let's take nginx as an example.

4.2 NGINX supports NAT64

dpvs also provides a nat64 toa module for nginx. When VIP is ipv6 and RS is ipv4, you can use this module to obtain the user's real ipv6 address in nginx. We need to apply this patch before compiling and installing nginx.

Judging from the official file name, the patch was made against version 1.14.0. With nginx 1.14.0 the patch applies cleanly, and the subsequent compilation and installation also proceed normally.

[root@tiny-centos7 nginx-1.14.0]# pwd

/home/nginx-1.14.0

[root@tiny-centos7 nginx-1.14.0]# ls

auto CHANGES CHANGES.ru conf configure contrib html LICENSE man nginx-1.14.0-nat64-toa.patch README src

[root@tiny-centos7 nginx-1.14.0]# cp /home/dpvs/kmod/toa/example_nat64/nginx/nginx-1.14.0-nat64-toa.patch ./

[root@tiny-centos7 nginx-1.14.0]# patch -p 1 < nginx-1.14.0-nat64-toa.patch

patching file src/core/ngx_connection.h

patching file src/core/ngx_inet.h

patching file src/event/ngx_event_accept.c

patching file src/http/ngx_http_variables.c

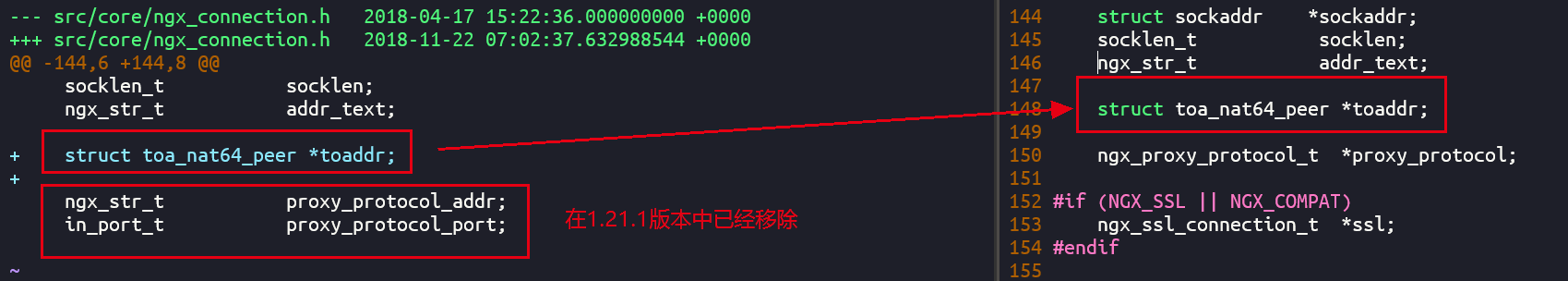

Errors will be reported when using the latest nginx-1.21.1 version

[root@tiny-centos7 nginx-1.21.1]# cp /home/dpvs/kmod/toa/example_nat64/nginx/nginx-1.14.0-nat64-toa.patch ./

[root@tiny-centos7 nginx-1.21.1]# pwd

/home/nginx-1.21.1

[root@tiny-centos7 nginx-1.21.1]# ls

auto CHANGES CHANGES.ru conf configure contrib html LICENSE man nginx-1.14.0-nat64-toa.patch README src

[root@tiny-centos7 nginx-1.21.1]# patch -p 1 < nginx-1.14.0-nat64-toa.patch

patching file src/core/ngx_connection.h

Hunk #1 FAILED at 144.

1 out of 1 hunk FAILED -- saving rejects to file src/core/ngx_connection.h.rej

patching file src/core/ngx_inet.h

Hunk #1 succeeded at 128 with fuzz 2 (offset 2 lines).

patching file src/event/ngx_event_accept.c

Hunk #1 succeeded at 17 (offset -5 lines).

Hunk #2 succeeded at 30 (offset -5 lines).

Hunk #3 succeeded at 172 (offset -5 lines).

patching file src/http/ngx_http_variables.c

Hunk #1 succeeded at 145 (offset 2 lines).

Hunk #2 succeeded at 398 (offset 15 lines).

Hunk #3 succeeded at 1311 (offset -11 lines).

A closer look at the patch file shows that the hunk fails because version 1.21.1 removed several lines of code from the corresponding part of src/core/ngx_connection.h, so the patch context no longer matches. We add the line from the patch manually.

Then we can compile and install normally. After that, we can add $toa_remote_addr and $toa_remote_port to the log format to obtain the real IP address and port of the client in NAT64 mode.
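As an illustration of the log change, the sketch below writes an nginx log_format fragment that records both the address nginx sees (the LIP) and the address recovered by the patch. The format name toa_main, the helper name write_toa_log_format and the output path are assumptions for this example; the two $toa_* variables only exist in an nginx built with the NAT64 TOA patch, and log_format has to be included in the http context.

```shell
# Write a log_format fragment to the file given as $1.
# "toa_main" is a hypothetical format name; reference it from access_log.
write_toa_log_format() {
    cat > "$1" <<'EOF'
log_format toa_main 'toa_remote_addr=$toa_remote_addr | toa_remote_port=$toa_remote_port | '
                    'remote_addr=$remote_addr | remote_port=$remote_port';
EOF
}

# Example (path is illustrative):
# write_toa_log_format /etc/nginx/conf.d/toa_log_format.conf
```

The quoted heredoc delimiter keeps the shell from expanding the nginx variables, so they reach the file literally.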

4.3 effect test

The test again found that the source IP address and port number of the real client can be displayed.

$ curl -6 "http://\[2001::201\]"

Your remote_addr and remote_port is 192.168.229.201:1030

Your toa_remote_addr and toa_remote_port is [2408::1]:64920

# At the same time, you can also see the corresponding fields in the log of nginx

toa_remote_addr=2408::1 | toa_remote_port=64920 | remote_addr=192.168.229.201 | remote_port=1030

5. keepalived configuration

5.1 architecture diagram

There are two benefits to using a keepalived configuration:

- VIP, LIP, RS and other configuration parameters can be solidified in the keepalived configuration file without manual operation using commands or scripts every time

- keepalived can use VRRP protocol to configure master backup mode to avoid single point problem

The official keepalived configuration uses a one-arm network topology; here we change it to two-arm mode. Also note that the keepalived used by DPVS is a modified version whose configuration syntax and parameters differ slightly from the original.

As mentioned above, keepalived also supports three modes: IPv4-IPv4 mode, IPv6-IPv6 mode and NAT64 mode (IPv6-IPv4). The difference between the three modes is only that the route is different and the configuration file of keepalived is slightly different.

[Figure: architecture diagram of two-arm FullNAT with keepalived (image not available)]

5.2 configure kni network card

keepalived requires a kni network card that can communicate normally through the regular Linux network stack (not the simple user-space network stack implemented by DPVS). A kni network card is configured exactly like an ordinary network card; you only need to set DEVICE in the configuration file to the corresponding kni network card.

The kni network card exists only while the dpvs process is running. If the dpvs process is restarted, the kni network card is not brought back up automatically; it stays in the DOWN state until it is enabled manually (ifup).
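The manual ifup step after a dpvs restart can be scripted. The sketch below is one possible approach, not part of DPVS: the helper names link_is_up and ensure_kni_up are hypothetical, and the check assumes the usual `ip link show` layout in which interface flags appear between angle brackets on the first line.

```shell
# Succeed if the first line on stdin (from `ip link show dev <if>`) lists UP
# as a whole flag, e.g. <BROADCAST,MULTICAST,UP,LOWER_UP>. LOWER_UP alone
# does not count.
link_is_up() {
    head -n 1 | grep -Eq '<([^,<>]+,)*UP(,[^,<>]+)*>'
}

# Bring the kni network card given as $1 up if it is not already up.
ensure_kni_up() {
    if ! ip link show dev "$1" | link_is_up; then
        ifup "$1"
    fi
}

# Example, after restarting dpvs:
# ensure_kni_up bond2.kni
```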

$ cat /etc/sysconfig/network-scripts/ifcfg-bond2.kni

DEVICE=bond2.kni

BOOTPROTO=static

ONBOOT=yes

IPADDR=10.0.96.200

NETMASK=255.255.255.0

GATEWAY=10.0.96.254

$ ip a

32: bond2.kni: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether a0:36:9f:f0:e4:c0 brd ff:ff:ff:ff:ff:ff

inet 10.0.96.200/24 brd 10.0.96.255 scope global bond2.kni

valid_lft forever preferred_lft forever

inet6 fe80::a236:9fff:fef0:e4c0/64 scope link

valid_lft forever preferred_lft forever

In our dpvs.conf configuration file, a kni network card (generally named dpdk0.kni, bond0.kni, etc.) is configured for each dpdk network card or bond network card. In the earlier simple configuration steps we added the VIP directly to the dpdk network card, but that cannot provide master-backup failover of the VIP. Therefore, we need to hand the VIP over to keepalived.

5.3 configuring routing

In keepalived mode, for FullNAT in two-arm network mode, the routes we need to add fall into three parts: the VIP network segment route, the RS/LIP network segment route and the default route.

# IPv4 network mode

$ dpip route add 10.0.96.0/24 dev bond2 # Route for the VIP network segment

$ dpip route add 192.168.229.0/23 dev bond1 # Route for the RS/LIP network segment

$ dpip route add default via 10.0.96.254 dev bond2 # Default route

# IPv6 network mode

$ dpip route -6 add 2001::/64 dev bond2 # Route for the VIP network segment

$ dpip route -6 add 2407::/64 dev bond1 # Route for the RS/LIP network segment

$ dpip route -6 add default via 2001::1 dev bond2 # Default route

# NAT64 mode (IPv6-IPv4)

# The difference in this mode is that the RS route changes to an IPv4 network segment

$ dpip route -6 add 2001::/64 dev bond2 # Route for the VIP network segment

$ dpip route add 192.168.229.0/23 dev bond1 # Route for the RS/LIP network segment

$ dpip route -6 add default via 2001::1 dev bond2 # Default route
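The three route sets differ only in whether each route uses the -6 flag. A minimal sketch of that rule follows; it prints the commands rather than running them, and the function name fnat_routes, the mode names and the argument order are assumptions made for this example.

```shell
# Print the three dpip route commands for one mode.
# Args: MODE WAN_DEV LAN_DEV VIP_NET RS_NET GATEWAY
# MODE is ipv4, ipv6 or nat64 (hypothetical names for the three modes above).
fnat_routes() (
    mode=$1; wan_dev=$2; lan_dev=$3; vip_net=$4; rs_net=$5; gw=$6
    case $mode in
        ipv4)  vip_fam="";    rs_fam=""    ;;
        ipv6)  vip_fam=" -6"; rs_fam=" -6" ;;
        nat64) vip_fam=" -6"; rs_fam=""    ;;   # IPv6 VIP side, IPv4 RS side
        *) echo "unknown mode: $mode" >&2; exit 1 ;;
    esac
    echo "dpip route${vip_fam} add ${vip_net} dev ${wan_dev}"         # VIP segment
    echo "dpip route${rs_fam} add ${rs_net} dev ${lan_dev}"           # RS/LIP segment
    echo "dpip route${vip_fam} add default via ${gw} dev ${wan_dev}"  # default route
)

# Example:
# fnat_routes nat64 bond2 bond1 2001::/64 192.168.229.0/23 2001::1
```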

5.4 configuring keepalived

First, we manage keepalived with systemctl, so we write a unit file. Replace the paths in it with the path of the DPVS-customized keepalived binary and the path of the keepalived configuration file (absolute paths are recommended).

$ cat /usr/lib/systemd/system/keepalived.service

[Unit]

Description=DPVS modify version keepalived

After=syslog.target network-online.target

[Service]

Type=forking

PIDFile=/var/run/keepalived.pid

KillMode=process

ExecStart=/path/to/dpvs/bin/keepalived -f /etc/keepalived/keepalived.conf -D -d -S 0

ExecReload=/bin/kill -HUP $MAINPID

[Install]

WantedBy=multi-user.target

Then we redirect the keepalived log to a dedicated file to make troubleshooting easier

# If the system uses the rsyslog service to manage logs, add the following line to /etc/rsyslog.conf

local0.* /path/to/keepalived.log

Finally, we restart the rsyslog service and keepalived

$ systemctl daemon-reload

$ systemctl enable rsyslog.service

$ systemctl restart rsyslog.service

5.5 keepalived.conf

Note that even if the RS are the same, NAT64 mode and ordinary IPv4 mode cannot share one vrrp_instance: IPv4 and IPv6 addresses cannot both be defined in the same vrrp_instance, because they use different VRRP protocol versions (VRRP and VRRP6).
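Before writing a virtual_ipaddress block, the address list can be checked for mixed families. This is only a sketch of such a check: same_family is a hypothetical helper, and it assumes that an address containing a colon is IPv6 and anything else is IPv4.

```shell
# Succeed only if every address argument belongs to one family.
# Assumption: ':' in the address means IPv6, otherwise IPv4.
same_family() (
    first=""
    for addr in "$@"; do
        case $addr in
            *:*) fam=6 ;;
            *)   fam=4 ;;
        esac
        if [ -z "$first" ]; then
            first=$fam                      # remember the family of the first address
        elif [ "$fam" != "$first" ]; then
            exit 1                          # mixed IPv4/IPv6: needs two vrrp_instance blocks
        fi
    done
    exit 0
)

# Example:
# same_family 10.0.96.201 10.0.96.202 && echo "ok for one vrrp_instance"
```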

The following excerpt shows some key configuration, using the NAT64 and IPv4 network configurations as examples

! Configuration File for keepalived

global_defs {

router_id DPVS_TEST

}

# Configure LOCAL IP

# The network card uses the lan network segment network card of DPDK

local_address_group laddr_g1 {

192.168.229.201 bond1

}

# Configure VIP in IPv4 mode

vrrp_instance VI_1 {

# Confirm that the status of the VIP is MASTER or BACKUP

state MASTER

# interface is specified as the kni network card that can be recognized by the Linux network stack and virtualized by dpvs

# The keepalived mode needs to ensure that the kni network card is in the up state, which is not required for previous simple configurations

interface bond2.kni

# dpdk_interface is specified as the wan interface network card, that is, the dpdk network card where the VIP is located

dpdk_interface bond2

# Virtual router ID, which needs to be globally unique

virtual_router_id 201

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass dpvstest

}

# VIP can be configured in a group, but IPv6 and IPv4 cannot be mixed

virtual_ipaddress {

10.0.96.201

10.0.96.202

}

}

# Configure VIP in IPv6 mode

vrrp_instance VI_2 {

state MASTER

interface bond2.kni

dpdk_interface bond2

virtual_router_id 202

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass dpvstest

}

virtual_ipaddress {

2001::201

2001::202

}

}

# Configure the corresponding VIP and RS

virtual_server_group 10.0.96.201-80 {

10.0.96.201 80

10.0.96.202 80

}

virtual_server group 10.0.96.201-80 {

delay_loop 3

lb_algo rr

lb_kind FNAT

protocol TCP

laddr_group_name laddr_g1

real_server 192.168.229.1 80 {

weight 100

inhibit_on_failure

TCP_CHECK {

nb_sock_retry 2

connect_timeout 3

connect_port 80

}

}

real_server 192.168.229.2 80 {

weight 100

inhibit_on_failure

TCP_CHECK {

nb_sock_retry 2

connect_timeout 3

connect_port 80

}

}

}

virtual_server_group 10.0.96.201-80-6 {

2001::201 80

2001::202 80

}

virtual_server group 10.0.96.201-80-6 {

delay_loop 3

lb_algo rr

lb_kind FNAT

protocol TCP

laddr_group_name laddr_g1

real_server 192.168.229.1 80 {

weight 100

inhibit_on_failure

TCP_CHECK {

nb_sock_retry 2

connect_timeout 3

connect_port 80

}

}

real_server 192.168.229.2 80 {

weight 100

inhibit_on_failure

TCP_CHECK {

nb_sock_retry 2

connect_timeout 3

connect_port 80

}

}

}

- After keepalived is started, we can use the dpip command to view each defined VIP. In the ipvsadm command, we should see that the status of each group of RS is normal

- However, dpvs must be running normally for as long as keepalived is running, and the kni network card specified by the interface parameter in the configuration file must be up

- The relevant routes of each network card and network segment still need to be added manually (IPv4, IPv6)

# Check whether each VIP and LIP configured is effective

$ dpip addr show

inet 10.0.96.202/32 scope global bond2

valid_lft forever preferred_lft forever

inet6 2001::202/128 scope global bond2

valid_lft forever preferred_lft forever

inet6 2001::201/128 scope global bond2

valid_lft forever preferred_lft forever

inet 10.0.96.201/32 scope global bond2

valid_lft forever preferred_lft forever

inet 192.168.229.201/32 scope global bond1

valid_lft forever preferred_lft forever

# Check whether the RS service of each group is normal

$ ipvsadm -ln

IP Virtual Server version 0.0.0 (size=0)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.0.96.201:80 rr

-> 192.168.229.1:80 FullNat 100 0 0

-> 192.168.229.2:80 FullNat 100 0 0

TCP 10.0.96.201:443 rr

-> 192.168.229.1:443 FullNat 100 0 0

-> 192.168.229.2:443 FullNat 100 0 0

TCP 10.0.96.202:80 rr

-> 192.168.229.1:80 FullNat 100 0 0

-> 192.168.229.2:80 FullNat 100 0 0

TCP 10.0.96.202:443 rr

-> 192.168.229.1:443 FullNat 100 0 0

-> 192.168.229.2:443 FullNat 100 0 0

TCP [2001::201]:80 rr

-> 192.168.229.1:80 FullNat 100 0 0

-> 192.168.229.2:80 FullNat 100 0 0

TCP [2001::201]:443 rr

-> 192.168.229.1:443 FullNat 100 0 0

-> 192.168.229.2:443 FullNat 100 0 0

TCP [2001::202]:80 rr

-> 192.168.229.1:80 FullNat 100 0 0

-> 192.168.229.2:80 FullNat 100 0 0

TCP [2001::202]:443 rr

-> 192.168.229.1:443 FullNat 100 0 0

-> 192.168.229.2:443 FullNat 100 0 0

# Use curl to test whether IPv6 services can go through

$ curl -6 "http://\[2001::202\]"

Your remote_addr and remote_port is 192.168.229.201:1034

Your toa_remote_addr and toa_remote_port is [2408::1]:9684

# Use curl to test whether the IPv4 service can go through

$ curl 10.0.96.201

Your remote_addr and remote_port is 172.16.0.1:42254

Your toa_remote_addr and toa_remote_port is -:-
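The RS check on the ipvsadm output can also be scripted. The sketch below counts, per virtual service, the RS entries whose weight is greater than 0. It assumes the column layout shown in the output above and that a failed RS stays listed with weight 0, which is how inhibit_on_failure behaves in stock keepalived; whether the DPVS-modified keepalived does exactly the same is an assumption here. The helper name healthy_rs_count is hypothetical.

```shell
# Read `ipvsadm -ln` output on stdin and print "<VIP:port> <count>" per service,
# where <count> is the number of RS lines with weight > 0.
healthy_rs_count() {
    awk '
        # A service line starts with the protocol; remember it in input order.
        $1 == "TCP" || $1 == "UDP" { svc = $2; count[svc] = 0; order[++k] = svc; next }
        # An RS line starts with "->"; column 4 is the weight.
        $1 == "->" && svc != "" && $4 + 0 > 0 { count[svc]++ }
        END { for (i = 1; i <= k; i++) print order[i], count[order[i]] }
    '
}

# Example:
# ipvsadm -ln | healthy_rs_count
```

The header line that also begins with "->" is skipped because no service line has been seen yet at that point.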

Finally, a reminder: in NAT64 mode nginx cannot obtain the real source IP directly from $remote_addr. You need to set the XFF header, for example:

proxy_set_header X-Forwarded-For "$real_remote_addr,$proxy_add_x_forwarded_for";

map $toa_remote_addr $real_remote_addr {

default $toa_remote_addr;

'-' $remote_addr;

}

map $toa_remote_port $real_remote_port {

default $toa_remote_port;

'-' $remote_port;

}
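The fallback rule expressed by the two map blocks can be mirrored in a few lines of shell to see what ends up in the header for each kind of request. This is a worked illustration only; real_remote is a hypothetical helper and plays no part in the nginx configuration itself.

```shell
# Mirror of the nginx map: use the TOA value ($1) unless it is "-",
# in which case fall back to the value nginx saw directly ($2).
real_remote() {
    if [ "$1" = "-" ]; then
        printf '%s\n' "$2"
    else
        printf '%s\n' "$1"
    fi
}

# With the values from the curl tests above:
# real_remote 2408::1 192.168.229.201   # NAT64 request: TOA address is used
# real_remote - 172.16.0.1              # IPv4 request: falls back to remote_addr
```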

6. Summary

Among the above configurations, the one best suited to a production environment is basically the keepalived active-standby mode. There is also a multi-active mode that requires the switch to support ECMP. It has not been tested yet because of limited conditions, and it can be added later if conditions allow.

As for the selection of NAT64 mode and IPv6-IPv6 mode, if RS is nginx, the difference between the two modes lies in whether to be compatible on nginx or configure IPv6 network on RS, which depends on the actual network conditions and operation and maintenance management tools; If RS is another third-party program and doesn't want to make too many intrusive changes to the source code, it's best to use IPv6-IPv6 mode directly.