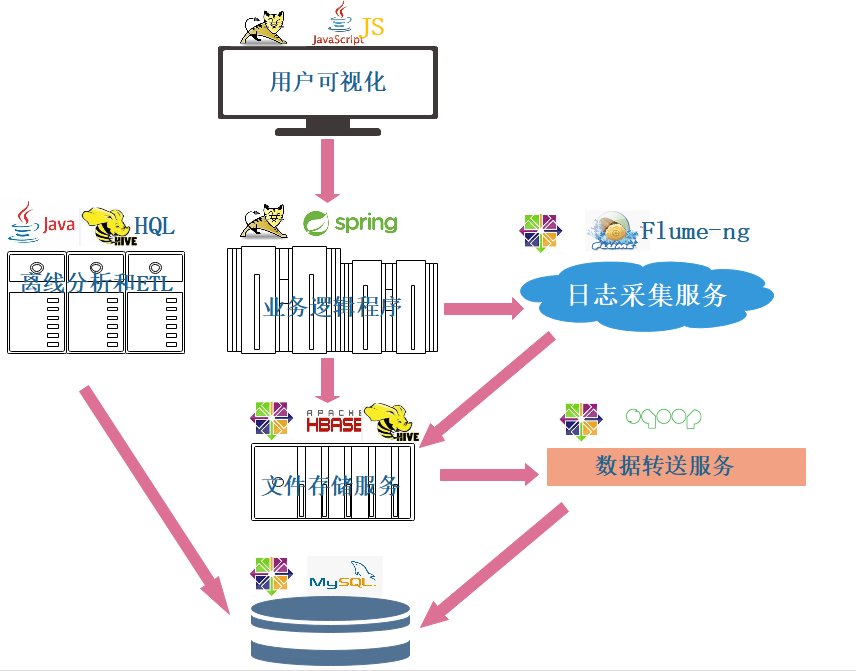

Project architecture design

Java project collection

Project system architecture

Based on the real business data architecture of an e-commerce website, this project carries data all the way from collection to use. It forms a closed loop across the front-end application, back-end program, data analysis, and platform deployment. The result is an e-commerce log analysis project suited to teaching, implemented mainly with offline (batch) technology.

- User visualization: responsible for interacting with users and displaying business data. The main body is implemented in JS and is not deployed on the Tomcat server.

- Business logic program: implements the overall business logic. It is built with Spring to meet the business requirements and is deployed on Tomcat.

- Data storage

  - Business database: the project uses the widely adopted relational database MySQL, which stores the platform's business logic data.

  - HDFS distributed file storage: the project uses HBase + Hive on HDFS to store the full history of business data. It supports fast data retrieval as well as massive-scale storage, providing the basis for future decision analysis.

- Offline analysis

  - Log collection service: Flume NG collects users' page-access behavior on the business platform and periodically sends it to the HDFS cluster.

  - Offline analysis and ETL: batch statistics are implemented with MapReduce + HiveQL to compute the indicator data.

  - Data transfer service: Sqoop transfers business data into Hive in batches.

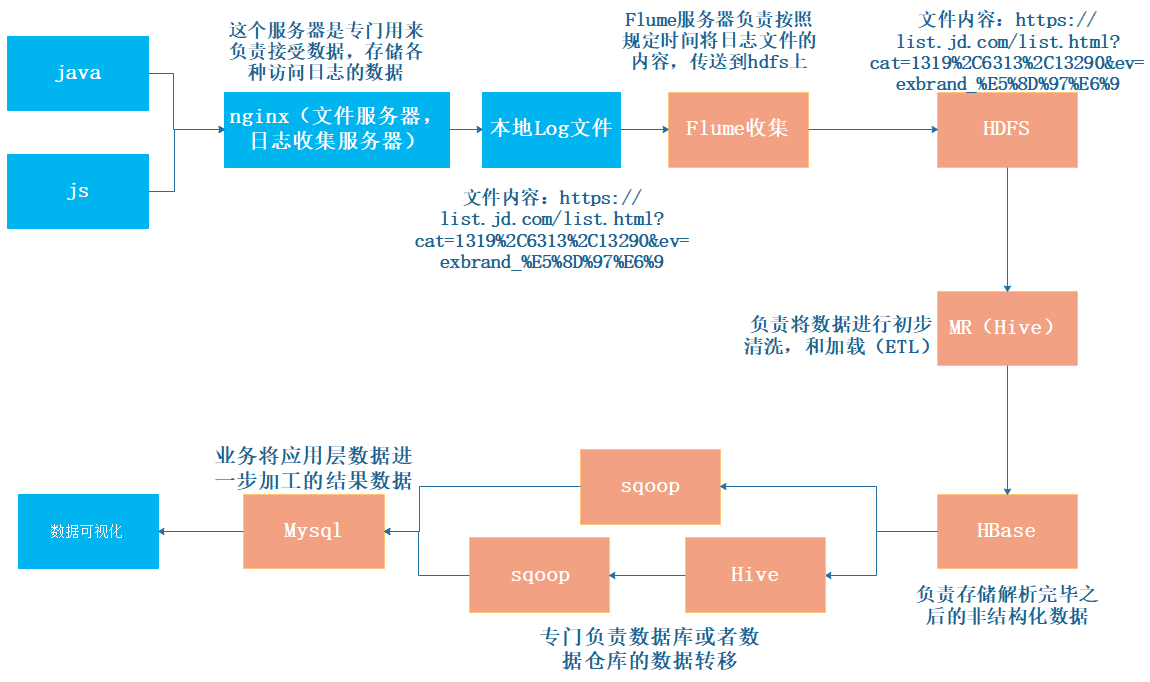

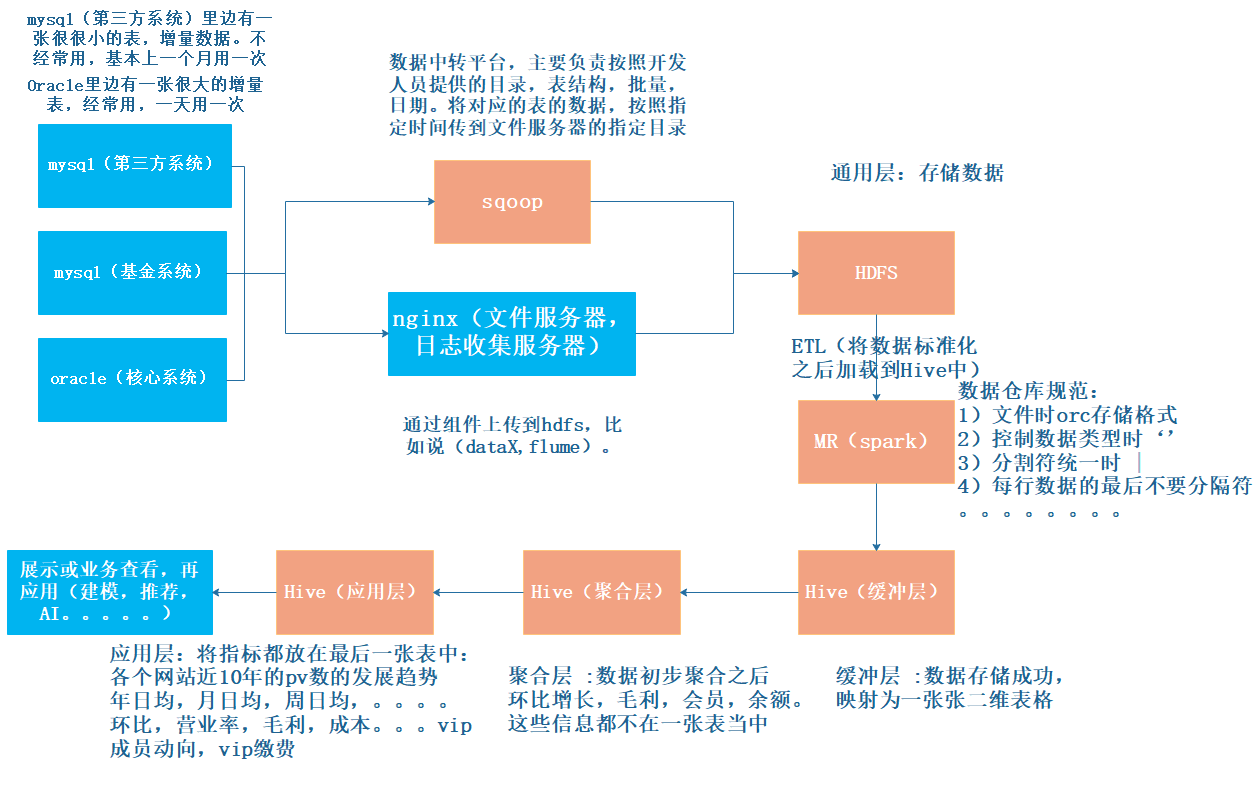

Project data flow


Analysis system (bf_transformer)

- From data collection to page presentation

Log collection section

- Flume reads new entries from the operation logs of the business services and periodically pushes them to HDFS. Once the logs arrive in HDFS, an MR program filters them to extract the user access data stream UID | mid | platform | browser | timestamp. After the computation completes, the results are merged with the data in HBase.
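The filtering step above can be pictured as a per-record transform. The sketch below is written in JavaScript to match the SDK code in this article, while the project's real filter is a MapReduce job. The names toAccessRecord and filterAccessRecords, and the assumption that each entry is already decoded into an object carrying a parsed browser field and a server timestamp s_time, are illustrative only.

```javascript
// Hypothetical per-record transform (the project's real filter is a MapReduce job).
// Input: one log entry already decoded into an object, e.g.
//   { u_ud: "26866661", u_mid: "", pl: "website", browser: "360", s_time: "1595605873000" }
// Output: "UID|mid|platform|browser|timestamp", or null if the entry is filtered out.
function toAccessRecord(entry) {
  const uid = entry.u_ud;
  const timestamp = Number(entry.s_time);
  // Filter: a record needs a visitor id and a numeric server timestamp.
  if (!uid || !entry.s_time || !Number.isFinite(timestamp)) {
    return null;
  }
  return [
    uid,
    entry.u_mid || "", // empty for visitors who have not logged in
    entry.pl || "",
    entry.browser || "",
    String(timestamp),
  ].join("|");
}

// Apply the transform to a batch and keep only the surviving records.
function filterAccessRecords(entries) {
  return entries.map(toAccessRecord).filter((record) => record !== null);
}
```

Entries without a visitor id or a usable timestamp are dropped, which is the "filter" part; the member id may legitimately be empty for anonymous visitors.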

ETL part

- MapReduce loads the system's initial data into HBase.

Offline analysis part

- The offline statistics service and the offline analysis service can be scheduled with Oozie; tasks are triggered at the configured run time.

- The offline analysis service loads data from HBase, runs several statistical algorithms, and writes the results to MySQL.

Data warehouse analysis service

- Scheduling and execution of SQL scripts

- The data warehouse analysis service can be scheduled with Oozie; tasks are triggered at the configured run time.

- The data analysis service loads data from each system's database into HDFS. Once the data arrives in HDFS, an MR program filters it and unifies the data format, and the result is merged with the data in Hive. Hive then processes the data with HQL scripts to compute transaction information, access information, and other indicators, and the results are merged back into Hive.

Application background execution workflow

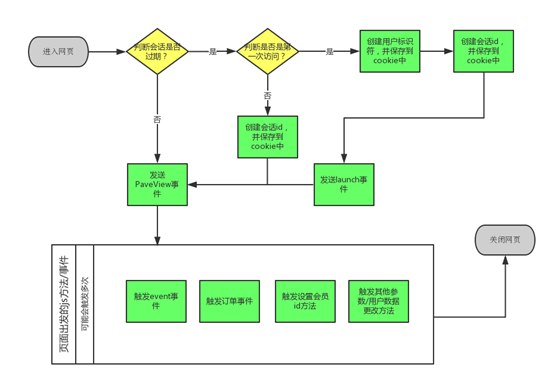

Note: instead of identifying a unique user by IP address, we store a UUID in a cookie to identify each user.
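A minimal sketch of producing such an identifier, assuming the same uppercase 8-4-4-4-12 hex layout as the u_ud and u_sd samples later in this article. The function name generateId is hypothetical; where available, the standard crypto.randomUUID() produces a comparable (lowercase) value from a stronger random source.

```javascript
// Hypothetical helper: random id in the 8-4-4-4-12 hex layout used by u_ud / u_sd,
// e.g. "8E9559B3-DA35-44E1-AC98-85EB37D1F263". Version-4 style: the version nibble
// is fixed to "4" and the variant nibble is one of 8, 9, A, B; the rest is random.
function generateId() {
  const hex = "0123456789ABCDEF";
  let id = "";
  for (let i = 0; i < 36; i++) {
    if (i === 8 || i === 13 || i === 18 || i === 23) {
      id += "-"; // group separators
    } else if (i === 14) {
      id += "4"; // version nibble
    } else if (i === 19) {
      id += hex[8 + Math.floor(Math.random() * 4)]; // variant nibble: 8, 9, A or B
    } else {
      id += hex[Math.floor(Math.random() * 16)];
    }
  }
  return id;
}
```

In the tracker code later in this article, a value like this would be stored through setUuid whenever getUuid() finds no cookie. Math.random is adequate for visitor ids but is not a cryptographic source.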

In our JS SDK, events are divided according to the data they collect.

- For example, for the pageview event, the JS SDK executes as follows:

Analysis

- PC-side event analysis

Each analysis module needs different data, so we go through the modules one by one to determine what each requires. Basic user information analysis examines users' browsing behavior, so it only needs the pageview event.

Browser information analysis and regional information analysis add browser and region dimensions on top of basic user information analysis. Browser information can be derived from the browser's window.navigator.userAgent, and regional information from the user's IP address as collected by the nginx server. The pageview event therefore covers these two modules as well.
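To illustrate how a browser name and version can be derived from the b_iev (user agent) value, here is a deliberately small, rule-based sketch. The rule list and the name parseBrowser are assumptions; production ETL code usually relies on a maintained user-agent parsing library, and the region dimension additionally needs an IP-to-location database, which is not shown.

```javascript
// Hypothetical rule list, tried in order; each pattern captures the version number.
// Edge and Opera user agents also contain "Chrome/", and Chrome's contains "Safari/",
// so the more specific rules come first.
const BROWSER_RULES = [
  { name: "Edge", pattern: /Edge?\/([\d.]+)/ },
  { name: "Opera", pattern: /OPR\/([\d.]+)/ },
  { name: "Firefox", pattern: /Firefox\/([\d.]+)/ },
  { name: "Chrome", pattern: /Chrome\/([\d.]+)/ },
  { name: "Safari", pattern: /Version\/([\d.]+).*Safari\// },
  { name: "IE", pattern: /MSIE ([\d.]+)|Trident\/.*rv:([\d.]+)/ },
];

// userAgent: the decoded b_iev value (window.navigator.userAgent on the client).
function parseBrowser(userAgent) {
  for (const rule of BROWSER_RULES) {
    const match = rule.pattern.exec(userAgent || "");
    if (match) {
      // Use whichever capture group matched (the IE rule has two alternatives).
      return { browser: rule.name, version: match[1] || match[2] };
    }
  }
  return { browser: "unknown", version: "unknown" };
}
```

With the sample b_iev value from the general parameters table, this returns Chrome with version 21.0.1180.77.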

For external link analysis and user browsing depth analysis, we add the URL of the current page and the URL of the previous page to the pageview event, so the pageview event covers these two modules too.
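One way to use p_url and p_ref for external link analysis is to compare host names: a referrer on a different host counts as an external link. A sketch, assuming both values are absolute URLs as produced by window.location.href and document.referrer; classifyReferrer is a hypothetical name, and it uses the standard URL class available in browsers and Node.js.

```javascript
// Hypothetical helper: classify the previous page (p_ref) relative to the current
// page (p_url). Only host names are compared, so different ports count as the same site.
function classifyReferrer(pRef, pUrl) {
  if (!pRef) {
    return "direct"; // typed URL, bookmark, or the browser withheld the referrer
  }
  let refHost;
  let curHost;
  try {
    refHost = new URL(pRef).hostname;
    curHost = new URL(pUrl).hostname;
  } catch (e) {
    return "unknown"; // at least one value is not an absolute URL
  }
  return refHost === curHost ? "internal" : "external";
}
```

Counting the pageview events per session (u_sd) on top of this gives a simple browsing-depth measure.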

Order information analysis requires the PC side to send an event when an order is generated, so this module needs a new event, chargeRequest. Event analysis likewise requires the PC side to send a new kind of event data, which we define as event. Finally, we also set up a launch event to record visits from new users.

All PC-side events send their data to a URL of the following form, where the query parameters after the URL carry the collected data: http://shsxt.com/shsxt.gif?requestdata
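A sketch of assembling such a request URL from the collected fields. buildTrackingUrl is a hypothetical helper (the SDK's own parseParam and sendDataToServer methods are not reproduced here). It percent-encodes each key and value with encodeURIComponent, which yields the same encoding as the sample values in the tables that follow.

```javascript
// Hypothetical helper: serialize collected fields into the query string of the
// tracking-pixel URL, skipping empty values so the log line stays compact.
function buildTrackingUrl(serverUrl, data) {
  const pairs = [];
  for (const key of Object.keys(data)) {
    const value = data[key];
    if (value === undefined || value === null || value === "") {
      continue;
    }
    pairs.push(encodeURIComponent(key) + "=" + encodeURIComponent(String(value)));
  }
  return serverUrl + "?" + pairs.join("&");
}
```

In a browser, such a URL is typically requested by assigning it to the src of an Image object, which is why the endpoint is an image file such as shsxt.gif.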

| Final analysis module | PC JS SDK event |
|---|---|
| User basic information analysis | pageview event |
| Browser information analysis | pageview event |
| Regional information analysis | pageview event |
| External link data analysis | pageview event |
| User browsing depth analysis | pageview event |
| Order information analysis | chargeRequest event |
| Event analysis | event event |
| User basic information modification | launch event |

PC-side JS SDK events

- General parameters

Every tracking point sends the following common information.

| Name | Content |
|---|---|
| Data sent | u_sd=8E9559B3-DA35-44E1-AC98-85EB37D1F263&c_time=1449137597974&ver=1&pl=website&sdk=js&b_rst=1920*1080&u_ud=12BF4079-223E-4A57-AC60-C1A04D8F7A2F&b_iev=Mozilla%2F5.0%20(Windows%20NT%206.1%3B%20WOW64)%20AppleWebKit%2F537.1%20(KHTML%2C%20like%20Gecko)%20Chrome%2F21.0.1180.77%20Safari%2F537.1&l=zh-CN&en=e_l |

| Parameter name | Type | Description |
|---|---|---|
| u_sd | string | Session id |
| c_time | string | Client creation time |
| ver | string | Version number, e.g. 0.0.1 |
| pl | string | Platform, e.g. website |
| sdk | string | SDK type, e.g. js |
| b_rst | string | Browser resolution, e.g. 1800*678 |
| u_ud | string | User/visitor unique identifier |
| b_iev | string | Browser information (user agent) |
| l | string | Client language |
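On the receiving side, the query string of one request can be decoded back into these parameters. A minimal sketch using the standard URLSearchParams class; parseTrackingQuery and the derived resolution field are illustrative assumptions, not part of the project.

```javascript
// Hypothetical sketch: decode one tracking request's query string into an object
// keyed by the parameter names in the table above.
function parseTrackingQuery(query) {
  // URLSearchParams strips a leading "?" and percent-decodes every value.
  const params = Object.fromEntries(new URLSearchParams(query));
  // c_time is a millisecond epoch sent as a string; convert it for comparisons.
  if (params.c_time !== undefined) {
    params.c_time = Number(params.c_time);
  }
  // b_rst has the form "width*height", e.g. "1920*1080".
  if (params.b_rst) {
    const [width, height] = params.b_rst.split("*").map(Number);
    params.resolution = { width, height };
  }
  return params;
}
```

Note that URLSearchParams also turns "+" into a space; this is harmless here because encodeURIComponent encodes spaces as %20.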

- Launch event

This event is triggered when the user visits the website for the first time. No external API is provided; the SDK only collects the data for this event.

| Name | Content |
|---|---|
| Data sent | en=e_l&General parameters |

| Parameter name | Type | Description |
|---|---|---|
| en | string | Event name, e.g. e_l |

- Member login event

This event is triggered when the user logs in to the website. No external API is provided; the SDK only collects the data for this event.

| Name | Content |
|---|---|
| Data sent | u_mid=phone&General parameters |

| Parameter name | Type | Description |
|---|---|---|
| u_mid | string | Member id, consistent with the business system |

- Pageview event, implemented by the onPageView method

This event is triggered when the user visits or refreshes a page. It is called automatically, and it can also be called manually by the programmer.

| Method name | onPageView |
|---|---|
| Data sent | en=e_pv&p_ref=www.shsxt.com%3A8080&p_url=http%3A%2F%2Fwww.shsxt.com%3A8080%2Fvst_track%2Findex.html&General parameters |

| Parameter name | Type | Description |
|---|---|---|
| en | string | Event name, e.g. e_pv |
| p_url | string | URL of the current page |
| p_ref | string | URL of the previous page |


ChargeSuccess event

This event is triggered when the user successfully places an order and must be called explicitly by the program.

| Method name | onChargeRequest |
|---|---|
| Data sent | oid=orderid123&on=%E4%BA%A7%E5%93%81%E5%90%8D%E7%A7%B0&cua=1000&cut=%E4%BA%BA%E6%B0%91%E5%B8%81&pt=%E6%B7%98%E5%AE%9D&en=e_cs&General parameters |

| Parameter | Type | Required | Description |
|---|---|---|---|
| orderId | string | yes | Order id |
| on | string | yes | Product name (purchase description) |
| cua | double | yes | Order price |
| cut | string | yes | Currency type |
| pt | string | yes | Payment method |
| en | string | yes | Event name, e.g. e_cs |

ChargeRefund event

This event is triggered when the user fails to place an order and must be called explicitly by the program.

| Method name | onChargeRequest |
|---|---|
| Data sent | oid=orderid123&on=%E4%BA%A7%E5%93%81%E5%90%8D%E7%A7%B0&cua=1000&cut=%E4%BA%BA%E6%B0%91%E5%B8%81&pt=%E6%B7%98%E5%AE%9D&en=e_cr&General parameters |

| Parameter | Type | Required | Description |
|---|---|---|---|
| orderId | string | yes | Order id |
| on | string | yes | Product name (purchase description) |
| cua | double | yes | Order price |
| cut | string | yes | Currency type |
| pt | string | yes | Payment method |
| en | string | yes | Event name, e.g. e_cr |

- Event event

When a visitor or user triggers a business-defined event, the front-end program calls this method.

| Method name | onEventDuration |
|---|---|
| Data sent | ca=%E7%B1%BB%E5%9E%8B&ac=%E5%8A%A8%E4%BD%9C&kv_p_url=http%3A%2F%2Fwwwshsxt...com%3A8080%2Fvst_track%2Findex.html&kv_%E5%B1%9E%E6%80%A7key=%E5%B1%9E%E6%80%A7value&du=1000&en=e_e&General parameters |

| Parameter | Type | Required | Description |
|---|---|---|---|
| ca | string | yes | Category name of the event |
| ac | string | yes | Action name of the event |
| kv_* (e.g. kv_p_url) | map | no | Custom properties of the event |
| du | long | no | Duration of the event |
| en | string | yes | Event name, e.g. e_e |
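Custom properties travel as separate kv_-prefixed parameters, so kv_p_url in the sample data is a single property named p_url. A sketch of folding them back into a map when processing an e_e request; parseCustomEvent and its output shape are hypothetical.

```javascript
// Hypothetical server-side helper: turn the flat parameters of an e_e request into
// category/action, a properties map built from kv_* keys, and a numeric duration.
function parseCustomEvent(params) {
  const properties = {};
  for (const key of Object.keys(params)) {
    if (key.startsWith("kv_")) {
      properties[key.slice(3)] = params[key]; // strip the "kv_" prefix
    }
  }
  const duration = params.du === undefined ? null : Number(params.du);
  return {
    category: params.ca,
    action: params.ac,
    properties,
    duration: Number.isFinite(duration) ? duration : null, // du is optional
  };
}
```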


Data parameter description

Different events collect different data and send it to the nginx server, but the collected data share many common fields. All parameters that may be used are described below:

| Parameter name | Type | Description |
|---|---|---|
| en | string | Event name, e.g. e_pv |
| ver | string | Version number, e.g. 0.0.1 |
| pl | string | Platform, e.g. website |
| sdk | string | SDK type, e.g. js |
| b_rst | string | Browser resolution, e.g. 1800*678 |
| b_iev | string | Browser information (user agent) |
| u_ud | string | User/visitor unique identifier |
| l | string | Client language |
| u_mid | string | Member id, consistent with the business system |
| u_sd | string | Session id |
| c_time | string | Client time |
| p_url | string | URL of the current page |
| p_ref | string | URL of the previous page |
| tt | string | Title of the current page |
| ca | string | Category name of the event |
| ac | string | Action name of the event |
| kv_* | string | Custom properties of the event |
| du | string | Duration of the event |
| oid | string | Order id |
| on | string | Order name |
| cua | string | Payment amount |
| cut | string | Payment currency type |
| pt | string | Payment method |
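These tables can also drive a simple completeness check during ETL. The rule set below is an assumption assembled from the tables in this article (e_crt is the key used by the JS tracker's onChargeRequest; e_cs and e_cr come from the Java SDK section), and missingParams is a hypothetical helper that lists the fields a request lacks.

```javascript
// Hypothetical rules assembled from the parameter tables in this article.
const COMMON_REQUIRED = ["en", "ver", "pl", "sdk", "c_time"];
const EVENT_REQUIRED = {
  e_l: [], // launch
  e_pv: ["p_url"], // pageview
  e_crt: ["oid", "cua", "cut", "pt"], // charge request (JS tracker)
  e_e: ["ca", "ac"], // custom event
  e_cs: ["oid", "u_mid"], // charge success (Java SDK)
  e_cr: ["oid", "u_mid"], // charge refund (Java SDK)
};

// Returns the names of required fields that are absent or empty.
// An unknown or missing event name is reported as ["en"].
function missingParams(params) {
  if (!Object.prototype.hasOwnProperty.call(EVENT_REQUIRED, params.en)) {
    return ["en"];
  }
  return COMMON_REQUIRED.concat(EVENT_REQUIRED[params.en]).filter(
    (key) => params[key] === undefined || params[key] === ""
  );
}
```

A filtering job could drop, or route to an error table, every record for which this returns a non-empty list.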

The order workflow is as follows (the refund workflow is similar):

Analysis

- Program background (server-side) event analysis

In this project, only the chargeSuccess event is triggered from the program background. Its main job is to send order-success information to the nginx server. It uses the same format as the PC side and requests the same URL to transmit the data.

| Final analysis module | Java SDK event |
|---|---|
| Order information analysis | chargeSuccess event, chargeRefund event |

chargeSuccess event

This event is triggered when a member's payment finally succeeds and must be called explicitly by the program.

| Method name | onChargeSuccess |
|---|---|
| Data sent | u_mid=shsxt&c_time=1449142044528&oid=orderid123&ver=1&en=e_cs&pl=javaserver&sdk=jdk |

| Parameter | Type | Required | Description |
|---|---|---|---|
| orderId | string | yes | Order id |
| memberId | string | yes | Member id |

ChargeRefund event

This event is triggered when a member performs a refund operation, which needs to be actively called by the program.

| Method name | onChargeRefund |
|---|---|
| Data sent | u_mid=shsxt&c_time=1449142044528&oid=orderid123&ver=1&en=e_cr&pl=javaserver&sdk=jdk |

| Parameter | Type | Required | Description |
|---|---|---|---|
| orderId | string | yes | Order id |
| memberId | string | yes | Member id |

Integration mode

The Java SDK can be included directly in the project or added to the classpath.

- Data parameter description

The parameters are described as follows:

| Parameter name | Type | Description |
|---|---|---|
| en | string | Event name, e.g. e_cs |
| ver | string | Version number, e.g. 0.0.1 |
| pl | string | Platform, e.g. website, javaweb, php |
| sdk | string | SDK type, e.g. java |
| u_mid | string | Member id, consistent with the business system |
| c_time | string | Client time |
| oid | string | Order id |

Project data model


HBase storage structure

Here we include the timestamp in the rowkey and use a single column family named log. The result is an eventlog table with one column family and a timestamp-based rowkey.

- HBase shell: create 'eventlog', 'log'. The rowkey design rule is: timestamp + CRC code of (uid + mid + en).
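A sketch of that rowkey rule, in JavaScript to match the SDK code. The CRC-32 here is the standard IEEE variant (the same algorithm as java.util.zip.CRC32). The exact layout (13-digit millisecond timestamp followed by the CRC as 8 hex digits) and the names crc32 and buildRowKey are assumptions, since the article only states timestamp + CRC of (uid + mid + en).

```javascript
// Standard table-driven CRC-32 (reflected polynomial 0xEDB88320).
const CRC_TABLE = (() => {
  const table = new Uint32Array(256);
  for (let n = 0; n < 256; n++) {
    let c = n;
    for (let k = 0; k < 8; k++) {
      c = c & 1 ? 0xedb88320 ^ (c >>> 1) : c >>> 1;
    }
    table[n] = c;
  }
  return table;
})();

// CRC-32 of the UTF-8 bytes of a string, returned as an unsigned 32-bit number.
function crc32(text) {
  let crc = 0xffffffff;
  for (const byte of new TextEncoder().encode(text)) {
    crc = CRC_TABLE[(crc ^ byte) & 0xff] ^ (crc >>> 8);
  }
  return (crc ^ 0xffffffff) >>> 0;
}

// Hypothetical layout: millisecond timestamp, then CRC(uid + mid + en) as 8 hex digits.
function buildRowKey(timestamp, uuid, memberId, eventName) {
  const crc = crc32(String(uuid || "") + String(memberId || "") + String(eventName || ""));
  return String(timestamp) + crc.toString(16).padStart(8, "0");
}
```

Putting the timestamp first keeps rows in time order for range scans, and the CRC suffix makes it unlikely that events from different users in the same millisecond overwrite each other. A purely time-prefixed key tends to send all writes to one region, which is why many designs add a salt prefix; the sketch keeps the article's rule as stated.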

Environment setup

Nginx log file service (single node)

1. Upload and unzip

//Go to the root directory

cd /root

//Upload the file

rz

//Decompress

tar -zxvf ./nginx-1.8.1.tar.gz

//Delete the compressed package

rm -rf ./nginx-1.8.1.tar.gz

2. Compile and install

//Install the dependencies required by nginx in advance

yum install gcc pcre-devel zlib-devel openssl-devel -y

//Go to the directory that contains the configure script

cd nginx-1.8.1/

//Configure the build

./configure --prefix=/opt/sxt/nginx

//Compile and install

make && make install

3. Start verification

//Go to the nginx startup directory

cd /opt/sxt/nginx/sbin

//Start nginx

./nginx

//Verify in a browser by visiting shsxt-hadoop101:80

//Common commands (run from the sbin directory)

./nginx -s reload

./nginx -s quit

Flume ng (single node)

- Upload and decompress

//Create the directory and upload the file

mkdir -p /opt/sxt/flume

cd /opt/sxt/flume

rz

//Decompress

tar -zxvf apache-flume-1.6.0-bin.tar.gz

//Delete the compressed package

rm -rf apache-flume-1.6.0-bin.tar.gz

- Modify the configuration file

cd /opt/sxt/flume/apache-flume-1.6.0-bin/conf

cp flume-env.sh.template flume-env.sh

vim flume-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0_231-amd64

# Set the memory size to use. This only matters when the channel uses memory storage

# export JAVA_OPTS="-Xms100m -Xmx2000m -Dcom.sun.management.jmxremote"

- Modify environment variables

vim /etc/profile

export FLUME_HOME=/opt/sxt/flume/apache-flume-1.6.0-bin

export PATH=$FLUME_HOME/bin:$PATH

source /etc/profile

- verification

flume-ng version

Sqoop (single node)

- Install

Upload, decompress, configure, and verify:

//create folder

mkdir -p /opt/sxt/sqoop

//upload

rz

//decompression

tar -zxvf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

//Delete the compressed package

rm -rf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

//Configure environment variables (append the following to /etc/profile)

export SQOOP_HOME=/opt/sxt/sqoop/sqoop-1.4.6.bin__hadoop-2.0.4-alpha

export PATH=$SQOOP_HOME/bin:$PATH

source /etc/profile

//Add the MySQL JDBC driver: go to the lib directory and upload the MySQL connector jar into it

cd ./sqoop-1.4.6.bin__hadoop-2.0.4-alpha/lib/

//Rename the configuration file template

cd /opt/sxt/sqoop/sqoop-1.4.6.bin__hadoop-2.0.4-alpha/conf

mv sqoop-env-template.sh sqoop-env.sh

//Modify configure-sqoop (comment out the checks for components that are not used: HCatalog and Accumulo)

cd /opt/sxt/sqoop/sqoop-1.4.6.bin__hadoop-2.0.4-alpha/bin

vim configure-sqoop

//Comment out the following lines

##if [ -z "${HCAT_HOME}" ]; then

## if [ -d "/usr/lib/hive-hcatalog" ]; then

## HCAT_HOME=/usr/lib/hive-hcatalog

## elif [ -d "/usr/lib/hcatalog" ]; then

## HCAT_HOME=/usr/lib/hcatalog

## else

## HCAT_HOME=${SQOOP_HOME}/../hive-hcatalog

## if [ ! -d ${HCAT_HOME} ]; then

## HCAT_HOME=${SQOOP_HOME}/../hcatalog

## fi

## fi

##fi

##if [ -z "${ACCUMULO_HOME}" ]; then

## if [ -d "/usr/lib/accumulo" ]; then

## ACCUMULO_HOME=/usr/lib/accumulo

## else

## ACCUMULO_HOME=${SQOOP_HOME}/../accumulo

## fi

##fi

## Moved to be a runtime check in sqoop.

##if [ ! -d "${HCAT_HOME}" ]; then

## echo "Warning: $HCAT_HOME does not exist! HCatalog jobs will fail."

## echo 'Please set $HCAT_HOME to the root of your HCatalog installation.'

##fi

##if [ ! -d "${ACCUMULO_HOME}" ]; then

## echo "Warning: $ACCUMULO_HOME does not exist! Accumulo imports will fail."

## echo 'Please set $ACCUMULO_HOME to the root of your Accumulo installation.'

##fi

##export HCAT_HOME

##export ACCUMULO_HOME

//verification

sqoop version

//Verify sqoop and database connections

sqoop list-databases --connect jdbc:mysql://shsxt-hadoop101:3306/ --username root --password 123456

Integration of Hive and HBase

Hive and HBase integration (official documentation): https://cwiki.apache.org/confluence/display/Hive/HBaseIntegration

- Map data from HBase into Hive

- Both store their data on HDFS

- Hive can therefore point a table at an existing HBase table when the table is created

- However, dropping the (external) Hive table does not delete the HBase table

- Conversely, if the HBase table is deleted, the Hive table no longer has any data

//Copy the jar package, configure the cluster information, and specify the mapped HBase data when creating the table

cp /opt/sxt/apache-hive-1.2.1-bin/lib/hive-hbase-handler-1.2.1.jar /opt/sxt/hbase-1.4.13/lib/

//Check whether the jar has been copied successfully (on all three nodes)

ls /opt/sxt/hbase-1.4.13/lib/hive-hbase-handler-*

//Add attributes to hive's configuration file:

vim /opt/sxt/apache-hive-1.2.1-bin/conf/hive-site.xml

//Add the following (on all three nodes):

<property>

<name>hbase.zookeeper.quorum</name>

<value>shsxt-hadoop101:2181,shsxt-hadoop102:2181,shsxt-hadoop103:2181</value>

</property>

//verification

//First create a temporary table in hive, and then query this table

CREATE EXTERNAL TABLE brower1 (

`id` string,

`name` string,

`version` string)

STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,info:browser,info:browser_v")

TBLPROPERTIES ("hbase.table.name" = "event");

CREATE EXTERNAL TABLE tmp_order (

`key` string,

`name` string,

`age` string)

ROW FORMAT SERDE 'org.apache.hadoop.hive.hbase.HBaseSerDe'

STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

with serdeproperties('hbase.columns.mapping'=':key,info:u_ud,info:u_sd')

tblproperties('hbase.table.name'='event');

Hive on Tez (single node)

1. Deploy the compressed package in the apache-tez-0.8.5-bin/share directory to HDFS

cd /opt/bdp/

rz

tar -zxvf apache-tez-0.8.5-bin.tar.gz

rm -rf apache-tez-0.8.5-bin.tar.gz

cd apache-tez-0.8.5-bin/share/

hadoop fs -mkdir -p /bdp/tez/

hadoop fs -put tez.tar.gz /bdp/tez/

hadoop fs -chmod -R 777 /bdp

hadoop fs -ls /bdp/tez/

2. Create a tez-site.xml file in the {HIVE_HOME}/conf directory with the following content:

cd /opt/bdp/apache-hive-1.2.1-bin/conf/

vim tez-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<property>

<name>tez.lib.uris</name>

<value>/bdp/tez/tez.tar.gz</value>

</property>

<property>

<name>tez.container.max.java.heap.fraction</name>

<value>0.2</value>

</property>

</configuration>

Note: tez.lib.uris must point to the HDFS path where tez.tar.gz was deployed in the previous step. The tez-site.xml file also needs to be copied to the corresponding directories on the nodes where the HiveServer2 and HiveMetastore services run.

3. Unzip the tez.tar.gz package in the apache-tez-0.8.5-bin/share directory into a new lib directory there

cd /opt/bdp/apache-tez-0.8.5-bin/share/

ll

mkdir lib

tar -zxvf tez.tar.gz -C lib/

4. Copy all jar packages under the lib and lib/lib directories to the {HIVE_HOME}/lib directory

cd lib

pwd

scp -r *.jar /opt/bdp/apache-hive-1.2.1-bin/lib/

scp -r lib/*.jar /opt/bdp/apache-hive-1.2.1-bin/lib/

ll /opt/bdp/apache-hive-1.2.1-bin/lib/tez-*

Note: Tez's dependent packages need to be copied to the corresponding directories of the nodes where HiveServer2 and HiveMetastore services are located.

5. After completing the above operations, restart the HiveServer2 and HiveMetastore services

nohup hive --service metastore > /dev/null 2>&1 &

nohup hiveserver2 > /dev/null 2>&1 &

netstat -apn | grep 10000

netstat -apn | grep 9083

Hive on Tez test: run the following in the hive CLI

hive

set hive.tez.container.size=3020;

set hive.execution.engine=tez;

use bdp;

select count(*) from test;

Oozie setup

Deploy Hadoop (CDH version)

Modify Hadoop configuration

core-site.xml

<!-- Hosts from which the Oozie server may act as a proxy user -->

<property>

<name>hadoop.proxyuser.atguigu.hosts</name>

<value>*</value>

</property>

<!-- User groups that Oozie is allowed to proxy -->

<property>

<name>hadoop.proxyuser.atguigu.groups</name>

<value>*</value>

</property>

mapred-site.xml

<!-- MapReduce JobHistory Server address, default port 10020 -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>shsxt_hadoop102:10020</value>

</property>

<!-- MapReduce JobHistory Server web UI address, default port 19888 -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>shsxt_hadoop102:19888</value>

</property>

yarn-site.xml

<!-- Job history log server URL -->

<property>

<name>yarn.log.server.url</name>

<value>http://shsxt_hadoop102:19888/jobhistory/logs/</value>

</property>

After finishing, remember to sync these files to the other nodes with scp.

- Restart Hadoop cluster

sbin/start-dfs.sh

sbin/start-yarn.sh

sbin/mr-jobhistory-daemon.sh start historyserver

Note: after starting the JobHistoryServer, it is best to run an MR job to test it.

Deploy Oozie

- Unzip Oozie

tar -zxvf /opt/sxt/cdh/oozie-4.0.0-cdh5.3.6.tar.gz -C ./

- In the Oozie root directory, unzip oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz

tar -zxvf oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz -C ../

When it finishes, a hadooplibs directory appears under the Oozie directory.

- Create the libext directory under the Oozie directory

mkdir libext/

Copy the dependent jar packages

- Copy the jar packages in hadooplibs to the libext directory:

cp -ra hadooplibs/hadooplib-2.5.0-cdh5.3.6.oozie-4.0.0-cdh5.3.6/* libext/

- Copy the MySQL driver jar to the libext directory:

cp -a /root/mysql-connector-java-5.1.27-bin.jar ./libext/

Copy ext-2.2.zip to the libext/ directory

ext is the JS framework used to display the Oozie web console:

cp -a /root/ext-2.2.zip libext/

Modify the Oozie configuration file

oozie-site.xml

| Property | Value | Description |
|---|---|---|
| oozie.service.JPAService.jdbc.driver | com.mysql.jdbc.Driver | JDBC driver |
| oozie.service.JPAService.jdbc.url | jdbc:mysql://shsxt_hadoop101:3306/oozie | Address of the database Oozie uses |
| oozie.service.JPAService.jdbc.username | root | Database user name |
| oozie.service.JPAService.jdbc.password | 123456 | Database password |
| oozie.service.HadoopAccessorService.hadoop.configurations | *=/opt/sxt/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop | Lets Oozie reference the Hadoop configuration files |

Create Oozie's database in MySQL

Enter Mysql and create oozie database:

mysql -uroot -p000000

create database oozie;

grant all on *.* to root@'%' identified by '123456';

flush privileges;

exit;


Initialize Oozie

1) Upload the yarn sharelib package in the Oozie directory (oozie-sharelib-4.0.0-cdh5.3.6-yarn.tar.gz) to HDFS:

Tip: the yarn tar.gz package is decompressed automatically

bin/oozie-setup.sh sharelib create -fs hdfs://shsxt_hadoop102:8020 -locallib oozie-sharelib-4.0.0-cdh5.3.6-yarn.tar.gz

After it succeeds, open the HDFS web UI on port 50070 and check that files were generated in the corresponding directory.

2) Create the oozie.sql file and initialize the database

bin/ooziedb.sh create -sqlfile oozie.sql -run

3) Package the project and generate the war package

bin/oozie-setup.sh prepare-war


Startup and shutdown of Oozie

The startup command is as follows:

bin/oozied.sh start

The closing command is as follows:

bin/oozied.sh stop

Project implementation

System environment:

| System | Version |
|---|---|
| Windows | 10 Professional |
| Linux | CentOS 7 |

Development tools:

| Tool | Version |
|---|---|
| IDEA | 2019.2.4 |
| Maven | 3.6.2 |
| JDK | 1.8+ |

Cluster environment:

| Framework | Version |
|---|---|
| Hadoop | 2.6.5 |
| ZooKeeper | 3.4.10 |
| HBase | 1.3.1 |
| Flume | 1.6.0 |
| Sqoop | 1.4.6 |

Hardware environment:

| Hardware | hadoop102 | hadoop103 | hadoop104 |
|---|---|---|---|
| Memory | 1G | 1G | 1G |
| CPU | 2 cores | 1 core | 1 core |
| Hard disk | 50G | 50G | 50G |

Data production

Data structure

In HBase we include the timestamp in the rowkey and use a single column family named log, so the final table is eventlog, with one column family and a timestamp-based rowkey: create 'eventlog', 'log'

The rowkey design rule is: timestamp + CRC code of (uid + mid + en).

| Column | Description | Example |
|---|---|---|
| browser | Browser name | 360 |
| browser_v | Browser version | 3 |
| city | City | Guiyang City |
| country | Country | China |
| en | Event name | e_l |
| os | Operating system | linux |
| os_v | Operating system version | 1 |
| p_url | URL of the current page | http://www.tmall.com |
| pl | Platform | website |
| province | Province | Guizhou Province |
| s_time | System time | 1595605873000 |
| u_sd | Session id | 12344F83-6357-4A64-8527-F09216974234 |
| u_ud | User id | 26866661 |

Write code

Create a new maven project: shsxt_ecshop

- pom file configuration (customize the package name and technology versions for your own setup; the following content is required):

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.3.2.RELEASE</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<groupId>com.xxxx</groupId>

<artifactId>bigdatalog</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>bigdatalog</name>

<description>Demo project for Spring Boot</description>

<properties>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-freemarker</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

<exclusions>

<exclusion>

<groupId>org.junit.vintage</groupId>

<artifactId>junit-vintage-engine</artifactId>

</exclusion>

</exclusions>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

</plugins>

</build>

</project>

analytics.js: create a new JS file

Create an anonymous self-invoking function that declares: simple cookie operations (get, set); the configuration parameters; methods to start a session, handle a user's first visit, a page view, an order request, and a user-defined event; a method that must run before any external method is executed; a method that sends data to the server; a method that adds the common fields to every log sent to the collection server; and a method that returns the URL-encoded parameter string.

Tip: one parameter must be customized, serverUrl: "http://bd1601/shsxt.jpg" (the address of your own log server).
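The startSession method in the code below relies on isSessionTimeout and updatePreVisitTime. The following is a minimal pure sketch of that decision, assuming the last visit time is stored in milliseconds (as updatePreVisitTime(new Date().getTime()) suggests) and sessionTimeout is in seconds (360, that is 6 minutes, in clientConfig). The function names and the cookies object are hypothetical.

```javascript
// Hypothetical pure version of the session-expiry check.
// preVisitTime: last visit time in milliseconds (a cookie value, so possibly a string).
function isSessionTimeout(preVisitTime, now, sessionTimeoutSeconds) {
  if (!preVisitTime) {
    return true; // nothing recorded: treat it as a new session
  }
  return now - Number(preVisitTime) > sessionTimeoutSeconds * 1000;
}

// Keep the existing session id while it is still fresh, otherwise create a new one.
// cookies: { sid, preVisitTime } read from the bftrack_* cookies; newId: id generator.
function resolveSession(cookies, now, sessionTimeoutSeconds, newId) {
  if (cookies.sid && !isSessionTimeout(cookies.preVisitTime, now, sessionTimeoutSeconds)) {
    return { sid: cookies.sid, isNew: false };
  }
  return { sid: newId(), isNew: true };
}
```

Under these assumptions, a visitor who stays idle for more than six minutes gets a new u_sd on the next page load.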

(function () {

//Cookies are used to determine whether this is a new visit.

var CookieUtil = {

// Get the value of the cookie whose key is name

get: function (name) {

var cookieName = encodeURIComponent(name) + "=", cookieStart = document.cookie

.indexOf(cookieName), cookieValue = null;

if (cookieStart > -1) {

var cookieEnd = document.cookie.indexOf(";", cookieStart);

if (cookieEnd == -1) {

cookieEnd = document.cookie.length;

}

cookieValue = decodeURIComponent(document.cookie.substring(

cookieStart + cookieName.length, cookieEnd));

}

return cookieValue;

},

// set the name/value pair to browser cookie

set: function (name, value, expires, path, domain, secure) {

var cookieText = encodeURIComponent(name) + "="

+ encodeURIComponent(value);

if (expires) {

// set the expires time

var expiresTime = new Date();

expiresTime.setTime(expires);

cookieText += ";expires=" + expiresTime.toUTCString();

}

if (path) {

cookieText += ";path=" + path;

}

if (domain) {

cookieText += ";domain=" + domain;

}

if (secure) {

cookieText += ";secure";

}

document.cookie = cookieText;

},

setExt: function (name, value) {

this.set(name, value, new Date().getTime() + 315360000000, "/");

}

};

//===================================================================================

// The main body: the tracker object

var tracker = {

// config

clientConfig: {

//Address of the log server

serverUrl: "http://bd1601/shsxt.jpg",

//session expiration time

sessionTimeout: 360, // 360s -> 6min

//Maximum waiting time

maxWaitTime: 3600, // 3600s -> 60min -> 1h

//SDK version

ver: "1"

},

//cookie expiration time

cookieExpiresTime: 315360000000, // cookie expiration time, 10 years

//General data

columns: {

// The name of the column sent to the server

eventName: "en",

version: "ver",

platform: "pl",

sdk: "sdk",

uuid: "u_ud",

memberId: "u_mid",

sessionId: "u_sd",

clientTime: "c_time",

language: "l",

userAgent: "b_iev",

resolution: "b_rst",

currentUrl: "p_url",

referrerUrl: "p_ref",

title: "tt",

orderId: "oid",

orderName: "on",

currencyAmount: "cua",

currencyType: "cut",

paymentType: "pt",

category: "ca",

action: "ac",

kv: "kv_",

duration: "du"

},

//Event names and cookie keys used by the SDK

keys: {

pageView: "e_pv",

chargeRequestEvent: "e_crt",

launch: "e_l",

eventDurationEvent: "e_e",

sid: "bftrack_sid",

uuid: "bftrack_uuid",

mid: "bftrack_mid",

preVisitTime: "bftrack_previsit",

},

/**

* Get session id

*/

getSid: function () {

return CookieUtil.get(this.keys.sid);

},

/**

* Save session id to cookie

*/

setSid: function (sid) {

if (sid) {

CookieUtil.setExt(this.keys.sid, sid);

}

},

/**

* Get uuid from cookie

*/

getUuid: function () {

return CookieUtil.get(this.keys.uuid);

},

/**

* Save uuid to cookie

*/

setUuid: function (uuid) {

if (uuid) {

CookieUtil.setExt(this.keys.uuid, uuid);

}

},

/**

* Get memberID

*/

getMemberId: function () {

return CookieUtil.get(this.keys.mid);

},

/**

* Set mid

*/

setMemberId: function (mid) {

if (mid) {

CookieUtil.setExt(this.keys.mid, mid);

}

},

//Start a session

startSession: function () {

// Method triggered when js is loaded

if (this.getSid()) {

// The session id exists, indicating that the uuid also exists

if (this.isSessionTimeout()) {

// The session expires and a new session is generated

this.createNewSession();

} else {

// The session has not expired. Update the latest access time

this.updatePreVisitTime(new Date().getTime());

}

} else {

// The session id does not exist, indicating that the uuid does not exist

this.createNewSession();

}

//Whether the session was reused or newly created, send a pageview event for this page

this.onPageView();

},

//First visit of a new user: send the launch event

onLaunch: function () {

// Trigger launch event

var launch = {};

launch[this.columns.eventName] = this.keys.launch; // Set event name

this.setCommonColumns(launch); // Set public columns

this.sendDataToServer(this.parseParam(launch)); // Finally send encoded data

},

//User visits a page

onPageView: function () {

// Trigger page view event

if (this.preCallApi()) {

var time = new Date().getTime();

var pageviewEvent = {};

pageviewEvent[this.columns.eventName] = this.keys.pageView;

pageviewEvent[this.columns.currentUrl] = window.location.href; // Set current url

pageviewEvent[this.columns.referrerUrl] = document.referrer; // Set the url of the previous page

pageviewEvent[this.columns.title] = document.title; // Set title

this.setCommonColumns(pageviewEvent); // Set common columns

this.sendDataToServer(this.parseParam(pageviewEvent)); // Finally send encoded data

this.updatePreVisitTime(time);

}

},

// User places an order (charge request)

onChargeRequest: function (orderId, name, currencyAmount, currencyType, paymentType) {

// Event triggered to generate an order

if (this.preCallApi()) {

if (!orderId || !currencyType || !paymentType) {

this.log("Order id, currency type and payment type cannot be blank");

return;

}

if (typeof (currencyAmount) == "number") {

// Amount must be a number

var time = new Date().getTime();

var chargeRequestEvent = {};

chargeRequestEvent[this.columns.eventName] = this.keys.chargeRequestEvent;

chargeRequestEvent[this.columns.orderId] = orderId;

chargeRequestEvent[this.columns.orderName] = name;

chargeRequestEvent[this.columns.currencyAmount] = currencyAmount;

chargeRequestEvent[this.columns.currencyType] = currencyType;

chargeRequestEvent[this.columns.paymentType] = paymentType;

this.setCommonColumns(chargeRequestEvent); // Set common columns

this.sendDataToServer(this.parseParam(chargeRequestEvent)); // Finally send encoded data

this.updatePreVisitTime(time);

} else {

this.log("Order amount must be numeric");

return;

}

}

},

// Custom event (with an optional key-value map and duration)

onEventDuration: function (category, action, map, duration) {

// Trigger event

if (this.preCallApi()) {

if (category && action) {

var time = new Date().getTime();

var event = {};

event[this.columns.eventName] = this.keys.eventDurationEvent;

event[this.columns.category] = category;

event[this.columns.action] = action;

if (map) {

for (var k in map) {

if (k && map[k]) {

event[this.columns.kv + k] = map[k];

}

}

}

if (duration) {

event[this.columns.duration] = duration;

}

this.setCommonColumns(event); // Set common columns

this.sendDataToServer(this.parseParam(event)); // Finally send encoded data

this.updatePreVisitTime(time);

} else {

this.log("Category and action cannot be empty");

}

}

},

/**

* Must run before every public tracking method: starts a new session if the current one has timed out, otherwise refreshes the last access time

*/

preCallApi: function () {

if (this.isSessionTimeout()) {

// The session has timed out, so start a new one

this.startSession();

} else {

this.updatePreVisitTime(new Date().getTime());

}

return true;

},

//Send data to server

sendDataToServer: function (data) {

// alert(data);

// Send data to the server, where data is a string

var that = this;

var i2 = new Image(1, 1);

// <img src="url"></img>

i2.onerror = function () {

// Retry operation can be performed here

};

// e.g. http://bd1601/log.gif?<data>, where data is the encoded parameter string to upload

i2.src = this.clientConfig.serverUrl + "?" + data;

},

/**

* Add the common part sent to the log collection server to the data

*/

setCommonColumns: function (data) {

data[this.columns.version] = this.clientConfig.ver;

data[this.columns.platform] = "website";

data[this.columns.sdk] = "js";

data[this.columns.uuid] = this.getUuid(); // Set user id

data[this.columns.memberId] = this.getMemberId(); // Set member id

data[this.columns.sessionId] = this.getSid(); // Set sid

data[this.columns.clientTime] = new Date().getTime(); // Set client time

data[this.columns.language] = window.navigator.language; // Set browser language

data[this.columns.userAgent] = window.navigator.userAgent; // Set browser type

data[this.columns.resolution] = screen.width + "*" + screen.height; // Set browser resolution

},

/**

* Create a new session. If this is the user's first visit (no uuid cookie yet), also create a uuid and send the launch event.

*/

createNewSession: function () {

var time = new Date().getTime(); // Get current operation time

// 1. Update the session

var sid = this.generateId(); // Generate a session id

this.setSid(sid);

this.updatePreVisitTime(time); // Update last access time

// 2. View uuid

if (!this.getUuid()) {

// The uuid does not exist. First create the uuid, then save it to the cookie, and finally trigger the launch event

var uuid = this.generateId(); // Generate a uuid

this.setUuid(uuid);

this.onLaunch();

}

},

/**

* Encode the parameters as a URL query string (key1=value1&key2=value2)

*/

parseParam: function (data) {

var params = "";

// {key:value,key2:value2}

for (var e in data) {

if (e && data[e]) {

params += encodeURIComponent(e) + "="

+ encodeURIComponent(data[e]) + "&";

}

}

if (params) {

return params.substring(0, params.length - 1);

} else {

return params;

}

},

/**

* Generate uuid

*/

generateId: function () {

var chars = '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz';

var tmpid = [];

var r;

tmpid[8] = tmpid[13] = tmpid[18] = tmpid[23] = '-';

tmpid[14] = '4';

for (var i = 0; i < 36; i++) {

if (!tmpid[i]) {

r = 0 | Math.random() * 16;

tmpid[i] = chars[(i == 19) ? (r & 0x3) | 0x8 : r];

}

}

return tmpid.join('');

},

/**

* Check whether the session has expired by comparing the interval between now and the last access time with this.clientConfig.sessionTimeout (in seconds).<br/>

* Returns false if the interval is within the timeout; otherwise (including when no last access time is stored) returns true.

*/

isSessionTimeout: function () {

var time = new Date().getTime();

var preTime = CookieUtil.get(this.keys.preVisitTime);

if (preTime) {

// If the latest access time exists, judge the interval

return time - preTime > this.clientConfig.sessionTimeout * 1000;

}

return true;

},

/**

* Update last access time

*/

updatePreVisitTime: function (time) {

CookieUtil.setExt(this.keys.preVisitTime, time);

},

/**

* Print log

*/

log: function (msg) {

console.log(msg);

},

};

// Public methods exposed on window.__AE__

window.__AE__ = {

startSession: function () {

tracker.startSession();

},

onPageView: function () {

tracker.onPageView();

},

onChargeRequest: function (orderId, name, currencyAmount, currencyType, paymentType) {

tracker.onChargeRequest(orderId, name, currencyAmount, currencyType, paymentType);

},

onEventDuration: function (category, action, map, duration) {

tracker.onEventDuration(category, action, map, duration);

},

setMemberId: function (mid) {

tracker.setMemberId(mid);

}

};

// Runs automatically when the script is loaded

var autoLoad = function () {

// Set parameters

var _aelog_ = _aelog_ || window._aelog_ || [];

var memberId = null;

for (var i = 0; i < _aelog_.length; i++) {

_aelog_[i][0] === "memberId" && (memberId = _aelog_[i][1]);

}

// If a memberId was supplied, save it in the cookie

memberId && __AE__.setMemberId(memberId);

// Start session

__AE__.startSession();

};

autoLoad();

})();
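The session logic in `startSession`, `preCallApi` and `isSessionTimeout` comes down to one rule. A session expires when the time since the last recorded visit exceeds `clientConfig.sessionTimeout` seconds, and a missing last-visit cookie also counts as expired. The Java sketch below restates that rule so it can be tested in isolation. `SessionRule` is a hypothetical name, and the 360-second timeout used in the test is an arbitrary value, not the project's setting.

```java
/** Hypothetical restatement of the tracker's isSessionTimeout() rule. */
final class SessionRule {
    private SessionRule() {}

    /**
     * A null preVisitTimeMillis (no cookie yet) means the session is treated as expired;
     * otherwise the gap since the last visit is compared with the timeout in seconds.
     */
    static boolean isSessionTimeout(long nowMillis, Long preVisitTimeMillis, long sessionTimeoutSeconds) {
        if (preVisitTimeMillis == null) {
            return true; // no last-visit time recorded: start a new session
        }
        return nowMillis - preVisitTimeMillis > sessionTimeoutSeconds * 1000L;
    }
}
```

The comparison is strict (`>`), so a gap exactly equal to the timeout still belongs to the current session.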

- AnalyticsEngineSDK.java

This class checks that the order id and member id are not empty. If both are present, it builds the request URL with buildUrl and passes it to SendDataMonitor, which sends it asynchronously.

Customization required: set accessUrl to your own collection endpoint, e.g. accessUrl = "http://bd1601/shsxt.jpg";

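import java.io.UnsupportedEncodingException;

import java.net.URLEncoder;

import java.util.HashMap;

import java.util.Map;

import java.util.logging.Level;

import java.util.logging.Logger;

/**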

* Analysis engine sdk java server-side data collection

*

* @author root

* @version 1.0

*

*/

public class AnalyticsEngineSDK {

// Log print object

private static final Logger log = Logger.getGlobal();

// The body of the request url

public static final String accessUrl = "http://bd1601/shsxt.jpg";

private static final String platformName = "java_server";

private static final String sdkName = "jdk";

private static final String version = "1";

/**

* Trigger the event of successful order payment and send the event data to the server

*

* @param orderId

* Order payment id

* @param memberId

* Order payment member id

* @return If the data is successfully sent (added to the send queue), then true is returned; Otherwise, it returns false (parameter exception & failed to add to send queue)

*/

public static boolean onChargeSuccess(String orderId, String memberId) {

try {

if (isEmpty(orderId) || isEmpty(memberId)) {

// Order id or memberid is null

log.log(Level.WARNING, "Order id and member id cannot be empty");

return false;

}

// When the code is executed here, it means that neither the order id nor the member id is empty.

Map<String, String> data = new HashMap<String, String>();

data.put("u_mid", memberId);

data.put("oid", orderId);

data.put("c_time", String.valueOf(System.currentTimeMillis()));

data.put("ver", version);

data.put("en", "e_cs");

data.put("pl", platformName);

data.put("sdk", sdkName);

// Create url

String url = buildUrl(data);

// Add the url to the send queue; it is sent asynchronously

SendDataMonitor.addSendUrl(url);

return true;

} catch (Throwable e) {

log.log(Level.WARNING, "Exception while sending data", e);

}

return false;

}

/**

* Trigger the order refund event and send the refund data to the server

*

* @param orderId

* Refund order id

* @param memberId

* Refund member id

* @return Returns true if the data is sent successfully. Otherwise, false is returned.

*/

public static boolean onChargeRefund(String orderId, String memberId) {

try {

if (isEmpty(orderId) || isEmpty(memberId)) {

// Order id or memberid is null

log.log(Level.WARNING, "Order id and member id cannot be empty");

return false;

}

// When the code is executed here, it means that neither the order id nor the member id is empty.

Map<String, String> data = new HashMap<String, String>();

data.put("u_mid", memberId);

data.put("oid", orderId);

data.put("c_time", String.valueOf(System.currentTimeMillis()));

data.put("ver", version);

data.put("en", "e_cr");

data.put("pl", platformName);

data.put("sdk", sdkName);

// Build url

String url = buildUrl(data);

// Add the url to the send queue; it is sent asynchronously

SendDataMonitor.addSendUrl(url);

return true;

} catch (Throwable e) {

log.log(Level.WARNING, "Exception while sending data", e);

}

return false;

}

/**

* Build the url based on the passed in parameters

*

* @param data

* @return

* @throws UnsupportedEncodingException

*/

private static String buildUrl(Map<String, String> data)

throws UnsupportedEncodingException {

StringBuilder sb = new StringBuilder();

// Produces e.g. http://bd1601/shsxt.jpg?key1=value1&key2=value2

sb.append(accessUrl).append("?");

for (Map.Entry<String, String> entry : data.entrySet()) {

if (isNotEmpty(entry.getKey()) && isNotEmpty(entry.getValue())) {

sb.append(entry.getKey().trim())

.append("=")

.append(URLEncoder.encode(entry.getValue().trim(), "utf-8"))

.append("&");

}

}

return sb.substring(0, sb.length() - 1); // Remove the trailing '&'

}

/**

* Judge whether the string is empty. If it is empty, return true. Otherwise, false is returned.

*

* @param value

* @return

*/

private static boolean isEmpty(String value) {

return value == null || value.trim().isEmpty();

}

/**

* Judge whether the string is not empty. If not, return true. If it is empty, false is returned.

*

* @param value

* @return

*/

private static boolean isNotEmpty(String value) {

return !isEmpty(value);

}

}
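Because `buildUrl` iterates over a `HashMap`, the parameter order in the URL is not guaranteed. A parser that looks parameters up by name is unaffected by this. The sketch below reproduces the same encoding rules: it skips empty values, trims keys and values, UTF-8-encodes the values and drops the trailing `&`. It iterates a `TreeMap` so the output is deterministic and easy to check. `QueryUrlSketch` is a hypothetical name. Keys must be non-null, because `TreeMap` rejects null keys.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical mirror of AnalyticsEngineSDK.buildUrl with sorted keys. */
final class QueryUrlSketch {
    private QueryUrlSketch() {}

    static String build(String baseUrl, Map<String, String> data) {
        StringBuilder sb = new StringBuilder(baseUrl).append('?');
        for (Map.Entry<String, String> e : new TreeMap<>(data).entrySet()) {
            String key = e.getKey();
            String value = e.getValue();
            if (key.trim().isEmpty() || value == null || value.trim().isEmpty()) {
                continue; // empty keys/values are skipped, as in the original
            }
            try {
                sb.append(key.trim()).append('=')
                  .append(URLEncoder.encode(value.trim(), "utf-8")).append('&');
            } catch (UnsupportedEncodingException ex) {
                throw new IllegalStateException(ex); // utf-8 is always available
            }
        }
        // Drop the trailing '&' (or the '?' if no parameter was appended)
        return sb.substring(0, sb.length() - 1);
    }
}
```

`URLEncoder` encodes a space as `+`, so a member id such as `zhang san` arrives as `zhang+san`.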

- SendDataMonitor.java

This class sends the URL requests. SendDataMonitor is a singleton. Its constructor is private, and the static getSendDataMonitor() method returns the single instance, starting a background thread on first use. addSendUrl() keeps adding URLs to the instance's blocking queue. Meanwhile, the background thread runs SendDataMonitor.monitor.run(), which loops forever, taking URLs from the queue and sending them.

No customization required

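import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.HttpURLConnection;

import java.net.URL;

import java.util.concurrent.BlockingQueue;

import java.util.concurrent.LinkedBlockingQueue;

import java.util.logging.Level;

import java.util.logging.Logger;

/**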

* The monitor that sends url data, which is used to start a separate thread to send data

*

* @author root

*

*/

public class SendDataMonitor {

// Logging object

private static final Logger log = Logger.getGlobal();

// Blocking queue holding the urls waiting to be sent

private BlockingQueue<String> queue = new LinkedBlockingQueue<String>();

// The singleton instance; volatile so that double-checked locking is safe

private static volatile SendDataMonitor monitor = null;

private SendDataMonitor() {

// Private constructor: enforces the singleton pattern

}

/**

* Get the singleton monitor instance using double-checked locking; the first call also starts the sending thread

*

* @return

*/

public static SendDataMonitor getSendDataMonitor() {

if (monitor == null) {

synchronized (SendDataMonitor.class) {

if (monitor == null) {

monitor = new SendDataMonitor();

Thread thread = new Thread(new Runnable() {

@Override

public void run() {

// The specific processing method is invoked in the thread.

SendDataMonitor.monitor.run();

}

});

// Not set as a daemon thread while testing (a non-daemon thread keeps the JVM running)

// thread.setDaemon(true);

thread.start();

}

}

}

return monitor;

}

/**

* Add a url to the queue

*

* @param url

* @throws InterruptedException

*/

public static void addSendUrl(String url) throws InterruptedException {

getSendDataMonitor().queue.put(url);

}

/**

* Specifically implement the method of sending url

*

*/

private void run() {

while (true) {

try {

String url = this.queue.take();

// Official send url

HttpRequestUtil.sendData(url);

} catch (Throwable e) {

log.log(Level.WARNING, "Failed to send url", e);

}

}

}

/**

* Nested HTTP utility class used to send the data

*

* @author root

*

*/

public static class HttpRequestUtil {

/**

* Specific method of sending url

*

* @param url

* @throws IOException

*/

public static void sendData(String url) throws IOException {

HttpURLConnection con = null;

BufferedReader in = null;

try {

URL obj = new URL(url); // Create url object

con = (HttpURLConnection) obj.openConnection(); // Open url connection

// Set connection parameters

con.setConnectTimeout(5000); // Connection expiration time

con.setReadTimeout(5000); // Read data expiration time

con.setRequestMethod("GET"); // Set the request type to get

System.out.println("Sending url: " + url);

// Send connection request

in = new BufferedReader(new InputStreamReader(

con.getInputStream()));

// TODO: consider here whether you can

} finally {

try {

if (in != null) {

in.close();

}

} catch (Throwable e) {

// nothing

}

try {

con.disconnect();

} catch (Throwable e) {

// nothing

}

}

}

}

}
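The same producer/consumer idea can be written without a global singleton. The following is a hypothetical sketch, not the project's class. A single worker thread drains the queue, and the actual sending step is injected as a `Consumer<String>`, so it can be tested without a network. Unlike the original, the worker is a daemon thread. The JVM can therefore exit while URLs are still queued, and those URLs are lost. Interrupting the worker stops it cleanly.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

/** Hypothetical variant of SendDataMonitor: one worker thread drains a queue of urls. */
final class AsyncUrlSender {
    private final BlockingQueue<String> queue = new LinkedBlockingQueue<>();
    private final Consumer<String> sender;

    AsyncUrlSender(Consumer<String> sender) {
        this.sender = sender;
        Thread worker = new Thread(this::drainLoop, "url-sender");
        // Daemon: the JVM may exit while urls are still queued (they are then lost)
        worker.setDaemon(true);
        worker.start();
    }

    /** Enqueues a url; offer() on an unbounded queue never blocks the caller. */
    boolean submit(String url) {
        return queue.offer(url);
    }

    private void drainLoop() {
        while (true) {
            String url;
            try {
                url = queue.take(); // blocks until a url is available
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return; // interruption stops the worker
            }
            try {
                sender.accept(url);
            } catch (RuntimeException e) {
                // A failing url must not kill the worker; real code would log it
            }
        }
    }
}
```

To use the real HTTP call, the consumer could wrap `SendDataMonitor.HttpRequestUtil.sendData(url)` and rethrow its `IOException` as an `UncheckedIOException`.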

- Test.java

Tests logging of the payment events

No customization required

public class Test {

public static String day = "20190607";

public static void main(String[] args) {

System.out.println("=================Start running my code=================");

//Insert code to collect logs

//When payment is successful

AnalyticsEngineSDK.onChargeSuccess("orderid123", "zhangsan");

//When the order is refunded

AnalyticsEngineSDK.onChargeRefund("orderid456", "lisi");

System.out.println("==========Continue to execute my code====================");

//Normal code, continue execution

}

}

Packaging and testing

Testing in Linux

Upload the jar package to the specified directory of linux

mkdir /opt/sxt/datalog/

cd /opt/sxt/datalog/

Start project

nohup java -jar bigdatalog.jar >>/opt/sxt/datalog/runlog.log 2>&1 &

Verification

http://192.168.58.201:8080/

Data collection

Idea:

- Configure nginx and start the cluster and nginx

- Configure flume

- Start flume monitoring task

- Run the log production script

- Observe and verify the results

Nginx configuration

Edit the nginx configuration file: nginx.conf

# Go to the nginx conf directory

cd /opt/sxt/nginx/conf

# Edit the file

vim nginx.conf

Change it to the following:

# Changes: uncomment the log_format line (named my_format here) and add a new location block

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

log_format my_format '$remote_addr^A$msec^A$http_host^A$request_uri';

sendfile on;

keepalive_timeout 65;

server {

listen 80;

server_name localhost;

location / {

root html;

index index.html index.htm;

}

location = /shsxt.jpg {

default_type image/gif;

access_log /opt/data/access.log my_format;

}

}

}

Verification

# Restart nginx, then verify

# Create the log storage directory

mkdir -p /opt/data/

# Follow the log file

tail -F /opt/data/access.log

# Verify: request shsxt-hadoop101/shsxt.jpg in a browser and check that a new line appears in the log
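The `my_format` line writes four fields per request:

- the client IP
- `$msec` (the time of the log write, in seconds with millisecond resolution)
- the host
- the request URI, whose query string carries the SDK parameters

Assuming the `^A` separator is the U+0001 control character (the way vim displays it), one log line can be split roughly as follows. The class name and the `ip`, `s_time` and `host` keys are hypothetical. `en`, `oid` and `u_mid` are the parameter names used by the Java SDK above.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLDecoder;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical parser for one line written with the my_format log format. */
final class AccessLogLineParser {
    // Assumption: fields are separated by the U+0001 control character (shown as ^A)
    private static final String SEP = "\u0001";

    private AccessLogLineParser() {}

    /** Returns the fields plus decoded query parameters, or null for a malformed line. */
    static Map<String, String> parse(String line) {
        if (line == null) {
            return null;
        }
        String[] fields = line.split(SEP, -1);
        if (fields.length != 4) {
            return null; // dirty data: wrong number of fields
        }
        Map<String, String> result = new LinkedHashMap<>();
        result.put("ip", fields[0]);
        result.put("s_time", fields[1]); // $msec, e.g. 1559890000.123
        result.put("host", fields[2]);
        String uri = fields[3];
        int q = uri.indexOf('?');
        if (q < 0) {
            return result; // no query string
        }
        for (String pair : uri.substring(q + 1).split("&")) {
            int eq = pair.indexOf('=');
            if (eq <= 0) {
                continue; // skip pairs without a key
            }
            try {
                result.put(URLDecoder.decode(pair.substring(0, eq), "UTF-8"),
                           URLDecoder.decode(pair.substring(eq + 1), "UTF-8"));
            } catch (UnsupportedEncodingException e) {
                throw new IllegalStateException(e); // UTF-8 is always supported
            }
        }
        return result;
    }
}
```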

Create the nginx log cutting (rotation) script

# Create the nginx log cutting script

# Create the script

vim nginx.log.sh

# Add the following content

#!/bin/bash

# nginx log cutting script

#Set log file storage directory

logs_path="/opt/data/"

#Set backup directory

logs_bak_path="/opt/data/access_logs_bak/"

# Move the current log into the backup directory, adding today's date to its name

mv ${logs_path}access.log ${logs_bak_path}access_$(date "+%Y%m%d").log

# Reload nginx so that it creates a new access.log

/opt/bdp/nginx/sbin/nginx -s reload
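For readers who prefer Java, the same rotation step can be sketched with `java.nio.file`: it moves `access.log` into the backup directory under a dated name. This is a hypothetical sketch (`LogRotationSketch` is not part of the project). Unlike the shell script, it creates the backup directory if it is missing. It does not signal nginx, so `nginx -s reload` is still needed afterwards.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

/** Hypothetical Java equivalent of the mv step in nginx.log.sh. */
final class LogRotationSketch {
    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyyMMdd");

    private LogRotationSketch() {}

    /** Moves logFile to backupDir/access_<yyyyMMdd>.log and returns the new path. */
    static Path rotate(Path logFile, Path backupDir, LocalDate day) {
        try {
            Files.createDirectories(backupDir); // the shell script assumes this directory exists
            Path target = backupDir.resolve("access_" + day.format(DAY) + ".log");
            // No REPLACE_EXISTING: a second run on the same day fails instead of overwriting
            return Files.move(logFile, target);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Refusing to overwrite matters here. The Flume spooling directory source below also expects file names in its directory to be unique.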

Flume configuration

Create a new Flume configuration file: example.conf

# Create a directory dedicated to storing Flume configuration files

mkdir -p /opt/bdp/flume/options

# Move to the configuration directory

cd /opt/bdp/flume/options

# Create the first configuration file

vim example.conf

Edit the Flume configuration file and add the following content:

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Source: watch the backup directory that the log cutting script moves files into

a1.sources.r1.type = spooldir

a1.sources.r1.spoolDir = /opt/data/access_logs_bak

# Sink: write to HDFS, one directory per day

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = hdfs://bdp/data/test/flume/%Y%m%d

# Prefix of uploaded files

a1.sinks.k1.hdfs.filePrefix = access_logs

# File rolling: the count, time and size triggers below are all disabled (0), so files are closed only by idleTimeout

# Roll to a new file after this many events; 0 = do not roll based on event count

a1.sinks.k1.hdfs.rollCount = 0

# Roll to a new file after this many seconds; 0 = do not roll based on time

a1.sinks.k1.hdfs.rollInterval = 0

# Roll to a new file after this many bytes; 0 = do not roll based on file size

a1.sinks.k1.hdfs.rollSize = 0

# If no data is written to the open temporary file for this many seconds, close it and rename it to the target file

a1.sinks.k1.hdfs.idleTimeout = 3

a1.sinks.k1.hdfs.fileType = DataStream

a1.sinks.k1.hdfs.useLocalTimeStamp = true

# Round the timestamp down (similar to rounding) before expanding time escapes; if enabled, this affects all time escapes except %t

# Rounding to 5 minutes only yields 5-minute directories if hdfs.path contains hour/minute escapes such as %H%M; with %Y%m%d alone, directories stay daily

a1.sinks.k1.hdfs.round = true

# Round down to a multiple of this value

a1.sinks.k1.hdfs.roundValue = 5

# Unit used for rounding: second, minute or hour

a1.sinks.k1.hdfs.roundUnit = minute

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
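With `hdfs.round=true`, `roundValue=5` and `roundUnit=minute`, the sink rounds each event's timestamp down to a multiple of 5 minutes before expanding the time escapes in `hdfs.path`. The sketch below imitates this behavior, using `java.time` patterns as stand-ins for Flume's `%Y%m%d` escapes. It shows that the day-level path is unaffected, while a minute-level path would change. It rounds on the epoch timeline, which matches wall-clock rounding only in time zones whose UTC offset is a whole multiple of the rounding unit. `FlumeRoundSketch` is a hypothetical name, not Flume code.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

/** Hypothetical illustration of round-down bucketing, not Flume's implementation. */
final class FlumeRoundSketch {
    private FlumeRoundSketch() {}

    /** Rounds an epoch-millis timestamp down to a multiple of the given number of minutes. */
    static long roundDownMinutes(long epochMillis, int minutes) {
        long unit = minutes * 60_000L;
        return epochMillis - Math.floorMod(epochMillis, unit);
    }

    /** Formats the rounded time with a java.time pattern standing in for the path escapes. */
    static String bucket(long epochMillis, int minutes, String pattern, ZoneId zone) {
        Instant rounded = Instant.ofEpochMilli(roundDownMinutes(epochMillis, minutes));
        return DateTimeFormatter.ofPattern(pattern).withZone(zone).format(rounded);
    }
}
```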

Start flume

nohup flume-ng agent -n a1 -c options/ -f example.conf -Dflume.root.logger=INFO,console >> /root/flume_log 2>&1 &

Run the log production script and check the Flume log:

cat /root/flume_log

Data consumption

Once the steps above work, write the code that consumes the collected data and stores the results in HBase.

Idea:

-

Write an MR job that reads the data from the HDFS cluster and prints it to the console, to check that reading works;

-

Once the HDFS data can be read, write it to HBase by calling the relevant HBase API methods;

-

These two steps are enough to consume and store the data. To keep the code decoupled, however, some settings should be moved into external property files, and the generic HBase operations should be encapsulated in a utility class.
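The text does not say how HBase row keys are built. One common pattern is shown here purely as a hypothetical sketch; it is not taken from the project. The key starts with the server time, so rows sort by time, and ends with a CRC32 of identifying fields, which keeps keys for the same millisecond distinct. It uses only the JDK, so it runs without an HBase cluster.

```java
import java.nio.charset.StandardCharsets;
import java.util.zip.CRC32;

/** Hypothetical row-key builder: "<serverTime>_<crc32 of identifying fields>". */
final class RowKeySketch {
    private RowKeySketch() {}

    static String rowKey(long serverTimeMillis, String uuid, String memberId, String eventName) {
        CRC32 crc = new CRC32();
        // '|' separates the fields so that ("ab","c") and ("a","bc") hash differently
        String identity = uuid + "|" + memberId + "|" + eventName;
        crc.update(identity.getBytes(StandardCharsets.UTF_8));
        return serverTimeMillis + "_" + crc.getValue();
    }
}
```

Time-first keys make time-range scans cheap, but they concentrate writes for the current period on one region. Salting the key is the usual remedy if write load becomes a problem. Two events with identical time, uuid, member id and event name would still produce the same key.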

Create a new module project: shsxt_ecshop_loganalyse

- pom.xml file configuration:

No customization required

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.example</groupId>

<artifactId>shsxt_ecshop_loganalyse</artifactId>

<version>1.0</version>

<!--Use the Aliyun Maven repository-->

<repositories>

<repository>

<id>central</id>

<name>aliyun maven</name>

<url>https://maven.aliyun.com/nexus/content/groups/public/</url>

<layout>default</layout>

</repository>

</repositories>

<!--Set basic project parameters-->

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

</properties>

<!--Add dependency-->

<dependencies>

<!--Hadoop common dependency-->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.5</version>

</dependency>

<!--Hadoop client dependency-->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.6.5</version>

</dependency>

<!--HDFS dependency-->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.5</version>

</dependency>

<!--MapReduce client core dependency-->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>2.6.5</version>

</dependency>

<!--Hive exec dependency-->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>2.3.7</version>

</dependency>

<!--HBase client dependency-->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>1.4.13</version>

</dependency>

<!--HBase server dependency-->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>1.4.13</version>

</dependency>

<!--MySQL connector dependency-->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.20</version>

</dependency>

<!--User-agent (browser) parsing dependency-->

<dependency>

<groupId>cz.mallat.uasparser</groupId>

<artifactId>uasparser</artifactId>

<version>0.6.2</version>

</dependency>

<!--Add test dependency-->

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<scope>test</scope>

</dependency>

</dependencies>

</project>

What the ETL code does

- Remove dirty data

- Convert the data into directly usable fields (resolve IP locations, parse the browser, split the log line)

- Store the results of data cleaning in HBase
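Removing dirty data usually means rejecting records that lack the fields later steps depend on. The minimal filter below assumes each log line has already been split into a key/value map. The required keys `en` and `pl` follow the parameter names used by the Java SDK. The `s_time` key (the server timestamp taken from nginx's `$msec`) and the `DirtyDataFilter` name are hypothetical.

```java
import java.util.Map;

/** Hypothetical dirty-data check applied to one parsed log record. */
final class DirtyDataFilter {
    private DirtyDataFilter() {}

    static boolean isValid(Map<String, String> record) {
        if (record == null) {
            return false; // the line could not be parsed at all
        }
        String en = record.get("en");        // event name
        String pl = record.get("pl");        // platform
        String sTime = record.get("s_time"); // server time, e.g. 1559890000.123
        if (isBlank(en) || isBlank(pl) || isBlank(sTime)) {
            return false;
        }
        try {
            return Double.parseDouble(sTime) > 0; // reject non-numeric or non-positive times
        } catch (NumberFormatException e) {
            return false;
        }
    }

    private static boolean isBlank(String s) {
        return s == null || s.trim().isEmpty();
    }
}
```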

ETL coding

- IPSeeker: create a new IPSeeker class file

IP parsing: IPSeeker reads the qqwry.dat file to look up the location of an IP address. The format of qqwry.dat is:

I. File header, 8 bytes in total:

-

Absolute offset of the first starting IP, 4 bytes

-

Absolute offset of the last starting IP, 4 bytes

II. "End address / country / region" record area. Each record begins with a four-byte end IP address, followed by two parts:

-

Country record

-

Area record (not always present)

Both country records and area records take one of two forms:

-

A zero-terminated string

-

4 bytes, where the first byte is a flag with value 0x1 or 0x2

a. 0x1 means that the area record follows the data at the absolute offset. Note that it follows the data at that offset, not these four bytes

b. When it is 0x2, it indicates that there is no area record after absolute offset

In either case, the remaining three bytes are the absolute file offset of the actual country name. In an area record, the meanings of 0x1 and 0x2 are unknown. However, if either flag appears, it is followed by a 3-byte offset; otherwise the area is a zero-terminated string.

-

III. "Start address / end address offset" record area

Each record is 7 bytes, arranged from small to large according to the starting address

a. starting IP address, 4 bytes

b. absolute offset of end ip address, 3 bytes

Note that the IP addresses and all offsets in this file are little-endian, whereas Java reads multi-byte values in big-endian order, so they must be converted.
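The layout described above can be made concrete with a few byte-level helpers. The sketch below uses a hypothetical class, `QqwryBytes`, that works on an in-memory byte array rather than the `RandomAccessFile` used by `IPSeeker`. It does three things:

- reads 3-byte little-endian offsets
- converts a little-endian IP to dotted form
- follows 0x1/0x2 redirects to a zero-terminated string

It deliberately ignores how the two flags differ in where the area record lives. Real files typically store names in GBK, so a caller would pass that charset; the test uses ASCII.

```java
import java.nio.charset.Charset;

/** Hypothetical helpers illustrating the little-endian layout of qqwry.dat. */
final class QqwryBytes {
    private static final int MAX_REDIRECTS = 8; // guards against corrupt, cyclic offsets

    private QqwryBytes() {}

    /** Reads a 3-byte little-endian unsigned value (the size of every offset in the file). */
    static int readUInt3LE(byte[] data, int off) {
        return (data[off] & 0xFF)
                | ((data[off + 1] & 0xFF) << 8)
                | ((data[off + 2] & 0xFF) << 16);
    }

    /** Reads a 4-byte little-endian IP and returns it in dotted, big-endian order. */
    static String readIpLE(byte[] data, int off) {
        return (data[off + 3] & 0xFF) + "." + (data[off + 2] & 0xFF) + "."
                + (data[off + 1] & 0xFF) + "." + (data[off] & 0xFF);
    }

    /**
     * Simplified string resolution: while the field starts with a 0x01 or 0x02 flag,
     * jump to the 3-byte offset after the flag; then read a zero-terminated string.
     */
    static String readString(byte[] data, int off, Charset charset) {
        for (int hops = 0; data[off] == 0x01 || data[off] == 0x02; hops++) {
            if (hops >= MAX_REDIRECTS) {
                throw new IllegalArgumentException("too many redirects at offset " + off);
            }
            off = readUInt3LE(data, off + 1);
        }
        int end = off;
        while (data[end] != 0) {
            end++; // scan to the terminating zero byte
        }
        return new String(data, off, end - off, charset);
    }
}
```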

No customization required

-

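import java.io.FileNotFoundException;

import java.io.IOException;

import java.io.RandomAccessFile;

import java.nio.ByteOrder;

import java.nio.MappedByteBuffer;

import java.nio.channels.FileChannel;

import java.util.ArrayList;

import java.util.Hashtable;

import java.util.List;

public class IPSeeker {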

// Some fixed constants, such as record length, etc

private static final int IP_RECORD_LENGTH = 7;

private static final byte AREA_FOLLOWED = 0x01;

private static final byte NO_AREA = 0x2;

// It is used as a cache. When querying an ip, first check the cache to reduce unnecessary repeated searches

private Hashtable ipCache;

// Random-access handle on the IP database file

private RandomAccessFile ipFile;

// Memory-mapped view of the file

private MappedByteBuffer mbb;

// Singleton instance

private static IPSeeker instance = null;

// Absolute offsets of the first and last records in the start-IP index area

private long ipBegin, ipEnd;

// Temporary variables used to improve efficiency

private IPLocation loc;

private byte[] buf;

private byte[] b4;

private byte[] b3;

/**

* Protected constructor; use getInstance() to obtain the shared instance

*/

protected IPSeeker() {

ipCache = new Hashtable();

loc = new IPLocation();

buf = new byte[100];

b4 = new byte[4];

b3 = new byte[3];

try {

String ipFilePath = IPSeeker.class.getResource("/qqwry.dat")

.getFile();

ipFile = new RandomAccessFile(ipFilePath, "r");

} catch (FileNotFoundException e) {

System.out.println("IP address database file not found; IP lookup will be unavailable");

ipFile = null;

}

// If the file is opened successfully, read the file header information

if (ipFile != null) {

try {

ipBegin = readLong4(0);

ipEnd = readLong4(4);

if (ipBegin == -1 || ipEnd == -1) {

ipFile.close();

ipFile = null;

}

} catch (IOException e) {

System.out.println("IP address database file has an invalid format; IP lookup will be unavailable");

ipFile = null;

}

}

}

/**

* @return The singleton instance

*/

public static IPSeeker getInstance() {

if (instance == null) {

instance = new IPSeeker();

}

return instance;

}

/**

* Given part of a location name, return all IP range records whose country or area contains the substring s

* @param s Location substring

* @return List containing IPEntry type

*/

public List getIPEntriesDebug(String s) {

List ret = new ArrayList();

long endOffset = ipEnd + 4;

for (long offset = ipBegin + 4; offset <= endOffset; offset += IP_RECORD_LENGTH) {

// Read end IP offset

long temp = readLong3(offset);

// If temp is not equal to - 1, read the location information of IP

if (temp != -1) {

IPLocation loc = getIPLocation(temp);

// Judge whether the location contains s substring. If so, add the record to the List. If not, continue

if (loc.country.indexOf(s) != -1 || loc.area.indexOf(s) != -1) {

IPEntry entry = new IPEntry();

entry.country = loc.country;

entry.area = loc.area;

// Get start IP

readIP(offset - 4, b4);

entry.beginIp = IPSeekerUtils.getIpStringFromBytes(b4);

// Get end IP

readIP(temp, b4);

entry.endIp = IPSeekerUtils.getIpStringFromBytes(b4);

// Add this record

ret.add(entry);

}

}

}

return ret;

}


/**

* Given part of a location name, return all IP range records whose country or area contains the substring s

*

* @param s

* Location substring

* @return List containing IPEntry type

*/

public List getIPEntries(String s) {

List ret = new ArrayList();

try {

// Mapping IP information files to memory

if (mbb == null) {

FileChannel fc = ipFile.getChannel();

mbb = fc.map(FileChannel.MapMode.READ_ONLY, 0, ipFile.length());

mbb.order(ByteOrder.LITTLE_ENDIAN);

}

int endOffset = (int) ipEnd;

for (int offset = (int) ipBegin + 4; offset <= endOffset; offset += IP_RECORD_LENGTH) {

int temp = readInt3(offset);

if (temp != -1) {

IPLocation loc = getIPLocation(temp);

// Judge whether the location contains s substring. If so, add the record to the List. If not, continue

if (loc.country.indexOf(s) != -1

|| loc.area.indexOf(s) != -1) {

IPEntry entry = new IPEntry();

entry.country = loc.country;

entry.area = loc.area;

// Get start IP

readIP(offset - 4, b4);

entry.beginIp = IPSeekerUtils.getIpStringFromBytes(b4);

// Get end IP

readIP(temp, b4);

entry.endIp = IPSeekerUtils.getIpStringFromBytes(b4);

// Add this record

ret.add(entry);

}

}

}

} catch (IOException e) {

System.out.println(e.getMessage());

}

return ret;

}


/**

* Read a 3-byte int at the given offset in the memory-mapped file

*

* @param offset

* @return

*/

private int readInt3(int offset) {

mbb.position(offset);

return mbb.getInt() & 0x00FFFFFF;

}


/**

* Read a 3-byte int at the current position of the memory-mapped file

*

* @return

*/

private int readInt3() {

return mbb.getInt() & 0x00FFFFFF;

}


/**

* Get country name according to IP

*

* @param ip

* IP address as a byte array

* @return Country name string

*/

public String getCountry(byte[] ip) {

// Make sure the IP database file was opened successfully

if (ipFile == null)

return "Invalid IP database file";

// Convert the ip byte array to a string (used as the cache key)

String ipStr = IPSeekerUtils.getIpStringFromBytes(ip);

// Check the cache first; on a hit, skip searching the file

if (ipCache.containsKey(ipStr)) {

IPLocation loc = (IPLocation) ipCache.get(ipStr);

return loc.country;

} else {

IPLocation loc = getIPLocation(ip);

ipCache.put(ipStr, loc.getCopy());

return loc.country;

}

}


/**

* Get country name according to IP

*

* @param ip

* IP address as a string

* @return Country name string

*/

public String getCountry(String ip) {

return getCountry(IPSeekerUtils.getIpByteArrayFromString(ip));

}


/**

* Get region name according to IP

*

* @param ip

* IP address as a byte array

* @return Region name string

*/

public String getArea(byte[] ip) {

// Make sure the IP database file was opened successfully

if (ipFile == null)

return "Invalid IP database file";

// Convert the ip byte array to a string (used as the cache key)

String ipStr = IPSeekerUtils.getIpStringFromBytes(ip);

// Check the cache first; on a hit, skip searching the file

if (ipCache.containsKey(ipStr)) {

IPLocation loc = (IPLocation) ipCache.get(ipStr);

return loc.area;

} else {

IPLocation loc = getIPLocation(ip);

ipCache.put(ipStr, loc.getCopy());

return loc.area;

}

}

/**

* Get region name according to IP

*

* @param ip

* IP address as a string

* @return Region name string

*/

public String getArea(String ip) {

return getArea(IPSeekerUtils.getIpByteArrayFromString(ip));

}


/**

* Search the IP database file for the given ip and return its IPLocation structure

*

* @param ip

* IP to query

* @return IPLocation structure

*/

public IPLocation getIPLocation(byte[] ip) {

IPLocation info = null;

long offset = locateIP(ip);

if (offset != -1)

info = getIPLocation(offset);

if (info == null) {

info = new IPLocation();

info.country = "Unknown country";

info.area = "Unknown region";

}

return info;

}

/**

* Read 4 bytes at offset as a little-endian long. RandomAccessFile.readInt() is big-endian, so the bytes are assembled manually

*

* @param offset

* @return The value read, or -1 if reading the file failed

*/

private long readLong4(long offset) {

long ret = 0;

try {

ipFile.seek(offset);

ret |= (ipFile.readByte() & 0xFF);

ret |= ((ipFile.readByte() << 8) & 0xFF00);

ret |= ((ipFile.readByte() << 16) & 0xFF0000);

ret |= ((ipFile.readByte() << 24) & 0xFF000000L); // long mask avoids sign extension of the high byte

return ret;

} catch (IOException e) {

return -1;

}

}

/**

* Read 3 bytes at offset as a little-endian long. Java's built-in readers are big-endian, so the bytes are assembled manually

*

* @param offset

* @return The value read, or -1 if reading the file failed

*/

private long readLong3(long offset) {

long ret = 0;

try {

ipFile.seek(offset);

ipFile.readFully(b3);

ret |= (b3[0] & 0xFF);

ret |= ((b3[1] << 8) & 0xFF00);

ret |= ((b3[2] << 16) & 0xFF0000);

return ret;

} catch (IOException e) {

return -1;

}

}

/**

* Read 3 bytes from the current position and convert to long

*

* @return

*/

private long readLong3() {

long ret = 0;

try {

ipFile.readFully(b3);

ret |= (b3[0] & 0xFF);

ret |= ((b3[1] << 8) & 0xFF00);

ret |= ((b3[2] << 16) & 0xFF0000);

return ret;

} catch (IOException e) {

return -1;

}

}

/**

* Read the 4-byte IP address at offset into the ip array. The file stores it in little-endian order;

* it is converted here to big-endian (network) order

*

* @param offset

* @param ip

*/

private void readIP(long offset, byte[] ip) {

try {

ipFile.seek(offset);

ipFile.readFully(ip);

byte temp = ip[0];

ip[0] = ip[3];

ip[3] = temp;

temp = ip[1];

ip[1] = ip[2];

ip[2] = temp;

} catch (IOException e) {

System.out.println(e.getMessage());

}

}

/**
 * Same as readIP(long, byte[]), but reads from the memory-mapped buffer mbb:
 * the 4 little-endian bytes at offset are stored into ip in big-endian order.
 *
 * @param offset
 * @param ip
 */
private void readIP(int offset, byte[] ip) {
    mbb.position(offset);
    mbb.get(ip);
    // Reverse the byte order: swap 0<->3 and 1<->2
    byte temp = ip[0];
    ip[0] = ip[3];
    ip[3] = temp;
    temp = ip[1];
    ip[1] = ip[2];
    ip[2] = temp;
}
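Both readIP overloads reverse the four bytes they read, so the stored little-endian address ends up in the same big-endian order as the query array. The following hedged sketch shows the same swap on a plain array, alongside a ByteBuffer-based equivalent (the class IpOrder is hypothetical):

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

final class IpOrder {
    private IpOrder() {}

    /** Swaps bytes 0<->3 and 1<->2 in place, as readIP does. */
    static void reverse4(byte[] ip) {
        byte t = ip[0];
        ip[0] = ip[3];
        ip[3] = t;
        t = ip[1];
        ip[1] = ip[2];
        ip[2] = t;
    }

    /** Alternative: read the stored int little-endian, then write it back big-endian. */
    static byte[] toBigEndian(byte[] stored) {
        int value = ByteBuffer.wrap(stored).order(ByteOrder.LITTLE_ENDIAN).getInt();
        return ByteBuffer.allocate(4).order(ByteOrder.BIG_ENDIAN).putInt(value).array();
    }
}
```

The in-place swap avoids allocating, which matters when it runs at every step of the binary search; the ByteBuffer version is easier to read when performance is not a concern.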

/**
 * Compares ip with beginIp byte by byte as unsigned numbers. Both arrays
 * must be in big-endian order.
 *
 * @param ip
 *            IP to query
 * @param beginIp
 *            IP to compare against
 * @return 0 if equal, 1 if ip is greater than beginIp, -1 if it is smaller
 */
private int compareIP(byte[] ip, byte[] beginIp) {
    for (int i = 0; i < 4; i++) {
        int r = compareByte(ip[i], beginIp[i]);
        if (r != 0)
            return r;
    }
    return 0;
}

/**
 * Compares two bytes as unsigned numbers.
 *
 * @param b1
 * @param b2
 * @return 1 if b1 is greater than b2, 0 if they are equal, -1 if b1 is smaller
 */
private int compareByte(byte b1, byte b2) {
    if ((b1 & 0xFF) > (b2 & 0xFF)) // Unsigned "greater than"
        return 1;
    else if (b1 == b2) // Equal bit patterns
        return 0;
    else
        return -1;
}
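Java's `byte` is signed, so octets 128 to 255 read as negative numbers and a plain `<` would order 200.x.x.x before 100.x.x.x; compareByte avoids this by masking with `0xFF`. Here is a minimal equivalent using `Byte.toUnsignedInt` (Java 8+); the class name UnsignedCompare is hypothetical. On Java 9 and later, `java.util.Arrays.compareUnsigned(byte[], byte[])` offers a similar lexicographic unsigned comparison, though it only guarantees the sign of its result.

```java
final class UnsignedCompare {
    private UnsignedCompare() {}

    /** Compares two bytes as unsigned values 0..255; returns -1, 0 or 1 like compareByte. */
    static int compareByte(byte b1, byte b2) {
        return Integer.signum(Byte.toUnsignedInt(b1) - Byte.toUnsignedInt(b2));
    }

    /** Unsigned, most-significant-byte-first comparison of two IPv4 arrays, like compareIP. */
    static int compareIp(byte[] ip, byte[] other) {
        for (int i = 0; i < 4; i++) {
            int r = compareByte(ip[i], other[i]);
            if (r != 0) {
                return r;
            }
        }
        return 0;
    }
}
```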

/**
 * Locates the country/region record for ip with a binary search over the
 * index and returns its absolute offset.
 *
 * @param ip
 *            IP to query
 * @return the absolute offset of the matching record (which begins with the
 *         range's end IP), or -1 if the IP is not covered
 */
private long locateIP(byte[] ip) {
    long m = 0;
    int r;
    // Compare with the first index entry
    readIP(ipBegin, b4);
    r = compareIP(ip, b4);
    if (r == 0) {
        // Exact match on the first start IP: follow its 3-byte pointer, as the
        // exact-match case inside the loop does
        return readLong3(ipBegin + 4);
    } else if (r < 0) {
        return -1;
    }
    // Start binary search
    for (long i = ipBegin, j = ipEnd; i < j;) {
        m = getMiddleOffset(i, j);
        readIP(m, b4);
        r = compareIP(ip, b4);
        // log.debug(Utils.getIpStringFromBytes(b));
        if (r > 0) {
            i = m;
        } else if (r < 0) {
            if (m == j) {
                j -= IP_RECORD_LENGTH;
                m = j;
            } else {
                j = m;
            }
        } else {
            return readLong3(m + 4);
        }
    }
    // When the loop ends, i and j are equal. This entry is the most likely
    // candidate but not necessarily a match, so compare ip with the range's
    // end IP; if it fits, return the absolute offset of the record
    m = readLong3(m + 4);
    readIP(m, b4);
    r = compareIP(ip, b4);
    if (r <= 0) {
        return m;
    } else {
        return -1;
    }
}
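locateIP performs a binary search for the last index entry whose start IP is not greater than the query, then checks the query against that range's end IP. The sketch below applies the same idea to in-memory arrays of unsigned start and end IPs instead of file offsets; the arrays and the class RangeSearch are assumptions for illustration, not the project's data structures.

```java
final class RangeSearch {
    private RangeSearch() {}

    /**
     * Returns the index of the range containing ip, or -1.
     * starts must be sorted ascending; ends[k] is the inclusive end of range k.
     */
    static int find(long[] starts, long[] ends, long ip) {
        if (starts.length == 0 || ip < starts[0]) {
            return -1; // below the first range, like the early return in locateIP
        }
        int lo = 0;
        int hi = starts.length - 1;
        // Invariant: starts[lo] <= ip; search for the last start that is <= ip
        while (lo < hi) {
            int mid = (lo + hi + 1) >>> 1; // round up so lo always advances
            if (starts[mid] <= ip) {
                lo = mid;
            } else {
                hi = mid - 1;
            }
        }
        // Candidate found; like locateIP, confirm it against the range's end IP
        return ip <= ends[lo] ? lo : -1;
    }
}
```

Rounding the midpoint up guarantees progress when `starts[mid] <= ip`; getMiddleOffset gets the same guarantee by always advancing at least one record.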

/**
 * Returns the offset of the index record roughly halfway between the begin
 * and end offsets, aligned to a record boundary and always at least one
 * record after begin.
 *
 * @param begin
 * @param end
 * @return the offset of the middle record
 */
private long getMiddleOffset(long begin, long end) {
    long records = (end - begin) / IP_RECORD_LENGTH;
    records >>= 1;
    if (records == 0)
        records = 1;
    return begin + records * IP_RECORD_LENGTH;
}
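Here is a worked example of getMiddleOffset's arithmetic, with the record length passed in rather than read from IP_RECORD_LENGTH. The value 7 used in the checks is an assumption based on the 4-byte IP plus 3-byte offset layout, and the class MiddleOffset is hypothetical.

```java
final class MiddleOffset {
    private MiddleOffset() {}

    /** Same arithmetic as getMiddleOffset, with the record length as a parameter. */
    static long middle(long begin, long end, int recordLength) {
        long records = (end - begin) / recordLength; // whole records between the offsets
        records >>= 1;                                // take half of them
        if (records == 0) {
            records = 1;                              // always advance at least one record
        }
        return begin + records * recordLength;        // stays on a record boundary
    }
}
```

For example, `middle(1000, 1070, 7)` spans 10 records, halves that to 5 and returns 1035. When begin and end are adjacent records (`middle(1000, 1007, 7)`), the result equals end, which is why locateIP has a separate `m == j` branch that steps j back by one record.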

/**
 * Given the offset of a country/region record, fills in and returns an
 * IPLocation structure. Note that the shared member loc is reused.
 *
 * @param offset
 * @return loc, or null if reading the file failed
 */
private IPLocation getIPLocation(long offset) {
    try {
        // Skip the 4-byte end IP
        ipFile.seek(offset + 4);
        // Read the first byte to check whether it is a redirect flag
        byte b = ipFile.readByte();
        if (b == AREA_FOLLOWED) {
            // Country and region are both redirected: read the country offset
            long countryOffset = readLong3();
            // Jump to that offset
            ipFile.seek(countryOffset);
            // Check the flag byte again, because the target may itself be a redirect
            b = ipFile.readByte();
            if (b == NO_AREA) {
                loc.country = readString(readLong3());
                ipFile.seek(countryOffset + 4);
            } else {
                loc.country = readString(countryOffset);
            }
            // Read the region, which follows the country data
            loc.area = readArea(ipFile.getFilePointer());
        } else if (b == NO_AREA) {
            // Only the country is redirected; the region starts at offset + 8
            loc.country = readString(readLong3());
            loc.area = readArea(offset + 8);
        } else {
            // No redirect: the flag byte is the first byte of the country string
            loc.country = readString(ipFile.getFilePointer() - 1);
            loc.area = readArea(ipFile.getFilePointer());
        }
        return loc;
    } catch (IOException e) {
        return null;
    }
}
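getIPLocation follows the redirect scheme of the IP database. After the 4-byte end IP, a flag byte of AREA_FOLLOWED redirects both country and region, NO_AREA redirects only the country (the region then starts at offset + 8), and any other byte is the first character of the country string. The sketch below re-implements that decision tree over a byte array holding the whole file. It assumes AREA_FOLLOWED = 0x01 and NO_AREA = 0x02 (readArea checks those two literals); the class RecordParser is hypothetical, and the charset is a parameter (the original decodes GBK).

```java
import java.nio.charset.Charset;

final class RecordParser {
    static final byte AREA_FOLLOWED = 0x01; // assumed flag values
    static final byte NO_AREA = 0x02;

    private final byte[] data;
    private final Charset cs;

    RecordParser(byte[] data, Charset cs) {
        this.data = data;
        this.cs = cs;
    }

    /** 3-byte little-endian offset. */
    private int int3(int off) {
        return (data[off] & 0xFF) | ((data[off + 1] & 0xFF) << 8) | ((data[off + 2] & 0xFF) << 16);
    }

    /** Index of the 0 terminator of the string starting at off. */
    private int end(int off) {
        int i = off;
        while (data[i] != 0) {
            i++;
        }
        return i;
    }

    private String str(int off) {
        return new String(data, off, end(off) - off, cs);
    }

    /** Region at off, following a redirect; a redirect offset of 0 means unknown. */
    private String area(int off) {
        byte b = data[off];
        if (b == AREA_FOLLOWED || b == NO_AREA) {
            int p = int3(off + 1);
            return p == 0 ? "Unknown region" : str(p);
        }
        return str(off);
    }

    /** Returns {country, region} for the record starting (with its end IP) at offset. */
    String[] parse(int offset) {
        int pos = offset + 4; // skip the 4-byte end IP
        byte flag = data[pos];
        if (flag == AREA_FOLLOWED) {
            int countryOffset = int3(pos + 1);
            if (data[countryOffset] == NO_AREA) {
                // Second-level redirect for the country; region follows its 3-byte pointer
                return new String[] {str(int3(countryOffset + 1)), area(countryOffset + 4)};
            }
            // Country string in place; region starts right after its terminator
            return new String[] {str(countryOffset), area(end(countryOffset) + 1)};
        } else if (flag == NO_AREA) {
            return new String[] {str(int3(pos + 1)), area(offset + 8)};
        }
        return new String[] {str(pos), area(end(pos) + 1)};
    }
}
```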

/**
 * Same as getIPLocation(long), but reads from the memory-mapped buffer mbb.
 * Fills in and returns the shared member loc.
 *
 * @param offset
 * @return loc
 */
private IPLocation getIPLocation(int offset) {
    // Skip the 4-byte end IP
    mbb.position(offset + 4);
    // Read the first byte to check whether it is a redirect flag
    byte b = mbb.get();
    if (b == AREA_FOLLOWED) {
        // Country and region are both redirected: read the country offset
        int countryOffset = readInt3();
        // Jump to that offset
        mbb.position(countryOffset);
        // Check the flag byte again, because the target may itself be a redirect
        b = mbb.get();
        if (b == NO_AREA) {
            loc.country = readString(readInt3());
            mbb.position(countryOffset + 4);
        } else {
            loc.country = readString(countryOffset);
        }
        // Read the region, which follows the country data
        loc.area = readArea(mbb.position());
    } else if (b == NO_AREA) {
        // Only the country is redirected; the region starts at offset + 8
        loc.country = readString(readInt3());
        loc.area = readArea(offset + 8);
    } else {
        // No redirect: the flag byte is the first byte of the country string
        loc.country = readString(mbb.position() - 1);
        loc.area = readArea(mbb.position());
    }
    return loc;
}

/**
 * Starting from the offset, parse the following bytes and read out a region name
 *
 * @param offset
 * @return Region name string
 * @throws IOException
 */
private String readArea(long offset) throws IOException {
    ipFile.seek(offset);
    byte b = ipFile.readByte();
    if (b == 0x01 || b == 0x02) {
        long areaOffset = readLong3(offset + 1);
        if (areaOffset == 0)
            return "Unknown region";
        else
            return readString(areaOffset);
    } else
        return readString(offset);
}

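/**
 * Same as readArea(long), but reads from the memory-mapped buffer mbb.
 *
 * @param offset
 * @return region name string
 */
private String readArea(int offset) {
    mbb.position(offset);
    byte b = mbb.get();
    if (b == 0x01 || b == 0x02) {
        // Redirect: the next 3 bytes hold the region's offset (0 means unknown)
        int areaOffset = readInt3();
        if (areaOffset == 0)
            return "Unknown region";
        else
            return readString(areaOffset);
    } else
        return readString(offset);
}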

/**
 * Reads a 0-terminated string starting at offset and decodes it as GBK.
 *
 * @param offset
 * @return the string read, or an empty string if it is empty or an error occurred
 */
private String readString(long offset) {
    try {
        ipFile.seek(offset);
        // Copy bytes into buf up to the 0 terminator; buf must be large enough
        // for the longest string in the file
        int i = 0;
        buf[i] = ipFile.readByte();
        while (buf[i] != 0) {
            buf[++i] = ipFile.readByte();
        }
        if (i != 0)
            return IPSeekerUtils.getString(buf, 0, i, "GBK");
    } catch (IOException e) {
        System.out.println(e.getMessage());
    }
    return "";
}
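readString copies bytes into the fixed buffer buf until it reaches the 0 terminator, so a string longer than buf would throw ArrayIndexOutOfBoundsException. The sketch below shows a variant that grows as needed. It is written against `java.io.DataInput`, which `RandomAccessFile` implements, so it could be called right after `ipFile.seek(offset)`; the class and method names are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.IOException;
import java.nio.charset.Charset;

final class CStrings {
    private CStrings() {}

    /** Reads bytes up to (and consuming) a 0 terminator, then decodes them with cs. */
    static String readZeroTerminated(DataInput in, Charset cs) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream(); // grows as needed
        byte b;
        while ((b = in.readByte()) != 0) {
            out.write(b);
        }
        return new String(out.toByteArray(), cs);
    }
}
```

For the IP database the charset would be `Charset.forName("GBK")`, matching the encoding passed to IPSeekerUtils.getString. If the terminator is missing, `readByte` throws EOFException at the end of the input instead of running past a buffer.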

/**