Preface

What is EFAK

EFAK (Eagle For Apache Kafka, formerly known as Kafka Eagle) is open source visualization and management software for Apache Kafka. It lets you query, visualize, and monitor a Kafka cluster, turning the cluster's data into graphical views.

Why EFAK

- Apache Kafka does not officially provide a monitoring system or monitoring pages.
- The existing open source Kafka monitoring systems have too few features or are no longer maintained.
- The existing monitoring systems are difficult to configure and use.
- Some monitoring systems cannot integrate with existing IM tools such as WeChat and DingTalk.

Install

Download

You can download the EFAK source code from GitHub and compile and install it yourself, or download the binary tar.gz package.

| EFAK | Repository |
|---|---|
| GitHub | https://github.com/smartloli/EFAK |
| Download | http://download.kafka-eagle.org/ |

Note: using the officially compiled binary installation package is recommended.

"Install JDK"

If the Linux server already has a JDK environment, skip this step and go on to the next installation step. If it does not, download the JDK from the official Oracle website first.

To configure JAVA_HOME, extract the binary installation package into the target directory and set the environment variables:

cd /usr/java

tar -zxvf jdk-xxxx.tar.gz

mv jdk-xxxx jdk1.8

vi /etc/profile

export JAVA_HOME=/usr/java/jdk1.8

export PATH=$PATH:$JAVA_HOME/bin

Then run source /etc/profile to make the configuration take effect immediately.
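A quick way to confirm the setting took effect is to check that JAVA_HOME points at a directory containing an executable bin/java. The following is only a sketch of such a check; the function name check_java_home is made up for this example and is not part of the JDK or EFAK.

```shell
# Hypothetical helper (not part of the JDK or EFAK).
# Succeeds only if the given directory (default: $JAVA_HOME)
# contains an executable bin/java.
check_java_home() {
    local home="${1:-${JAVA_HOME:-}}"
    if [ -z "$home" ]; then
        echo "JAVA_HOME is not set" >&2
        return 1
    fi
    if [ ! -x "$home/bin/java" ]; then
        echo "no executable found at $home/bin/java" >&2
        return 1
    fi
    echo "JAVA_HOME looks valid: $home"
}

# Usage (after running: source /etc/profile):
#   check_java_home && java -version
```

If the check fails, the export lines in /etc/profile are the first place to look.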

"Extract EFAK"

Here we extract the package into the /data/soft/new directory:

tar -zxvf efak-xxx-bin.tar.gz

If a previous version is already installed, delete the old version and rename the current one, as shown below:

rm -rf efak

mv efak-xxx efak

Then configure the EFAK environment variables in /etc/profile:

vi /etc/profile

export KE_HOME=/data/soft/new/efak

export PATH=$PATH:$KE_HOME/bin

Finally, run source /etc/profile to make the configuration take effect immediately.
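If you script this step instead of editing the file by hand in vi, it helps to append each export line only once so that repeated runs do not pile up duplicates. The sketch below shows one way to do that; add_export_once is a hypothetical helper name, and writing to /etc/profile normally requires root.

```shell
# Hypothetical helper: append a line to a file only if that exact
# line is not already present (safe to run repeatedly).
add_export_once() {
    local file="$1" line="$2"
    # -x matches the whole line, -F treats it as a fixed string.
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (as root), with the paths used in this article:
#   add_export_once /etc/profile 'export KE_HOME=/data/soft/new/efak'
#   add_export_once /etc/profile 'export PATH=$PATH:$KE_HOME/bin'
```

The single quotes in the usage lines keep $PATH and $KE_HOME unexpanded, so they are resolved when the profile is sourced rather than when the line is written.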

"Configure EFAK system file"

Configure EFAK according to the actual setup of your Kafka cluster: for example, the ZooKeeper addresses, the version type of the Kafka cluster (zk for older versions, kafka for newer versions), and whether security authentication is enabled.

cd ${KE_HOME}/conf

vi system-config.properties

# Multi zookeeper&kafka cluster list -- The client connection address of the Zookeeper cluster is set here

efak.zk.cluster.alias=cluster1,cluster2

cluster1.zk.list=tdn1:2181,tdn2:2181,tdn3:2181

cluster2.zk.list=xdn1:2181,xdn2:2181,xdn3:2181

# Add zookeeper acl

cluster1.zk.acl.enable=false

cluster1.zk.acl.schema=digest

cluster1.zk.acl.username=test

cluster1.zk.acl.password=test123

# Kafka broker nodes online list

cluster1.efak.broker.size=10

cluster2.efak.broker.size=20

# Zkcli limit -- Zookeeper cluster allows the number of clients to connect to

# If you enable distributed mode, you can set value to 4 or 8

kafka.zk.limit.size=16

# EFAK webui port -- WebConsole port access address

efak.webui.port=8048

######################################

# EFAK enable distributed

######################################

efak.distributed.enable=false

# Set status to master on the master worknode and to slave on the other nodes

efak.cluster.mode.status=slave

# deploy efak server address

efak.worknode.master.host=localhost

efak.worknode.port=8085

# Kafka offset storage -- Offset stored in a Kafka cluster, if stored in the zookeeper, you can not use this option

cluster1.efak.offset.storage=kafka

cluster2.efak.offset.storage=kafka

# Whether the Kafka performance monitoring diagram is enabled

efak.metrics.charts=false

# EFAK keeps data for 30 days by default

efak.metrics.retain=30

# If an "offset out of range" error occurs, enable this property -- Only applies to kafka sql

efak.sql.fix.error=false

efak.sql.topic.records.max=5000

# Delete kafka topic token -- Token required to delete a topic, so that only administrators have the right to delete

efak.topic.token=keadmin

# Kafka sasl authenticate

cluster1.efak.sasl.enable=false

cluster1.efak.sasl.protocol=SASL_PLAINTEXT

cluster1.efak.sasl.mechanism=SCRAM-SHA-256

cluster1.efak.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="admin" password="admin-secret";

# If not set, the value can be empty

cluster1.efak.sasl.client.id=

# Add kafka cluster cgroups

cluster1.efak.sasl.cgroup.enable=false

cluster1.efak.sasl.cgroup.topics=kafka_ads01,kafka_ads02

cluster2.efak.sasl.enable=true

cluster2.efak.sasl.protocol=SASL_PLAINTEXT

cluster2.efak.sasl.mechanism=PLAIN

cluster2.efak.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="admin-secret";

cluster2.efak.sasl.client.id=

cluster2.efak.sasl.cgroup.enable=false

cluster2.efak.sasl.cgroup.topics=kafka_ads03,kafka_ads04

# Default use sqlite to store data

efak.driver=org.sqlite.JDBC

# Note: the '/hadoop/kafka-eagle/db' path must already exist.

efak.url=jdbc:sqlite:/hadoop/kafka-eagle/db/ke.db

efak.username=root

efak.password=smartloli

# (Optional) set mysql address

#efak.driver=com.mysql.jdbc.Driver

#efak.url=jdbc:mysql://127.0.0.1:3306/ke?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull

#efak.username=root

#efak.password=smartloli
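With several clusters configured, an easy mistake is to list an alias in efak.zk.cluster.alias and forget its zk.list entry. The sketch below is a hypothetical pre-start check, not something shipped with EFAK; the function names get_prop and check_zk_lists are made up for this example, and it assumes plain key=value lines with no spaces around the equals sign, as in the sample above.

```shell
# Hypothetical helper: print the value of a key from a .properties file.
get_prop() {
    local file="$1" key="$2"
    # Match lines that start with "key=" and print everything after it.
    awk -v k="$key" 'index($0, k "=") == 1 { print substr($0, length(k) + 2); exit }' "$file"
}

# Hypothetical check: every alias in efak.zk.cluster.alias must have
# a non-empty <alias>.zk.list entry. Returns non-zero otherwise.
check_zk_lists() {
    local file="$1" name aliases status=0
    aliases=$(get_prop "$file" efak.zk.cluster.alias)
    # Turn the comma-separated alias list into space-separated words.
    for name in $(printf '%s' "$aliases" | tr ',' ' '); do
        if [ -z "$(get_prop "$file" "$name.zk.list")" ]; then
            echo "missing $name.zk.list" >&2
            status=1
        fi
    done
    return "$status"
}

# Usage:
#   check_zk_lists "${KE_HOME}/conf/system-config.properties"
```

The check only looks at key names; it does not try to connect to ZooKeeper or validate the host:port values.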

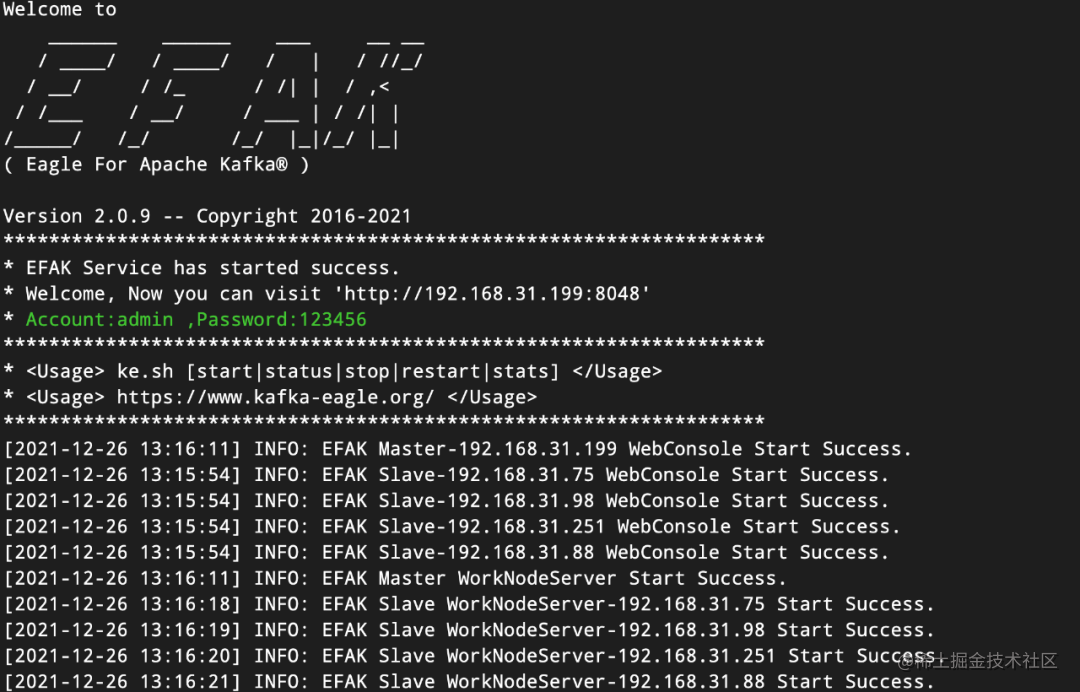

"Start EFAK server (independent)" in $Ke_ In the home / bin directory, there is a Ke SH script file. Execute the startup command as follows:

cd ${KE_HOME}/bin

chmod +x ke.sh

ke.sh start

Then, to restart or stop the EFAK server, run the following commands:

ke.sh restart

ke.sh stop
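Once the server is running, the web console listens on the port set by efak.webui.port in system-config.properties (8048 in the sample configuration). As a small sketch, the hypothetical helper below reads that port and prints the address to open in a browser; the function name efak_webui_url and the use of plain http are assumptions for illustration.

```shell
# Hypothetical helper: build the web console address from the
# efak.webui.port setting. Assumes the console is served over http.
efak_webui_url() {
    local conf="$1" host="${2:-localhost}" port
    # Take the first efak.webui.port=... line and strip the key.
    port=$(sed -n 's/^efak\.webui\.port=//p' "$conf" | head -n 1)
    if [ -z "$port" ]; then
        echo "efak.webui.port not found in $conf" >&2
        return 1
    fi
    echo "http://$host:$port"
}

# Usage:
#   efak_webui_url "${KE_HOME}/conf/system-config.properties"
```

Pass a host name as the second argument when the browser runs on a different machine from the EFAK server.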

As shown in the figure below:

"Start EFAK server (distributed)"

In the $KE_HOME/bin directory there is a ke.sh script file. Run the start command as follows:

cd ${KE_HOME}/bin

# sync efak package to other worknode node

# if $KE_HOME is /data/soft/new/efak

for i in `cat $KE_HOME/conf/works`;do scp -r $KE_HOME $i:/data/soft/new;done

# sync efak server .bash_profile environment

for i in `cat $KE_HOME/conf/works`;do scp -r ~/.bash_profile $i:~/;done

chmod +x ke.sh

ke.sh cluster start

Then, to restart or stop the EFAK servers, run the following commands:

ke.sh cluster restart

ke.sh cluster stop
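The sync loops above copy to every worknode immediately, so it can be worth previewing what they will do first. The sketch below prints the scp commands instead of running them; print_sync_commands is a hypothetical name, and it assumes conf/works lists one host per line.

```shell
# Hypothetical dry run: print one scp command per host in the works
# file instead of executing it. Assumes one host name per line.
print_sync_commands() {
    local works="$1" ke_home="$2" host target
    # The package is copied into the parent directory of $KE_HOME.
    target=$(dirname "$ke_home")
    # The "|| [ -n ... ]" part keeps a last line without a trailing newline.
    while IFS= read -r host || [ -n "$host" ]; do
        # Skip blank lines.
        [ -n "$host" ] || continue
        echo "scp -r $ke_home $host:$target"
    done < "$works"
}

# Usage:
#   print_sync_commands "$KE_HOME/conf/works" "$KE_HOME"
```

Reading the file line by line also avoids the word splitting that the backtick loop relies on, which makes a stray blank line harmless.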

As shown in the figure below:

Usage

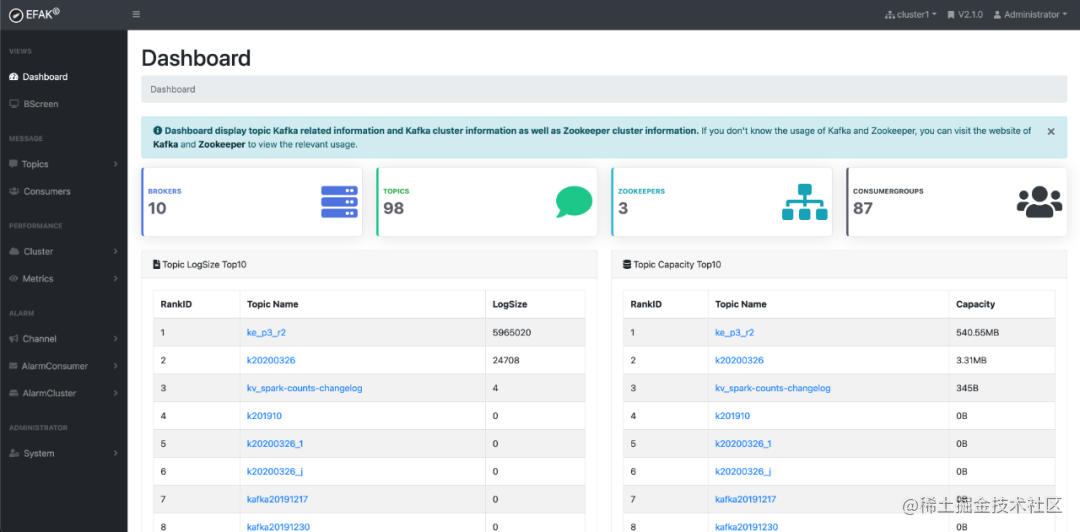

Dashboard

View information about Kafka brokers, topics, consumers, ZooKeeper nodes, and so on.

Create topics

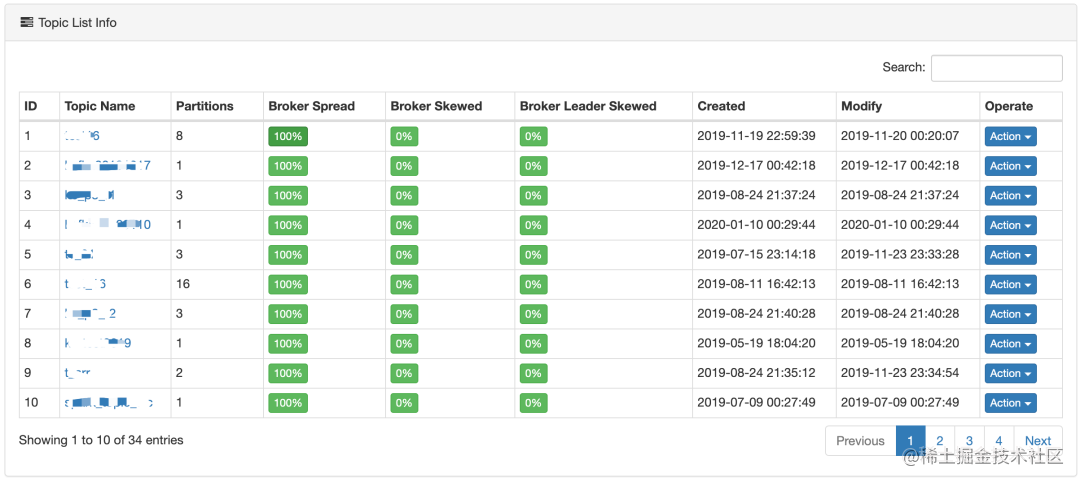

List topics

This module lists all topics in the Kafka cluster, including the number of partitions and the creation time, and lets you modify a topic, as shown in the following figure:

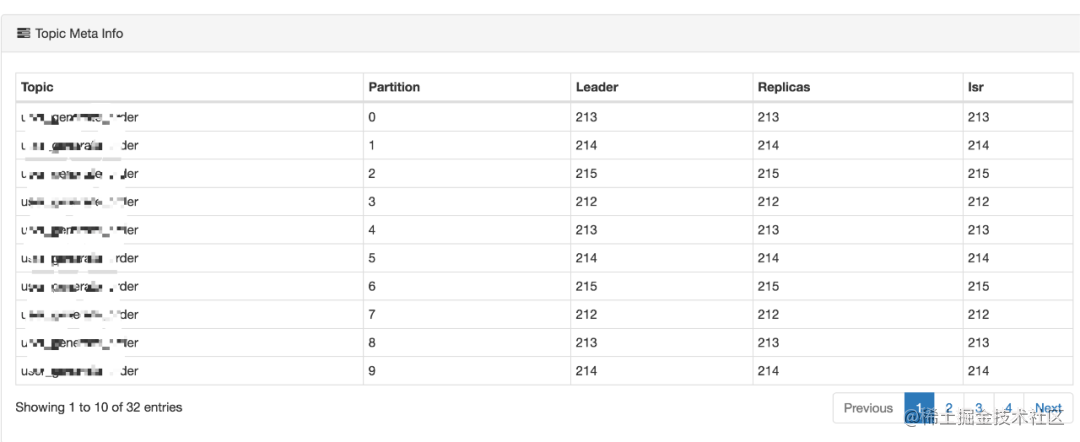

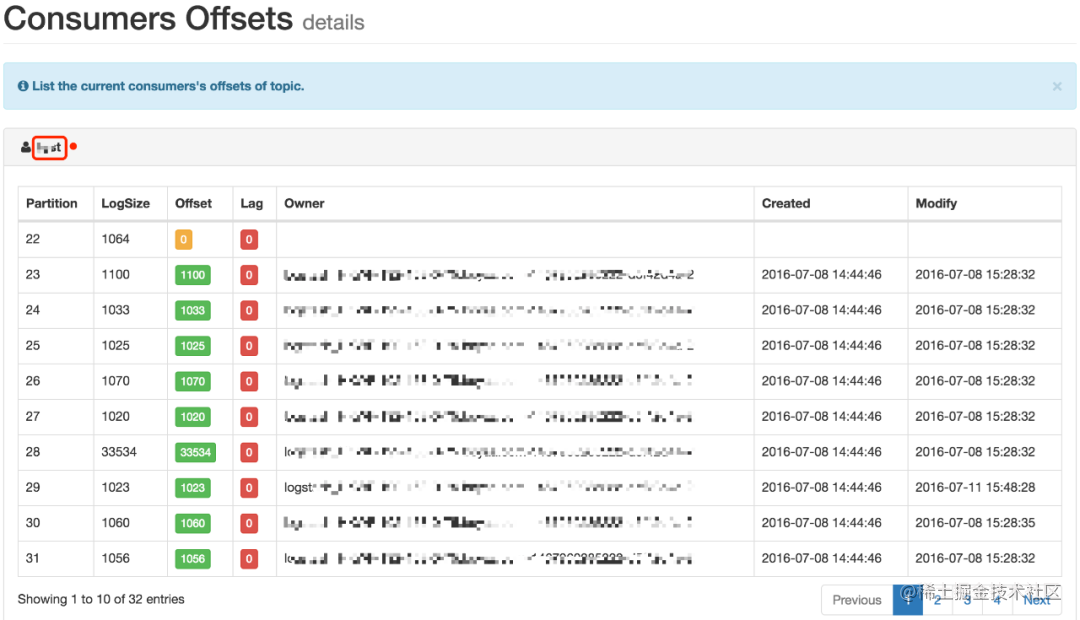

Topic details

Each topic name is a hyperlink. Click it to view the details of the topic, as shown in the following figure:

Consumption

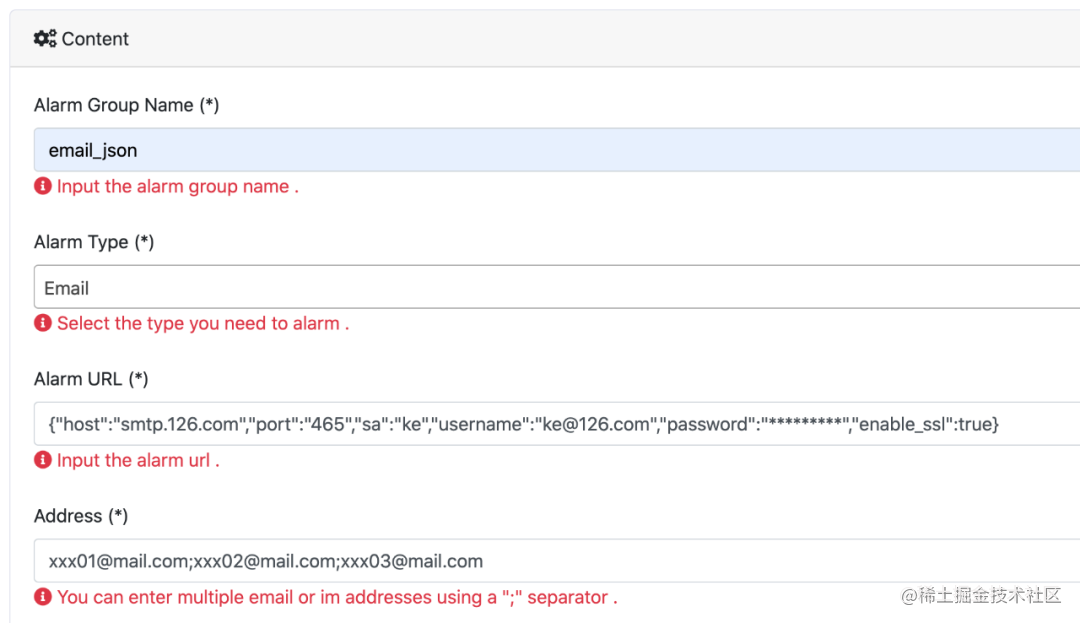

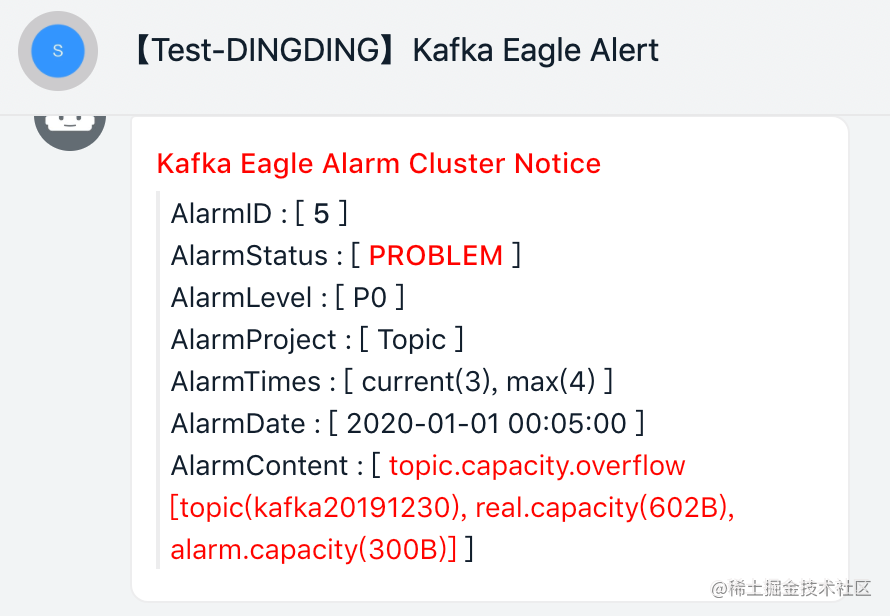

Data alerts

Configure the user name and password of the mail server, and you can then see the corresponding alert data.

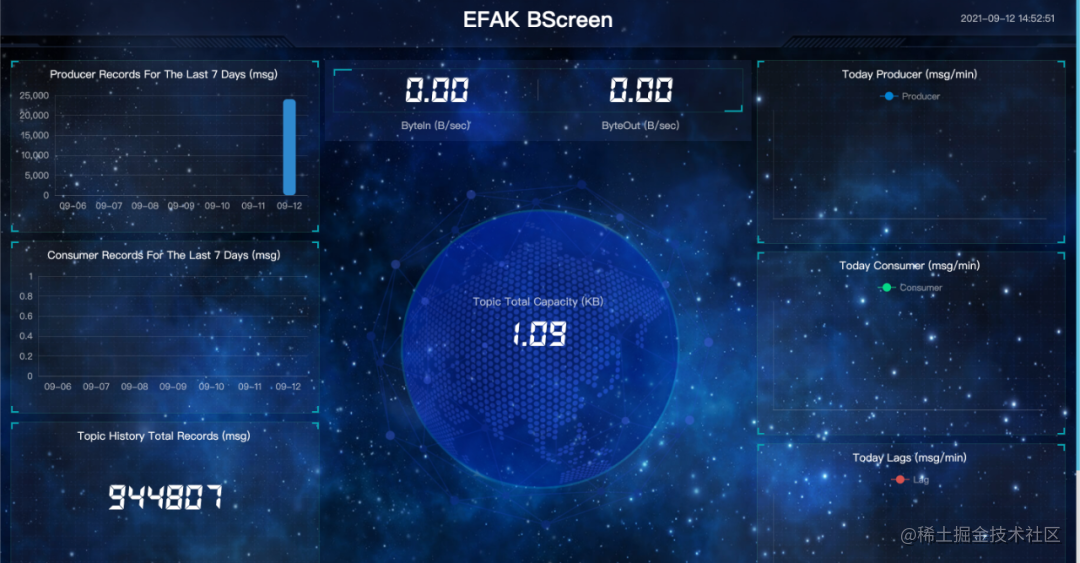

And finally, the big screen display

That covers the installation and basic use of EFAK. It has many more features that are useful in real projects, and it is a recommended choice as visual management software for Kafka.
