1, Foreword

1-1. What is ElasticJob

We have used scheduled tasks in Spring Boot projects before: first enable scheduling with @EnableScheduling, then annotate a method with @Scheduled(cron = "*/1 * * * * ?"). This is easy to use and works without problems.

But consider this scenario: what if a single service instance can no longer meet our needs? The obvious answer is a cluster, that is, deploying multiple copies.

Normally we only need to put a load balancer in front of the deployed instances, since every instance provides the same service. Scheduled tasks are different, though, because every instance runs its own timer. For example, the fun method on service A is halfway through when the fun method on service B starts running. This will certainly cause problems.

ElasticJob solves this problem and supports horizontal scaling out and in. It also comes with other features, such as a good-looking, easy-to-use operations platform and exception notifications (email, WeCom (Enterprise WeChat), DingTalk).

1-2. Others

ElasticJob depends on ZooKeeper (the service instances must coordinate with each other to achieve the features above). You need to install it yourself; if you only want to test, a Windows installation is enough.

ElasticJob provides two products, ElasticJob-Lite and ElasticJob-Cloud. Below we only cover ElasticJob-Lite.

2, Use

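If a modified yml configuration does not take effect (the job configuration is stored in ZooKeeper, and by default the local configuration does not overwrite what is already registered there), there are two ways to fix it:

- Change the ZooKeeper namespace

- Add overwrite: true under the job in the configuration file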

2-1. Jobs

A job is simply a scheduled task; every job is one scheduled task.

There are several job types: simple jobs, dataflow jobs, script jobs and HTTP jobs (available since 3.0.0-beta).

2-1-1. Simple job

A simple job only needs to implement the SimpleJob interface and override the execute method. It is also the most commonly used job type.

    import org.apache.shardingsphere.elasticjob.api.ShardingContext;
    import org.apache.shardingsphere.elasticjob.simple.job.SimpleJob;
    import org.springframework.stereotype.Component;

    @Component
    public class MyElasticJob implements SimpleJob {

        @Override
        public void execute(ShardingContext context) {
            // Print the total shard count and the shard item handled by this call
            System.out.println(context.getShardingTotalCount() + " " + context.getShardingItem());
        }
    }

2-1-2. Dataflow job

MyDataflowJob

    import com.xdx97.elasticjob.bean.XdxBean;
    import org.apache.shardingsphere.elasticjob.api.ShardingContext;
    import org.apache.shardingsphere.elasticjob.dataflow.job.DataflowJob;
    import org.springframework.stereotype.Component;

    import java.util.ArrayList;
    import java.util.List;

    @Component
    public class MyDataflowJob implements DataflowJob<XdxBean> {

        @Override
        public List<XdxBean> fetchData(ShardingContext shardingContext) {
            List<XdxBean> foos = new ArrayList<>();
            double random = Math.random();
            System.out.println("fetchData------ " + random);
            // Return data only about half of the time
            if (random > 0.5) {
                XdxBean foo = new XdxBean();
                foo.setName("Xiaodaoxian");
                foos.add(foo);
            }
            return foos;
        }

        @Override
        public void processData(ShardingContext shardingContext, List<XdxBean> list) {
            System.out.println("coming processData------");
        }
    }

XdxBean

    public class XdxBean {

        private String name;

        public String getName() {
            return name;
        }

        public void setName(String name) {
            this.name = name;
        }
    }

Output:

    fetchData------ 0.13745888666984807
    fetchData------ 0.2922741337641118
    fetchData------ 0.7834818165147507
    coming processData------
    fetchData------ 0.8177868853353837
    coming processData------
    fetchData------ 0.14076346085285385

Note: Math.random() returns a value in the range [0, 1).

From the output above we can conclude that a dataflow job is still a scheduled task, except that whenever fetchData() returns data, processData() is then called with that data.
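The fetch-then-process behaviour seen in the output can be mimicked without ElasticJob. Here is a minimal sketch in plain Java. The MiniDataflowJob interface and the runOnce helper are hypothetical stand-ins invented for this illustration, not ElasticJob classes, and the sketch only covers the one-shot behaviour observed above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a dataflow job; this is NOT the ElasticJob interface.
interface MiniDataflowJob<T> {
    List<T> fetchData();
    void processData(List<T> data);
}

final class DataflowSketch {

    // One scheduled trigger: fetch first, then process only if something was fetched.
    // Returns true when processData was called.
    static <T> boolean runOnce(MiniDataflowJob<T> job) {
        List<T> data = job.fetchData();
        if (data == null || data.isEmpty()) {
            return false;
        }
        job.processData(data);
        return true;
    }

    public static void main(String[] args) {
        List<String> processed = new ArrayList<>();
        MiniDataflowJob<String> job = new MiniDataflowJob<String>() {
            @Override
            public List<String> fetchData() {
                // Same idea as in the article: data is available about half of the time
                List<String> foos = new ArrayList<>();
                if (Math.random() > 0.5) {
                    foos.add("Xiaodaoxian");
                }
                return foos;
            }

            @Override
            public void processData(List<String> data) {
                processed.addAll(data);
            }
        };
        for (int i = 0; i < 5; i++) {
            System.out.println("processData called: " + runOnce(job));
        }
        System.out.println("items processed: " + processed.size());
    }
}
```

Because the fetch is random, the number of true lines differs from run to run, just like the output above.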

2-1-3. Script job

I don't find it very useful, and for lack of time I haven't looked into it.

2-1-4. HTTP job (available since 3.0.0-beta)

I don't find it very useful, and for lack of time I haven't looked into it.

2-2. Job scheduling (based on SpringBoot)

ElasticJob can be used in three ways: Java based, Spring Boot based and configuration file based. Covering all three would be tedious and the principle is the same, so I only cover Spring Boot here.

2-2-1. Add the pom dependencies

    <!-- https://mvnrepository.com/artifact/org.springframework.boot/spring-boot-starter -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter</artifactId>
        <version>2.2.0.RELEASE</version>
    </dependency>

    <!-- https://mvnrepository.com/artifact/org.springframework.boot/spring-boot-starter-web -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
        <version>2.2.0.RELEASE</version>
    </dependency>

    <dependency>
        <groupId>org.apache.shardingsphere.elasticjob</groupId>
        <artifactId>elasticjob-lite-spring-boot-starter</artifactId>
        <version>3.0.0-RC1</version>
    </dependency>

There is a pitfall here. The official documentation only says that Spring Boot integration is supported; it does not provide a complete pom file, which had me stuck for a long time.

This starter pulls in database-related dependencies. Since we are only doing a simple test, if you don't want to configure a data source, just change the annotation on the startup class:

    @SpringBootApplication(exclude = { DataSourceAutoConfiguration.class })

2-2-2. Configuration file

    server:
      port: 8085

    elasticjob:
      regCenter:
        # ZooKeeper ip:port
        serverLists: 127.0.0.1:2181
        # Namespace; choose any name you like
        namespace: my-job4
      jobs:
        # Job name; choose any name you like
        myElasticJob:
          # Fully qualified class name of the job
          elasticJobClass: com.elastic.job.MyElasticJob
          # Cron expression for the job schedule
          cron: 0/5 * * * * ?
          # Number of shards
          shardingTotalCount: 10

2-2-3. Number of shards

Sharding is the core of horizontal scaling. For example, 10 shards are defined above (the shard items are numbered 0-9). Suppose our scheduled task runs once a minute and the job method is execute.

With only one server, execute is called ten times every minute, each time with a different shard item (0-9).

With two servers, A and B each call execute five times every minute, each time with a different shard item (A gets 0-4, B gets 5-9).

By the same logic, with three servers the ten shards are split 3, 3 and 4 among A, B and C, so scaling horizontally is easy.
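The splits described above can be reproduced with a few lines of plain Java. This is a simplified sketch of an even allocation, not ElasticJob's own code; the class ShardAllocationSketch and the method allocate are hypothetical names. It gives each server a contiguous block first and then hands any leftover shard items to the servers in order, so which server ends up with the extra item is a detail of this sketch.

```java
import java.util.ArrayList;
import java.util.List;

final class ShardAllocationSketch {

    // Splits shard items 0..total-1 across the given number of servers as evenly as possible.
    // Each server first gets a contiguous block of total / servers items;
    // leftover items are then handed out one by one, starting from the first server.
    static List<List<Integer>> allocate(int total, int servers) {
        if (servers <= 0) {
            throw new IllegalArgumentException("servers must be positive");
        }
        List<List<Integer>> result = new ArrayList<>();
        int base = total / servers;
        for (int s = 0; s < servers; s++) {
            List<Integer> items = new ArrayList<>();
            for (int i = s * base; i < (s + 1) * base; i++) {
                items.add(i);
            }
            result.add(items);
        }
        // There are fewer leftover items than servers, so s never runs out of range
        int next = base * servers;
        for (int s = 0; next < total; s++, next++) {
            result.get(s).add(next);
        }
        return result;
    }

    public static void main(String[] args) {
        for (int servers = 1; servers <= 3; servers++) {
            System.out.println(servers + " server(s): " + allocate(10, servers));
        }
    }
}
```

With 10 shards, two servers get items 0-4 and 5-9, and three servers get four, three and three items.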

Given this sharding behaviour, our job also needs to be modified so that each call handles only its own shard item:

    public void execute(ShardingContext context) {
        System.out.println(context.getShardingTotalCount() + " " + context.getShardingItem());
        switch (context.getShardingItem()) {
            case 0:
                // do something by sharding item 0
                break;
            case 1:
                // do something by sharding item 1
                break;
            case 2:
                // do something by sharding item 2
                break;
            // case n: ...
        }
    }
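Instead of writing one case per shard, a common pattern is to let the shard item select a slice of the data, for example by taking record ids modulo the shard count. The following is a minimal sketch of that idea in plain Java; ShardFilterSketch and idsForShard are hypothetical names, and in a real job the two int arguments would come from context.getShardingItem() and context.getShardingTotalCount().

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class ShardFilterSketch {

    // Returns the ids this shard is responsible for: those with id % totalCount == shardItem.
    static List<Long> idsForShard(List<Long> ids, int shardItem, int totalCount) {
        List<Long> mine = new ArrayList<>();
        for (long id : ids) {
            // floorMod keeps the result non-negative even for negative ids
            if (Math.floorMod(id, (long) totalCount) == shardItem) {
                mine.add(id);
            }
        }
        return mine;
    }

    public static void main(String[] args) {
        List<Long> ids = Arrays.asList(1L, 2L, 3L, 4L, 5L, 6L, 7L, 8L, 9L, 10L);
        int totalCount = 3;
        for (int item = 0; item < totalCount; item++) {
            System.out.println("shard " + item + " handles " + idsForShard(ids, item, totalCount));
        }
    }
}
```

Every id lands in exactly one shard, so the work is neither duplicated nor skipped regardless of how the shard items are spread over the servers.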

If you make five copies of the test code below and start them one after another (waiting a little between each), you will see the shards assigned to each server change. The same happens when you shut the services down one by one.

2-2-4. Manual trigger

For example, we may need to run a scheduled task right now, but its schedule interval is several hours or more.

The official documentation describes how to do this, but I tried for a long time without resolving the error, so I implemented it with the Java based approach instead.

The implementation is as follows:

    import org.apache.shardingsphere.elasticjob.api.JobConfiguration;
    import org.apache.shardingsphere.elasticjob.lite.api.bootstrap.impl.OneOffJobBootstrap;
    import org.apache.shardingsphere.elasticjob.reg.base.CoordinatorRegistryCenter;
    import org.apache.shardingsphere.elasticjob.reg.zookeeper.ZookeeperConfiguration;
    import org.apache.shardingsphere.elasticjob.reg.zookeeper.ZookeeperRegistryCenter;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class OneOffJobController {

        @GetMapping("/execute")
        public String executeOneOffJob() {
            OneOffJobBootstrap jobBootstrap = new OneOffJobBootstrap(createRegistryCenter(), new MyElasticJob(), createJobConfiguration());
            // One-off scheduling can be triggered multiple times
            jobBootstrap.execute();
            return "success";
        }

        private static CoordinatorRegistryCenter createRegistryCenter() {
            // Same ZooKeeper address and namespace as in the yml configuration
            CoordinatorRegistryCenter regCenter = new ZookeeperRegistryCenter(new ZookeeperConfiguration("127.0.0.1:2181", "my-job4"));
            regCenter.init();
            return regCenter;
        }

        private static JobConfiguration createJobConfiguration() {
            // Job name and shard count; the cron is left empty for a one-off job
            return JobConfiguration.newBuilder("myElasticJob", 10).cron("").build();
        }
    }

In fact, the code above can be generalised, with the parameters passed in from outside. As for the job object, we can pass the class name as a string and create the object through reflection.

    Class<?> classType = Class.forName("com.elastic.job.MyElasticJob");
    ElasticJob obj = (ElasticJob) classType.getDeclaredConstructor().newInstance();
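To make the reflection idea concrete without depending on ElasticJob, here is a small self-contained sketch. The Job interface, the HelloJob class and the newJob helper are hypothetical names used only for illustration; the sketch also checks that the loaded class really implements the expected interface before casting, which the two lines above do not do.

```java
final class ReflectionSketch {

    // Hypothetical stand-in for the job interface.
    interface Job {
        String run();
    }

    // A job class with a no-argument constructor, as reflection-based creation requires here.
    static final class HelloJob implements Job {
        @Override
        public String run() {
            return "hello";
        }
    }

    // Loads the class by name, verifies that it implements Job, and instantiates it.
    static Job newJob(String className) throws ReflectiveOperationException {
        Class<?> type = Class.forName(className);
        if (!Job.class.isAssignableFrom(type)) {
            throw new IllegalArgumentException(className + " does not implement Job");
        }
        return (Job) type.getDeclaredConstructor().newInstance();
    }

    public static void main(String[] args) throws Exception {
        Job job = newJob(HelloJob.class.getName());
        System.out.println(job.run());
    }
}
```

The explicit interface check turns a wrong class name into a clear error message instead of a ClassCastException.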

Once the operations platform is set up (see section 3), you will find that none of this is necessary: just click a button on the platform, and you don't need to provide an endpoint at all.

2-3. Configure error handling strategy

What should we do when an exception occurs during the execution of a scheduled task? The following six strategies are officially provided. I will demonstrate the email notification strategy here; if you are interested in or need the others, you can study them yourself.

| Error handling strategy | Description | Built in | Default | Additional configuration required |
|---|---|---|---|---|
| Log strategy | Log the job exception without interrupting job execution | yes | yes | |
| Throw strategy | Throw a system exception and interrupt job execution | yes | | |
| Ignore strategy | Ignore the system exception without interrupting job execution | yes | | |
| Email notification strategy | Send an email notification without interrupting job execution | yes | | |
| WeCom (Enterprise WeChat) notification strategy | Send a WeCom notification without interrupting job execution | yes | | |
| DingTalk notification strategy | Send a DingTalk notification without interrupting job execution | yes | | |
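The difference between the first three strategies is easiest to see in code. The sketch below is a plain Java illustration under stated assumptions: the ErrorHandler interface and the execute helper are hypothetical stand-ins, not ElasticJob's API, and the notification strategies are left out because they behave like the log strategy with a different destination.

```java
import java.util.ArrayList;
import java.util.List;

final class ErrorStrategySketch {

    // Hypothetical stand-in for an error handling strategy.
    interface ErrorHandler {
        void handle(String jobName, Throwable cause);
    }

    // "Log" strategy: record the failure and carry on.
    static ErrorHandler logTo(List<String> sink) {
        return (jobName, cause) -> sink.add(jobName + " failed: " + cause.getMessage());
    }

    // "Throw" strategy: rethrow, so the caller sees the failure and execution is interrupted.
    static ErrorHandler rethrow() {
        return (jobName, cause) -> {
            throw new IllegalStateException(jobName + " failed", cause);
        };
    }

    // "Ignore" strategy: swallow the failure silently.
    static ErrorHandler ignore() {
        return (jobName, cause) -> { };
    }

    // Runs the job body once and hands any runtime exception to the chosen strategy.
    static void execute(String jobName, Runnable body, ErrorHandler handler) {
        try {
            body.run();
        } catch (RuntimeException e) {
            handler.handle(jobName, e);
        }
    }

    public static void main(String[] args) {
        Runnable failing = () -> {
            throw new RuntimeException("boom");
        };
        List<String> log = new ArrayList<>();
        execute("demoJob", failing, logTo(log));
        System.out.println("logged entries: " + log);
        execute("demoJob", failing, ignore());
        try {
            execute("demoJob", failing, rethrow());
        } catch (IllegalStateException e) {
            System.out.println("interrupted: " + e.getMessage());
        }
    }
}
```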

2-3-1. Add the pom dependency

    <dependency>
        <groupId>org.apache.shardingsphere.elasticjob</groupId>
        <artifactId>elasticjob-error-handler-email</artifactId>
        <version>3.0.0-RC1</version>
    </dependency>

2-3-2. Modify configuration file

    server:
      port: 8085

    elasticjob:
      regCenter:
        # ZooKeeper ip:port
        serverLists: 127.0.0.1:2181
        # Namespace; choose any name you like
        namespace: my-job5
      jobs:
        # Job name; choose any name you like
        oneSimpleJob:
          # Fully qualified class name of the job
          elasticJobClass: com.xdx97.elasticjob.job.OneSimpleJob
          # Cron expression for the job schedule
          cron: 0/30 * * * * ?
          # Number of shards
          shardingTotalCount: 1
          jobErrorHandlerType: EMAIL
          overwrite: true
          props:
            email:
              # Mail server address
              host: smtp.126.com
              # Mail server port
              port: 465
              # Mail server user name
              username: xxxxxxx
              # Mail server password
              password: xxxxx
              # Enable SSL encrypted transport
              useSsl: true
              # Mail subject
              subject: ElasticJob error message
              # Sender email address
              from: xxxxx@126.com
              # Recipient email address
              to: xxx@qq.com
              # CC email address
              cc: xxxxxx
              # BCC email address
              bcc: xxxxx
              # Enable debugging mode
              debug: false

2-3-3. Use

We only need to throw an exception in the above scheduled task and we will receive the email.

2-3-4. Others

There is a pitfall here. From the official documentation one would think that props sits at the same level as jobs, but it does not: props belongs under each individual job.

If you want several scheduled tasks with different notification recipients, configure a separate props block for each one, since every props belongs to its own job.

2-4. Job listener

ElasticJob-Lite provides job listeners that run before and after task execution. Listeners come in two kinds: regular listeners, executed on every job node, and distributed listeners, executed on only a single node in a distributed scenario. This section details how they are used.
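As a rough picture of what "before and after task execution" means for a regular listener, here is a plain Java sketch. The JobListener interface and the runWithListeners helper are hypothetical stand-ins written for this illustration, not ElasticJob's listener API, and the distributed (single-node) variant is not modelled.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class ListenerSketch {

    // Hypothetical listener with before/after hooks.
    interface JobListener {
        void beforeJobExecuted(String jobName);
        void afterJobExecuted(String jobName);
    }

    // Calls every "before" hook, runs the job body, then calls every "after" hook.
    static void runWithListeners(String jobName, Runnable body, List<JobListener> listeners) {
        for (JobListener listener : listeners) {
            listener.beforeJobExecuted(jobName);
        }
        body.run();
        for (JobListener listener : listeners) {
            listener.afterJobExecuted(jobName);
        }
    }

    public static void main(String[] args) {
        List<String> events = new ArrayList<>();
        JobListener recorder = new JobListener() {
            @Override
            public void beforeJobExecuted(String jobName) {
                events.add("before " + jobName);
            }

            @Override
            public void afterJobExecuted(String jobName) {
                events.add("after " + jobName);
            }
        };
        runWithListeners("demoJob", () -> events.add("run"), Collections.singletonList(recorder));
        System.out.println(events);
    }
}
```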

Once the job dependency (DAG) feature is developed, the job listener feature may be removed.

The job listener documentation does not cover the Spring Boot approach. I tried for a long time on my own without success, and the issue I opened has not been answered yet, so I will leave it at that for now.

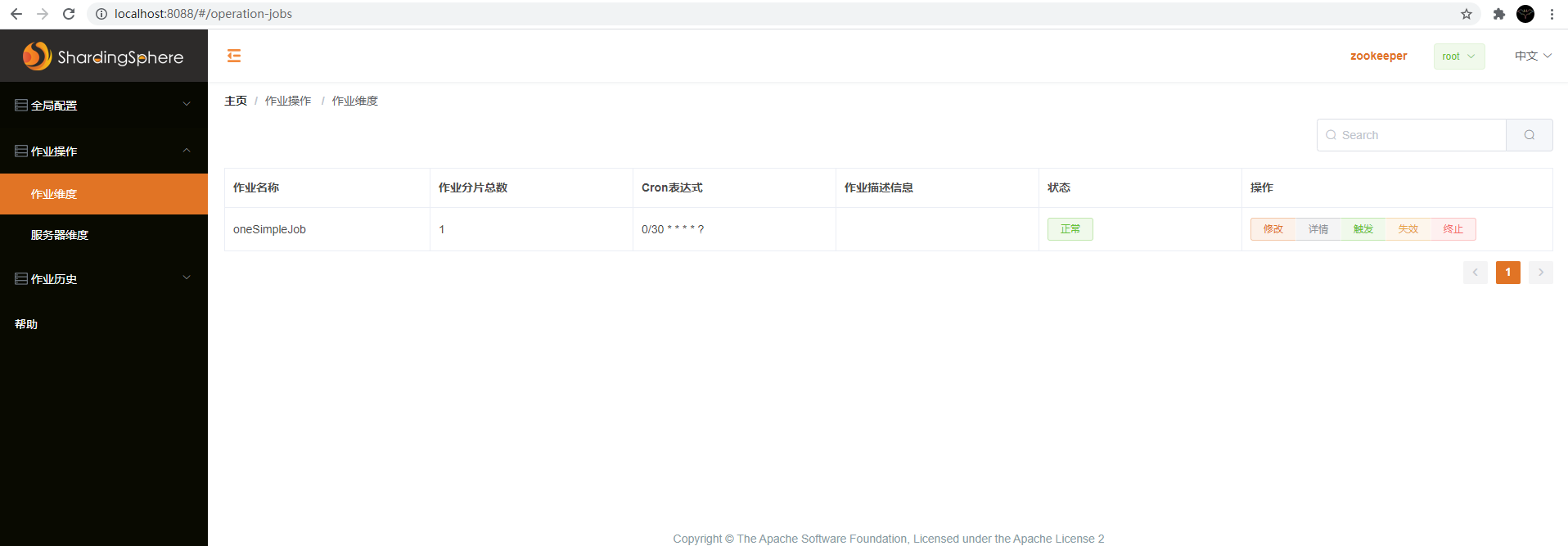

3, Operations platform configuration

If the distributed sharded scheduled tasks above impressed me, this operations platform really made my eyes light up.

3-1. Installation and deployment

The UI is a standalone application and has nothing to do with our own services, so we don't need to modify any of the earlier code. We just download it and start it.

3-1-1. Download

3-1-2. Startup

If you extract the archive and start it directly on Windows, an error is reported (the startup class cannot be found). This is caused by the extraction. (I have also put the archive in the source code below.)

My workaround was to upload the archive to Linux, extract it there and copy the result back.

    tar zxvf apache-shardingsphere-elasticjob-3.0.0-RC1-lite-ui-bin.tar

3-1-3. Use

Open http://localhost:8088/ in a browser; username / password: root/root

Then go to Global configuration > Registry configuration and add your ZooKeeper address.

The remaining operations are simple button clicks, so I won't go through them.

4, Video address

https://www.bilibili.com/video/BV19L411p7qR

5, Source code acquisition

Follow the WeChat official account below and reply with the keyword: elasticJobLiteDemo