· For the table of contents of this series, see: [English Text Classification in Practice] 1 - Project Overview

· Project resources (download): English text classification based on neural networks, zip (PyTorch)

[1] Why clean text

Text cleaning in a text classification task involves two concepts: the dictionary (vocabulary) and word vectors.

First, since the task is English text classification, we do not need word segmentation as in Chinese; we can simply split the text into words on spaces.

Suppose the training set train.csv contains 100,000 texts. Splitting them on spaces yields 20,000 distinct words. We number these words from 0 to 19,999; this word-to-number mapping is called the "dictionary".

[Note]: Such a dictionary is not ideal. From a machine learning perspective, some words in it appear only once or twice; they contribute little to classification but increase the computational cost of the algorithm (the idea behind TF-IDF is worth reading about here). Likewise, in a neural network model each word must be represented as an n-dimensional word vector, so we may keep only the 10,000 most frequent words in the "dictionary".
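The frequency cut-off described in the note can be sketched with `collections.Counter`. This is a minimal illustration, not code from the project: the helpers `build_index` and `encode`, the `max_words` parameter and the reserved `<pad>`/`<unk>` ids are assumptions made for the example.

```python
from collections import Counter

def build_index(texts, max_words=10000):
    """Hypothetical helper: keep the max_words most frequent words and number them."""
    counts = Counter(word for text in texts for word in text.split())
    # Reserve id 0 for padding and id 1 for out-of-dictionary words (an assumed convention)
    word2id = {'<pad>': 0, '<unk>': 1}
    for word, _ in counts.most_common(max_words):
        word2id[word] = len(word2id)
    return word2id

def encode(text, word2id):
    # Words that did not make the cut map to the <unk> id
    return [word2id.get(word, word2id['<unk>']) for word in text.split()]

texts = ["apple stock rises", "apple launches phone", "stock market falls"]
word2id = build_index(texts, max_words=2)
print(word2id)                            # {'<pad>': 0, '<unk>': 1, 'apple': 2, 'stock': 3}
print(encode("apple market", word2id))    # [2, 1]
```

`Counter.most_common` breaks ties in first-seen order, so with equal counts the earlier word gets the smaller id.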

However, splitting on spaces alone is not enough, because the text contains special characters, punctuation, inconsistent capitalization of initial letters, and so on. We therefore need to clean the data.
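A short illustration with two made-up titles: when the text is split on spaces only, the same word ends up as several different dictionary entries.

```python
titles = ["Coronavirus: cases rise", "New coronavirus cases, explained"]

# Split on whitespace only, with no cleaning
toy_vocab = sorted({word for title in titles for word in title.split()})
print(toy_vocab)
# ['Coronavirus:', 'New', 'cases', 'cases,', 'coronavirus', 'explained', 'rise']
```

"coronavirus" and "cases" each appear twice, once with attached punctuation or a capital letter.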

So how do we judge the quality of the cleaned dictionary?

Since we will use pre-trained word vectors, we can use the share of our dictionary that is covered by the pre-trained word vectors as a reference.

[Note]: Using pre-trained word vectors is a common practice and is worth understanding well.

Summary: special characters, punctuation marks, inconsistent capitalization of initial letters and similar problems in the text interfere with the extracted dictionary, so the text data must be cleaned. We use the coverage of the extracted dictionary by the pre-trained word vectors' vocabulary as the standard for judging the extracted dictionary.
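For reference, the two coverage figures reported later in this article can be written as follows. The notation is introduced here for illustration: $V$ is the extracted dictionary, $c(w)$ the number of occurrences of word $w$ in the dataset, and $E$ the set of words that have a pre-trained vector.

$$
\text{vocab coverage} = \frac{|V \cap E|}{|V|},
\qquad
\text{text coverage} = \frac{\sum_{w \in V \cap E} c(w)}{\sum_{w \in V} c(w)}
$$

For example, with $V = \{\text{covid}: 3,\ \text{market}: 5,\ \text{the}: 12\}$ and only "market" and "the" in $E$, vocab coverage is $2/3 \approx 66.67\%$ while text coverage is $(5 + 12)/20 = 85\%$: missing a rare word costs little of the text.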

[2] Extract the dataset dictionary

The vocab dictionary maps each word to the number of times it occurs. The code is as follows:

```python
import pandas as pd
from tqdm import tqdm

# Create an English dictionary: {word: number of occurrences}
def build_vocab(sentences, verbose=True):
    vocab = {}
    for sentence in tqdm(sentences, disable=(not verbose)):
        for word in sentence:
            try:
                vocab[word] += 1
            except KeyError:
                vocab[word] = 1
    return vocab

# Register tqdm with pandas so that progress_apply shows a progress bar
tqdm.pandas()

# Load the dataset (semicolon-separated)
df = pd.read_csv("../@_data set/TLND/data/labelled_newscatcher_dataset.csv", encoding='utf-8', sep=';')

# Split each title on whitespace and build the dictionary
sentences = df['title'].progress_apply(lambda x: x.split()).values
vocab = build_vocab(sentences)
```

Let's look at a few entries of the resulting vocab dictionary:

{'Insulated': 3, 'Mergers': 9, 'Acquisitions': 9}
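`build_vocab` is a hand-written word counter. As a point of comparison, here is a sketch of the same count using `collections.Counter` from the standard library; the function name `build_vocab_counter` and the toy sentences are hypothetical.

```python
from collections import Counter

def build_vocab_counter(sentences):
    # Same result as build_vocab: {word: number of occurrences}
    counts = Counter()
    for sentence in sentences:
        counts.update(sentence)  # count every word of the tokenized sentence
    return dict(counts)

toy_sentences = [["Mergers", "and", "Acquisitions"], ["Mergers", "news"]]
print(build_vocab_counter(toy_sentences))
# {'Mergers': 2, 'and': 1, 'Acquisitions': 1, 'news': 1}
```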

[3] Load pre-trained embeddings

A word vector represents a word (or, in Chinese, a character) as a set of numbers, because computers can only perform numerical computation. The simplest method is one-hot encoding: with 10,000 words in total, each word vector has 10,000 dimensions, with a 1 in the dimension corresponding to the word and 0 everywhere else. This representation, however, is very high-dimensional and very sparse.
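A small NumPy sketch contrasting the two representations. The sizes follow the paragraph (10,000 words) and a 300-dimensional dense vector; the word id and the random matrix are placeholders for illustration.

```python
import numpy as np

vocab_size, dim = 10000, 300
word_id = 42  # hypothetical id of some word in the dictionary

# One-hot: a 10,000-dimensional vector with a single 1
one_hot = np.zeros(vocab_size, dtype=np.float32)
one_hot[word_id] = 1.0

# Dense: one row of a (vocab_size x dim) embedding matrix
# (random here; learned or pre-trained in practice)
rng = np.random.default_rng(0)
embedding = rng.normal(size=(vocab_size, dim)).astype(np.float32)
dense = embedding[word_id]

# Multiplying the one-hot vector by the matrix simply selects that row
assert np.allclose(one_hot @ embedding, dense)
print(one_hot.shape, int(one_hot.sum()), dense.shape)  # (10000,) 1 (300,)
```

This is why an embedding layer is implemented as a table lookup rather than a matrix product with one-hot vectors.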

Later, dense vectors with far fewer dimensions came into use; word vectors typically have 50, 100, 200 or 300 dimensions. Pre-training means training such word vectors in advance. For a particular task, one can instead feed in word ids and train the word vectors inside that task, but vectors obtained this way are specific to the task. Pre-trained word vectors, by contrast, are trained on a large corpus and generalize well, so they can be used directly in many different tasks.
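Using pre-trained vectors in a model usually means copying them into the rows of the embedding matrix, one row per dictionary id. A minimal sketch, assuming a word-to-id dictionary and the `{word: vector}` format produced by the loader in this article; `build_embedding_matrix` and the small random initialization for words without a pre-trained vector are illustrative choices, not the project's code.

```python
import numpy as np

def build_embedding_matrix(word2id, embeddings_index, dim, seed=0):
    """Hypothetical helper: row i holds the pre-trained vector of the word with id i."""
    rng = np.random.default_rng(seed)
    # Words without a pre-trained vector keep small random values (an assumed choice)
    matrix = rng.normal(scale=0.1, size=(len(word2id), dim)).astype(np.float32)
    hits = 0
    for word, idx in word2id.items():
        vector = embeddings_index.get(word)
        if vector is not None:
            matrix[idx] = vector
            hits += 1
    return matrix, hits

toy_word2id = {'apple': 0, 'tiktok': 1}
toy_embeddings = {'apple': np.array([0.1, 0.2, 0.3], dtype=np.float32)}  # made-up vector
matrix, hits = build_embedding_matrix(toy_word2id, toy_embeddings, dim=3)
print(matrix.shape, hits)  # (2, 3) 1
```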

Representative approaches range from word2vec to ELMo and BERT.

Some pre-trained word vectors can be downloaded from the following sites:

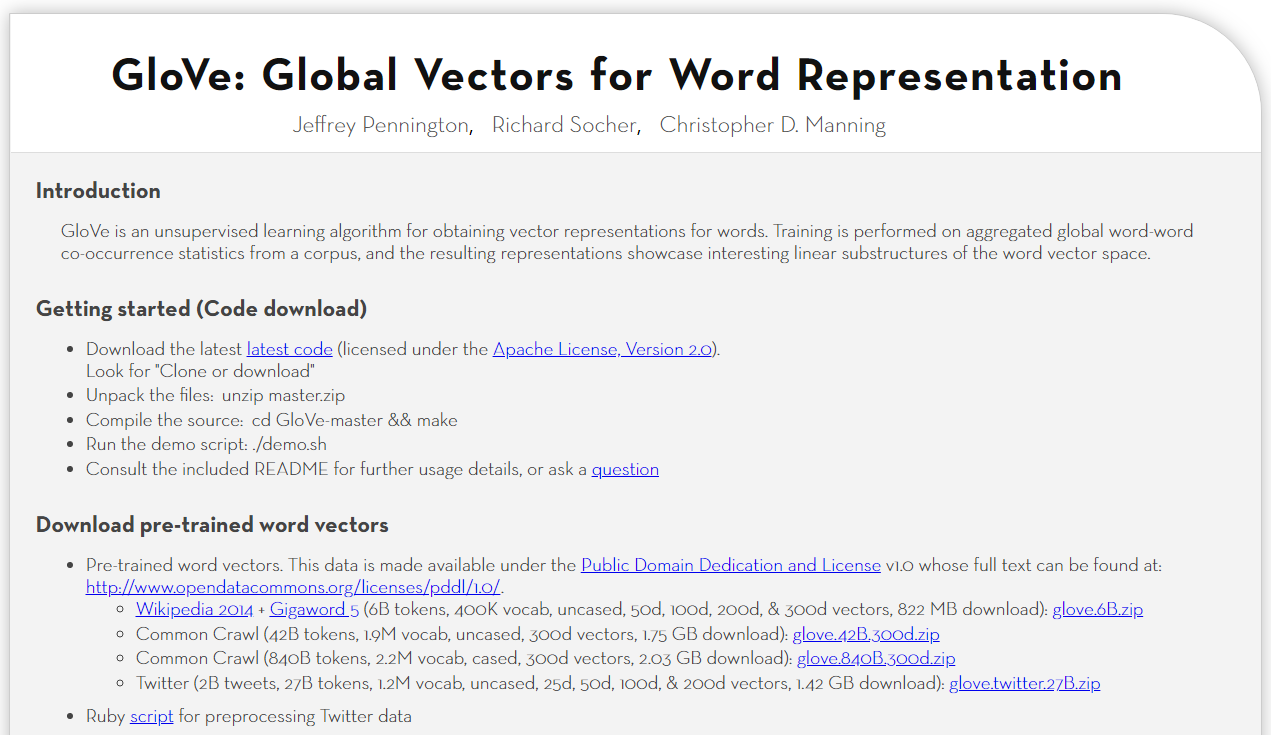

GloVe

website: https://nlp.stanford.edu/projects/glove/

GloVe serves the same purpose as Word2Vec but learns differently. Word2Vec is a "predictive" model: it predicts the context of a given word. GloVe instead builds a co-occurrence matrix (words × contexts) that records how often words appear in each other's context. Because this matrix is huge, it is factorized to obtain a low-dimensional representation. There are many more details, but this is the rough idea.
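The count-then-factorize idea can be sketched in NumPy on three made-up sentences. Note the simplification: this uses a truncated SVD of log(1 + counts), a classic count-based method; GloVe itself fits word vectors to the log co-occurrence counts with a weighted least-squares loss rather than an SVD. The helper name `cooccurrence_matrix` and the window size are assumptions.

```python
import numpy as np

def cooccurrence_matrix(sentences, window=2):
    """Count how often each pair of words appears within `window` positions of each other."""
    vocab = sorted({w for s in sentences for w in s})
    index = {w: i for i, w in enumerate(vocab)}
    counts = np.zeros((len(vocab), len(vocab)), dtype=np.float64)
    for s in sentences:
        for i, w in enumerate(s):
            for j in range(max(0, i - window), min(len(s), i + window + 1)):
                if j != i:
                    counts[index[w], index[s[j]]] += 1
    return vocab, counts

sentences = [["stock", "market", "rises"],
             ["stock", "market", "falls"],
             ["apple", "stock", "rises"]]
vocab, X = cooccurrence_matrix(sentences)

# Factorize the (symmetric) log-count matrix and keep 2 dimensions per word
U, S, _ = np.linalg.svd(np.log1p(X))
vectors = U[:, :2] * S[:2]
for word, vec in zip(vocab, vectors):
    print(word, vec.round(3))
```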

fastText

website: https://fasttext.cc/

fastText differs substantially from Word2Vec and GloVe: those two treat each word as the smallest training unit, while fastText uses character n-grams. For example, the word "apple" can be broken down into units such as "ap", "app" and "ple". The biggest advantage of fastText is that it can produce better embeddings for rare words, and even for words not seen during training, because the character n-gram vectors are shared with other words. Word2Vec and GloVe cannot do this. Note that this requires the full fastText model (a .bin file); a .vec file such as wiki-news-300d-1M.vec stores only whole-word vectors, so words missing from it still get no vector in the coverage checks in this article.
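fastText's subword units can be illustrated in a few lines. This sketch follows the scheme described for fastText (the word is wrapped in `<` and `>` boundary symbols, and n ranges from 3 to 6 by default); the helper `char_ngrams` is hypothetical, and fastText additionally keeps the whole word `<apple>` as its own unit and hashes n-grams into buckets, both omitted here.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Character n-grams of a word, with '<' and '>' marking its start and end."""
    token = f"<{word}>"
    return [token[i:i + n]
            for n in range(n_min, n_max + 1)
            for i in range(len(token) - n + 1)]

print(char_ngrams("apple", 3, 3))  # ['<ap', 'app', 'ppl', 'ple', 'le>']

# A rare or unseen word shares many n-grams with known words,
# so its vector can be assembled from n-gram vectors learned elsewhere
shared = set(char_ngrams("apples", 3, 3)) & set(char_ngrams("apple", 3, 3))
print(sorted(shared))  # ['<ap', 'app', 'ple', 'ppl']
```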

After downloading the pre-trained word vectors, load them as follows:

```python
import numpy as np

# Load pre-trained word vectors into a {word: vector} dict
def load_embed(file):
    def get_coefs(word, *arr):
        return word, np.asarray(arr, dtype='float32')

    if file.endswith('.vec'):
        # fastText .vec: skip the "count dim" header line (and any other short line)
        with open(file, encoding='utf-8') as f:
            embeddings_index = dict(get_coefs(*o.rstrip().split(" ")) for o in f if len(o) > 100)
    else:
        # GloVe .txt has no header. The glove.6B files are UTF-8; 'latin' is kept
        # from the original code, but it mis-decodes non-ASCII words
        with open(file, encoding='latin') as f:
            embeddings_index = dict(get_coefs(*o.rstrip().split(" ")) for o in f)
    return embeddings_index

# Load the word vectors
glove = '../@_Word vector/glove/glove.6B.50d.txt'
fasttext = '../@_Word vector/fasttext/wiki-news-300d-1M.vec'
embed_glove = load_embed(glove)
embed_fasttext = load_embed(fasttext)
```
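The `len(o) > 100` filter exists mainly to drop the first line of a fastText .vec file, which holds "number of words, dimension" rather than a vector. A more explicit alternative is to skip that header by position. A minimal sketch on two made-up in-memory files; `parse_vectors` is a hypothetical helper.

```python
import io
import numpy as np

def parse_vectors(lines, skip_header=False):
    """Parse 'word v1 v2 ...' lines into {word: float32 vector}."""
    embeddings = {}
    for k, line in enumerate(lines):
        if skip_header and k == 0:
            continue  # fastText .vec starts with "<number of words> <dimension>"
        parts = line.rstrip().split(" ")
        embeddings[parts[0]] = np.asarray(parts[1:], dtype=np.float32)
    return embeddings

# Toy files in the two formats (made-up values)
glove_txt = io.StringIO("the 0.1 0.2 0.3\napple 0.4 0.5 0.6\n")
fasttext_vec = io.StringIO("2 3\nthe 0.1 0.2 0.3\napple 0.4 0.5 0.6\n")

print(len(parse_vectors(glove_txt)), len(parse_vectors(fasttext_vec, skip_header=True)))  # 2 2
```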

[4] Check the coverage of vocab by the pre-trained embeddings

After building the dictionary and loading the pre-trained word vectors, we write code to check the coverage:

```python
import operator

# Check how much of the dictionary the pre-trained embeddings cover
def check_coverage(vocab, embeddings_index):
    known_words = {}        # words found in both vocab and the embeddings
    unknown_words = {}      # words not covered by the embeddings
    nb_known_words = 0      # total occurrences of known words in the text
    nb_unknown_words = 0    # total occurrences of unknown words in the text
    for word in tqdm(vocab):
        try:
            known_words[word] = embeddings_index[word]
            nb_known_words += vocab[word]
        except KeyError:
            unknown_words[word] = vocab[word]
            nb_unknown_words += vocab[word]

    # Share of distinct words covered
    print('Found embeddings for {:.2%} of vocab'.format(len(known_words) / len(vocab)))
    # Share of all word occurrences covered; differs from the figure above because words repeat in the text
    print('Found embeddings for {:.2%} of all text'.format(
        nb_known_words / (nb_known_words + nb_unknown_words)))

    # Unknown words sorted by frequency, most frequent first
    unknown_words = sorted(unknown_words.items(), key=operator.itemgetter(1))[::-1]
    print("unknown words : ", unknown_words[:30])
    return unknown_words

oov_glove = check_coverage(vocab, embed_glove)
oov_fasttext = check_coverage(vocab, embed_fasttext)
```
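The two percentages printed by `check_coverage` can also be computed without building the intermediate dictionaries. A compact sketch on toy data (`coverage` is a hypothetical helper and the counts are made up); it also shows why the two figures differ: the uncovered word is rare, so text coverage is higher than vocab coverage.

```python
def coverage(vocab, embeddings_index):
    """Return (vocab coverage, text coverage) as fractions between 0 and 1."""
    known = [w for w in vocab if w in embeddings_index]
    vocab_cov = len(known) / len(vocab)
    text_cov = sum(vocab[w] for w in known) / sum(vocab.values())
    return vocab_cov, text_cov

toy_vocab = {'covid': 3, 'market': 5, 'the': 12}
toy_embeddings = {'market': None, 'the': None}  # only membership matters here
print(coverage(toy_vocab, toy_embeddings))  # (0.6666666666666666, 0.85)
```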

Output:

```text
100%|██████████| 108774/108774 [00:00<00:00, 343998.85it/s]
100%|██████████| 108774/108774 [00:00<00:00, 313406.97it/s]
100%|██████████| 123225/123225 [00:00<00:00, 882523.98it/s]
Found embeddings for 18.88% of vocab
Found embeddings for 51.99% of all text
unknown words : [('COVID-19', 6245), ('The', 5522), ('New', 3488), ('Market', 2932), ('Covid-19', 2621), ('–', 2521), ('To', 2458), ('US', 2184), ('Coronavirus', 2122), ('How', 2041), ('A', 2029), ('In', 1904), ('Global', 1786), ('Is', 1720), ('With', 1583), ('Of', 1460), ('For', 1450), ('Trump', 1440), ('And', 1361), ('Man', 1340), ('August', 1310), ('Apple', 1148), ('UK', 1132), ('On', 1122), ('What', 1113), ('Coronavirus:', 1077), ('Google', 1054), ('League', 1044), ('Why', 1032), ('China', 987)]
100%|██████████| 123225/123225 [00:00<00:00, 786827.09it/s]
Found embeddings for 50.42% of vocab
Found embeddings for 87.50% of all text
unknown words : [('COVID-19', 6245), ('Covid-19', 2621), ('Coronavirus:', 1077), ('Covid', 824), ('2020:', 736), ('COVID', 670), ('TikTok', 525), ('cases,', 470), ("Here's", 414), ('LIVE:', 345), ('Size,', 318), ('Share,', 312), ('COVID-19:', 297), ('news:', 280), ('Trends,', 270), ('TheHill', 263), ('coronavirus:', 259), ('Report:', 256), ('Analysis,', 247), ('Fortnite', 245), ("won't", 242), ('Growth,', 234), ("It's", 234), ('Covid-19:', 229), ("it's", 224), ("Trump's", 223), ('English.news.cn', 203), ('COVID-19,', 196), ('Here’s', 196), ('updates:', 177)]
```

Analysis of the output above: for GloVe, capitalization is a serious problem, since very common words such as 'The', 'New' and 'Market' are reported as unknown. Let's first convert the dataset text to lowercase.

[5] Convert all words to lowercase

```python
# Lowercase all text before building the dictionary
print("=========After converting to lowercase")
sentences = df['title'].apply(lambda x: x.lower())
sentences = sentences.progress_apply(lambda x: x.split()).values
vocab_low = build_vocab(sentences)
oov_glove = check_coverage(vocab_low, embed_glove)
oov_fasttext = check_coverage(vocab_low, embed_fasttext)
```

Output:

```text
=========After converting to lowercase
100%|██████████| 108774/108774 [00:00<00:00, 354067.79it/s]
100%|██████████| 108774/108774 [00:00<00:00, 317075.17it/s]
100%|██████████| 102482/102482 [00:00<00:00, 1007395.77it/s]
Found embeddings for 42.97% of vocab
Found embeddings for 86.47% of all text
unknown words : [('covid-19', 8897), ('–', 2521), ('covid', 1543), ('coronavirus:', 1338), ('2020:', 736), ('—', 626), ('tiktok', 529), ('covid-19:', 526), ("here's", 525), ('cases,', 502), ('live:', 464), ("it's", 459), ('news:', 363), ('size,', 326), ('updates:', 325), ('share,', 316), ("won't", 310), ("don't", 297), ('report:', 295), ("'the", 291), ('review:', 287), ('trends,', 280), ('covid-19,', 269), ('thehill', 263), ('update:', 260), ('analysis,', 253), ('here’s', 252), ("'i", 249), ('fortnite', 245), ('growth,', 244)]
100%|██████████| 102482/102482 [00:00<00:00, 778784.32it/s]
Found embeddings for 37.95% of vocab
Found embeddings for 85.17% of all text
unknown words : [('covid-19', 8897), ('covid', 1543), ('coronavirus:', 1338), ('2020:', 736), ('tiktok', 529), ('covid-19:', 526), ("here's", 525), ('cases,', 502), ('live:', 464), ("it's", 459), ('meghan', 401), ('news:', 363), ('size,', 326), ('updates:', 325), ('share,', 316), ("won't", 310), ("don't", 297), ('huawei', 297), ('report:', 295), ('sushant', 292), ('review:', 287), ('trends,', 280), ('covid-19,', 269), ('thehill', 263), ('update:', 260), ('analysis,', 253), ('here’s', 252), ('fortnite', 245), ('growth,', 244), ('xiaomi', 237)]
```

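After lowercasing, GloVe's coverage improves greatly (from 51.99% to 86.47% of all text), because the glove.6B vocabulary is uncased. The fastText vocabulary is cased, so its coverage actually drops slightly (from 87.50% to 85.17%). Either way, coverage of about 85% is far from enough, so next we remove special characters.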

[6] Remove special characters

```python
# Remove special characters
def clean_special_chars(text, punct, mapping):
    # Map special characters to plain equivalents
    for p in mapping:
        text = text.replace(p, mapping[p])
    # Surround each punctuation mark with spaces so it becomes a separate token
    for p in punct:
        text = text.replace(p, f' {p} ')
    # Other special characters that have to be dealt with last
    specials = {'\u200b': ' ', '…': ' ... ', '\ufeff': '', 'करना': '', 'है': ''}
    for s in specials:
        text = text.replace(s, specials[s])
    return text

print("=========After removing special characters")
punct = "/-'?!.,#$%\'()*+-/:;<=>@[\\]^_`{|}~" + '""“”’' + '∞θ÷α•à−β∅³π‘₹´°£€×™√²—–&'
punct_mapping = {"‘": "'", "₹": "e", "´": "'", "°": "", "€": "e", "™": "tm", "√": " sqrt ", "×": "x", "²": "2", "—": "-", "–": "-", "’": "'", "_": "-", "`": "'", '“': '"', '”': '"', "£": "e", '∞': 'infinity', 'θ': 'theta', '÷': '/', 'α': 'alpha', '•': '.', 'à': 'a', '−': '-', 'β': 'beta', '∅': '', '³': '3', 'π': 'pi'}

# Clean first, then lowercase and split on whitespace
sentences = df['title'].apply(lambda x: clean_special_chars(x, punct, punct_mapping))
sentences = sentences.apply(lambda x: x.lower()).progress_apply(lambda x: x.split()).values
vocab_punct = build_vocab(sentences)
oov_glove = check_coverage(vocab_punct, embed_glove)
oov_fasttext = check_coverage(vocab_punct, embed_fasttext)
```
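The padding step can also be written as one regular-expression substitution instead of one `str.replace` call per character. A minimal sketch with a reduced, ASCII-only punctuation set (an assumption; the mapping of characters such as curly quotes or long dashes is left out). It also shows why `covid` becomes the most frequent unknown word after cleaning: `COVID-19` is split into `covid`, `-` and `19`.

```python
import re

# Hypothetical alternative: pad every punctuation character with spaces in one pass
PUNCT = "/-'?!.,#$%()*+:;<=>@[\\]^_`{|}~"
pattern = re.compile("([" + re.escape(PUNCT) + "])")

def pad_punct(text):
    return pattern.sub(r" \1 ", text)

tokens = pad_punct("COVID-19: cases rise, again!").lower().split()
print(tokens)
# ['covid', '-', '19', ':', 'cases', 'rise', ',', 'again', '!']
```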

Output:

```text
=========After removing special characters
100%|██████████| 108774/108774 [00:00<00:00, 359101.46it/s]
100%|██████████| 108774/108774 [00:00<00:00, 368426.97it/s]
100%|██████████| 55420/55420 [00:00<00:00, 1182299.35it/s]
Found embeddings for 80.79% of vocab
Found embeddings for 97.48% of all text
unknown words : [('covid', 11852), ('tiktok', 680), ('fortnite', 372), ('bbnaija', 288), ('thehill', 263), ('ps5', 260), ('oneplus', 212), ('jadon', 168), ('havertz', 144), ('wechat', 135), ('researchandmarkets', 132), ('redmi', 129), ('realme', 116), ('brexit', 108), ('xcloud', 94), ('valorant', 84), ('note20', 69), ('airpods', 68), ('nengi', 67), ('vaping', 64), ('fiancé', 62), ('selfie', 62), ('pokémon', 60), ('1000xm4', 59), ('sarri', 55), ('iqoo', 52), ('miui', 52), ('wassce', 51), ('beyoncé', 50), ('kiddwaya', 49)]
100%|██████████| 55420/55420 [00:00<00:00, 1066584.97it/s]
Found embeddings for 67.35% of vocab
Found embeddings for 95.61% of all text
unknown words : [('covid', 11852), ('tiktok', 680), ('meghan', 435), ('fortnite', 372), ('huawei', 364), ('sushant', 326), ('markle', 302), ('bbnaija', 288), ('xiaomi', 276), ('thehill', 263), ('ps5', 260), ('oneplus', 212), ('degeneres', 176), ('jadon', 168), ('buhari', 164), ('cagr', 162), ('solskjaer', 150), ('havertz', 144), ('perseid', 140), ('wechat', 135), ('researchandmarkets', 132), ('redmi', 129), ('fauci', 98), ('xcloud', 94), ('ardern', 93), ('valorant', 84), ('rtx', 83), ('akufo', 82), ('sadc', 82), ('kildare', 82)]
```

After removing special characters, GloVe covers 97.48% of all text (fastText: 95.61%), which is good enough. The remaining unknown words are mostly recent coinages and proper nouns, such as 'covid' and 'tiktok'; text cleaning cannot fix them.

[Note]: Because pre-trained word vectors are trained on a large corpus, once the embedding layer is initialized with them we can either keep its parameters fixed during backpropagation (freezing), or keep updating them during backpropagation (fine-tuning, which has become increasingly popular since BERT was proposed).
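Both options in the note map directly onto PyTorch, which this project uses, via `nn.Embedding.from_pretrained` and its `freeze` argument. A minimal sketch; the random 4×3 tensor is a stand-in for a matrix built from the loaded GloVe or fastText vectors.

```python
import torch
import torch.nn as nn

# Stand-in for a pre-trained matrix: 4 words, 3 dimensions
pretrained = torch.randn(4, 3)

# Frozen: the embedding weights are not updated during backpropagation
frozen = nn.Embedding.from_pretrained(pretrained, freeze=True)
# Fine-tuned: weights start from the pre-trained values and keep training.
# clone() so that training this layer does not modify the shared pre-trained tensor
tuned = nn.Embedding.from_pretrained(pretrained.clone(), freeze=False)

ids = torch.tensor([0, 2])
print(frozen.weight.requires_grad, tuned.weight.requires_grad)  # False True
print(torch.equal(frozen(ids), pretrained[ids]))                # True
```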

[7] On to the next hands-on part