TL;DR

In this article, we use Kubernetes' native network policy and Cilium's network policy to isolate Pods at the network level. The difference is that the former only supports L3/4 policies, while the latter supports both L3/4 and L7 policies.

Improving security through network policy costs far less to implement and maintain than application-level or service-mesh approaches, and has little impact on the system.

Cilium in particular, built on eBPF, works around the kernel's limited extensibility and provides secure, reliable, and observable network connectivity for workloads at the kernel level.

Background

Why is the Kubernetes network a security risk? Pods in a cluster are not isolated by default: their networks are interconnected and every Pod can communicate with every other Pod.

This causes problems. Suppose service B handles sensitive data and should only be accessible to a specific service A, while service C must not access it. There are several ways to keep service C away from service B:

- Provide a general whitelist mechanism in the SDK. Every request must carry an identifier of its source; the server then admits requests whose identifier is allowed by the rules and rejects all others.

- Use the cloud native approach: the RBAC and mTLS features of a service mesh. RBAC works on a similar principle to the application-layer SDK scheme, but is an abstracted, general solution at the infrastructure layer. mTLS is more complex: authentication happens during the connection handshake and involves issuing and verifying certificates.

Both schemes have advantages and disadvantages:

- The SDK scheme is simple to implement, but in large systems it is hard to upgrade and roll out, and supporting multiple languages is expensive.

- The service mesh is a general infrastructure-layer solution and naturally supports multiple languages. However, for users who have not yet adopted a mesh, it means a major architectural change at high cost. Adopting a mesh only to solve this security problem is poor value for money, not to mention how hard existing meshes are to put into production and how costly they are to use and maintain afterwards.

Let's keep looking for a solution further down in the infrastructure, starting from the network layer. The NetworkPolicy resource provided by Kubernetes makes network-level isolation possible.

Example application

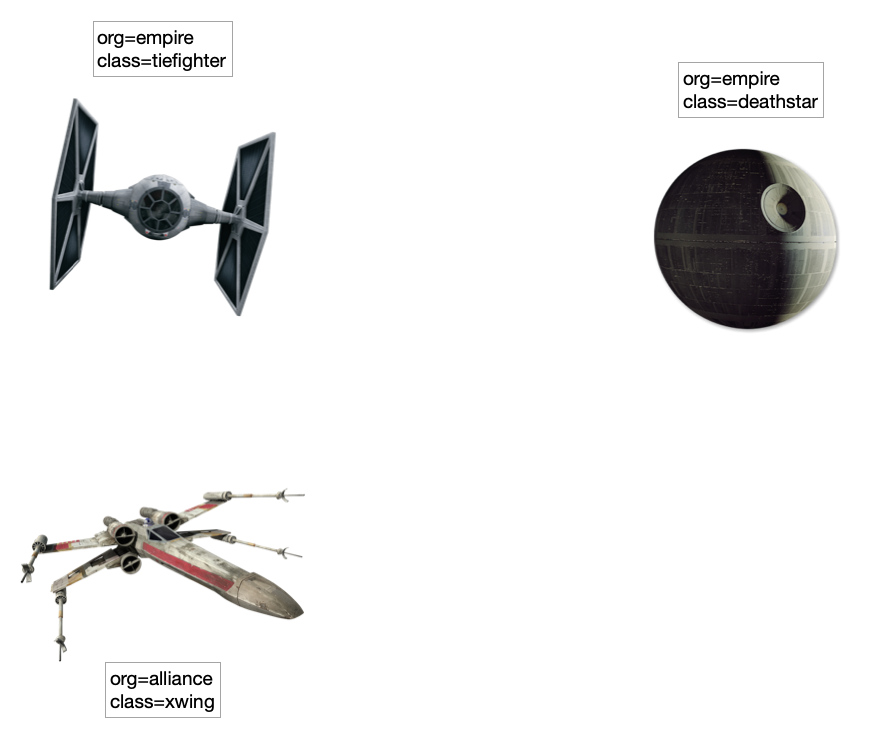

Before demonstrating NetworkPolicy, let's introduce the sample application. We use the Star Wars scenario from Cilium's interactive Getting Started tutorial.

Here are three applications that Star Wars fans will probably recognize:

- Death Star deathstar: provides a web service on port 80 with two replicas, and offers a "landing" service to Imperial fighters, load-balanced through a Kubernetes Service.

- TIE fighter tiefighter: issues landing requests.

- X-wing fighter xwing: issues landing requests.

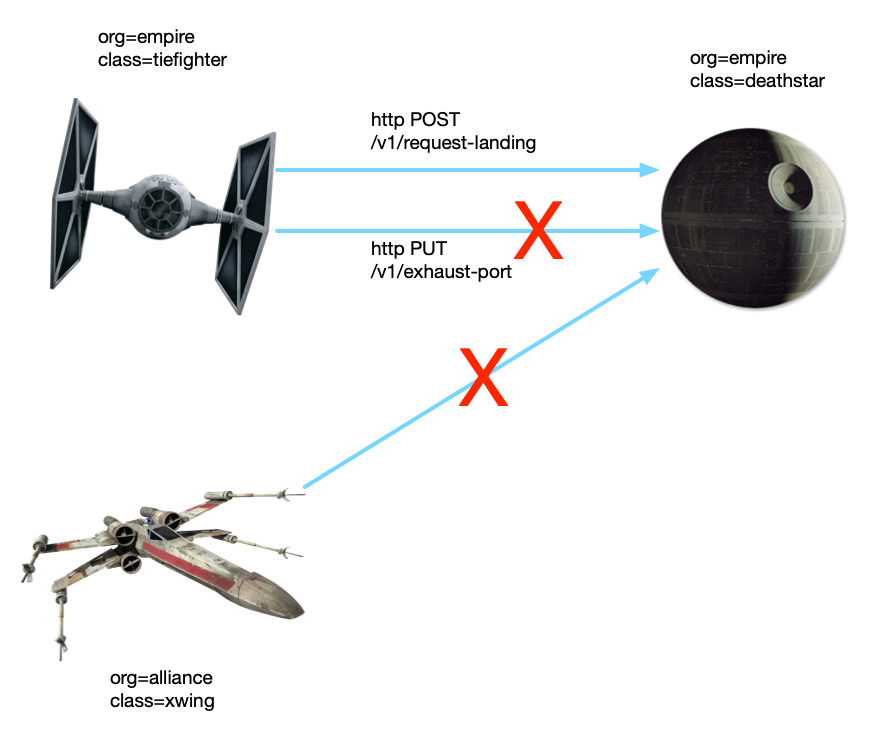

As shown in the figure, we use two labels, org and class, to identify the three applications. The network policies below use these two labels to select workloads.

    # app.yaml
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: deathstar
      labels:
        app.kubernetes.io/name: deathstar
    spec:
      type: ClusterIP
      ports:
      - port: 80
      selector:
        org: empire
        class: deathstar
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: deathstar
      labels:
        app.kubernetes.io/name: deathstar
    spec:
      replicas: 2
      selector:
        matchLabels:
          org: empire
          class: deathstar
      template:
        metadata:
          labels:
            org: empire
            class: deathstar
            app.kubernetes.io/name: deathstar
        spec:
          containers:
          - name: deathstar
            image: docker.io/cilium/starwars
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: tiefighter
      labels:
        org: empire
        class: tiefighter
        app.kubernetes.io/name: tiefighter
    spec:
      containers:
      - name: spaceship
        image: docker.io/tgraf/netperf
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: xwing
      labels:
        app.kubernetes.io/name: xwing
        org: alliance
        class: xwing
    spec:
      containers:
      - name: spaceship
        image: docker.io/tgraf/netperf

Kubernetes network policy

See the official documentation for details; here we go straight to the configuration:

    # native/networkpolicy.yaml
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: policy
      namespace: default
    spec:
      podSelector:
        matchLabels:
          org: empire
          class: deathstar
      policyTypes:
      - Ingress
      ingress:
      - from:
        - podSelector:
            matchLabels:
              org: empire
        ports:
        - protocol: TCP
          port: 80

- podSelector: selects the workloads the network policy applies to. Here, labels select the two deathstar Pods.

- policyTypes: the type of traffic the policy covers: Ingress, Egress, or both. Ingress here means the rules apply to traffic entering the selected deathstar Pods.

- ingress.from: the source workloads of the traffic, again selected with podSelector and labels. org=empire selects all "Imperial fighters".

- ingress.ports: the ports on which traffic may enter. The service port of deathstar is listed here.
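To see which Pods each selector in the policy actually matches, one option is to query the cluster by label. The following is a minimal sketch, assuming the sample application is deployed in the default namespace. The helper name pods_matching is hypothetical; -l and -o name are standard kubectl options.

```shell
# pods_matching is a hypothetical helper (not part of kubectl): it lists the
# Pods in the default namespace whose labels satisfy the given selector.
pods_matching() {
  kubectl get pods -n default -l "$1" -o name
}

# Usage (requires a running cluster with app.yaml applied):
#   pods_matching org=empire,class=deathstar   # Pods the policy applies to
#   pods_matching org=empire                   # Pods allowed as traffic sources
```

With the labels from app.yaml, the first query should list only the deathstar Pods, and the second should additionally include tiefighter but not xwing.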

Next, let's test it.

Test

First prepare the environment. We use K3s as the Kubernetes environment. However, Flannel, the default CNI plug-in of K3s, does not support network policy, so we need a different plug-in. Here we choose Calico, giving a K3s + Calico setup.

First, create a single-node cluster:

curl -sfL https://get.k3s.io | K3S_KUBECONFIG_MODE="644" INSTALL_K3S_EXEC="--flannel-backend=none --cluster-cidr=10.42.0.0/16 --disable-network-policy --disable=traefik" sh -

At this point all Pods are in Pending status because no CNI plug-in is running yet, so install Calico:

kubectl apply -f https://projectcalico.docs.tigera.io/manifests/calico.yaml

Once Calico is up and running, the remaining Pods reach Running status as well.
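Rather than polling the Pod list by hand, the wait can be scripted. This is a sketch only: wait_for_calico is a hypothetical helper, and the resource names daemonset/calico-node and deployment/calico-kube-controllers in kube-system are assumptions about what the Calico manifest creates, so check them with kubectl get all -n kube-system first.

```shell
# wait_for_calico is a hypothetical helper. The resource names below are
# assumed to be the ones created by the Calico manifest in kube-system.
wait_for_calico() {
  kubectl -n kube-system rollout status daemonset/calico-node --timeout=300s &&
  kubectl -n kube-system rollout status deployment/calico-kube-controllers --timeout=300s
}

# Usage (requires a running cluster):
#   wait_for_calico && kubectl get pods -A
```

The function returns non-zero if either rollout does not complete within the timeout, so it can gate the deployment step that follows.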

The next step is to deploy the application:

kubectl apply -f app.yaml

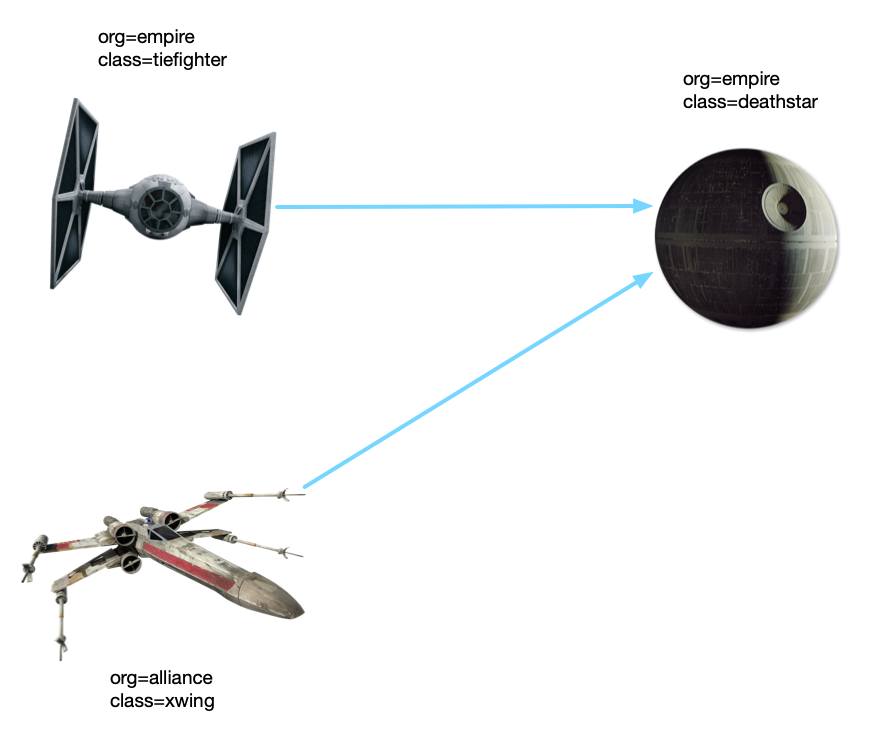

Before applying the policy, run the following commands to see whether the fighters can land on the Death Star:

    kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
    Ship landed
    kubectl exec xwing -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
    Ship landed

As the output shows, both fighters (Pod workloads) can access the deathstar service.

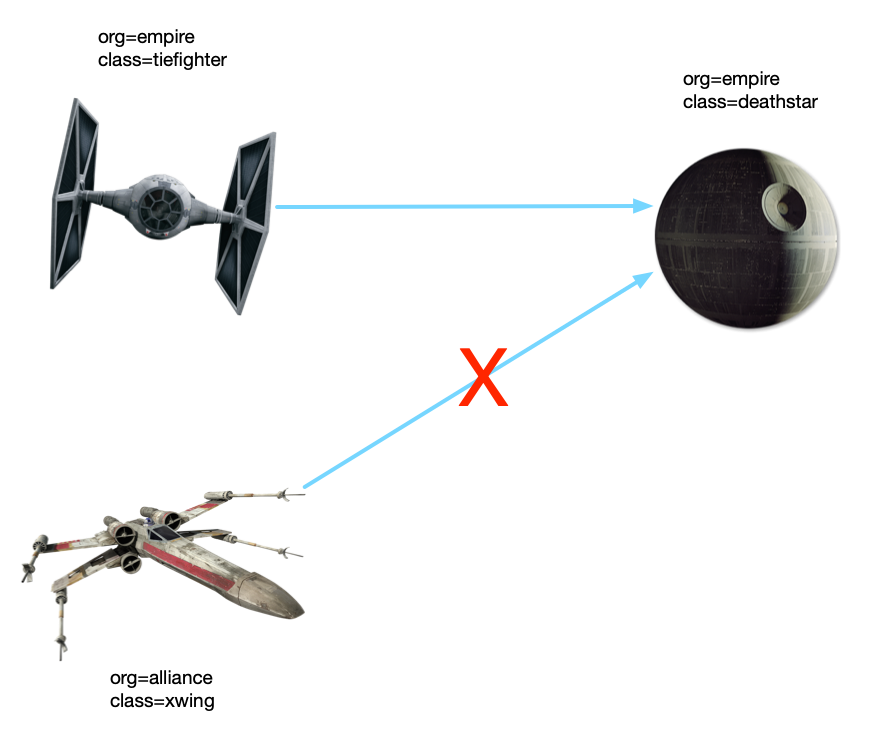

Now apply the network policy:

kubectl apply -f native/networkpolicy.yaml

Try to "land" again: xwing's landing request now hangs (press Ctrl+C to exit, or add --connect-timeout 2 to the curl command).
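To avoid a hanging terminal when a request is dropped, the check can be wrapped with a connection timeout. A minimal sketch, assuming the Pod and Service names from app.yaml; request_landing is a hypothetical helper, while --connect-timeout is a standard curl option.

```shell
# request_landing is a hypothetical helper: it sends a landing request from the
# given Pod and gives up if no TCP connection is established within 2 seconds.
request_landing() {
  if kubectl exec "$1" -- curl -s --connect-timeout 2 -XPOST \
      deathstar.default.svc.cluster.local/v1/request-landing; then
    return 0
  fi
  echo "$1: request blocked or timed out"
  return 1
}

# Usage (requires the cluster from this article):
#   request_landing tiefighter
#   request_landing xwing
```

The sketch relies on the non-zero exit status of the failed curl call being passed back through kubectl exec; it cannot tell a policy drop apart from any other connection failure.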

Reflection

The Kubernetes network policy achieves what we wanted: it adds a whitelist to the service at the network level. The approach requires no changes to the application and has little impact on the system.

So is it all over before Cilium even makes an appearance? Not quite. Let's continue:

Sometimes a service exposes management endpoints that the system calls to perform operations such as hot updates or restarts. Ordinary services must not be allowed to call these endpoints, or the consequences can be serious.

For example, in the sample application tiefighter can access deathstar's management endpoint /v1/exhaust-port:

    kubectl exec tiefighter -- curl -s -XPUT deathstar.default.svc.cluster.local/v1/exhaust-port
    Panic: deathstar exploded

    goroutine 1 [running]:
    main.HandleGarbage(0x2080c3f50, 0x2, 0x4, 0x425c0, 0x5, 0xa)
            /code/src/github.com/empire/deathstar/temp/main.go:9 +0x64
    main.main()
            /code/src/github.com/empire/deathstar/temp/main.go:5 +0x85

The service panics. Check the Pods and you will find that a deathstar Pod has crashed.
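One way to check the Pods is to print each deathstar Pod's restart count. This is a sketch under the assumption that each Pod runs a single container, as in app.yaml; deathstar_restarts is a hypothetical helper built on kubectl's standard jsonpath output.

```shell
# deathstar_restarts is a hypothetical helper: it prints one line per deathstar
# Pod with the Pod name and the restart count of its first (only) container.
deathstar_restarts() {
  kubectl get pods -l org=empire,class=deathstar \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.containerStatuses[0].restartCount}{"\n"}{end}'
}

# Usage (requires the cluster from this article):
#   deathstar_restarts
```

A restart count above zero after the exhaust-port call would be consistent with the crash described above.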

Kubernetes network policy only works at L3/4 and can do nothing at L7.

This is where Cilium comes in.

Cilium network policy

Cilium touches on many topics, such as the Linux kernel and networking, so explaining how it works would take a long time. This article therefore only quotes the introduction from the official website; I hope to write a separate article on the implementation when I have time.

Introduction to Cilium

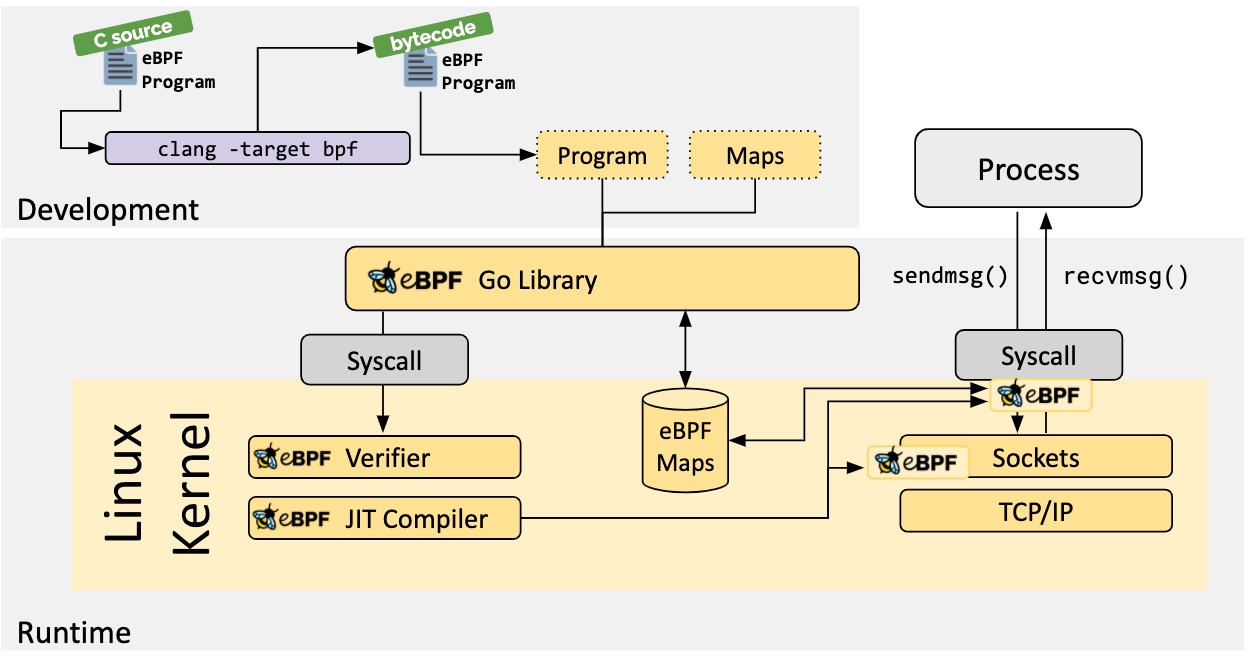

Cilium is open source software for providing, securing, and observing network connectivity between container workloads. It is cloud native and powered by eBPF, a revolutionary kernel technology.

What is eBPF?

The Linux kernel has always been an ideal place to implement monitoring/observability, networking, and security. In many cases, however, this is not easy, because these tasks require modifying the kernel source code or loading kernel modules, and the end result is new abstractions stacked on top of existing layers of abstraction. eBPF is a revolutionary technology that can run sandboxed programs in the kernel without modifying kernel source code or loading kernel modules.

With the Linux kernel made programmable, we can build smarter and more capable infrastructure software on top of the existing abstraction layers (rather than adding new ones), without increasing system complexity or sacrificing execution efficiency and security.

Let's take a look at Cilium's network policy:

    # cilium/networkpolicy-L4.yaml
    apiVersion: "cilium.io/v2"
    kind: CiliumNetworkPolicy
    metadata:
      name: "rule1"
    spec:
      description: "L3-L4 policy to restrict deathstar access to empire ships only"
      endpointSelector:
        matchLabels:
          org: empire
          class: deathstar
      ingress:
      - fromEndpoints:
        - matchLabels:
            org: empire
        toPorts:
        - ports:
          - port: "80"
            protocol: TCP

It differs little from Kubernetes' native network policy and can be understood from the earlier explanation, so let's go straight to the test.

Test

Since Cilium implements CNI itself, the previous cluster cannot be reused. Uninstall it first:

    k3s-uninstall.sh
    # !!! Remember to clean up the previous CNI plug-in configuration
    sudo rm -rf /etc/cni/net.d

Use the same command to create a single-node cluster:

    curl -sfL https://get.k3s.io | K3S_KUBECONFIG_MODE="644" INSTALL_K3S_EXEC="--flannel-backend=none --cluster-cidr=10.42.0.0/16 --disable-network-policy --disable=traefik" sh -
    # cilium uses this variable
    export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

Next, install Cilium CLI:

    curl -L --remote-name-all https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz{,.sha256sum}
    sha256sum --check cilium-linux-amd64.tar.gz.sha256sum
    sudo tar xzvfC cilium-linux-amd64.tar.gz /usr/local/bin
    rm cilium-linux-amd64.tar.gz{,.sha256sum}

    cilium version
    cilium-cli: v0.10.2 compiled with go1.17.6 on linux/amd64
    cilium image (default): v1.11.1
    cilium image (stable): v1.11.1
    cilium image (running): unknown. Unable to obtain cilium version, no cilium pods found in namespace "kube-system"

Install Cilium into the cluster:

cilium install

Once Cilium is up and running, check its status:

    cilium status
        /¯¯\
     /¯¯\__/¯¯\    Cilium:         OK
     \__/¯¯\__/    Operator:       OK
     /¯¯\__/¯¯\    Hubble:         disabled
     \__/¯¯\__/    ClusterMesh:    disabled
        \__/

    Deployment        cilium-operator    Desired: 1, Ready: 1/1, Available: 1/1
    DaemonSet         cilium             Desired: 1, Ready: 1/1, Available: 1/1
    Containers:       cilium             Running: 1
                      cilium-operator    Running: 1
    Cluster Pods:     3/3 managed by Cilium
    Image versions    cilium-operator    quay.io/cilium/operator-generic:v1.11.1@sha256:977240a4783c7be821e215ead515da3093a10f4a7baea9f803511a2c2b44a235: 1
                      cilium             quay.io/cilium/cilium:v1.11.1@sha256:251ff274acf22fd2067b29a31e9fda94253d2961c061577203621583d7e85bd2: 1

Deploy the application:

kubectl apply -f app.yaml

Test the service calls once the application has started:

    kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
    Ship landed
    kubectl exec xwing -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
    Ship landed

Apply the L4 network policy:

kubectl apply -f cilium/networkpolicy-L4.yaml

Try to "land" on the Death Star again: the xwing fighter can no longer land here either, which shows that the L4 rule has taken effect.

Now let's try an L7 rule:

    # cilium/networkpolicy-L7.yaml
    apiVersion: "cilium.io/v2"
    kind: CiliumNetworkPolicy
    metadata:
      name: "rule1"
    spec:
      description: "L7 policy to restrict access to specific HTTP call"
      endpointSelector:
        matchLabels:
          org: empire
          class: deathstar
      ingress:
      - fromEndpoints:
        - matchLabels:
            org: empire
        toPorts:
        - ports:
          - port: "80"
            protocol: TCP
          rules:
            http:
            - method: "POST"
              path: "/v1/request-landing"

Apply the rule:

kubectl apply -f cilium/networkpolicy-L7.yaml

This time, use tiefighter to call the Death Star's management endpoint:

    kubectl exec tiefighter -- curl -s -XPUT deathstar.default.svc.cluster.local/v1/exhaust-port
    Access denied
    # The landing interface works normally
    kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
    Ship landed

This time Access denied is returned, which shows that the L7 rule has taken effect.
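To compare the two endpoints without reading response bodies, the HTTP status code alone can be printed. A minimal sketch, assuming the Pod and Service names from app.yaml; http_status is a hypothetical helper, and -o, -w and --connect-timeout are standard curl options.

```shell
# http_status is a hypothetical helper: it prints only the HTTP status code
# returned to the given Pod for METHOD PATH on the deathstar service.
http_status() {
  kubectl exec "$1" -- curl -s -o /dev/null -w '%{http_code}' \
    --connect-timeout 2 -X "$2" "deathstar.default.svc.cluster.local$3"
}

# Usage (requires the cluster from this article):
#   http_status tiefighter POST /v1/request-landing
#   http_status tiefighter PUT  /v1/exhaust-port
```

A request rejected at L7 should come back with an HTTP error status (typically 403 alongside the Access denied body) rather than hanging, which is how it differs from an L3/4 drop. The exact code is an assumption to verify on your own cluster.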
