Introduction to TiDB

TiDB is an open-source distributed relational database designed and developed by PingCAP. It is a converged database that supports both online transactional processing and online analytical processing, a combination known as Hybrid Transactional and Analytical Processing (HTAP). Its main features are horizontal scale-out and scale-in, financial-grade high availability, real-time HTAP, a cloud-native distributed architecture, and compatibility with the MySQL 5.7 protocol and the MySQL ecosystem. The goal is to provide users with a one-stop solution for OLTP (Online Transactional Processing), OLAP (Online Analytical Processing), and HTAP. TiDB suits scenarios that demand high availability, strong consistency, and large data volumes.

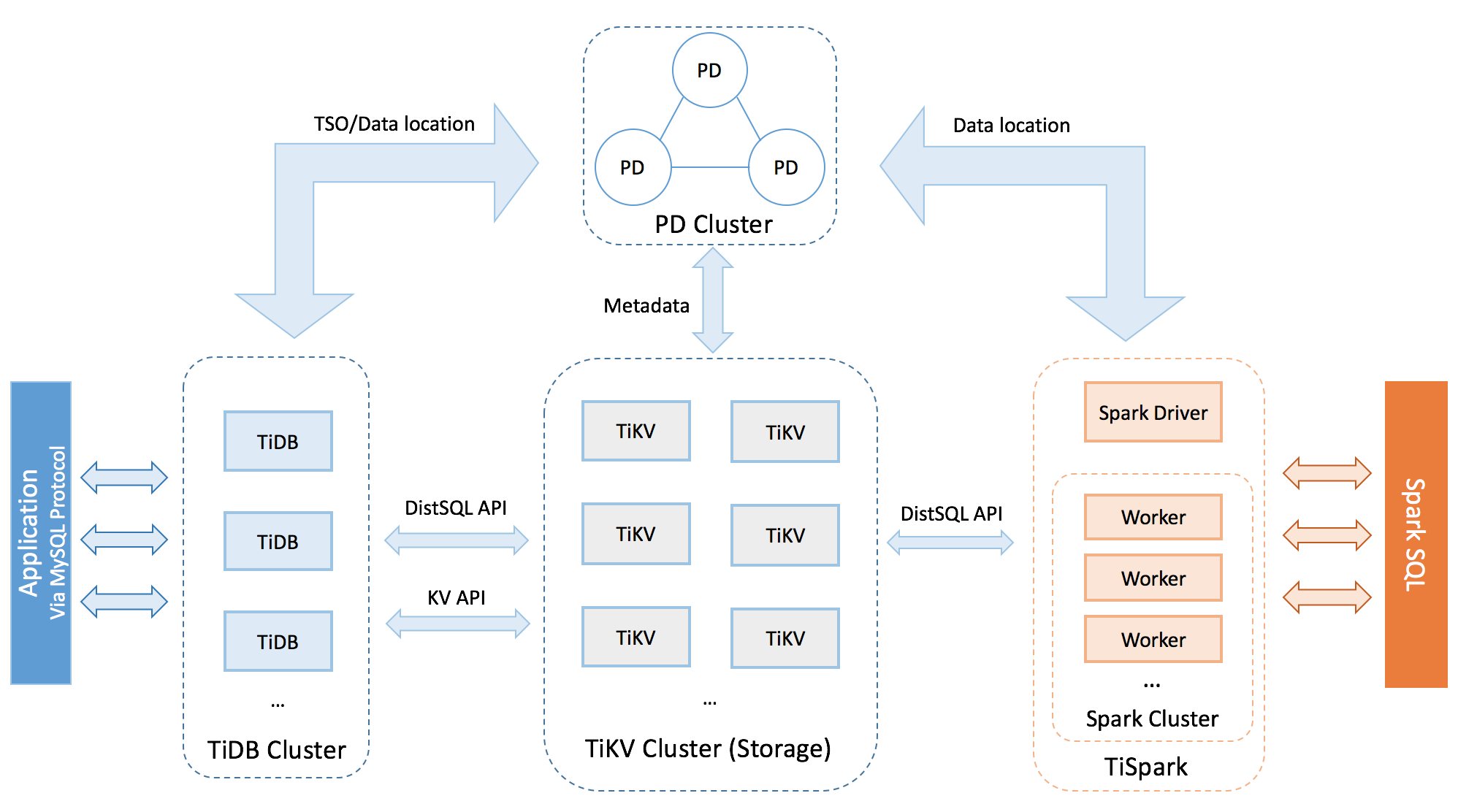

TiDB overall architecture

A TiDB cluster consists of three core components (TiDB Server, PD Server, and TiKV Server), plus TiSpark for complex OLAP workloads:

- TiDB Server

TiDB Server receives SQL requests, processes the SQL logic, asks PD for the addresses of the TiKV nodes that hold the required data, reads the data from TiKV, and returns the result. TiDB Server is stateless: it stores no data and is responsible only for computation, so it can be scaled out horizontally. Multiple TiDB Servers can be exposed through a single access address by a load balancer (such as LVS, HAProxy, or F5).

- PD Server

The Placement Driver (PD) is the management module of the whole cluster. It has three main jobs: storing the cluster's metadata (which TiKV node holds a given key); scheduling and load balancing the TiKV cluster (for example, data migration and Raft group leader transfer); and allocating globally unique, increasing transaction IDs.
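To make "globally unique and increasing" concrete, here is a minimal sketch of one way such an ID can be built: a physical time in milliseconds is packed into the high bits and a logical counter into the low bits. The 18-bit split is an assumption drawn from how PD's timestamp oracle is commonly described, and the helper names `compose_ts` and `physical_ms` are hypothetical.

```shell
# Sketch only: the 18-bit logical width is an assumption, and both helpers
# are hypothetical names used for illustration.
LOGICAL_BITS=18

# Combine a physical time (milliseconds) and a logical counter into one ID.
compose_ts() {
    echo $(( ($1 << LOGICAL_BITS) + $2 ))
}

# Recover the physical milliseconds from a composed ID.
physical_ms() {
    echo $(( $1 >> LOGICAL_BITS ))
}

a=$(compose_ts 1700000000000 1)
b=$(compose_ts 1700000000000 2)   # same millisecond, next logical value
c=$(compose_ts 1700000000001 0)   # next millisecond, counter reset
echo "$a $b $c"                   # each value is larger than the previous one
```

Because the physical part occupies the high bits, an ID issued in a later millisecond is always larger, and the logical counter orders IDs issued within the same millisecond.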

PD itself runs as a cluster and should be deployed with an odd number of nodes. At least 3 nodes are generally recommended in production.

- TiKV Server

TiKV Server is responsible for storing data. From the outside, TiKV is a distributed, transactional key-value storage engine. The basic unit of storage is the Region. Each Region stores the data of one key range (a left-closed, right-open interval from StartKey to EndKey), and each TiKV node hosts multiple Regions. TiKV replicates data with the Raft protocol to keep it consistent and to support disaster recovery. Replicas are managed per Region: the copies of a Region on different nodes form a Raft group and are replicas of each other. PD balances data across TiKV nodes, and it also schedules in units of Regions.
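The left-closed, right-open key range can be illustrated with a small lookup sketch. The Region names and boundary keys below are made-up sample data, and `find_region` is a hypothetical helper; real routing is done by PD and TiKV, not by a script like this.

```shell
# Sketch only: the Region names and boundary keys are made-up sample data.
# Format per line: name:start_key:end_key (empty end_key = no upper bound).
REGIONS='region-1::g
region-2:g:p
region-3:p:'

# Print the Region whose range [start_key, end_key) contains the given key.
find_region() {
    printf '%s\n' "$REGIONS" | LC_ALL=C awk -F: -v key="$1" '
        # Appending "" forces string (not numeric) comparison.
        (key "") >= ($2 "") && ($3 == "" || (key "") < ($3 "")) {
            print $1; found = 1; exit
        }
        END { exit !found }'
}

find_region apple   # falls in region-1
find_region g       # region-2: the start key is included
find_region p       # region-3: the end key of region-2 is excluded
```

Because every boundary key belongs to exactly one Region (the one that starts with it), the ranges cover the key space without gaps or overlaps.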

- TiSpark

TiSpark is the main TiDB component for users' complex OLAP needs. It runs Spark SQL directly on the TiDB storage layer, combining the strengths of the distributed TiKV cluster with the big data community's ecosystem. With TiSpark, a single system can serve both OLTP and OLAP, sparing users the trouble of synchronizing data between systems.

Experimental environment

| Host | Services |
|---|---|
| server5: 172.25.12.5 | zabbix-server, pd-server, tidb-server |
| server6: 172.25.12.6 | tikv-server |
| server7: 172.25.12.7 | tikv-server |
| server8: 172.25.12.8 | tikv-server |

Replacing the MySQL database with TiDB

1. Install tidb

tar zxf tidb-latest-linux-amd64.tar.gz -C /usr/local ## Decompress

cd /usr/local

ln -s tidb-latest-linux-amd64/ tidb ## Create a symbolic link

2. Start tidb

- Start PD

cd /usr/local/tidb

./bin/pd-server --name=pd1 --data-dir=pd1 --client-urls="http://172.25.12.5:2379" --peer-urls="http://172.25.12.5:2380" --initial-cluster="pd1=http://172.25.12.5:2380" --log-file=pd.log &

Check that ports 2379 (client) and 2380 (peer) are listening
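One way to script this check is sketched below. `port_is_listening` is a hypothetical helper, not a TiDB tool. It assumes the `ss` utility from iproute2 is available and reads the output of `ss -ltn` from standard input.

```shell
# Sketch only: port_is_listening is a hypothetical helper, not a TiDB tool.
# It reads `ss -ltn` style output on stdin and succeeds if some socket is
# listening on the given port.
port_is_listening() {
    awk -v suffix=":$1" '
        # Column 4 is "Local Address:Port"; compare its tail with ":PORT".
        $1 == "LISTEN" && substr($4, length($4) - length(suffix) + 1) == suffix { found = 1 }
        END { exit !found }'
}

# On the PD host the check could be run as, for example:
#   ss -ltn | port_is_listening 2379 && echo "PD client port is up"
#   ss -ltn | port_is_listening 2380 && echo "PD peer port is up"
```

The same helper can be reused for other ports in this setup, such as 20160 on the TiKV hosts or 4000 on the TiDB host.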

- Start TiKV (run from /usr/local/tidb on each TiKV host)

server6

./bin/tikv-server --pd="172.25.12.5:2379" --addr="172.25.12.6:20160" --data-dir=tikv1 --log-file=tikv.log &

server7

./bin/tikv-server --pd="172.25.12.5:2379" --addr="172.25.12.7:20160" --data-dir=tikv1 --log-file=tikv.log &

server8

./bin/tikv-server --pd="172.25.12.5:2379" --addr="172.25.12.8:20160" --data-dir=tikv1 --log-file=tikv.log &

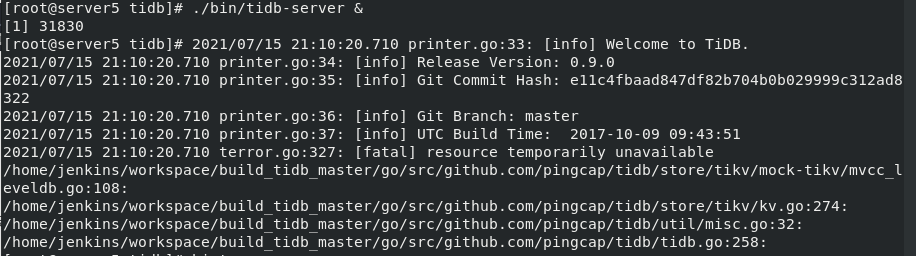
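The three TiKV commands differ only in the host address, which the following sketch makes explicit. `tikv_start_cmd` is a hypothetical helper that only prints the command for a host and does not run it. The flags are copied from the commands above.

```shell
# Sketch only: tikv_start_cmd is a hypothetical helper that prints (does not
# run) the start command for one TiKV host, reusing the flags shown above.
PD_ADDR="172.25.12.5:2379"

tikv_start_cmd() {
    printf './bin/tikv-server --pd="%s" --addr="%s:20160" --data-dir=tikv1 --log-file=tikv.log &\n' \
        "$PD_ADDR" "$1"
}

# Print the command for each TiKV host in the experimental environment.
for host in 172.25.12.6 172.25.12.7 172.25.12.8; do
    tikv_start_cmd "$host"
done
```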

- Start TiDB

./bin/tidb-server &

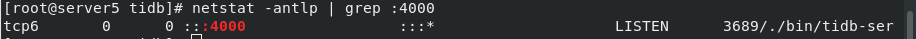

Check that port 4000 is listening

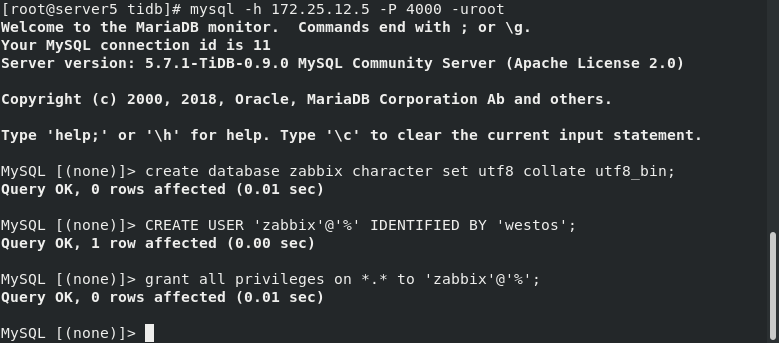

3. Connect to TiDB with the MySQL client

mysql -h 172.25.12.5 -P 4000 -uroot ## Connect to TiDB on port 4000

create database zabbix character set utf8 collate utf8_bin; ## Create the database

CREATE USER 'zabbix'@'%' IDENTIFIED BY 'westos'; ## Create the user

grant all privileges on *.* to 'zabbix'@'%'; ## Grant privileges to the user

4. Import the database

cd /usr/share/doc/zabbix-server-mysql-4.0.32/

zcat create.sql.gz | mysql -h 172.25.12.5 -P 4000 -uroot zabbix

5. Modify the Zabbix server configuration file

vim /etc/zabbix/zabbix_server.conf

DBHost=172.25.12.5

DBPassword=westos

DBPort=4000
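The same three settings can also be applied without an editor. The sketch below is one possible approach, not part of Zabbix: `set_conf` is a hypothetical helper that replaces an active `KEY=` line if one exists and appends the setting otherwise, leaving commented lines untouched.

```shell
# Sketch only: set_conf is a hypothetical helper, not part of Zabbix.
# Usage: set_conf KEY VALUE FILE
set_conf() {
    local tmp rc
    tmp=$(mktemp) || return 1
    awk -F= -v k="$1" -v v="$2" '
        $1 == k { print k "=" v; seen = 1; next }   # replace an active KEY= line
        { print }                                   # keep every other line as-is
        END { if (!seen) print k "=" v }            # append if KEY was not set
    ' "$3" > "$tmp" && cat "$tmp" > "$3"            # cat keeps the file's permissions
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Example (run as root on the Zabbix server host):
#   set_conf DBHost 172.25.12.5 /etc/zabbix/zabbix_server.conf
#   set_conf DBPassword westos /etc/zabbix/zabbix_server.conf
#   set_conf DBPort 4000 /etc/zabbix/zabbix_server.conf
```

Writing through a temporary file and then copying the content back avoids leaving a half-written configuration if `awk` fails partway.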

6. Set the database IP and port for the Zabbix web frontend

vim /etc/zabbix/web/zabbix.conf.php

$DB['TYPE'] = 'MYSQL';

$DB['SERVER'] = '172.25.12.5';

$DB['PORT'] = '4000';

$DB['DATABASE'] = 'zabbix';

$DB['USER'] = 'zabbix';

$DB['PASSWORD'] = 'westos';

7. Restart the service, then access http://172.25.12.5/zabbix

systemctl restart zabbix-server.service