1, Set up etcd cluster

There are three main ways to bootstrap an etcd cluster:

Static discovery: the members of the etcd cluster are known in advance. At startup, specify the address of every member directly through the --initial-cluster parameter.

etcd dynamic discovery: static configuration requires that every node's address is known before the cluster is built. In practice, the IP of each node may not be known in advance. In that case, an existing etcd cluster can be used to help bootstrap the new one: the existing cluster acts as the data exchange point, and the members of the new cluster find each other through it. One example is the official public service, discovery.etcd.io.

DNS dynamic discovery: obtain the addresses of the other nodes through DNS queries (SRV records).
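For static discovery, the value passed to --initial-cluster is a comma-separated list of name=peer-URL pairs. The sketch below shows one way to assemble it; the helper name build_initial_cluster is hypothetical, and peer port 2380 over plain http is an assumption that matches the configuration used later in this tutorial.

```shell
#!/bin/sh
# Build the value for --initial-cluster from "name=ip" pairs.
# Assumptions: peer port 2380 and plain http, as in this tutorial.
build_initial_cluster() {
    result=""
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first "="
        ip=${pair#*=}      # text after the first "="
        entry="${name}=http://${ip}:2380"
        if [ -z "$result" ]; then
            result="$entry"
        else
            result="${result},${entry}"
        fi
    done
    printf '%s\n' "$result"
}

build_initial_cluster etcd01=192.168.218.132 etcd02=192.168.218.133
```

The same string must be given to every member, which is why it is worth generating it once rather than typing it on each host.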

2, Static deployment (prerequisites)

The following two hosts are used (if you run them as virtual machines, size them according to your own computer's configuration)

Host IP (varies according to your own machine)

Demo 192.168.218.133

CentOS7 192.168.218.132

Clusters are usually deployed with an odd number of members, such as 3, 5, 7 or 9. Why not choose an even number?

1. An even-sized cluster carries a higher risk of unavailability: during leader election there is a higher chance of a tied vote, which triggers another round of election.

2. An even-sized cluster cannot keep working in some network partition scenarios. When a partition splits the members exactly in half, neither side holds a majority. Under the Raft protocol a write must be acknowledged by a majority of the members, so writes fail on both sides and the cluster cannot work normally. For the same reason, growing a cluster from 3 to 4 members raises the required majority from 2 to 3 without allowing any additional failure.

The two-node cluster built below is for demonstration only: its majority is 2, so it cannot tolerate the loss of either node.

Make sure the etcd service is installed on both hosts and starts normally
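The arithmetic behind the odd-number recommendation can be checked with a few lines of shell. This is only an illustration of the majority rule (more than half of the members); the function names quorum and fault_tolerance are hypothetical.

```shell
#!/bin/sh
# quorum N: votes needed for a majority in an N-member cluster
quorum() { echo $(( $1 / 2 + 1 )); }

# fault_tolerance N: members that can fail while a majority still remains
fault_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

The loop shows that 3 and 4 members both tolerate one failure, and that 2 members tolerate none.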

3, Cluster construction

1. Install the service (see "Basic introduction of microservice automation")

2. Edit the etcd.conf file, add the cluster information, and take care to change the IP addresses to match your hosts

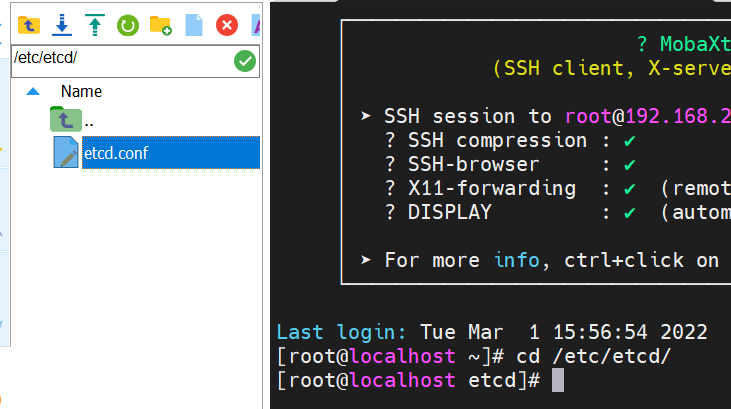

① Find the file (/etc/etcd/etcd.conf):

② Edit the file:

③ File content:

    #########################################################
    ###### Modify items 1/3/4/5/6/7 to match each node ######
    #########################################################
    #[Member]
    #1. Node name; must be unique within the cluster
    ETCD_NAME="etcd01"
    #2. Directory where the data is stored
    ETCD_DATA_DIR="/var/lib/etcd"
    #3. URL to listen on for traffic from other etcd members
    ETCD_LISTEN_PEER_URLS="http://192.168.218.132:2380"
    #4. URLs to listen on for client traffic
    ETCD_LISTEN_CLIENT_URLS="http://192.168.218.132:2379,http://127.0.0.1:2379"
    #[Clustering]
    #5. Client URL of this node that is advertised to the outside
    ETCD_ADVERTISE_CLIENT_URLS="http://192.168.218.132:2379"
    #6. Peer URL of this node, announced to the other members of the cluster
    ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.218.132:2380"
    #7. All members of the cluster
    ETCD_INITIAL_CLUSTER="etcd01=http://192.168.218.132:2380,etcd02=http://192.168.218.133:2380"
    #8. Cluster token; this value must be unique for each cluster
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    #9. Initial cluster state; use "new" when creating a new cluster
    ETCD_INITIAL_CLUSTER_STATE="new"
    #10. flannel uses the v2 API to operate etcd, while kubernetes uses the v3 API.
    # To stay compatible with flannel, the v2 API is enabled here in the configuration file.
    ETCD_ENABLE_V2="true"

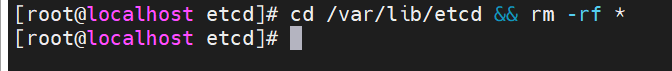
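A common mistake in this file is a node name or peer URL that does not match its entry in ETCD_INITIAL_CLUSTER. The following sketch checks for that before the service is started. It assumes the file contains only KEY="value" lines and comments, so that a shell can source it; the function name check_etcd_conf is hypothetical.

```shell
#!/bin/sh
# check_etcd_conf FILE: verify that "<ETCD_NAME>=<ETCD_INITIAL_ADVERTISE_PEER_URLS>"
# appears as one entry of ETCD_INITIAL_CLUSTER.
check_etcd_conf() {
    conf=$1
    # Source the file in a subshell so its variables do not leak into the caller.
    (
        . "$conf" || exit 2
        want="${ETCD_NAME}=${ETCD_INITIAL_ADVERTISE_PEER_URLS}"
        case ",${ETCD_INITIAL_CLUSTER}," in
            *",${want},"*)
                echo "ok: ${want}" ;;
            *)
                echo "mismatch: ${want} not in ETCD_INITIAL_CLUSTER" >&2
                exit 1 ;;
        esac
    )
}
```

Usage on a node: check_etcd_conf /etc/etcd/etcd.conf. A non-zero exit status means the entry for this node should be corrected before starting etcd.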

3. After modifying the /etc/etcd/etcd.conf file, first delete the data saved in the /var/lib/etcd directory and then restart the service; otherwise the service will fail to start

cd /var/lib/etcd && rm -rf *
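Deleting with rm -rf cannot be undone. If the old data might still be needed, a more cautious variant is to move it aside instead. The sketch below is an assumption about how that could be done, not part of the original procedure; the function name reset_data_dir and the backup naming scheme are hypothetical, and it relies on the -mindepth and -maxdepth options found in GNU and BSD find.

```shell
#!/bin/sh
# reset_data_dir DIR: move everything inside DIR into a timestamped sibling
# directory and print the path of that backup directory.
reset_data_dir() {
    dir=$1
    backup="${dir}.bak.$(date +%Y%m%d%H%M%S)"
    mkdir -p "$backup" || return 1
    # Move every top-level entry, including dotfiles, out of the data directory.
    find "$dir" -mindepth 1 -maxdepth 1 -exec mv {} "$backup"/ \;
    echo "$backup"
}
```

Usage: stop etcd first (systemctl stop etcd), then run reset_data_dir /var/lib/etcd. The data directory itself is kept, so the service can recreate its contents on the next start.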

4. Create an etcd service for node etcd01

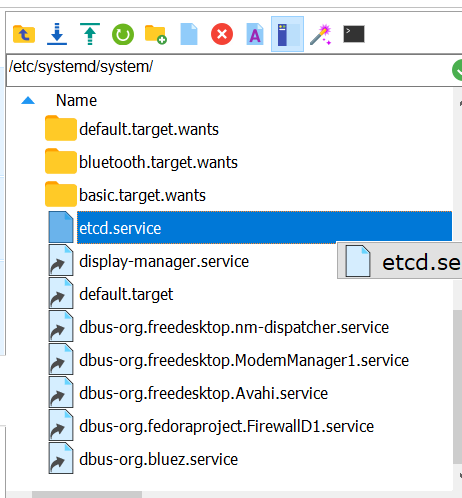

/etc/systemd/system/etcd.service

① Find the file

② Edit the contents of the file:

    [Unit]
    Description=Etcd Server
    Documentation=https://github.com/etcd-io/etcd
    After=network.target
    [Service]
    User=root
    Type=notify
    ## Adjust EnvironmentFile and ExecStart to match your installation
    ## 1. EnvironmentFile is the location of the configuration file. Keep the leading "-"; it tells systemd not to fail if the file is missing
    EnvironmentFile=-/etc/etcd/etcd.conf
    ## 2. ExecStart is the location of the etcd binary
    ExecStart=/usr/local/bin/etcd
    Restart=always
    RestartSec=10s
    LimitNOFILE=65536
    [Install]
    WantedBy=multi-user.target

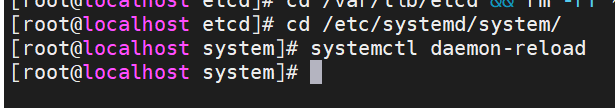

③ Reload the systemd configuration:

systemctl daemon-reload

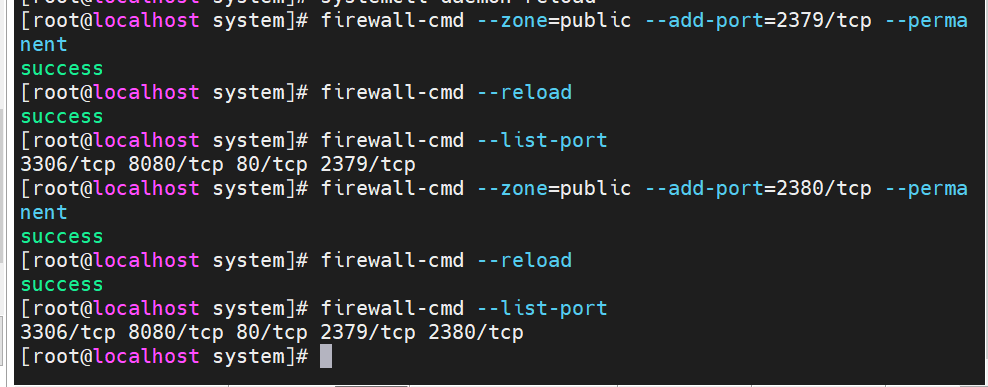

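5. Because the nodes communicate across hosts, the firewall ports must be opened: 2379 for client traffic and 2380 for peer traffic

firewall-cmd --zone=public --add-port=2379/tcp --permanent

firewall-cmd --zone=public --add-port=2380/tcp --permanent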

Reload the firewall:

firewall-cmd --reload

See which ports are open:

firewall-cmd --list-ports
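Both etcd ports need to appear in that list. As a small sketch, the check can be automated by comparing the list against the two ports used in this tutorial. The function name missing_ports is hypothetical, and the sketch assumes the ports are listed individually (for example 2379/tcp) rather than as a range.

```shell
#!/bin/sh
# missing_ports "OPEN PORT LIST": print each required etcd port that is
# absent from the space-separated list of open ports.
missing_ports() {
    open=" $1 "
    for port in 2379/tcp 2380/tcp; do
        case "$open" in
            *" $port "*) ;;            # port is open, nothing to report
            *) echo "$port" ;;
        esac
    done
}

# Example with a literal list in which the peer port was forgotten.
missing_ports "2379/tcp"
```

On a real host the argument would be the output of the listing command, for example missing_ports "$(firewall-cmd --list-ports)". Empty output means both ports are open.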

6. Create an etcd service for node etcd02

① Check whether the etcd service exists

② Edit the etcd.conf file, add the cluster information, and take care to change the IP addresses to match your hosts

    #########################################################
    ###### Modify items 1/3/4/5/6/7 to match each node ######
    #########################################################
    #[Member]
    #1. Node name; must be unique within the cluster
    ETCD_NAME="etcd02"
    #2. Directory where the data is stored
    ETCD_DATA_DIR="/var/lib/etcd"
    #3. URL to listen on for traffic from other etcd members
    ETCD_LISTEN_PEER_URLS="http://192.168.218.133:2380"
    #4. URLs to listen on for client traffic
    ETCD_LISTEN_CLIENT_URLS="http://192.168.218.133:2379,http://127.0.0.1:2379"
    #[Clustering]
    #5. Client URL of this node that is advertised to the outside
    ETCD_ADVERTISE_CLIENT_URLS="http://192.168.218.133:2379"
    #6. Peer URL of this node, announced to the other members of the cluster
    ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.218.133:2380"
    #7. All members of the cluster
    ETCD_INITIAL_CLUSTER="etcd01=http://192.168.218.132:2380,etcd02=http://192.168.218.133:2380"
    #8. Cluster token; this value must be unique for each cluster
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    #9. Initial cluster state; use "new" when creating a new cluster
    ETCD_INITIAL_CLUSTER_STATE="new"
    #10. flannel uses the v2 API to operate etcd, while kubernetes uses the v3 API.
    # To stay compatible with flannel, the v2 API is enabled here in the configuration file.
    ETCD_ENABLE_V2="true"

③ After modifying the /etc/etcd/etcd.conf file, first delete the data saved in the /var/lib/etcd directory and then restart the service; otherwise the service will fail to start

cd /var/lib/etcd && rm -rf *

④ Create the etcd service unit for node etcd02: /etc/systemd/system/etcd.service

    [Unit]
    Description=Etcd Server
    Documentation=https://github.com/etcd-io/etcd
    After=network.target
    [Service]
    User=root
    Type=notify
    ## Adjust EnvironmentFile and ExecStart to match your installation
    ## 1. EnvironmentFile is the location of the configuration file. Keep the leading "-"; it tells systemd not to fail if the file is missing
    EnvironmentFile=-/etc/etcd/etcd.conf
    ## 2. ExecStart is the location of the etcd binary
    ExecStart=/usr/local/bin/etcd
    Restart=always
    RestartSec=10s
    LimitNOFILE=65536
    [Install]
    WantedBy=multi-user.target

Reload the systemd configuration:

systemctl daemon-reload

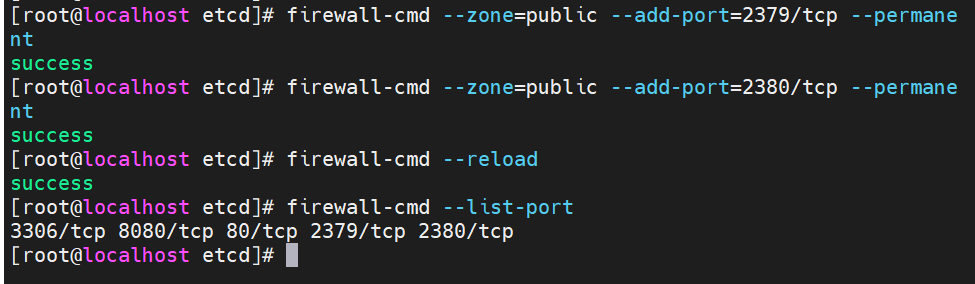

⑤ Open the firewall ports

firewall-cmd --zone=public --add-port=2379/tcp --permanent

firewall-cmd --zone=public --add-port=2380/tcp --permanent

firewall-cmd --reload

firewall-cmd --list-ports

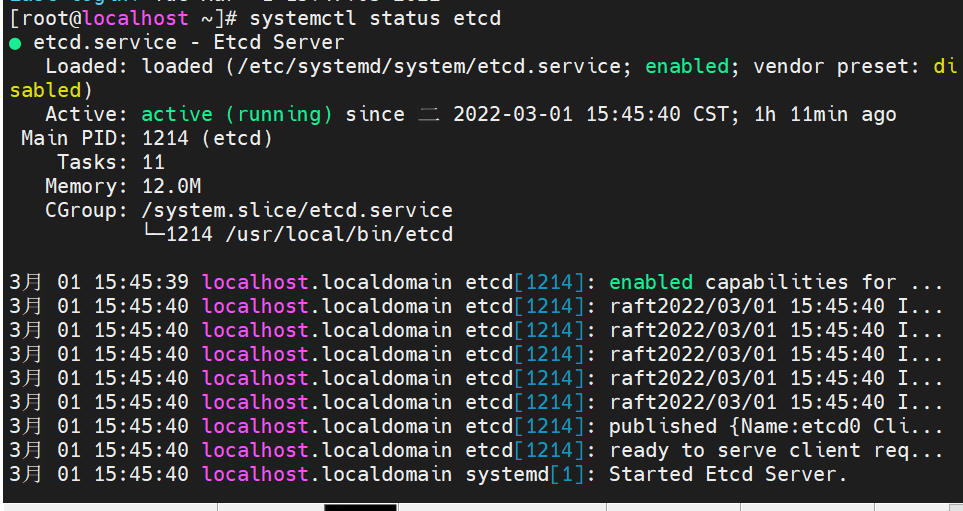

7. Start the service on both nodes

etcd02:

etcd01:

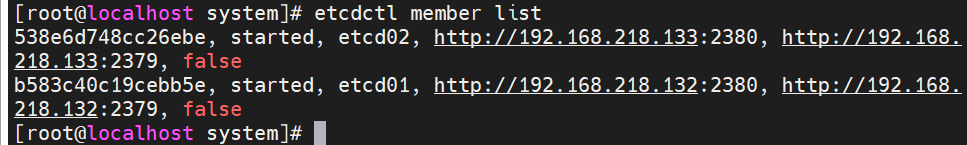

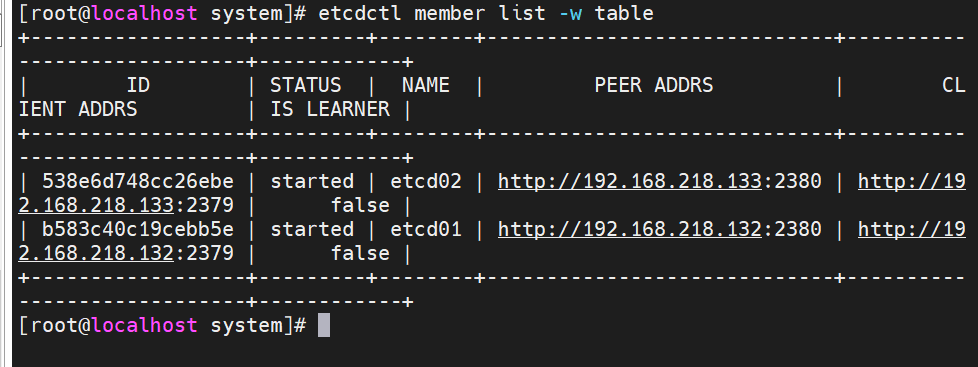

8. Cluster test

After every node has been restarted, run etcdctl member list on any node to list all members of the cluster

etcdctl member list

etcdctl member list -w table

etcdctl endpoint health

etcdctl endpoint status

Specify the endpoints explicitly (replace the addresses with those of your own nodes):

etcdctl --endpoints=http://192.168.218.132:2379,http://192.168.218.133:2379 endpoint health

etcdctl --endpoints=http://192.168.218.132:2379 endpoint health

etcdctl --endpoints=http://192.168.218.133:2379 member list -w table
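Health checks confirm that each member responds, but not that data written through one member is visible through another. A minimal sketch of such a check follows. It assumes the v3 etcdctl client; the function name smoke_test and the key name are hypothetical, and the test key is left in the store afterwards.

```shell
#!/bin/sh
# smoke_test WRITE_ENDPOINT READ_ENDPOINT: write a value through one member
# and read it back through another; succeeds only if the values match.
smoke_test() {
    key="smoke-test-key"     # hypothetical key name
    value="hello-$$"         # value that differs from run to run
    ETCDCTL_API=3 etcdctl --endpoints="$1" put "$key" "$value" >/dev/null || return 1
    got=$(ETCDCTL_API=3 etcdctl --endpoints="$2" get "$key" --print-value-only) || return 1
    [ "$got" = "$value" ]
}
```

With the two hosts of this tutorial, the call would be smoke_test http://192.168.218.132:2379 http://192.168.218.133:2379. An exit status of 0 means the value written through etcd01 was read back through etcd02.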

9. Common service commands

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd

systemctl status etcd

systemctl stop etcd

systemctl restart etcd