Exception code description

I have only just started working with Hadoop, and MapReduce still confuses me from time to time. This post records a problem that had me stuck for a whole day, together with its solution.

1. Run the MapReduce job

hadoop jar wc.jar hejie.zheng.mapreduce.wordcount2.WordCountDriver /input /output

2. The job fails with: Task failed task_1643869122334_0004_m_000000

[2022-02-03 15:10:59.255]Container killed on request. Exit code is 143
[2022-02-03 15:10:59.333]Container exited with a non-zero exit code 143.

2022-02-03 15:11:11,860 INFO mapreduce.Job: Task Id : attempt_1643869122334_0004_m_000000_2, Status : FAILED
[2022-02-03 15:11:10.394]Container [pid=4334,containerID=container_1643869122334_0004_01_000004] is running 273537536B beyond the 'VIRTUAL' memory limit. Current usage: 68.0 MB of 1 GB physical memory used; 2.4 GB of 2.1 GB virtual memory used. Killing container.
Dump of the process-tree for container_1643869122334_0004_01_000004 :
|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE
|- 4343 4334 4334 4334 (java) 543 250 2518933504 17149 /opt/module/jdk1.8.0_212/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/opt/module/hadoop-3.1.3/data/nm-local-dir/usercache/user01/appcache/application_1643869122334_0004/container_1643869122334_0004_01_000004/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/module/hadoop-3.1.3/logs/userlogs/application_1643869122334_0004/container_1643869122334_0004_01_000004 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.10.102 39922 attempt_1643869122334_0004_m_000000_2 4
|- 4334 4332 4334 4334 (bash) 2 6 9461760 270 /bin/bash -c /opt/module/jdk1.8.0_212/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/opt/module/hadoop-3.1.3/data/nm-local-dir/usercache/user01/appcache/application_1643869122334_0004/container_1643869122334_0004_01_000004/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/module/hadoop-3.1.3/logs/userlogs/application_1643869122334_0004/container_1643869122334_0004_01_000004 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.10.102 39922 attempt_1643869122334_0004_m_000000_2 4 1>/opt/module/hadoop-3.1.3/logs/userlogs/application_1643869122334_0004/container_1643869122334_0004_01_000004/stdout 2>/opt/module/hadoop-3.1.3/logs/userlogs/application_1643869122334_0004/container_1643869122334_0004_01_000004/stderr
[2022-02-03 15:11:10.532]Container killed on request. Exit code is 143
[2022-02-03 15:11:10.562]Container exited with a non-zero exit code 143.

2022-02-03 15:11:22,338 INFO mapreduce.Job: map 100% reduce 100%
2022-02-03 15:11:23,386 INFO mapreduce.Job: Job job_1643869122334_0004 failed with state FAILED due to: Task failed task_1643869122334_0004_m_000000
Job failed as tasks failed. failedMaps:1 failedReduces:0 killedMaps:0 killedReduces: 0
2022-02-03 15:11:23,653 INFO mapreduce.Job: Counters: 13
  Job Counters
    Failed map tasks=4
    Killed reduce tasks=1
    Launched map tasks=4
    Other local map tasks=3
    Data-local map tasks=1
    Total time spent by all maps in occupied slots (ms)=30921
    Total time spent by all reduces in occupied slots (ms)=0
    Total time spent by all map tasks (ms)=30921
    Total vcore-milliseconds taken by all map tasks=30921
    Total megabyte-milliseconds taken by all map tasks=31663104
  Map-Reduce Framework
    CPU time spent (ms)=0
    Physical memory (bytes) snapshot=0
    Virtual memory (bytes) snapshot=0
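The one line that matters in this log is the "beyond the 'VIRTUAL' memory limit" message. When the output is this long, it can help to pull the figures out with a small script. The following is only a sketch: the regular expression is written against the exact wording of the message above, and the helper name parse_kill_message is my own, not part of Hadoop.

```python
import re

# The diagnostic line, copied from the log above.
line = ("Container [pid=4334,containerID=container_1643869122334_0004_01_000004] "
        "is running 273537536B beyond the 'VIRTUAL' memory limit. "
        "Current usage: 68.0 MB of 1 GB physical memory used; "
        "2.4 GB of 2.1 GB virtual memory used. Killing container.")

# Pattern written against the wording of this particular message.
PATTERN = re.compile(
    r"containerID=(?P<container>\S+?)\] is running (?P<over>\d+)B beyond "
    r"the '(?P<kind>\w+)' memory limit\. Current usage: "
    r"(?P<pmem_used>[\d.]+ \w+) of (?P<pmem_limit>[\d.]+ \w+) physical memory used; "
    r"(?P<vmem_used>[\d.]+ \w+) of (?P<vmem_limit>[\d.]+ \w+) virtual memory used"
)


def parse_kill_message(text):
    """Return the figures from a 'beyond memory limit' line, or None if absent."""
    match = PATTERN.search(text)
    return match.groupdict() if match else None


info = parse_kill_message(line)
print(info["kind"], info["vmem_used"], "of", info["vmem_limit"])
```

The "kind" field is the useful one here: it says that the virtual limit was hit, not the physical one.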

3. Solution

The fix comes first; the cause of the problem is explained afterwards.

The configuration below follows a reference article.

First, change to the hadoop-3.1.3/etc/hadoop directory and run:

vim mapred-site.xml

Add the following properties to configure the task memory (if the input files are large, adjust the values to your actual workload):

<property>
  <name>mapreduce.map.memory.mb</name>
  <value>1024</value>
  <description>Memory requested for the container of each map task; default 1 GB</description>
</property>

<property>
  <name>mapreduce.map.java.opts</name>
  <value>-Xmx64m</value>
</property>

<property>
  <name>mapreduce.reduce.memory.mb</name>
  <value>1024</value>
</property>

<property>
  <name>mapreduce.reduce.java.opts</name>
  <value>-Xmx64m</value>
  <description>Usually set to about 85% of the matching memory.mb value (here mapreduce.reduce.memory.mb)</description>
</property>

<property>
  <name>mapreduce.task.io.sort.mb</name>
  <value>4</value>
</property>
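Before restarting anything, it is worth checking that each -Xmx value really fits inside its container. The script below is a minimal sketch of such a check. It works on a trimmed copy of the properties above, wrapped in a configuration element so that it parses as one document; the helper names (load_properties, xmx_mb, heap_fits) are made up for this example.

```python
import re
import xml.etree.ElementTree as ET

# Trimmed copy of the properties above, wrapped so it parses as one XML document.
SNIPPET = """
<configuration>
  <property><name>mapreduce.map.memory.mb</name><value>1024</value></property>
  <property><name>mapreduce.map.java.opts</name><value>-Xmx64m</value></property>
  <property><name>mapreduce.reduce.memory.mb</name><value>1024</value></property>
  <property><name>mapreduce.reduce.java.opts</name><value>-Xmx64m</value></property>
</configuration>
"""


def load_properties(xml_text):
    """Map every <name> to its <value>."""
    root = ET.fromstring(xml_text.strip())
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def xmx_mb(java_opts):
    """Return the -Xmx value in MB, or None if no suffixed -Xmx is present."""
    match = re.search(r"-Xmx(\d+)([kKmMgG])", java_opts)
    if match is None:
        return None
    number, unit = int(match.group(1)), match.group(2).lower()
    return {"k": number / 1024, "m": number, "g": number * 1024}[unit]


def heap_fits(props, kind):
    """True if the heap for 'map' or 'reduce' is smaller than its container."""
    container_mb = int(props[f"mapreduce.{kind}.memory.mb"])
    heap_mb = xmx_mb(props[f"mapreduce.{kind}.java.opts"])
    return heap_mb is not None and heap_mb < container_mb


props = load_properties(SNIPPET)
for kind in ("map", "reduce"):
    print(kind, "heap fits in container:", heap_fits(props, kind))
```

The heap must stay below the container size because the JVM also needs memory outside the heap (thread stacks, metaspace, native buffers).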

4. Getting to the root cause

You can also open http://hadoop103:8088/cluster to view the history of the job and see exactly where the problem occurred:

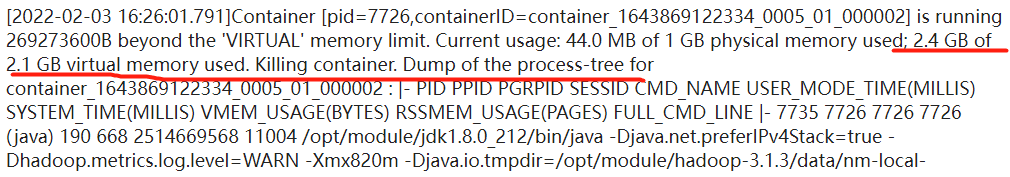

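The page shows that the container used 2.4 GB of virtual memory, which exceeds its 2.1 GB virtual memory limit. The limit follows from the 1 GB container size multiplied by YARN's default virtual-to-physical ratio of 2.1 (yarn.nodemanager.vmem-pmem-ratio), so YARN killed the container even though only 68 MB of physical memory was in use.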
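The numbers in the log can be checked by hand. The sketch below takes the VMEM_USAGE(BYTES) column of the two processes in the process-tree dump and compares their sum with 1 GB times 2.1. Two things here are assumptions of mine and not taken from the article: the property name yarn.nodemanager.vmem-pmem-ratio with its default of 2.1, and the idea that the ratio is held as a 32-bit float. With that second assumption the result matches the logged overage of 273537536 bytes exactly.

```python
import struct

GIB = 1024 ** 3


def as_float32(x):
    """Round a Python float to the nearest 32-bit float value."""
    return struct.unpack("f", struct.pack("f", x))[0]


pmem_limit = 1 * GIB            # container size, mapreduce.map.memory.mb = 1024
ratio = as_float32(2.1)         # assumed default of yarn.nodemanager.vmem-pmem-ratio
vmem_limit = int(pmem_limit * ratio)

# VMEM_USAGE(BYTES) of the java and bash processes in the dump above.
vmem_used = 2518933504 + 9461760

print(vmem_limit)                                                # 2254857728
print(vmem_used - vmem_limit)                                    # 273537536
print(round(vmem_used / GIB, 1), round(vmem_limit / GIB, 1))     # 2.4 2.1
```

So the "2.4 GB of 2.1 GB" in the message is simply these two byte counts rounded to one decimal place.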

Adjusting the memory settings in mapred-site.xml, as shown in section 3, is enough to fix it.

(1) mapreduce.map.memory.mb: 1 GB by default; the memory allocated to each map task container

(2) mapreduce.reduce.memory.mb: 1 GB by default; the memory allocated to each reduce task container

(3) yarn.app.mapreduce.am.resource.mb: 1.5 GB by default; the memory allocated to the MR AppMaster
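One detail in the log fits these defaults: the JVM in the failing container was started with -Xmx820m, which is what you get from 80% of a 1024 MB container, rounded up. The sketch below only reproduces that arithmetic. The 0.8 ratio and the rounding are assumptions; I believe the ratio corresponds to mapreduce.job.heap.memory-mb.ratio, but check mapred-default.xml for your Hadoop version before relying on it.

```python
import math


def default_heap_mb(container_mb, heap_ratio=0.8):
    """Heap size in MB derived from the container size (assumed: ratio 0.8, rounded up)."""
    return math.ceil(container_mb * heap_ratio)


print(default_heap_mb(1024))  # 820, matching the -Xmx820m seen in the log
```

Setting mapreduce.map.java.opts explicitly, as in section 3, replaces this derived value with the -Xmx you choose.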

JVM parameter defaults

Below are some JVM parameters whose default values are easy to overlook. I record them here for quick reference.

-Xms: by default one sixty-fourth of physical memory, with a minimum of 1 MB

-Xmx: by default one quarter of physical memory or 1 GB, depending on the JVM version and mode

-Xmn: noted here as one sixty-fourth of the heap by default; when it is not set, HotSpot normally sizes the young generation from -XX:NewRatio instead

-XX:NewRatio defaults to 2

-XX:SurvivorRatio defaults to 8

o -Xms: the initial heap size, such as 4096M

o -Xmx: the maximum heap size, such as 4096M. If -Xms is smaller than -Xmx, the JVM grows the heap toward -Xmx whenever free heap space falls below 40% (the default); the threshold can be changed with -XX:MinHeapFreeRatio

o -XX:NewSize=n: set the initial size of the young generation. A bare number is read as bytes, so use a suffix, such as 1024m

o -Xmn1024M: set the size of the young generation to 1024 MB

o -XX:NewRatio=n: set the ratio of the old generation to the young generation. The recommended value is 3 to 5. For example, 4 means old generation : young generation = 4:1, so the young generation takes 1/5 of the heap (young plus old)

o -XX:SurvivorRatio=n: set the ratio of Eden to one Survivor space in the young generation. The recommended value is 3 to 5. Note that there are two Survivor spaces. For example, 8 means Eden : one Survivor = 8:1, that is 2*Survivor : Eden = 2:8, so each Survivor space takes 1/10 of the young generation

-Xmx64M: set the maximum heap size to 64 MB
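To see what the two ratios mean in practice, the sketch below splits a heap into its generations using only the rules stated above: old : young = NewRatio : 1 and Eden : one Survivor = SurvivorRatio : 1. It is plain arithmetic under those rules. A real JVM aligns the sizes and may resize the generations at run time, so treat the output as an approximation. The function name generation_sizes is hypothetical.

```python
def generation_sizes(heap_mb, new_ratio=2, survivor_ratio=8):
    """Split a heap (in MB) into young, old, eden and one survivor space."""
    young = heap_mb / (new_ratio + 1)         # old : young = new_ratio : 1
    old = heap_mb - young
    survivor = young / (survivor_ratio + 2)   # eden : survivor = survivor_ratio : 1, two survivors
    eden = young - 2 * survivor
    return {"young": young, "old": old, "eden": eden, "survivor": survivor}


# The 64 MB heap from the configuration, with the default ratios 2 and 8.
for name, size_mb in generation_sizes(64).items():
    print(f"{name:9s}{size_mb:6.2f} MB")
```

With NewRatio=4 and a 100 MB heap, for example, the same function gives a 20 MB young generation, of which each Survivor space is 2 MB and Eden is 16 MB.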

For a deeper look at these memory settings, see the article "Memory parameter understanding of MapReduce under YARN & XML parameter configuration".

The above parameters can be adjusted according to practical needs to achieve the best effect.