Developing with Qt and OpenCV Together

- Qt Installation

- OpenCV Installation

- Configuring OpenCV in Qt

- Mat/QImage Conversion: Yx_opencv_ImgChange Class

- OpenCV Image Filtering and Detection: Yx_opencv_Enhance Class

- OpenCV Geometric Transforms: Yx_opencv_Geom Class

- OpenCV Image Enhancement: Yx_opencv_Gray Class

- OpenCV Morphology (Erosion and Dilation): Yx_opencv_Morp Class

Qt is powerful and convenient for visualization and GUI development. OpenCV is a powerful open-source image processing library. Because of this, the two are often used together.

This article describes the setup I use in my own development. Feel free to use it as a reference.

Qt Installation

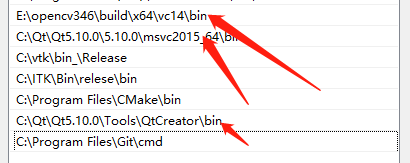

Qt can be installed directly from the official download. I use the VS2015 compiler and debugger. After installation, you can add Qt's bin directory to the system PATH.

OpenCV Installation

I use OpenCV 3.4.6. The official OpenCV website provides an .exe installer. Alternatively, you can download the source code and compile it yourself. When compiling, the only thing to watch is choosing the correct 32-bit or 64-bit target at the start. After installation, you can add OpenCV's bin directory to the system PATH.

Install it to a location of your choice.

Configuring OpenCV in Qt

Once the Qt and OpenCV environments are set up, we can use them together.

To make the configuration reusable, we put the OpenCV settings in a separate module.

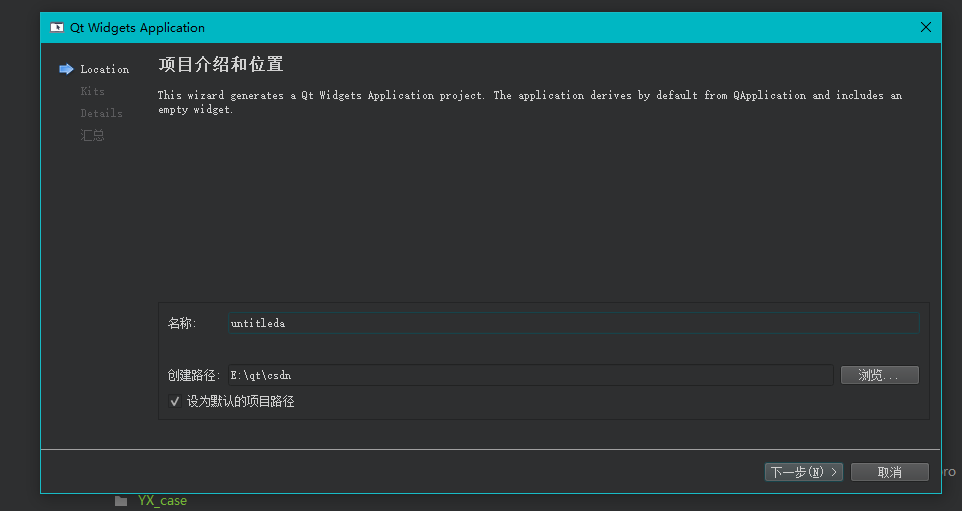

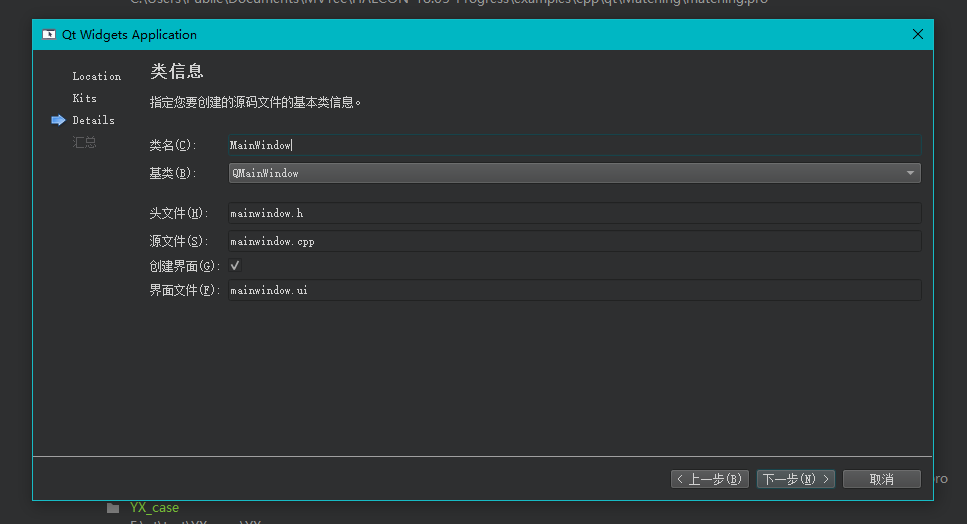

First, create a new Qt Widgets Application, accepting the defaults at each step of the wizard.

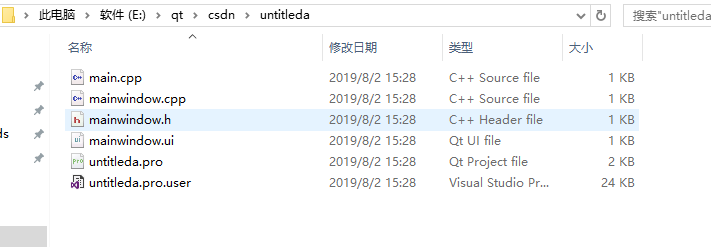

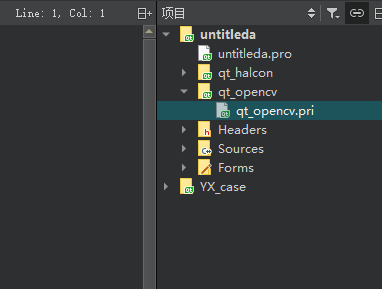

After the project is created, right-click the .pro file and open its folder in Explorer to see the generated files.

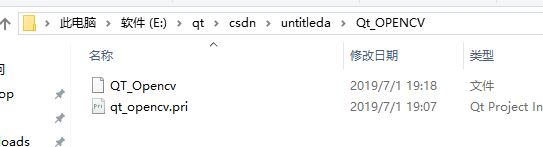

In this folder, create a new folder named Qt_OPENCV so the configuration is easy to port later.

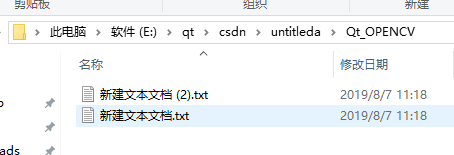

Create two new .txt files in the Qt_OPENCV folder. We will write a .pri file and a header file by hand. (A .pri file is a qmake include file: once it is pulled in with include(), its contents behave as if they were written directly in the .pro file.)

Rename them to qt_opencv.pri and QT_Opencv.

Open the two files in Notepad and fill them in as follows:

```
# qt_opencv.pri
INCLUDEPATH += E:/opencv346/build/include/opencv
INCLUDEPATH += E:/opencv346/build/include/opencv2
INCLUDEPATH += E:/opencv346/build/include

CONFIG(debug, debug|release) {
    LIBS += -LE:/opencv346/build/x64/vc14/lib \
            -lopencv_world346d
} else {
    LIBS += -LE:/opencv346/build/x64/vc14/lib \
            -lopencv_world346
}

HEADERS += \
    $$PWD/qt_opencv.h

SOURCES += \
    $$PWD/qt_opencv.cpp
```

Note that names in the cv namespace often clash with names from other libraries. For this reason, putting `using namespace cv;` at the top of files in a Qt project is not recommended.

```cpp
// QT_Opencv
#ifndef QT_QTOPENCV_MODULE_H
#define QT_QTOPENCV_MODULE_H

#include "qt_opencv.h"

#endif
```

qt_opencv.pri references OpenCV's header and library files.

Adjust the paths to match your own installation.

QT_Opencv is a wrapper header. Other source files include it to get the OpenCV headers.

The contents of qt_opencv.h are shown below.

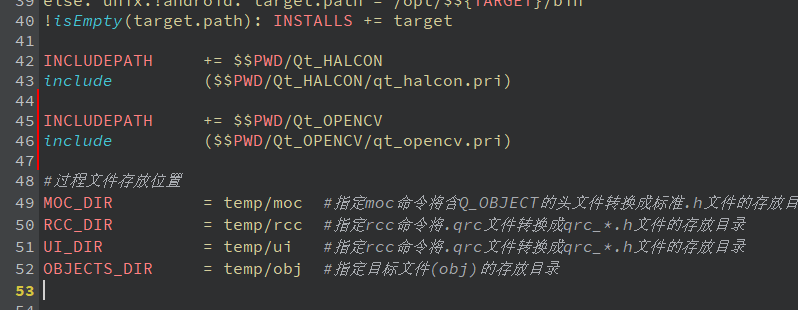

With the template in place, open the project's .pro file and add the following lines at the end:

```
# untitleda.pro: lines appended at the end
INCLUDEPATH += $$PWD/Qt_OPENCV
include($$PWD/Qt_OPENCV/qt_opencv.pri)

# Locations for intermediate build files
MOC_DIR     = temp/moc   # moc output (moc_*.cpp generated from headers containing Q_OBJECT)
RCC_DIR     = temp/rcc   # rcc output (qrc_*.cpp generated from .qrc files)
UI_DIR      = temp/ui    # uic output (ui_*.h generated from .ui files)
OBJECTS_DIR = temp/obj   # object (.obj) files
```

After adding them, right-click the .pro file and run qmake. The Qt_OPENCV files should now appear in the project.

The program's OpenCV environment is now configured. The Qt_OPENCV folder serves as a template: whenever a future project needs OpenCV, copy the folder into it.

Mat/QImage Conversion: Yx_opencv_ImgChange Class

The Yx_opencv_ImgChange class in qt_opencv.h:

```cpp
class Yx_opencv_ImgChange;

// Image conversion class
class Yx_opencv_ImgChange
{
public:
    Yx_opencv_ImgChange();
    ~Yx_opencv_ImgChange();

    QImage cvMat2QImage(const Mat& mat);                      // Mat -> QImage
    Mat QImage2cvMat(QImage image);                           // QImage -> Mat
    QImage splitBGR(QImage src, int color);                   // extract one B/G/R component
    QImage splitColor(QImage src, String model, int color);   // extract one component of RGB/HSV/HLS/YUV
};
```

```cpp
Yx_opencv_ImgChange::Yx_opencv_ImgChange() {}
Yx_opencv_ImgChange::~Yx_opencv_ImgChange() {}

QImage Yx_opencv_ImgChange::cvMat2QImage(const Mat& mat)   // Mat -> QImage
{
    if (mat.type() == CV_8UC1)   // single channel
    {
        QImage image(mat.cols, mat.rows, QImage::Format_Indexed8);
        image.setColorCount(256);   // 256-entry grayscale palette
        for (int i = 0; i < 256; i++)
            image.setColor(i, qRgb(i, i, i));
        // Copy row by row: QImage rows are 4-byte aligned, Mat rows are mat.step bytes
        const uchar *pSrc = mat.data;
        for (int row = 0; row < mat.rows; row++)
        {
            uchar *pDest = image.scanLine(row);
            memcpy(pDest, pSrc, mat.cols);
            pSrc += mat.step;
        }
        return image;
    }
    else if (mat.type() == CV_8UC3)   // 3 channels, stored by OpenCV as B, G, R
    {
        const uchar *pSrc = (const uchar*)mat.data;   // wraps the Mat data, no copy yet
        QImage image(pSrc, mat.cols, mat.rows, (int)mat.step, QImage::Format_RGB888);
        return image.rgbSwapped();   // BGR -> RGB; also returns a deep copy
    }
    else if (mat.type() == CV_8UC4)   // 4 channels: B, G, R, A
    {
        // Format_ARGB32 is stored as B, G, R, A in memory on little-endian machines
        const uchar *pSrc = (const uchar*)mat.data;
        QImage image(pSrc, mat.cols, mat.rows, (int)mat.step, QImage::Format_ARGB32);
        return image.copy();   // deep copy so the result does not depend on mat's lifetime
    }
    return QImage();
}

Mat Yx_opencv_ImgChange::QImage2cvMat(QImage image)   // QImage -> Mat
{
    Mat mat;
    switch (image.format())
    {
    case QImage::Format_ARGB32:
    case QImage::Format_RGB32:
    case QImage::Format_ARGB32_Premultiplied:
        // clone() so the Mat owns its pixels instead of pointing into the QImage
        mat = Mat(image.height(), image.width(), CV_8UC4, (void*)image.constBits(), image.bytesPerLine()).clone();
        break;
    case QImage::Format_RGB888:
        // Convert into a new Mat; converting in place would overwrite the QImage's pixels
        cv::cvtColor(Mat(image.height(), image.width(), CV_8UC3, (void*)image.constBits(), image.bytesPerLine()),
                     mat, CV_RGB2BGR);
        break;
    case QImage::Format_Indexed8:
        mat = Mat(image.height(), image.width(), CV_8UC1, (void*)image.constBits(), image.bytesPerLine()).clone();
        break;
    default:
        break;   // unsupported format: return an empty Mat
    }
    return mat;
}

QImage Yx_opencv_ImgChange::splitBGR(QImage src, int color)   // extract one B/G/R component
{
    Mat srcImg = QImage2cvMat(src);
    if (srcImg.channels() == 1)
    {
        QMessageBox message(QMessageBox::Information, QString::fromLocal8Bit("Tips"),
                            QString::fromLocal8Bit("The image is a gray image."));
        message.exec();
        return src;
    }
    if (color < 0 || color > 2)
        return src;
    // For each output, zero the other two colour channels and merge back (0 = B, 1 = G, 2 = R)
    vector<Mat> m(3), Bchannels, Gchannels, Rchannels;
    split(srcImg, Bchannels);
    split(srcImg, Gchannels);
    split(srcImg, Rchannels);
    Bchannels[1] = 0; Bchannels[2] = 0;   // keep B only
    Gchannels[0] = 0; Gchannels[2] = 0;   // keep G only
    Rchannels[0] = 0; Rchannels[1] = 0;   // keep R only
    merge(Bchannels, m[0]);
    merge(Gchannels, m[1]);
    merge(Rchannels, m[2]);
    return cvMat2QImage(m[color]);
}

QImage Yx_opencv_ImgChange::splitColor(QImage src, String model, int color)   // extract one component
{
    Mat img = QImage2cvMat(src);
    if (img.channels() == 1)
    {
        QMessageBox message(QMessageBox::Information, QString::fromLocal8Bit("Tips"),
                            QString::fromLocal8Bit("The image is a gray image."));
        message.exec();
        return src;
    }
    Mat converted;   // convert only into the requested colour model
    if (model == "RGB")      cvtColor(img, converted, CV_BGR2RGB);
    else if (model == "HSV") cvtColor(img, converted, CV_BGR2HSV);
    else if (model == "HLS") cvtColor(img, converted, CV_BGR2HLS);
    else if (model == "YUV") cvtColor(img, converted, CV_BGR2YUV);
    else return cvMat2QImage(img);   // unknown model: return the image unchanged
    vector<Mat> channels;
    split(converted, channels);
    return cvMat2QImage(channels[color]);
}
```
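cvMat2QImage copies rows one at a time and calls rgbSwapped() because the two libraries lay pixels out differently. QImage scan lines allocated by Qt are padded to a multiple of 4 bytes. Mat rows are mat.step bytes long. OpenCV stores color pixels as B, G, R, while Format_RGB888 expects R, G, B.

The following is a minimal, library-free sketch of that conversion under stated assumptions. The Buffer struct, alignedStride and bgrToAlignedRgb are hypothetical names for illustration only. They are not part of Qt or OpenCV.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for an 8-bit, 3-channel image buffer (not a Qt or OpenCV type).
struct Buffer {
    int width = 0;
    int height = 0;
    int stride = 0;                  // bytes per row, may include padding
    std::vector<std::uint8_t> data;
};

// QImage-style row size: width * 3 bytes rounded up to a multiple of 4.
int alignedStride(int width) {
    int rowBytes = width * 3;
    return (rowBytes + 3) / 4 * 4;
}

// Copy a BGR buffer (OpenCV order) into a 4-byte-aligned RGB buffer (Format_RGB888 order).
// Rows are copied one at a time because source and destination strides differ.
Buffer bgrToAlignedRgb(const Buffer& src) {
    Buffer dst;
    dst.width = src.width;
    dst.height = src.height;
    dst.stride = alignedStride(src.width);
    dst.data.assign(static_cast<std::size_t>(dst.stride) * dst.height, 0);  // padding stays 0
    for (int row = 0; row < src.height; ++row) {
        const std::uint8_t* s = src.data.data() + static_cast<std::size_t>(row) * src.stride;
        std::uint8_t* d = dst.data.data() + static_cast<std::size_t>(row) * dst.stride;
        for (int col = 0; col < src.width; ++col) {
            d[3 * col + 0] = s[3 * col + 2];  // R comes from the third byte of BGR
            d[3 * col + 1] = s[3 * col + 1];  // G is unchanged
            d[3 * col + 2] = s[3 * col + 0];  // B comes from the first byte of BGR
        }
    }
    return dst;
}
```

For example, a 5-pixel-wide row needs 15 bytes but gets a 16-byte stride. In the article's code, QImage(..., mat.step, Format_RGB888) followed by rgbSwapped() does the same job internally.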

OpenCV Image Filtering and Detection: Yx_opencv_Enhance Class

The Yx_opencv_Enhance class in qt_opencv.h:

```cpp
// Image filtering and detection class
class Yx_opencv_Enhance
{
public:
    Yx_opencv_Enhance();
    ~Yx_opencv_Enhance();

    QImage Normalized(QImage src, int kernel_length);                 // simple (box) filtering
    QImage Gaussian(QImage src, int kernel_length);                   // Gaussian filtering
    QImage Median(QImage src, int kernel_length);                     // median filtering
    QImage Sobel(QImage src, int kernel_length);                      // Sobel edge detection
    QImage Laplacian(QImage src, int kernel_length);                  // Laplacian edge detection
    QImage Canny(QImage src, int kernel_length, int lowThreshold);    // Canny edge detection
    QImage HoughLine(QImage src, int threshold, double minLineLength, double maxLineGap);   // line detection
    QImage HoughCircle(QImage src, int minRadius, int maxRadius);     // circle detection

private:
    Yx_opencv_ImgChange *imgchangeClass;   // Mat/QImage conversion helper
};
```

```cpp
Yx_opencv_Enhance::Yx_opencv_Enhance()
{
    imgchangeClass = new Yx_opencv_ImgChange;
}

Yx_opencv_Enhance::~Yx_opencv_Enhance()
{
    delete imgchangeClass;   // release the helper allocated in the constructor
}

QImage Yx_opencv_Enhance::Normalized(QImage src, int kernel_length)   // simple (box) filtering
{
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    blur(srcImg, dstImg, Size(kernel_length, kernel_length), Point(-1, -1));
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Enhance::Gaussian(QImage src, int kernel_length)   // Gaussian filtering, kernel_length must be odd
{
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    GaussianBlur(srcImg, dstImg, Size(kernel_length, kernel_length), 0, 0);
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Enhance::Median(QImage src, int kernel_length)   // median filtering, kernel_length odd and > 1
{
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    medianBlur(srcImg, dstImg, kernel_length);
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Enhance::HoughLine(QImage src, int threshold, double minLineLength, double maxLineGap)   // line detection
{
    Mat srcImg, dstImg, cdstPImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    cv::Canny(srcImg, dstImg, 50, 200, 3);        // Canny edge detection
    cvtColor(dstImg, cdstPImg, COLOR_GRAY2BGR);   // colour copy of the edge map to draw on
    vector<Vec4i> linesP;
    HoughLinesP(dstImg, linesP, 1, CV_PI / 180, threshold, minLineLength, maxLineGap);   // e.g. 50, 50, 10
    for (size_t i = 0; i < linesP.size(); i++)
    {
        Vec4i l = linesP[i];
        line(cdstPImg, Point(l[0], l[1]), Point(l[2], l[3]), Scalar(0, 0, 255), 1, LINE_AA);   // red lines
    }
    return imgchangeClass->cvMat2QImage(cdstPImg);
}

QImage Yx_opencv_Enhance::HoughCircle(QImage src, int minRadius, int maxRadius)   // circle detection
{
    Mat srcImg, dstImg, gray;
    srcImg = imgchangeClass->QImage2cvMat(src);
    if (srcImg.channels() != 1)
        cvtColor(srcImg, gray, COLOR_BGR2GRAY);
    else
        gray = srcImg.clone();   // clone so the in-place blur below leaves srcImg untouched
    medianBlur(gray, gray, 5);   // median filtering removes noise and avoids false detections
    vector<Vec3f> circles;
    // param1 = 100 (upper Canny threshold), param2 = 30 (accumulator threshold)
    HoughCircles(gray, circles, HOUGH_GRADIENT, 1, gray.rows / 16, 100, 30, minRadius, maxRadius);
    dstImg = srcImg.clone();
    for (size_t i = 0; i < circles.size(); i++)
    {
        Vec3i c = circles[i];
        Point center = Point(c[0], c[1]);
        circle(dstImg, center, 1, Scalar(0, 100, 100), 3, LINE_AA);        // circle centre
        int radius = c[2];
        circle(dstImg, center, radius, Scalar(255, 0, 255), 3, LINE_AA);   // circle outline
    }
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Enhance::Sobel(QImage src, int kernel_length)   // Sobel edge detection
{
    Mat srcImg, blurred, src_gray, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    GaussianBlur(srcImg, blurred, Size(3, 3), 0, 0, BORDER_DEFAULT);   // Gaussian blur into a new Mat
    if (blurred.channels() != 1)
        cvtColor(blurred, src_gray, COLOR_BGR2GRAY);
    else
        src_gray = blurred;
    Mat grad_x, grad_y, abs_grad_x, abs_grad_y;
    cv::Sobel(src_gray, grad_x, CV_16S, 1, 0, kernel_length, 1, 0, BORDER_DEFAULT);
    cv::Sobel(src_gray, grad_y, CV_16S, 0, 1, kernel_length, 1, 0, BORDER_DEFAULT);
    convertScaleAbs(grad_x, abs_grad_x);   // absolute value, saturated to 8 bits
    convertScaleAbs(grad_y, abs_grad_y);
    addWeighted(abs_grad_x, 0.5, abs_grad_y, 0.5, 0, dstImg);   // approximate gradient magnitude
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Enhance::Laplacian(QImage src, int kernel_length)   // Laplacian edge detection
{
    Mat srcImg, blurred, src_gray, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    GaussianBlur(srcImg, blurred, Size(3, 3), 0, 0, BORDER_DEFAULT);   // Gaussian blur into a new Mat
    if (blurred.channels() != 1)
        cvtColor(blurred, src_gray, COLOR_BGR2GRAY);
    else
        src_gray = blurred;
    cv::Laplacian(src_gray, dstImg, CV_16S, kernel_length, 1, 0, BORDER_DEFAULT);   // second derivative
    convertScaleAbs(dstImg, dstImg);   // absolute value, converted to 8 bits
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Enhance::Canny(QImage src, int kernel_length, int lowThreshold)   // Canny edge detection
{
    Mat srcImg, dstImg, src_gray, detected_edges;
    srcImg = imgchangeClass->QImage2cvMat(src);
    if (srcImg.channels() != 1)
        cvtColor(srcImg, src_gray, COLOR_BGR2GRAY);
    else
        src_gray = srcImg;
    blur(src_gray, detected_edges, Size(3, 3));   // 3x3 mean filter for smoothing
    cv::Canny(detected_edges, detected_edges, lowThreshold, lowThreshold * 3, kernel_length);   // high = 3 x low
    dstImg.create(srcImg.size(), srcImg.type());
    dstImg = Scalar::all(0);
    srcImg.copyTo(dstImg, detected_edges);   // keep source pixels only where edges were found
    return imgchangeClass->cvMat2QImage(dstImg);
}
```
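Yx_opencv_Enhance::Sobel combines the two 16-bit derivatives in two steps. First, it takes their absolute values saturated to 8 bits (convertScaleAbs). Then it averages them with weights 0.5 (addWeighted).

Below is a minimal sketch of the same arithmetic for a 3x3 kernel on a plain grayscale buffer, without OpenCV and without the Gaussian pre-blur. The GrayImage type, reflect101, pixel and sobelMagnitude are hypothetical helpers. Reflect-101 border handling is assumed here because it is what BORDER_DEFAULT means in OpenCV.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical 8-bit single-channel image, stored row by row without padding.
struct GrayImage {
    int width;
    int height;
    std::vector<std::uint8_t> px;
};

// Reflect-101 border: index -1 maps to 1 and index n maps to n - 2 (enough for a 3x3 kernel).
int reflect101(int i, int n) {
    if (n == 1) return 0;
    if (i < 0) i = -i;
    if (i >= n) i = 2 * (n - 1) - i;
    return i;
}

int pixel(const GrayImage& img, int x, int y) {
    return img.px[reflect101(y, img.height) * img.width + reflect101(x, img.width)];
}

// 3x3 Sobel in x and y; each |g| saturated to 255 (like convertScaleAbs),
// then 0.5 * |gx| + 0.5 * |gy| rounded to nearest (like addWeighted).
GrayImage sobelMagnitude(const GrayImage& src) {
    GrayImage dst{src.width, src.height, std::vector<std::uint8_t>(src.px.size(), 0)};
    for (int y = 0; y < src.height; ++y) {
        for (int x = 0; x < src.width; ++x) {
            int gx = -pixel(src, x - 1, y - 1) + pixel(src, x + 1, y - 1)
                     - 2 * pixel(src, x - 1, y) + 2 * pixel(src, x + 1, y)
                     - pixel(src, x - 1, y + 1) + pixel(src, x + 1, y + 1);
            int gy = -pixel(src, x - 1, y - 1) - 2 * pixel(src, x, y - 1) - pixel(src, x + 1, y - 1)
                     + pixel(src, x - 1, y + 1) + 2 * pixel(src, x, y + 1) + pixel(src, x + 1, y + 1);
            int ax = std::min(std::abs(gx), 255);
            int ay = std::min(std::abs(gy), 255);
            long v = std::lround(0.5 * ax + 0.5 * ay);
            dst.px[y * src.width + x] = static_cast<std::uint8_t>(std::min(v, 255L));
        }
    }
    return dst;
}
```

On a vertical step edge (columns 0, 0, 100, 100), the two columns next to the step get |gx| = 400, which saturates to 255, and output 128. Flat areas output 0. This illustrates why the 16-bit intermediate (CV_16S) is needed before taking absolute values.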

OpenCV Geometric Transforms: Yx_opencv_Geom Class

The Yx_opencv_Geom class in qt_opencv.h:

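```cpp
// Image geometric transformation class
class Yx_opencv_Geom
{
public:
    Yx_opencv_Geom();
    ~Yx_opencv_Geom();

    QImage Resize(QImage src, int length, int width);   // output is length columns x width rows
    QImage Enlarge_Reduce(QImage src, int times);        // zoom in (times > 0) or out (times < 0)
    QImage Rotate(QImage src, int angle);                // rotate, enlarging the canvas to fit
    QImage Rotate_fixed(QImage src, int angle);          // rotate, keeping the original canvas size
    QImage Flip(QImage src, int flipcode);               // mirror
    QImage Lean(QImage src, int x, int y);               // shear (tilt) by x and y degrees

private:
    Yx_opencv_ImgChange *imgchangeClass;   // Mat/QImage conversion helper
};
```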

```cpp
Yx_opencv_Geom::Yx_opencv_Geom()
{
    imgchangeClass = new Yx_opencv_ImgChange;
}

Yx_opencv_Geom::~Yx_opencv_Geom()
{
    delete imgchangeClass;   // release the helper allocated in the constructor
}

QImage Yx_opencv_Geom::Resize(QImage src, int length, int width)   // change size
{
    Mat matSrc, matDst;
    matSrc = imgchangeClass->QImage2cvMat(src);
    resize(matSrc, matDst, Size(length, width), 0, 0, INTER_LINEAR);   // cv::Size is (width, height); bilinear
    return imgchangeClass->cvMat2QImage(matDst);
}

QImage Yx_opencv_Geom::Enlarge_Reduce(QImage src, int times)   // zoom
{
    Mat matSrc, matDst;
    matSrc = imgchangeClass->QImage2cvMat(src);
    if (times > 0)          // enlarge by a factor of times + 1
    {
        resize(matSrc, matDst, Size(matSrc.cols * (times + 1), matSrc.rows * (times + 1)), 0, 0, INTER_LINEAR);
        return imgchangeClass->cvMat2QImage(matDst);
    }
    else if (times < 0)     // shrink by a factor of 1 - times
    {
        resize(matSrc, matDst, Size(matSrc.cols / (1 - times), matSrc.rows / (1 - times)), 0, 0, INTER_AREA);
        return imgchangeClass->cvMat2QImage(matDst);
    }
    return src;
}

QImage Yx_opencv_Geom::Rotate(QImage src, int angle)   // rotate by any angle without cropping
{
    Mat matSrc, matDst;
    matSrc = imgchangeClass->QImage2cvMat(src);
    cv::Point2f center(matSrc.cols / 2.0f, matSrc.rows / 2.0f);
    cv::Mat rot = cv::getRotationMatrix2D(center, angle, 1);   // positive angle = counter-clockwise
    // Bounding box of the rotated image becomes the new canvas
    cv::Rect bbox = cv::RotatedRect(center, matSrc.size(), angle).boundingRect();
    // Shift the translation so the rotated image is centred in the new canvas
    rot.at<double>(0, 2) += bbox.width / 2.0 - center.x;
    rot.at<double>(1, 2) += bbox.height / 2.0 - center.y;
    cv::warpAffine(matSrc, matDst, rot, bbox.size());
    return imgchangeClass->cvMat2QImage(matDst);
}

QImage Yx_opencv_Geom::Rotate_fixed(QImage src, int angle)   // rotate (e.g. 90, 180, 270), same output size
{
    Mat matSrc, matDst, M;
    matSrc = imgchangeClass->QImage2cvMat(src);
    M = getRotationMatrix2D(Point2f(matSrc.cols / 2.0f, matSrc.rows / 2.0f), angle, 1);
    // Output keeps the source size, so non-square images are cropped at 90 and 270 degrees
    warpAffine(matSrc, matDst, M, Size(matSrc.cols, matSrc.rows));
    return imgchangeClass->cvMat2QImage(matDst);
}

QImage Yx_opencv_Geom::Flip(QImage src, int flipcode)   // mirror
{
    Mat matSrc, matDst;
    matSrc = imgchangeClass->QImage2cvMat(src);
    // flipCode == 0: vertical flip (around the x-axis)
    // flipCode > 0:  horizontal flip (around the y-axis)
    // flipCode < 0:  both, equivalent to a 180-degree rotation
    flip(matSrc, matDst, flipcode);
    return imgchangeClass->cvMat2QImage(matDst);
}

QImage Yx_opencv_Geom::Lean(QImage src, int x, int y)   // shear: x degrees horizontally, then y degrees vertically
{
    Mat matSrc, matTmp, matDst, map_x, map_y;
    matSrc = imgchangeClass->QImage2cvMat(src);
    Point2f src_point[3], tmp_point[3], x_point[3], y_point[3];
    double angleX = x / 180.0 * CV_PI;
    double angleY = y / 180.0 * CV_PI;

    // Horizontal shear: the top edge moves right by rows * tan(angleX), bottom-left corner fixed
    src_point[0] = Point2f(0, 0);
    src_point[1] = Point2f(matSrc.cols, 0);
    src_point[2] = Point2f(0, matSrc.rows);
    x_point[0] = Point2f(matSrc.rows * tan(angleX), 0);
    x_point[1] = Point2f(matSrc.cols + matSrc.rows * tan(angleX), 0);
    x_point[2] = Point2f(0, matSrc.rows);
    map_x = getAffineTransform(src_point, x_point);
    warpAffine(matSrc, matTmp, map_x, Size(matSrc.cols + matSrc.rows * tan(angleX), matSrc.rows));

    // Vertical shear: the right edge moves down by cols * tan(angleY), left edge fixed
    tmp_point[0] = Point2f(0, 0);
    tmp_point[1] = Point2f(matTmp.cols, 0);
    tmp_point[2] = Point2f(0, matTmp.rows);
    y_point[0] = Point2f(0, 0);
    y_point[1] = Point2f(matTmp.cols, matTmp.cols * tan(angleY));
    y_point[2] = Point2f(0, matTmp.rows);
    map_y = getAffineTransform(tmp_point, y_point);
    warpAffine(matTmp, matDst, map_y, Size(matTmp.cols, matTmp.rows + matTmp.cols * tan(angleY)));
    return imgchangeClass->cvMat2QImage(matDst);
}
```
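The two `rot.at<double>(..., 2) +=` lines in Rotate are what keep the rotated image from being cropped. They move the rotation centre to the middle of the enlarged bounding-box canvas.

The sketch below reproduces that arithmetic without OpenCV. rotationMatrix uses the formula OpenCV documents for getRotationMatrix2D. rotateToFit, FitRotation and applyAffine are hypothetical helpers. One difference is assumed: the bounding box here is exact, whereas RotatedRect::boundingRect() rounds it to whole pixels.

```cpp
#include <array>
#include <cmath>

// 2x3 affine matrix, row-major: [m0 m1 m2; m3 m4 m5] (same layout as getRotationMatrix2D's result).
using Affine = std::array<double, 6>;

// Documented getRotationMatrix2D formula (angle in degrees, positive = counter-clockwise):
// a = scale*cos(angle), b = scale*sin(angle)
// M = [ a  b  (1-a)*cx - b*cy ;  -b  a  b*cx + (1-a)*cy ]
Affine rotationMatrix(double cx, double cy, double angleDeg, double scale) {
    const double rad = angleDeg * std::acos(-1.0) / 180.0;
    const double a = scale * std::cos(rad);
    const double b = scale * std::sin(rad);
    return Affine{a, b, (1 - a) * cx - b * cy,
                  -b, a, b * cx + (1 - a) * cy};
}

struct FitRotation {
    Affine m;
    double width;   // canvas width that holds the whole rotated image
    double height;  // canvas height
};

// Rotate about the image centre, then shift the translation so the result
// is centred in its bounding box (the same idea as Yx_opencv_Geom::Rotate).
FitRotation rotateToFit(double w, double h, double angleDeg) {
    const double rad = angleDeg * std::acos(-1.0) / 180.0;
    const double cx = w / 2.0, cy = h / 2.0;
    FitRotation r;
    r.m = rotationMatrix(cx, cy, angleDeg, 1.0);
    r.width = std::fabs(w * std::cos(rad)) + std::fabs(h * std::sin(rad));
    r.height = std::fabs(w * std::sin(rad)) + std::fabs(h * std::cos(rad));
    r.m[2] += r.width / 2.0 - cx;
    r.m[5] += r.height / 2.0 - cy;
    return r;
}

// Map a source point (x, y) through the affine matrix.
std::array<double, 2> applyAffine(const Affine& m, double x, double y) {
    return {m[0] * x + m[1] * y + m[2], m[3] * x + m[4] * y + m[5]};
}
```

Consider a 4 x 2 image rotated by 90 degrees. The canvas becomes 2 x 4, the image centre (2, 1) lands at the canvas centre (1, 2), and the top-right corner (4, 0) lands at (0, 0). No corner falls outside the canvas.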

OpenCV Image Enhancement: Yx_opencv_Gray Class

The Yx_opencv_Gray class in qt_opencv.h:

```cpp
// Image enhancement class
class Yx_opencv_Gray
{
public:
    Yx_opencv_Gray();
    ~Yx_opencv_Gray();

    QImage Bin(QImage src, int threshold);            // binarization
    QImage Graylevel(QImage src);                     // convert to grayscale
    QImage Reverse(QImage src);                       // image inversion (negative)
    QImage Linear(QImage src, int alpha, int beta);   // linear transformation
    QImage Gamma(QImage src, int gamma);              // gamma (power-law) transformation
    QImage Log(QImage src, int c);                    // logarithmic transformation
    QImage Histeq(QImage src);                        // histogram equalization

private:
    Yx_opencv_ImgChange *imgchangeClass;   // Mat/QImage conversion helper
};
```

```cpp
Yx_opencv_Gray::Yx_opencv_Gray()
{
    imgchangeClass = new Yx_opencv_ImgChange;
}

Yx_opencv_Gray::~Yx_opencv_Gray()
{
    delete imgchangeClass;   // release the helper allocated in the constructor
}

QImage Yx_opencv_Gray::Bin(QImage src, int threshold)   // binarization
{
    Mat srcImg, dstImg, grayImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    if (srcImg.channels() != 1)
        cvtColor(srcImg, grayImg, CV_BGR2GRAY);
    else
        grayImg = srcImg.clone();   // input is already gray
    cv::threshold(grayImg, dstImg, threshold, 255, THRESH_BINARY);   // > threshold -> 255, else 0
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Gray::Graylevel(QImage src)   // grayscale image
{
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    if (srcImg.channels() != 1)
        cvtColor(srcImg, dstImg, CV_BGR2GRAY);
    else
        dstImg = srcImg.clone();
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Gray::Reverse(QImage src)   // image inversion
{
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    // XOR with 255 gives 255 - v. The Scalar applies per channel: B, G, R are inverted and
    // alpha (fourth value 0) is left unchanged; a gray image uses only the first value.
    bitwise_xor(srcImg, Scalar(255, 255, 255, 0), dstImg);
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Gray::Linear(QImage src, int alpha, int beta)   // linear transformation
{
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    srcImg.convertTo(dstImg, -1, alpha / 100.0, beta - 100);   // dst = (alpha / 100) * src + (beta - 100), saturated
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Gray::Gamma(QImage src, int gamma)   // gamma (power-law) transformation, exponent gamma / 100
{
    if (gamma < 0)
        return src;
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    Mat lookUpTable(1, 256, CV_8U);   // lookup table
    uchar* p = lookUpTable.ptr();
    for (int i = 0; i < 256; ++i)
        p[i] = saturate_cast<uchar>(pow(i / 255.0, gamma / 100.0) * 255.0);   // out = 255 * (in / 255)^(gamma / 100)
    LUT(srcImg, lookUpTable, dstImg);   // apply the table to every pixel
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Gray::Log(QImage src, int c)   // logarithmic transformation
{
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    Mat lookUpTable(1, 256, CV_8U);   // lookup table
    uchar* p = lookUpTable.ptr();
    for (int i = 0; i < 256; ++i)
        p[i] = saturate_cast<uchar>((c / 100.0) * log(1 + i / 255.0) * 255.0);   // out = (c / 100) * ln(1 + in / 255) * 255
    LUT(srcImg, lookUpTable, dstImg);   // apply the table to every pixel
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Gray::Histeq(QImage src)   // histogram equalization
{
    Mat srcImg, grayImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    if (srcImg.channels() != 1)
        cvtColor(srcImg, grayImg, CV_BGR2GRAY);   // equalizeHist needs a single-channel 8-bit image
    else
        grayImg = srcImg;
    equalizeHist(grayImg, dstImg);
    return imgchangeClass->cvMat2QImage(dstImg);
}
```
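Gamma and Log both precompute a 256-entry lookup table and then let LUT replace every pixel value v with table[v]. The formula is therefore evaluated 256 times instead of once per pixel.

The following is a minimal, library-free sketch of the same two tables and the lookup step. Lut, toByte, gammaLut, logLut and applyLut are hypothetical names. The rounding in toByte only approximates saturate_cast: OpenCV rounds exact halves to even, while std::lround rounds them away from zero.

```cpp
#include <array>
#include <cmath>
#include <cstdint>
#include <vector>

using Lut = std::array<std::uint8_t, 256>;

// Round to nearest and clamp to [0, 255], approximating saturate_cast<uchar>.
std::uint8_t toByte(double v) {
    long r = std::lround(v);
    if (r < 0) r = 0;
    if (r > 255) r = 255;
    return static_cast<std::uint8_t>(r);
}

// Same formula as Yx_opencv_Gray::Gamma: out = 255 * (in / 255)^(gamma / 100)
Lut gammaLut(int gamma) {
    Lut t{};
    for (int i = 0; i < 256; ++i)
        t[i] = toByte(std::pow(i / 255.0, gamma / 100.0) * 255.0);
    return t;
}

// Same formula as Yx_opencv_Gray::Log: out = (c / 100) * ln(1 + in / 255) * 255
Lut logLut(int c) {
    Lut t{};
    for (int i = 0; i < 256; ++i)
        t[i] = toByte((c / 100.0) * std::log(1.0 + i / 255.0) * 255.0);
    return t;
}

// What cv::LUT does for 8-bit data: replace every byte v with table[v].
void applyLut(std::vector<std::uint8_t>& pixels, const Lut& table) {
    for (auto& v : pixels)
        v = table[v];
}
```

With gamma = 100 the table is the identity. With gamma = 200, mid-gray 128 maps to 64, which darkens the image. With c = 100, the log table maps 255 to 177 (255 * ln 2 is about 176.75), so the brightest output is below 255 unless c is raised.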

OpenCV Morphology (Erosion and Dilation): Yx_opencv_Morp Class

The Yx_opencv_Morp class in qt_opencv.h:

```cpp
// Image morphology class
// elem: 0 = rectangle, 1 = cross, 2 = ellipse; kernel k gives a (2k+3) x (2k+3) structuring element
class Yx_opencv_Morp
{
public:
    Yx_opencv_Morp();
    ~Yx_opencv_Morp();

    QImage Erode(QImage src, int elem, int kernel, int times, int, int);    // erosion
    QImage Dilate(QImage src, int elem, int kernel, int times, int, int);   // dilation
    QImage Open(QImage src, int elem, int kernel, int times, int, int);     // opening
    QImage Close(QImage src, int elem, int kernel, int times, int, int);    // closing
    QImage Grad(QImage src, int elem, int kernel, int, int, int);           // morphological gradient
    QImage Tophat(QImage src, int elem, int kernel, int, int, int);         // top hat
    QImage Blackhat(QImage src, int elem, int kernel, int, int, int);       // black hat

private:
    Yx_opencv_ImgChange *imgchangeClass;   // Mat/QImage conversion helper
};
```

```cpp
Yx_opencv_Morp::Yx_opencv_Morp()
{
    imgchangeClass = new Yx_opencv_ImgChange;
}

Yx_opencv_Morp::~Yx_opencv_Morp()
{
    delete imgchangeClass;   // release the helper allocated in the constructor
}

QImage Yx_opencv_Morp::Erode(QImage src, int elem, int kernel, int times, int, int)   // erosion
{
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    int erosion_type = MORPH_RECT;
    if (elem == 1)
        erosion_type = MORPH_CROSS;
    else if (elem == 2)
        erosion_type = MORPH_ELLIPSE;
    // (2k+3) x (2k+3) element anchored at its centre (k+1, k+1)
    Mat element = getStructuringElement(erosion_type, Size(2 * kernel + 3, 2 * kernel + 3), Point(kernel + 1, kernel + 1));
    erode(srcImg, dstImg, element, Point(-1, -1), times);   // repeated `times` iterations
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Morp::Dilate(QImage src, int elem, int kernel, int times, int, int)   // dilation
{
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    int dilation_type = MORPH_RECT;
    if (elem == 1)
        dilation_type = MORPH_CROSS;
    else if (elem == 2)
        dilation_type = MORPH_ELLIPSE;
    Mat element = getStructuringElement(dilation_type, Size(2 * kernel + 3, 2 * kernel + 3), Point(kernel + 1, kernel + 1));
    dilate(srcImg, dstImg, element, Point(-1, -1), times);
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Morp::Open(QImage src, int elem, int kernel, int times, int, int)   // opening: erosion then dilation
{
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    Mat element = getStructuringElement(elem, Size(2 * kernel + 3, 2 * kernel + 3), Point(kernel + 1, kernel + 1));
    morphologyEx(srcImg, dstImg, MORPH_OPEN, element, Point(-1, -1), times);
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Morp::Close(QImage src, int elem, int kernel, int times, int, int)   // closing: dilation then erosion
{
    Mat srcImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    Mat element = getStructuringElement(elem, Size(2 * kernel + 3, 2 * kernel + 3), Point(kernel + 1, kernel + 1));
    morphologyEx(srcImg, dstImg, MORPH_CLOSE, element, Point(-1, -1), times);
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Morp::Grad(QImage src, int elem, int kernel, int, int, int)   // gradient: dilation minus erosion
{
    Mat srcImg, grayImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    Mat element = getStructuringElement(elem, Size(2 * kernel + 3, 2 * kernel + 3), Point(kernel + 1, kernel + 1));
    if (srcImg.channels() != 1)
        cvtColor(srcImg, grayImg, CV_BGR2GRAY);
    else
        grayImg = srcImg.clone();
    morphologyEx(grayImg, dstImg, MORPH_GRADIENT, element);
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Morp::Tophat(QImage src, int elem, int kernel, int, int, int)   // top hat: source minus opening
{
    Mat srcImg, grayImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    Mat element = getStructuringElement(elem, Size(2 * kernel + 3, 2 * kernel + 3), Point(kernel + 1, kernel + 1));
    if (srcImg.channels() != 1)
        cvtColor(srcImg, grayImg, CV_BGR2GRAY);
    else
        grayImg = srcImg.clone();
    morphologyEx(grayImg, dstImg, MORPH_TOPHAT, element);
    return imgchangeClass->cvMat2QImage(dstImg);
}

QImage Yx_opencv_Morp::Blackhat(QImage src, int elem, int kernel, int, int, int)   // black hat: closing minus source
{
    Mat srcImg, grayImg, dstImg;
    srcImg = imgchangeClass->QImage2cvMat(src);
    Mat element = getStructuringElement(elem, Size(2 * kernel + 3, 2 * kernel + 3), Point(kernel + 1, kernel + 1));
    if (srcImg.channels() != 1)
        cvtColor(srcImg, grayImg, CV_BGR2GRAY);
    else
        grayImg = srcImg.clone();
    morphologyEx(grayImg, dstImg, MORPH_BLACKHAT, element);
    return imgchangeClass->cvMat2QImage(dstImg);
}
```
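For a rectangular structuring element, grayscale erosion is a minimum filter: each output pixel is the smallest value in the window around it. Repeating it `times` times grows dark regions further.

Here is a minimal sketch of that idea without OpenCV, using the same (2k+3) x (2k+3) window size that Erode builds for elem == 0. GrayImage and erodeRect are hypothetical names. Skipping neighbours that fall outside the image is assumed to match the effect of OpenCV's default border value for erosion.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Hypothetical 8-bit single-channel image, stored row by row.
struct GrayImage {
    int width;
    int height;
    std::vector<std::uint8_t> px;
};

// Grayscale erosion with a (2k+3) x (2k+3) rectangle anchored at its centre:
// each output pixel is the minimum over the window, repeated `iterations` times.
GrayImage erodeRect(const GrayImage& src, int k, int iterations) {
    const int r = k + 1;  // half-width of a (2k+3)-wide window
    GrayImage cur = src;
    for (int it = 0; it < iterations; ++it) {
        GrayImage next = cur;
        for (int y = 0; y < cur.height; ++y) {
            for (int x = 0; x < cur.width; ++x) {
                std::uint8_t m = 255;
                for (int dy = -r; dy <= r; ++dy) {
                    for (int dx = -r; dx <= r; ++dx) {
                        int yy = y + dy, xx = x + dx;
                        if (yy < 0 || yy >= cur.height || xx < 0 || xx >= cur.width)
                            continue;  // out-of-image neighbours do not affect the minimum
                        m = std::min(m, cur.px[yy * cur.width + xx]);
                    }
                }
                next.px[y * cur.width + x] = m;
            }
        }
        cur = next;
    }
    return cur;
}
```

With k = 0 (a 3 x 3 window), a single black pixel in a white row spreads to its two neighbours after one iteration and to four neighbours after two. Dilation is the same loop with max in place of min.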