Originally written by binder

When binder comes up, many people will say that it is an old technology, that there are already plenty of articles analyzing it, and that there is no need to write yet another one.

I don't agree with this view. Here are my reasons:

- You need to summarize the binder knowledge you have read and learned, and this summary is essential. If you doubt it, ask yourself: without summarizing, how much of what you learned before have you already forgotten? Knowledge that is never summarized stays scattered and unsystematic.

- Even though binder is old, many people still don't understand it. You may ask what the use of understanding it is. My answer is that binder is the cornerstone of Android and appears everywhere in it (AMS, WMS, etc.). Once you know binder, you can understand Android in depth: the interaction logic between processes, between processes and system services, and so on.

- The Android system architecture is divided into four layers: app, framework, native and Linux kernel. What is the relationship between these four layers? Binder runs through all four, so once you understand it you will know how the layers work together to keep the system running.

Open questions

While learning binder I ran into a number of questions about why the designers built it this way. They are:

- Two processes (a client and a server) communicate. The client process gets a proxy for the server process (actually a handle). When is this handle generated, and by what rules?

- How does an app process establish a one-to-one correspondence with the driver layer?

- How does the client transfer data and wake up the server in the driver layer?

- How does the client process block while calling a method of the server process?

- In the driver layer, what is the relationship between binder_proc and binder_thread? What are binder_node, binder_ref, binder_nodes and binder_refs for?

- When does the app process open the driver?

- What role does the ServiceManager process play? Does the ServiceManager store the proxy binder of a system service or the real system service object?

- When an app process communicates with a system service (such as AMS), does it have to go through the ServiceManager to get the binder of that service every time?

- Does the client process communicate with the server process through the ServiceManager process?

By reading the source code and referring to articles by experts (such as gityuan and Lao Luo), I have mostly resolved these questions, and I hope to answer them in this series of articles. I also hope to analyze binder from a familiar, easy-to-understand perspective, which is why "familiar and easy to understand" appears in the title.

Of course, binder cannot be explained clearly in a single article, so this will be a series.

The Android source code referenced is version 9.0.0_r3.

The source code of the binder driver layer has to be downloaded separately from Google's official site. Do not choose the goldfish (emulator) version; choose one of the other versions.

Driver layer code: binder.c

Let's start this series with the preparation work for binder. Why start there? Because I think tracing something should begin at its root.

#Content of this article

- binder preparation

- system call

- First preparation: open binder driver

- The second preparation: mmap (memory mapping)

- The process communicates with the driver layer

- The third preparation: start the binder main thread to receive data

- When is the binder preparation called

- summary

1. binder preparation

Before a process can communicate through binder, some preparatory work is needed. Roughly:

1. open binder driver

2. mmap

3. Start the binder main thread to receive data

Before going through these preparations, let's talk about system calls.

2. System call

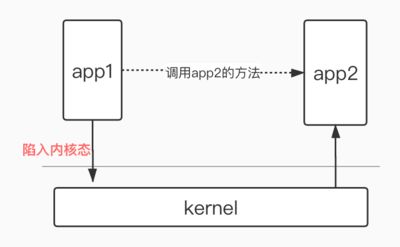

app processes or system processes belong to two different processes, and there is no way to communicate between processes. Therefore, an intermediary is needed. This intermediary is the linux kernel, as shown in the following figure:

app process or system process is also called user space, and linux kernel is called kernel space,

The process of calling kernel space program in user space is called syscall. When making system call, user space will fall into kernel state.

The simple understanding of falling into the kernel state is: the thread in user space pauses execution (in the interrupted state), switches to the kernel thread to execute the kernel program, when the kernel program is executed, switches to user space, and the thread in user space resumes execution; On the contrary, if the kernel program is blocked, the thread in user space is always interrupted.

The correspondence between binder user space calls and kernel space methods is:

open-> binder_ open, ioctl->binder_ ioctl,mmap->binder_ MMAP wait

System call is the core principle of binder.

Kernel space is shared memory
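The open/ioctl/mmap mapping above can be pictured as a table of function pointers that the kernel consults when a system call arrives for a given device. The following is a minimal user-space sketch of that idea, not kernel code. `FileOps`, `fake_binder_open`, `fake_binder_ioctl`, `fake_binder_mmap`, `kBinderOps` and `sys_call` are all hypothetical names invented for this illustration.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for a driver's table of entry points.
struct FileOps {
    std::string (*open)();
    std::string (*ioctl)(unsigned int cmd);
    std::string (*mmap)();
};

// Hypothetical driver-side handlers; each one only reports its own name.
static std::string fake_binder_open() { return "binder_open"; }
static std::string fake_binder_ioctl(unsigned int) { return "binder_ioctl"; }
static std::string fake_binder_mmap() { return "binder_mmap"; }

// The table a "driver" would register for its device.
const FileOps kBinderOps = {fake_binder_open, fake_binder_ioctl, fake_binder_mmap};

// Simulates the kernel routing a user-space call to the driver's handler.
std::string sys_call(const FileOps& ops, const std::string& name, unsigned int cmd = 0) {
    if (name == "open") return ops.open();
    if (name == "ioctl") return ops.ioctl(cmd);
    if (name == "mmap") return ops.mmap();
    return "ENOSYS";  // no handler registered for this call
}
```

Calling `sys_call(kBinderOps, "ioctl", 1)` lands in `fake_binder_ioctl`, in the same way that a user-space `ioctl` on the binder fd lands in `binder_ioctl`.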

3. The first preparation: open binder driver

Why is opening the binder driver the first preparation? Let's look at what the code does; after the analysis the answer will be clear. The corresponding class is ProcessState. It is a singleton, so a process has only one instance. The code that opens the driver is as follows:

    static int open_driver(const char *driver){
        // Key call that opens the binder driver; it ends up in binder_open in the driver layer.
        // The returned fd is very important: every later interaction with the driver needs it.
        int fd = open(driver, O_RDWR | O_CLOEXEC);
        if (fd >= 0) {
            int vers = 0;
            // Get the binder version number
            status_t result = ioctl(fd, BINDER_VERSION, &vers);
            if (result == -1) {
                ALOGE("Binder ioctl to obtain version failed: %s", strerror(errno));
                close(fd);
                fd = -1;
            }
            if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {
                ALOGE("Binder driver protocol(%d) does not match user space protocol(%d)! ioctl() return value: %d",
                      vers, BINDER_CURRENT_PROTOCOL_VERSION, result);
                close(fd);
                fd = -1;
            }
            size_t maxThreads = DEFAULT_MAX_BINDER_THREADS;
            // Tell the driver layer the maximum number of threads that can be started
            result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);
            if (result == -1) {
                ALOGE("Binder ioctl to set max threads failed: %s", strerror(errno));
            }
        } else {
            ALOGW("Opening '%s' failed: %s\n", driver, strerror(errno));
        }
        return fd;
    }

The open call returns an fd. This value is very important and is used in every subsequent communication with the driver.

The open call eventually reaches the binder_open method of the driver layer:

    static int binder_open(struct inode *nodp, struct file *filp){
        // Declare a binder_proc, then allocate and initialize it
        struct binder_proc *proc;
        // ... (code omitted)
        proc = kzalloc(sizeof(*proc), GFP_KERNEL);
        if (proc == NULL)
            return -ENOMEM;
        get_task_struct(current);
        proc->tsk = current;
        proc->vma_vm_mm = current->mm;
        INIT_LIST_HEAD(&proc->todo);
        init_waitqueue_head(&proc->wait);
        // ... (code omitted)
        hlist_add_head(&proc->proc_node, &binder_procs);
        proc->pid = current->group_leader->pid;
        INIT_LIST_HEAD(&proc->delivered_death);
        filp->private_data = proc;
        // ... (code omitted)
        return 0;
    }

Opening the binder driver does several important things:

1. In the driver layer, a binder_proc is allocated and initialized (binder_proc records a lot of information, which will be introduced later), including its todo queue and other fields.

2. The pid (process id) of the upper-layer process is recorded in binder_proc.

3. binder_proc is assigned to filp->private_data. filp is associated with the fd returned to the upper layer: filp can be found through fd, and through filp the binder_proc of the current process can be found.

4. ProcessState keeps the returned fd in memory for later communication with the driver layer.

5. The version information of the driver layer is fetched (via ioctl) and compared.

6. The driver layer is told the maximum number of binder threads the upper layer may start (via ioctl).

After the binder driver has been opened, the upper-layer process has a record in the driver layer and can communicate with it from then on (mostly through ioctl).
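The chain "fd, then filp, then private_data, then binder_proc" from the list above can be modeled in a few lines of user-space C++. This is only a sketch under simplifying assumptions: `FakeKernel`, `FakeFile` and `FakeBinderProc` are hypothetical types, and a single map stands in for the real per-process file descriptor tables.

```cpp
#include <cassert>
#include <map>
#include <memory>

// Hypothetical, heavily simplified stand-in for binder_proc.
struct FakeBinderProc {
    int pid;          // pid of the process that opened the driver
    int max_threads;  // recorded later through an ioctl-like call
};

// Hypothetical stand-in for struct file.
struct FakeFile {
    std::unique_ptr<FakeBinderProc> private_data;  // plays the role of filp->private_data
};

class FakeKernel {
public:
    // Models open(): allocate a proc record, attach it to a file, return an fd.
    int open_binder(int pid) {
        int fd = next_fd_++;
        files_[fd].private_data.reset(new FakeBinderProc{pid, 0});
        return fd;
    }

    // Models the lookup done on every later call: fd -> file -> proc.
    FakeBinderProc* proc_for(int fd) {
        auto it = files_.find(fd);
        return it == files_.end() ? nullptr : it->second.private_data.get();
    }

    // Models an ioctl that stores a per-process setting.
    bool set_max_threads(int fd, int n) {
        FakeBinderProc* proc = proc_for(fd);
        if (proc == nullptr) return false;
        proc->max_threads = n;
        return true;
    }

private:
    int next_fd_ = 3;  // 0-2 are conventionally stdin/stdout/stderr
    std::map<int, FakeFile> files_;
};
```

The point of the model is that the caller only ever holds the integer fd; everything the driver knows about the process is reached from it.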

4. The second preparation: mmap (memory mapping)

    ProcessState::ProcessState(const char *driver){
        // ... (code omitted)
        // After successfully opening the driver
        if (mDriverFD >= 0) {
            // Memory mapping
            // mmap the binder, providing a chunk of virtual address space to receive transactions.
            mVMStart = mmap(nullptr, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);
            if (mVMStart == MAP_FAILED) {
                // *sigh*
                ALOGE("Using %s failed: unable to mmap transaction memory.\n", mDriverName.c_str());
                close(mDriverFD);
                mDriverFD = -1;
                mDriverName.clear();
            }
        }
        // ... (code omitted)
    }

ProcessState opens the binder driver first and then calls mmap.

The corresponding method in the driver layer is binder_mmap.

One reason binder outperforms other inter-process communication mechanisms is that the data is copied only once during communication, and the key to copying only once is mmap (memory mapping). There are many introductions to mmap on the Internet, so it will not be covered again here.
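For readers who have not used mmap before, here is a small sketch of the general mechanism. It is not binder code. It maps an ordinary temporary file with the same `PROT_READ` and `MAP_PRIVATE` flags that appear in the constructor above, and shows that the data becomes readable through the mapped address without a `read()` call into a separate buffer. The function name `read_through_mapping` and the `/tmp` path template are assumptions made for the example, which also assumes a POSIX system with a writable `/tmp`.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>
#include <sys/mman.h>
#include <unistd.h>

// Writes `msg` into a temporary file, maps the file read-only, and returns
// the bytes seen through the mapping. Returns an empty string on any failure.
std::string read_through_mapping(const std::string& msg) {
    char path[] = "/tmp/mmap_demo_XXXXXX";
    int fd = mkstemp(path);
    if (fd < 0) return "";
    unlink(path);  // the file stays alive until fd is closed

    std::string seen;
    const size_t len = msg.size();
    // Put the data into the file through the file descriptor first.
    if (len > 0 && pwrite(fd, msg.data(), len, 0) == static_cast<ssize_t>(len)) {
        // Then map the file; no read() into a user buffer is needed.
        void* addr = mmap(nullptr, len, PROT_READ, MAP_PRIVATE, fd, 0);
        if (addr != MAP_FAILED) {
            // Copied into a std::string only so the result can be returned.
            seen.assign(static_cast<const char*>(addr), len);
            munmap(addr, len);
        }
    }
    close(fd);
    return seen;
}
```

In binder the mapped region is backed by the driver rather than by a file, but the user-space side of the call has the same shape.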

5. Communication between process and driver layer

Before looking at how the binder main thread is started and how it receives data, we need to be clear about the basics of communication between a process and the driver layer. This helps a great deal with what follows.

With socket communication, client and server can send messages to each other once the connection is established. Communication between a process and the binder driver layer is different: it is based on system calls, so only the upper-layer process can initiate it.

5.1 Sending data to the driver layer

Data is mainly passed through the ioctl method. The call path is ioctl -> binder_ioctl (the driver layer method):

ioctl(fd, cmd, data)

These are the parameters of ioctl. fd is the value returned when the binder driver was opened (the driver layer uses it to find the binder_proc of the current process). Values of cmd include BINDER_WRITE_READ, BINDER_SET_MAX_THREADS and so on, and data points to the corresponding structure, such as binder_write_read.

5.2 Data returned by the driver layer

Some calls require the driver layer to return data. For example, for a call whose cmd is BINDER_WRITE_READ, the driver layer copies the data to be returned into user space with the copy_to_user method. When binder_ioctl finishes, execution switches back to the user-space thread, which then fetches the data from the read_buffer of binder_write_read.

5.3 binder_write_read

Having covered how the upper-layer process and the driver layer communicate, why talk about binder_write_read? Because it is the most frequently used structure in binder communication and carries the data exchanged between processes. Here is a first look at its definition; the details come later.

    struct binder_write_read {
        // Fields starting with write_ relate to data passed to the driver layer
        binder_size_t write_size;
        binder_size_t write_consumed;
        // write_buffer: the data passed to the driver layer
        binder_uintptr_t write_buffer;
        // Fields starting with read_ relate to data returned by the driver layer
        binder_size_t read_size;
        binder_size_t read_consumed;
        // read_buffer: the data returned by the driver layer
        binder_uintptr_t read_buffer;
    };

How does data from the upper layer get into binder_write_read? Let's take only a brief look at the process here and leave the details for later. Most of it happens in the IPCThreadState class:

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags, int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

The writeTransactionData method first writes the key data of the inter-process interaction into a binder_transaction_data structure and then writes that binder_transaction_data to mOut. The data in mOut finally ends up in the write_buffer of binder_write_read. The method that performs this last step is:

status_t IPCThreadState::talkWithDriver(bool doReceive)

As its name suggests, talkWithDriver communicates with the driver layer. If doReceive is true, it waits for the driver layer to return data; otherwise it does not wait. The data is written into a binder_write_read, and the method finally makes the following call:

ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr)

ioctl eventually reaches the binder_ioctl method of the driver layer:

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

In binder_ioctl, because the current cmd is BINDER_WRITE_READ, the binder_ioctl_write_read method is executed:

static int binder_ioctl_write_read(struct file *filp, unsigned int cmd, unsigned long arg, struct binder_thread *thread)

In binder_ioctl_write_read, the binder_thread_write method reads the data passed down by the upper layer, and the binder_thread_read method returns data to the upper layer as the situation requires.

binder_thread_write is very important. For example, it handles the upper layer's notification that a round of communication has finished so the driver can clean up, replies to the other process, and the upper layer's notification that a binder thread has started. This brief introduction is enough for now to follow the rest of the article; the details will be explained later.
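To make the roles of the size, consumed and buffer fields more concrete, here is a toy model of one write-then-read round trip. It is a sketch, not the driver's behavior. `FakeWriteRead` copies the field layout shown earlier with assumed field types, `fake_ioctl_write_read` is a hypothetical "driver" that consumes 32-bit commands and answers each one with the command value plus 1000, and `talk` is a hypothetical caller shaped like talkWithDriver.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Simplified copy of binder_write_read (field types are assumptions).
struct FakeWriteRead {
    uint64_t write_size;
    uint64_t write_consumed;
    uintptr_t write_buffer;
    uint64_t read_size;
    uint64_t read_consumed;
    uintptr_t read_buffer;
};

// Hypothetical driver: consumes every 32-bit command in the write buffer and,
// while read space remains, stores (command + 1000) in the read buffer.
int fake_ioctl_write_read(FakeWriteRead* bwr) {
    const uint8_t* in = reinterpret_cast<const uint8_t*>(bwr->write_buffer);
    uint8_t* out = reinterpret_cast<uint8_t*>(bwr->read_buffer);
    while (bwr->write_size - bwr->write_consumed >= sizeof(int32_t)) {
        int32_t cmd;
        std::memcpy(&cmd, in + bwr->write_consumed, sizeof(cmd));
        bwr->write_consumed += sizeof(cmd);
        if (bwr->read_size - bwr->read_consumed >= sizeof(int32_t)) {
            int32_t reply = cmd + 1000;
            std::memcpy(out + bwr->read_consumed, &reply, sizeof(reply));
            bwr->read_consumed += sizeof(reply);
        }
    }
    return 0;
}

// Caller shaped like talkWithDriver(): fill the struct, call the driver, then
// use read_consumed to find out how much data came back.
std::vector<int32_t> talk(const std::vector<int32_t>& commands, bool do_receive) {
    std::vector<int32_t> reply_space(commands.size());
    FakeWriteRead bwr{};  // all fields start at zero
    bwr.write_size = commands.size() * sizeof(int32_t);
    bwr.write_buffer = reinterpret_cast<uintptr_t>(commands.data());
    if (do_receive) {
        bwr.read_size = reply_space.size() * sizeof(int32_t);
        bwr.read_buffer = reinterpret_cast<uintptr_t>(reply_space.data());
    }
    fake_ioctl_write_read(&bwr);
    reply_space.resize(bwr.read_consumed / sizeof(int32_t));
    return reply_space;
}
```

With `do_receive` set to false, `read_size` stays 0, so the fake driver consumes the commands but returns nothing, which matches the "do not wait for data" case described for talkWithDriver.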

6. The third preparation: start the binder main thread to receive data

6.1 Why start the binder main thread

Why start the binder main thread at all? Take socket communication as an example. For a socket client and server to communicate, the server must be started first and wait for the client to connect. Binder communication is likewise split into a client process and a server process. The server process has to listen for data sent by client processes, and that listening has to happen on a thread. This thread is the binder main thread; in other words, the binder main thread is a receiver.

6.2 How the binder main thread is started

Let's analyze the code. The analysis relies on what was just covered: communication between a process and the driver layer, and binder_write_read.

    void ProcessState::startThreadPool()
    {
        AutoMutex _l(mLock);
        if (!mThreadPoolStarted) {
            mThreadPoolStarted = true;
            // true means: start the binder main thread
            spawnPooledThread(true);
        }
    }

Let's look at the spawnPooledThread method

    void ProcessState::spawnPooledThread(bool isMain)
    {
        // Only runs once the thread pool has been marked as started
        if (mThreadPoolStarted) {
            // The name of the binder thread, format: binder:pid_x (x is the thread's sequence number)
            String8 name = makeBinderThreadName();
            ALOGV("Spawning new pooled thread, name=%s\n", name.string());
            // Create a new thread; isMain says whether it is the binder main thread
            sp<Thread> t = new PoolThread(isMain);
            t->run(name.string());
        }
    }

Take a look at the PoolThread class

    class PoolThread : public Thread
    {
    public:
        explicit PoolThread(bool isMain)
            : mIsMain(isMain)
        {
        }

    protected:
        virtual bool threadLoop()
        {
            // After the thread starts, hand over to IPCThreadState
            IPCThreadState::self()->joinThreadPool(mIsMain);
            return false;
        }

        const bool mIsMain;
    };

After the thread starts, it calls IPCThreadState::self()->joinThreadPool(mIsMain). Everything up to this point happened in the ProcessState class; now we switch to the IPCThreadState class.

    void IPCThreadState::joinThreadPool(bool isMain)
    {
        // The data in mOut will be sent to the driver layer.
        // BC_ENTER_LOOPER: this is the binder main thread.
        // BC_REGISTER_LOOPER: this is a binder thread started after the driver issued a command to spawn one.
        mOut.writeInt32(isMain ? BC_ENTER_LOOPER : BC_REGISTER_LOOPER);

        status_t result;
        do {
            processPendingDerefs();
            // Wait for data from the driver layer and process it; if there is none, stay in the waiting state
            // now get the next command to be processed, waiting if necessary
            result = getAndExecuteCommand();

            // ... (code omitted)

            // If the current thread is no longer needed and is not the main thread, exit directly
            // Let this thread exit the thread pool if it is no longer
            // needed and it is not the main process thread.
            if(result == TIMED_OUT && !isMain) {
                break;
            }
        } while (result != -ECONNREFUSED && result != -EBADF);

        // The binder thread is exiting, so the driver layer must be notified to do some cleanup
        mOut.writeInt32(BC_EXIT_LOOPER);
        // Passing false means: return right after notifying the driver layer, without waiting for data from it
        talkWithDriver(false);
    }

In joinThreadPool(), if isMain is true the thread is the binder main thread. It does not exit and basically lives as long as the process does (unless an error occurs). When a thread does exit, the driver layer has to be notified as well. Now look at the getAndExecuteCommand() method:

    status_t IPCThreadState::getAndExecuteCommand()
    {
        status_t result;
        int32_t cmd;

        // Send data to the driver layer and wait for data to be returned
        result = talkWithDriver();
        if (result >= NO_ERROR) {
            size_t IN = mIn.dataAvail();
            if (IN < sizeof(int32_t)) return result;
            cmd = mIn.readInt32();

            // ... (code omitted)

            // Process the cmd that was read
            result = executeCommand(cmd);

            // ... (code omitted)
        }
        return result;
    }

getAndExecuteCommand() calls talkWithDriver() to send data to the driver layer and wait for the data it returns, then calls executeCommand(cmd) to process the returned cmd. We won't discuss that processing for now. Let's look at the talkWithDriver() method:

    // The default value of doReceive is true
    status_t IPCThreadState::talkWithDriver(bool doReceive)
    {
        if (mProcess->mDriverFD <= 0) {
            return -EBADF;
        }

        // Declare a binder_write_read
        binder_write_read bwr;

        // Is the read buffer empty?
        const bool needRead = mIn.dataPosition() >= mIn.dataSize();

        // We don't want to write anything if we are still reading
        // from data left in the input buffer and the caller
        // has requested to read the next data.
        const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

        bwr.write_size = outAvail;
        bwr.write_buffer = (uintptr_t)mOut.data();

        // When doReceive && needRead is true, the call waits for data from the driver layer and blocks until it arrives
        // This is what we'll read.
        if (doReceive && needRead) {
            bwr.read_size = mIn.dataCapacity();
            // The returned data is later read from mIn
            bwr.read_buffer = (uintptr_t)mIn.data();
        } else {
            bwr.read_size = 0;
            bwr.read_buffer = 0;
        }

        // ... (code omitted)

        bwr.write_consumed = 0;
        bwr.read_consumed = 0;
        status_t err;
        do {
            // ... (code omitted)
            // Communicate with the driver layer
            if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
                err = NO_ERROR;
            else
                err = -errno;
            // ... (code omitted)
        } while (err == -EINTR);

        // ... (code omitted)

        if (err >= NO_ERROR) {
            if (bwr.write_consumed > 0) {
                if (bwr.write_consumed < mOut.dataSize())
                    mOut.remove(0, bwr.write_consumed);
                else {
                    mOut.setDataSize(0);
                    processPostWriteDerefs();
                }
            }
            // Was any data returned?
            if (bwr.read_consumed > 0) {
                mIn.setDataSize(bwr.read_consumed);
                mIn.setDataPosition(0);
            }
            // ... (code omitted)
            return NO_ERROR;
        }

        return err;
    }

talkWithDriver() eventually calls ioctl to communicate with the driver layer and sends BC_ENTER_LOOPER to it. As described in the section on communication between the process and the driver layer, the call finally arrives at the driver's binder_ioctl_write_read method, where binder_thread_write reads the data from the upper layer (not covered for now). Now look at the binder_thread_read method:

    static int binder_thread_read(struct binder_proc *proc,
                                  struct binder_thread *thread,
                                  binder_uintptr_t binder_buffer, size_t size,
                                  binder_size_t *consumed, int non_block)
    {
        // ... (code omitted)

    retry:
        // wait_for_proc_work being true means the current kernel thread has no transaction to process, so it is about to wait
        wait_for_proc_work = thread->transaction_stack == NULL &&
                             list_empty(&thread->todo);

        // ... (code omitted)

        if (wait_for_proc_work) {
            // The current kernel thread enters the waiting state until a new transaction arrives and wakes it up
            if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |
                                    BINDER_LOOPER_STATE_ENTERED))) {
                binder_user_error("%d:%d ERROR: Thread waiting for process work before calling BC_REGISTER_LOOPER or BC_ENTER_LOOPER (state %x)\n",
                                  proc->pid, thread->pid, thread->looper);
                wait_event_interruptible(binder_user_error_wait,
                                         binder_stop_on_user_error < 2);
            }
            binder_set_nice(proc->default_priority);
            if (non_block) {
                if (!binder_has_proc_work(proc, thread))
                    ret = -EAGAIN;
            } else
                ret = wait_event_freezable_exclusive(proc->wait, binder_has_proc_work(proc, thread));
        } else {
            // ... (code omitted)
        }

        // ... (code omitted)

        // Reaching this point means the thread has been woken up
        thread->looper &= ~BINDER_LOOPER_STATE_WAITING;

        if (ret)
            return ret;

        while (1) {
            // The omitted code takes a transaction off the queue and starts processing it
            // ... (code omitted)
        }

    done:
        // The omitted code tells the upper process, when conditions require it, to start a new binder thread
        // ... (code omitted)
        return 0;
    }

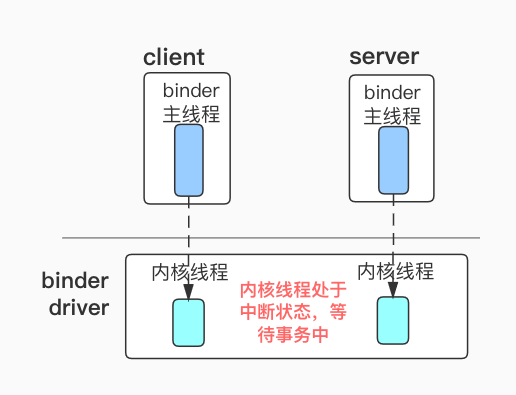

In binder_thread_read, if the current kernel thread has no transaction to process, it enters the waiting state, which in turn makes the corresponding binder thread in the upper process wait. When a transaction arrives, the kernel thread is woken up and processes it (this part will be introduced later).
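The decision at the top of binder_thread_read can be restated as a small pure function, which makes it easier to see when a thread sleeps and when it does not. The sketch below is a user-space model only: `FakeThread`, `ReadOutcome`, `next_step` and `is_looper` are hypothetical names, and the flag values are illustrative assumptions rather than definitions taken from the driver.

```cpp
#include <cassert>
#include <deque>

// Illustrative flag values; treat them as assumptions, not the driver's definitions.
enum LooperState : unsigned {
    LOOPER_REGISTERED = 0x01,
    LOOPER_ENTERED    = 0x02,
};

// Hypothetical stand-in for the parts of binder_thread used by the wait decision.
struct FakeThread {
    unsigned looper = 0;
    bool has_transaction_stack = false;
    std::deque<int> todo;  // work queued for this specific thread
};

enum class ReadOutcome { ProcessThreadWork, ProcessProcWork, WouldBlock, Sleep };

// Decides what a binder_thread_read-like function would do next.
ReadOutcome next_step(const FakeThread& t, const std::deque<int>& proc_todo, bool non_block) {
    const bool wait_for_proc_work = !t.has_transaction_stack && t.todo.empty();
    if (!wait_for_proc_work) return ReadOutcome::ProcessThreadWork;
    if (!proc_todo.empty()) return ReadOutcome::ProcessProcWork;
    // Nothing to do: fail fast in non-blocking mode, otherwise go to sleep.
    return non_block ? ReadOutcome::WouldBlock : ReadOutcome::Sleep;
}

// True once the thread has announced itself with an ENTER- or REGISTER-style command.
bool is_looper(const FakeThread& t) {
    return (t.looper & (LOOPER_REGISTERED | LOOPER_ENTERED)) != 0;
}
```

In this model the `Sleep` outcome corresponds to the wait_event_freezable_exclusive call in the excerpt above, and `WouldBlock` corresponds to returning -EAGAIN.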

That completes the basic analysis of how the binder main thread is started. To summarize:

1. The binder main thread is started in the current process, and its life cycle matches that of the process.

2. Processes are isolated from one another, so even once the binder main thread has started, it cannot receive data from other processes without the cooperation of the binder driver. Therefore, after it starts, the binder main thread notifies the driver layer; the corresponding kernel thread in the driver layer enters the waiting state, and as a result the binder main thread in the upper layer waits as well.

3. When the kernel thread in the driver layer receives a transaction, it returns the relevant data to the corresponding binder thread in the upper process.

4. Binder threads are divided into the main thread and ordinary threads. Ordinary threads are generally destroyed once they have finished their work.
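The difference between the main thread and ordinary threads in points 1 and 4 comes down to the exit condition of the joinThreadPool loop. The following sketch replays a scripted list of results through a loop with the same exit rules. `LoopResult` and `run_loop` are hypothetical names, and the three result values merely stand in for the status codes used in the real code.

```cpp
#include <cassert>
#include <vector>

// Hypothetical result codes standing in for status_t values.
enum class LoopResult { Ok, TimedOut, ConnectionRefused };

// Replays `script` through a joinThreadPool-like loop and returns how many
// results were handled before the thread left the loop.
int run_loop(bool is_main, const std::vector<LoopResult>& script) {
    int handled = 0;
    for (LoopResult r : script) {
        ++handled;
        // An idle thread leaves the pool only if it is not the main thread.
        if (r == LoopResult::TimedOut && !is_main) break;
        // A fatal error ends the loop for every kind of thread.
        if (r == LoopResult::ConnectionRefused) break;
    }
    return handled;
}
```

Given the script Ok, TimedOut, Ok, ConnectionRefused, an ordinary thread stops at the timeout after two results, while the main thread keeps going until the fatal error on the fourth.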

7. When will the binder preparation be called

The binder preparation itself has now been covered, but one very important question remains: when is it called? The call is made in the AppRuntime class in app_main.cpp:

    virtual void onZygoteInit()
    {
        sp<ProcessState> proc = ProcessState::self();
        ALOGV("App process: starting thread pool.\n");
        proc->startThreadPool();
    }

In onZygoteInit, ProcessState::self() triggers the ProcessState constructor. During construction the binder driver is opened and mmap is performed. Once this work is done,

proc->startThreadPool() is called, which starts the binder main thread to receive data.

The onZygoteInit method of AppRuntime is called right after an app process has been forked from zygote. Therefore every app process carries out the binder preparation as soon as it has been forked from zygote.
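The ordering described here (open the driver and mmap once in the constructor of a per-process singleton, then start the main thread once) can be sketched as follows. `FakeProcessState` is a hypothetical class that records step names instead of touching a real driver; it only illustrates the "once per process" shape of the sequence.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical model of a per-process singleton that prepares binder once.
class FakeProcessState {
public:
    static FakeProcessState& self() {
        static FakeProcessState instance;  // constructed on first use only
        return instance;
    }

    // Starts the "main thread" at most once, however often it is called.
    void startThreadPool() {
        if (!started_) {
            started_ = true;
            steps_.push_back("start main thread");
        }
    }

    const std::vector<std::string>& steps() const { return steps_; }

private:
    FakeProcessState() {
        steps_.push_back("open driver");  // stands in for open_driver()
        steps_.push_back("mmap");         // stands in for the mmap() call
    }

    bool started_ = false;
    std::vector<std::string> steps_;
};
```

Calling `FakeProcessState::self().startThreadPool()` repeatedly still leaves exactly three recorded steps, in the order open driver, mmap, start main thread.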

8. Summary

Let me use a picture to summarize the content of this article

After opening the binder driver, the upper process can communicate with the driver layer. After starting the binder main thread, the current process can act as the server side of binder inter-process communication and receive data from client processes.

Whether a process is a server or a client, it has a corresponding kernel thread in the driver layer that sits in the waiting state, waiting for transactions. Why does a client process need such a waiting kernel thread too (this will be described later in binder_transfer)? Because during communication, as soon as the data the client passes to another process contains a binder (a BBinder, not a BpBinder proxy), the client process itself can become the provider of a binder service at any time.