Authors: Yin min, KubeSphere Ambassador, head of Hangzhou station of KubeSphere community user committee

1, KubeKey introduction

KubeKey (hereinafter referred to as KK) is an open source lightweight tool for deploying Kubernetes clusters. It provides a flexible, fast and convenient way to install only Kubernetes/K3s, or both Kubernetes/K3s and KubeSphere, as well as other cloud native plug-ins. In addition, it is also an effective tool for expanding and upgrading clusters.

KubeKey v2.0.0 adds the concepts of manifest and artifact, which give users a way to deploy Kubernetes clusters offline. Previously, users had to prepare the deployment tool, the image tar packages, and other related binaries themselves, and each user needed different versions of Kubernetes and of the images. Now, with KK, users only need to define what the offline cluster environment requires in a manifest file and then export an artifact file from that manifest to complete the preparation. For the offline deployment itself, KK and the artifact are all you need to quickly and simply deploy the image registry and the Kubernetes cluster in your environment.

2, Deployment preparation

1. List of resources

| Name | Quantity | Purpose |
|---|---|---|
| KubeSphere 3.2.1 | 1 | Source cluster used for packaging |
| Server | 2 | Offline environment deployment |

2. Download and extract KK v2.0.0-rc.3 on the source cluster

Note: KK is updated frequently, so check the Releases page on GitHub for the latest version.

$ wget https://github.com/kubesphere/kubekey/releases/download/v2.0.0-rc.3/kubekey-v2.0.0-rc.3-linux-amd64.tar.gz

$ tar -zxvf kubekey-v2.0.0-rc.3-linux-amd64.tar.gz
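
Because the release changes often, it can help to build the download URL from a version variable rather than hard-coding it. The following is only a sketch: `kk_release_url` is a hypothetical helper, and it assumes that other releases follow the same asset naming pattern as the v2.0.0-rc.3 tarball above, which should be checked against the Releases page.

```bash
# Hypothetical helper: print the download URL of a KubeKey release tarball.
# Assumption: the asset name follows the v2.0.0-rc.3 pattern shown above.
kk_release_url() {
  # $1 = version (for example v2.0.0-rc.3)
  # $2 = operating system, defaults to linux
  # $3 = architecture, defaults to amd64
  printf 'https://github.com/kubesphere/kubekey/releases/download/%s/kubekey-%s-%s-%s.tar.gz\n' \
    "$1" "$1" "${2:-linux}" "${3:-amd64}"
}
```

For example, `wget "$(kk_release_url v2.0.0-rc.3)"` fetches the same file as the command above.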

3. Create a manifest using KK in the source cluster

Note: the manifest is a text file that describes the current Kubernetes cluster and defines what the artifact must contain. There are currently two ways to generate this file:

- Create and write the file manually, based on the template.
- Use the kk command to generate the file from an existing cluster.

$ ./kk create manifest

4. Modify the manifest configuration in the source cluster

Notes:

1. The repository section specifies the ISO package that contains the operating system dependencies of the servers. You can either put the download address in url, or download the ISO package in advance, put its local path in localPath, and delete the url item.
2. Enable the harbor and docker-compose items so that KK can build its own Harbor registry and push the images to it.
3. By default, the image list in the generated manifest pulls from docker.io. It is recommended to pull from the QingCloud registry instead, as in the example below.
4. You can modify manifest-sample.yaml to suit your situation. Its contents are used later to export the artifact file.
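
Switching the image list to a mirror amounts to replacing the registry and namespace part of every image reference while keeping the final `name:tag`. The sketch below illustrates that mapping. `to_mirror_image` and `mirror_images` are hypothetical helpers, the `docker.io/...` inputs in the usage note are illustrative, and the sketch assumes the mirror publishes every image under the same name and tag, which is not guaranteed.

```bash
# Mirror prefix taken from the example manifest in this article.
MIRROR_PREFIX="registry.cn-beijing.aliyuncs.com/kubesphereio"

# Hypothetical helper: keep only the trailing "name:tag" of an image
# reference and prepend the mirror prefix.
to_mirror_image() {
  printf '%s/%s\n' "$MIRROR_PREFIX" "${1##*/}"
}

# Hypothetical helper: rewrite image references read from stdin,
# one reference per line; empty lines are skipped.
mirror_images() {
  while IFS= read -r image; do
    if [ -n "$image" ]; then
      to_mirror_image "$image"
    fi
  done
}
```

For instance, `to_mirror_image docker.io/calico/cni:v3.20.0` prints `registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.20.0`, which matches the corresponding entry in the example image list.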

$ vim manifest-sample.yaml

---
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Manifest
metadata:
  name: sample
spec:
  arches:
  - amd64
  operatingSystems:
  - arch: amd64
    type: linux
    id: centos
    version: "7"
    repository:
      iso:
        localPath: /mnt/sdb/kk2.0-rc/kubekey/centos-7-amd64-rpms.iso
        url: # You can also fill in the download address here
  kubernetesDistributions:
  - type: kubernetes
    version: v1.21.5
  components:
    helm:
      version: v3.6.3
    cni:
      version: v0.9.1
    etcd:
      version: v3.4.13
    ## For now, if your cluster container runtime is containerd, KubeKey will add a docker 20.10.8 container runtime in the below list.
    ## The reason is KubeKey creates a cluster with containerd by installing a docker first and making kubelet connect the socket file of containerd which docker contained.
    containerRuntimes:
    - type: docker
      version: 20.10.8
    crictl:
      version: v1.22.0
    ##
    # docker-registry:
    #   version: "2"
    harbor:
      version: v2.4.1
    docker-compose:
      version: v2.2.2
  images:
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.22.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.22.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.22.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.22.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.21.5
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.21.5
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.21.5
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.21.5
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.20.10
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.20.10
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.20.10
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.20.10
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.19.9
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.19.9
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.19.9
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.19.9
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.5
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.4.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.20.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.20.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.20.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.20.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/typha:v3.20.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/flannel:v0.12.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/provisioner-localpv:2.10.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/linux-utils:2.10.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy:2.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nfs-subdir-external-provisioner:v4.0.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-installer:v3.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-apiserver:v3.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-console:v3.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-controller-manager:v3.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.21.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.20.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubefed:v0.8.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tower:v0.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/minio:RELEASE.2019-08-07T01-59-21Z
  - registry.cn-beijing.aliyuncs.com/kubesphereio/mc:RELEASE.2019-08-07T23-14-43Z
  - registry.cn-beijing.aliyuncs.com/kubesphereio/snapshot-controller:v4.0.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nginx-ingress-controller:v0.48.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/defaultbackend-amd64:1.4
  - registry.cn-beijing.aliyuncs.com/kubesphereio/metrics-server:v0.4.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/redis:5.0.14-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy:2.0.25-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/alpine:3.14
  - registry.cn-beijing.aliyuncs.com/kubesphereio/openldap:1.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/netshoot:v1.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/cloudcore:v1.7.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/edge-watcher:v0.1.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/edge-watcher-agent:v0.1.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/gatekeeper:v3.5.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/openpitrix-jobs:v3.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/devops-apiserver:v3.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/devops-controller:v3.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/devops-tools:v3.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins:v3.2.0-2.249.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jnlp-slave:3.27-1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-base:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-nodejs:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-python:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-base:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-nodejs:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-python:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/s2ioperator:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/s2irun:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/s2i-binary:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java11-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java11-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java8-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java8-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-11-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-8-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-8-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-11-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-8-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-6-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-4-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-36-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-35-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-34-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-27-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/configmap-reload:v0.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus:v2.26.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-config-reloader:v0.43.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-operator:v0.43.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.8.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-state-metrics:v1.9.7
  - registry.cn-beijing.aliyuncs.com/kubesphereio/node-exporter:v0.18.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-prometheus-adapter-amd64:v0.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/alertmanager:v0.21.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/thanos:v0.18.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/grafana:7.4.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.8.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager-operator:v1.4.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager:v1.4.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-tenant-sidecar:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/elasticsearch-curator:v5.7.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/elasticsearch-oss:6.7.0-1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/fluentbit-operator:v0.11.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/docker:19.03
  - registry.cn-beijing.aliyuncs.com/kubesphereio/fluent-bit:v1.8.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/log-sidecar-injector:1.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/filebeat:6.7.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-operator:v0.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-exporter:v0.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-ruler:v0.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-auditing-operator:v0.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-auditing-webhook:v0.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pilot:1.11.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/proxyv2:1.11.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-operator:1.27
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-agent:1.27
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-collector:1.27
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-query:1.27
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-es-index-cleaner:1.27
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kiali-operator:v1.38.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kiali:v1.38
  - registry.cn-beijing.aliyuncs.com/kubesphereio/busybox:1.31.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nginx:1.14-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/wget:1.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/hello:plain-text
  - registry.cn-beijing.aliyuncs.com/kubesphereio/wordpress:4.8-apache
  - registry.cn-beijing.aliyuncs.com/kubesphereio/hpa-example:latest
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java:openjdk-8-jre-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/fluentd:v1.4.2-2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/perl:latest
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-productpage-v1:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-reviews-v1:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-reviews-v2:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-details-v1:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-ratings-v1:1.16.3
  registry:
    auths: {}

5. Export artifact from source cluster

Notes:

An artifact is a tgz package that contains the image tar packages and related binaries exported according to the specified manifest file. You can pass an artifact to the kk commands that initialize the image registry, create a cluster, add nodes, and upgrade a cluster. kk automatically unpacks the artifact and uses the unpacked files directly when it runs the command.

Caution:

1. The export command downloads the corresponding binaries from the Internet, so make sure the network connection is working.
2. The export command pulls the images one by one according to the image list in the manifest file. Make sure that containerd, or Docker 18.09 or later, is installed on the node where kk runs.
3. kk parses the image names in the image list. If the registry in an image name requires authentication, configure it in the registry.auths field of the manifest file.
4. If the artifact to be exported must contain operating system dependency files (for example conntrack and chrony), configure the download address of the corresponding ISO file in the repository.iso.url field of the operatingSystems element.
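
The Docker version requirement can be checked before starting a long export. The following is a minimal sketch rather than part of kk: `version_ge` and `check_docker_for_export` are hypothetical helpers, the comparison relies on `sort -V` from GNU coreutils, and only the Docker case is covered (a containerd-only node would need a different check).

```bash
# Succeed when version $1 is greater than or equal to version $2.
# Assumption: `sort -V` (version sort, GNU coreutils) is available.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical pre-flight check: succeed only if a Docker daemon is
# reachable and its server version is at least 18.09.
check_docker_for_export() {
  installed="$(docker version --format '{{.Server.Version}}' 2>/dev/null)" || return 1
  version_ge "$installed" "18.09"
}
```

A possible use is `check_docker_for_export || echo "Docker 18.09 or later is required"`, run on the node where kk exports the artifact.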

$ export KKZONE=cn

$ ./kk artifact export -m manifest-sample.yaml -o kubesphere.tar.gz

Note: the default package name is kubekey-artifact.tar.gz. You can choose a different name with the -o parameter.

3, Offline cluster installation environment

1. Download KK in offline environment

$ wget https://github.com/kubesphere/kubekey/releases/download/v2.0.0-rc.3/kubekey-v2.0.0-rc.3-linux-amd64.tar.gz

2. Create offline cluster configuration file

$ ./kk create config --with-kubesphere v3.2.1 --with-kubernetes v1.21.5 -f config-sample.yaml

3. Modify the configuration file

$ vim config-sample.yaml

Notes:

- Modify the node information to match the actual offline environment.
- The node on which the registry is deployed must be specified, because the KK deployment uses the self-built Harbor registry.
- The registry type must be set to harbor. If it is not set to harbor, a Docker registry is installed by default.

apiVersion: kubekey.kubesphere.io/v1alpha2

kind: Cluster

metadata:

name: sample

spec:

hosts:

- {name: master, address: 192.168.149.133, internalAddress: 192.168.149.133, user: root, password: "Supaur@2022"}

- {name: node1, address: 192.168.149.134, internalAddress: 192.168.149.134, user: root, password: "Supaur@2022"}

roleGroups:

etcd:

- master

control-plane:

- master

worker:

- node1

# If you need to use kk to automatically deploy the image warehouse, please set the host group (it is recommended that the warehouse be deployed separately from the cluster to reduce mutual influence)

registry:

- node1

controlPlaneEndpoint:

## Internal loadbalancer for apiservers

# internalLoadbalancer: haproxy

domain: lb.kubesphere.local

address: ""

port: 6443

kubernetes:

version: v1.21.5

clusterName: cluster.local

network:

plugin: calico

kubePodsCIDR: 10.233.64.0/18

kubeServiceCIDR: 10.233.0.0/18

## multus support. https://github.com/k8snetworkplumbingwg/multus-cni

multusCNI:

enabled: false

registry:

# If you need to use kk to deploy harbor, you can set this parameter to harbor. If you do not set this parameter and need to use kk to create a container image warehouse, docker registry will be used by default.

type: harbor

# If you use the harbor deployed by kk or other warehouses that need to log in, you can set the auths of the corresponding warehouse. If you use the docker registry warehouse created by kk, you do not need to configure this parameter.

# Note: if using kk to deploy harbor, please set this parameter after harbor is started.

#auths:

# "dockerhub.kubekey.local":

# username: admin

# password: Harbor12345

plainHTTP: false

# Set the private warehouse used in cluster deployment

privateRegistry: "dockerhub.kubekey.local"

namespaceOverride: ""

registryMirrors: []

insecureRegistries: []

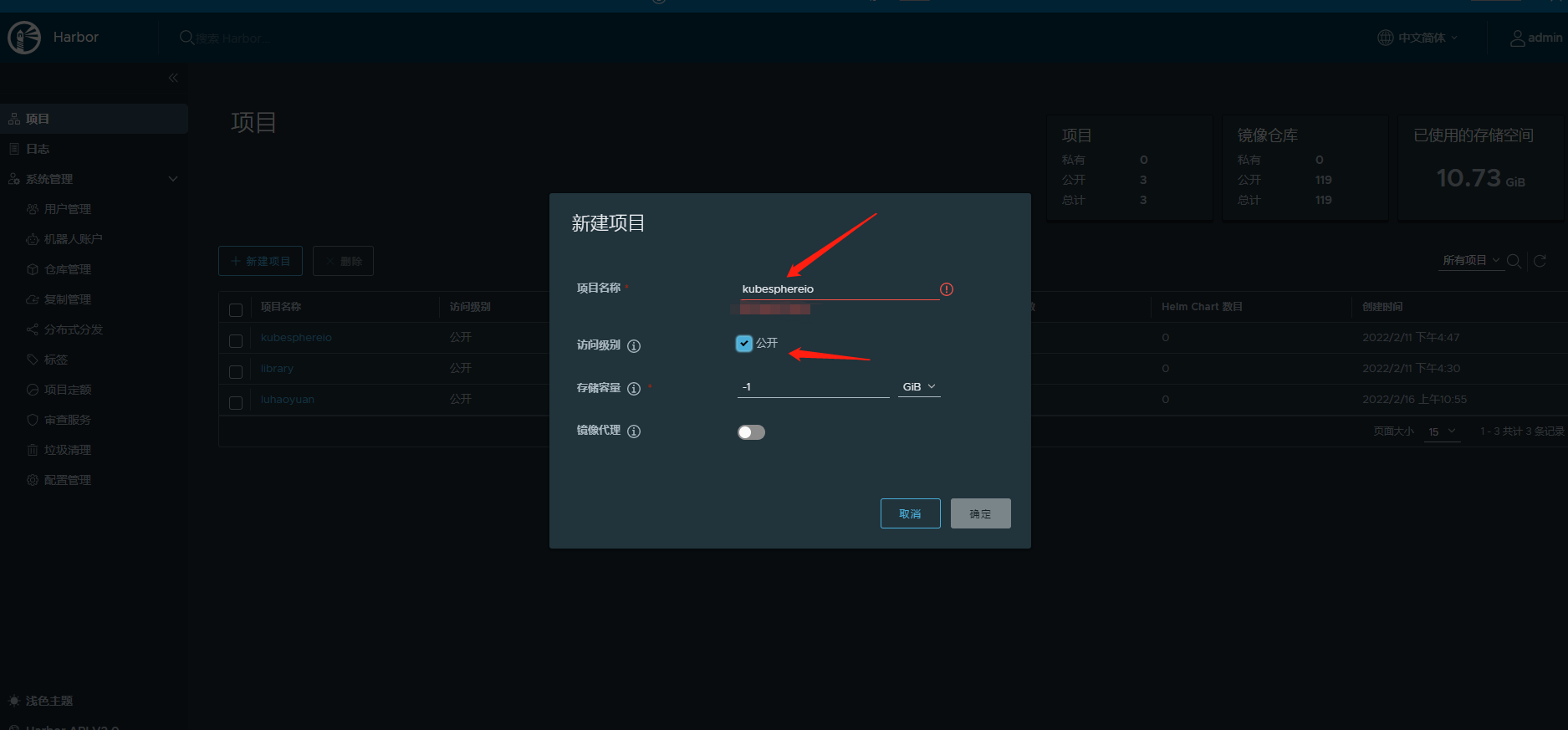

addons: []4. Method 1: execute script to create harbor project

4.1 Download the script that initializes the Harbor registry

$ curl -O https://raw.githubusercontent.com/kubesphere/ks-installer/master/scripts/create_project_harbor.sh

4.2 Modify the script configuration

Notes:

- Set the value of url to https://dockerhub.kubekey.local.
- Specify the registry project names, and keep them consistent with the project names in the image list.
- Append -k to the curl command at the end of the script.
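
To keep the project names consistent with the image list, they can be read from the image references themselves. The following sketch assumes that every reference has the form `registry/project/name:tag`, as in the example manifest. `harbor_projects_from_images` is a hypothetical helper, not part of kk or of the Harbor script.

```bash
# Hypothetical helper: print the unique project names found in image
# references read from stdin, one reference per line.
# Assumption: each reference looks like registry/project/name:tag;
# references with fewer than three path components are ignored.
harbor_projects_from_images() {
  awk -F/ 'NF >= 3 { print $2 }' | sort -u
}
```

For the example manifest, `grep 'aliyuncs.com' manifest-sample.yaml | harbor_projects_from_images` would be expected to print only `kubesphereio`, which is the project listed in the script alongside Harbor's default `library` project.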

$ vim create_project_harbor.sh

```bash
#!/usr/bin/env bash

# Copyright 2018 The KubeSphere Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

url="https://dockerhub.kubekey.local"  # set url to https://dockerhub.kubekey.local
user="admin"
passwd="Harbor12345"

# Project names must match the project names in the image list.
harbor_projects=(library
    kubesphereio
)

for project in "${harbor_projects[@]}"; do
    echo "creating $project"
    # -k is appended at the end of the curl command
    curl -u "${user}:${passwd}" -X POST -H "Content-Type: application/json" "${url}/api/v2.0/projects" -d "{ \"project_name\": \"${project}\", \"public\": true}" -k
done
```

```bash
$ chmod +x create_project_harbor.sh

$ ./create_project_harbor.sh
```

4.3 Method 2: log in to the Harbor registry and create the projects manually

5. Install the image registry with KK

Notes:

1. config-sample.yaml is the cluster configuration file for the offline environment.
2. kubesphere.tar.gz is the artifact packaged on the source cluster.
3. The Harbor installation files are in /opt/harbor. Go to this directory if you need to operate Harbor.

$ ./kk init registry -f config-sample.yaml -a kubesphere.tar.gz

6. Modify the cluster configuration file again

Notes:

1. Add the auths configuration for dockerhub.kubekey.local, with its account and password.
2. Set privateRegistry to dockerhub.kubekey.local.
3. Set namespaceOverride to kubesphereio (the project newly created in the registry).

$ vim config-sample.yaml

...
  registry:
    type: harbor
    auths:
      "dockerhub.kubekey.local":
        username: admin
        password: Harbor12345
    plainHTTP: false
    privateRegistry: "dockerhub.kubekey.local"
    namespaceOverride: "kubesphereio"
    registryMirrors: []
    insecureRegistries: []
  addons: []

7. Install the KubeSphere cluster

Notes:

1. config-sample.yaml is the cluster configuration file for the offline environment.
2. kubesphere.tar.gz is the artifact packaged on the source cluster.
3. Specify the Kubernetes version and the KubeSphere version.
4. --with-packages must be added, otherwise installation of the ISO dependencies fails.

$ ./kk create cluster -f config-sample.yaml -a kubesphere.tar.gz --with-kubernetes v1.21.5 --with-kubesphere v3.2.1 --with-packages

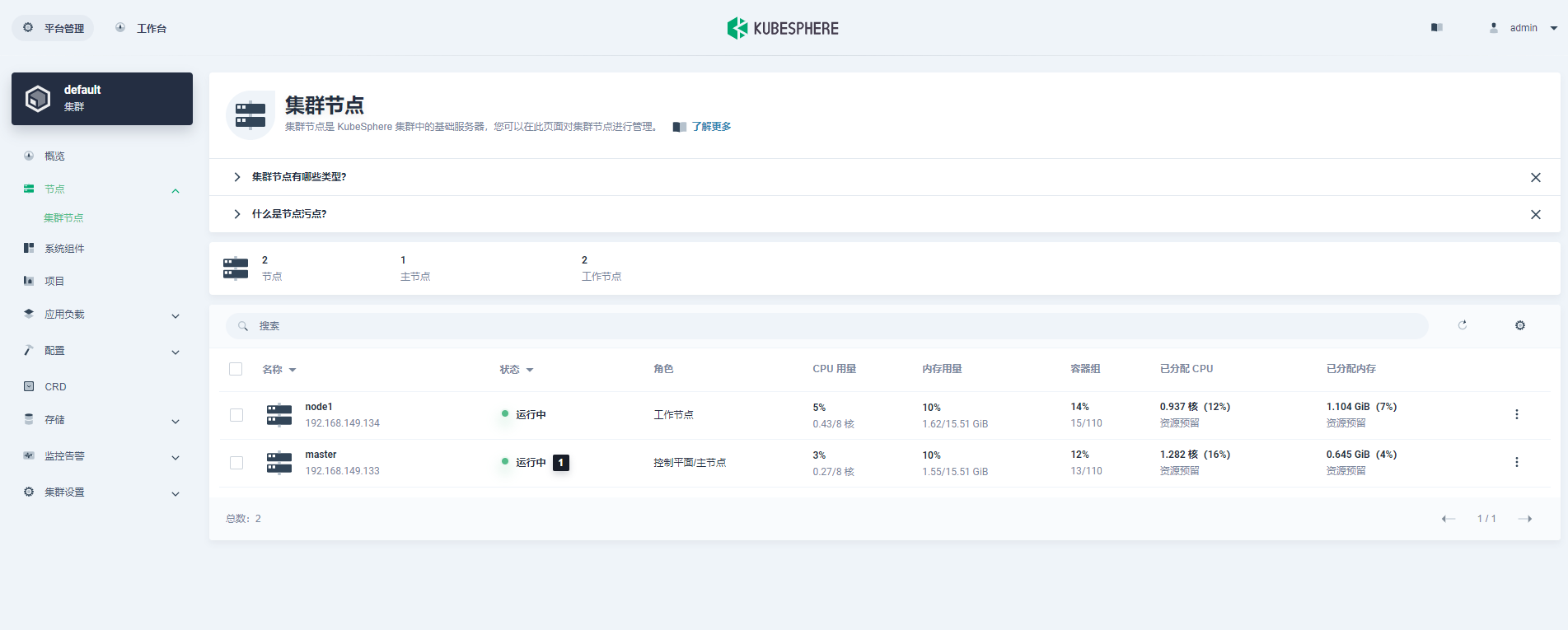

8. View cluster status

$ kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

**************************************************
#####################################################
###              Welcome to KubeSphere!           ###
#####################################################

Console: http://192.168.149.133:30880
Account: admin
Password: P@88w0rd

NOTES:
  1. After you log into the console, please check the monitoring status of service components in the "Cluster Management". If any service is not ready, please wait patiently until all components are up and running.
  2. Please change the default password after login.

#####################################################
https://kubesphere.io             2022-02-28 23:30:06
#####################################################

9. Log in to the KubeSphere console

4, Ending

This tutorial uses KK 2.0.0 as the deployment tool to deploy a KubeSphere cluster in an offline environment. KK also supports deploying Kubernetes on its own. I hope KK helps you deliver offline environments at lightning speed. If you have ideas or suggestions, you can submit an issue in the KubeKey repository to help solve the problem.
