What is fast power algorithm

First of all, let's look at an ACM programming problem. This problem is the problem with serial number 2035 in Hangzhou Electric OJ. Students who have not done this problem can do it together( Click here Transmission), the title is as follows:

Problem Description:

At first glance, this topic will not be difficult. The topic is just one line, and the idea is also very easy. The algorithm of exponentiation should be connected when learning programming language. It can be solved by writing a simple cycle.

/**

* Ordinary exponentiation function

* @param base base number

* @param power index

* @return The integer represented by the last 3 digits of the power result

*/

long long normalPower(long long base,long long power){

long long result=1;

for(int i=1;i<=power;i++){

result=result*base;

}

return result%1000;

}Isn't this problem solved in minutes? Next, let's write a main function to test:

int main(){

long long base,power;

cin>>base>>power;

cout<<"base="<<base<<" power="<<power<<" "<<normalPower(base,power)<<endl;

return 0;

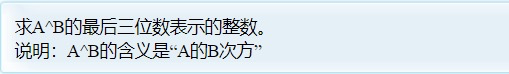

}Then, let's happily find out what the integer represented by the last three digits of the result of 2 ^ 100 is! The output results are as follows:

Why is the answer 0? There is no mistake~

Don't worry, let's think about it again. This problem is actually very interesting. The problem requires you to output the last three of the results. Why don't you output the results directly? Is it just to make the problem more difficult? Of course not. We learned "index explosion" in junior high school. Let's review the concept of "index":

Exponent: in power a, where a is called base number , n is called index , the result is called power.

f(x)=a^x. with the increase of x unit length, f(x) will increase explosively.

A piece of paper is folded in half once, and the thickness becomes twice the original. Then fold it in half for the second time, and it becomes 4 times of the original 2 to the power of 2. By analogy, assuming that the thickness of the paper is 0.1mm, after folding in half for 24 times, the length exceeds 1km; Fold in half 39 times to 55000 kilometers, exceeding the length of the earth's equator; Fold in half 42 times, reaching 440000 kilometers, exceeding the distance from the earth to the moon; Fold in half 51 times, reaching 2.2 billion kilometers, exceeding the distance from the earth to the sun; Folded 82 times, 51113 light-years, exceeding the length of the Galactic radius.

Therefore, if the topic asks you to find the 100th power of 2, it seems that the largest long lnog type in our programming language can not carry such a large value, so the topic will not require you to output the result, because the result may be so large that there is no type to carry. Therefore, we will find out why the above result is 0 because overflow has occurred.

So why does the question require an integer represented by the last three digits of the output result? Some students may ask: it's easy to find the integer represented by the last three digits of a number. As long as you use this result to "modulo" and let it modulo 1000, the number you get is the integer represented by the last three digits of the number( For example, the integer represented by the last three digits of 12345 is 12345% (1000 = 345). However, you can't find the result. How can I do the "modulo" operation? Aren't you fooling around?

Don't worry, let's first understand the algorithm of "modulo" operation: (specific proof, interested students can ask Du Niang)

(a + b) % p = (a % p + b % p) % p (1) (a - b) % p = (a % p - b % p ) % p (2) (a * b) % p = (a % p * b % p) % p (3)

Among them, we only need to pay attention to the "3" rule: (a * b)% P = (a% p * B% P)% p. if we carefully study this algorithm, we will find that the modular result of the continuous product of multiple factors is equal to the modular result of the product after each factor is modular. That is, if we ask:

(a*b*c)%d=(a%d*b%d*c%d)%d;

Therefore, with the help of this law, we can achieve the same effect only by performing the "modulo" operation in advance at each step of the cyclic product, rather than waiting until the end to "modulo" the result directly.

So our code can look like this:

/**

* Ordinary exponentiation function

* @param base base number

* @param power index

* @return The integer represented by the last 3 digits of the power result

*/

long long normalPower(long long base,long long power){

long long result=1;

for(int i=1;i<=power;i++){

result=result*base;

result=result%1000;

}

return result%1000;

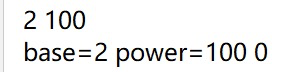

}Let's test again. Can we output the results? Let's still ask what the last three digits of 2 ^ 100 are:

This time we got the perfect result we wanted. The last three integer digits of the power result of 2 ^ 100 are 376. We submit the following code to OJ to see if it can pass:

#include <iostream>

#include <cmath>

using namespace std;

/**

* Ordinary exponentiation function

* @param base base number

* @param power index

* @return The integer represented by the last 3 digits of the power result

*/

long long normalPower(long long base, long long power) {

long long result = 1;

for (int i = 1; i <= power; i++) {

result = result * base;

result = result % 1000;

}

return result % 1000;

}

int main() {

long long base, power;

while (true) {

cin >> base >> power;

if (base == 0 && power == 0) break;

cout << normalPower(base, power) << endl;

}

return 0;

}

The final result is successful acceptance.

Think again

Although this power seeking method is very useful and submitted to OJ and accepted directly, let's consider the time complexity of this algorithm. Suppose we find the 100th power of 2, then 100 cycles will be executed. If we analyze this algorithm, we will find that the time complexity of this algorithm is O(N), where N is exponential. It's OK to find a small result. What if we ask for the 1000000000 power of 2? This program may run for a long time. How long will it last? Let's test it. The test code is as follows:

#include <iostream>

#include <cmath>

#include <time.h>

using namespace std;

/**

* Ordinary exponentiation function

* @param base base number

* @param power index

* @return The integer represented by the last 3 digits of the power result

*/

long long normalPower(long long base, long long power) {

long long result = 1;

for (int i = 1; i <= power; i++) {

result = result * base;

result = result % 1000;

}

return result % 1000;

}

int main() {

clock_t start, finish;

//clock_t is the number of CPU clock timing units

long long base, power;

cin >> base >> power;

start = clock();

//The clock() function returns the number of CPU clock timing units at this time

cout << normalPower(base, power) << endl;

finish = clock();

//The clock() function returns the number of CPU clock timing units at this time

cout << "the time cost is" << double(finish - start) / CLOCKS_PER_SEC;

//The difference between finish and start is the number of CPU clock units spent by the program running, and then divided by the number of CPU clock units per second, that is, the time spent by the program

return 0;

}

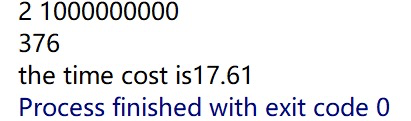

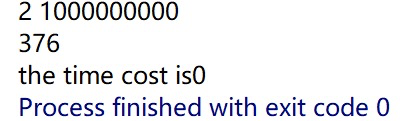

The results are shown in the figure:

We found that although the result was successful, it took nearly 18 seconds to find the final answer. Of course, this efficiency is very low, let alone the actual production application. So is there any good way to optimize it? Next is our topic: fast power algorithm.

Preliminary introduction to fast power algorithm

The fast power algorithm can help us calculate the power with a very large exponent. The reason why the traditional power algorithm has a very high time complexity (O (exponent n)) is that when the exponent n is very large, the number of loop operations to be performed is also very large. Therefore, the core idea of our fast power algorithm is to divide the exponent into two halves at each step, and square the corresponding base. This can not only reduce the very large index, but also reduce the number of cycles to be executed, but the final result will not change. Let's start with a simple example:

3^10=3*3*3*3*3*3*3*3*3*3 //Try to make the index smaller. The index here is 10 3^10=(3*3)*(3*3)*(3*3)*(3*3)*(3*3) 3^10=(3*3)^5 3^10=9^5 //At this time, the index is reduced by half from 10 to 5, and the base becomes the original square. It originally needs to perform 10 cycles to find 3 ^ 10, but only 5 cycles to find 9 ^ 5, but 3 ^ 10 is equal to 9 ^ 5. We use one operation (square operation with the base) to reduce half of the original cycle, especially when the power is very large, for example, 2 ^ 10000 = 4 ^ 5000, The base number only makes a small square operation, and the index changes from 10000 to 5000, reducing 5000 cyclic operations. //Now our problem is how to change the index 5 into half of the original. 5 is an odd number and half of 5 is 2.5. However, we know that the index cannot be a decimal, so we can't execute 5 / 2 directly. However, there is another way to express 9 ^ 5 9^5=(9^4)*(9^1) //At this time, we draw out a base to the power of 9 ^ 1, which is 9 ^ 1. We move this 9 ^ 1 separately, and the remaining 9 ^ 4 can be reduced by half, and the base can be squared 9^5=(81^2)*(9^1) //Reduce the index by half and square the base 9^5=(6561^1)*(9^1) //At this time, we find that the index becomes an odd number 1 again. According to the above operation method of odd index, we should extract the first power of a base, which is 6561 ^ 1. We move the 6561 ^ 1 separately, but the index becomes 0, which means that we can't "shrink the index" any more. 9^5=(6561^0)*(9^1)*(6561^1)=1*(9^1)*(6561^1)=(9^1)*(6561^1)=9*6561=59049 We can find that the final result is 9*6561,And how did 9 come into being? When the index is an odd number of 5, the base number is 9. How did 6561 come into being? When the index is odd 1, the base number is 6561. So we can find a rule: the final power result is actually the product of all the bases when the exponent is odd in the change process.

Next, let's demonstrate the above algorithm with code:

long long fastPower(long long base, long long power) {

long long result = 1;

while (power > 0) {

if (power % 2 == 0) {

//If the exponent is even

power = power / 2;//Reduce the index to half

base = base * base % 1000;//The base increases to the original square

} else {

//If the exponent is odd

power = power - 1;//Subtract 1 from the index to make it an even number

result = result * base % 1000;//At this time, remember to collect the power of the base separated when the index is odd

power = power / 2;//At this time, the index is even and you can continue the operation

base = base * base % 1000;

}

}

return result;

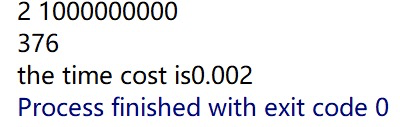

}Let's test the efficiency of the fast power algorithm and ordinary power algorithm at this time. Let's still find the 1000000000 power of 2 and see how much time it will take:

It's incredible that it took only 0.002 seconds to get the result, and the result is 376. However, the ordinary algorithm took nearly 18 seconds to get the final result.

Re optimization of pressing performance

Although the efficiency of the fast power algorithm above has been very high, we can still optimize it again. It seems that the above code can be further simplified. For example, there are still repetitive codes in if and else code blocks:

power = power / 2; base = base * base % 1000;

and

power = power - 1;//Subtract 1 from the index to make it an even number power = power / 2;

Can be compressed

power = power / 2;

Because power is an integer, for example, when power is an odd number 5, power-1 = 4 and power / 2 = 2; And if we use power/2=5/2=2 directly. The results obtained in the integer operation are the same, so our code can be compressed as follows:

long long fastPower(long long base, long long power) {

long long result = 1;

while (power > 0) {

if (power % 2 == 1) {

result = result * base % 1000;

}

power = power / 2;

base = (base * base) % 1000;

}

return result;

}Next, let's test the performance after optimization, which is still to the power of 1000000000 of 2:

The result was still correct 376, but the time cost was reduced from 0.002 to 0.001.

Ultimate optimization

In C language, power%2==1 can be replaced by faster "bit operation", such as power & 1. Because if power is an even number, the last bit of its binary representation must be 0; If power is an odd number, the last bit of its binary representation must be 1. And them respectively with the binary of 1 to get the last digit of the power binary. If it is 0, it is even, and if it is 1, it is odd. For example, if 5 is an odd number, then 5 & 1 = 1; If 6 is an even number, then 6 & 1 = 0; Therefore, the judgment of odd and even numbers can be replaced by "bit operation".

Similarly, for power=power/2, it can also be replaced by faster "bit operation". As long as we move the binary representation of power by 1 bit to the right, it will become half of the original.

Finally, our code can be optimized using bit operations as follows:

long long fastPower(long long base, long long power) {

long long result = 1;

while (power > 0) {

if (power & 1) {//This is equivalent to if(power%2==1)

result = result * base % 1000;

}

power >>= 1;//Here it is equivalent to power=power/2

base = (base * base) % 1000;

}

return result;

}We still test the 1000000000 power of 2 to see how the performance of the final optimized code is:

It's terrible. The time cost is close to 0 seconds. We compressed it from the first 18 seconds to close to 0 seconds. We really lament the power of the algorithm! If the same two companies adopt different algorithms, the user experience will be very different, which makes us feel the power of the algorithm.

Original link: https://blog.csdn.net/qq_19782019/article/details/85621386

(I think it's pretty good. Reprint it)