I have been learning ffmpeg for some time. Because there is no one around me I can ask for advice, I mostly search the Internet whenever I run into a problem, and by now I can just about get my tasks done. This post is meant to summarize what I have learned, deepen my understanding, and make later review easier.

In my projects, ffmpeg is mainly used for the following:

1. The host computer (a PC client developed with Qt) pulls the camera's video stream and displays it.

2. Saving the video stream (so far only H.264 encoding works; H.265 has not succeeded yet and I have not figured it out).

3. Adding a timestamp (a time watermark is added once per second, mainly because of efficiency and font issues).

4. Video playback (the basic functions of a video player are implemented).

5. The host computer pulls the camera stream, but after decoding it has to draw some intelligent-recognition information on the picture (in the current project, the trajectory of objects thrown from a height). For this, only the ffmpeg decoder is used; the decoded data is then drawn on with opencv and sent to the Qt client for display.

These projects involved many detailed problems, all of which could be solved. I want to thank the blogs of Lei Xiaohua and yuntianzhiding, especially Lei Xiaohua's, for helping me get started from scratch and complete the basic tasks. He truly deserves to be called a great master!

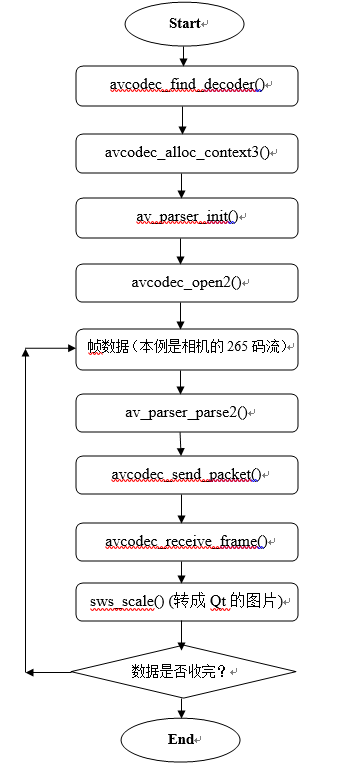

This post covers the case where only the ffmpeg decoder is used (point 5 above). The decoding process is shown in the figure.

ffmpeg functions used:

avcodec_find_decoder(): find the decoder.

avcodec_alloc_context3(): allocate memory for AVCodecContext.

avcodec_open2(): open the decoder.

av_parser_init(): initialize AVCodecParserContext.

av_parser_parse2(): parse the input data to obtain one packet.

avcodec_send_packet() + avcodec_receive_frame(): decode one frame of data (Lei Xiaohua's example uses avcodec_decode_video2(), which is deprecated in newer ffmpeg versions and has to be replaced by this pair).

sws_scale(): pixel format conversion, which converts the decoded frame data into RGB data that can be wrapped in a Qt image.
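Because avcodec_send_packet() and avcodec_receive_frame() are decoupled, one packet can yield zero, one, or several frames, so the receive call is usually made in a loop until the decoder asks for more input. The following is a minimal sketch of that control flow only. To keep it compilable without the ffmpeg headers, the decoder is passed in as callables; `drainDecoder`, `kAgain`, and `kEof` are hypothetical names, with the two constants standing in for AVERROR(EAGAIN) and AVERROR_EOF.

```cpp
#include <cassert>
#include <functional>

// Placeholder values standing in for AVERROR(EAGAIN) and AVERROR_EOF.
// Real code should use the macros from <libavutil/error.h>.
constexpr int kAgain = -11;
constexpr int kEof = -541478725;

// Sends one packet, then receives frames until the decoder needs more input.
//   send()    stands in for avcodec_send_packet(ctx, pkt)
//   receive() stands in for avcodec_receive_frame(ctx, frame)
//   onFrame() is called once for every decoded frame
// Returns the number of frames produced, or a negative error code.
int drainDecoder(const std::function<int()>& send,
                 const std::function<int()>& receive,
                 const std::function<void()>& onFrame)
{
    assert(send && receive && onFrame);

    int ret = send();
    if (ret < 0)
        return ret;            // the packet was rejected

    int frames = 0;
    for (;;) {
        ret = receive();
        if (ret == kAgain || ret == kEof)
            return frames;     // nothing more to read for now
        if (ret < 0)
            return ret;        // genuine decoding error
        onFrame();             // convert or display the frame here
        ++frames;
    }
}
```

In a real decode loop, `send` would wrap avcodec_send_packet(), `receive` would wrap avcodec_receive_frame(), and `onFrame` would do the sws_scale() conversion.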

Lei Xiaohua's related blog post: https://blog.csdn.net/leixiaohua1020/article/details/42181571

Key code of the decoding part:

Workflow of the whole decoding software: the network camera sends, over a socket, a video stream that carries detection information about objects thrown from a height. After the socket data is received, the H.265 data is separated from it and passed to the decoder. After decoding, the frame is converted into a Qt image and the detection information (for example, the trajectory) is drawn on it.

Code:
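How the H.265 data is separated from the socket payload depends on the camera's own protocol, which is not shown here. Once the raw byte stream is available, av_parser_parse2() finds the frame boundaries in it. As a rough illustration of what the parser has to look for, the sketch below scans a buffer for 00 00 01 start codes and reports the H.265 NAL unit type after each one. It assumes an Annex B byte stream, and `findNalTypes` is a hypothetical helper, not an ffmpeg function.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Scans an Annex B byte stream for start codes (00 00 01, optionally
// preceded by one more 00) and returns the H.265 NAL unit type that
// follows each of them. In H.265 the type occupies bits 1..6 of the
// first NAL header byte.
std::vector<int> findNalTypes(const std::uint8_t* data, std::size_t size)
{
    assert(data != nullptr || size == 0);

    std::vector<int> types;
    for (std::size_t i = 0; i + 3 < size; ++i) {
        if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
            types.push_back((data[i + 3] >> 1) & 0x3F);
            i += 2; // skip the start code; the loop increment skips the last byte
        }
    }
    return types;
}
```

For a typical stream start this yields 32 (VPS), 33 (SPS), and 34 (PPS), followed by the first picture.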

//Initializes the packet, frames, decoder and parser

bool DecodeThread::initVideoObjects()

{

m_avPacket = av_packet_alloc();

//av_packet_alloc() already returns an initialized packet, so the deprecated av_init_packet() is not needed

m_avFrameInput = av_frame_alloc();

m_avFramePicture = av_frame_alloc();

m_pCodec = avcodec_find_decoder(m_CodecId);

if (!m_pCodec)

{

CDiagnosis::GetInstance()->printMsg(QString::fromLocal8Bit("Codec not found."));

return false;

}

m_avCodecCtx = avcodec_alloc_context3(m_pCodec);

if (!m_avCodecCtx)

{

CDiagnosis::GetInstance()->printMsg(QString::fromLocal8Bit("Could not allocate video codec context."));

return false;

}

m_pCodecParserCtx = av_parser_init(m_CodecId);

if (!m_pCodecParserCtx)

{

CDiagnosis::GetInstance()->printMsg(QString::fromLocal8Bit("Could not allocate video parser context."));

return false;

}

if (avcodec_open2(m_avCodecCtx, m_pCodec, NULL) < 0)

{

CDiagnosis::GetInstance()->printMsg(QString::fromLocal8Bit("Could not open codec."));

return false;

}

m_bFfmpegInited = true;

return true;

}

//Initializes the image buffer and the scaler context used for display in Qt

bool DecodeThread::initImageObjects()

{

AVPixelFormat srcFormat = AV_PIX_FMT_YUV420P;

AVPixelFormat dstFormat = AV_PIX_FMT_RGB32;

srcFormat = m_avCodecCtx->pix_fmt;

int byte = av_image_get_buffer_size(AV_PIX_FMT_RGB32, m_nVideoWidth, m_nVideoHeight, 1);

m_pPicBuffer = (uint8_t*)av_malloc(byte * sizeof(uint8_t));

//Bind the buffer to m_avFramePicture so that it can hold one converted frame

av_image_fill_arrays(m_avFramePicture->data, m_avFramePicture->linesize, m_pPicBuffer, dstFormat, m_nVideoWidth, m_nVideoHeight, 1);

m_pSwsContext = sws_getContext(m_nVideoWidth, m_nVideoHeight, srcFormat, m_nVideoWidth, m_nVideoHeight, dstFormat, SWS_FAST_BILINEAR, NULL, NULL, NULL);

return true;

}
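sws_scale() hides the colour conversion itself. For intuition only, the sketch below converts a single pixel from YUV to RGB with the common BT.601 limited-range coefficients. This is an assumption made for illustration: the coefficients libswscale actually applies depend on the colour space and range of the stream, and `Rgb`, `clampByte`, and `yuvToRgb` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

struct Rgb { std::uint8_t r, g, b; };

// Rounds a value and clamps it to the 0..255 range of one colour channel.
static std::uint8_t clampByte(double v)
{
    return static_cast<std::uint8_t>(std::max(0L, std::min(255L, std::lround(v))));
}

// Converts one BT.601 limited-range pixel (Y in 16..235, U and V in 16..240).
Rgb yuvToRgb(int y, int u, int v)
{
    assert(y >= 0 && y <= 255 && u >= 0 && u <= 255 && v >= 0 && v <= 255);

    const double c = 1.164 * (y - 16); // luma, stretched to full range
    const double d = u - 128;          // blue-difference chroma
    const double e = v - 128;          // red-difference chroma
    return { clampByte(c + 1.596 * e),
             clampByte(c - 0.392 * d - 0.813 * e),
             clampByte(c + 2.017 * d) };
}
```

Running this for every pixel would be far slower than sws_scale(), which is why the conversion is left to libswscale in the code above.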

//Main decoding function (thread body)

void DecodeThread::decodeData()

{

QString szTime = QTime::currentTime().toString();

QString szMsg = szTime.append(QString::fromLocal8Bit(" :Thread started successfully"));

CDiagnosis::GetInstance()->printMsg(szMsg);

int first_time = 1;

while (!m_bStop)

{

if (!m_bIsInit)//init according to this flag

{

if (m_bIsFirstData)

continue;

bool bInitSuccess = initVideoObjects();

if (!bInitSuccess)

{

continue;

}

m_bIsInit = true;

continue;

}

m_pDataMutex->lock();

if (m_qDataArray.size() <= 0)

{

m_pDataMutex->unlock();//release the lock before sleeping so the receiving thread is not blocked

CUtilityMethod::Sleep(200);

continue;

}

QByteArray data = m_qDataArray.dequeue();

m_pDataMutex->unlock();

int nDataSize = data.size();

if (nDataSize <= 0)

{

CDiagnosis::GetInstance()->printMsg(QString::fromLocal8Bit("The data length is not greater than zero; waiting for the next packet"));

CUtilityMethod::Sleep(200);

continue;

//break;

}

while (nDataSize > 0)

{

//unsigned char pData[nBufferSize] = { 0 };

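const int nBufferSize = nDataSize + AV_INPUT_BUFFER_PADDING_SIZE;//the parser expects padding after the input data

unsigned char* pData = new unsigned char[nBufferSize]();//value-initialized, so the padding bytes are zero

memcpy_s(pData, nBufferSize, data.constData(), nDataSize);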

int nLength = av_parser_parse2(m_pCodecParserCtx, m_avCodecCtx, &m_avPacket->data, &m_avPacket->size, pData, nDataSize, AV_NOPTS_VALUE, AV_NOPTS_VALUE, AV_NOPTS_VALUE);

nDataSize -= nLength;

data.remove(0, nLength);

if (m_avPacket->size == 0)

{

delete[] pData;

continue;

}

switch (m_pCodecParserCtx->pict_type)

{

case AV_PICTURE_TYPE_I: /*CDiagnosis::GetInstance()->printMsg(QString::fromLocal8Bit("Received I frame"));*/ break;

case AV_PICTURE_TYPE_P: /*CDiagnosis::GetInstance()->printMsg(QString::fromLocal8Bit("Received P frame"));*/ break;

case AV_PICTURE_TYPE_B: CDiagnosis::GetInstance()->printMsg(QString::fromLocal8Bit("received B frame")); break;

default: CDiagnosis::GetInstance()->printMsg(QString::fromLocal8Bit("Unknown frame received")); break;

}

avcodec_send_packet(m_avCodecCtx, m_avPacket);

int ret = avcodec_receive_frame(m_avCodecCtx, m_avFrameInput);

if (ret < 0)

{

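if (ret == AVERROR(EAGAIN))//the decoder needs more input; the raw value -11 is platform-specific

{

delete[] pData;

continue;

}

CDiagnosis::GetInstance()->printMsg(QString::fromLocal8Bit("Decoding error"));

delete[] pData;//do not leak the input buffer on the error path

return;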

}

else

{

if (first_time)

{

m_nVideoWidth = m_avCodecCtx->width;

m_nVideoHeight = m_avCodecCtx->height;

initImageObjects();

first_time = 0;

}

sws_scale(m_pSwsContext, (const uint8_t* const*)m_avFrameInput->data, m_avFrameInput->linesize, 0, m_nVideoHeight, m_avFramePicture->data, m_avFramePicture->linesize);

if (m_qDrawData.size() <= 0)

{

delete[] pData;

continue;

}

m_pHeadMutex->lock();

output_str* pTemp = m_qDrawData.dequeue();

m_pHeadMutex->unlock();

QImage image(m_avFramePicture->data[0], m_nVideoWidth, m_nVideoHeight, QImage::Format_RGB32);

if (pTemp->data_size <= 0)

{

emit receiveImage(image.copy());//deep copy: image only wraps m_pPicBuffer, which the next frame overwrites

}

else

{

cv::Mat matImage = cv::Mat(image.height(), image.width(), CV_8UC4, (void*)image.constBits(), image.bytesPerLine());

cv::Mat newImage = show(matImage, *pTemp);

const uchar* pSrc = (const uchar*)newImage.data;

QImage ShowImage(pSrc, newImage.cols, newImage.rows, newImage.step, QImage::Format_RGB32);//Format_ARGB32

if (!ShowImage.isNull())

{

emit receiveImage(ShowImage.copy());//deep copy: ShowImage only wraps newImage's pixels, which are released below

}

matImage.release();

newImage.release();

}

delete pTemp;

}

delete[] pData;

}

//_CrtDumpMemoryLeaks();

av_packet_unref(m_avPacket);//reset the packet for the next iteration; free it with av_packet_free() only during cleanup

}

freeObjects();

emit stopSignal();

}
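av_parser_parse2() assumes that the input buffer is followed by AV_INPUT_BUFFER_PADDING_SIZE extra bytes, which should be zeroed. The decode loop also has to free its temporary buffer on every exit path. Both points can be handled by a small helper that returns a std::vector, sketched below. `makePaddedBuffer` and `kPaddingSize` are hypothetical names, and the value 64 is only an assumed stand-in for the ffmpeg macro.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed stand-in for AV_INPUT_BUFFER_PADDING_SIZE. Real code should use
// the macro from <libavcodec/avcodec.h> instead of a hard-coded value.
constexpr std::size_t kPaddingSize = 64;

// Copies `size` bytes from `src` into a vector and appends zeroed padding.
// The vector releases its memory automatically on every exit path, so no
// delete[] is needed before each continue or return.
std::vector<std::uint8_t> makePaddedBuffer(const std::uint8_t* src, std::size_t size)
{
    assert(src != nullptr || size == 0);

    std::vector<std::uint8_t> buf(size + kPaddingSize, 0);
    std::copy(src, src + size, buf.begin());
    return buf;
}
```

The parser would then receive `buf.data()` together with the original size, that is, the size without the padding.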