Background

I searched the internet for information on using FFmpeg to publish H.264 data held in memory directly to RTMP, and found very little. I recently implemented this, so I am recording it here.

Problem Description

There are many examples online of audio and video remuxing (no encoding or decoding, just repackaging the streams into another container), but they all take files as input. My requirement is to read an H.264 bitstream one frame at a time from memory and either save it as FLV or publish it over RTMP (RTMP carries audio and video in FLV format).

Implementation

1. Validating with a demo program

Because the API differs between old and new versions of FFmpeg, I used the latest version. I first tested with FFmpeg's remuxing.c example, adapted to read an IPC (IP camera) RTSP stream and either save it as FLV or publish it to RTMP. In testing, the saved FLV file was well-formed. The RTMP stream played normally in a player and also in flv.js (my nginx is built with nginx-http-flv-module).

The demo code is pasted below:

```cpp
extern "C" {
#include <libavformat/avformat.h>
}
#include <cstdio>

int main(void)
{
    const AVOutputFormat *ofmt = NULL;
    AVFormatContext *ifmt_ctx = NULL, *ofmt_ctx = NULL;
    AVPacket pkt;
    const char *in_filename, *out_filename;
    int ret, i;
    int stream_index = 0;
    int *stream_mapping = NULL;
    int stream_mapping_size = 0;

    // remuxing.c reads these from argv[1] and argv[2]; here they are hard-coded.
    in_filename = "rtsp://admin:admin@172.16.28.253:554/h264/ch1/main/av_stream?videoCodecType=H.264";
    out_filename = "rtmp://localhost:1985/live/mystream";
    //out_filename = "demo.flv";

    if ((ret = avformat_open_input(&ifmt_ctx, in_filename, NULL, NULL)) < 0) {
        fprintf(stderr, "Could not open input file '%s'\n", in_filename);
        goto end;
    }
    if ((ret = avformat_find_stream_info(ifmt_ctx, NULL)) < 0) {
        fprintf(stderr, "Failed to retrieve input stream information\n");
        goto end;
    }
    av_dump_format(ifmt_ctx, 0, in_filename, 0);

    // Request the FLV muxer explicitly: an rtmp:// URL has no extension to guess from.
    avformat_alloc_output_context2(&ofmt_ctx, NULL, "flv", out_filename);
    if (!ofmt_ctx) {
        fprintf(stderr, "Could not create output context\n");
        ret = AVERROR_UNKNOWN;
        goto end;
    }
    ofmt = ofmt_ctx->oformat;

    stream_mapping_size = ifmt_ctx->nb_streams;
    stream_mapping = (int *)av_calloc(stream_mapping_size, sizeof(*stream_mapping));
    if (!stream_mapping) {
        ret = AVERROR(ENOMEM);
        goto end;
    }

    for (i = 0; i < (int)ifmt_ctx->nb_streams; i++) {
        AVStream *in_stream = ifmt_ctx->streams[i];
        AVCodecParameters *in_codecpar = in_stream->codecpar;
        AVStream *out_stream;

        // Keep only audio, video and subtitle streams.
        if (in_codecpar->codec_type != AVMEDIA_TYPE_AUDIO &&
            in_codecpar->codec_type != AVMEDIA_TYPE_VIDEO &&
            in_codecpar->codec_type != AVMEDIA_TYPE_SUBTITLE) {
            stream_mapping[i] = -1;
            continue;
        }
        stream_mapping[i] = stream_index++;

        out_stream = avformat_new_stream(ofmt_ctx, NULL);
        if (!out_stream) {
            fprintf(stderr, "Failed allocating output stream\n");
            ret = AVERROR_UNKNOWN;
            goto end;
        }
        // This also copies extradata (for H.264: SPS/PPS), from which the FLV
        // muxer writes the AVC sequence header.
        ret = avcodec_parameters_copy(out_stream->codecpar, in_codecpar);
        if (ret < 0) {
            fprintf(stderr, "Failed to copy codec parameters\n");
            goto end;
        }
        out_stream->codecpar->codec_tag = 0;
    }
    av_dump_format(ofmt_ctx, 0, out_filename, 1);

    if (!(ofmt->flags & AVFMT_NOFILE)) {
        ret = avio_open(&ofmt_ctx->pb, out_filename, AVIO_FLAG_WRITE);
        if (ret < 0) {
            fprintf(stderr, "Could not open output file '%s'\n", out_filename);
            goto end;
        }
    }

    ret = avformat_write_header(ofmt_ctx, NULL);
    if (ret < 0) {
        fprintf(stderr, "Error occurred when opening output file\n");
        goto end;
    }

    while (1) {
        AVStream *in_stream, *out_stream;

        ret = av_read_frame(ifmt_ctx, &pkt);
        if (ret < 0)
            break;

        if (pkt.stream_index >= stream_mapping_size ||
            stream_mapping[pkt.stream_index] < 0) {
            av_packet_unref(&pkt);
            continue;
        }
        in_stream = ifmt_ctx->streams[pkt.stream_index];
        pkt.stream_index = stream_mapping[pkt.stream_index];
        out_stream = ofmt_ctx->streams[pkt.stream_index];

        /* copy packet: convert timestamps from the input to the output time base */
        pkt.pts = av_rescale_q_rnd(pkt.pts, in_stream->time_base, out_stream->time_base,
                                   (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
        pkt.dts = av_rescale_q_rnd(pkt.dts, in_stream->time_base, out_stream->time_base,
                                   (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
        pkt.duration = av_rescale_q(pkt.duration, in_stream->time_base, out_stream->time_base);
        pkt.pos = -1;

        ret = av_interleaved_write_frame(ofmt_ctx, &pkt);
        if (ret < 0) {
            fprintf(stderr, "Error muxing packet\n");
            break;
        }
        av_packet_unref(&pkt);
    }
    av_write_trailer(ofmt_ctx);

end:
    avformat_close_input(&ifmt_ctx);
    /* close output (check oformat via ofmt_ctx: ofmt may still be NULL here) */
    if (ofmt_ctx && !(ofmt_ctx->oformat->flags & AVFMT_NOFILE))
        avio_closep(&ofmt_ctx->pb);
    avformat_free_context(ofmt_ctx);
    av_freep(&stream_mapping);

    if (ret < 0 && ret != AVERROR_EOF) {
        fprintf(stderr, "Error occurred\n");
        return 1;
    }
    return 0;
}
```
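In the loop above, av_rescale_q_rnd() converts each packet's timestamps from the input stream's time base to the output's. For an RTSP H.264 input, the stream time base is typically 1/90000 (the RTP video clock). FFmpeg's FLV muxer uses 1/1000 (milliseconds). So one 25 fps frame interval (3600 ticks) becomes 40 ms.

The sketch below reproduces that arithmetic in plain C++ to make the conversion concrete. `rescale_near` and `Rational` are hypothetical names, not FFmpeg API. The sketch assumes non-negative timestamps small enough not to overflow 64 bits; FFmpeg's real implementation handles the overflow case.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for AVRational: a time base of num/den seconds per tick.
struct Rational { int64_t num; int64_t den; };

// Converts ts ticks of `from` into ticks of `to`, rounding to nearest with
// halves away from zero (the behaviour AV_ROUND_NEAR_INF requests).
// Assumes ts >= 0 and that ts * from.num * to.den fits in int64_t.
int64_t rescale_near(int64_t ts, Rational from, Rational to) {
    int64_t numer = ts * from.num * to.den;
    int64_t denom = from.den * to.num;
    return (numer + denom / 2) / denom;
}
```

For example, `rescale_near(3600, Rational{1, 90000}, Rational{1, 1000})` yields 40: one 25 fps frame interval expressed in FLV milliseconds.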

2. Publishing in-memory H.264 to RTMP

Here is the interface code:

```cpp
extern "C" {
#include <libavutil/timestamp.h>
#include <libavformat/avformat.h>
}
#include <cstdint>
#include <cstdio>
#include <cstring>

const AVOutputFormat *ofmt = NULL;
AVFormatContext *ofmt_ctx = NULL;
//const char *out_filename = "new.flv";
const char *out_filename = "rtmp://localhost:1985/live/mystream";
int stream_index = 0;
int waitI = 0, rtmpisinit = 0;
int ptsInc = 0;

// Returns the number of bytes before the first SEI NAL unit (00 00 00 01 06),
// i.e. the length of the SPS+PPS prefix of a key frame, or 0 if no SEI is found.
// Assumes the camera emits SPS, PPS, SEI, IDR in that order.
int GetSpsPpsFromH264(uint8_t *buf, int len)
{
    int i;
    for (i = 0; i + 4 < len; i++) {  // stop early so buf[i + 4] stays in bounds
        if (buf[i] == 0x00 && buf[i + 1] == 0x00 && buf[i + 2] == 0x00 &&
            buf[i + 3] == 0x01 && buf[i + 4] == 0x06)
            break;
    }
    if (i + 4 >= len) {
        printf("GetSpsPpsFromH264 error...\n");
        return 0;
    }
    printf("h264(i=%d):", i);
    for (int j = 0; j < i; j++)
        printf("%x ", buf[j]);
    printf("\n");
    return i;
}

// Checks one NAL unit (buf points at its header byte).
// SPS, PPS and IDR slices all count as key-frame data.
static bool isIdrFrame2(uint8_t *buf, int len)
{
    (void)len;
    switch (buf[0] & 0x1f) {  // nal_unit_type = low 5 bits of the NAL header
    case 7:  // SPS
    case 8:  // PPS
    case 5:  // IDR slice
        return true;
    case 1:  // non-IDR slice
    default:
        return false;
    }
}

// Walks the Annex B start codes in a frame and returns true if any NAL unit
// is an SPS, PPS or IDR slice.
static bool isIdrFrame1(uint8_t *buf, int size)
{
    int last = 0;  // offset of the current NAL header byte (0 = none found yet)
    for (int i = 2; i <= size; ++i) {
        if (i == size) {
            // End of buffer: check the final NAL unit, if it has any bytes.
            if (last && last < size && isIdrFrame2(buf + last, i - last))
                return true;
        } else if (buf[i - 2] == 0x00 && buf[i - 1] == 0x00 && buf[i] == 0x01) {
            if (last) {
                int nal_size = i - last - 3;
                if (buf[i - 3]) ++nal_size;  // 3-byte start code: no extra leading zero
                if (isIdrFrame2(buf + last, nal_size))
                    return true;
            }
            last = i + 1;
        }
    }
    return false;
}

// Must be called with the SPS and PPS (Annex B, with start codes) of the first
// H.264 key frame. They become extradata, from which the FLV muxer writes the
// AVCDecoderConfigurationRecord.
static int RtmpInit(void *spspps_date, int spspps_datalen)
{
    int ret = 0;
    AVStream *out_stream;
    AVCodecParameters *out_codecpar;
    AVDictionary *opts = NULL;  // declared before any goto: C++ forbids jumping past an initialization

#if LIBAVFORMAT_VERSION_INT < AV_VERSION_INT(58, 9, 100)
    av_register_all();  // deprecated in FFmpeg 4.0 and removed later
#endif
    avformat_network_init();
    printf("rtmp init...\n");

    avformat_alloc_output_context2(&ofmt_ctx, NULL, "flv", NULL);
    if (!ofmt_ctx) {
        fprintf(stderr, "Could not create output context\n");
        ret = AVERROR_UNKNOWN;
        goto end;
    }
    ofmt = ofmt_ctx->oformat;

    out_stream = avformat_new_stream(ofmt_ctx, NULL);
    if (!out_stream) {
        fprintf(stderr, "Failed allocating output stream\n");
        ret = AVERROR_UNKNOWN;
        goto end;
    }
    stream_index = out_stream->index;

    // Our input is raw H.264 read from memory, so there is no input codecpar to copy.
    // The output codec parameters are filled in by hand (values match this camera).
    out_codecpar = out_stream->codecpar;
    out_codecpar->codec_type = AVMEDIA_TYPE_VIDEO;
    out_codecpar->codec_id = AV_CODEC_ID_H264;
    out_codecpar->bit_rate = 400000;
    out_codecpar->width = 1280;
    out_codecpar->height = 720;
    out_codecpar->codec_tag = 0;
    out_codecpar->format = AV_PIX_FMT_YUV420P;

    // extradata (SPS and PPS of the first H.264 key frame) is required; without it
    // the FLV output has no AVCDecoderConfigurationRecord.
    //unsigned char sps_pps[26] = { 0x00, 0x00, 0x01, 0x67, 0x4d, 0x00, 0x1f, 0x9d, 0xa8, 0x14, 0x01, 0x6e, 0x9b, 0x80, 0x80, 0x80, 0x81, 0x00, 0x00, 0x00, 0x01, 0x68, 0xee, 0x3c, 0x80 };
    // av_mallocz zeroes the padding bytes, as the extradata documentation requires.
    out_codecpar->extradata = (uint8_t *)av_mallocz(spspps_datalen + AV_INPUT_BUFFER_PADDING_SIZE);
    if (out_codecpar->extradata == NULL) {
        printf("could not av_malloc the video params extradata!\n");
        ret = AVERROR(ENOMEM);
        goto end;
    }
    memcpy(out_codecpar->extradata, spspps_date, spspps_datalen);
    out_codecpar->extradata_size = spspps_datalen;

    av_dump_format(ofmt_ctx, 0, out_filename, 1);
    if (!(ofmt->flags & AVFMT_NOFILE)) {
        ret = avio_open(&ofmt_ctx->pb, out_filename, AVIO_FLAG_WRITE);
        if (ret < 0) {
            fprintf(stderr, "Could not open output file '%s'\n", out_filename);
            goto end;
        }
    }

    av_dict_set(&opts, "flvflags", "add_keyframe_index", 0);
    ret = avformat_write_header(ofmt_ctx, &opts);
    av_dict_free(&opts);
    if (ret < 0) {
        fprintf(stderr, "Error occurred when opening output file\n");
        goto end;
    }
    waitI = 1;  // drop frames until the next key frame
    return 0;

end:
    /* close output */
    if (ofmt_ctx && !(ofmt_ctx->oformat->flags & AVFMT_NOFILE))
        avio_closep(&ofmt_ctx->pb);
    if (ofmt_ctx) {
        avformat_free_context(ofmt_ctx);
        ofmt_ctx = NULL;
    }
    return -1;
}

static void VideoWrite(void *data, int datalen)
{
    int ret;
    AVStream *out_stream = ofmt_ctx->streams[stream_index];
    AVPacket pkt;
    AVRational time_base = {1, 50};  // 2 ticks per frame below: a fixed 25 fps

    av_init_packet(&pkt);
    if (isIdrFrame1((uint8_t *)data, datalen))
        pkt.flags |= AV_PKT_FLAG_KEY;
    pkt.stream_index = out_stream->index;
    pkt.data = (uint8_t *)data;  // not owned: the muxer copies non-refcounted data
    pkt.size = datalen;

    // Wait for an I frame before sending anything.
    if (waitI) {
        if (!(pkt.flags & AV_PKT_FLAG_KEY))
            return;
        waitI = 0;
    }

    // remuxing.c rescales from in_stream->time_base here. Raw memory input has no
    // input stream, so timestamps are generated from a frame counter instead.
    pkt.pts = av_rescale_q((int64_t)(ptsInc++) * 2, time_base, out_stream->time_base);
    pkt.dts = pkt.pts;  // assumes no B-frames, so decode order == presentation order
    pkt.duration = av_rescale_q(2, time_base, out_stream->time_base);
    pkt.pos = -1;

    ret = av_interleaved_write_frame(ofmt_ctx, &pkt);
    if (ret < 0)
        fprintf(stderr, "Error muxing packet\n");
    av_packet_unref(&pkt);
}

static void RtmpUnit(void)
{
    if (ofmt_ctx)
        av_write_trailer(ofmt_ctx);
    /* close output */
    if (ofmt_ctx && !(ofmt_ctx->oformat->flags & AVFMT_NOFILE))
        avio_closep(&ofmt_ctx->pb);
    if (ofmt_ctx) {
        avformat_free_context(ofmt_ctx);
        ofmt_ctx = NULL;
    }
}

// Per-frame entry point (a wrapper added for this outline). Call it once per frame:
// frame is one complete H.264 frame in Annex B format (e.g. preturnps), len its length (e.g. iPsLength).
static void PushH264Frame(uint8_t *frame, int len)
{
    printf("h264 len = %d\n", len);

    // Initialize on the first key frame, which carries the SPS and PPS.
    if (!rtmpisinit && isIdrFrame1(frame, len)) {
        int spspps_len = GetSpsPpsFromH264(frame, len);
        // RtmpInit copies the bytes, so no separate buffer is needed.
        if (spspps_len > 0 && RtmpInit(frame, spspps_len) == 0)
            rtmpisinit = 1;
    }
    // Start pushing video data once the output is ready.
    if (rtmpisinit)
        VideoWrite(frame, len);
}

int main()
{
    // How frames arrive depends on the application. For each frame
    // (preturnps, iPsLength) from your source, call:
    //     PushH264Frame((uint8_t *)preturnps, iPsLength);

    // Deinitialization
    RtmpUnit();
    return 0;
}
```
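Without an input stream there are no incoming timestamps, so VideoWrite() generates them from a frame counter. Each frame advances 2 ticks of a 1/50 time base, which is a fixed 25 fps, and the FLV muxer turns that into steps of 40 ms.

The sketch below shows the same idea for an arbitrary frame rate given as a fraction. `frame_to_ms` is a hypothetical helper. It assumes a constant frame rate, which may not hold for cameras that drop frames; there, capture timestamps would likely be more robust.

```cpp
#include <cassert>
#include <cstdint>

// Presentation time in milliseconds of frame `frame_index` at a constant
// frame rate of fps_num / fps_den frames per second, rounded to nearest.
// Hypothetical helper; assumes frame_index >= 0 and no 64-bit overflow.
int64_t frame_to_ms(int64_t frame_index, int64_t fps_num, int64_t fps_den) {
    // One frame lasts fps_den / fps_num seconds = 1000 * fps_den / fps_num ms.
    int64_t numer = frame_index * fps_den * 1000;
    return (numer + fps_num / 2) / fps_num;
}
```

With `fps_num = 25, fps_den = 1`, this yields the same 0, 40, 80, ... ms sequence that the `(ptsInc++) * 2` ticks at 1/50 produce. A rate such as 30000/1001 shows why keeping the rate as a fraction avoids accumulating rounding error.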

3. Main problems encountered

These interfaces evolved from remuxing.c. Because our input is a raw H.264 bitstream, FFmpeg's input-file operations such as avformat_open_input are gone, and with them the input codec information. The output codec parameters therefore have to be filled in by hand. With an input file, they could simply be copied with this call:

```cpp
avcodec_parameters_copy(out_stream->codecpar, in_stream->codecpar);
```

Without an input file, the codec parameters must be set from what we already know about the stream:

```cpp
AVCodecParameters *out_codecpar = out_stream->codecpar;
out_codecpar->codec_type = AVMEDIA_TYPE_VIDEO;
out_codecpar->codec_id = AV_CODEC_ID_H264;
out_codecpar->bit_rate = 400000;
out_codecpar->width = 1280;
out_codecpar->height = 720;
out_codecpar->codec_tag = 0;
out_codecpar->format = AV_PIX_FMT_YUV420P;
```

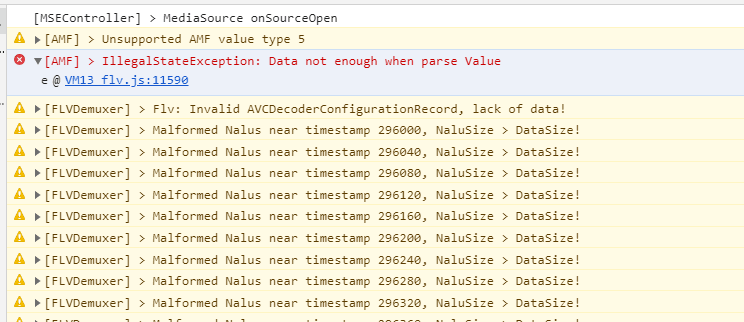

However, the RTMP stream published by this program could not be played in flv.js. flv.js received the stream but reported an error.

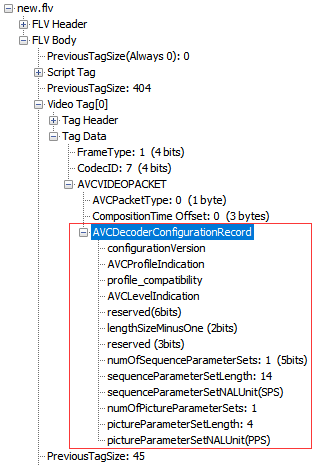

Judging from the flv.js error, I guessed that the AVCDecoderConfigurationRecord was missing. To verify this, I changed the output to an FLV file and compared it with the FLV saved by the demo program (you can inspect both with the flvanalyzer tool). The AVCDecoderConfigurationRecord was indeed missing.
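flvanalyzer shows this visually. As a rough programmatic alternative, the sketch below walks the tags of an in-memory FLV file and reports whether the first video tag is an AVC sequence header (CodecID 7, AVCPacketType 0). It is based on the header and tag layout in Adobe's FLV file format specification.

`first_video_tag_is_avc_seq_header` is a hypothetical helper. It checks only this one property and does not validate the rest of the file.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// True if the first video tag (TagType 9) of an FLV byte buffer carries an
// AVC sequence header: CodecID 7 in the low nibble, AVCPacketType 0.
bool first_video_tag_is_avc_seq_header(const std::vector<uint8_t>& flv) {
    // FLV header: 'F' 'L' 'V', version, flags, then a 32-bit big-endian header size.
    if (flv.size() < 9 || flv[0] != 'F' || flv[1] != 'L' || flv[2] != 'V')
        return false;
    size_t header_size = (size_t(flv[5]) << 24) | (size_t(flv[6]) << 16) |
                         (size_t(flv[7]) << 8) | size_t(flv[8]);
    size_t pos = header_size + 4;  // skip PreviousTagSize0
    while (pos + 11 <= flv.size()) {  // 11-byte tag header
        uint8_t tag_type = flv[pos] & 0x1f;  // low 5 bits; upper bits are reserved/filter
        size_t data_size = (size_t(flv[pos + 1]) << 16) |
                           (size_t(flv[pos + 2]) << 8) | size_t(flv[pos + 3]);
        size_t data = pos + 11;
        if (data + data_size > flv.size())
            return false;  // truncated tag
        if (tag_type == 9)
            return data_size >= 2 && (flv[data] & 0x0f) == 7 && flv[data + 1] == 0;
        pos = data + data_size + 4;  // skip tag data and its PreviousTagSize
    }
    return false;
}
```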

So I looked up the relevant material:

RTMP packages pushed audio and video much like the FLV format. To push H.264 and AAC live streams, you must first send an "AVC sequence header" and an "AAC sequence header". These carry essential encoding information, and without them the decoder cannot decode the stream.

The AVC sequence header is the AVCDecoderConfigurationRecord structure.
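In FLV terms, the sequence header is a video tag whose body is a 5-byte header followed by the AVCDecoderConfigurationRecord. The sketch below builds that body following the VideoTagHeader layout in the FLV specification. The helper name is hypothetical. FFmpeg's FLV muxer builds this tag itself from extradata, so the interface code never has to do it by hand.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Body of an FLV video tag carrying an AVC sequence header:
//   byte 0:    FrameType 1 (key frame) << 4 | CodecID 7 (AVC) = 0x17
//   byte 1:    AVCPacketType 0 (sequence header)
//   bytes 2-4: CompositionTime, 0 for a sequence header
//   rest:      the AVCDecoderConfigurationRecord bytes
std::vector<uint8_t> flv_avc_sequence_header_body(const std::vector<uint8_t>& avcc) {
    std::vector<uint8_t> body = {0x17, 0x00, 0x00, 0x00, 0x00};
    body.insert(body.end(), avcc.begin(), avcc.end());
    return body;
}
```

Ordinary frames use the same header with AVCPacketType 1 and a FrameType of 1 (key frame) or 2 (inter frame).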

Here comes the question:

What does the AVCDecoderConfigurationRecord contain?

Answer: it contains the SPS and PPS, which are essential for H.264 decoding. The SPS and PPS must be sent before any stream data reaches the AVC decoder, otherwise the decoder cannot decode normally. They must also be sent again whenever the decoder is stopped and restarted, for example on seek or when switching between fast-forward and rewind. In an FLV file, the AVCDecoderConfigurationRecord appears once, as the first video tag.
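The record's layout is defined in ISO/IEC 14496-15. The sketch below assembles it from a single SPS and PPS given as NAL units without start codes. This is roughly what FFmpeg's FLV muxer does internally when it turns Annex B extradata into the sequence header.

`build_avcc` is a hypothetical helper with two simplifying assumptions. It uses 4-byte NAL length fields. It also omits the extra chroma and bit-depth fields that the specification appends for High profiles (profile_idc 100, 110, 122, 144).

```cpp
#include <cassert>
#include <cstdint>
#include <stdexcept>
#include <vector>

// AVCDecoderConfigurationRecord for one SPS and one PPS (no start codes).
std::vector<uint8_t> build_avcc(const std::vector<uint8_t>& sps,
                                const std::vector<uint8_t>& pps) {
    if (sps.size() < 4 || pps.empty())
        throw std::invalid_argument("SPS needs at least 4 bytes and PPS must not be empty");
    std::vector<uint8_t> out;
    out.push_back(1);        // configurationVersion
    out.push_back(sps[1]);   // AVCProfileIndication (profile_idc)
    out.push_back(sps[2]);   // profile_compatibility (constraint flags)
    out.push_back(sps[3]);   // AVCLevelIndication (level_idc)
    out.push_back(0xFF);     // 6 reserved bits + lengthSizeMinusOne = 3
    out.push_back(0xE1);     // 3 reserved bits + numOfSequenceParameterSets = 1
    out.push_back(uint8_t(sps.size() >> 8));     // sequenceParameterSetLength (big-endian)
    out.push_back(uint8_t(sps.size() & 0xFF));
    out.insert(out.end(), sps.begin(), sps.end());
    out.push_back(1);        // numOfPictureParameterSets
    out.push_back(uint8_t(pps.size() >> 8));     // pictureParameterSetLength (big-endian)
    out.push_back(uint8_t(pps.size() & 0xFF));
    out.insert(out.end(), pps.begin(), pps.end());
    return out;
}
```

Applied to the SPS (67 4d 00 1f ...) and PPS (68 ee 3c 80) from the commented-out `sps_pps` array in the interface code, the result starts with 01 4d 00 1f ff e1 00 0e. These bytes are the version, Main profile, level 3.1, and a 14-byte SPS.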

Now that the problem is clear, how do we make the FLV include an AVCDecoderConfigurationRecord?

Looking through the fields of the AVCodecParameters structure, I found that extradata appears to be what needs setting. Its documentation describes it as extra binary data needed to initialize the decoder, codec-dependent:

```cpp
/**
 * Extra binary data needed for initializing the decoder, codec-dependent.
 *
 * Must be allocated with av_malloc() and will be freed by
 * avcodec_parameters_free(). The allocated size of extradata must be at
 * least extradata_size + AV_INPUT_BUFFER_PADDING_SIZE, with the padding
 * bytes zeroed.
 */
uint8_t *extradata;
```

In other words, extradata serves as the global header; for H.264 it mainly stores the SPS and PPS.

So the fix is to supply the SPS/PPS content manually as the value of extradata in the AVCodecParameters structure.

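But how do we get the SPS and PPS?

There is plenty of material online about the H.264 bitstream and key frames, so I won't go into detail. Here is a brief summary.

NALU patterns in an H.264 stream: each NAL unit is preceded by the start code 00 00 00 01 (or 00 00 01). The byte after the start code is the NAL header, whose low 5 bits give the unit type (7 = SPS, 8 = PPS, 6 = SEI, 5 = IDR slice, 1 = non-IDR slice).

First frame: SPS [00 00 00 01 67], PPS [00 00 00 01 68], SEI [00 00 00 01 06], IDR [00 00 00 01 65]

P frame: P [00 00 00 01 61]

I frame: SPS [00 00 00 01 67], PPS [00 00 00 01 68], IDR [00 00 00 01 65]

The header byte also encodes nal_ref_idc, so the exact value can differ between encoders (P slices are often 0x41, for example); only the low 5 bits identify the type.

Knowing this, we can extract the SPS [00 00 00 01 67] and PPS [00 00 00 01 68] data and put it into extradata.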
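GetSpsPpsFromH264() relies on every key frame being laid out as SPS, PPS, SEI, so it fails on streams that carry no SEI. A more general approach splits the frame on 3- or 4-byte start codes and selects NAL units by type (the low 5 bits of the header byte). The sketch below does this and produces the same Annex B SPS+PPS bytes that RtmpInit() copies into extradata.

`split_annexb`, `extract_sps_pps` and `contains_idr` are hypothetical helpers, not part of the original code or of FFmpeg.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Nal { size_t offset; size_t size; uint8_t type; };  // offset/size exclude the start code

// Splits an Annex B buffer on 00 00 01 (and therefore also 00 00 00 01) start codes.
std::vector<Nal> split_annexb(const uint8_t* buf, size_t len) {
    std::vector<size_t> starts;  // first byte after each start code
    for (size_t i = 0; i + 3 <= len; ++i) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1) {
            starts.push_back(i + 3);
            i += 2;  // resume scanning after the 01 byte
        }
    }
    std::vector<Nal> nals;
    for (size_t k = 0; k < starts.size(); ++k) {
        size_t begin = starts[k];
        size_t end = (k + 1 < starts.size()) ? starts[k + 1] - 3 : len;
        while (end > begin && buf[end - 1] == 0) --end;  // drop zeros before the next start code
        if (end > begin)
            nals.push_back({begin, end - begin, uint8_t(buf[begin] & 0x1f)});
    }
    return nals;
}

// First SPS (type 7) and PPS (type 8), each prefixed with 00 00 00 01,
// or an empty vector if either is missing.
std::vector<uint8_t> extract_sps_pps(const uint8_t* buf, size_t len) {
    const uint8_t start_code[4] = {0, 0, 0, 1};
    std::vector<uint8_t> out;
    bool have_sps = false, have_pps = false;
    for (const Nal& n : split_annexb(buf, len)) {
        bool wanted = (n.type == 7 && !have_sps) || (n.type == 8 && !have_pps);
        if (!wanted) continue;
        out.insert(out.end(), start_code, start_code + 4);
        out.insert(out.end(), buf + n.offset, buf + n.offset + n.size);
        if (n.type == 7) have_sps = true; else have_pps = true;
    }
    return (have_sps && have_pps) ? out : std::vector<uint8_t>{};
}

// True if the frame contains an IDR slice (type 5).
bool contains_idr(const uint8_t* buf, size_t len) {
    for (const Nal& n : split_annexb(buf, len))
        if (n.type == 5) return true;
    return false;
}
```

Unlike isIdrFrame1() in the interface code, `contains_idr` does not treat a frame as a key frame just because it carries an SPS or PPS. Which behaviour is preferable depends on how the camera groups NAL units into frames.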

```cpp
// Our input is raw H.264 read from memory, so there is no input codecpar to copy;
// the output codec parameters must be filled in by hand.
AVCodecParameters *out_codecpar = out_stream->codecpar;
out_codecpar->codec_type = AVMEDIA_TYPE_VIDEO;
out_codecpar->codec_id = AV_CODEC_ID_H264;
out_codecpar->bit_rate = 400000;
out_codecpar->width = 1280;
out_codecpar->height = 720;
out_codecpar->codec_tag = 0;
out_codecpar->format = AV_PIX_FMT_YUV420P;

// extradata (SPS and PPS of the first H.264 key frame) is required; without it
// the FLV output has no AVCDecoderConfigurationRecord.
//unsigned char sps_pps[26] = { 0x00, 0x00, 0x01, 0x67, 0x4d, 0x00, 0x1f, 0x9d, 0xa8, 0x14, 0x01, 0x6e, 0x9b, 0x80, 0x80, 0x80, 0x81, 0x00, 0x00, 0x00, 0x01, 0x68, 0xee, 0x3c, 0x80 };
// av_mallocz zeroes the padding bytes, as the extradata documentation requires.
out_codecpar->extradata = (uint8_t *)av_mallocz(spspps_datalen + AV_INPUT_BUFFER_PADDING_SIZE);
if (out_codecpar->extradata == NULL) {
    printf("could not av_malloc the video params extradata!\n");
    goto end;
}
memcpy(out_codecpar->extradata, spspps_date, spspps_datalen);
out_codecpar->extradata_size = spspps_datalen;
```

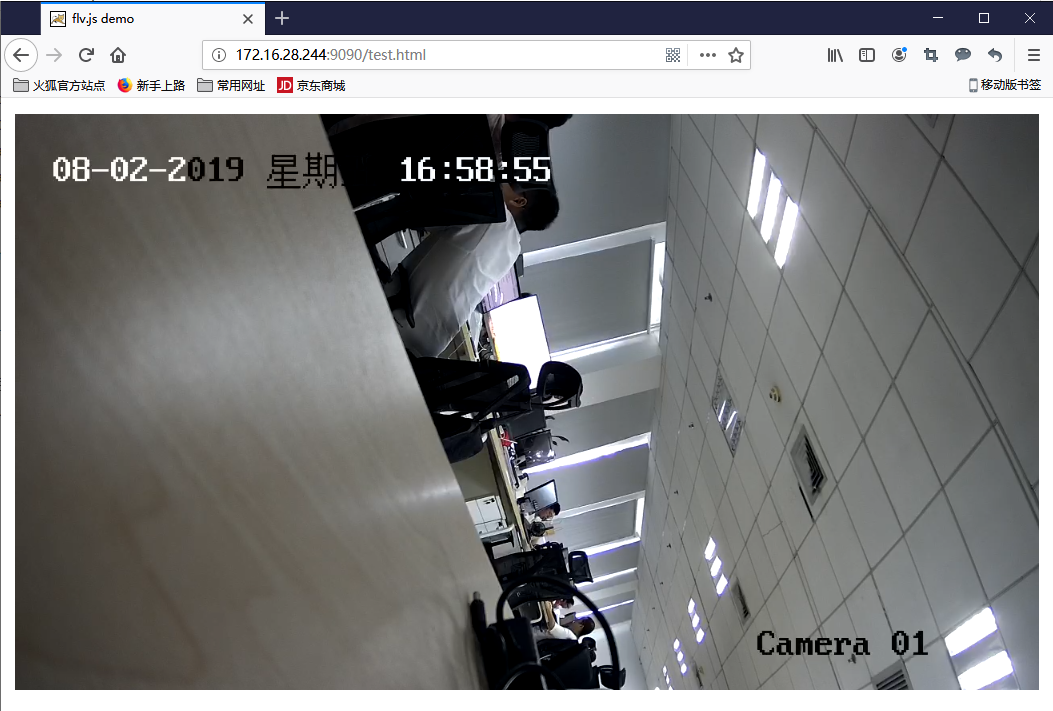

After adding this step, the FLV saved from in-memory H.264 also contains the AVCDecoderConfigurationRecord, and the published RTMP stream plays smoothly in flv.js.

Download

Source download: http