Anyone who often works with web crawlers knows how important cookies are. To this day, most websites still use cookies to track login state, and only a few have switched to JWT.

The usefulness of extracting cookies goes without saying. So what are the more advanced ways of extracting them? Read on:

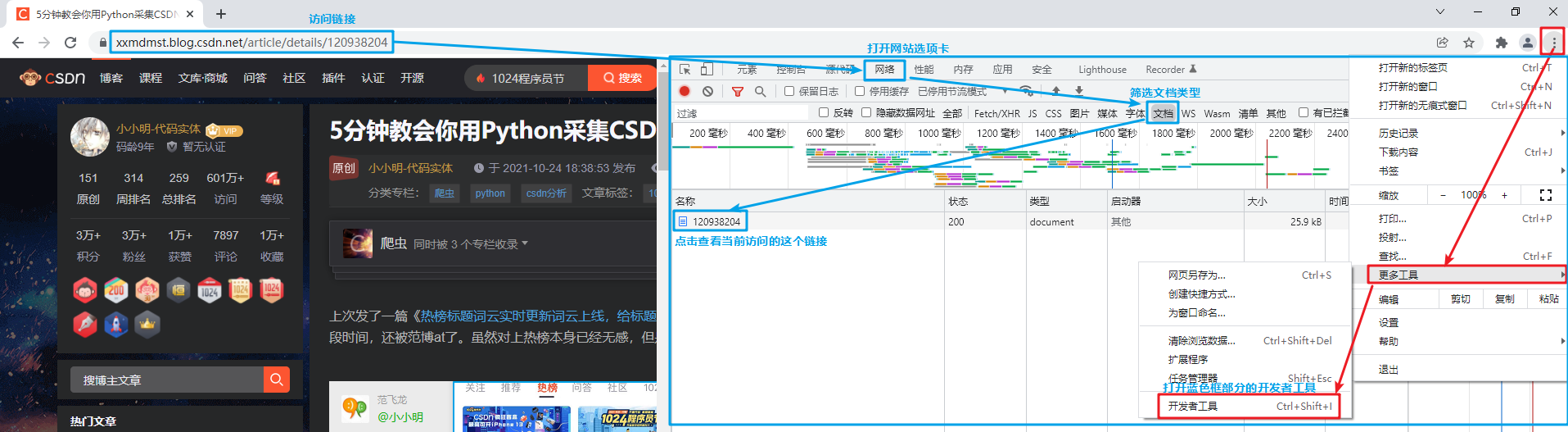

Extracting cookies from Chrome by hand

Anyone who has written a crawler will know this method, although it may be the hardest one for someone who has never written a crawler at all.

Open the developer tools (F12), visit the website whose cookie you want, and then select the request you just made in the Network panel. The specific steps are as follows:

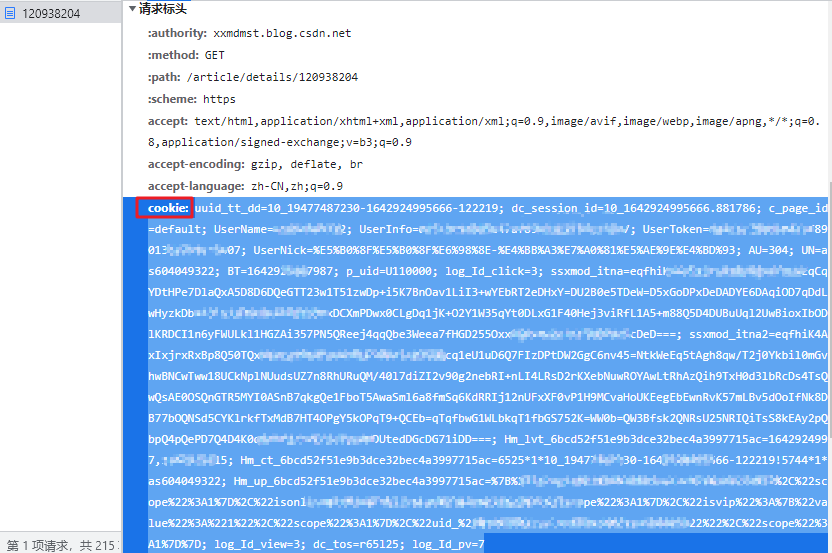

Then find the cookie field in the request headers and copy its value:

Anyone who has learned to write crawlers will have done this. Is there a way to automate it further with code?

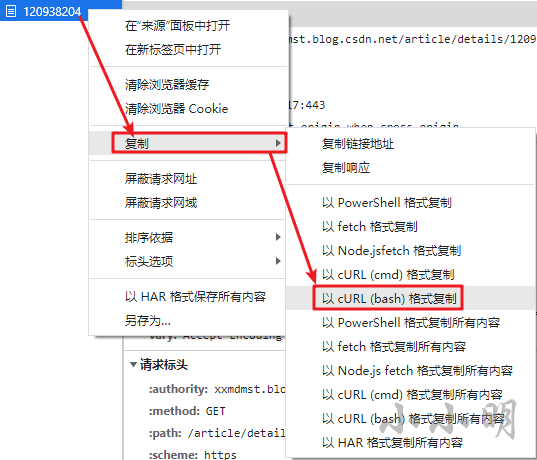

My approach is to copy the request as a curl command (in the Network panel, right-click the request and choose Copy, then Copy as cURL) and extract the cookie from it with code.

Once the request is on the clipboard in the form of a curl command, the cookie can be extracted directly. The code is as follows:

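    import re

    import pyperclip


    def extractCookieByCurlCmd(curl_cmd):
        """Return the cookie header value found in a curl command, or None."""
        # Matches both -H 'cookie: ...' and the bash form -H $'cookie: ...'
        cookie_obj = re.search(r"-H \$?'cookie: ([^']+)'", curl_cmd, re.I)
        if cookie_obj:
            return cookie_obj.group(1)
        return None


    # Read the curl command from the clipboard and print the extracted cookie
    cookie = extractCookieByCurlCmd(pyperclip.paste())
    print(cookie)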

Comparing the two shows that the printed cookie is identical to the one copied by hand.
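The extracted string is a raw cookie header value. If the individual cookies are needed, it can be split into a dictionary. The following is only a minimal sketch: the helper name `cookie_str_to_dict` and the sample string are made up for illustration.

```python
def cookie_str_to_dict(cookie_str):
    """Split a raw cookie header value ("a=1; b=2") into a dict."""
    cookies = {}
    for pair in cookie_str.split(";"):
        pair = pair.strip()
        if not pair:
            continue
        # Split on the first "=" only, because values may contain "=" themselves.
        name, _, value = pair.partition("=")
        cookies[name] = value
    return cookies


# Hypothetical sample value, not taken from a real site.
sample = "sessionid=abc123; token=x=y; theme=dark"
print(cookie_str_to_dict(sample))
```

Splitting on the first `=` only keeps values such as Base64 strings, which often end in `=`, intact.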

Logging in manually with selenium and saving the cookies

Take saving the login cookies of Bilibili as an example:

    import json
    import time

    from selenium import webdriver
    from selenium.common.exceptions import NoSuchElementException
    from selenium.webdriver.common.by import By

    browser = webdriver.Chrome()
    browser.get("https://passport.bilibili.com/login")
    flag = True
    print("Waiting for login...")
    while flag:
        try:
            # The avatar element only exists once the user has logged in
            browser.find_element(By.XPATH,
                                 "//div[@class='user-con signin']|//ul[@class='right-entry']"
                                 "//a[@class='header-entry-avatar']")
            flag = False
        except NoSuchElementException:
            time.sleep(3)
    print("Logged in, saving cookies...")
    with open('cookie.txt', 'w', encoding='utf-8') as f:
        json.dump(browser.get_cookies(), f)
    # quit() closes the browser and also stops the driver process
    browser.quit()
    print("Cookies saved, the browser has been closed...")

When the code above runs, selenium drives Chrome to open the Bilibili login page and waits for the user to log in manually. Once the login succeeds, the cookies are saved automatically.
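The loop in the script polls indefinitely until the avatar element appears. If an upper bound on the wait is wanted, the same polling idea can be wrapped in a small helper. This is only a sketch: `wait_until`, its parameters, and the stand-in check function are hypothetical. In the script above, the check would be a function that calls `browser.find_element` and returns `False` on `NoSuchElementException`. Selenium's own `WebDriverWait` serves a similar purpose.

```python
import time


def wait_until(check, timeout=300, interval=3):
    """Call check() every `interval` seconds until it returns True.

    Returns True on success and False if `timeout` seconds pass first.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if check():
            return True
        time.sleep(interval)
    return False


# Stand-in for "is the user logged in yet?": succeeds on the third call.
calls = {"n": 0}


def fake_logged_in():
    calls["n"] += 1
    return calls["n"] >= 3


print(wait_until(fake_logged_in, timeout=5, interval=0.01))
```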

So how can a later selenium program use these cookies directly at startup, without logging in again? A demonstration follows:

    import json

    from selenium import webdriver

    browser = webdriver.Chrome()
    with open('cookie.txt', 'r', encoding='utf-8') as f:
        cookies = json.load(f)
    # Cookies can only be added for the domain that is currently open
    browser.get("https://www.bilibili.com/")
    for cookie in cookies:
        browser.add_cookie(cookie)
    # Visit the site again so that the added cookies take effect
    browser.get("https://www.bilibili.com/")

The process is: first visit the website so that the browser is on the right domain, then add the saved cookies, and finally visit the website again so that the loaded cookies take effect.
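Cookies saved in `cookie.txt` may have expired by the time they are loaded again. The cookie dictionaries returned by selenium carry an optional `expiry` field (seconds since the epoch), so stale entries could be filtered out before calling `add_cookie`. A minimal sketch, in which `drop_expired` and the sample data are hypothetical:

```python
import time


def drop_expired(cookies, now=None):
    """Keep session cookies (no 'expiry') and cookies that expire after `now`."""
    if now is None:
        now = time.time()
    return [c for c in cookies if "expiry" not in c or c["expiry"] > now]


# Hypothetical sample in the shape returned by browser.get_cookies().
saved = [
    {"name": "session", "value": "s1"},                # session cookie, no expiry
    {"name": "old", "value": "o1", "expiry": 1000},    # already expired at now=1500
    {"name": "fresh", "value": "f1", "expiry": 2000},  # still valid at now=1500
]
print([c["name"] for c in drop_expired(saved, now=1500)])
```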

Getting non-login cookies with selenium in headless mode

Take Douyin as an example: downloading its videos requires an initial cookie, but the algorithm that generates this cookie is fairly complex and hard to reproduce with plain requests. In this case we can let selenium load the page to obtain the cookie, which saves the time of analyzing the JS. Since no manual interaction is needed, headless mode is the better choice.

The following demonstrates obtaining the cookies of the Douyin website in headless mode:

    import time

    from selenium import webdriver


    def selenium_get_cookies(url='https://www.douyin.com'):
        """Fetch the cookies of the target URL in headless mode. Code author: Xiao Ming-Code entity"""
        start_time = time.time()
        option = webdriver.ChromeOptions()
        option.add_argument("--headless")
        # Hide the usual signs that the browser is automated
        option.add_experimental_option('excludeSwitches', ['enable-automation'])
        option.add_experimental_option('useAutomationExtension', False)
        option.add_argument(
            'user-agent=Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36')
        option.add_argument("--disable-blink-features=AutomationControlled")
        print("Launching headless browser...")
        browser = webdriver.Chrome(options=option)
        print(f"Visiting {url} ...")
        browser.get(url)
        cookie_list = browser.get_cookies()
        # Close the browser and stop the driver process
        browser.quit()
        cost_time = time.time() - start_time
        print(f"Headless browser fetched cookies in {cost_time:0.2f} s")
        return {row["name"]: row["value"] for row in cookie_list}


    print(selenium_get_cookies("https://www.douyin.com"))

The output is as follows:

Launching headless browser...

Visiting https://www.douyin.com ...

Headless browser fetched cookies in 3.28 s

{'': 'douyin.com', 'ttwid': '1%7CZn_LJdPjHKdCy4jtBoYWL_yT3NMn7OZVTBStEzoLoQg%7C1642932056%7C80dbf668fd283c71f9aee1a277cb35f597a8453a3159805c92dfee338e70b640', 'AB_LOGIN_GUIDE_TIMESTAMP': '1642932057106', 'MONITOR_WEB_ID': '651d9eca-f155-494b-a945-b8758ae948fb', 'ttcid': 'ea2b5aed3bb349219f7120c53dc844a033', 'home_can_add_dy_2_desktop': '0', '_tea_utm_cache_6383': 'undefined', '__ac_signature': '_02B4Z6wo00f01kI39JwAAIDBnlvrNDKInu5CB.AAAPFv24', 'MONITOR_DEVICE_ID': '25d4799c-1d29-40e9-ab2b-3cc056b09a02', '__ac_nonce': '061ed27580066860ebc87'}
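The returned dictionary can then be handed to a plain HTTP client. As a rough sketch using only the standard library, the helper below (the name `build_request` is an assumption) joins the cookies into a `Cookie` header and attaches it to a `urllib.request.Request` without sending it. With the requests library, passing the dictionary as the `cookies=` argument should have the same effect.

```python
import urllib.request


def build_request(url, cookies, user_agent="Mozilla/5.0"):
    """Create a Request that carries the given name -> value cookies."""
    # Entries with an empty name are skipped; they cannot form a valid pair.
    cookie_header = "; ".join(
        f"{name}={value}" for name, value in cookies.items() if name
    )
    return urllib.request.Request(
        url,
        headers={"Cookie": cookie_header, "User-Agent": user_agent},
    )


# Hypothetical cookie values for illustration only.
req = build_request("https://www.douyin.com", {"ttwid": "abc", "__ac_nonce": "123"})
print(req.get_header("Cookie"))
```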

Getting cookies from the local Chrome browser

As we know, when selenium drives Chrome directly, the cookies of the Chrome profile we normally use are not loaded. Is there a way to get the cookies of sites we have already logged in to in Chrome?

It is actually quite simple: start the local Chrome in remote debugging mode and then attach selenium to it, and the previously stored login cookies can be extracted:

    import os
    import time
    import winreg

    from selenium import webdriver


    def get_local_ChromeCookies(url, chrome_path=None):
        """Fetch the cookies of the target URL from the local Chrome browser. Code author: Xiao Ming-Code entity"""
        if chrome_path is None:
            # Look up the Chrome executable in the Windows registry
            key = winreg.OpenKey(winreg.HKEY_CLASSES_ROOT, r"ChromeHTML\Application")
            path = winreg.QueryValueEx(key, "ApplicationIcon")[0]
            chrome_path = path[:path.rfind(",")]
        start_time = time.time()
        # Start Chrome with remote debugging enabled
        command = f'"{chrome_path}" --remote-debugging-port=9222'
        os.popen(command)
        option = webdriver.ChromeOptions()
        # Attach to the Chrome instance that is already running
        option.add_experimental_option("debuggerAddress", "127.0.0.1:9222")
        browser = webdriver.Chrome(options=option)
        print(f"Visiting {url} ...")
        browser.get(url)
        cookie_list = browser.get_cookies()
        # Close the browser window
        browser.close()
        cost_time = time.time() - start_time
        print(f"Fetched Chrome cookies in {cost_time:0.2f} s")
        return {row["name"]: row["value"] for row in cookie_list}


    print(get_local_ChromeCookies("https://www.douyin.com"))

The code above can directly obtain the cookies of any website that Chrome has visited. The result for Douyin is as follows:

DevTools listening on ws://127.0.0.1:9222/devtools/browser/9a9c19da-21fc-42ba-946d-ff60a91aa9d2

Visiting https://www.douyin.com ...

Fetched Chrome cookies in 4.62 s

{'THEME_STAY_TIME': '10718', 'home_can_add_dy_2_desktop': '0', '_tea_utm_cache_1300': 'undefined', 'passport_csrf_token': 'c6bda362fba48845a2fe6e79f4d35bc8', 'passport_csrf_token_default': 'c6bda362fba48845a2fe6e79f4d35bc8', 'MONITOR_WEB_ID': 'd6e51b15-5276-41f3-97f5-e14d51242496', 'MONITOR_DEVICE_ID': 'd465b931-3a0e-45ba-ac19-263dd31a76ee', '': 'douyin.com', 'ttwid': '1%7CsXCoN0TQtHpKYiRoZnAKyqNJhOfkdJjNEJIdPPAibJw%7C1642915541%7C8a3308d87c6d2a38632bbfe4dfc0baae75162cedf6d63ace9a9e2ae4a13182d2', '__ac_signature': '_02B4Z6wo00f01I50-sQAAIDADnYAhr3RZZCOUP5AAEJ0f7', 'msToken': 'mUYtlAj8qr_9fuTIekLmAThy9N_ltbh0NJo05ns14o3X5As496_O5n7XT4-I81npZuGrIxt0V3JadDZlznmwgzwxqT6GZdIOBozEPC-WAZawQR-teML5984=', '__ac_nonce': '061ed25850009be132678', '_tea_utm_cache_6383': 'undefined', 'AB_LOGIN_GUIDE_TIMESTAMP': '1642915542503', 'ttcid': '3087b27658f74de9a4dae240e7b3930726'}

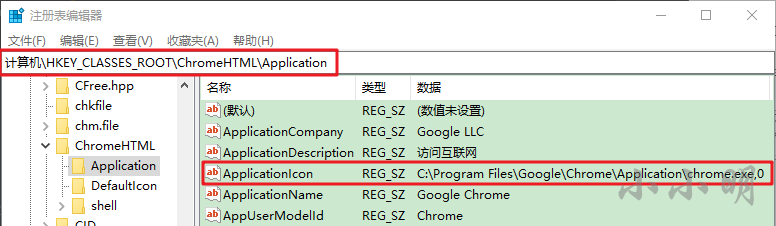

The code above finds Chrome's installation path by reading the `ApplicationIcon` value under `HKEY_CLASSES_ROOT\ChromeHTML\Application` in the registry.

I cannot confirm that this registry entry also yields Chrome's location on other computers, so a parameter for passing the path in explicitly is provided.
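Judging from the way the function cuts the string at the last comma, the registry value has the form of an executable path followed by a comma and an icon index. A small sketch of that parsing step in isolation is shown here; the function name `exe_from_icon_value` and the sample path are assumptions for illustration, not values read from a real registry.

```python
def exe_from_icon_value(icon_value):
    """Strip a trailing ',<icon index>' from a registry icon string."""
    path, sep, index = icon_value.rpartition(",")
    # Only treat the suffix as an icon index if it really is a number;
    # otherwise the value had no index and is returned unchanged.
    if sep and index.strip().lstrip("-").isdigit():
        return path
    return icon_value


# Sample value for illustration only.
sample = r"C:\Program Files\Google\Chrome\Application\chrome.exe,0"
print(exe_from_icon_value(sample))
```

Unlike a bare `rfind(",")` slice, this variant leaves a value without any comma untouched instead of dropping its last character.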

Adding `--remote-debugging-port=9222` to Chrome's startup parameters enables remote debugging mode, and selenium can attach to an already running Chrome in that mode through the `debuggerAddress` option.
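Chrome needs a moment before it starts listening, so attaching immediately after launching it may fail on slower machines. One possible safeguard is to poll the debugging port first. The helper below is a sketch under that assumption: `wait_for_port` is a hypothetical name, and the default address matches the `127.0.0.1:9222` used in this article.

```python
import socket
import time


def wait_for_port(host="127.0.0.1", port=9222, timeout=10.0, interval=0.2):
    """Return True once a TCP connection to host:port succeeds, else False."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)
    return False
```

Calling `wait_for_port()` between launching Chrome and creating the `webdriver.Chrome` object would delay the attach until the port accepts connections, or give up after the timeout.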

In this way we can obtain the cookies of any website we have previously logged in to in Chrome.

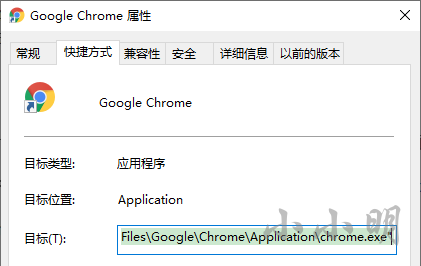

Note, however, that if the shortcut you normally use to launch Chrome carries a `--user-data-dir` parameter, the code above must add the same parameter. The Chrome on my machine does not have it.
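If a custom profile directory is in use, the launch command needs the same `--user-data-dir` value as the shortcut. A sketch of building the argument list follows; `build_chrome_args` and the example paths are hypothetical placeholders.

```python
def build_chrome_args(chrome_path, port=9222, user_data_dir=None):
    """Build the argument list for starting Chrome with remote debugging."""
    args = [chrome_path, f"--remote-debugging-port={port}"]
    if user_data_dir:
        # Should match the directory used by the everyday shortcut,
        # otherwise Chrome starts with a different profile.
        args.append(f"--user-data-dir={user_data_dir}")
    return args


# Example paths are placeholders, not detected from the system.
print(build_chrome_args(r"C:\chrome\chrome.exe", user_data_dir=r"D:\ChromeData"))
```

The resulting list can be passed to `subprocess.Popen`, which avoids quoting problems when a path contains spaces.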

Parsing and decrypting the file where Chrome stores its cookies

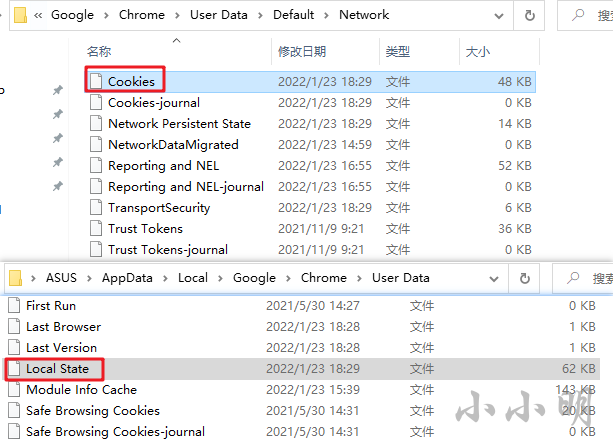

Finally, here is the ultimate trick: decrypting the cookie file directly. In Chrome versions before 97, the cookies were stored in `%LOCALAPPDATA%\Google\Chrome\User Data\Default\Cookies`; from version 97 on, the file was moved to `%LOCALAPPDATA%\Google\Chrome\User Data\Default\Network\Cookies`.

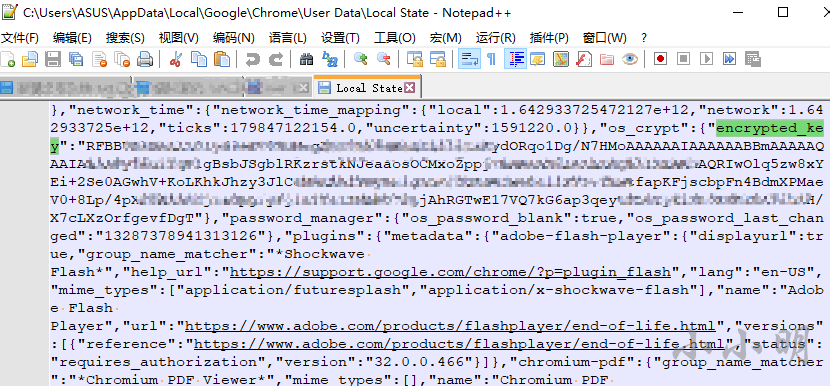

However, it is unknown whether the storage location and encryption method will change again in versions after 97. So far, the key has always been stored in the `%LOCALAPPDATA%\Google\Chrome\User Data\Local State` file. (Before version 80, values could be decrypted without a key by calling `win32crypt.CryptUnprotectData(encrypted_value_bytes, None, None, None, 0)[1]` directly.)

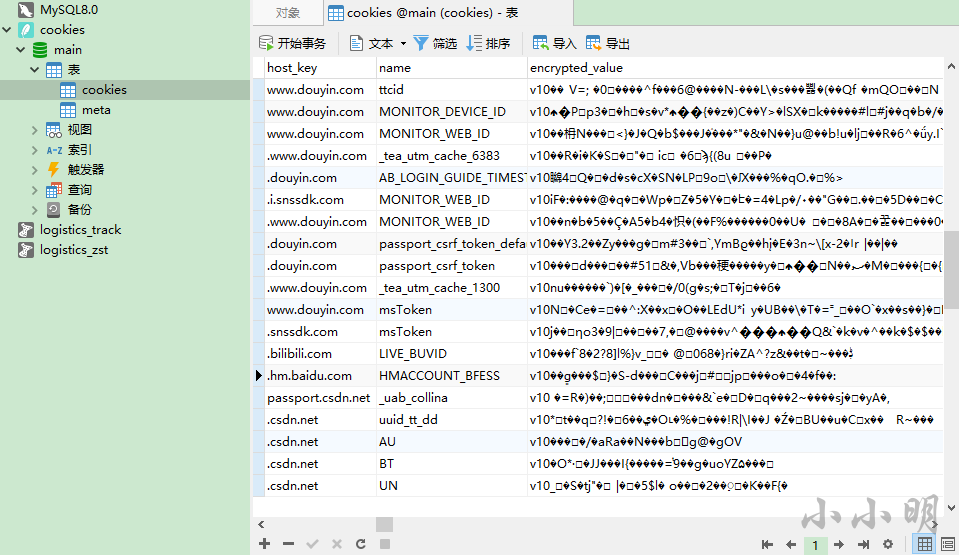

In fact, the cookie file is an SQLite database, which can be opened directly with a tool such as Navicat Premium 15.
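Because the file is an ordinary SQLite database, it can also be queried from Python with the built-in `sqlite3` module. The sketch below runs against an in-memory table that imitates only the three columns used later in this article (`host_key`, `name`, `encrypted_value`); the real `cookies` table has more columns, and the rows and the helper name `cookie_names_for_host` are invented for the example.

```python
import sqlite3


def cookie_names_for_host(conn, host):
    """Return (host_key, name) pairs whose host_key ends with '.<host>'."""
    sql = "SELECT host_key, name FROM cookies WHERE host_key LIKE ? ORDER BY name"
    # The host is passed as a bound parameter rather than formatted into the SQL.
    return conn.execute(sql, (f"%.{host}",)).fetchall()


# In-memory stand-in for the real Cookies file.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE cookies (host_key TEXT, name TEXT, encrypted_value BLOB)")
conn.executemany(
    "INSERT INTO cookies VALUES (?, ?, ?)",
    [
        (".douyin.com", "ttwid", b"v10..."),
        ("www.douyin.com", "msToken", b"v10..."),
        (".example.com", "other", b"v10..."),
    ],
)
print(cookie_names_for_host(conn, "douyin.com"))
```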

However, the values are encrypted. For the encryption algorithm used since version 80, see: https://github.com/chromium/chromium/blob/master/components/os_crypt/os_crypt_win.cc
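Going by the slicing used in the extraction code in this article, an encrypted value written since version 80 is laid out as a 3-byte version prefix (`v10`), a 12-byte nonce, and then the AES-GCM ciphertext with its 16-byte authentication tag appended. The sketch below only separates those parts; `split_encrypted_value` and `EncryptedCookie` are hypothetical names, and the blob is synthetic.

```python
from typing import NamedTuple


class EncryptedCookie(NamedTuple):
    version: bytes     # version prefix, b"v10" in the layout described above
    nonce: bytes       # 12-byte AES-GCM nonce
    ciphertext: bytes  # ciphertext followed by the 16-byte GCM tag


def split_encrypted_value(data):
    """Split a raw encrypted_value blob into its three parts."""
    if len(data) < 3 + 12 + 16:
        raise ValueError("blob too short to be an AES-GCM cookie value")
    return EncryptedCookie(data[:3], data[3:15], data[15:])


# Synthetic blob, only to show the layout (it cannot be decrypted).
blob = b"v10" + bytes(range(12)) + b"\xaa" * 20
parts = split_encrypted_value(blob)
print(parts.version, len(parts.nonce), len(parts.ciphertext))
```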

The `Local State` file, which holds the key needed for decryption, is a JSON file.
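The relevant entry sits under `os_crypt` and then `encrypted_key`. It is Base64 text whose decoded bytes start with the 5-byte marker `DPAPI`, which is what the `[5:]` slice in the extraction code removes. The sketch below stops before the DPAPI step so that it runs on any platform; the JSON content is fabricated for the example and `extract_dpapi_blob` is a hypothetical helper.

```python
import base64
import json


def extract_dpapi_blob(local_state_text):
    """Return the DPAPI-protected key bytes stored in a Local State document."""
    encoded = json.loads(local_state_text)["os_crypt"]["encrypted_key"]
    raw = base64.b64decode(encoded)
    if not raw.startswith(b"DPAPI"):
        raise ValueError("unexpected key format: missing DPAPI prefix")
    # Drop the 5-byte marker; the remainder is what DPAPI has to decrypt.
    return raw[5:]


# Fabricated Local State content; a real file contains many more fields.
fake_key = base64.b64encode(b"DPAPI" + b"\x01\x02\x03").decode("ascii")
fake_local_state = json.dumps({"os_crypt": {"encrypted_key": fake_key}})
print(extract_dpapi_blob(fake_local_state))
```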

The complete extraction code is as follows:

    """
    Xiao Ming's code
    CSDN Home page: https://blog.csdn.net/as604049322
    """
    __author__ = 'Xiao Ming'
    __time__ = '2022/1/23'

    import base64
    import json
    import os
    import sqlite3

    import win32crypt
    from cryptography.hazmat.primitives.ciphers.aead import AESGCM


    def load_local_key(localStateFilePath):
        """Read the key saved in Chrome's JSON file, Base64-decode it and decrypt it with DPAPI to get the real AES-GCM key."""
        with open(localStateFilePath, encoding='utf-8') as f:
            encrypted_key = json.load(f)['os_crypt']['encrypted_key']
        encrypted_key_with_header = base64.b64decode(encrypted_key)
        # Skip the 5-byte header in front of the DPAPI blob
        encrypted_key = encrypted_key_with_header[5:]
        key = win32crypt.CryptUnprotectData(encrypted_key, None, None, None, 0)[1]
        return key


    def decrypt_value(key, data):
        """Decrypt a cookie value with AES-GCM."""
        # Bytes 0-2 are a version prefix, bytes 3-14 the nonce, the rest the ciphertext
        nonce, cipherbytes = data[3:15], data[15:]
        aesgcm = AESGCM(key)
        plaintext = aesgcm.decrypt(nonce, cipherbytes, None).decode('utf-8')
        return plaintext


    def fetch_host_cookie(host):
        """Get all cookies under the specified domain name."""
        userDataDir = os.environ['LOCALAPPDATA'] + r'\Google\Chrome\User Data'
        localStateFilePath = userDataDir + r'\Local State'
        cookiepath = userDataDir + r'\Default\Cookies'
        # Version 97 has moved Cookies to the Network directory
        if not os.path.exists(cookiepath) or os.stat(cookiepath).st_size == 0:
            cookiepath = userDataDir + r'\Default\Network\Cookies'
        # Pass the host as a bound parameter instead of formatting it into the SQL
        sql = "select name, encrypted_value from cookies where host_key like ?"
        cookies = {}
        key = load_local_key(localStateFilePath)
        with sqlite3.connect(cookiepath) as conn:
            cu = conn.cursor()
            for name, encrypted_value in cu.execute(sql, (f"%.{host}",)).fetchall():
                cookies[name] = decrypt_value(key, encrypted_value)
        return cookies


    if __name__ == '__main__':
        print(fetch_host_cookie("douyin.com"))

Result:

{'ttcid': '3087b27658f74de9a4dae240e7b3930726', 'MONITOR_DEVICE_ID': 'd465b931-3a0e-45ba-ac19-263dd31a76ee', 'MONITOR_WEB_ID': '70892127-f756-4455-bb5e-f8b1bf6b71d0', '_tea_utm_cache_6383': 'undefined', 'AB_LOGIN_GUIDE_TIMESTAMP': '1642915542503', 'passport_csrf_token_default': 'c6bda362fba48845a2fe6e79f4d35bc8', 'passport_csrf_token': 'c6bda362fba48845a2fe6e79f4d35bc8', '_tea_utm_cache_1300': 'undefined', 'msToken': 'e2XPeN9Oe2rvoAwQrIKLvpGYQTF8ymR4MFv6N8dXHhu4To2NlR0uzx-XPqxCWWLlO5Mqr2-3hwSIGO_o__heO0Rv6nxYXaOt6yx2eaBS7vmttb4wQSQcYBo=', 'THEME_STAY_TIME': '13218', '__ac_nonce': '061ed2dee006ff56640fa', '__ac_signature': '_02B4Z6wo00f01rasq3AAAIDCNq5RMzqU2Ya2iK.AAMxSb2', 'home_can_add_dy_2_desktop': '1', 'ttwid': '1%7CsXCoN0TQtHpKYiRoZnAKyqNJhOfkdJjNEJIdPPAibJw%7C1642915541%7C8a3308d87c6d2a38632bbfe4dfc0baae75162cedf6d63ace9a9e2ae4a13182d2'}

As you can see, the cookies have been extracted perfectly from the local files.