Flink-1.12 parcel compilation

This article describes how to integrate Flink into CDH 6.3.2.

1 compilation environment preparation

1.1 install JDK 1.8

Omitted.

1.2 installing git

yum install git -y

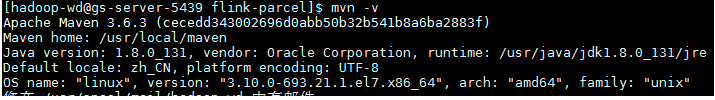

1.3 installing maven

- Install maven

# If the network is blocked, download the tarball manually and upload it to the server
wget http://mirrors.tuna.tsinghua.edu.cn/apache/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.tar.gz
tar xzvf apache-maven-3.6.3-bin.tar.gz -C /usr/local/
mv /usr/local/apache-maven-3.6.3/ /usr/local/maven
# Append the following two export lines to /etc/profile
vim /etc/profile
export M2_HOME=/usr/local/maven
export PATH=$PATH:$M2_HOME/bin
# Reload the profile and check the installation
source /etc/profile
mvn -v
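After running source /etc/profile, you can confirm that the shell uses this Maven installation and not another copy earlier on PATH. The following is a minimal bash sketch. The function name check_maven_home is hypothetical and is not part of Maven.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Maven): verify that the mvn found on PATH
# is the one installed under M2_HOME (default /usr/local/maven, as above).
check_maven_home() {
  local home="${1:-${M2_HOME:-/usr/local/maven}}"
  local found
  if [ ! -x "$home/bin/mvn" ]; then
    echo "no executable mvn under $home/bin" >&2
    return 1
  fi
  # command -v prints the path the shell would run for "mvn"
  if ! found="$(command -v mvn)"; then
    echo "mvn is not on PATH" >&2
    return 1
  fi
  if [ "$found" != "$home/bin/mvn" ]; then
    echo "PATH resolves mvn to $found instead of $home/bin/mvn" >&2
    return 1
  fi
  echo "ok: $found"
}

# Usage after "source /etc/profile":
#   check_maven_home && mvn -v
```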

- Configure the local repository path and Maven mirrors

vim /usr/local/maven/conf/settings.xml

# Modify this configuration

<localRepository>/home/hadoop-wd@gridsum.com/guobin/flink-parcel/maven_repo</localRepository>

# Add configuration

<mirrors>

<mirror>

<id>alimaven</id>

<mirrorOf>central</mirrorOf>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/repositories/central/</url>

</mirror>

<mirror>

<id>alimaven</id>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/groups/public/</url>

<mirrorOf>*,!cloudera</mirrorOf>

</mirror>

<mirror>

<id>central</id>

<name>Maven Repository Switchboard</name>

<url>https://repo1.maven.org/maven2/</url>

<mirrorOf>central</mirrorOf>

</mirror>

<mirror>

<id>repo2</id>

<mirrorOf>central</mirrorOf>

<name>Human Readable Name for this Mirror.</name>

<url>http://repo2.maven.org/maven2/</url>

</mirror>

<mirror>

<id>ibiblio</id>

<mirrorOf>central</mirrorOf>

<name>Human Readable Name for this Mirror.</name>

<url>http://mirrors.ibiblio.org/pub/mirrors/maven2/</url>

</mirror>

<mirror>

<id>jboss-public-repository-group</id>

<mirrorOf>central</mirrorOf>

<name>JBoss Public Repository Group</name>

<url>http://repository.jboss.org/nexus/content/groups/public</url>

</mirror>

<mirror>

<id>google-maven-central</id>

<name>Google Maven Central</name>

<url>https://maven-central.storage.googleapis.com</url>

<mirrorOf>central</mirrorOf>

</mirror>

<!-- Mirror of the central repository in China -->

<mirror>

<id>maven.net.cn</id>

<name>one of the central mirrors in China</name>

<url>http://maven.net.cn/content/groups/public/</url>

<mirrorOf>central</mirrorOf>

</mirror>

</mirrors>

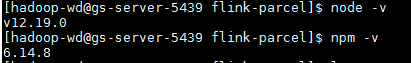
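How Maven picks among these mirrors matters more than how many are listed. Maven first looks for a mirror whose mirrorOf equals the repository id. If none exists, it uses the first mirror whose pattern matches. It does not fall back to later mirrors, so only the first "mirrorOf central" entry (alimaven) is actually used for central.

The sketch below reimplements the mirrorOf pattern rules in bash as an illustration, not as Maven code. It covers the wildcard, exact ids and !id exclusions. You can use it to check which repository ids the Aliyun public mirror captures.

For example, an id such as cloudera-releases (used by the flink-shaded profile in 2.1.2) is not excluded by !cloudera, so Maven would redirect it to Aliyun. If CDH artifacts then fail to resolve, excluding that id as well is one option to try. The function name mirror_matches is hypothetical.

```shell
#!/usr/bin/env bash
# Illustrative re-implementation of Maven's <mirrorOf> pattern check.
# Not Maven code; the "external:*" form is deliberately not handled.
# Usage: mirror_matches <repository-id> <mirrorOf-pattern>  (exit 0 = match)
mirror_matches() {
  local repo_id="$1" pattern="$2" result=1 entry
  local -a entries
  # Fast path: a bare wildcard or an exact id.
  if [[ "$pattern" == "*" || "$pattern" == "$repo_id" ]]; then
    return 0
  fi
  # Split on commas without glob expansion of "*".
  IFS=',' read -ra entries <<< "$pattern"
  for entry in "${entries[@]}"; do
    if [[ ${#entry} -gt 1 && "$entry" == '!'* ]]; then
      # "!id" explicitly excludes this repository and stops processing.
      if [[ "${entry:1}" == "$repo_id" ]]; then
        return 1
      fi
    elif [[ "$entry" == "$repo_id" ]]; then
      return 0
    elif [[ "$entry" == "*" ]]; then
      # Wildcard matches, but keep scanning for a later exclusion.
      result=0
    fi
  done
  return "$result"
}

# Usage:
#   mirror_matches cloudera '*,!cloudera' || echo "cloudera bypasses the Aliyun mirror"
```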

1.4 installing Node

Node.js is required to compile the flink-runtime-web module.

# The symlinks below assume the archive is unpacked in /opt/build_flink
mkdir -p /opt/build_flink && cd /opt/build_flink
wget https://nodejs.org/dist/v12.19.0/node-v12.19.0-linux-x64.tar.xz
tar -xvf node-v12.19.0-linux-x64.tar.xz
ln -s /opt/build_flink/node-v12.19.0-linux-x64/bin/npm /usr/local/bin/
ln -s /opt/build_flink/node-v12.19.0-linux-x64/bin/node /usr/local/bin/
node -v
npm -v

2. Flink source code compilation

2.1 flink-shaded

2.1.1 download the flink-shaded package

Choose release-10.0 here. release-9.0 lacks the related common packages needed for the Flink 1.11 integration, and release-11.0 removed the flink-shaded-hadoop-2 module (FLINK-17685).

wget https://github.com/apache/flink-shaded/archive/release-10.0.zip
unzip release-10.0.zip
cd flink-shaded-release-10.0/

2.1.2 modify pom.xml

Add a vendor-repos profile to pom.xml:

vim pom.xml # Add the following <profile> inside <profiles>, just before </profiles>

<profile>

<id>vendor-repos</id>

<activation>

<property>

<name>vendor-repos</name>

</property>

</activation>

<!-- Add vendor maven repositories -->

<repositories>

<!-- Cloudera -->

<repository>

<id>cloudera-releases</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<!-- Hortonworks -->

<repository>

<id>HDPReleases</id>

<name>HDP Releases</name>

<url>https://repo.hortonworks.com/content/repositories/releases/</url>

<snapshots><enabled>false</enabled></snapshots>

<releases><enabled>true</enabled></releases>

</repository>

<repository>

<id>HortonworksJettyHadoop</id>

<name>HDP Jetty</name>

<url>https://repo.hortonworks.com/content/repositories/jetty-hadoop</url>

<snapshots><enabled>false</enabled></snapshots>

<releases><enabled>true</enabled></releases>

</repository>

<!-- MapR -->

<repository>

<id>mapr-releases</id>

<url>https://repository.mapr.com/maven/</url>

<snapshots><enabled>false</enabled></snapshots>

<releases><enabled>true</enabled></releases>

</repository>

</repositories>

</profile>

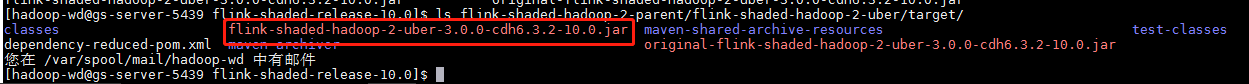

Run the build:

mvn -T4C clean install -DskipTests -Pvendor-repos -Dhadoop.version=3.0.0-cdh6.3.2 -Dscala-2.11 -Drat.skip=true

The generated jar is in the following directory, relative to the current directory: flink-shaded-hadoop-2-parent/flink-shaded-hadoop-2-uber/target/
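The exact file name depends on the Hadoop and flink-shaded versions. Here it should be flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar. A small lookup avoids typos when you copy the jar in 2.2.4.

This is a minimal sketch that assumes it is run from flink-shaded-release-10.0/. find_uber_jar is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the path of the flink-shaded-hadoop-2 uber jar
# produced by the build above, or fail if it is not there.
find_uber_jar() {
  local root="${1:-.}" jar
  jar="$(find "$root/flink-shaded-hadoop-2-parent/flink-shaded-hadoop-2-uber/target" \
           -maxdepth 1 -name 'flink-shaded-hadoop-2-uber-*.jar' \
           ! -name '*-sources.jar' ! -name '*-tests.jar' 2>/dev/null | head -n 1)"
  if [ -z "$jar" ]; then
    echo "uber jar not found under $root" >&2
    return 1
  fi
  echo "$jar"
}

# Usage (inside flink-shaded-release-10.0/):
#   UBER_JAR="$(find_uber_jar .)" && echo "$UBER_JAR"
```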

2.2 flink

2.2.1 download the flink source package

Download the source of the required Flink version (a manual download also works):

wget https://github.com/apache/flink/archive/release-1.12.4.zip

Then decompress

unzip release-1.12.4.zip

2.2.2 modify pom

Modify flink's pom.xml

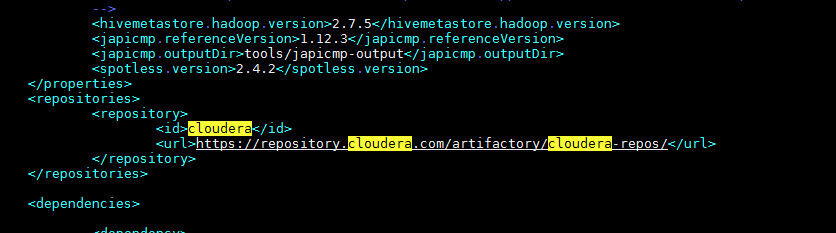

cd flink-release-1.12.4/
vim pom.xml
# Add the following configuration
<repositories>
<repository>
<id>cloudera</id>
<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
</repository>
</repositories>

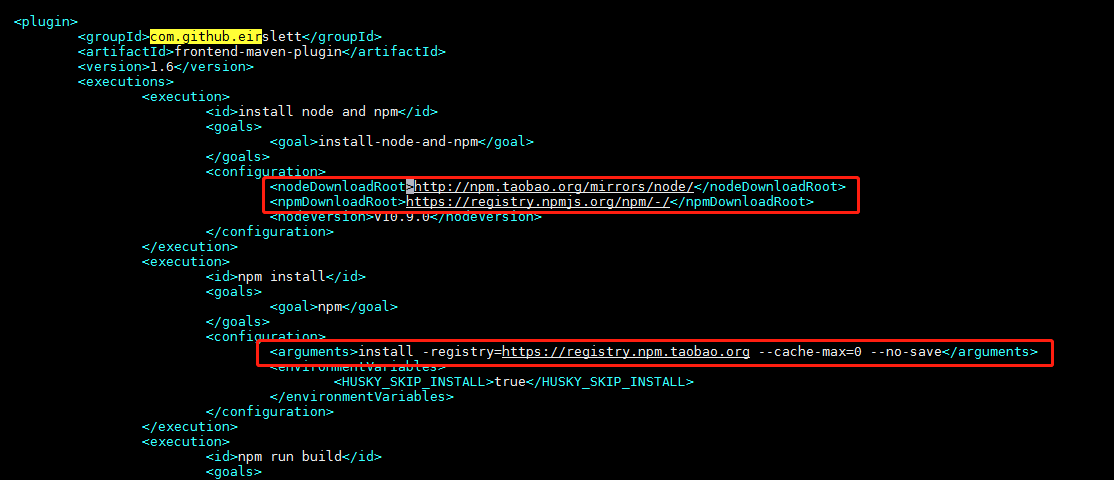

Modify the pom.xml of flink-runtime-web. In the plugin whose groupId is com.github.eirslett (frontend-maven-plugin), change the configuration to the values below.

cd flink-runtime-web

vim pom.xml

<groupId>com.github.eirslett</groupId>

<artifactId>frontend-maven-plugin</artifactId>

<version>1.6</version>

<executions>

<execution>

<id>install node and npm</id>

<goals>

<goal>install-node-and-npm</goal>

</goals>

<configuration>

<nodeDownloadRoot>http://npm.taobao.org/mirrors/node/</nodeDownloadRoot>

<npmDownloadRoot>https://registry.npmjs.org/npm/-/</npmDownloadRoot>

<nodeVersion>v10.9.0</nodeVersion>

</configuration>

</execution>

<execution>

<id>npm install</id>

<goals>

<goal>npm</goal>

</goals>

<configuration>

<arguments>install -registry=https://registry.npm.taobao.org --cache-max=0 --no-save</arguments>

<environmentVariables>

<HUSKY_SKIP_INSTALL>true</HUSKY_SKIP_INSTALL>

</environmentVariables>

</configuration>

</execution>

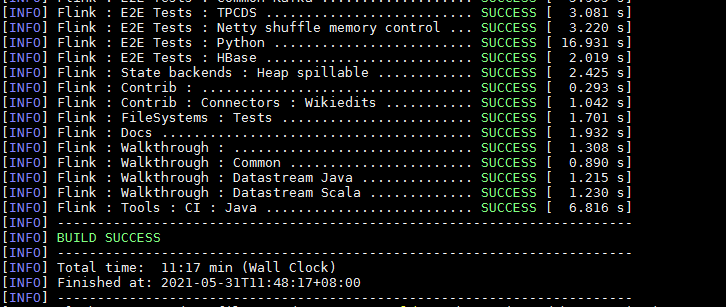

2.2.3 start compilation

cd ../ # Back to flink-release-1.12.4
mvn clean install -DskipTests -Dfast -Drat.skip=true -Dhadoop.version=3.0.0-cdh6.3.2 -Pvendor-repos -Dinclude-hadoop -Dscala-2.11 -T4C

2.2.4 adding dependent packages

Add the following dependency jars to the compiled distribution. Copy them into $BUILD_DIR/flink-release-1.12.4/flink-dist/target/flink-1.12.4-bin/flink-1.12.4/lib, where $BUILD_DIR is the directory in which release-1.12.4.zip was unzipped:

- libfb303-0.9.3.jar (prepare it yourself)
- hive-exec-2.1.1-cdh6.3.2.jar (prepare it yourself)
- flink-connectors/flink-connector-hive/target/flink-connector-hive_2.11-1.12.4.jar (under the flink-release-1.12.4 directory)
- flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar (generated in the previous step)
- flink-libraries/flink-state-processing-api/target/flink-state-processor-api_2.11-1.12.4.jar (under the flink-release-1.12.4 directory)

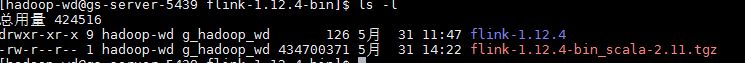
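Copying these jars by hand is easy to get wrong. The sketch below copies each file into lib and reports any missing file instead of failing silently.

copy_deps is a hypothetical helper. BUILD_DIR, SHADED_DIR and the location of the self-prepared jars in the usage comment are assumptions to adapt to your machine.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy jars into a Flink lib directory.
# Continues past missing files, then returns non-zero if any were missing.
copy_deps() {
  local lib_dir="$1"; shift
  local missing=0 jar
  if [ ! -d "$lib_dir" ]; then
    echo "lib directory not found: $lib_dir" >&2
    return 1
  fi
  for jar in "$@"; do
    if [ -f "$jar" ]; then
      cp -v "$jar" "$lib_dir/"
    else
      echo "missing: $jar" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage (paths are assumptions):
#   SRC="$BUILD_DIR/flink-release-1.12.4"
#   LIB="$SRC/flink-dist/target/flink-1.12.4-bin/flink-1.12.4/lib"
#   copy_deps "$LIB" ./libfb303-0.9.3.jar ./hive-exec-2.1.1-cdh6.3.2.jar \
#     "$SRC/flink-connectors/flink-connector-hive/target/flink-connector-hive_2.11-1.12.4.jar" \
#     "$SHADED_DIR/flink-shaded-hadoop-2-parent/flink-shaded-hadoop-2-uber/target/flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar" \
#     "$SRC/flink-libraries/flink-state-processing-api/target/flink-state-processor-api_2.11-1.12.4.jar"
```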

2.2.5 packaging

Package the compiled Flink distribution. The archive is used later to build the Flink parcel.

# $BUILD_DIR is the directory in which release-1.12.4.zip was unzipped
cd $BUILD_DIR/flink-release-1.12.4/flink-dist/target/flink-1.12.4-bin/
tar zvcf flink-1.12.4-bin_scala-2.11.tgz flink-1.12.4/
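Before you hand the archive to the parcel tool, it may be worth confirming that the jars from 2.2.4 actually ended up in it.

This is a minimal sketch based on tar -tzf. tgz_contains is a hypothetical helper, and entries are matched as exact paths inside the archive.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the gzipped tarball contains the exact entry path.
tgz_contains() {
  local tarball="$1" entry="$2"
  # -t lists entries; grep -x requires a whole-line match, -F disables regex.
  tar -tzf "$tarball" | grep -qxF -- "$entry"
}

# Usage (next to the archive created above):
#   for jar in flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar hive-exec-2.1.1-cdh6.3.2.jar; do
#     tgz_contains flink-1.12.4-bin_scala-2.11.tgz "flink-1.12.4/lib/$jar" || echo "missing in archive: $jar"
#   done
```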

3. Building the parcel

3.1 preparation

- Download the Flink parcel packaging tool

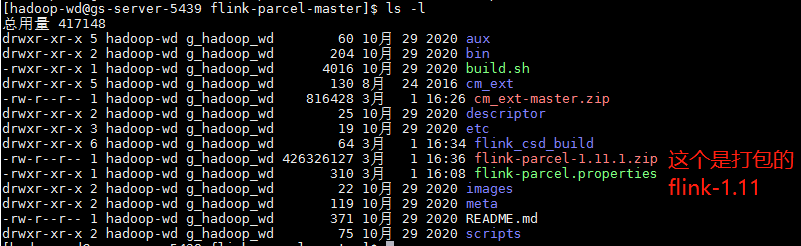

git clone https://github.com/EvenGui/flink-parcel-master
cd flink-parcel-master

- Modify configuration

Before that, copy the Flink package generated in step 2.2.5 into the flink-parcel-master directory.

vim flink-parcel.properties
# Flink download address (here, the local package copied above)
FLINK_URL=./flink-1.12.4-bin_scala-2.11.tgz
# Flink version number
FLINK_VERSION=1.12.4
# Extension version number
EXTENS_VERSION=BIN_SCALA-2.11
# Operating system version, taking CentOS 7 as an example
OS_VERSION=7
# Full CDH version range (minimum and maximum)
CDH_MIN_FULL=5.16.2
CDH_MAX_FULL=6.4
# CDH major version range
CDH_MIN=5
CDH_MAX=6

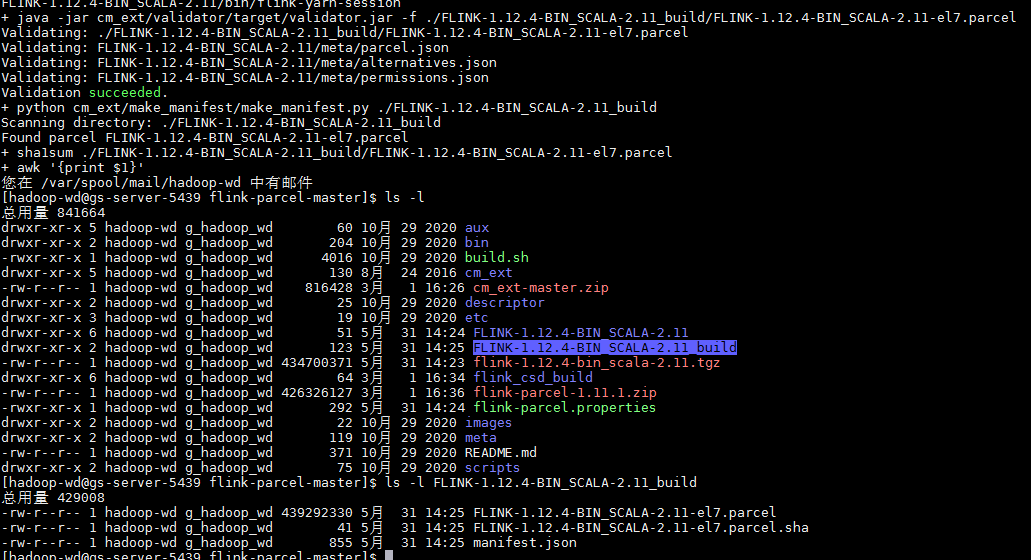
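A typo or stray space in flink-parcel.properties may only surface later as a confusing build failure. The sketch below checks that each key used above has a non-empty value and flags values that start with a space.

The space check matters if the file is read as shell assignments: a line like FLINK_URL= ./x would run ./x with an empty FLINK_URL. We have not verified how build.sh parses the file, so treat this as a hedged pre-flight check. check_props is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for flink-parcel.properties (keys as used above).
check_props() {
  local file="$1" key value status=0
  for key in FLINK_URL FLINK_VERSION EXTENS_VERSION OS_VERSION \
             CDH_MIN_FULL CDH_MAX_FULL CDH_MIN CDH_MAX; do
    # Last "KEY=value" line wins; everything after the first '=' is the value.
    value="$(grep -E "^${key}=" "$file" | tail -n 1 | cut -d= -f2-)"
    if [ -z "$value" ]; then
      echo "missing or empty: $key" >&2
      status=1
    elif [ "${value# }" != "$value" ]; then
      echo "value of $key starts with a space" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage: check_props flink-parcel.properties && sh build.sh parcel
```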

3.2 start making Flink parcel

sh build.sh parcel

Compiled successfully

3.3 compiling csd

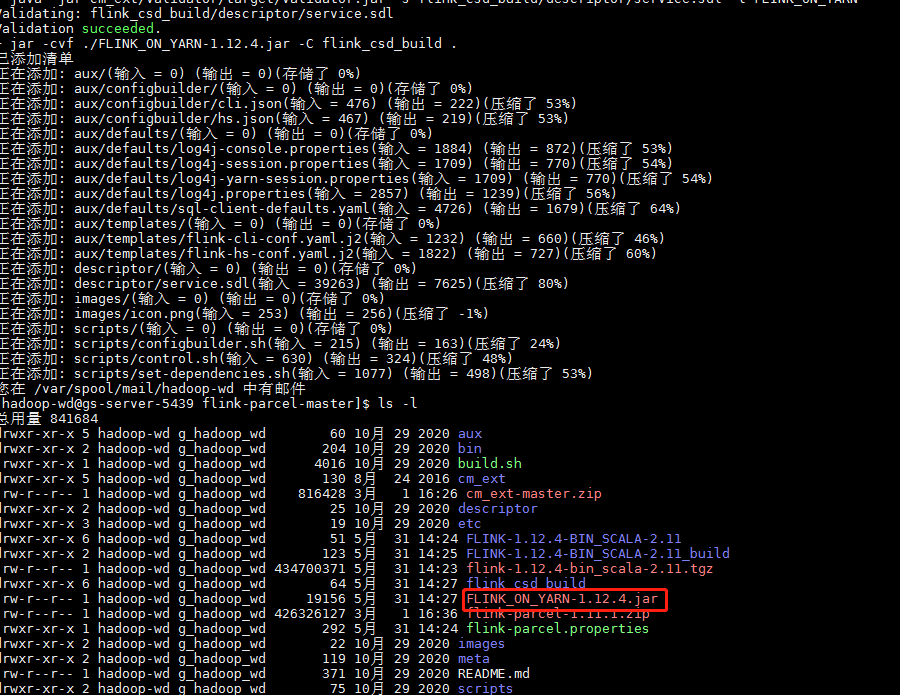

sh build.sh csd

The resulting packages can then be installed and used in the CDH cluster.

3.4 package everything into a zip file

mkdir -p flink-parcel-1.12.4
mv FLINK-1.12.4-BIN_SCALA-2.11_build/* flink-parcel-1.12.4/
mv FLINK_ON_YARN-1.12.4.jar flink-parcel-1.12.4/
zip flink-parcel-1.12.4.zip -r flink-parcel-1.12.4/

4 installation

If this is an upgrade, first take a savepoint of each running job, uninstall the old version from the cluster (omitted here), and then add the new version.

4.1 upload the corresponding parcel package to the cluster

Upload the zip package from step 3.4 to /opt/cloudera/parcel-repo on the CM server node of the CDH cluster (back up the existing manifest.json first), and then unzip it:

unzip flink-parcel-1.12.4.zip

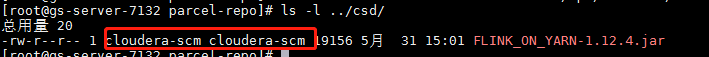

Move the extracted Flink parcel files into /opt/cloudera/parcel-repo and move FLINK_ON_YARN-1.12.4.jar to /opt/cloudera/csd, then fix the ownership:

chown cloudera-scm:cloudera-scm -R /opt/cloudera/parcel-repo
chown cloudera-scm:cloudera-scm -R /opt/cloudera/csd

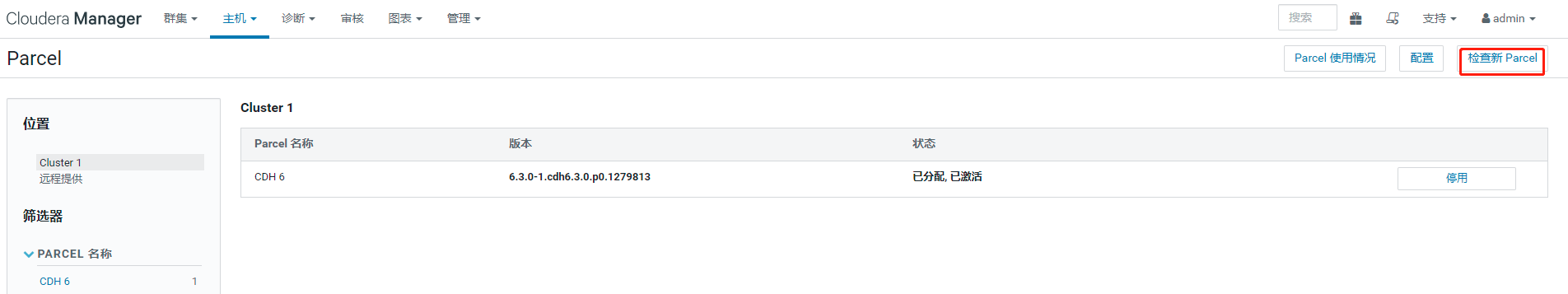

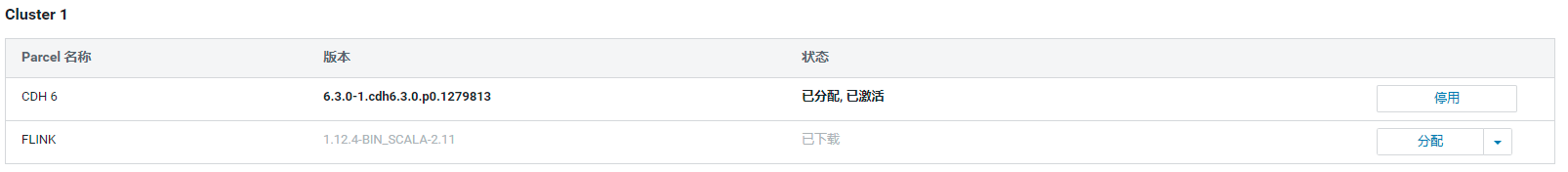
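As far as we know, Cloudera Manager's local parcel repository expects each .parcel file to sit next to a .parcel.sha file containing its SHA-1 hash, and it will not accept a parcel whose hash does not match.

The following quick check can be run before you switch to the CM UI. It assumes the build output contains such .sha files. verify_parcel_sha is a hypothetical helper, and sha1sum comes from GNU coreutils.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare a parcel's SHA-1 with the hash in <parcel>.sha.
verify_parcel_sha() {
  local parcel="$1" sha_file="${1}.sha" expected actual
  [ -f "$parcel" ] || { echo "no such parcel: $parcel" >&2; return 1; }
  [ -f "$sha_file" ] || { echo "no .sha file next to $parcel" >&2; return 1; }
  # Strip whitespace/newlines from the stored hash before comparing.
  expected="$(tr -d '[:space:]' < "$sha_file")"
  actual="$(sha1sum "$parcel" | cut -d' ' -f1)"
  if [ "$expected" != "$actual" ]; then
    echo "hash mismatch for $parcel" >&2
    return 1
  fi
  echo "ok: $parcel"
}

# Usage:
#   for p in /opt/cloudera/parcel-repo/FLINK-*.parcel; do verify_parcel_sha "$p"; done
```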

4.2 activate parcel package

Perform the following in the Cloudera Manager web UI:

- Check for new parcels

- Distribute, then activate

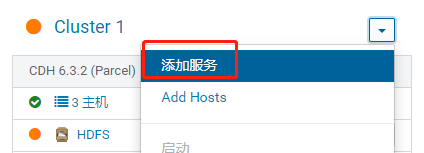

4.3 new services

- The CM server must be restarted before adding the service, so that it loads the new CSD

Execute on CM server machine:

systemctl restart cloudera-scm-server

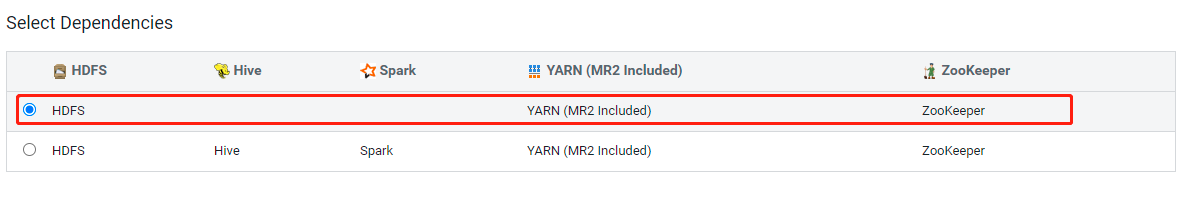

- Add service

Then select Flink and click Continue. Hive is configured later, so bind the non-Hive version here.

Customize role assignments

Assign the Dashboard role to one machine and the Gateway role to all machines, then click Continue.

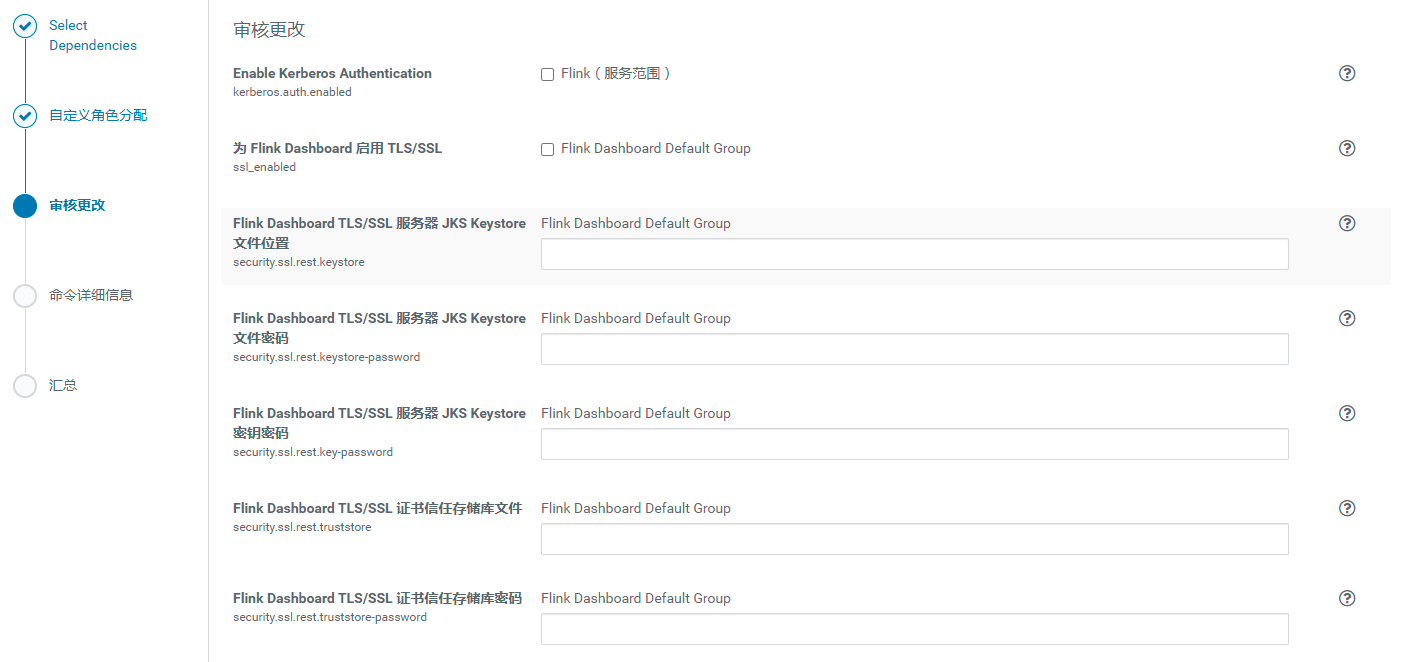

Review the changes and click continue

Then click start to finish.

4.4 configuration adjustment

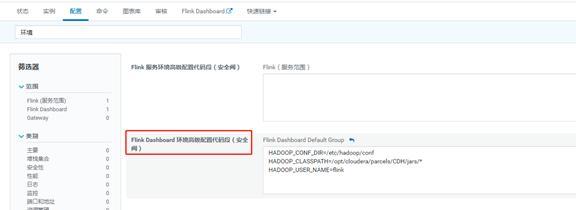

- Configure environment variables

The environment variables of this component need to be configured.

Return to the CM home page, select the Flink-yarn service, open Configuration, search for "environment", and add the following configuration:

HADOOP_CONF_DIR=/etc/hadoop/conf
HADOOP_CLASSPATH=/opt/cloudera/parcels/CDH/jars/*
HADOOP_USER_NAME=flink

-

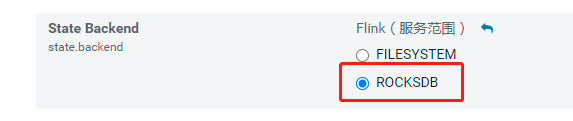

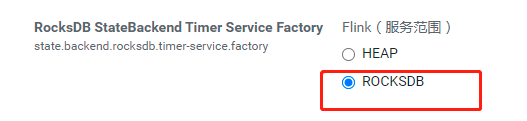

Configure statebackend


- Keytab

If Kerberos authentication is not enabled, clear the value of this setting.

- Resource allocation

The default configuration is used here; adjust it to your actual situation if necessary. The specific resources of each job are set when the job is submitted.

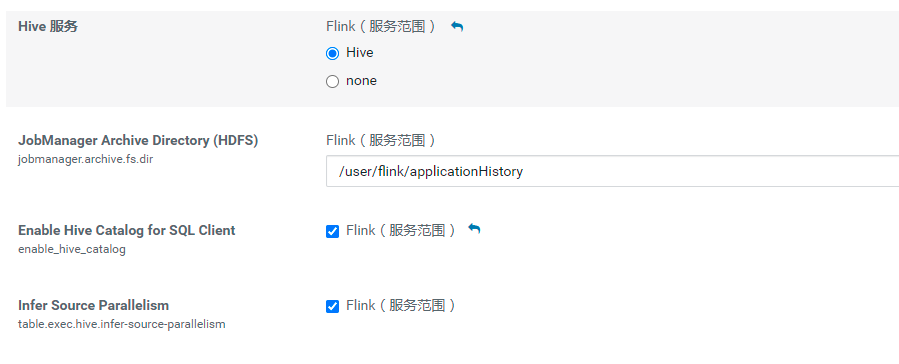

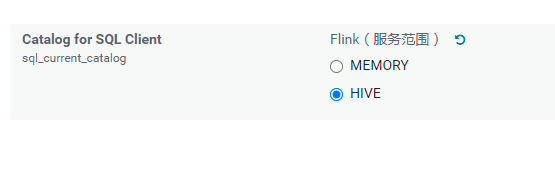
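Because resources are chosen per job at submission time, one way to set them with the Flink 1.12 CLI is to pass a deployment target plus -D configuration overrides.

The sketch below wraps these options in a hypothetical helper and assumes per-job mode on YARN. The memory sizes and slot count are example values, not recommendations.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print per-job resource options for "flink run", one per line,
# so they can be loaded into an array without word-splitting surprises.
flink_resource_opts() {
  local jm_mem="$1" tm_mem="$2" slots="$3"
  printf '%s\n' \
    -t yarn-per-job \
    "-Djobmanager.memory.process.size=${jm_mem}" \
    "-Dtaskmanager.memory.process.size=${tm_mem}" \
    "-Dtaskmanager.numberOfTaskSlots=${slots}"
}

# Usage (example values; run from /opt/cloudera/parcels/FLINK/lib/flink):
#   mapfile -t opts < <(flink_resource_opts 1024m 2048m 2)
#   ./bin/flink run "${opts[@]}" examples/batch/WordCount.jar
```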

4.4.1 configure hive

5 test

- Configure execution environment (all machines)

vi /etc/profile
# Add the following
export HADOOP_CONF_DIR=/etc/hadoop/conf
export HADOOP_CLASSPATH=/opt/cloudera/parcels/CDH/jars/*
export HADOOP_USER_NAME=flink
# After adding, run the following command for the change to take effect
source /etc/profile
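These lines must be added on every machine, and rerunning the step would otherwise duplicate them, so an idempotent append is convenient.

This is a minimal sketch. append_once is a hypothetical helper that you would run on each host as root, for example over ssh.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line is absent.
append_once() {
  local file="$1" line="$2"
  touch "$file"
  # If the file does not end with a newline, add one so lines do not merge.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage on each machine (as root):
#   append_once /etc/profile 'export HADOOP_CONF_DIR=/etc/hadoop/conf'
#   append_once /etc/profile 'export HADOOP_CLASSPATH=/opt/cloudera/parcels/CDH/jars/*'
#   append_once /etc/profile 'export HADOOP_USER_NAME=flink'
#   source /etc/profile
```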

- test

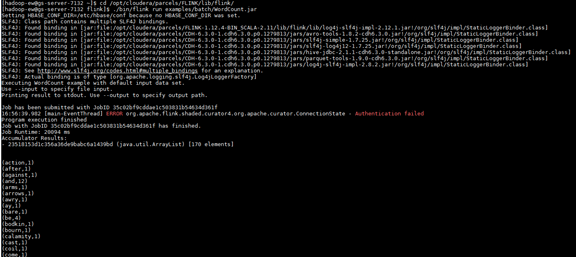

Execute a wordcount:

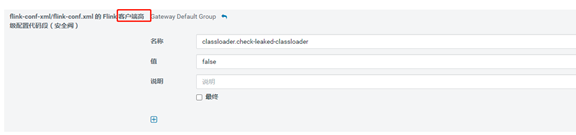

cd /opt/cloudera/parcels/FLINK/lib/flink/
# Execute the word count test
./bin/flink run -Dclassloader.check-leaked-classloader=false examples/batch/WordCount.jar

After submission, the output is as follows:

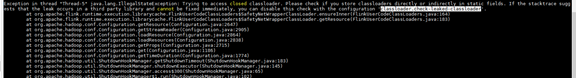

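If a similar exception appears at the end of the test:

Set classloader.check-leaked-classloader: false in the Flink configuration, or pass -Dclassloader.check-leaked-classloader=false on the command line, as in the command above.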
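To set the option once instead of on every command line, add classloader.check-leaked-classloader: false to flink-conf.yaml.

In this CM-managed setup, CM may generate the file from the service configuration, so a manual edit could be overwritten. The sketch below is therefore best suited to a client-side copy of the file, and the path in the usage comment is an assumption. set_flink_conf is a hypothetical helper that relies on GNU sed -i.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "key: value" in a flat flink-conf.yaml,
# replacing an existing entry for the key or appending a new one.
set_flink_conf() {
  local conf="$1" key="$2" value="$3" key_re
  # Escape dots so the key is matched literally in the regexes below.
  key_re="$(printf '%s' "$key" | sed 's/[.]/\\./g')"
  touch "$conf"
  if grep -qE "^[[:space:]]*${key_re}[[:space:]]*:" "$conf"; then
    sed -i -E "s|^[[:space:]]*${key_re}[[:space:]]*:.*|${key}: ${value}|" "$conf"
  else
    printf '%s: %s\n' "$key" "$value" >> "$conf"
  fi
}

# Usage (path is an assumption; adjust to your client configuration directory):
#   set_flink_conf /path/to/flink-conf.yaml classloader.check-leaked-classloader false
```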