Contents

1. Introduction to Broadcast State

2. Requirements - realize dynamic update of configuration

0. Links to related articles

1. Introduction to Broadcast State

During development, when low-throughput events such as configuration or rules must be distributed (broadcast) to all downstream tasks, Broadcast State can be used. Broadcast State was introduced in Flink 1.5.

Each downstream task receives these configurations and rules, stores them as Broadcast State, and applies them when processing the elements of another data stream.

Scenario example:

- Dynamically update calculation rules: if the event stream must be evaluated against the latest rules, the rules can be broadcast to the downstream tasks as broadcast state.

- Add additional fields in real time: if the events must be enriched with the user's basic information in real time, that information can be broadcast to the downstream tasks as broadcast state.

API introduction:

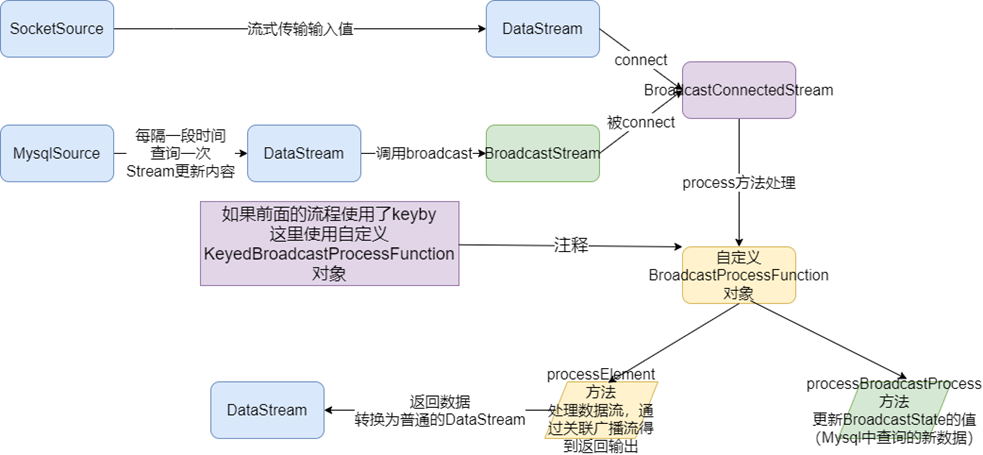

First create a keyed or non-keyed DataStream, then create a BroadcastStream, and finally connect the DataStream to the BroadcastStream by calling connect on the DataStream. The Broadcast State is thereby broadcast to every task downstream of the DataStream.
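To make the delivery semantics concrete, here is a minimal plain-Java model (not Flink code). The names `BroadcastModel`, `Task`, `broadcast` and `process` are hypothetical, and the hash-based routing is only an assumption standing in for whatever partitioning the real job uses. It shows that a broadcast element reaches every parallel task, while a regular element is handled by exactly one task that reads its local copy.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java model of broadcast delivery; this does not use the Flink API. */
class BroadcastModel {

    /** One parallel task holding its own local copy of the broadcast state. */
    static final class Task {
        final Map<String, String> state = new HashMap<>();
    }

    final List<Task> tasks = new ArrayList<>();

    BroadcastModel(int parallelism) {
        for (int i = 0; i < parallelism; i++) {
            tasks.add(new Task());
        }
    }

    /** A broadcast element is delivered to every task, which updates its local copy. */
    void broadcast(String key, String value) {
        for (Task task : tasks) {
            task.state.put(key, value);
        }
    }

    /** A regular element is routed to exactly one task, which only reads its local copy. */
    String process(String key) {
        Task task = tasks.get(Math.floorMod(key.hashCode(), tasks.size()));
        return key + "=" + task.state.get(key);
    }
}
```

Because every task holds the same entries, the result of `process` does not depend on which task an element happens to be routed to.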

Case 1:

If the DataStream is a keyed stream, use KeyedBroadcastProcessFunction as the processing function after connecting to the BroadcastStream. The API of KeyedBroadcastProcessFunction is as follows:

public abstract class KeyedBroadcastProcessFunction<KS, IN1, IN2, OUT> extends BaseBroadcastProcessFunction {

public abstract void processElement(final IN1 value, final ReadOnlyContext ctx, final Collector<OUT> out) throws Exception;

public abstract void processBroadcastElement(final IN2 value, final Context ctx, final Collector<OUT> out) throws Exception;

}

The generic type parameters above have the following meanings:

- KS: the type of the key used when the Flink program calls keyBy on the upstream stream to build the keyed stream;

- IN1: the type of the records in the non-broadcast data stream;

- IN2: the type of the records in the broadcast stream;

- OUT: the type of the records emitted by the processElement() and processBroadcastElement() methods of KeyedBroadcastProcessFunction.

Case 2:

If the DataStream is a non-keyed stream, use BroadcastProcessFunction as the processing function after connecting to the BroadcastStream. The API of BroadcastProcessFunction is as follows:

public abstract class BroadcastProcessFunction<IN1, IN2, OUT> extends BaseBroadcastProcessFunction {

public abstract void processElement(final IN1 value, final ReadOnlyContext ctx, final Collector<OUT> out) throws Exception;

public abstract void processBroadcastElement(final IN2 value, final Context ctx, final Collector<OUT> out) throws Exception;

}

The generic type parameters have the same meanings as the last three type parameters of KeyedBroadcastProcessFunction. KS is not needed because keyBy is not called to partition the original stream.

The remainder of this article shows how to use these functions in practice. The example in section 4 uses BroadcastProcessFunction, because its event stream is not keyed.

Notes:

- Broadcast State is always a map (key-value) state, described by a MapStateDescriptor.

- Broadcast State can be modified only on the broadcast side, that is, in the processBroadcastElement method of BroadcastProcessFunction or KeyedBroadcastProcessFunction. On the non-broadcast side, that is, in the processElement method of either function, it is read-only.

- Broadcast elements may reach the tasks in different orders, so take care with any processing that depends on the arrival order of broadcast elements.

- During a checkpoint, every task checkpoints its own copy of the Broadcast State.

- Broadcast State is kept in memory at runtime; at present it cannot be stored in the RocksDB state backend.
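Two of the notes above can be illustrated with a small plain-Java sketch (not Flink code; `BroadcastNotes`, `apply` and `writeRejected` are hypothetical names). It assumes a last-write-wins update rule, and uses `Collections.unmodifiableMap` merely as a stand-in for the read-only view that the non-broadcast side receives.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java illustration of the ordering and read-only notes; not Flink code. */
class BroadcastNotes {

    /** Applies {key, value} updates in arrival order; the last write for a key wins. */
    static Map<String, Integer> apply(List<String[]> updates) {
        Map<String, Integer> state = new HashMap<>();
        for (String[] update : updates) {
            state.put(update[0], Integer.parseInt(update[1]));
        }
        return state;
    }

    /** Returns true if a write through the read-only view is rejected. */
    static boolean writeRejected(Map<String, Integer> state) {
        Map<String, Integer> readOnly = Collections.unmodifiableMap(state);
        try {
            readOnly.put("x", 1);
            return false;
        } catch (UnsupportedOperationException e) {
            return true;
        }
    }
}
```

If one task receives the updates `threshold=10, threshold=20` and another receives them as `threshold=20, threshold=10`, `apply` leaves the two tasks with different values. This is why update logic that depends on arrival order should be avoided.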

2. Requirements - realize dynamic update of configuration

Keep only the events of users that appear in the configuration, and enrich those events with the users' basic information in real time.

1. Event stream: each record states that a user browsed or clicked a product at a certain time. The format is as follows:

{"userID": "user_3", "eventTime": "2019-08-17 12:19:47", "eventType": "browse", "productID": 1}

{"userID": "user_2", "eventTime": "2019-08-17 12:19:48", "eventType": "click", "productID": 1}

2. Configuration data: the details of each user, stored in MySQL as follows:

DROP TABLE IF EXISTS `user_info`;

CREATE TABLE `user_info` (

`userID` varchar(20) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`userName` varchar(10) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,

`userAge` int(11) NULL DEFAULT NULL,

PRIMARY KEY (`userID`) USING BTREE

) ENGINE = MyISAM CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of user_info

-- ----------------------------

INSERT INTO `user_info` VALUES ('user_1', 'Zhang San', 10);

INSERT INTO `user_info` VALUES ('user_2', 'Li Si', 20);

INSERT INTO `user_info` VALUES ('user_3', 'Wang Wu', 30);

INSERT INTO `user_info` VALUES ('user_4', 'Zhao Liu', 40);

SET FOREIGN_KEY_CHECKS = 1;

3. Output results

(user_3,2019-08-17 12:19:47,browse,1,Wang Wu,30)

(user_2,2019-08-17 12:19:48,click,1,Li Si,20)
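The mapping from an event plus the configuration to one output record can be sketched in plain Java as follows. This is only an illustration of the requirement, not the Flink job itself. `EnrichSketch`, `UserInfo` and `enrich` are hypothetical names, and the sketch assumes that events of users missing from the configuration are dropped.

```java
import java.util.Map;
import java.util.Optional;

/** Plain-Java sketch of the enrichment rule; not Flink code. */
class EnrichSketch {

    /** Hypothetical holder for the userName and userAge columns of one user_info row. */
    static final class UserInfo {
        final String name;
        final int age;

        UserInfo(String name, int age) {
            this.name = name;
            this.age = age;
        }
    }

    /** Returns the enriched record, or empty when the user is not in the configuration. */
    static Optional<String> enrich(String userId, String eventTime, String eventType,
                                   int productId, Map<String, UserInfo> config) {
        UserInfo info = config.get(userId);
        if (info == null) {
            return Optional.empty(); // user not configured: drop the event
        }
        String joined = String.join(",", userId, eventTime, eventType,
                String.valueOf(productId), info.name, String.valueOf(info.age));
        return Optional.of("(" + joined + ")");
    }
}
```

With the `user_info` rows listed above, the `user_3` browse event is enriched with `Wang Wu` and age `30` taken from the table.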

3. Coding steps

1. env

2. source

2.1. Build the real-time event stream - custom random source

<userID, eventTime, eventType, productID>

2.2. Build the configuration stream - from MySQL

<userID, <userName, userAge>>

3. transformation

3.1. Define state descriptor

MapStateDescriptor<Void, Map<String, Tuple2<String, Integer>>> descriptor =

new MapStateDescriptor<>("config",Types.VOID, Types.MAP(Types.STRING, Types.TUPLE(Types.STRING, Types.INT)));

3.2. Broadcast configuration flow

BroadcastStream<Map<String, Tuple2<String, Integer>>> broadcastDS = configDS.broadcast(descriptor);

3.3. Connect the event stream to the broadcast stream

BroadcastConnectedStream<Tuple4<String, String, String, Integer>, Map<String, Tuple2<String, Integer>>> connectDS =eventDS.connect(broadcastDS);

3.4. Process the connected streams - complete the user information in the event stream according to the configuration stream

4. sink

5. execute
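The descriptor in step 3.1 uses Void as the key type, so the state holds a single entry under the null key whose value is the whole configuration map. A minimal plain-Java model of that layout is sketched below. It is not Flink code: `SnapshotState`, `onSnapshot` and `lookup` are hypothetical names, and the defensive copy is an extra assumption that the steps above do not include.

```java
import java.util.HashMap;
import java.util.Map;

/** Plain-Java model of "one null-keyed entry holds the whole snapshot"; not Flink code. */
class SnapshotState {

    // Mirrors the shape MapStateDescriptor<Void, Map<...>>: at most one entry, stored under the null key.
    private final Map<Void, Map<String, String>> state = new HashMap<>();

    /** Replaces the previous snapshot entirely, so users deleted upstream disappear. */
    void onSnapshot(Map<String, String> latest) {
        state.clear();
        state.put(null, new HashMap<>(latest)); // defensive copy (assumption of this sketch)
    }

    /** Returns the value for the user, or null if there is no snapshot or no such user. */
    String lookup(String userId) {
        Map<String, String> snapshot = state.get(null);
        return snapshot == null ? null : snapshot.get(userId);
    }
}
```

Replacing the whole snapshot keeps the update logic simple and removes deleted users automatically, at the cost of re-sending the full table on every refresh. That trade-off is reasonable only while the configuration stays small.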

4. Code implementation

import org.apache.flink.api.common.state.BroadcastState;

import org.apache.flink.api.common.state.MapStateDescriptor;

import org.apache.flink.api.common.state.ReadOnlyBroadcastState;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.api.java.tuple.Tuple4;

import org.apache.flink.api.java.tuple.Tuple6;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.BroadcastConnectedStream;

import org.apache.flink.streaming.api.datastream.BroadcastStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.BroadcastProcessFunction;

import org.apache.flink.streaming.api.functions.source.RichSourceFunction;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

import org.apache.flink.util.Collector;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.HashMap;

import java.util.Map;

import java.util.Random;

/**

* Requirement:

* Use Flink's Broadcast State to join the event stream with the configuration stream

* (which is broadcast as state), so that configuration updates take effect dynamically.

*/

public class BroadcastStateConfigUpdate {

public static void main(String[] args) throws Exception{

//1.env

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

//2.source

//-1. Build a real-time user-defined random data event flow - data will be generated continuously and the amount will be large

//<userID, eventTime, eventType, productID>

DataStreamSource<Tuple4<String, String, String, Integer>> eventDS = env.addSource(new MySource());

//-2. Build configuration flow - regularly query the latest data from MySQL, with a small amount of data

//< user ID, < name, age > >

DataStreamSource<Map<String, Tuple2<String, Integer>>> configDS = env.addSource(new MySQLSource());

//3.transformation

//-1. Define the state descriptor - prepare to broadcast the configuration flow as a state

MapStateDescriptor<Void, Map<String, Tuple2<String, Integer>>> descriptor =

new MapStateDescriptor<>("config", Types.VOID, Types.MAP(Types.STRING, Types.TUPLE(Types.STRING, Types.INT)));

//-2. Broadcast the configuration flow according to the state descriptor and turn it into a broadcast state flow

BroadcastStream<Map<String, Tuple2<String, Integer>>> broadcastDS = configDS.broadcast(descriptor);

//-3. Connect the event stream with the broadcast stream

BroadcastConnectedStream<Tuple4<String, String, String, Integer>, Map<String, Tuple2<String, Integer>>> connectDS =eventDS.connect(broadcastDS);

//-4. Process the connected stream - complete the user information in the event stream according to the configured stream

SingleOutputStreamOperator<Tuple6<String, String, String, Integer, String, Integer>> result = connectDS

//BroadcastProcessFunction<IN1, IN2, OUT>

.process(new BroadcastProcessFunction<

//<userID, eventTime, eventType, productID>: event stream

Tuple4<String, String, String, Integer>,

//<userID, <userName, userAge>>: broadcast stream

Map<String, Tuple2<String, Integer>>,

//<userID, eventTime, eventType, productID, userName, userAge>: output records

Tuple6<String, String, String, Integer, String, Integer>>() {

//Handling elements in an event stream

@Override

public void processElement(Tuple4<String, String, String, Integer> value, ReadOnlyContext ctx, Collector<Tuple6<String, String, String, Integer, String, Integer>> out) throws Exception {

//Retrieve the userId from the event stream

String userId = value.f0;

//Obtain the broadcast status according to the status descriptor

ReadOnlyBroadcastState<Void, Map<String, Tuple2<String, Integer>>> broadcastState = ctx.getBroadcastState(descriptor);

if (broadcastState != null) {

//Take out the map in the broadcast status, < user ID, < name, age > >

Map<String, Tuple2<String, Integer>> map = broadcastState.get(null);

if (map != null) {

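//Get <name, age>; the result is null when the user is not in the configuration

Tuple2<String, Integer> tuple2 = map.get(userId);

//Emit only events whose user exists in the configuration (avoids a NullPointerException)

if (tuple2 != null) {

//Take out the name and age in tuple2

String userName = tuple2.f0;

Integer userAge = tuple2.f1;

out.collect(Tuple6.of(userId, value.f1, value.f2, value.f3, userName, userAge));

}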

}

}

}

//Handling elements in broadcast streams

@Override

public void processBroadcastElement(Map<String, Tuple2<String, Integer>> value, Context ctx, Collector<Tuple6<String, String, String, Integer, String, Integer>> out) throws Exception {

//value is the latest map data obtained from MySQLSource at regular intervals

//First, obtain the historical broadcast status according to the status descriptor

BroadcastState<Void, Map<String, Tuple2<String, Integer>>> broadcastState = ctx.getBroadcastState(descriptor);

//Then clear the historical status data

broadcastState.clear();

//Finally, put the latest broadcast stream data into state (update status data)

broadcastState.put(null, value);

}

});

//4.sink

result.print();

//5.execute

env.execute();

}

/**

* <userID, eventTime, eventType, productID>

*/

public static class MySource implements SourceFunction<Tuple4<String, String, String, Integer>>{

//volatile: cancel() is called from a different thread than run()

private volatile boolean isRunning = true;

@Override

public void run(SourceContext<Tuple4<String, String, String, Integer>> ctx) throws Exception {

Random random = new Random();

SimpleDateFormat df = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

while (isRunning){

int id = random.nextInt(4) + 1;

String user_id = "user_" + id;

String eventTime = df.format(new Date());

String eventType = "type_" + random.nextInt(3);

int productId = random.nextInt(4);

ctx.collect(Tuple4.of(user_id,eventTime,eventType,productId));

Thread.sleep(500);

}

}

@Override

public void cancel() {

isRunning = false;

}

}

/**

* <User ID, < name, age > >

*/

public static class MySQLSource extends RichSourceFunction<Map<String, Tuple2<String, Integer>>> {

//volatile: cancel() is called from a different thread than run()

private volatile boolean flag = true;

private Connection conn = null;

private PreparedStatement ps = null;

private ResultSet rs = null;

@Override

public void open(Configuration parameters) throws Exception {

conn = DriverManager.getConnection("jdbc:mysql://localhost:3306/bigdata", "root", "root");

String sql = "select `userID`, `userName`, `userAge` from `user_info`";

ps = conn.prepareStatement(sql);

}

@Override

public void run(SourceContext<Map<String, Tuple2<String, Integer>>> ctx) throws Exception {

while (flag){

Map<String, Tuple2<String, Integer>> map = new HashMap<>();

//Assign the field (not a shadowing local variable) so that close() can release the result set

rs = ps.executeQuery();

while (rs.next()){

String userID = rs.getString("userID");

String userName = rs.getString("userName");

int userAge = rs.getInt("userAge");

//Map<String, Tuple2<String, Integer>>

map.put(userID,Tuple2.of(userName,userAge));

}

ctx.collect(map);

Thread.sleep(5000);//Update the user's configuration information every 5s!

}

}

@Override

public void cancel() {

flag = false;

}

@Override

public void close() throws Exception {

//Close in reverse order of creation: result set, statement, connection

if (rs != null) rs.close();

if (ps != null) ps.close();

if (conn != null) conn.close();

}

}

}

Note: this blog is adapted from the 2020 New Year video of a horse -> Website of station B

Note: links to other related articles go here -> Flink article summary