1. General

Reprint: Flink source code reading notes (6) - Computing Resource Management

In Flink, computing resources are allocated with the slot as the basic unit. This article analyzes how Flink manages computing resources.

2. Basic concept of task slot

In the previous article, we learned about the startup process of a Flink cluster. In a Flink cluster, each TaskManager is a separate JVM process (except in MiniCluster mode), and multiple subtasks may run in one TaskManager, each in its own thread. To control the number of tasks that can run in a TaskManager, Flink introduces the concept of the Task Slot.

Each Task Slot represents a fixed subset of the computing resources owned by a TaskManager. For example, a TaskManager with three slots gives 1/3 of its memory to each slot. In this way, subtasks running in different slots do not compete for memory. At present, Flink does not support CPU isolation, only memory isolation.

By adjusting the number of slots, you can control how strongly subtasks are isolated from each other. If each TaskManager has only one slot, each group of subtasks runs in a separate JVM process. If each TaskManager has multiple slots, more subtasks run in the same JVM. Subtasks in the same JVM process can share TCP connections and heartbeat messages, reduce the amount of data sent over the network, and share some data structures, which reduces the overhead of each subtask to a certain extent.

By default, Flink allows subtasks to share slots, provided that they belong to the same Job and are not subtasks of the same operator. As a result, a complete pipeline of the Job may run in a single slot. Allowing slot sharing has two main benefits:

- When Flink calculates the number of slots required for a Job, it only needs to determine the Job's maximum parallelism, without considering the parallelism of each individual task;

- Resources are used more efficiently. Without slot sharing, subtasks with small resource requirements would occupy just as much as subtasks with large resource requirements. Once slot sharing is allowed, both kinds may be assigned to the same slot.
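The first benefit can be illustrated with a small calculation. The following is a minimal sketch rather than Flink code: the class `SlotCountSketch` and its methods are hypothetical, and it assumes that all tasks of the Job belong to a single slot sharing group.

```java
import java.util.List;

// Hypothetical helper, not part of Flink: estimates how many slots a job needs.
final class SlotCountSketch {

    // With slot sharing (all tasks in one group), one slot can hold one
    // subtask of every task, so the job needs as many slots as its
    // highest task parallelism.
    static int slotsWithSharing(List<Integer> taskParallelisms) {
        int max = 0;
        for (int p : taskParallelisms) {
            max = Math.max(max, p);
        }
        return max;
    }

    // Without slot sharing every subtask occupies its own slot, so the
    // parallelisms add up.
    static int slotsWithoutSharing(List<Integer> taskParallelisms) {
        int sum = 0;
        for (int p : taskParallelisms) {
            sum += p;
        }
        return sum;
    }
}
```

For a Job whose three tasks have parallelism 2, 4 and 1, the sketch yields 4 slots with sharing and 7 slots without it.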

Flink uses SlotSharingGroup and CoLocationGroup to decide how resources are shared when scheduling tasks. They correspond to two constraints:

- SlotSharingGroup: subtasks of different JobVertices in the same SlotSharingGroup can be assigned to the same slot, but this is not guaranteed;

- CoLocationGroup: the n-th subtasks of the different JobVertices in the same CoLocationGroup must run in the same slot. This is a mandatory constraint.

3. Slot management in TaskExecutor

3.1 TaskSlot

First, let's take a look at how slots are managed in TaskManager, that is, TaskExecutor.

SlotID is the unique identifier of a slot and contains two attributes: the ResourceID identifies the TaskExecutor that owns the slot, and the slot number is the index of the slot within that TaskExecutor.
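The structure of such an identifier can be sketched as a small value class. This is an illustration only: `SlotIdSketch` is a hypothetical name, and the ResourceID is reduced to a plain String here.

```java
import java.util.Objects;

// Simplified stand-in for a slot identifier; not Flink's SlotID class.
final class SlotIdSketch {
    private final String resourceId; // identifies the owning TaskExecutor
    private final int slotNumber;    // index of the slot inside that TaskExecutor

    SlotIdSketch(String resourceId, int slotNumber) {
        this.resourceId = Objects.requireNonNull(resourceId);
        this.slotNumber = slotNumber;
    }

    String getResourceId() { return resourceId; }

    int getSlotNumber() { return slotNumber; }

    // Two identifiers are equal only if both attributes match, which makes
    // the class usable as a key in hash maps.
    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof SlotIdSketch)) return false;
        SlotIdSketch other = (SlotIdSketch) o;
        return slotNumber == other.slotNumber && resourceId.equals(other.resourceId);
    }

    @Override
    public int hashCode() {
        return Objects.hash(resourceId, slotNumber);
    }
}
```

Because equality covers both attributes, slot 0 of one TaskExecutor and slot 0 of another are different keys, which is what a cluster-wide slot map needs.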

TaskSlot is the abstraction of a slot in the TaskExecutor. It can be in one of four states: Free, Releasing, Allocated and Active. Its main properties are as follows:

    class TaskSlot {
        /** Index of the task slot. */
        private final int index;

        /** State of this slot. */
        private TaskSlotState state;

        /** Resource characteristics for this slot. */
        private final ResourceProfile resourceProfile;

        /** Tasks running in this slot. */
        //Multiple tasks may be executed in a Slot
        private final Map<ExecutionAttemptID, Task> tasks;

        /** Job id to which the slot has been allocated; null if not allocated. */
        private JobID jobId;

        /** Allocation id of this slot; null if not allocated. */
        private AllocationID allocationId;
    }

TaskSlot provides methods to change its state. For example, allocate(JobID newJobId, AllocationID newAllocationId) marks the slot as Allocated, and markFree() marks the slot as Free, which only succeeds after all tasks have been removed. Before switching states, a slot first checks its current state. In addition, you can add a task to the slot through add(Task task); all tasks in a slot must come from the same Job.
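The state transitions described above can be sketched as a small state machine. This is a simplified illustration, not Flink's TaskSlot: the class name `TaskSlotSketch` is hypothetical, IDs are plain Strings, and folding the switch to Releasing into `markFree()` is an assumption made to keep the sketch short.

```java
import java.util.HashSet;
import java.util.Set;

// Simplified sketch of a slot state machine; not Flink's TaskSlot.
final class TaskSlotSketch {
    enum State { FREE, ALLOCATED, ACTIVE, RELEASING }

    private State state = State.FREE;
    private String jobId;
    private String allocationId;
    private final Set<String> tasks = new HashSet<>();

    State getState() { return state; }

    // FREE -> ALLOCATED. Repeating the same allocation counts as success.
    boolean allocate(String newJobId, String newAllocationId) {
        if (state == State.FREE) {
            jobId = newJobId;
            allocationId = newAllocationId;
            state = State.ALLOCATED;
            return true;
        }
        if (state == State.ALLOCATED || state == State.ACTIVE) {
            return newJobId.equals(jobId) && newAllocationId.equals(allocationId);
        }
        return false;
    }

    // ALLOCATED -> ACTIVE; fails for FREE and RELEASING slots.
    boolean markActive() {
        if (state == State.ALLOCATED || state == State.ACTIVE) {
            state = State.ACTIVE;
            return true;
        }
        return false;
    }

    // Tasks are accepted only while ACTIVE and only from the owning job.
    boolean add(String taskId, String taskJobId) {
        if (state != State.ACTIVE || !taskJobId.equals(jobId)) {
            return false;
        }
        return tasks.add(taskId);
    }

    boolean remove(String taskId) { return tasks.remove(taskId); }

    // The slot becomes FREE only when it is empty; otherwise it is parked
    // in RELEASING until the remaining tasks are removed (sketch assumption).
    boolean markFree() {
        if (tasks.isEmpty()) {
            state = State.FREE;
            jobId = null;
            allocationId = null;
            return true;
        }
        state = State.RELEASING;
        return false;
    }
}
```

Checking the current state at the start of every transition is what keeps an out-of-order call, such as activating a slot that is already being released, from corrupting the bookkeeping.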

3.2 TaskSlotTable

TaskExecutor mainly manages all the slots it owns through TaskSlotTable:

    class TaskSlotTable implements TimeoutListener<AllocationID> {
        /** Timer service used to time out allocated slots. */
        private final TimerService<AllocationID> timerService;

        /** The list of all task slots. */
        private final List<TaskSlot> taskSlots;

        /** Mapping from allocation id to task slot. */
        private final Map<AllocationID, TaskSlot> allocationIDTaskSlotMap;

        /** Mapping from execution attempt id to task and task slot. */
        private final Map<ExecutionAttemptID, TaskSlotMapping> taskSlotMappings;

        /** Mapping from job id to allocated slots for a job. */
        private final Map<JobID, Set<AllocationID>> slotsPerJob;

        /** Interface for slot actions, such as freeing them or timing them out. */
        private SlotActions slotActions;
    }

Through the allocateSlot(int index, JobID jobId, AllocationID allocationId, Time slotTimeout) method, you can assign the slot at the given index to the request identified by the AllocationID. Internally, this method calls TaskSlot.allocate(JobID newJobId, AllocationID newAllocationId). Note that the last parameter of allocateSlot is a timeout. One of the member variables of TaskSlotTable is TimerService<AllocationID> timerService, through which a timer can be registered. If the timer is not cancelled before the timeout expires, the timeout method of SlotActions is called. In other words, if the timer associated with an allocated slot is not cancelled in time, the slot is released and marked as Free again.

    class TaskSlotTable {
        public boolean allocateSlot(int index, JobID jobId, AllocationID allocationId, Time slotTimeout) {
            checkInit();

            TaskSlot taskSlot = taskSlots.get(index);

            boolean result = taskSlot.allocate(jobId, allocationId);

            if (result) {
                // update the allocation id to task slot map
                allocationIDTaskSlotMap.put(allocationId, taskSlot);

                // register a timeout for this slot since it's in state allocated
                timerService.registerTimeout(allocationId, slotTimeout.getSize(), slotTimeout.getUnit());

                // add this slot to the set of job slots
                Set<AllocationID> slots = slotsPerJob.get(jobId);

                if (slots == null) {
                    slots = new HashSet<>(4);
                    slotsPerJob.put(jobId, slots);
                }

                slots.add(allocationId);
            }

            return result;
        }
    }

If the slot is marked as Active, the timer associated with slot allocation will be cancelled:

    class TaskSlotTable {
        public boolean markSlotActive(AllocationID allocationId) throws SlotNotFoundException {
            checkInit();

            TaskSlot taskSlot = getTaskSlot(allocationId);

            if (taskSlot != null) {
                if (taskSlot.markActive()) {
                    // unregister a potential timeout
                    LOG.info("Activate slot {}.", allocationId);

                    timerService.unregisterTimeout(allocationId);

                    return true;
                } else {
                    return false;
                }
            } else {
                throw new SlotNotFoundException(allocationId);
            }
        }
    }
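The interplay of registering a timeout on allocation and cancelling it on activation can be sketched with the JDK's `ScheduledExecutorService`. This is not Flink's TimerService: the class `SlotTimeoutSketch`, its methods and the String allocation IDs are hypothetical stand-ins.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Hypothetical sketch of allocation timeouts; not Flink's TimerService.
final class SlotTimeoutSketch {
    private final ScheduledExecutorService scheduler;
    private final Consumer<String> onTimeout;
    private final Map<String, ScheduledFuture<?>> timeouts = new ConcurrentHashMap<>();

    SlotTimeoutSketch(ScheduledExecutorService scheduler, Consumer<String> onTimeout) {
        this.scheduler = scheduler;
        this.onTimeout = onTimeout;
    }

    // Called when a slot becomes Allocated: start the countdown.
    void registerTimeout(String allocationId, long delay, TimeUnit unit) {
        unregisterTimeout(allocationId);
        timeouts.put(allocationId,
                scheduler.schedule(() -> onTimeout.accept(allocationId), delay, unit));
    }

    // Called when the slot becomes Active: the countdown is no longer needed.
    void unregisterTimeout(String allocationId) {
        ScheduledFuture<?> pending = timeouts.remove(allocationId);
        if (pending != null) {
            pending.cancel(false);
        }
    }

    // Demo: "alloc-a" is activated in time, "alloc-b" is not.
    static List<String> demo() {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        List<String> timedOut = new CopyOnWriteArrayList<>();
        SlotTimeoutSketch timers = new SlotTimeoutSketch(scheduler, timedOut::add);
        timers.registerTimeout("alloc-a", 50, TimeUnit.MILLISECONDS);
        timers.registerTimeout("alloc-b", 50, TimeUnit.MILLISECONDS);
        timers.unregisterTimeout("alloc-a");
        scheduler.shutdown(); // already scheduled delayed tasks still run by default
        try {
            scheduler.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return timedOut;
    }
}
```

In the demo only `alloc-b` reaches the timeout callback, which corresponds to a slot that was allocated but never accepted by a JobMaster and therefore has to be freed again.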

A SlotReport object can be obtained through createSlotReport. The SlotReport contains the status of all slots in the current task executor and their allocation.

4. TaskExecutor

The TaskExecutor needs to report the status of all its slots to the ResourceManager so that the ResourceManager knows how every slot is allocated. This happens in two cases:

- When the TaskExecutor first establishes a connection with the ResourceManager, it sends a SlotReport

- The TaskExecutor and the ResourceManager exchange heartbeats regularly, and the heartbeat payload contains a SlotReport

Let's look at the relevant code logic:

    class TaskExecutor {
        private void establishResourceManagerConnection(
                ResourceManagerGateway resourceManagerGateway,
                ResourceID resourceManagerResourceId,
                InstanceID taskExecutorRegistrationId,
                ClusterInformation clusterInformation) {
            //Establish the connection for the first time and report the slot information to RM
            final CompletableFuture<Acknowledge> slotReportResponseFuture = resourceManagerGateway.sendSlotReport(
                getResourceID(),
                taskExecutorRegistrationId,
                taskSlotTable.createSlotReport(getResourceID()),
                taskManagerConfiguration.getTimeout());

            //.........
        }

        private class ResourceManagerHeartbeatListener implements HeartbeatListener<Void, SlotReport> {
            //heartbeat message
            @Override
            public CompletableFuture<SlotReport> retrievePayload(ResourceID resourceID) {
                return callAsync(
                    () -> taskSlotTable.createSlotReport(getResourceID()),
                    taskManagerConfiguration.getTimeout());
            }
        }
    }

The ResourceManager asks a TaskExecutor to allocate a slot through the TaskExecutor.requestSlot method. Since the ResourceManager knows the current status of all slots, the allocation request targets a specific SlotID:

    class TaskExecutor {
        @Override
        public CompletableFuture<Acknowledge> requestSlot(
                final SlotID slotId,
                final JobID jobId,
                final AllocationID allocationId,
                final String targetAddress,
                final ResourceManagerId resourceManagerId,
                final Time timeout) {
            try {
                //Check whether the RM sending the request is the one this TaskExecutor is registered with
                if (!isConnectedToResourceManager(resourceManagerId)) {
                    final String message = String.format("TaskManager is not connected to the resource manager %s.", resourceManagerId);
                    log.debug(message);
                    throw new TaskManagerException(message);
                }

                if (taskSlotTable.isSlotFree(slotId.getSlotNumber())) {
                    //If the slot is in Free status, the slot is allocated
                    if (taskSlotTable.allocateSlot(slotId.getSlotNumber(), jobId, allocationId, taskManagerConfiguration.getTimeout())) {
                        log.info("Allocated slot for {}.", allocationId);
                    } else {
                        log.info("Could not allocate slot for {}.", allocationId);
                        throw new SlotAllocationException("Could not allocate slot.");
                    }
                } else if (!taskSlotTable.isAllocated(slotId.getSlotNumber(), jobId, allocationId)) {
                    //If the slot is already allocated to a different job or allocation, an exception is thrown
                    final String message = "The slot " + slotId + " has already been allocated for a different job.";

                    log.info(message);

                    final AllocationID allocationID = taskSlotTable.getCurrentAllocation(slotId.getSlotNumber());
                    throw new SlotOccupiedException(message, allocationID, taskSlotTable.getOwningJob(allocationID));
                }

                //Provide the allocated slot to the JobManager sending the request
                if (jobManagerTable.contains(jobId)) {
                    //If a connection has been established with the corresponding JobManager, a slot is provided to the JobManager
                    offerSlotsToJobManager(jobId);
                } else {
                    //Otherwise, first establish a connection with the JobManager, and then call the offerSlotsToJobManager(jobId) method
                    try {
                        jobLeaderService.addJob(jobId, targetAddress);
                    } catch (Exception e) {
                        // free the allocated slot
                        try {
                            taskSlotTable.freeSlot(allocationId);
                        } catch (SlotNotFoundException slotNotFoundException) {
                            // slot no longer existent, this should actually never happen, because we've
                            // just allocated the slot. So let's fail hard in this case!
                            onFatalError(slotNotFoundException);
                        }

                        // release local state under the allocation id.
                        localStateStoresManager.releaseLocalStateForAllocationId(allocationId);

                        // sanity check
                        if (!taskSlotTable.isSlotFree(slotId.getSlotNumber())) {
                            onFatalError(new Exception("Could not free slot " + slotId));
                        }

                        throw new SlotAllocationException("Could not add job to job leader service.", e);
                    }
                }
            } catch (TaskManagerException taskManagerException) {
                return FutureUtils.completedExceptionally(taskManagerException);
            }

            return CompletableFuture.completedFuture(Acknowledge.get());
        }
    }
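Stripped of RPC and error handling, the core decision in requestSlot has three outcomes. The sketch below illustrates only that decision; `RequestSlotSketch`, its `Slot` holder and the `Outcome` enum are hypothetical and do not exist in Flink.

```java
// Hypothetical sketch of the allocation decision; IDs are plain Strings.
final class RequestSlotSketch {
    enum Outcome { ALLOCATED, ALREADY_ALLOCATED, OCCUPIED }

    // Minimal stand-in for the bookkeeping of one slot.
    static final class Slot {
        String jobId;
        String allocationId;

        boolean isFree() {
            return allocationId == null;
        }
    }

    static Outcome requestSlot(Slot slot, String jobId, String allocationId) {
        if (slot.isFree()) {
            // Free slot: bind it to the requesting job and allocation.
            slot.jobId = jobId;
            slot.allocationId = allocationId;
            return Outcome.ALLOCATED;
        }
        if (jobId.equals(slot.jobId) && allocationId.equals(slot.allocationId)) {
            // The same request arrived again: treat it as success so that
            // a retried RPC does not fail.
            return Outcome.ALREADY_ALLOCATED;
        }
        // Bound to another job or allocation: the caller reports the
        // current owner back to the requester.
        return Outcome.OCCUPIED;
    }
}
```

Treating a repeated request as success makes the call idempotent, while the occupied case corresponds to the SlotOccupiedException above, which lets the ResourceManager correct its view of the slot.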

After a slot has been allocated, the TaskExecutor needs to offer it to the JobManager, which is done through the offerSlotsToJobManager(jobId) method:

    class TaskExecutor {
        private void offerSlotsToJobManager(final JobID jobId) {
            final JobManagerConnection jobManagerConnection = jobManagerTable.get(jobId);

            if (jobManagerConnection == null) {
                log.debug("There is no job manager connection to the leader of job {}.", jobId);
            } else {
                if (taskSlotTable.hasAllocatedSlots(jobId)) {
                    log.info("Offer reserved slots to the leader of job {}.", jobId);

                    final JobMasterGateway jobMasterGateway = jobManagerConnection.getJobManagerGateway();

                    //Get the slots allocated to the current Job. Only slots in Allocated status are returned here
                    final Iterator<TaskSlot> reservedSlotsIterator = taskSlotTable.getAllocatedSlots(jobId);
                    final JobMasterId jobMasterId = jobManagerConnection.getJobMasterId();

                    final Collection<SlotOffer> reservedSlots = new HashSet<>(2);

                    while (reservedSlotsIterator.hasNext()) {
                        SlotOffer offer = reservedSlotsIterator.next().generateSlotOffer();
                        reservedSlots.add(offer);
                    }

                    //Offer the slots to the JobMaster through an RPC call
                    CompletableFuture<Collection<SlotOffer>> acceptedSlotsFuture = jobMasterGateway.offerSlots(
                        getResourceID(),
                        reservedSlots,
                        taskManagerConfiguration.getTimeout());

                    acceptedSlotsFuture.whenCompleteAsync(
                        (Iterable<SlotOffer> acceptedSlots, Throwable throwable) -> {
                            if (throwable != null) {
                                //Timeout, retry
                                if (throwable instanceof TimeoutException) {
                                    log.info("Slot offering to JobManager did not finish in time. Retrying the slot offering.");
                                    // We ran into a timeout. Try again.
                                    offerSlotsToJobManager(jobId);
                                } else {
                                    log.warn("Slot offering to JobManager failed. Freeing the slots " +
                                        "and returning them to the ResourceManager.", throwable);

                                    // If an exception occurs, all slots will be released
                                    for (SlotOffer reservedSlot: reservedSlots) {
                                        freeSlotInternal(reservedSlot.getAllocationId(), throwable);
                                    }
                                }
                            } else {
                                //Call succeeded
                                // check if the response is still valid
                                if (isJobManagerConnectionValid(jobId, jobMasterId)) {
                                    // mark accepted slots active
                                    //The slots accepted by the JobMaster are marked as Active
                                    for (SlotOffer acceptedSlot : acceptedSlots) {
                                        try {
                                            if (!taskSlotTable.markSlotActive(acceptedSlot.getAllocationId())) {
                                                // the slot is either free or releasing at the moment
                                                final String message = "Could not mark slot " + jobId + " active.";
                                                log.debug(message);
                                                jobMasterGateway.failSlot(
                                                    getResourceID(),
                                                    acceptedSlot.getAllocationId(),
                                                    new FlinkException(message));
                                            }
                                        } catch (SlotNotFoundException e) {
                                            final String message = "Could not mark slot " + jobId + " active.";
                                            jobMasterGateway.failSlot(
                                                getResourceID(),
                                                acceptedSlot.getAllocationId(),
                                                new FlinkException(message));
                                        }

                                        reservedSlots.remove(acceptedSlot);
                                    }

                                    final Exception e = new Exception("The slot was rejected by the JobManager.");

                                    //Release the remaining slots that were not accepted
                                    for (SlotOffer rejectedSlot : reservedSlots) {
                                        freeSlotInternal(rejectedSlot.getAllocationId(), e);
                                    }
                                } else {
                                    // discard the response since there is a new leader for the job
                                    log.debug("Discard offer slot response since there is a new leader " +
                                        "for the job {}.", jobId);
                                }
                            }
                        },
                        getMainThreadExecutor());
                } else {
                    log.debug("There are no unassigned slots for the job {}.", jobId);
                }
            }
        }
    }
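The way the response to a slot offer is handled can be condensed into three branches: retry on timeout, free everything on any other failure, and otherwise activate the accepted slots while freeing the rejected ones. The sketch below models only that branching. `OfferResponseSketch` is a hypothetical class, offers are represented by their allocation IDs as Strings, and the check for a changed JobMaster leader is left out.

```java
import java.util.Collection;
import java.util.Collections;
import java.util.LinkedHashSet;
import java.util.Set;
import java.util.concurrent.TimeoutException;

// Hypothetical sketch: decides what to do with the response to a slot offer.
final class OfferResponseSketch {
    final boolean retry;        // offer again (the RPC timed out)
    final Set<String> activate; // allocations to mark Active
    final Set<String> free;     // allocations to free again

    private OfferResponseSketch(boolean retry, Set<String> activate, Set<String> free) {
        this.retry = retry;
        this.activate = activate;
        this.free = free;
    }

    static OfferResponseSketch handle(
            Set<String> offered, Collection<String> accepted, Throwable failure) {
        if (failure instanceof TimeoutException) {
            // No answer in time: keep the slots and offer them again.
            return new OfferResponseSketch(
                    true, Collections.<String>emptySet(), Collections.<String>emptySet());
        }
        if (failure != null) {
            // Any other failure: give all offered slots back.
            return new OfferResponseSketch(
                    false, Collections.<String>emptySet(), new LinkedHashSet<>(offered));
        }
        // Success: accepted slots become Active, the rest are freed.
        Set<String> activate = new LinkedHashSet<>(accepted);
        Set<String> rejected = new LinkedHashSet<>(offered);
        rejected.removeAll(activate);
        return new OfferResponseSketch(false, activate, rejected);
    }
}
```

Freeing the rejected slots promptly matters because a slot that stays Allocated without an owner is unavailable to every other Job until its allocation timeout fires.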

Through the freeSlot(AllocationID, Throwable, Time) method, the TaskExecutor can be asked to release the slot associated with an AllocationID:

    class TaskExecutor {
        @Override
        public CompletableFuture<Acknowledge> freeSlot(AllocationID allocationId, Throwable cause, Time timeout) {
            freeSlotInternal(allocationId, cause);

            return CompletableFuture.completedFuture(Acknowledge.get());
        }

        private void freeSlotInternal(AllocationID allocationId, Throwable cause) {
            checkNotNull(allocationId);

            try {
                final JobID jobId = taskSlotTable.getOwningJob(allocationId);

                //Attempt to release the slot bound to allocationId
                final int slotIndex = taskSlotTable.freeSlot(allocationId, cause);

                if (slotIndex != -1) {
                    //slot released successfully
                    if (isConnectedToResourceManager()) {
                        //Inform ResourceManager that the slot is currently available
                        // the slot was freed. Tell the RM about it
                        ResourceManagerGateway resourceManagerGateway = establishedResourceManagerConnection.getResourceManagerGateway();

                        resourceManagerGateway.notifySlotAvailable(
                            establishedResourceManagerConnection.getTaskExecutorRegistrationId(),
                            new SlotID(getResourceID(), slotIndex),
                            allocationId);
                    }

                    if (jobId != null) {
                        // If the Job bound to the allocationId has no allocated slots left, disconnect from the JobMaster
                        // check whether we still have allocated slots for the same job
                        if (taskSlotTable.getAllocationIdsPerJob(jobId).isEmpty()) {
                            // we can remove the job from the job leader service
                            try {
                                jobLeaderService.removeJob(jobId);
                            } catch (Exception e) {
                                log.info("Could not remove job {} from JobLeaderService.", jobId, e);
                            }

                            closeJobManagerConnection(
                                jobId,
                                new FlinkException("TaskExecutor " + getAddress() +
                                    " has no more allocated slots for job " + jobId + '.'));
                        }
                    }
                }
            } catch (SlotNotFoundException e) {
                log.debug("Could not free slot for allocation id {}.", allocationId, e);
            }

            localStateStoresManager.releaseLocalStateForAllocationId(allocationId);
        }
    }

5. Slot management in ResourceManager

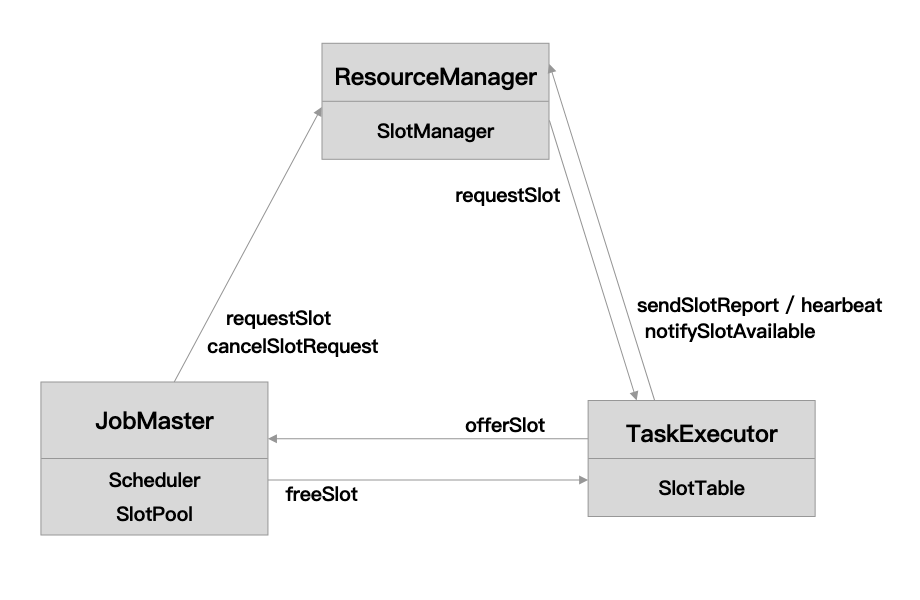

The previous section analyzed slot management inside a TaskExecutor, which is limited to a single TaskExecutor. The ResourceManager, in contrast, has to manage the slots of all TaskExecutors. All JobManagers request resources through the ResourceManager, which "forwards" each request to a TaskExecutor according to the current resource usage of the cluster.

5.1 SlotManager

The ResourceManager manages slots with the help of the SlotManager. The SlotManager maintains the status and allocation of all slots of all registered TaskExecutors, as well as all pending slot requests. Whenever a new slot is registered or an allocated slot is released, the SlotManager tries to fulfill a pending slot request. If the available slots are not sufficient, the SlotManager informs the ResourceManager through ResourceActions#allocateResource(ResourceProfile), and the ResourceManager may try to start a new TaskExecutor (for example, in Yarn mode).

In addition, a TaskExecutor that has been idle for a long time, or a pending slot request that has not been fulfilled for a long time, triggers a timeout mechanism.

5.2 Slot registration

First, let's take a look at some important member variables in SlotManager, mainly the status of slot and the status of slot request:

    class SlotManager {
        /** Map for all registered slots. */
        private final HashMap<SlotID, TaskManagerSlot> slots;

        /** Index of all currently free slots. */
        private final LinkedHashMap<SlotID, TaskManagerSlot> freeSlots;

        /** All currently registered task managers. */
        private final HashMap<InstanceID, TaskManagerRegistration> taskManagerRegistrations;

        /** Map of fulfilled and active allocations for request deduplication purposes. */
        private final HashMap<AllocationID, SlotID> fulfilledSlotRequests;

        /** Map of pending/unfulfilled slot allocation requests. */
        private final HashMap<AllocationID, PendingSlotRequest> pendingSlotRequests;

        // When resources are insufficient, new resources are requested through
        // ResourceActions#allocateResource(ResourceProfile) (a new TaskManager may be started, or nothing may happen).
        // These newly requested resources are encapsulated as PendingTaskManagerSlot
        private final HashMap<TaskManagerSlotId, PendingTaskManagerSlot> pendingSlots;

        /** ResourceManager's id. */
        private ResourceManagerId resourceManagerId;

        /** Callbacks for resource (de-)allocations. */
        private ResourceActions resourceActions;
    }

When a new TaskManager is registered, registerTaskManager is called:

    class SlotManager {
        public void registerTaskManager(final TaskExecutorConnection taskExecutorConnection, SlotReport initialSlotReport) {
            checkInit();

            LOG.debug("Registering TaskManager {} under {} at the SlotManager.", taskExecutorConnection.getResourceID(), taskExecutorConnection.getInstanceID());

            // we identify task managers by their instance id
            if (taskManagerRegistrations.containsKey(taskExecutorConnection.getInstanceID())) {
                reportSlotStatus(taskExecutorConnection.getInstanceID(), initialSlotReport);
            } else {
                // first register the TaskManager
                ArrayList<SlotID> reportedSlots = new ArrayList<>();

                for (SlotStatus slotStatus : initialSlotReport) {
                    reportedSlots.add(slotStatus.getSlotID());
                }

                TaskManagerRegistration taskManagerRegistration = new TaskManagerRegistration(
                    taskExecutorConnection,
                    reportedSlots);

                taskManagerRegistrations.put(taskExecutorConnection.getInstanceID(), taskManagerRegistration);

                // Register all the slots in turn
                // next register the new slots
                for (SlotStatus slotStatus : initialSlotReport) {
                    registerSlot(
                        slotStatus.getSlotID(),
                        slotStatus.getAllocationID(),
                        slotStatus.getJobID(),
                        slotStatus.getResourceProfile(),
                        taskExecutorConnection);
                }
            }
        }

        //Register a slot
        private void registerSlot(
                SlotID slotId,
                AllocationID allocationId,
                JobID jobId,
                ResourceProfile resourceProfile,
                TaskExecutorConnection taskManagerConnection) {

            if (slots.containsKey(slotId)) {
                // remove the old slot first
                removeSlot(slotId);
            }

            //Create a TaskManagerSlot object and add it to slots
            final TaskManagerSlot slot = createAndRegisterTaskManagerSlot(slotId, resourceProfile, taskManagerConnection);

            final PendingTaskManagerSlot pendingTaskManagerSlot;

            if (allocationId == null) {
                //If this slot has not been allocated, find a PendingTaskManagerSlot that matches the resources of the current slot
                pendingTaskManagerSlot = findExactlyMatchingPendingTaskManagerSlot(resourceProfile);
            } else {
                //This slot has already been allocated
                pendingTaskManagerSlot = null;
            }

            if (pendingTaskManagerSlot == null) {
                //There are two possibilities: 1) the slot has been allocated; 2) there is no matching PendingTaskManagerSlot
                updateSlot(slotId, allocationId, jobId);
            } else {
                // The newly registered slot fulfills the PendingTaskManagerSlot
                pendingSlots.remove(pendingTaskManagerSlot.getTaskManagerSlotId());
                final PendingSlotRequest assignedPendingSlotRequest = pendingTaskManagerSlot.getAssignedPendingSlotRequest();

                // PendingTaskManagerSlot may have an associated PendingSlotRequest
                if (assignedPendingSlotRequest == null) {
                    //If there is no associated PendingSlotRequest, the slot will be in Free status
                    handleFreeSlot(slot);
                } else {
                    //If there is an associated PendingSlotRequest, the request can be satisfied and a slot can be allocated
                    assignedPendingSlotRequest.unassignPendingTaskManagerSlot();
                    allocateSlot(slot, assignedPendingSlotRequest);
                }
            }
        }

        private void handleFreeSlot(TaskManagerSlot freeSlot) {
            Preconditions.checkState(freeSlot.getState() == TaskManagerSlot.State.FREE);

            //First find out whether there is a PendingSlotRequest that can be satisfied
            PendingSlotRequest pendingSlotRequest = findMatchingRequest(freeSlot.getResourceProfile());

            if (null != pendingSlotRequest) {
                //If there is a matching PendingSlotRequest, the slot is allocated to it
                allocateSlot(freeSlot, pendingSlotRequest);
            } else {
                freeSlots.put(freeSlot.getSlotId(), freeSlot);
            }
        }
    }

In addition, the TaskExecutor will regularly report the status of the slot to the resource manager through the heartbeat. The status of the slot is updated in the reportSlotStatus method.
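The idea behind handling a newly freed slot, which is to serve a waiting request first and park the slot as free only if nobody needs it, can be sketched as follows. This is an illustration under simplifying assumptions: `FreeSlotMatcherSketch` is hypothetical, a resource profile is reduced to a memory size in MB, and "matching" means the slot offers at least the requested memory.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch of matching free slots against pending requests.
final class FreeSlotMatcherSketch {
    // Pending requests: allocation id -> required memory in MB, oldest first.
    private final Map<String, Integer> pendingRequests = new LinkedHashMap<>();
    // Free slots: slot id -> available memory in MB.
    private final Map<String, Integer> freeSlots = new LinkedHashMap<>();

    void addPendingRequest(String allocationId, int requiredMemoryMb) {
        pendingRequests.put(allocationId, requiredMemoryMb);
    }

    int freeSlotCount() {
        return freeSlots.size();
    }

    // Returns the allocation id served by the slot, or null if the slot was
    // parked in the free list because no pending request fits.
    String handleFreeSlot(String slotId, int slotMemoryMb) {
        String match = null;
        for (Map.Entry<String, Integer> request : pendingRequests.entrySet()) {
            if (request.getValue() <= slotMemoryMb) {
                match = request.getKey();
                break;
            }
        }
        if (match != null) {
            // The request is no longer pending once a slot is assigned.
            pendingRequests.remove(match);
            return match;
        }
        freeSlots.put(slotId, slotMemoryMb);
        return null;
    }
}
```

Checking pending requests before touching the free list means a request that arrived while the cluster was full is served as soon as capacity appears, without the requester having to ask again.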

5.3 Requesting a slot

The ResourceManager requests a slot through the registerSlotRequest(SlotRequest slotRequest) method of the SlotManager. The SlotRequest encapsulates the JobID, the AllocationID and the resource profile of the request. The SlotManager wraps the SlotRequest in a PendingSlotRequest, which represents a slot request that has not been fulfilled yet.

    class SlotManager {
        public boolean registerSlotRequest(SlotRequest slotRequest) throws SlotManagerException {
            checkInit();

            if (checkDuplicateRequest(slotRequest.getAllocationId())) {
                LOG.debug("Ignoring a duplicate slot request with allocation id {}.", slotRequest.getAllocationId());

                return false;
            } else {
                //Encapsulate the request as PendingSlotRequest
                PendingSlotRequest pendingSlotRequest = new PendingSlotRequest(slotRequest);

                pendingSlotRequests.put(slotRequest.getAllocationId(), pendingSlotRequest);

                try {
                    //Execute the logic of requesting slot allocation
                    internalRequestSlot(pendingSlotRequest);
                } catch (ResourceManagerException e) {
                    // requesting the slot failed --> remove pending slot request
                    pendingSlotRequests.remove(slotRequest.getAllocationId());

                    throw new SlotManagerException("Could not fulfill slot request " + slotRequest.getAllocationId() + '.', e);
                }

                return true;
            }
        }

        private void internalRequestSlot(PendingSlotRequest pendingSlotRequest) throws ResourceManagerException {
            final ResourceProfile resourceProfile = pendingSlotRequest.getResourceProfile();

            //First, select a slot that meets the requirements from the registered slots in FREE status
            TaskManagerSlot taskManagerSlot = findMatchingSlot(resourceProfile);

            if (taskManagerSlot != null) {
                //A matching slot was found, so allocate it
                allocateSlot(taskManagerSlot, pendingSlotRequest);
            } else {
                //Otherwise, look for a match among the PendingTaskManagerSlots
                Optional<PendingTaskManagerSlot> pendingTaskManagerSlotOptional = findFreeMatchingPendingTaskManagerSlot(resourceProfile);

                //If there is no match among the PendingTaskManagerSlots either
                if (!pendingTaskManagerSlotOptional.isPresent()) {
                    //Request the resource manager to allocate resources through the ResourceActions#allocateResource(ResourceProfile) callback
                    pendingTaskManagerSlotOptional = allocateResource(resourceProfile);
                }

                //Assign PendingTaskManagerSlot to PendingSlotRequest
                pendingTaskManagerSlotOptional.ifPresent(pendingTaskManagerSlot -> assignPendingTaskManagerSlot(pendingSlotRequest, pendingTaskManagerSlot));
            }
        }
    }
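The order in which internalRequestSlot looks for capacity (a registered free slot first, then a slot that has already been requested but not yet registered, and only then a brand-new resource) can be sketched with plain counters. `SlotRequestFallbackSketch` and its fields are hypothetical, and resource profiles are ignored, so every slot is assumed to fit every request.

```java
// Hypothetical sketch of the fallback order; counters replace real slot bookkeeping.
final class SlotRequestFallbackSketch {
    enum Source { FREE_SLOT, PENDING_SLOT, NEW_RESOURCE, NONE }

    private int freeSlots;
    private int unassignedPendingSlots;
    // Slots that one newly started worker will provide; 0 models a cluster
    // that cannot start new workers.
    private final int slotsPerNewWorker;

    SlotRequestFallbackSketch(int freeSlots, int slotsPerNewWorker) {
        this.freeSlots = freeSlots;
        this.slotsPerNewWorker = slotsPerNewWorker;
    }

    Source request() {
        if (freeSlots > 0) {
            // 1) A registered slot is free: use it right away.
            freeSlots--;
            return Source.FREE_SLOT;
        }
        if (unassignedPendingSlots > 0) {
            // 2) A worker is already on its way: reserve one of its slots.
            unassignedPendingSlots--;
            return Source.PENDING_SLOT;
        }
        if (slotsPerNewWorker > 0) {
            // 3) Ask for a new worker and reserve one of its future slots;
            //    the remaining ones stay available for later requests.
            unassignedPendingSlots += slotsPerNewWorker - 1;
            return Source.NEW_RESOURCE;
        }
        // 4) Nothing can be done now; the request waits until it times out
        //    or a slot is registered.
        return Source.NONE;
    }
}
```

Checking the not-yet-registered slots before asking for more resources avoids starting one new worker per request when a single worker with several slots would be enough.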

What does allocateSlot do? In the previous section, we mentioned that a TaskExecutor can be asked to allocate a slot through an RPC call. This RPC call is made in SlotManager#allocateSlot:

    class SlotManager {
        private void allocateSlot(TaskManagerSlot taskManagerSlot, PendingSlotRequest pendingSlotRequest) {
            Preconditions.checkState(taskManagerSlot.getState() == TaskManagerSlot.State.FREE);

            TaskExecutorConnection taskExecutorConnection = taskManagerSlot.getTaskManagerConnection();
            TaskExecutorGateway gateway = taskExecutorConnection.getTaskExecutorGateway();

            final CompletableFuture<Acknowledge> completableFuture = new CompletableFuture<>();
            final AllocationID allocationId = pendingSlotRequest.getAllocationId();
            final SlotID slotId = taskManagerSlot.getSlotId();
            final InstanceID instanceID = taskManagerSlot.getInstanceId();

            //The taskManagerSlot status changes to PENDING
            taskManagerSlot.assignPendingSlotRequest(pendingSlotRequest);
            pendingSlotRequest.setRequestFuture(completableFuture);

            //If a PendingTaskManagerSlot is assigned to the current pendingSlotRequest, it must be disassociated first
            returnPendingTaskManagerSlotIfAssigned(pendingSlotRequest);

            TaskManagerRegistration taskManagerRegistration = taskManagerRegistrations.get(instanceID);

            if (taskManagerRegistration == null) {
                throw new IllegalStateException("Could not find a registered task manager for instance id " +
                    instanceID + '.');
            }

            taskManagerRegistration.markUsed();

            // RPC call to the task manager
            // Request slot from TaskExecutor via RPC call
            CompletableFuture<Acknowledge> requestFuture = gateway.requestSlot(
                slotId,
                pendingSlotRequest.getJobId(),
                allocationId,
                pendingSlotRequest.getTargetAddress(),
                resourceManagerId,
                taskManagerRequestTimeout);

            //Callback for the completion of the RPC call
            requestFuture.whenComplete(
                (Acknowledge acknowledge, Throwable throwable) -> {
                    if (acknowledge != null) {
                        completableFuture.complete(acknowledge);
                    } else {
                        completableFuture.completeExceptionally(throwable);
                    }
                });

            //Callback for the completion of the PendingSlotRequest
            //The PendingSlotRequest may complete because the RPC call above completed or because the PendingSlotRequest was cancelled
            completableFuture.whenCompleteAsync(
                (Acknowledge acknowledge, Throwable throwable) -> {
                    try {
                        if (acknowledge != null) {
                            //If the request is successful, remove the pendingSlotRequest and update the slot status PENDING -> ALLOCATED
                            updateSlot(slotId, allocationId, pendingSlotRequest.getJobId());
                        } else {
                            if (throwable instanceof SlotOccupiedException) {
                                //This slot has been occupied. Update the status
                                SlotOccupiedException exception = (SlotOccupiedException) throwable;
                                updateSlot(slotId, exception.getAllocationId(), exception.getJobId());
                            } else {
                                //Request failed, remove pendingSlotRequest from TaskManagerSlot
                                removeSlotRequestFromSlot(slotId, allocationId);
                            }

                            if (!(throwable instanceof CancellationException)) {
                                //The slot request failed and will be retried
                                handleFailedSlotRequest(slotId, allocationId, throwable);
                            } else {
                                //Active cancellation
                                LOG.debug("Slot allocation request {} has been cancelled.", allocationId, throwable);
                            }
                        }
                    } catch (Exception e) {
                        LOG.error("Error while completing the slot allocation.", e);
                    }
                },
                mainThreadExecutor);
        }
    }
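The reason for using two futures, one for the RPC and one for the request itself, is that the request can then be cancelled independently of the RPC. The sketch below reproduces only this wiring with JDK classes. `RequestFutureSketch` and the String results are hypothetical, and the callbacks run synchronously instead of on a main thread executor.

```java
import java.util.concurrent.CancellationException;
import java.util.concurrent.CompletableFuture;

// Hypothetical sketch of the two-future wiring; not Flink code.
final class RequestFutureSketch {

    // Forwards the outcome of the RPC into the request future and reports
    // how the request ended: "allocated", "cancelled" or "failed".
    static CompletableFuture<String> track(
            CompletableFuture<String> rpcFuture,
            CompletableFuture<String> requestFuture) {

        // Step 1: the RPC result completes the request future. If the
        // request future was cancelled earlier, these calls have no effect.
        rpcFuture.whenComplete((ack, failure) -> {
            if (ack != null) {
                requestFuture.complete(ack);
            } else {
                requestFuture.completeExceptionally(failure);
            }
        });

        // Step 2: react to the request future, which is completed either by
        // step 1 or by an explicit cancel() from the outside.
        return requestFuture.handle((ack, failure) -> {
            if (ack != null) {
                return "allocated";
            }
            if (failure instanceof CancellationException) {
                return "cancelled";
            }
            return "failed";
        });
    }
}
```

A caller that invokes `requestFuture.cancel(false)` before the RPC answers ends up in the "cancelled" branch, and a later acknowledgement from the RPC is ignored. This mirrors the distinction the code above draws between a CancellationException and any other failure, where only the latter leads to a retry.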

5.4 Cancelling a slot request

You can cancel a slot request through unregisterSlotRequest:

    class SlotManager {
        public boolean unregisterSlotRequest(AllocationID allocationId) {
            checkInit();

            //Remove from pendingSlotRequests
            PendingSlotRequest pendingSlotRequest = pendingSlotRequests.remove(allocationId);

            if (null != pendingSlotRequest) {
                LOG.debug("Cancel slot request {}.", allocationId);

                //Cancel request
                cancelPendingSlotRequest(pendingSlotRequest);

                return true;
            } else {
                LOG.debug("No pending slot request with allocation id {} found. Ignoring unregistration request.", allocationId);

                return false;
            }
        }

        private void cancelPendingSlotRequest(PendingSlotRequest pendingSlotRequest) {
            CompletableFuture<Acknowledge> request = pendingSlotRequest.getRequestFuture();

            returnPendingTaskManagerSlotIfAssigned(pendingSlotRequest);

            if (null != request) {
                request.cancel(false);
            }
        }
    }

5.5 Timeout settings

When the SlotManager starts, it launches two periodic timeout checks: one detects slot requests that have timed out, and the other detects TaskManagers that have been idle for too long:

    class SlotManager {
        public void start(ResourceManagerId newResourceManagerId, Executor newMainThreadExecutor, ResourceActions newResourceActions) {
            LOG.info("Starting the SlotManager.");

            this.resourceManagerId = Preconditions.checkNotNull(newResourceManagerId);
            mainThreadExecutor = Preconditions.checkNotNull(newMainThreadExecutor);
            resourceActions = Preconditions.checkNotNull(newResourceActions);

            started = true;

            //Check whether the TaskExecutor is in idle state for a long time
            taskManagerTimeoutCheck = scheduledExecutor.scheduleWithFixedDelay(
                () -> mainThreadExecutor.execute(
                    () -> checkTaskManagerTimeouts()),
                0L,
                taskManagerTimeout.toMilliseconds(),
                TimeUnit.MILLISECONDS);

            //Check whether the slot request timed out
            slotRequestTimeoutCheck = scheduledExecutor.scheduleWithFixedDelay(
                () -> mainThreadExecutor.execute(
                    () -> checkSlotRequestTimeouts()),
                0L,
                slotRequestTimeout.toMilliseconds(),
                TimeUnit.MILLISECONDS);
        }
    }

Once a TaskExecutor has been idle for too long, its resources are released through the ResourceActions#releaseResource() callback:

    class SlotManager {
        private void releaseTaskExecutor(InstanceID timedOutTaskManagerId) {
            final FlinkException cause = new FlinkException("TaskExecutor exceeded the idle timeout.");

            LOG.debug("Release TaskExecutor {} because it exceeded the idle timeout.", timedOutTaskManagerId);

            resourceActions.releaseResource(timedOutTaskManagerId, cause);
        }
    }

If a slot request times out, the PendingSlotRequest is cancelled and the ResourceManager is informed through ResourceActions#notifyAllocationFailure():

class SlotManager {

private void checkSlotRequestTimeouts() {

if (!pendingSlotRequests.isEmpty()) {

long currentTime = System.currentTimeMillis();

Iterator<Map.Entry<AllocationID, PendingSlotRequest>> slotRequestIterator = pendingSlotRequests.entrySet().iterator();

while (slotRequestIterator.hasNext()) {

PendingSlotRequest slotRequest = slotRequestIterator.next().getValue();

if (currentTime - slotRequest.getCreationTimestamp() >= slotRequestTimeout.toMilliseconds()) {

slotRequestIterator.remove();

if (slotRequest.isAssigned()) {

cancelPendingSlotRequest(slotRequest);

}

resourceActions.notifyAllocationFailure(

slotRequest.getJobId(),

slotRequest.getAllocationId(),

new TimeoutException("The allocation could not be fulfilled in time."));

}

}

}

}

}
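
The timeout sweep above can be reduced to a small, self-contained sketch. The names below (TimeoutSweepSketch, Pending) are hypothetical stand-ins and not Flink classes. The current time is passed in as a parameter, which is an assumption made here so that the behaviour is deterministic:

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of the sweep in checkSlotRequestTimeouts(); not Flink code.
class TimeoutSweepSketch {

    // Stand-in for PendingSlotRequest: only the ID and the creation timestamp matter here.
    static final class Pending {
        final String allocationId;
        final long creationTimestamp;

        Pending(String allocationId, long creationTimestamp) {
            this.allocationId = allocationId;
            this.creationTimestamp = creationTimestamp;
        }
    }

    private final Map<String, Pending> pendingRequests = new LinkedHashMap<>();
    private final long timeoutMillis;

    TimeoutSweepSketch(long timeoutMillis) {
        this.timeoutMillis = timeoutMillis;
    }

    void add(Pending request) {
        pendingRequests.put(request.allocationId, request);
    }

    int pendingCount() {
        return pendingRequests.size();
    }

    // Removes every request that is at least timeoutMillis old and returns the IDs
    // for which an allocation failure would be reported.
    List<String> sweep(long currentTimeMillis) {
        List<String> timedOut = new ArrayList<>();
        Iterator<Map.Entry<String, Pending>> it = pendingRequests.entrySet().iterator();
        while (it.hasNext()) {
            Pending request = it.next().getValue();
            if (currentTimeMillis - request.creationTimestamp >= timeoutMillis) {
                // Removing through the iterator keeps the iteration valid.
                it.remove();
                timedOut.add(request.allocationId);
            }
        }
        return timedOut;
    }
}
```

Removing entries through the iterator, as the original method does, is what makes it safe to shrink the map while walking it.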

6. ResourceManager

We already know that the ResourceManager manages all slots registered by TaskExecutors through the SlotManager. The ResourceManager itself, however, must expose the slot-management methods to the JobMaster and the TaskExecutor as RPC methods.

6.1 RPC interface

First, let's take a look at the RPC methods related to slot management provided by ResourceManager:

interface ResourceManagerGateway {

CompletableFuture<Acknowledge> requestSlot(

JobMasterId jobMasterId,

SlotRequest slotRequest,

@RpcTimeout Time timeout);

void cancelSlotRequest(AllocationID allocationID);

CompletableFuture<Acknowledge> sendSlotReport(

ResourceID taskManagerResourceId,

InstanceID taskManagerRegistrationId,

SlotReport slotReport,

@RpcTimeout Time timeout);

void notifySlotAvailable(

InstanceID instanceId,

SlotID slotID,

AllocationID oldAllocationId);

}

Of these four methods, requestSlot and cancelSlotRequest are mainly called by the JobMaster, while sendSlotReport and notifySlotAvailable are mainly called by the TaskExecutor. After receiving one of these RPC calls, the ResourceManager delegates the actual work to the SlotManager.

6.2 Dynamic resource management

An important feature introduced by FLIP-6 is that the ResourceManager can manage TaskExecutor computing resources dynamically, which allows better integration with frameworks such as Yarn, Mesos and Kubernetes. Let's look at how this feature is implemented.

When the registered slots cannot satisfy a slot request, the SlotManager notifies the ResourceManager through the ResourceActions#allocateResource callback; when the SlotManager detects that a TaskExecutor has been idle for a long time, it notifies the ResourceManager through the ResourceActions#releaseResource callback. Through these two callbacks, the ResourceManager can dynamically request and release resources:

class ResourceManager {

private class ResourceActionsImpl implements ResourceActions {

@Override

public void releaseResource(InstanceID instanceId, Exception cause) {

validateRunsInMainThread();

//Release resources

ResourceManager.this.releaseResource(instanceId, cause);

}

@Override

public Collection<ResourceProfile> allocateResource(ResourceProfile resourceProfile) {

validateRunsInMainThread();

//How new resources are requested depends on the concrete ResourceManager implementation. The returned collection describes the resources that are promised to be allocated

return startNewWorker(resourceProfile);

}

@Override

public void notifyAllocationFailure(JobID jobId, AllocationID allocationId, Exception cause) {

validateRunsInMainThread();

JobManagerRegistration jobManagerRegistration = jobManagerRegistrations.get(jobId);

if (jobManagerRegistration != null) {

jobManagerRegistration.getJobManagerGateway().notifyAllocationFailure(allocationId, cause);

}

}

}

protected void releaseResource(InstanceID instanceId, Exception cause) {

WorkerType worker = null;

// TODO: Improve performance by having an index on the instanceId

for (Map.Entry<ResourceID, WorkerRegistration<WorkerType>> entry : taskExecutors.entrySet()) {

if (entry.getValue().getInstanceID().equals(instanceId)) {

worker = entry.getValue().getWorker();

break;

}

}

if (worker != null) {

//Stop the corresponding worker. The specific behavior is related to the implementation of different resource managers

if (stopWorker(worker)) {

closeTaskManagerConnection(worker.getResourceID(), cause);

} else {

log.debug("Worker {} could not be stopped.", worker.getResourceID());

}

} else {

// unregister in order to clean up potential left over state

slotManager.unregisterTaskManager(instanceId);

}

}

public abstract Collection<ResourceProfile> startNewWorker(ResourceProfile resourceProfile);

public abstract boolean stopWorker(WorkerType worker);

}

The two abstract methods startNewWorker and stopWorker are the key to requesting and releasing resources dynamically. In Standalone mode, the set of TaskExecutors is fixed, so dynamic startup and release are not supported. For Flink running on Yarn, YarnResourceManager implements these two methods by requesting a new container and by releasing an allocated container:

class YarnResourceManager {

@Override

public Collection<ResourceProfile> startNewWorker(ResourceProfile resourceProfile) {

Preconditions.checkArgument(

ResourceProfile.UNKNOWN.equals(resourceProfile),

"The YarnResourceManager does not support custom ResourceProfiles yet. It assumes that all containers have the same resources.");

//Apply for container

requestYarnContainer();

return slotsPerWorker;

}

@Override

public boolean stopWorker(final YarnWorkerNode workerNode) {

final Container container = workerNode.getContainer();

log.info("Stopping container {}.", container.getId());

try {

nodeManagerClient.stopContainer(container.getId(), container.getNodeId());

} catch (final Exception e) {

log.warn("Error while calling YARN Node Manager to stop container", e);

}

//Release container

resourceManagerClient.releaseAssignedContainer(container.getId());

workerNodeMap.remove(workerNode.getResourceID());

return true;

}

}
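
The split between a generic base class and framework-specific subclasses can be illustrated with a minimal sketch. All names below are hypothetical, resource profiles and worker IDs are plain strings, and the standalone variant is modelled on the assumption that it never starts or stops workers:

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.Collections;
import java.util.List;

// Hypothetical, simplified model of the template-method split; not Flink code.
abstract class ResourceManagerSketch {

    // Called when the registered slots cannot satisfy a request.
    // Returns the profiles of the slots the new worker is expected to provide.
    abstract Collection<String> startNewWorker(String resourceProfile);

    // Called when a worker has been idle for too long. Returns true if it was stopped.
    abstract boolean stopWorker(String workerId);
}

// Standalone-like behaviour: the set of workers is fixed.
class StandaloneSketch extends ResourceManagerSketch {

    @Override
    Collection<String> startNewWorker(String resourceProfile) {
        return Collections.emptyList();
    }

    @Override
    boolean stopWorker(String workerId) {
        return false;
    }
}

// Cluster-framework-like behaviour: workers are requested and released on demand.
class DynamicSketch extends ResourceManagerSketch {

    private final int slotsPerWorker;
    private final List<String> workers = new ArrayList<>();
    private int nextId = 0;

    DynamicSketch(int slotsPerWorker) {
        this.slotsPerWorker = slotsPerWorker;
    }

    @Override
    Collection<String> startNewWorker(String resourceProfile) {
        workers.add("worker-" + nextId++);
        // Promise one slot of the requested profile per slot of the new worker.
        return Collections.nCopies(slotsPerWorker, resourceProfile);
    }

    @Override
    boolean stopWorker(String workerId) {
        return workers.remove(workerId);
    }

    int workerCount() {
        return workers.size();
    }
}
```

The caller only sees the two abstract methods, so the slot-management logic does not need to know which cluster framework is in use.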

7. Slot management in JobManager

Compared with the TaskExecutor and the ResourceManager, resource management in the JobManager is somewhat more complex. This is mainly because Flink allows multiple subtasks to run in the same slot through SlotSharingGroup and CoLocationGroup constraints. The JobMaster communicates with the ResourceManager and the TaskExecutor mainly through the SlotPool, which also manages the slots allocated to the current JobMaster; the scheduling and resource allocation of all subtasks of the current Job depend mainly on the Scheduler and the SlotSharingManager.

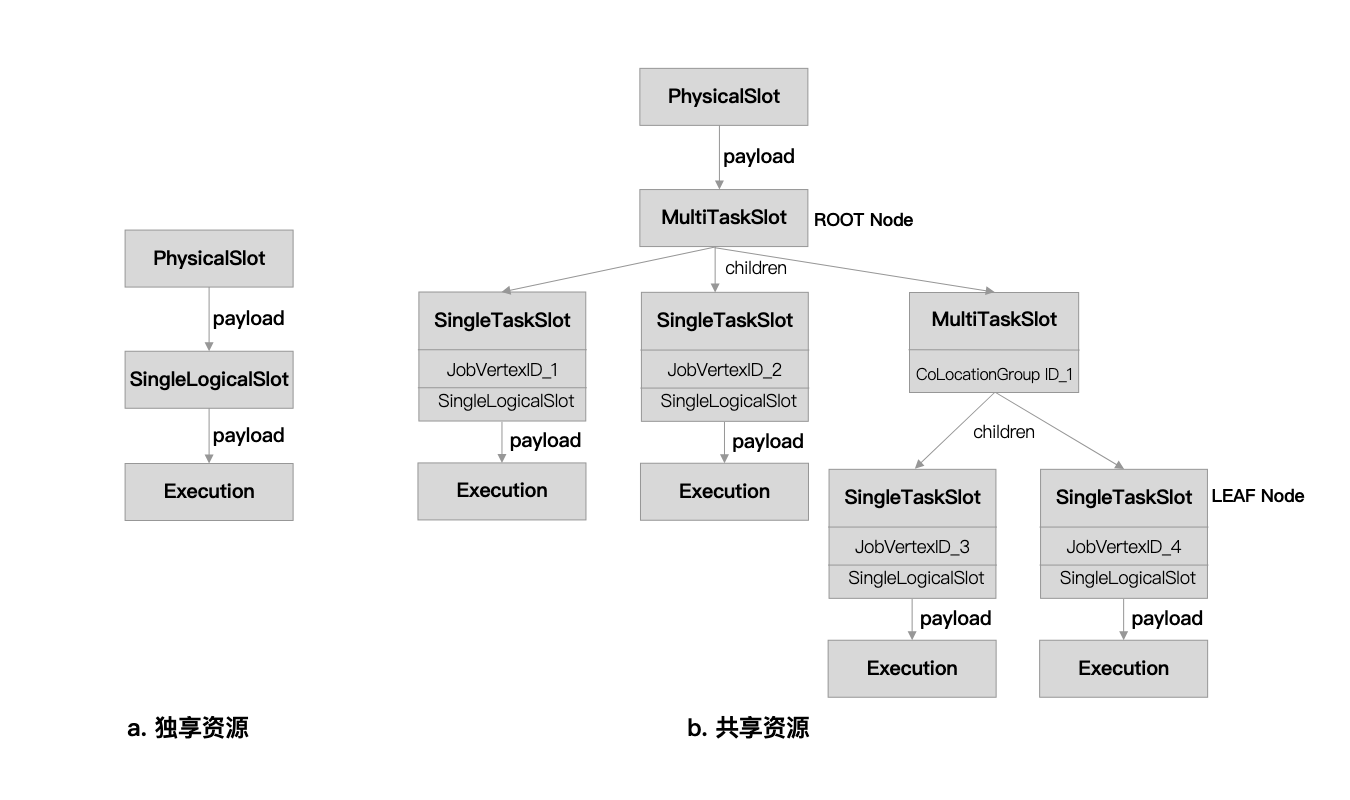

7.1 PhysicalSlot vs. LogicalSlot vs. MultiTaskSlot

First of all, we need to distinguish between PhysicalSlot and LogicalSlot: a PhysicalSlot represents a physical slot on a TaskExecutor, while a LogicalSlot represents a logical slot. A task is deployed to a LogicalSlot, but a LogicalSlot does not correspond one-to-one to a specific physical slot. Because of mechanisms such as resource sharing, multiple LogicalSlots may be mapped to the same PhysicalSlot.

The only implementation class of PhysicalSlot interface is AllocatedSlot:

public interface PhysicalSlot extends SlotContext {

boolean tryAssignPayload(Payload payload);

/**

* Payload which can be assigned to an {@link AllocatedSlot}.

*/

interface Payload {

/**

* Releases the payload

*

* @param cause of the payload release

*/

void release(Throwable cause);

}

}

class AllocatedSlot implements PhysicalSlot {

/** The ID under which the slot is allocated. Uniquely identifies the slot. */

private final AllocationID allocationId;

/** The location information of the TaskManager to which this slot belongs */

private final TaskManagerLocation taskManagerLocation;

/** The resource profile of the slot provides */

private final ResourceProfile resourceProfile;

/** RPC gateway to call the TaskManager that holds this slot */

private final TaskManagerGateway taskManagerGateway;

/** The number of the slot on the TaskManager to which slot belongs. Purely informational. */

private final int physicalSlotNumber;

private final AtomicReference<Payload> payloadReference;

}

The LogicalSlot interface and its implementation class SingleLogicalSlot:

public interface LogicalSlot {

TaskManagerLocation getTaskManagerLocation();

TaskManagerGateway getTaskManagerGateway();

int getPhysicalSlotNumber();

AllocationID getAllocationId();

SlotRequestId getSlotRequestId();

Locality getLocality();

CompletableFuture<?> releaseSlot(@Nullable Throwable cause);

@Nullable

SlotSharingGroupId getSlotSharingGroupId();

boolean tryAssignPayload(Payload payload);

Payload getPayload();

/**

* Payload for a logical slot.

*/

interface Payload {

void fail(Throwable cause);

CompletableFuture<?> getTerminalStateFuture();

}

}

public class SingleLogicalSlot implements LogicalSlot, PhysicalSlot.Payload {

private final SlotRequestId slotRequestId;

private final SlotContext slotContext;

// null if the logical slot does not belong to a slot sharing group, otherwise non-null

@Nullable

private final SlotSharingGroupId slotSharingGroupId;

// locality of this slot wrt the requested preferred locations

private final Locality locality;

// owner of this slot to which it is returned upon release

private final SlotOwner slotOwner;

private final CompletableFuture<Void> releaseFuture;

private volatile State state;

// LogicalSlot.Payload of this slot

private volatile Payload payload;

}

Note that SingleLogicalSlot implements the PhysicalSlot.Payload interface, which means that a SingleLogicalSlot can be assigned to a PhysicalSlot as its payload. Similarly, LogicalSlot specifies the payload it can carry through the LogicalSlot.Payload interface; the implementation class of this interface is Execution, that is, a task that needs to be scheduled and executed.
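
The "one payload per physical slot" rule can be sketched with an AtomicReference, which AllocatedSlot also holds as payloadReference. The class below is a hypothetical, simplified model, and its compare-and-set assignment is an assumption about how such a rule can be enforced rather than a copy of the Flink implementation:

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical, simplified model of payload assignment on a physical slot; not Flink code.
class PhysicalSlotSketch {

    // Stand-in for PhysicalSlot.Payload.
    interface Payload {
        void release(Throwable cause);
    }

    private final AtomicReference<Payload> payloadReference = new AtomicReference<>(null);

    // Succeeds only if no payload has been assigned yet.
    boolean tryAssignPayload(Payload payload) {
        return payloadReference.compareAndSet(null, payload);
    }

    boolean isUsed() {
        return payloadReference.get() != null;
    }

    // Detaches the payload (if any) and tells it why it is being released.
    void releasePayload(Throwable cause) {
        Payload payload = payloadReference.getAndSet(null);
        if (payload != null) {
            payload.release(cause);
        }
    }
}
```

In this model a second tryAssignPayload call fails until the first payload has been released, which mirrors the idea that a physical slot carries either one SingleLogicalSlot or one root MultiTaskSlot.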

We also need to pay attention to the difference between AllocationID and SlotRequestId: an AllocationID identifies the allocation of a physical slot and is always associated with an AllocatedSlot; a SlotRequestId identifies a request for a LogicalSlot made when a task is scheduled, and is associated with a LogicalSlot.

We already know that slot sharing requires mapping multiple LogicalSlots to the same PhysicalSlot. How is this mapping realized? This is where another implementation of the PhysicalSlot.Payload interface comes in: MultiTaskSlot, an inner class of SlotSharingManager.

The common parent class of MultiTaskSlot and SingleTaskSlot is TaskSlot. Slot sharing and the mandatory constraints of a CoLocationGroup are implemented by building a tree of TaskSlot nodes. A MultiTaskSlot corresponds to an internal node of the tree and can contain multiple child nodes (MultiTaskSlot or SingleTaskSlot); a SingleTaskSlot corresponds to a leaf node.

The root node of the tree is a MultiTaskSlot. The root node is assigned a SlotContext, which represents the physical slot allocated to it on a TaskExecutor. All tasks in the tree run in that same slot. A MultiTaskSlot can contain multiple leaf nodes, as long as the AbstractIDs that identify these leaf nodes differ (an AbstractID may be a JobVertexID or the ID of a CoLocationGroup).

Let's take a look at the two IDs encapsulated in TaskSlot: a SlotRequestId and an AbstractID:

class TaskSlot {

// every TaskSlot has an associated slot request id

private final SlotRequestId slotRequestId;

// all task slots except for the root slots have a group id assigned

// Except for the root node, each node has a groupId to distinguish a TaskSlot. It may be JobVertexID or the ID of the CoLocationGroup

@Nullable

private final AbstractID groupId;

public boolean contains(AbstractID groupId) {

return Objects.equals(this.groupId, groupId);

}

public abstract void release(Throwable cause);

}

MultiTaskSlot extends TaskSlot and can have multiple child nodes. It can serve as the root node or as an internal node. MultiTaskSlot also implements the PhysicalSlot.Payload interface, so it can be assigned to a PhysicalSlot (when it is the root node):

public final class MultiTaskSlot extends TaskSlot implements PhysicalSlot.Payload {

private final Map<AbstractID, TaskSlot> children;

// the root node has its parent set to null

@Nullable

private final MultiTaskSlot parent;

// underlying allocated slot

private final CompletableFuture<? extends SlotContext> slotContextFuture;

// slot request id of the allocated slot

@Nullable

private final SlotRequestId allocatedSlotRequestId;

}

SingleTaskSlot can only be used as a leaf node. It has a LogicalSlot, which can be used to assign specific tasks later:

public final class SingleTaskSlot extends TaskSlot {

private final MultiTaskSlot parent;

// future containing a LogicalSlot which is completed once the underlying SlotContext future is completed

private final CompletableFuture<SingleLogicalSlot> singleLogicalSlotFuture;

private SingleTaskSlot(

SlotRequestId slotRequestId,

AbstractID groupId,

MultiTaskSlot parent,

Locality locality) {

super(slotRequestId, groupId);

this.parent = Preconditions.checkNotNull(parent);

Preconditions.checkNotNull(locality);

singleLogicalSlotFuture = parent.getSlotContextFuture()

.thenApply(

(SlotContext slotContext) -> {

//Create a SingleLogicalSlot after the parent node is assigned a PhysicalSlot

LOG.trace("Fulfill single task slot [{}] with slot [{}].", slotRequestId, slotContext.getAllocationId());

return new SingleLogicalSlot(

slotRequestId,

slotContext,

slotSharingGroupId,

locality,

slotOwner);

});

}

}

More specifically, for an ordinary SlotSharingGroup constraint, the tree consists of a MultiTaskSlot as the root node and multiple SingleTaskSlots as leaf nodes. The leaf nodes represent different tasks and are distinguished by their JobVertexIDs. For a CoLocationGroup constraint, a second-level MultiTaskSlot node (identified by the CoLocationGroup ID) is created below the root MultiTaskSlot, and the subtasks under the same CoLocationGroup constraint become leaf nodes of that second-level MultiTaskSlot.
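
The tree shape described above can be modelled in a few lines. The classes below are hypothetical stand-ins that use plain strings as group IDs; in particular, the recursive contains check is an assumption of this sketch about how a subtree can be searched for a group ID:

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical, simplified model of the TaskSlot tree; not Flink code.
abstract class TaskSlotSketch {

    // null for the root node, otherwise a JobVertexID-like or CoLocationGroup-like ID
    final String groupId;

    TaskSlotSketch(String groupId) {
        this.groupId = groupId;
    }

    boolean contains(String otherGroupId) {
        return Objects.equals(groupId, otherGroupId);
    }
}

// Leaf node: stands for a single task.
final class SingleTaskSlotSketch extends TaskSlotSketch {

    SingleTaskSlotSketch(String groupId) {
        super(groupId);
    }
}

// Root or internal node: holds children keyed by their group ID.
final class MultiTaskSlotSketch extends TaskSlotSketch {

    private final Map<String, TaskSlotSketch> children = new LinkedHashMap<>();

    MultiTaskSlotSketch(String groupId) {
        super(groupId);
    }

    MultiTaskSlotSketch addMultiChild(String childGroupId) {
        MultiTaskSlotSketch child = new MultiTaskSlotSketch(childGroupId);
        addChild(child);
        return child;
    }

    SingleTaskSlotSketch addSingleChild(String childGroupId) {
        SingleTaskSlotSketch child = new SingleTaskSlotSketch(childGroupId);
        addChild(child);
        return child;
    }

    private void addChild(TaskSlotSketch child) {
        if (children.containsKey(child.groupId)) {
            throw new IllegalStateException("Group " + child.groupId + " is already present under this node");
        }
        children.put(child.groupId, child);
    }

    // A group counts as contained if this node or any descendant carries it.
    @Override
    boolean contains(String otherGroupId) {
        if (super.contains(otherGroupId)) {
            return true;
        }
        for (TaskSlotSketch child : children.values()) {
            if (child.contains(otherGroupId)) {
                return true;
            }
        }
        return false;
    }
}
```

A root created with new MultiTaskSlotSketch(null) that receives a "source" leaf and a co-location child holding a "map" leaf then reports both "source" and "map" as contained, so neither operator could place a second subtask in the same tree.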

7.2 SlotPool

The JobManager uses the SlotPool to request slots from the ResourceManager and to manage all slots assigned to the current JobManager. The slots discussed in this section are physical slots.

The only implementation class of the SlotPool interface is SlotPoolImpl. Let's take a look at several key member variables:

class SlotPoolImpl implements SlotPool {

/** The book-keeping of all allocated slots. */

//All slots assigned to the current JobManager

private final AllocatedSlots allocatedSlots;

/** The book-keeping of all available slots. */

//All available slots (already assigned to the JobManager but not loaded with payload)

private final AvailableSlots availableSlots;

/** All pending requests waiting for slots. */

//All slot requests in waiting status (the requests have already been sent to the ResourceManager)

private final DualKeyMap<SlotRequestId, AllocationID, PendingRequest> pendingRequests;

/** The requests that are waiting for the resource manager to be connected. */

//Slot requests in waiting state (not yet sent to the ResourceManager because there is currently no connection to it)

private final HashMap<SlotRequestId, PendingRequest> waitingForResourceManager;

}

Each slot assigned to a SlotPool is uniquely identified by its AllocationID. The getAvailableSlotsInformation method returns the currently available slots (those without a payload); allocateAvailableSlot then assigns the AllocatedSlot associated with a specific AllocationID to the request identified by the given SlotRequestId:

class SlotPoolImpl implements SlotPool {

//Lists the currently available slots

@Override

public Collection<SlotInfo> getAvailableSlotsInformation() {

return availableSlots.listSlotInfo();

}

//Assign the slot associated with the allocationID to the request corresponding to the slotRequestId

@Override

public Optional<PhysicalSlot> allocateAvailableSlot(

@Nonnull SlotRequestId slotRequestId,

@Nonnull AllocationID allocationID) {

componentMainThreadExecutor.assertRunningInMainThread();

//Remove from availableSlots

AllocatedSlot allocatedSlot = availableSlots.tryRemove(allocationID);

if (allocatedSlot != null) {

//Join the assigned mapping relationship

allocatedSlots.add(slotRequestId, allocatedSlot);

return Optional.of(allocatedSlot);

} else {

return Optional.empty();

}

}

}

If no slot is currently available, the SlotPool can be asked to request one from the ResourceManager:

class SlotPoolImpl implements SlotPool {

//Apply to RM for a new slot

@Override

public CompletableFuture<PhysicalSlot> requestNewAllocatedSlot(

@Nonnull SlotRequestId slotRequestId,

@Nonnull ResourceProfile resourceProfile,

Time timeout) {

return requestNewAllocatedSlotInternal(slotRequestId, resourceProfile, timeout)

.thenApply((Function.identity()));

}

private CompletableFuture<AllocatedSlot> requestNewAllocatedSlotInternal(

@Nonnull SlotRequestId slotRequestId,

@Nonnull ResourceProfile resourceProfile,

@Nonnull Time timeout) {

componentMainThreadExecutor.assertRunningInMainThread();

//Construct a PendingRequest

final PendingRequest pendingRequest = new PendingRequest(

slotRequestId,

resourceProfile);

// register request timeout

FutureUtils

.orTimeout(

pendingRequest.getAllocatedSlotFuture(),

timeout.toMilliseconds(),

TimeUnit.MILLISECONDS,

componentMainThreadExecutor)

.whenComplete(

(AllocatedSlot ignored, Throwable throwable) -> {

if (throwable instanceof TimeoutException) {

//timeout handler

timeoutPendingSlotRequest(slotRequestId);

}

});

if (resourceManagerGateway == null) {

//If no connection is currently established with RM, you need to wait for RM to establish a connection

stashRequestWaitingForResourceManager(pendingRequest);

} else {

//Currently, you have established a connection with RM and apply for a slot from RM

requestSlotFromResourceManager(resourceManagerGateway, pendingRequest);

}

return pendingRequest.getAllocatedSlotFuture();

}

//If no connection is currently established with RM, you need to wait for RM to establish a connection and join waitingForResourceManager

//Once a connection is established with RM, a request is sent to RM

private void stashRequestWaitingForResourceManager(final PendingRequest pendingRequest) {

log.info("Cannot serve slot request, no ResourceManager connected. " +

"Adding as pending request [{}]", pendingRequest.getSlotRequestId());

waitingForResourceManager.put(pendingRequest.getSlotRequestId(), pendingRequest);

}

//Currently, you have established a connection with RM and apply for a slot from RM

private void requestSlotFromResourceManager(

final ResourceManagerGateway resourceManagerGateway,

final PendingRequest pendingRequest) {

//Generate an AllocationID; the slot allocated later is identified by this AllocationID

final AllocationID allocationId = new AllocationID();

//Add to pending requests

pendingRequests.put(pendingRequest.getSlotRequestId(), allocationId, pendingRequest);

pendingRequest.getAllocatedSlotFuture().whenComplete(

(AllocatedSlot allocatedSlot, Throwable throwable) -> {

if (throwable != null || !allocationId.equals(allocatedSlot.getAllocationId())) {

// cancel the slot request if there is a failure or if the pending request has

// been completed with another allocated slot

resourceManagerGateway.cancelSlotRequest(allocationId);

}

});

//Request slot from RM through RPC call. The processing flow of RM has been described earlier

CompletableFuture<Acknowledge> rmResponse = resourceManagerGateway.requestSlot(

jobMasterId,

new SlotRequest(jobId, allocationId, pendingRequest.getResourceProfile(), jobManagerAddress),

rpcTimeout);

FutureUtils.whenCompleteAsyncIfNotDone(

rmResponse,

componentMainThreadExecutor,

(Acknowledge ignored, Throwable failure) -> {

// on failure, fail the request future

if (failure != null) {

slotRequestToResourceManagerFailed(pendingRequest.getSlotRequestId(), failure);

}

});

}

}
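
The "stash while disconnected, forward once connected" behaviour described in the comments above can be sketched as follows. The names are hypothetical, and the connect method is an assumption added here to show when the stashed requests would be forwarded:

```java
import java.util.LinkedHashSet;
import java.util.Set;

// Hypothetical, simplified model of stashing requests until a gateway is available; not Flink code.
class StashingRequesterSketch {

    // Stand-in for the ResourceManager gateway.
    interface Gateway {
        void requestSlot(String slotRequestId);
    }

    // null until a connection has been established
    private Gateway gateway;

    // requests that could not be sent yet, in arrival order
    private final Set<String> waitingForGateway = new LinkedHashSet<>();

    void request(String slotRequestId) {
        if (gateway == null) {
            // No connection yet: remember the request for later.
            waitingForGateway.add(slotRequestId);
        } else {
            gateway.requestSlot(slotRequestId);
        }
    }

    // Assumed hook: called once the connection exists; forwards everything that was stashed.
    void connect(Gateway newGateway) {
        gateway = newGateway;
        for (String slotRequestId : waitingForGateway) {
            gateway.requestSlot(slotRequestId);
        }
        waitingForGateway.clear();
    }

    int waitingCount() {
        return waitingForGateway.size();
    }
}
```

Keeping the stashed requests in arrival order means that, in this sketch, they reach the gateway in the order in which they were issued.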

Once the ResourceManager has completed the slot allocation flow, the allocated slot is offered to the JobManager, and eventually the SlotPool.offerSlots() method is called:

class SlotPoolImpl {

//Offer slots to the SlotPool and return the set of accepted slots. Slots that are not accepted can be assigned to other jobs by the RM.

@Override

public Collection<SlotOffer> offerSlots(

TaskManagerLocation taskManagerLocation,

TaskManagerGateway taskManagerGateway,

Collection<SlotOffer> offers) {

ArrayList<SlotOffer> result = new ArrayList<>(offers.size());

//SlotPool can determine whether to accept each slot

for (SlotOffer offer : offers) {

if (offerSlot(

taskManagerLocation,

taskManagerGateway,

offer)) {

result.add(offer);

}

}

return result;

}

boolean offerSlot(

final TaskManagerLocation taskManagerLocation,

final TaskManagerGateway taskManagerGateway,

final SlotOffer slotOffer) {

componentMainThreadExecutor.assertRunningInMainThread();

// check if this TaskManager is valid

final ResourceID resourceID = taskManagerLocation.getResourceID();

final AllocationID allocationID = slotOffer.getAllocationId();

if (!registeredTaskManagers.contains(resourceID)) {

log.debug("Received outdated slot offering [{}] from unregistered TaskManager: {}",

slotOffer.getAllocationId(), taskManagerLocation);

return false;

}

// check whether we have already using this slot

// If the AllocationID associated with the current slot already appears in the SlotPool

AllocatedSlot existingSlot;

if ((existingSlot = allocatedSlots.get(allocationID)) != null ||

(existingSlot = availableSlots.get(allocationID)) != null) {

// we need to figure out if this is a repeated offer for the exact same slot,

// or another offer that comes from a different TaskManager after the ResourceManager

// re-tried the request

// we write this in terms of comparing slot IDs, because the Slot IDs are the identifiers of

// the actual slots on the TaskManagers

// Note: The slotOffer should have the SlotID

final SlotID existingSlotId = existingSlot.getSlotId();

final SlotID newSlotId = new SlotID(taskManagerLocation.getResourceID(), slotOffer.getSlotIndex());

if (existingSlotId.equals(newSlotId)) {

//This slot has been accepted by the SlotPool before, which is equivalent to the TaskExecutor sending a duplicate offer

log.info("Received repeated offer for slot [{}]. Ignoring.", allocationID);

// return true here so that the sender will get a positive acknowledgement to the retry

// and mark the offering as a success

return true;

} else {

//There is already another AllocatedSlot associated with this AllocationID, so the current slot cannot be accepted

// the allocation has been fulfilled by another slot, reject the offer so the task executor

// will offer the slot to the resource manager

return false;

}

}

//The allocation ID associated with this slot has not appeared before

//Create an AllocatedSlot object to represent the newly allocated slot

final AllocatedSlot allocatedSlot = new AllocatedSlot(

allocationID,

taskManagerLocation,

slotOffer.getSlotIndex(),

slotOffer.getResourceProfile(),

taskManagerGateway);

// check whether we have request waiting for this slot

// Check whether there is a request associated with this AllocationID

PendingRequest pendingRequest = pendingRequests.removeKeyB(allocationID);

if (pendingRequest != null) {

// we were waiting for this!

//There is a pending request waiting for this slot

allocatedSlots.add(pendingRequest.getSlotRequestId(), allocatedSlot);

//Try to complete the waiting request

if (!pendingRequest.getAllocatedSlotFuture().complete(allocatedSlot)) {

// we could not complete the pending slot future --> try to fulfill another pending request

//failed

allocatedSlots.remove(pendingRequest.getSlotRequestId());

//Try to satisfy other waiting requests

tryFulfillSlotRequestOrMakeAvailable(allocatedSlot);

} else {

log.debug("Fulfilled slot request [{}] with allocated slot [{}].", pendingRequest.getSlotRequestId(), allocationID);

}

}

else {

//There is no request waiting for this slot. Maybe the request has been satisfied

// we were actually not waiting for this:

// - could be that this request had been fulfilled

// - we are receiving the slots from TaskManagers after becoming leaders

//Try to satisfy other waiting requests

tryFulfillSlotRequestOrMakeAvailable(allocatedSlot);

}

// we accepted the request in any case. slot will be released after it idled for

// too long and timed out

return true;

}

}

Once a new AllocatedSlot is available, SlotPoolImpl tries to use it to fulfill another pending request ahead of time:

class SlotPoolImpl implements SlotPool {

private void tryFulfillSlotRequestOrMakeAvailable(AllocatedSlot allocatedSlot) {

Preconditions.checkState(!allocatedSlot.isUsed(), "Provided slot is still in use.");

//Find the pending requests that match the computing resources of the current AllocatedSlot

final PendingRequest pendingRequest = pollMatchingPendingRequest(allocatedSlot);

if (pendingRequest != null) {

//If there are matching requests, AllocatedSlot is assigned to the waiting requests

log.debug("Fulfilling pending slot request [{}] early with returned slot [{}]",

pendingRequest.getSlotRequestId(), allocatedSlot.getAllocationId());

allocatedSlots.add(pendingRequest.getSlotRequestId(), allocatedSlot);

pendingRequest.getAllocatedSlotFuture().complete(allocatedSlot);

} else {

//If not, the allocated slot becomes available

log.debug("Adding returned slot [{}] to available slots", allocatedSlot.getAllocationId());

availableSlots.add(allocatedSlot, clock.relativeTimeMillis());

}

}

private PendingRequest pollMatchingPendingRequest(final AllocatedSlot slot) {

final ResourceProfile slotResources = slot.getResourceProfile();

// try the requests sent to the resource manager first

for (PendingRequest request : pendingRequests.values()) {

if (slotResources.isMatching(request.getResourceProfile())) {

pendingRequests.removeKeyA(request.getSlotRequestId());

return request;

}

}

// try the requests waiting for a resource manager connection next

for (PendingRequest request : waitingForResourceManager.values()) {

if (slotResources.isMatching(request.getResourceProfile())) {

waitingForResourceManager.remove(request.getSlotRequestId());

return request;

}

}

// no request pending, or no request matches

return null;

}

}
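
The two-stage lookup in pollMatchingPendingRequest can be sketched with two ordered maps. Everything below is hypothetical: requests are reduced to an ID plus a requested amount of memory, and "matching" is assumed to mean that the slot offers at least the requested amount:

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical, simplified model of pollMatchingPendingRequest(); not Flink code.
class PendingRequestMatcherSketch {

    // requestId -> requested memory in MB, for requests already sent to the resource manager
    private final Map<String, Integer> sentToResourceManager = new LinkedHashMap<>();

    // requestId -> requested memory in MB, for requests still waiting for a connection
    private final Map<String, Integer> waitingForResourceManager = new LinkedHashMap<>();

    // Stand-in for ResourceProfile matching (an assumption of this sketch).
    static boolean isMatching(int slotMemoryMb, int requestedMemoryMb) {
        return slotMemoryMb >= requestedMemoryMb;
    }

    void addSent(String requestId, int requestedMemoryMb) {
        sentToResourceManager.put(requestId, requestedMemoryMb);
    }

    void addWaiting(String requestId, int requestedMemoryMb) {
        waitingForResourceManager.put(requestId, requestedMemoryMb);
    }

    // Returns and removes the first matching request, preferring requests that were
    // already sent to the resource manager; returns null if nothing matches.
    String pollMatching(int slotMemoryMb) {
        String match = pollFrom(sentToResourceManager, slotMemoryMb);
        return match != null ? match : pollFrom(waitingForResourceManager, slotMemoryMb);
    }

    private static String pollFrom(Map<String, Integer> requests, int slotMemoryMb) {
        for (Map.Entry<String, Integer> entry : requests.entrySet()) {
            if (isMatching(slotMemoryMb, entry.getValue())) {
                String requestId = entry.getKey();
                // Safe here because we return immediately and never advance the iterator again.
                requests.remove(requestId);
                return requestId;
            }
        }
        return null;
    }
}
```

As in the original method, removing the entry inside the loop is only safe because the loop is left right afterwards.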

When the SlotPool starts, it also schedules a periodic task that checks for idle slots. If a slot has been idle for too long, it is returned to the TaskManager:

class SlotPoolImpl {

private void checkIdleSlot() {

// The timestamp in SlotAndTimestamp is relative

final long currentRelativeTimeMillis = clock.relativeTimeMillis();

final List<AllocatedSlot> expiredSlots = new ArrayList<>(availableSlots.size());

for (SlotAndTimestamp slotAndTimestamp : availableSlots.availableSlots.values()) {

if (currentRelativeTimeMillis - slotAndTimestamp.timestamp > idleSlotTimeout.toMilliseconds()) {

expiredSlots.add(slotAndTimestamp.slot);

}

}

final FlinkException cause = new FlinkException("Releasing idle slot.");

for (AllocatedSlot expiredSlot : expiredSlots) {

final AllocationID allocationID = expiredSlot.getAllocationId();

if (availableSlots.tryRemove(allocationID) != null) {

//Return the idle slot to the TaskManager

log.info("Releasing idle slot [{}].", allocationID);

final CompletableFuture<Acknowledge> freeSlotFuture = expiredSlot.getTaskManagerGateway().freeSlot(

allocationID,

cause,

rpcTimeout);

FutureUtils.whenCompleteAsyncIfNotDone(

freeSlotFuture,

componentMainThreadExecutor,

(Acknowledge ignored, Throwable throwable) -> {

if (throwable != null) {

if (registeredTaskManagers.contains(expiredSlot.getTaskManagerId())) {

log.debug("Releasing slot [{}] of registered TaskExecutor {} failed. " +

"Trying to fulfill a different slot request.", allocationID, expiredSlot.getTaskManagerId(),

throwable);

tryFulfillSlotRequestOrMakeAvailable(expiredSlot);

} else {

log.debug("Releasing slot [{}] failed and owning TaskExecutor {} is no " +

"longer registered. Discarding slot.", allocationID, expiredSlot.getTaskManagerId());

}

}

});

}

}

scheduleRunAsync(this::checkIdleSlot, idleSlotTimeout);

}

}
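
The collect-then-remove pattern used by checkIdleSlot can be shown in isolation. The class below is a hypothetical model that tracks only allocation IDs and the relative time at which each slot became available; the clock value is passed in explicitly, which is an assumption made to keep the sketch deterministic:

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of the idle-slot check; not Flink code.
class IdleSlotSketch {

    // allocationId -> relative time (ms) at which the slot became available
    private final Map<String, Long> availableSince = new LinkedHashMap<>();
    private final long idleTimeoutMillis;

    IdleSlotSketch(long idleTimeoutMillis) {
        this.idleTimeoutMillis = idleTimeoutMillis;
    }

    void makeAvailable(String allocationId, long nowMillis) {
        availableSince.put(allocationId, nowMillis);
    }

    int availableCount() {
        return availableSince.size();
    }

    // First collects the expired slots, then removes them, so the map is never
    // modified while it is being iterated. Returns the IDs that would be freed.
    List<String> releaseIdle(long nowMillis) {
        List<String> expired = new ArrayList<>();
        for (Map.Entry<String, Long> entry : availableSince.entrySet()) {
            // Strictly greater than the timeout, as in the original check.
            if (nowMillis - entry.getValue() > idleTimeoutMillis) {
                expired.add(entry.getKey());
            }
        }
        for (String allocationId : expired) {
            availableSince.remove(allocationId);
        }
        return expired;
    }
}
```

With a timeout of 100 ms, a slot that became available at time 0 is kept at time 100 and released at time 101, because the comparison is strict.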

8. Scheduler and SlotSharingManager

We have learned that the SlotPool is mainly responsible for managing the PhysicalSlots assigned to the current JobMaster. The computing resources required by each task, however, are scheduled and managed in terms of LogicalSlots. Different tasks have different LogicalSlots, but their underlying PhysicalSlots may be the same. The main logic is encapsulated in SlotSharingManager and Scheduler.

As mentioned earlier, building a tree of TaskSlot nodes makes it possible to implement resource sharing within a SlotSharingGroup and the mandatory constraints of a CoLocationGroup. This is mainly done by the SlotSharingManager, and each SlotSharingGroup has its own SlotSharingManager.

The main member variables of SlotSharingManager are as follows. In addition to the associated SlotSharingGroupId, the most important are the three maps used to manage TaskSlot:

class SlotSharingManager {

private final SlotSharingGroupId slotSharingGroupId;

/** Actions to release allocated slots after a complete multi task slot hierarchy has been released. */

private final AllocatedSlotActions allocatedSlotActions;

/** Owner of the slots to which to return them when they are released from the outside. */

private final SlotOwner slotOwner;

//All taskslots, including root, inner and leaf

private final Map<SlotRequestId, TaskSlot> allTaskSlots;

//root MultiTaskSlot, but the underlying Physical Slot has not been allocated

/** Root nodes which have not been completed because the allocated slot is still pending. */

private final Map<SlotRequestId, MultiTaskSlot> unresolvedRootSlots;

//root MultiTaskSlot, the underlying physical slot has also been allocated and organized in the way of two-tier map,

//You can find the location of the TaskManager where the assigned Physical slot is located

/** Root nodes which have been completed (the underlying allocated slot has been assigned). */

private final Map<TaskManagerLocation, Map<AllocationID, MultiTaskSlot>> resolvedRootSlots;

}

When you need to construct a new TaskSlot tree, you need to call createRootSlot to create the root node:

class SlotSharingManager {

MultiTaskSlot createRootSlot(

SlotRequestId slotRequestId,

CompletableFuture<? extends SlotContext> slotContextFuture,

SlotRequestId allocatedSlotRequestId) {

final MultiTaskSlot rootMultiTaskSlot = new MultiTaskSlot(

slotRequestId,

slotContextFuture,

allocatedSlotRequestId);

LOG.debug("Create multi task slot [{}] in slot [{}].", slotRequestId, allocatedSlotRequestId);

allTaskSlots.put(slotRequestId, rootMultiTaskSlot);

//Add to unresolvedRootSlots first

unresolvedRootSlots.put(slotRequestId, rootMultiTaskSlot);

// add the root node to the set of resolved root nodes once the SlotContext future has

// been completed and we know the slot's TaskManagerLocation

slotContextFuture.whenComplete(

(SlotContext slotContext, Throwable throwable) -> {

if (slotContext != null) {

//Once the physical slot is allocated, the root is removed from unresolvedRootSlots and added to resolvedRootSlots

final MultiTaskSlot resolvedRootNode = unresolvedRootSlots.remove(slotRequestId);

if (resolvedRootNode != null) {

final AllocationID allocationId = slotContext.getAllocationId();

LOG.trace("Fulfill multi task slot [{}] with slot [{}].", slotRequestId, allocationId);

final Map<AllocationID, MultiTaskSlot> innerMap = resolvedRootSlots.computeIfAbsent(

slotContext.getTaskManagerLocation(),

taskManagerLocation -> new HashMap<>(4));

MultiTaskSlot previousValue = innerMap.put(allocationId, resolvedRootNode);

Preconditions.checkState(previousValue == null);

}

} else {

rootMultiTaskSlot.release(throwable);

}

});

return rootMultiTaskSlot;

}

}
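The bookkeeping in createRootSlot can be illustrated with a much smaller model. The following is only a sketch under simplifying assumptions: RootSlotRegistry and Context are hypothetical names, plain strings stand in for SlotRequestId, TaskManagerLocation and AllocationID, and a failed future simply drops the root instead of calling release as the original does.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;

/** Simplified stand-in for the root bookkeeping in SlotSharingManager; all names are hypothetical. */
class RootSlotRegistry {

    /** Minimal stand-in for SlotContext: where the physical slot lives and its allocation id. */
    static final class Context {
        final String location;
        final String allocationId;

        Context(String location, String allocationId) {
            this.location = location;
            this.allocationId = allocationId;
        }
    }

    // Roots whose physical slot is still pending, keyed by request id.
    final Map<String, CompletableFuture<Context>> unresolved = new HashMap<>();

    // Roots whose physical slot is known: location -> (allocation id -> request id).
    final Map<String, Map<String, String>> resolved = new HashMap<>();

    void createRoot(String requestId, CompletableFuture<Context> contextFuture) {
        // Every new root starts out as unresolved.
        unresolved.put(requestId, contextFuture);

        contextFuture.whenComplete((context, failure) -> {
            // Whether the future succeeded or failed, the root is no longer pending.
            boolean wasPending = unresolved.remove(requestId) != null;
            if (context != null && wasPending) {
                // Success: file the root under its location, then under its allocation id.
                resolved
                    .computeIfAbsent(context.location, location -> new HashMap<>())
                    .put(context.allocationId, requestId);
            }
        });
    }
}
```

Completing the future with new Context("tm-1", "alloc-1") moves the root from unresolved into resolved.get("tm-1"), which is the same two-step lookup that getResolvedRootSlot relies on.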

In addition, different tasks in Flink can share a slot as long as they belong to the same SlotSharingGroup, with one implicit condition: the tasks must be subtasks of different operators. For example, if the map operator has a parallelism of three, the map[1] and map[2] subtasks cannot be placed in the same PhysicalSlot. Both listResolvedRootSlotInfo and getUnresolvedRootSlot apply the check !multiTaskSlot.contains(groupId), which ensures that different subtasks of the same operator never appear in the same TaskSlot tree.

class SlotSharingManager {

@Nonnull

public Collection<SlotInfo> listResolvedRootSlotInfo(@Nullable AbstractID groupId) {

// List the root MultiTaskSlots that already have a physical slot and do not contain the given groupId

return resolvedRootSlots

.values()

.stream()

.flatMap((Map<AllocationID, MultiTaskSlot> map) -> map.values().stream())

.filter((MultiTaskSlot multiTaskSlot) -> !multiTaskSlot.contains(groupId))

.map((MultiTaskSlot multiTaskSlot) -> (SlotInfo) multiTaskSlot.getSlotContextFuture().join())

.collect(Collectors.toList());

}

@Nullable

public MultiTaskSlot getResolvedRootSlot(@Nonnull SlotInfo slotInfo) {

// Look up the MultiTaskSlot by SlotInfo (TaskManagerLocation and AllocationID)

Map<AllocationID, MultiTaskSlot> forLocationEntry = resolvedRootSlots.get(slotInfo.getTaskManagerLocation());

return forLocationEntry != null ? forLocationEntry.get(slotInfo.getAllocationId()) : null;

}

/**

* Gets an unresolved slot which does not yet contain the given groupId. An unresolved

* slot is a slot whose underlying allocated slot has not been allocated yet.

*

* @param groupId which the returned slot must not contain

* @return the unresolved slot or null if there was no root slot with free capacities

*/

@Nullable

MultiTaskSlot getUnresolvedRootSlot(AbstractID groupId) {

// Find a root MultiTaskSlot that does not contain the given groupId

for (MultiTaskSlot multiTaskSlot : unresolvedRootSlots.values()) {

if (!multiTaskSlot.contains(groupId)) {

return multiTaskSlot;

}

}

return null;

}

}
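The effect of the contains(groupId) check can be seen in a small model. This is a sketch rather than Flink code: SharedSlotPicker and Root are hypothetical names, and a string group id is assumed to identify the operator a subtask belongs to.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Optional;
import java.util.Set;

/** Hypothetical model of placing subtasks into shared slots. */
class SharedSlotPicker {

    /** Stand-in for a root MultiTaskSlot: the set of group ids already placed in its tree. */
    static final class Root {
        final String name;
        final Set<String> groupIds = new HashSet<>();

        Root(String name) {
            this.name = name;
        }

        boolean contains(String groupId) {
            return groupIds.contains(groupId);
        }
    }

    final List<Root> roots = new ArrayList<>();

    /** Returns the first root that does not yet hold the given group id. */
    Optional<Root> findRootFor(String groupId) {
        return roots.stream()
            .filter(root -> !root.contains(groupId))
            .findFirst();
    }

    /** Places a subtask: reuse a compatible root, otherwise open a new one. */
    Root place(String groupId) {
        Root root = findRootFor(groupId).orElseGet(() -> {
            Root fresh = new Root("slot-" + roots.size());
            roots.add(fresh);
            return fresh;
        });
        root.groupIds.add(groupId);
        return root;
    }
}
```

Placing two subtasks of "map" opens two roots, because the second subtask is rejected by the first root. A subsequent "sink" subtask can then join the first root, so the two operators end up sharing a slot.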

During task scheduling, requests for LogicalSlot resources go through the Scheduler interface, which extends the SlotProvider interface. Its only implementation class is SchedulerImpl.

public interface SlotProvider {

// Request a slot; returns a future of the LogicalSlot

CompletableFuture<LogicalSlot> allocateSlot(

SlotRequestId slotRequestId,

ScheduledUnit scheduledUnit,

SlotProfile slotProfile,

boolean allowQueuedScheduling,

Time allocationTimeout);

void cancelSlotRequest(

SlotRequestId slotRequestId,

@Nullable SlotSharingGroupId slotSharingGroupId,

Throwable cause);

}

public interface Scheduler extends SlotProvider, SlotOwner {

void start(@Nonnull ComponentMainThreadExecutor mainThreadExecutor);

boolean requiresPreviousExecutionGraphAllocations();

}

The main member variables of SchedulerImpl are:

class SchedulerImpl implements Scheduler {

private final SlotSelectionStrategy slotSelectionStrategy;

private final SlotPool slotPool;

private final Map<SlotSharingGroupId, SlotSharingManager> slotSharingManagers;

}

SchedulerImpl requests physical slots through SlotPool and shares them through SlotSharingManager. The SlotSelectionStrategy interface is used to pick the most suitable slot from a set of candidate slots.
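What "most suitable" means depends on the strategy. As a purely illustrative sketch, a strategy might prefer candidates on hosts the task would like to run on and fall back to any candidate otherwise. LocalityFirstSelector and Candidate are hypothetical names and do not correspond to the actual Flink classes.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;

/** Hypothetical locality-first selection; not the real SlotSelectionStrategy. */
class LocalityFirstSelector {

    /** A candidate slot: its allocation id plus the host its TaskManager runs on. */
    static final class Candidate {
        final String allocationId;
        final String host;

        Candidate(String allocationId, String host) {
            this.allocationId = allocationId;
            this.host = host;
        }
    }

    /** Picks a candidate on a preferred host if one exists, otherwise the first candidate. */
    static Optional<Candidate> select(List<Candidate> candidates, Set<String> preferredHosts) {
        Optional<Candidate> local = candidates.stream()
            .filter(candidate -> preferredHosts.contains(candidate.host))
            .findFirst();
        return local.isPresent() ? local : candidates.stream().findFirst();
    }
}
```

An empty result means no candidate is available at all, which is the case in which the scheduler has to request a new slot or fail.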

class SchedulerImpl {

public CompletableFuture<LogicalSlot> allocateSlot(

SlotRequestId slotRequestId,

ScheduledUnit scheduledUnit,

SlotProfile slotProfile,

boolean allowQueuedScheduling,

Time allocationTimeout) {

log.debug("Received slot request [{}] for task: {}", slotRequestId, scheduledUnit.getTaskToExecute());

componentMainThreadExecutor.assertRunningInMainThread();

final CompletableFuture<LogicalSlot> allocationResultFuture = new CompletableFuture<>();

// If no SlotSharingGroupId is specified, the task does not take part in slot sharing and needs a slot of its own

CompletableFuture<LogicalSlot> allocationFuture = scheduledUnit.getSlotSharingGroupId() == null ?

allocateSingleSlot(slotRequestId, slotProfile, allowQueuedScheduling, allocationTimeout) :

allocateSharedSlot(slotRequestId, scheduledUnit, slotProfile, allowQueuedScheduling, allocationTimeout);

allocationFuture.whenComplete((LogicalSlot slot, Throwable failure) -> {

if (failure != null) {

cancelSlotRequest(

slotRequestId,

scheduledUnit.getSlotSharingGroupId(),

failure);

allocationResultFuture.completeExceptionally(failure);

} else {

allocationResultFuture.complete(slot);

}

});

return allocationResultFuture;

}

@Override

public void cancelSlotRequest(

SlotRequestId slotRequestId,

@Nullable SlotSharingGroupId slotSharingGroupId,

Throwable cause) {

componentMainThreadExecutor.assertRunningInMainThread();

if (slotSharingGroupId != null) {

releaseSharedSlot(slotRequestId, slotSharingGroupId, cause);

} else {

slotPool.releaseSlot(slotRequestId, cause);

}

}

@Override

public void returnLogicalSlot(LogicalSlot logicalSlot) {

SlotRequestId slotRequestId = logicalSlot.getSlotRequestId();

SlotSharingGroupId slotSharingGroupId = logicalSlot.getSlotSharingGroupId();

FlinkException cause = new FlinkException("Slot is being returned to the SlotPool.");

cancelSlotRequest(slotRequestId, slotSharingGroupId, cause);

}

}
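The pattern used in allocateSlot, cleaning up the request before handing the failure to the caller, can be isolated in a few lines. This is a minimal sketch: CleanupOnFailure is a hypothetical name, strings stand in for slots and request ids, and the cancelled list merely records what a real cancelSlotRequest would release.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;

/** Hypothetical model of the "cancel on failure" wrapper around an allocation future. */
class CleanupOnFailure {

    // Records the request ids for which cleanup was triggered.
    final List<String> cancelled = new ArrayList<>();

    CompletableFuture<String> allocate(String requestId, CompletableFuture<String> inner) {
        // The caller only ever sees this future, never the inner one.
        CompletableFuture<String> result = new CompletableFuture<>();

        inner.whenComplete((slot, failure) -> {
            if (failure != null) {
                // Clean up first, then propagate the failure.
                cancelled.add(requestId);
                result.completeExceptionally(failure);
            } else {
                result.complete(slot);
            }
        });
        return result;
    }
}
```

Because the result future is completed only after the cleanup step, a caller reacting to the failure can assume the request has already been cancelled.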

The logic of these public methods is fairly straightforward. Next, let's look at the internal implementation.

If slot sharing is not allowed, a PhysicalSlot is taken directly from the SlotPool and a LogicalSlot is created on top of it:

class SchedulerImpl {

private CompletableFuture<LogicalSlot> allocateSingleSlot(

SlotRequestId slotRequestId,

SlotProfile slotProfile,

boolean allowQueuedScheduling,

Time allocationTimeout) {

// First try to take one of the AllocatedSlots currently available in the SlotPool

Optional<SlotAndLocality> slotAndLocality = tryAllocateFromAvailable(slotRequestId, slotProfile);

if (slotAndLocality.isPresent()) {

// If one is available, create a SingleLogicalSlot as the payload of the AllocatedSlot

// already successful from available

try {

return CompletableFuture.completedFuture(

completeAllocationByAssigningPayload(slotRequestId, slotAndLocality.get()));

} catch (FlinkException e) {

return FutureUtils.completedExceptionally(e);

}

} else if (allowQueuedScheduling) {

// None available right now. If queued scheduling is allowed, ask the SlotPool to request a new slot from the ResourceManager

// we allocate by requesting a new slot

return slotPool

.requestNewAllocatedSlot(slotRequestId, slotProfile.getResourceProfile(), allocationTimeout)

.thenApply((PhysicalSlot allocatedSlot) -> {

try {

return completeAllocationByAssigningPayload(slotRequestId, new SlotAndLocality(allocatedSlot, Locality.UNKNOWN));

} catch (FlinkException e) {

throw new CompletionException(e);

}

});

} else {

// failed to allocate

return FutureUtils.completedExceptionally(

new NoResourceAvailableException("Could not allocate a simple slot for " + slotRequestId + '.'));

}

}

private Optional<SlotAndLocality> tryAllocateFromAvailable(

@Nonnull SlotRequestId slotRequestId,

@Nonnull SlotProfile slotProfile) {

Collection<SlotInfo> slotInfoList = slotPool.getAvailableSlotsInformation();

Optional<SlotSelectionStrategy.SlotInfoAndLocality> selectedAvailableSlot =

slotSelectionStrategy.selectBestSlotForProfile(slotInfoList, slotProfile);

return selectedAvailableSlot.flatMap(slotInfoAndLocality -> {

Optional<PhysicalSlot> optionalAllocatedSlot = slotPool.allocateAvailableSlot(

slotRequestId,

slotInfoAndLocality.getSlotInfo().getAllocationId());

return optionalAllocatedSlot.map(

allocatedSlot -> new SlotAndLocality(allocatedSlot, slotInfoAndLocality.getLocality()));

});

}

}
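The three branches of allocateSingleSlot (use an available slot, queue a request, or fail immediately) can be reduced to the following sketch. SingleSlotAllocator is a hypothetical name, strings stand in for slots, and the offer method simulates a new slot arriving from the ResourceManager.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.CompletableFuture;

/** Hypothetical model of the available / queued / failed decision. */
class SingleSlotAllocator {

    // Slots that are free right now.
    final Deque<String> available = new ArrayDeque<>();

    // Requests waiting for a slot that has not arrived yet.
    final Deque<CompletableFuture<String>> pending = new ArrayDeque<>();

    CompletableFuture<String> allocate(boolean allowQueuedScheduling) {
        String slot = available.poll();
        if (slot != null) {
            // Branch 1: a free slot exists, so the future is already complete.
            return CompletableFuture.completedFuture(slot);
        } else if (allowQueuedScheduling) {
            // Branch 2: wait until a new slot is offered.
            CompletableFuture<String> waiter = new CompletableFuture<>();
            pending.add(waiter);
            return waiter;
        } else {
            // Branch 3: no slot and no queuing, so fail immediately.
            CompletableFuture<String> failed = new CompletableFuture<>();
            failed.completeExceptionally(new IllegalStateException("No slot available"));
            return failed;
        }
    }

    /** Simulates a new slot arriving: hand it to the oldest waiter, otherwise keep it as available. */
    void offer(String slot) {
        CompletableFuture<String> waiter = pending.poll();
        if (waiter != null) {
            waiter.complete(slot);
        } else {
            available.add(slot);
        }
    }
}
```

In all three branches the caller receives a future, so it does not need to know whether the slot was available immediately or had to be requested.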

If slot sharing is enabled, the mandatory constraint of the CoLocationGroup must also be taken into account. The core idea is to build a tree of TaskSlots and then create a leaf node in that tree that wraps the requested LogicalSlot. The code below, with added comments, walks through the process in detail:

class SchedulerImpl {

private CompletableFuture<LogicalSlot> allocateSharedSlot(

SlotRequestId slotRequestId,

ScheduledUnit scheduledUnit,

SlotProfile slotProfile,

boolean allowQueuedScheduling,

Time allocationTimeout) {

// Each SlotSharingGroup has its own SlotSharingManager

// allocate slot with slot sharing

final SlotSharingManager multiTaskSlotManager = slotSharingManagers.computeIfAbsent(

scheduledUnit.getSlotSharingGroupId(),

id -> new SlotSharingManager(

id,

slotPool,

this));