
Flink Installation and Configuration

1. Install flink (1)

1. Download flink

1. Official website

2. Tsinghua University open-source mirror

2. Decompression

$ tar -zxvf flink-1.8.1-bin-scala_2.11.tgz
$ mv flink-1.8.1 flink
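The two commands above can be wrapped in a small helper that refuses to overwrite an existing installation. This is a sketch: the function name `install_flink` and its arguments are hypothetical, and it assumes the archive unpacks to a single top-level directory such as `flink-1.8.1`.

```shell
#!/bin/sh
# Hypothetical helper: unpack a Flink tarball into DEST_DIR and rename the
# versioned top-level directory to "flink", mirroring the tar/mv steps above.
install_flink() {
    tarball=$1      # e.g. flink-1.8.1-bin-scala_2.11.tgz
    dest_dir=$2     # e.g. /home/hadoop/app
    # First path component of the first archive entry, e.g. "flink-1.8.1".
    top=$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)
    if [ -z "$top" ]; then
        echo "cannot read $tarball" >&2
        return 1
    fi
    if [ -e "$dest_dir/flink" ]; then
        echo "$dest_dir/flink already exists; not overwriting" >&2
        return 1
    fi
    tar -zxf "$tarball" -C "$dest_dir" && mv "$dest_dir/$top" "$dest_dir/flink"
}
```

Usage: `install_flink flink-1.8.1-bin-scala_2.11.tgz /home/hadoop/app`.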

3. Start flink

# Start Flink
$ flink/bin/start-cluster.sh
# Check the processes
[hadoop@192 app]$ jps
10003 StandaloneSessionClusterEntrypoint
10521 Jps
10447 TaskManagerRunner

4. View the web UI

Open the browser and enter: http://192.168.154.130:8081

5. Run a Flink example

flink/bin/flink run flink/examples/batch/WordCount.jar --input /home/hadoop/file/test.txt --output /home/hadoop/file/output.txt
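To sanity-check the result, similar counts can be computed with standard Unix tools. This sketch assumes the example lowercases the text and splits it on non-word characters; the layout and ordering of Flink's output file may differ, so compare the counts rather than the raw files. The function name `local_wordcount` is hypothetical.

```shell
#!/bin/sh
# Hypothetical cross-check: count words in a text file with coreutils,
# printing "word count" pairs sorted by word.
local_wordcount() {
    # Lowercase, turn every run of non-word characters into one newline,
    # drop empty lines, then count identical words.
    tr 'A-Z' 'a-z' < "$1" \
        | tr -cs 'a-z0-9_' '\n' \
        | grep -v '^$' \
        | LC_ALL=C sort \
        | uniq -c \
        | awk '{ print $2, $1 }'
}
```

Usage: `local_wordcount /home/hadoop/file/test.txt`.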

2. Flink Standalone mode deployment

2.1. Modify configuration files

1. Modify flink-conf.yaml

$ vim conf/flink-conf.yaml
# Specify the JobManager host
jobmanager.rpc.address: hadoop-master
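Instead of editing the file by hand, the key can also be set from a script. A minimal sketch, assuming `flink-conf.yaml` is the flat `key: value` file shipped with Flink; the helper name `set_flink_conf` is hypothetical. An existing line for the key is replaced, otherwise the key is appended.

```shell
#!/bin/sh
# Hypothetical helper: set "key: value" in a flat YAML file such as
# conf/flink-conf.yaml. Commented-out lines and other keys are left untouched.
set_flink_conf() {
    key=$1
    value=$2
    file=$3
    tmp_file="$file.tmp.$$"
    # index() does a plain string match, so dots in the key need no escaping.
    awk -v k="$key" -v v="$value" '
        index($0, k ":") == 1 { print k ": " v; found = 1; next }
        { print }
        END { if (!found) print k ": " v }
    ' "$file" > "$tmp_file" && mv "$tmp_file" "$file"
}
```

Usage: `set_flink_conf jobmanager.rpc.address hadoop-master flink/conf/flink-conf.yaml`.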

2. Modify conf/slaves

Only the host name needs to be set. This is a single-machine deployment, so the file contains just one host:

hadoop-master

3. Domain Name Resolution

On the Windows machine used to open the web UI, edit the C:\Windows\System32\drivers\etc\hosts file and add:

192.168.193.128 hadoop-master
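The Linux machine most likely needs the same mapping, since `jobmanager.rpc.address` refers to `hadoop-master`; on Linux it goes in `/etc/hosts`, which requires root to edit. A hypothetical idempotent helper, taking the hosts file path as a parameter so it can be tried on a copy first:

```shell
#!/bin/sh
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless the
# hostname is already mapped on a non-comment line.
add_host_entry() {
    ip=$1
    name=$2
    hosts_file=${3:-/etc/hosts}
    if awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit (found ? 0 : 1) }
        ' "$hosts_file"; then
        echo "$name already present in $hosts_file"
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}
```

Usage (as root): `add_host_entry 192.168.193.128 hadoop-master /etc/hosts`.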

4. Start the cluster

$ flink/bin/start-cluster.sh

5. View the web UI

http://hadoop-master:8081/#/overview

3. YARN mode installation

3.1. Hadoop Installation

3.1.1. Hadoop download and decompression

1. Download hadoop

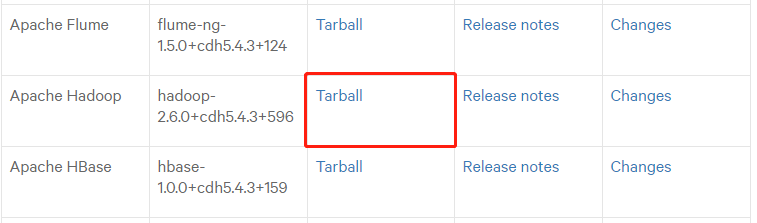

In production, many teams use a vendor distribution such as Cloudera's Hadoop distribution (CDH) rather than vanilla Apache Hadoop, and this guide uses CDH as well.

CDH 5.4.3 Download Address

[CDH 5.4.3 document]

Choose hadoop-2.6.0-cdh5.4.3/

2. Decompression

$ tar -zxvf hadoop-2.6.0-cdh5.4.3.tar.gz
$ mv hadoop-2.6.0-cdh5.4.3 hadoop

3. Configure the JAVA_HOME path

Hadoop is written in Java and needs a JDK to run, so point JAVA_HOME at the JDK:

export JAVA_HOME=/home/hadoop/app/jdk
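This `export` line is usually placed in `etc/hadoop/hadoop-env.sh`, because daemons started over SSH by the start scripts may not inherit your login shell's environment. A hypothetical helper that replaces an existing `export JAVA_HOME=` line in that file, or appends one if there is none; the JDK path is the one used above.

```shell
#!/bin/sh
# Hypothetical helper: pin JAVA_HOME in hadoop-env.sh.
set_hadoop_java_home() {
    env_file=$1    # e.g. /home/hadoop/app/hadoop/etc/hadoop/hadoop-env.sh
    jdk_dir=$2     # e.g. /home/hadoop/app/jdk
    if [ ! -x "$jdk_dir/bin/java" ]; then
        echo "warning: $jdk_dir/bin/java not found" >&2
    fi
    tmp_file="$env_file.tmp.$$"
    # Replace the first and any later "export JAVA_HOME=..." lines.
    awk -v line="export JAVA_HOME=$jdk_dir" '
        /^[ \t]*export[ \t]+JAVA_HOME=/ { print line; found = 1; next }
        { print }
        END { if (!found) print line }
    ' "$env_file" > "$tmp_file" && mv "$tmp_file" "$env_file"
}
```

Usage: `set_hadoop_java_home /home/hadoop/app/hadoop/etc/hadoop/hadoop-env.sh /home/hadoop/app/jdk`.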

3.1.2. Configuration of Hadoop configuration file

Before Hadoop can start, several configuration files have to be edited. They set the HDFS replication factor, run MapReduce on YARN, specify the address of YARN's ResourceManager, configure how reducers fetch map output (the shuffle service), and so on.

The configuration files are in the directory /home/hadoop/app/hadoop/etc/hadoop.

1. Configure core-site.xml

- First create the temporary file directory

mkdir /home/hadoop/app/hadoop/data

- Modify core-site.xml

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://hadoop-master:8020</value>
</property>
<property>
  <name>hadoop.tmp.dir</name>
  <value>/home/hadoop/app/hadoop/data/tmp</value>
</property>
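The properties above sit inside the `<configuration>` root element of `core-site.xml`. As a sketch, the whole file can be written with a heredoc; `write_core_site` is a hypothetical helper, and it overwrites the target file, so back up the original first.

```shell
#!/bin/sh
# Hypothetical helper: write a complete core-site.xml into CONF_DIR and
# create the hadoop.tmp.dir directory it refers to.
write_core_site() {
    conf_dir=$1    # e.g. /home/hadoop/app/hadoop/etc/hadoop
    tmp_dir=$2     # e.g. /home/hadoop/app/hadoop/data/tmp
    mkdir -p "$tmp_dir" || return 1
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop-master:8020</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$tmp_dir</value>
  </property>
</configuration>
EOF
}
```

Usage: `write_core_site /home/hadoop/app/hadoop/etc/hadoop /home/hadoop/app/hadoop/data/tmp`.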

2. Configure hdfs-site.xml

<property>
  <!-- Number of HDFS block replicas -->
  <name>dfs.replication</name>
  <value>1</value>
</property>
<property>
  <name>dfs.namenode.secondary.http-address</name>
  <value>hadoop-master:50090</value>
</property>
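Hadoop ignores property names it does not recognize, so a misspelled key (for example `fs.replication` instead of `dfs.replication`) silently leaves the default of 3 replicas in effect. Once HDFS is installed, `hdfs getconf -confKey dfs.replication` prints the effective value. Before that, a rough check is possible with text tools; the hypothetical `get_site_prop` below is not a real XML parser and assumes each `<name>` is followed directly by its `<value>`.

```shell
#!/bin/sh
# Hypothetical rough reader: print the <value> of property NAME in a Hadoop
# *-site.xml file by joining the file into one line and pattern matching.
get_site_prop() {
    file=$1
    name=$2
    tr -d '\n\r' < "$file" \
        | grep -o "<name>$name</name>[[:space:]]*<value>[^<]*</value>" \
        | sed 's|.*<value>\(.*\)</value>|\1|'
}
```

Usage: `get_site_prop etc/hadoop/hdfs-site.xml dfs.replication` should print `1` with the settings above; empty output means the key is missing or misspelled.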

3. Configure mapred-site.xml

- First, rename the template file

$ mv mapred-site.xml.template mapred-site.xml

- Second, configure mapred-site.xml

<!-- Run MapReduce on YARN -->
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

4. Configure yarn-site.xml

<!-- Address of YARN's master (ResourceManager) -->
<property>
  <name>yarn.resourcemanager.hostname</name>
  <value>hadoop-master</value>
</property>
<!-- How reducers fetch map output (shuffle service) -->
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>

5. Modify slaves (the worker hosts that run a DataNode and NodeManager; here there is only one)

hadoop-master

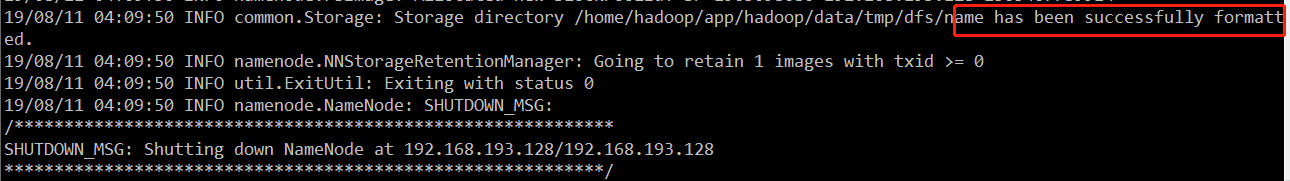

3.1.3. Start hadoop

1. Format the NameNode (first start only)

bin/hdfs namenode -format
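Formatting erases the NameNode's existing metadata, so it should only be done once, on a fresh installation. With the configuration above, `dfs.namenode.name.dir` is not overridden, so by default the NameNode keeps its metadata under `${hadoop.tmp.dir}/dfs/name`, and a formatted directory contains `current/VERSION`. A hypothetical guard built on that assumption, to be run from the Hadoop installation directory:

```shell
#!/bin/sh
# Hypothetical guard: format the NameNode only if its metadata directory
# has not been formatted yet (assumes the default dfs.namenode.name.dir).
format_namenode_once() {
    tmp_dir=$1     # value of hadoop.tmp.dir, e.g. /home/hadoop/app/hadoop/data/tmp
    name_dir="$tmp_dir/dfs/name"
    if [ -f "$name_dir/current/VERSION" ]; then
        echo "NameNode already formatted at $name_dir; skipping"
        return 0
    fi
    bin/hdfs namenode -format
}
```

Usage: `format_namenode_once /home/hadoop/app/hadoop/data/tmp`.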

2. Start hadoop

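sbin/start-all.sh

In Hadoop 2, start-all.sh is deprecated: it prints a warning and then runs start-dfs.sh and start-yarn.sh.

[hadoop@hadoop-master hadoop]$ jps
2721 TaskManagerRunner
3524 SecondaryNameNode
3924 NodeManager
3972 Jps
3258 NameNode
3658 ResourceManager
2285 StandaloneSessionClusterEntrypoint
3374 DataNode

TaskManagerRunner and StandaloneSessionClusterEntrypoint belong to the Flink standalone cluster started earlier; the other entries are the HDFS and YARN daemons.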
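Rather than reading the `jps` listing by eye, a small function can report which expected daemons are missing. The function name is hypothetical; the daemon names are the ones in the listing above, and the same check works for the Flink processes.

```shell
#!/bin/sh
# Hypothetical check: read `jps` output on stdin and report any expected
# Java process names that are missing. Returns non-zero if one is absent.
missing_daemons() {
    # Keep only the class names (second column of `jps` output).
    running=$(awk '{ print $2 }')
    status=0
    for daemon in "$@"; do
        if ! printf '%s\n' "$running" | grep -qx "$daemon"; then
            echo "missing: $daemon"
            status=1
        fi
    done
    return $status
}
```

Usage: `jps | missing_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager`.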

3.1.4. Configure password-free SSH login

The Hadoop start scripts log in over SSH and prompt for a password each time, which is tedious, so configure password-free SSH login on Linux.

$ ssh-keygen
$ cd .ssh
$ ls
id_rsa  id_rsa.pub
$ cat id_rsa.pub >> authorized_keys
# Restrict permissions
$ chmod 600 authorized_keys
$ ssh localhost

[Note] To avoid errors, do not run ssh localhost until the .ssh directory has been copied to the other hosts. Running it creates a known_hosts file in the ~/.ssh/ directory, and copying that file to other hosts along with the keys can cause errors there.

You can now log in to localhost without a password.

hadoop@hadoop1:~/.ssh$ ls
authorized_keys  id_rsa  id_rsa.pub

Copy the .ssh directory to the other hosts:

scp -r .ssh hadoop@hadoop1:/home/hadoop
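The `cat id_rsa.pub >> authorized_keys` step appends the key again each time it is run. A hypothetical idempotent variant that also sets the permissions sshd expects (with its default strict-mode checks, sshd may ignore keys when `~/.ssh` or `authorized_keys` is writable by other users):

```shell
#!/bin/sh
# Hypothetical helper: append a public key to SSH_DIR/authorized_keys only
# if that exact line is not already present, then tighten permissions.
authorize_key() {
    pub_file=$1                 # e.g. ~/.ssh/id_rsa.pub (from ssh-keygen)
    ssh_dir=${2:-$HOME/.ssh}
    auth="$ssh_dir/authorized_keys"
    mkdir -p "$ssh_dir" && touch "$auth" || return 1
    key=$(cat "$pub_file")
    if ! grep -qxF "$key" "$auth"; then
        printf '%s\n' "$key" >> "$auth"
    fi
    chmod 700 "$ssh_dir"
    chmod 600 "$auth"
}
```

Usage: `authorize_key ~/.ssh/id_rsa.pub`.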

3.2. Flink on YARN: submitting jobs

Start Hadoop: first HDFS, then YARN.

$ sbin/start-dfs.sh

$ sbin/start-yarn.sh

[Note] Hadoop monitoring pages:

http://hadoop-master:8088/ - YARN monitoring page

http://hadoop-master:50070/ - HDFS monitoring page

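Then submit jobs from the hadoop-master node. Flink can run on YARN in two ways: start a long-running YARN session with the yarn-session.sh script in the bin directory of the Flink installation and submit jobs to it, or launch a dedicated YARN cluster for a single job with -m yarn-cluster, as in the following command: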

./bin/flink run -m yarn-cluster ./examples/batch/WordCount.jar
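Flink locates the YARN cluster through the Hadoop configuration directory, which it reads from the `HADOOP_CONF_DIR` (or `YARN_CONF_DIR`) environment variable. Separately, the Flink 1.8 binaries no longer bundle Hadoop, so depending on the setup a pre-packaged shaded Hadoop jar from the Flink download page may also need to be placed in `flink/lib`. The pre-flight check below is a hypothetical sketch that only looks at `HADOOP_CONF_DIR`.

```shell
#!/bin/sh
# Hypothetical pre-flight check before "flink run -m yarn-cluster":
# verify that HADOOP_CONF_DIR points at a directory containing
# yarn-site.xml, which names the ResourceManager host.
check_yarn_conf() {
    conf_dir=$HADOOP_CONF_DIR
    if [ -z "$conf_dir" ]; then
        echo "set HADOOP_CONF_DIR, e.g. export HADOOP_CONF_DIR=/home/hadoop/app/hadoop/etc/hadoop" >&2
        return 1
    fi
    if [ ! -f "$conf_dir/yarn-site.xml" ]; then
        echo "no yarn-site.xml in $conf_dir" >&2
        return 1
    fi
    echo "using Hadoop configuration in $conf_dir"
}
```

Usage: `export HADOOP_CONF_DIR=/home/hadoop/app/hadoop/etc/hadoop`, then `check_yarn_conf && ./bin/flink run -m yarn-cluster ./examples/batch/WordCount.jar`.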