1, Keyed state overview

Following the description in the official Flink documentation, the available keyed state types are:

- ValueState<T>: keeps a single value that can be updated and retrieved. As mentioned above, the value is scoped to the key of the current input element, so each key the operator receives may have its own value. Set the value with update(T) and read it with T value().

- ListState<T>: keeps a list of elements. You can append elements and retrieve everything currently stored. Add elements with add(T) or addAll(List<T>), read the whole list with Iterable<T> get(), and overwrite the current list with update(List<T>).

- ReducingState<T>: keeps a single value that represents the aggregation of all values added to the state. The interface is similar to ListState, but elements added with add(T) are combined into that single value by the provided ReduceFunction.

- AggregatingState<IN, OUT>: keeps a single value that represents the aggregation of all values added to the state. In contrast to ReducingState, the aggregate type may differ from the type of the elements added to the state. The interface is similar to ListState, but elements added with add(IN) are aggregated by the specified AggregateFunction.

- MapState<UK, UV>: keeps a map of key-value pairs. You can put pairs into the state and retrieve iterators over all current mappings. Use put(UK, UV) or putAll(Map<UK, UV>) to add mappings, and get(UK) to retrieve the value for a specific key. Use entries(), keys(), and values() to get iterable views of the mappings, keys, and values, respectively. Use isEmpty() to check whether the map contains any key-value pairs.
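The difference between ReducingState and AggregatingState is easier to see in a small example. The following is a minimal plain-Java sketch, not Flink code: the classes ReducingCell and DistinctCountCell are hypothetical stand-ins that only imitate the add()/get() behaviour described above, and the distinct-count aggregation is an illustrative choice.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.function.BinaryOperator;

/** Plain-Java model; every class here is hypothetical and NOT a Flink type. */
class ReducingVsAggregatingDemo {

    /** Models ReducingState<T>: input, stored value, and result all share one type. */
    static final class ReducingCell<T> {
        private final BinaryOperator<T> reduceFunction; // plays the role of ReduceFunction
        private T current;                              // null until the first add

        ReducingCell(BinaryOperator<T> reduceFunction) {
            this.reduceFunction = reduceFunction;
        }

        void add(T value) {
            current = (current == null) ? value : reduceFunction.apply(current, value);
        }

        T get() {
            return current;
        }
    }

    /** Models AggregatingState<String, Integer>: IN is String, ACC is a Set, OUT is Integer. */
    static final class DistinctCountCell {
        private final Set<String> accumulator = new HashSet<>();

        void add(String value) {
            accumulator.add(value);
        }

        Integer get() {
            return accumulator.size();
        }
    }

    public static void main(String[] args) {
        ReducingCell<Long> max = new ReducingCell<Long>(Long::max);
        max.add(3L);
        max.add(9L);
        max.add(5L);
        System.out.println(max.get()); // 9

        DistinctCountCell distinct = new DistinctCountCell();
        distinct.add("a");
        distinct.add("b");
        distinct.add("a");
        System.out.println(distinct.get()); // 2
    }
}
```

In the reducing case a Long goes in and a Long comes out; in the aggregating case strings go in, a set is kept internally, and an Integer comes out.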

Note:

- All state types have clear(), which clears the state for the current key.

- These state objects are only handles for interacting with state; they do not necessarily hold the state themselves.

- The value you get from a state object depends on the key of the current input element (as set by keyBy).

- State does not have to be kept in memory; it can also be stored on disk or elsewhere.
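The per-key scoping in these notes can be pictured as one state handle backed by a table with one entry per key. Below is a minimal plain-Java sketch of that idea. KeyedValue and setCurrentKey are hypothetical names, not part of the Flink API; in a real job the runtime selects the current key from keyBy before each element is processed.

```java
import java.util.HashMap;
import java.util.Map;

/** Plain-Java model of keyed ValueState; hypothetical names, NOT the Flink API. */
class KeyedValueStateModel {

    /** One logical state handle backed by a per-key table. */
    static final class KeyedValue<K, T> {
        private final Map<K, T> valuesByKey = new HashMap<>();
        private K currentKey; // assumption: stands in for the key the runtime selects

        void setCurrentKey(K key) {
            currentKey = key;
        }

        T value() {
            return valuesByKey.get(currentKey); // null if nothing is stored for this key
        }

        void update(T value) {
            valuesByKey.put(currentKey, value);
        }

        void clear() {
            valuesByKey.remove(currentKey); // affects the current key only
        }
    }

    public static void main(String[] args) {
        KeyedValue<String, Integer> state = new KeyedValue<>();

        state.setCurrentKey("sensor_1");
        state.update(5);
        state.setCurrentKey("sensor_2");
        state.update(7);

        state.setCurrentKey("sensor_1");
        System.out.println(state.value()); // 5
        state.clear();
        System.out.println(state.value()); // null

        state.setCurrentKey("sensor_2");
        System.out.println(state.value()); // 7, untouched by the clear() above
    }
}
```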

There are currently three state backends:

MemoryStateBackend: keeps state in memory; generally used in test environments.

FsStateBackend: working state is kept in TaskManager memory, and checkpoints are written to a file system; it can be used in production.

RocksDBStateBackend: serializes all state and stores it in a local RocksDB database (a NoSQL database that stores data as key-value pairs). It suits jobs with very large state that do not need high read/write performance when accessing state.

State is accessed through the RuntimeContext, so it can only be used in rich functions. The RuntimeContext in a RichFunction provides the following methods:

- ValueState<T> getState(ValueStateDescriptor<T>)

- ReducingState<T> getReducingState(ReducingStateDescriptor<T>)

- ListState<T> getListState(ListStateDescriptor<T>)

- AggregatingState<IN, OUT> getAggregatingState(AggregatingStateDescriptor<IN, ACC, OUT>)

- MapState<UK, UV> getMapState(MapStateDescriptor<UK, UV>)

2, Example code

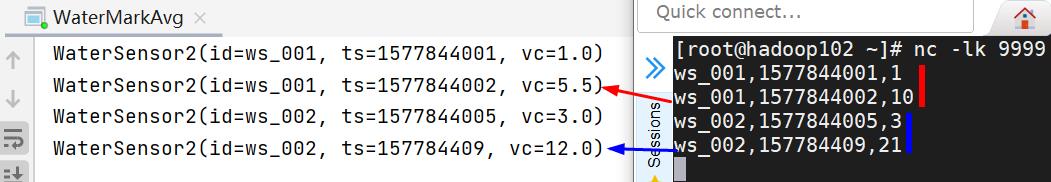

Goal: compute the running average water level for each sensor ID from the streamed data.

- Result display

- Code part

    package com.test;

    import bean.WaterSensor2;
    import org.apache.flink.api.common.functions.AggregateFunction;
    import org.apache.flink.api.common.functions.MapFunction;
    import org.apache.flink.api.common.state.AggregatingState;
    import org.apache.flink.api.common.state.AggregatingStateDescriptor;
    import org.apache.flink.api.common.typeinfo.Types;
    import org.apache.flink.api.java.tuple.Tuple2;
    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.KeyedStream;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.api.functions.KeyedProcessFunction;
    import org.apache.flink.util.Collector;

    /**
     * @author: Rango
     * @create: 2021-05-07 18:53
     * @description:
     **/
    public class WaterMarkAvg {

        public static void main(String[] args) throws Exception {
            // Routine setup: create the environment, connect to the socket source,
            // and convert each "id,ts,vc" line into a WaterSensor2 bean
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);

            DataStreamSource<String> hadoop102 = env.socketTextStream("hadoop102", 9999);

            SingleOutputStreamOperator<WaterSensor2> mapDS = hadoop102.map(new MapFunction<String, WaterSensor2>() {
                @Override
                public WaterSensor2 map(String value) throws Exception {
                    String[] split = value.split(",");
                    return new WaterSensor2(split[0], Long.parseLong(split[1]), Double.parseDouble(split[2]));
                }
            });

            // Key by sensor ID so that state is scoped per sensor
            KeyedStream<WaterSensor2, String> keyedStream = mapDS.keyBy(WaterSensor2::getId);

            // Main processing: the accumulator (ACC) is a Tuple2 of (sum, count)
            SingleOutputStreamOperator<WaterSensor2> streamOperator = keyedStream.process(
                    new KeyedProcessFunction<String, WaterSensor2, WaterSensor2>() {

                        // AggregatingState<IN, OUT>: a water level goes in, the running average comes out
                        private AggregatingState<Double, Double> aggregatingState;

                        @Override
                        public void open(Configuration parameters) throws Exception {
                            aggregatingState = getRuntimeContext()
                                    .getAggregatingState(new AggregatingStateDescriptor<Double, Tuple2<Double, Integer>, Double>(
                                            "agg-state", new AggregateFunction<Double, Tuple2<Double, Integer>, Double>() {
                                        @Override
                                        public Tuple2<Double, Integer> createAccumulator() {
                                            return Tuple2.of(0.0, 0);
                                        }

                                        @Override
                                        public Tuple2<Double, Integer> add(Double value, Tuple2<Double, Integer> accumulator) {
                                            return Tuple2.of(accumulator.f0 + value, accumulator.f1 + 1);
                                        }

                                        @Override
                                        public Double getResult(Tuple2<Double, Integer> accumulator) {
                                            return accumulator.f0 / accumulator.f1;
                                        }

                                        @Override
                                        public Tuple2<Double, Integer> merge(Tuple2<Double, Integer> a, Tuple2<Double, Integer> b) {
                                            return Tuple2.of(a.f0 + b.f0, a.f1 + b.f1);
                                        }
                                    }, Types.TUPLE(Types.DOUBLE, Types.INT)));
                        }

                        @Override
                        public void processElement(WaterSensor2 value, Context ctx, Collector<WaterSensor2> out) throws Exception {
                            // Add the new water level, then emit the updated average for this key
                            aggregatingState.add(value.getVc());
                            out.collect(new WaterSensor2(value.getId(), value.getTs(), aggregatingState.get()));
                        }
                    });

            streamOperator.print();
            env.execute();
        }
    }
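To make the expected output of this job concrete, here is a minimal plain-Java trace of the same per-key running average, with no Flink runtime involved. RunningAverageTrace and its Acc class are hypothetical helpers, and the four sample lines are made-up input in the "id,ts,vc" format that the job parses.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java trace of the per-key running average; hypothetical helper, not Flink code. */
class RunningAverageTrace {

    /** Mirrors the (sum, count) accumulator kept per key. */
    static final class Acc {
        double sum;
        int count;
    }

    /** Takes "id,ts,vc" lines and returns one "id,ts,avg" line per input element. */
    static List<String> run(List<String> lines) {
        Map<String, Acc> accByKey = new HashMap<>(); // stands in for keyed state
        List<String> out = new ArrayList<>();
        for (String line : lines) {
            String[] fields = line.split(",");
            Acc acc = accByKey.computeIfAbsent(fields[0], k -> new Acc());
            acc.sum += Double.parseDouble(fields[2]); // same step as add()
            acc.count += 1;
            out.add(fields[0] + "," + fields[1] + "," + (acc.sum / acc.count)); // same step as getResult()
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList(
                "sensor_1,1,10", "sensor_1,2,20", "sensor_2,3,30", "sensor_1,4,30");
        for (String line : run(input)) {
            System.out.println(line);
        }
    }
}
```

For this sample input the averages are 10.0, 15.0, 30.0, and 20.0: the sensor_2 reading does not affect the sensor_1 average, because each key has its own accumulator.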

Supplement: in AggregatingStateDescriptor<IN, ACC, OUT>, using a Tuple2 as the middle ACC type is cumbersome. A user-defined class can be used as the accumulator instead.

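    import lombok.AllArgsConstructor;
    import lombok.Data;
    import lombok.NoArgsConstructor;

    // Custom bean class used as the accumulator; Lombok generates the getters, setters, and constructors
    @Data
    @NoArgsConstructor
    @AllArgsConstructor
    public class AvgVc {
        private Double vc;     // running sum of water levels
        private Integer count; // number of readings seen so far
    }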

The open() method in the main class can then be changed as follows:

    @Override
    public void open(Configuration parameters) throws Exception {
        aggregatingState = getRuntimeContext().getAggregatingState(
                new AggregatingStateDescriptor<Double, AvgVc, Double>("state-agg",
                        new AggregateFunction<Double, AvgVc, Double>() {
                            @Override
                            public AvgVc createAccumulator() {
                                return new AvgVc(0.0, 0);
                            }

                            @Override
                            public AvgVc add(Double value, AvgVc accumulator) {
                                return new AvgVc(accumulator.getVc() + value,
                                        accumulator.getCount() + 1);
                            }

                            @Override
                            public Double getResult(AvgVc accumulator) {
                                return accumulator.getVc() / accumulator.getCount();
                            }

                            @Override
                            public AvgVc merge(AvgVc a, AvgVc b) {
                                return new AvgVc(a.getVc() + b.getVc(), a.getCount() + b.getCount());
                            }
                        }, AvgVc.class)
        );
    }

This post is meant for learning and discussion. If you spot any problems, please feel free to point them out in the comments.