1, Project overview

1.1 Module creation and data preparation

Create a new package for the network flow analysis module (networkflowanalysis in the code below).

Copy the Apache server log file apache.log into the resources directory src/main/resources.

The jobs below read their input from this directory.

UserBehavior.csv can also be used as the data source. In that case we do not analyze every request to the server, only the page-view ("pv") behavior.
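A minimal plain-Java sketch of that parse-and-filter step, without Flink. It assumes the comma-separated field order userId,itemId,categoryId,behavior,timestamp that the PageView and UniqueVisitor jobs below rely on, with the timestamp in seconds (those jobs multiply it by 1000). The class name UserBehaviorParseSketch and the sample lines are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: parses UserBehavior.csv lines and keeps only "pv" records.
public class UserBehaviorParseSketch {

    // Minimal record with the fields the PV/UV jobs actually use.
    public static final class Event {
        public final long userId;
        public final String behavior;
        public final long timestampMillis;

        Event(long userId, String behavior, long timestampMillis) {
            this.userId = userId;
            this.behavior = behavior;
            this.timestampMillis = timestampMillis;
        }
    }

    // Assumed field order: userId,itemId,categoryId,behavior,timestamp(seconds)
    public static Event parse(String line) {
        String[] fields = line.split(",");
        long userId = Long.parseLong(fields[0]);
        String behavior = fields[3];
        long timestampMillis = Long.parseLong(fields[4]) * 1000L; // seconds -> ms
        return new Event(userId, behavior, timestampMillis);
    }

    // Keeps only page views, like .filter(data -> "pv".equals(data.getBehavior()))
    public static List<Event> pageViews(List<String> lines) {
        List<Event> result = new ArrayList<>();
        for (String line : lines) {
            Event e = parse(line);
            if ("pv".equals(e.behavior)) {
                result.add(e);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "1001,2001,3001,pv,1511658000",
                "1002,2002,3002,cart,1511658000",
                "1003,2003,3001,pv,1511658001");
        List<Event> pvs = pageViews(lines);
        System.out.println(pvs.size());                 // 2
        System.out.println(pvs.get(0).timestampMillis); // 1511658000000
    }
}
```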

1.2 Popular page statistics based on the server log

The module we implement now is "real-time traffic statistics". For an e-commerce platform, the inbound traffic from user logins and the traffic to individual pages are important data worth analyzing, and both can be extracted directly from the web server's log.

We start with "popular pages": read the server log line by line, count how many times each URL is visited within a time period, then sort the counts and output them.

Concretely, every 5 seconds we output the top N URLs with the most visits in the last 10 minutes. This requirement is very similar to the earlier "real-time popular item statistics", so we can reuse much of that code.
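With a 10-minute window that slides every 5 seconds, each event belongs to 10 min / 5 s = 120 overlapping windows, so every visit contributes to 120 window counts. The plain-Java sketch below illustrates the assignment. For zero offset and non-negative timestamps its arithmetic should be equivalent to what Flink's sliding event-time window assigner computes, but the class and method names are hypothetical and it is only an illustration, not a replacement for `timeWindow(Time.minutes(10), Time.seconds(5))`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of sliding-window assignment (size 10 min, slide 5 s).
public class SlidingWindowSketch {

    static final long SIZE_MS = 10 * 60 * 1000L; // window size: 10 minutes
    static final long SLIDE_MS = 5 * 1000L;      // slide: 5 seconds

    // Returns the start of every window [start, start + SIZE_MS) that contains ts (ts >= 0).
    public static List<Long> windowStarts(long ts) {
        List<Long> starts = new ArrayList<>();
        // Latest window start at or before ts, aligned to the slide (zero offset).
        long lastStart = ts - (ts % SLIDE_MS);
        for (long start = lastStart; start > ts - SIZE_MS; start -= SLIDE_MS) {
            starts.add(start);
        }
        return starts;
    }

    public static void main(String[] args) {
        long ts = 1_000_000L; // an arbitrary event time in ms
        List<Long> starts = windowStarts(ts);
        System.out.println(starts.size());                 // 120
        System.out.println(starts.get(0));                 // 1000000 (latest window)
        System.out.println(starts.get(starts.size() - 1)); // 405000 (earliest window)
    }
}
```

Each window is reported with end time start + 10 minutes, which is the windowEnd value the job stores in PageViewCount.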

Create the HotPages class in the networkflowanalysis package, and define the POJO class ApacheLogEvent under beans as the type of the input log stream; PageViewCount is the output type of the windowed aggregation. In the main function, create and configure the StreamExecutionEnvironment, then read the data from apache.log and wrap each line into an ApacheLogEvent.

Note that the time in the original log has the form "dd/MM/yyyy:HH:mm:ss", so a SimpleDateFormat is needed to convert it to a millisecond timestamp:

.map( line -> {

String[] fields = line.split(" ");

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("dd/MM/yyyy:HH:mm:ss");

Long timestamp = simpleDateFormat.parse(fields[3]).getTime();

return new ApacheLogEvent(fields[0], fields[1], timestamp, fields[5], fields[6]);

} )
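To check the field indices and the date pattern outside Flink, the same parsing can be run on a single line. The sample line below is hypothetical, written in the space-separated layout the indices above assume; the code skips fields[2] and fields[4], which in the sample are a placeholder and a time-zone offset. The sketch pins the formatter to UTC so the result is reproducible; the original map() uses the JVM default time zone, so its timestamps depend on the machine's zone setting.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.TimeZone;

// Hypothetical standalone check of the apache.log parsing done in the map() above.
public class ApacheLogParseSketch {

    // Parses the time field (index 3) of a space-separated log line into epoch milliseconds.
    public static long parseTimestamp(String line) throws ParseException {
        String[] fields = line.split(" ");
        SimpleDateFormat format = new SimpleDateFormat("dd/MM/yyyy:HH:mm:ss");
        // Assumption for reproducibility: interpret the log time as UTC.
        format.setTimeZone(TimeZone.getTimeZone("UTC"));
        return format.parse(fields[3]).getTime();
    }

    public static void main(String[] args) throws ParseException {
        // Hypothetical line: ip, userId, placeholder, time, zone offset, method, url
        String line = "10.0.0.1 - - 17/05/2015:10:05:03 +0000 GET /index.html";
        String[] fields = line.split(" ");
        System.out.println(fields[5] + " " + fields[6]); // GET /index.html
        System.out.println(parseTimestamp(line));        // 1431857103000
    }
}
```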

2, pom.xml configuration

The pom.xml dependencies are as follows:

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>1.10.1</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.11</artifactId>

<version>1.10.1</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-core</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-redis_2.11</artifactId>

<version>1.1.5</version>

</dependency>

<!-- https://mvnrepository.com/artifact/mysql/mysql-connector-java -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.19</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-statebackend-rocksdb_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<!-- Table API and Flink SQL -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner-blink_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-java-bridge_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-common</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-csv</artifactId>

<version>1.10.1</version>

</dependency>

3, Code

3.1 POJO

ApacheLogEvent

package com.zqs.flink.project.networkflowanalysis.beans;

public class ApacheLogEvent {

private String ip;

private String userId;

private Long timestamp;

private String method;

private String url;

public ApacheLogEvent(){

}

public ApacheLogEvent(String ip, String userId, Long timestamp, String method, String url) {

this.ip = ip;

this.userId = userId;

this.timestamp = timestamp;

this.method = method;

this.url = url;

}

public String getIp() {

return ip;

}

public String getUserId() {

return userId;

}

public Long getTimestamp() {

return timestamp;

}

public String getMethod() {

return method;

}

public String getUrl() {

return url;

}

public void setIp(String ip) {

this.ip = ip;

}

public void setUserId(String userId) {

this.userId = userId;

}

public void setTimestamp(Long timestamp) {

this.timestamp = timestamp;

}

public void setMethod(String method) {

this.method = method;

}

public void setUrl(String url) {

this.url = url;

}

@Override

public String toString() {

return "ApacheLogEvent{" +

"ip='" + ip + '\'' +

", userId='" + userId + '\'' +

", timestamp=" + timestamp +

", method='" + method + '\'' +

", url='" + url + '\'' +

'}';

}

}

PageViewCount

package com.zqs.flink.project.networkflowanalysis.beans;

public class PageViewCount {

private String url;

private Long windowEnd;

private Long count;

public PageViewCount(){

}

public PageViewCount(String url, Long windowEnd, Long count) {

this.url = url;

this.windowEnd = windowEnd;

this.count = count;

}

public String getUrl() {

return url;

}

public void setUrl(String url) {

this.url = url;

}

public Long getWindowEnd() {

return windowEnd;

}

public void setWindowEnd(Long windowEnd) {

this.windowEnd = windowEnd;

}

public Long getCount() {

return count;

}

public void setCount(Long count) {

this.count = count;

}

@Override

public String toString() {

return "PageViewCount{" +

"url='" + url + '\'' +

", windowEnd=" + windowEnd +

", count=" + count +

'}';

}

}

UserBehavior

package com.zqs.flink.project.networkflowanalysis.beans;

public class UserBehavior {

// Define private properties

private Long userId;

private Long itemId;

private Integer categoryId;

private String behavior;

private Long timestamp;

public UserBehavior() {

}

public UserBehavior(Long userId, Long itemId, Integer categoryId, String behavior, Long timestamp) {

this.userId = userId;

this.itemId = itemId;

this.categoryId = categoryId;

this.behavior = behavior;

this.timestamp = timestamp;

}

public Long getUserId() {

return userId;

}

public void setUserId(Long userId) {

this.userId = userId;

}

public Long getItemId() {

return itemId;

}

public void setItemId(Long itemId) {

this.itemId = itemId;

}

public Integer getCategoryId() {

return categoryId;

}

public void setCategoryId(Integer categoryId) {

this.categoryId = categoryId;

}

public String getBehavior() {

return behavior;

}

public void setBehavior(String behavior) {

this.behavior = behavior;

}

public Long getTimestamp() {

return timestamp;

}

public void setTimestamp(Long timestamp) {

this.timestamp = timestamp;

}

@Override

public String toString() {

return "UserBehavior{" +

"userId=" + userId +

", itemId=" + itemId +

", categoryId=" + categoryId +

", behavior='" + behavior + '\'' +

", timestamp=" + timestamp +

'}';

}

}

3.2 Popular pages

Code:

HotPages

package com.zqs.flink.project.networkflowanalysis;


import com.zqs.flink.project.networkflowanalysis.beans.ApacheLogEvent;

import com.zqs.flink.project.networkflowanalysis.beans.PageViewCount;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.common.state.ListState;

import org.apache.flink.api.common.state.ListStateDescriptor;

import org.apache.flink.api.common.state.MapState;

import org.apache.flink.api.common.state.MapStateDescriptor;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.shaded.guava18.com.google.common.collect.Lists;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.functions.windowing.WindowFunction;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import org.apache.flink.util.OutputTag;

import java.net.URL;

import java.sql.Timestamp;

import java.text.SimpleDateFormat;

import java.util.ArrayList;

import java.util.Comparator;

import java.util.Map;

import java.util.regex.Pattern;

/**

* @author Just a

* @date 2021-10-18

* @remark Popular page

*/

public class HotPages {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

env.setParallelism(1);

//read file

URL resource = HotPages.class.getResource("/apache.log");

DataStream<String> inputStream = env.readTextFile(resource.getPath());

DataStream<ApacheLogEvent> dataStream = inputStream

.map(line -> {

String[] fields = line.split(" ");

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("dd/MM/yyyy:HH:mm:ss");

Long timestamp = simpleDateFormat.parse(fields[3]).getTime();

return new ApacheLogEvent(fields[0], fields[1], timestamp, fields[5], fields[6]);

})

.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor<ApacheLogEvent>(Time.seconds(1)) {

@Override

public long extractTimestamp(ApacheLogEvent element) {

return element.getTimestamp();

}

});

dataStream.print("data");

// Filter, key by URL, and aggregate in 10-minute windows sliding every 5 seconds

// Side-output tag for late events that arrive after the allowed lateness

OutputTag<ApacheLogEvent> lateTag = new OutputTag<ApacheLogEvent>("late"){};

SingleOutputStreamOperator<PageViewCount> windowAggStream = dataStream

.filter(data -> "GET".equals(data.getMethod())) // Filter get requests

.filter(data -> {

String regex = "^((?!\\.(css|js|png|ico)$).)*$";

return Pattern.matches(regex, data.getUrl());

})

.keyBy(ApacheLogEvent::getUrl) // Group by url

.timeWindow(Time.minutes(10), Time.seconds(5))

.allowedLateness(Time.minutes(1))

.sideOutputLateData(lateTag)

.aggregate(new PageCountAgg(), new PageCountResult());

windowAggStream.print("agg");

windowAggStream.getSideOutput(lateTag).print("late");

// Collect the count data of the same window and sort the output

DataStream<String> resultStream = windowAggStream

.keyBy(PageViewCount::getWindowEnd)

.process(new TopNHotPages(3));

resultStream.print();

env.execute("hot pages job");

}

// Custom aggregate function

public static class PageCountAgg implements AggregateFunction<ApacheLogEvent, Long, Long> {

@Override

public Long createAccumulator() {

return 0L;

}

@Override

public Long add(ApacheLogEvent value, Long accumulator) {

return accumulator + 1;

}

@Override

public Long getResult(Long accumulator) {

return accumulator;

}

@Override

public Long merge(Long a, Long b) {

return a + b;

}

}

// Implement custom window functions

public static class PageCountResult implements WindowFunction<Long, PageViewCount, String, TimeWindow>{

@Override

public void apply(String url, TimeWindow window, Iterable<Long> input, Collector<PageViewCount> out) throws Exception {

out.collect(new PageViewCount(url, window.getEnd(), input.iterator().next() ));

}

}

// Implement custom processing functions

public static class TopNHotPages extends KeyedProcessFunction<Long, PageViewCount, String>{

private Integer topSize;

public TopNHotPages(Integer topSize){

this.topSize = topSize;

}

// Keyed state: URL -> latest count for the current window (a re-emitted count for a URL replaces the old one)

MapState<String, Long> pageViewCountMapState;

@Override

public void open(Configuration parameters) throws Exception {

pageViewCountMapState = getRuntimeContext().getMapState(new MapStateDescriptor<String, Long>("page-count-map", String.class, Long.class));

}

@Override

public void processElement(PageViewCount value, Context ctx, Collector<String> out) throws Exception {

pageViewCountMapState.put(value.getUrl(), value.getCount());

ctx.timerService().registerEventTimeTimer(value.getWindowEnd() + 1);

// Register a second timer 1 minute after the window end (matching allowedLateness) to clear the state

ctx.timerService().registerEventTimeTimer(value.getWindowEnd() + 60 * 1000L);

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String> out) throws Exception {

// If this is the cleanup timer (1 minute after the window end), clear the state and return without output

if ( timestamp == ctx.getCurrentKey() + 60 * 1000L ){

pageViewCountMapState.clear();

return;

}

ArrayList<Map.Entry<String, Long>> pageViewCounts = Lists.newArrayList(pageViewCountMapState.entries());

pageViewCounts.sort(new Comparator<Map.Entry<String, Long>>() {

@Override

public int compare(Map.Entry<String, Long> o1, Map.Entry<String, Long> o2) {

if(o1.getValue() > o2.getValue())

return -1;

else if(o1.getValue() < o2.getValue())

return 1;

else

return 0;

}

});

// Format as String output

StringBuilder resultBuilder = new StringBuilder();

resultBuilder.append("=================================================\n");

resultBuilder.append("Window end time:").append(new Timestamp(timestamp -1)).append("\n");

// Traverse the list and take the top n output

for (int i = 0; i < Math.min(topSize, pageViewCounts.size()); i++){

Map.Entry<String, Long> currentItemViewCount = pageViewCounts.get(i);

resultBuilder.append("NO ").append(i + 1).append(":")

.append(" page URL = ").append(currentItemViewCount.getKey())

.append(" Views = ").append(currentItemViewCount.getValue())

.append("\n");

}

resultBuilder.append("======================================\n\n");

// Slow down output so each result is easy to read (demo only; this blocks the task thread)

Thread.sleep(1000L);

out.collect(resultBuilder.toString());

}

}

}
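TopNHotPages keeps its keyed state in a MapState keyed by URL rather than in a list. Presumably this is because of allowedLateness(Time.minutes(1)): a late event makes the window fire again and emit an updated PageViewCount for the same URL and window, and putting it into a map overwrites the old count instead of adding a duplicate entry. The plain-Java sketch below (hypothetical names, one window, no Flink) shows that behavior together with the GET/static-resource filter and the descending sort used for the top N.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical plain-Java model of the HotPages filters and TopNHotPages logic for one window.
public class TopNPagesSketch {

    // Same regex as the job: reject URLs ending in .css, .js, .png or .ico
    private static final Pattern NOT_STATIC = Pattern.compile("^((?!\\.(css|js|png|ico)$).)*$");

    public static boolean keep(String method, String url) {
        return "GET".equals(method) && NOT_STATIC.matcher(url).matches();
    }

    // Models MapState<String, Long>: a re-emitted (late) count for a URL replaces the old one.
    private final Map<String, Long> counts = new HashMap<>();

    public void update(String url, long count) {
        counts.put(url, count);
    }

    // Sorts by count in descending order and returns at most n URLs.
    public List<String> topN(int n) {
        List<Map.Entry<String, Long>> entries = new ArrayList<>(counts.entrySet());
        entries.sort(Map.Entry.<String, Long>comparingByValue(Comparator.reverseOrder()));
        List<String> result = new ArrayList<>();
        for (int i = 0; i < Math.min(n, entries.size()); i++) {
            result.add(entries.get(i).getKey());
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(keep("GET", "/presentations/"));  // true
        System.out.println(keep("GET", "/images/logo.png")); // false
        System.out.println(keep("POST", "/presentations/")); // false

        TopNPagesSketch window = new TopNPagesSketch();
        window.update("/a", 5L);
        window.update("/b", 3L);
        window.update("/c", 1L);
        window.update("/c", 7L); // late update: overwrites, does not duplicate
        System.out.println(window.topN(2)); // [/c, /a]
    }
}
```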

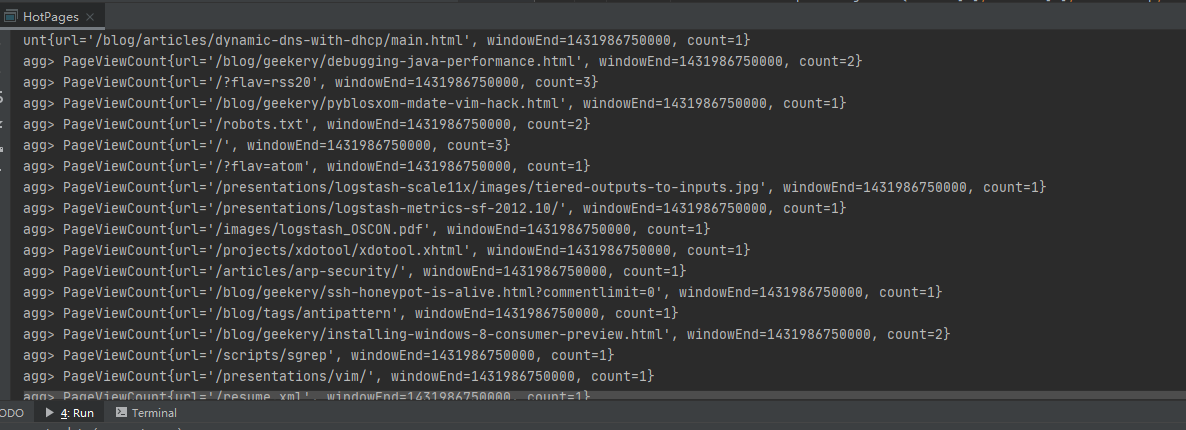

Test record:

3.3 Page views (PV)

Code:

PageView

package com.zqs.flink.project.networkflowanalysis;

import com.zqs.flink.project.networkflowanalysis.beans.UserBehavior;

import com.zqs.flink.project.networkflowanalysis.beans.PageViewCount;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.java.tuple.Tuple;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.streaming.api.functions.timestamps.AscendingTimestampExtractor;

import org.apache.flink.streaming.api.functions.windowing.WindowFunction;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import java.net.URL;

import java.util.Random;

/**

* @author Just a

* @date 2021-10-18

* @remark page view Statistics

*/

public class PageView {

public static void main(String[] args) throws Exception{

// 1. Create execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(4);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

// 2. Read data and create DataStream

URL resource = PageView.class.getResource("/UserBehavior.csv");

DataStream<String> inputStream = env.readTextFile(resource.getPath());

// 3. Convert to POJO and allocate timestamp and watermark

DataStream<UserBehavior> dataStream = inputStream

.map(line -> {

String[] fields = line.split(",");

return new UserBehavior(new Long(fields[0]), new Long(fields[1]), new Integer(fields[2]), fields[3], new Long(fields[4]));

})

.assignTimestampsAndWatermarks(new AscendingTimestampExtractor<UserBehavior>() {

@Override

public long extractAscendingTimestamp(UserBehavior element) {

return element.getTimestamp() * 1000L;

}

});

// 4. Naive version (kept for comparison, not printed): map every pv record to the key "pv" and count per 1-hour window

SingleOutputStreamOperator<Tuple2<String, Long>> pvResultStream0 =

dataStream

.filter(data -> "pv".equals(data.getBehavior())) // Filter pv behavior

.map(new MapFunction<UserBehavior, Tuple2<String, Long>>() {

@Override

public Tuple2<String, Long> map(UserBehavior value) throws Exception {

return new Tuple2<>("pv", 1L);

}

})

.keyBy(0) // Group by the constant key "pv"

.timeWindow(Time.hours(1)) // 1-hour tumbling window

.sum(1);

// With a single key, only one parallel subtask does all the counting (data skew). Assign a random key in [0, 10) to spread the load, then sum the partial counts per window

SingleOutputStreamOperator<PageViewCount> pvStream = dataStream.filter(data -> "pv".equals(data.getBehavior()))

.map(new MapFunction<UserBehavior, Tuple2<Integer, Long>>() {

// Reuse one Random per task instead of creating a new one for every record

private final Random random = new Random();

@Override

public Tuple2<Integer, Long> map(UserBehavior value) throws Exception {

return new Tuple2<>(random.nextInt(10), 1L);

}

})

.keyBy(data -> data.f0)

.timeWindow(Time.hours(1))

.aggregate(new PvCountAgg(), new PvCountResult());

// Summarize the data of each partition

DataStream<PageViewCount> pvResultStream = pvStream

.keyBy(PageViewCount::getWindowEnd)

.process(new TotalPvCount());

pvResultStream.print();

env.execute("pv count job");

}

// Custom pre-aggregation function

public static class PvCountAgg implements AggregateFunction<Tuple2<Integer, Long>, Long, Long>{

@Override

public Long createAccumulator() {

return 0L;

}

@Override

public Long add(Tuple2<Integer, Long> value, Long accumulator) {

return accumulator + 1;

}

@Override

public Long getResult(Long accumulator) {

return accumulator;

}

@Override

public Long merge(Long a, Long b) {

return a + b;

}

}

// Custom window function: wraps the pre-aggregated count with its key and window end

public static class PvCountResult implements WindowFunction<Long, PageViewCount, Integer, TimeWindow>{

@Override

public void apply(Integer integer, TimeWindow window, Iterable<Long> input, Collector<PageViewCount> out) throws Exception {

out.collect( new PageViewCount(integer.toString(), window.getEnd(), input.iterator().next()));

}

}

// Custom process function that sums the partial counts of the same window

public static class TotalPvCount extends KeyedProcessFunction<Long, PageViewCount, PageViewCount>{

// State holding the running total count for the current window

ValueState<Long> totalCountState;

@Override

public void open(Configuration parameters) throws Exception {

totalCountState = getRuntimeContext().getState(new ValueStateDescriptor<Long>("total-count", Long.class, 0L));

}

@Override

public void processElement(PageViewCount value, Context ctx, Collector<PageViewCount> out) throws Exception {

totalCountState.update( totalCountState.value() + value.getCount() );

ctx.timerService().registerEventTimeTimer(value.getWindowEnd() + 1);

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<PageViewCount> out) throws Exception {

// When the timer fires, the partial counts of all groups for this window have arrived, so output the total

Long totalCount = totalCountState.value();

out.collect(new PageViewCount("pv", ctx.getCurrentKey(), totalCount));

// Clear the state

totalCountState.clear();

}

}

}
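The random key turns the count into a two-phase aggregation: the single "pv" key is spread over several keys so window counting can run in parallel, and the partial counts of each window are then added up. The plain-Java sketch below (hypothetical names, a single window, no Flink) shows that the partial counts always sum to the same total as the single-key version; the 10 buckets match random.nextInt(10) in the job.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Random;

// Hypothetical single-window model of the PvCountAgg + TotalPvCount two-phase aggregation.
public class TwoPhasePvSketch {

    // Phase 1: each pv event gets a random key in [0, buckets) and is counted per key.
    public static Map<Integer, Long> partialCounts(int events, int buckets, long seed) {
        Random random = new Random(seed);
        Map<Integer, Long> partial = new HashMap<>();
        for (int i = 0; i < events; i++) {
            partial.merge(random.nextInt(buckets), 1L, Long::sum);
        }
        return partial;
    }

    // Phase 2: sum the partial counts of the same window, as TotalPvCount does in state.
    public static long total(Map<Integer, Long> partial) {
        long sum = 0L;
        for (long count : partial.values()) {
            sum += count;
        }
        return sum;
    }

    public static void main(String[] args) {
        Map<Integer, Long> partial = partialCounts(10_000, 10, 42L);
        System.out.println(partial.size() <= 10); // true: at most 10 partial results
        System.out.println(total(partial));       // 10000, same as with a single "pv" key
    }
}
```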

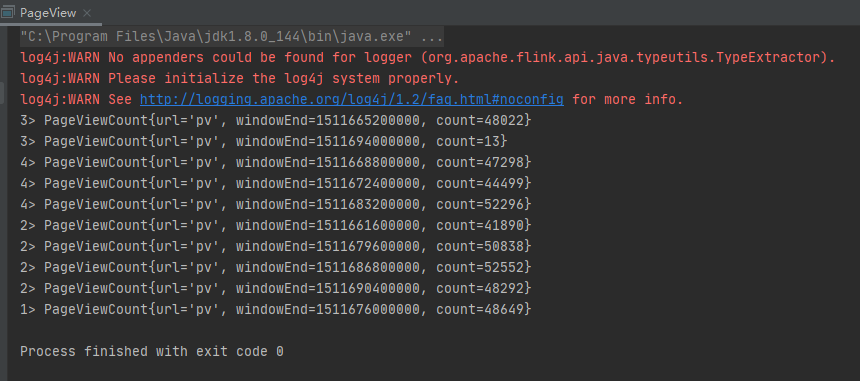

Test record:

3.4 Unique visitors (UV)

Code:

UniqueVisitor

package com.zqs.flink.project.networkflowanalysis;

/**

* @author Just a

* @date 2021-10-18

* @remark unique page view Statistics

*/

import com.zqs.flink.project.networkflowanalysis.beans.UserBehavior;

import com.zqs.flink.project.networkflowanalysis.beans.PageViewCount;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.AscendingTimestampExtractor;

import org.apache.flink.streaming.api.functions.windowing.AllWindowFunction;

import org.apache.flink.streaming.api.functions.windowing.WindowFunction;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import java.net.URL;

import java.util.HashSet;

public class UniqueVisitor {

public static void main(String[] args) throws Exception {

// 1. Create execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

// 2. Read data and create DataStream

URL resource = UniqueVisitor.class.getResource("/UserBehavior.csv");

DataStream<String> inputStream = env.readTextFile(resource.getPath());

// 3. Convert to POJO and allocate timestamp and watermark

DataStream<UserBehavior> dataStream = inputStream

.map(line -> {

String[] fields = line.split(",");

return new UserBehavior(new Long(fields[0]), new Long(fields[1]), new Integer(fields[2]), fields[3], new Long(fields[4]));

})

.assignTimestampsAndWatermarks(new AscendingTimestampExtractor<UserBehavior>() {

@Override

public long extractAscendingTimestamp(UserBehavior element) {

return element.getTimestamp() * 1000L;

}

});

// Count UV per 1-hour tumbling window over all pv records

SingleOutputStreamOperator<PageViewCount> uvStream = dataStream.filter(data -> "pv".equals(data.getBehavior()))

.timeWindowAll(Time.hours(1))

.apply(new UvCountResult());

uvStream.print();

env.execute("uv count job");

}

// Custom full-window function: receives all elements of the window at once

public static class UvCountResult implements AllWindowFunction<UserBehavior, PageViewCount, TimeWindow>{

@Override

public void apply(TimeWindow window, Iterable<UserBehavior> values, Collector<PageViewCount> out) throws Exception {

// Define a Set structure to save all userids in the window and automatically remove duplicates

HashSet<Long> uidSet = new HashSet<>();

for (UserBehavior ub: values)

uidSet.add(ub.getUserId());

out.collect( new PageViewCount("uv", window.getEnd(), (long)uidSet.size()));

}

}

}
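timeWindowAll(Time.hours(1)) puts every record into the tumbling one-hour window that starts at its event time rounded down to a whole hour, and UvCountResult counts the distinct userIds in that window with a HashSet. A plain-Java sketch of the same computation over in-memory arrays (hypothetical names; timestamps in seconds, as in UserBehavior.csv) could look like this. Note that the HashSet holds every distinct userId of a window in memory at once, which is the cost the Bloom-filter version in the next section avoids.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical plain-Java model of timeWindowAll(Time.hours(1)) + UvCountResult.
public class HourlyUvSketch {

    static final long HOUR_MS = 60 * 60 * 1000L;

    // userIds[i] visited at timestampsSec[i] (seconds, as in UserBehavior.csv).
    // Returns windowEnd (ms) -> number of distinct userIds in that hour.
    public static Map<Long, Integer> uvPerHour(long[] userIds, long[] timestampsSec) {
        Map<Long, Set<Long>> usersPerWindow = new TreeMap<>();
        for (int i = 0; i < userIds.length; i++) {
            long ts = timestampsSec[i] * 1000L;     // seconds -> ms, like the job
            long windowStart = ts - (ts % HOUR_MS); // tumbling window, zero offset
            long windowEnd = windowStart + HOUR_MS;
            usersPerWindow.computeIfAbsent(windowEnd, k -> new HashSet<>()).add(userIds[i]);
        }
        Map<Long, Integer> uv = new TreeMap<>();
        for (Map.Entry<Long, Set<Long>> e : usersPerWindow.entrySet()) {
            uv.put(e.getKey(), e.getValue().size());
        }
        return uv;
    }

    public static void main(String[] args) {
        long[] users = {1L, 2L, 1L, 3L};
        long[] times = {0L, 10L, 20L, 3600L}; // the last event falls into the second hour
        System.out.println(uvPerHour(users, times)); // {3600000=2, 7200000=1}
    }
}
```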

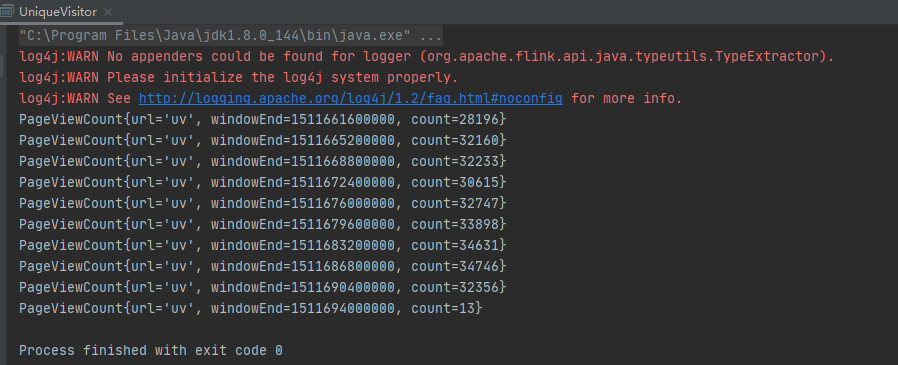

Test record:

3.5 Unique visitors with a Bloom filter

Code:

UvWithBloomFilter

package com.zqs.flink.project.networkflowanalysis;

/**

* @author Just a

* @date 2021-10-18

* @remark unique page view Bloom filter

*/

import com.zqs.flink.project.networkflowanalysis.beans.UserBehavior;

import com.zqs.flink.project.networkflowanalysis.beans.PageViewCount;


import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.AscendingTimestampExtractor;

import org.apache.flink.streaming.api.functions.windowing.ProcessAllWindowFunction;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.triggers.Trigger;

import org.apache.flink.streaming.api.windowing.triggers.TriggerResult;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import redis.clients.jedis.Jedis;

import java.net.URL;

public class UvWithBloomFilter {

public static void main(String[] args) throws Exception {

// 1. Create execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

// 2. Read data and create DataStream

URL resource = UvWithBloomFilter.class.getResource("/UserBehavior.csv");

DataStream<String> inputStream = env.readTextFile(resource.getPath());

// 3. Convert to POJO and allocate timestamp and watermark

DataStream<UserBehavior> dataStream = inputStream

.map(line -> {

String[] fields = line.split(",");

return new UserBehavior(new Long(fields[0]), new Long(fields[1]), new Integer(fields[2]), fields[3], new Long(fields[4]));

})

.assignTimestampsAndWatermarks(new AscendingTimestampExtractor<UserBehavior>() {

@Override

public long extractAscendingTimestamp(UserBehavior element) {

return element.getTimestamp() * 1000L;

}

});

// Count UV per 1-hour window; MyTrigger fires the computation on every element

SingleOutputStreamOperator<PageViewCount> uvStream = dataStream

.filter(data -> "pv".equals(data.getBehavior()))

.timeWindowAll(Time.hours(1))

.trigger( new MyTrigger() )

.process( new UvCountResultWithBloomFliter() );

uvStream.print();

env.execute("uv count with bloom filter job");

}

// Custom trigger

public static class MyTrigger extends Trigger<UserBehavior, TimeWindow>{

@Override

public TriggerResult onElement(UserBehavior element, long timestamp, TimeWindow window, TriggerContext ctx) throws Exception {

// Fire the window computation for every arriving element and purge it, so window contents are never buffered in Flink state

return TriggerResult.FIRE_AND_PURGE;

}

@Override

public TriggerResult onProcessingTime(long time, TimeWindow window, TriggerContext ctx) throws Exception {

return TriggerResult.CONTINUE;

}

@Override

public TriggerResult onEventTime(long time, TimeWindow window, TriggerContext ctx) throws Exception {

return TriggerResult.CONTINUE;

}

@Override

public void clear(TimeWindow window, TriggerContext ctx) throws Exception {

}

}

// Customize a bloom filter

public static class MyBloomFilter {

// Bitmap size in bits; must be a power of 2 because hashCode() masks the hash with cap - 1

private Integer cap;

public MyBloomFilter(Integer cap){

this.cap = cap;

}

// Implement a hash function

public Long hashCode(String value, Integer seed){

Long result = 0L;

for (int i = 0; i < value.length(); i++){

result = result * seed + value.charAt(i);

}

return result & (cap - 1);

}

}

// Implement custom processing functions

public static class UvCountResultWithBloomFliter extends ProcessAllWindowFunction<UserBehavior, PageViewCount, TimeWindow>{

// Jedis connection to Redis and the Bloom filter that maps userIds to bitmap offsets

Jedis jedis;

MyBloomFilter myBloomFilter;

@Override

public void open(Configuration parameters) throws Exception {

jedis = new Jedis("10.31.1.122", 6379);

myBloomFilter = new MyBloomFilter(1 << 29); // 2^29 bits = 64 MB bitmap, sized for roughly 100 million user IDs

}

@Override

public void process(Context context, Iterable<UserBehavior> elements, Collector<PageViewCount> out) throws Exception {

// Store all the bitmap and window count values in redis, and use windowEnd as the key

Long windowEnd = context.window().getEnd();

String bitmapKey = windowEnd.toString();

// Save the count value as a hash table

String countHashName = "uv_count";

String countKey = windowEnd.toString();

// 1. Get the current userId

Long userId = elements.iterator().next().getUserId();

// 2. Calculate offset in bitmap

Long offset = myBloomFilter.hashCode(userId.toString(), 61);

// 3. Use the getbit command of redis to judge the value of the corresponding position

Boolean isExist = jedis.getbit(bitmapKey, offset);

if ( !isExist ){

// If it does not exist, the position of the corresponding bitmap is set to 1

jedis.setbit(bitmapKey, offset, true);

// Update the count value saved in redis

Long uvCount = 0L; // Initial count value

String uvCountString = jedis.hget(countHashName, countKey);

if ( uvCountString != null && !"".equals(uvCountString) )

uvCount = Long.valueOf(uvCountString);

jedis.hset(countHashName, countKey, String.valueOf(uvCount + 1));

out.collect(new PageViewCount("uv", windowEnd, uvCount + 1));

}

}

@Override

public void close() throws Exception {

// Release the Redis connection when the task shuts down

if (jedis != null) {

jedis.close();

}

super.close();

}

}

}
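UvCountResultWithBloomFliter keeps the bitmap in Redis so it can be far larger than the task heap: 1 << 29 bits is 2^29 / 8 = 2^26 bytes = 64 MB. The sketch below swaps Redis for a local java.util.BitSet (a substitution made only for illustration; class and method names are hypothetical) and reuses the MyBloomFilter hash with seed 61. Because there is only one hash function, this is effectively a hashed bitmap: two different userIds that land on the same bit are counted once, so the UV count can come out slightly too low but never too high. Assuming the hash spreads userIds uniformly over m bits, a new user collides with the n users already inserted with probability 1 - (1 - 1/m)^n ≈ 1 - e^(-n/m), which is roughly 17% once n reaches 100 million with m = 2^29.

```java
import java.util.BitSet;

// Hypothetical local model of UvCountResultWithBloomFliter: a BitSet replaces the Redis bitmap.
public class BitmapUvSketch {

    private final int cap; // number of bits; must be a power of 2
    private final BitSet bitmap;
    private long uvCount = 0L;

    public BitmapUvSketch(int cap) {
        this.cap = cap;
        this.bitmap = new BitSet(cap);
    }

    // Same hash as MyBloomFilter.hashCode: polynomial string hash masked to [0, cap).
    public long hash(String value, int seed) {
        long result = 0L;
        for (int i = 0; i < value.length(); i++) {
            result = result * seed + value.charAt(i);
        }
        return result & (cap - 1);
    }

    // Mirrors the getbit / setbit / increment sequence in process().
    public void add(long userId) {
        int offset = (int) hash(Long.toString(userId), 61);
        if (!bitmap.get(offset)) {
            bitmap.set(offset);
            uvCount++;
        }
    }

    public long getUvCount() {
        return uvCount;
    }

    public static void main(String[] args) {
        BitmapUvSketch uv = new BitmapUvSketch(1 << 20); // small bitmap for a local run
        long[] userIds = {1001L, 1002L, 1001L, 1003L, 1002L};
        for (long id : userIds) {
            uv.add(id);
        }
        System.out.println(uv.getUvCount()); // 3: repeated userIds are not counted again
    }
}
```

A Redis-backed version keeps the same logic; only the bitmap and counter move out of the JVM so they survive restarts and are not limited by the task's heap.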

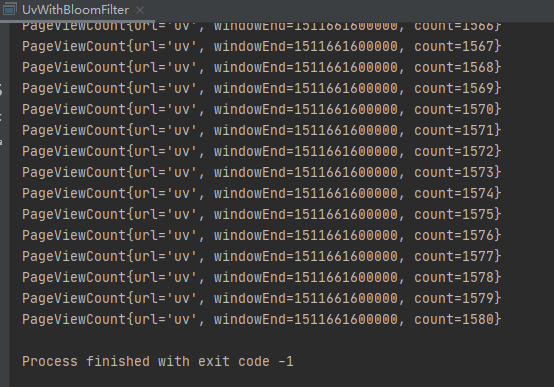

Test record:

References:

- https://www.bilibili.com/video/BV1qy4y1q728

- https://ashiamd.github.io/docsify-notes/#/study/BigData/Flink/%E5%B0%9A%E7%A1%85%E8%B0%B7Flink%E5%85%A5%E9%97%A8%E5%88%B0%E5%AE%9E%E6%88%98-%E5%AD%A6%E4%B9%A0%E7%AC%94%E8%AE%B0?id=_1432-%e5%ae%9e%e6%97%b6%e6%b5%81%e9%87%8f%e7%bb%9f%e8%ae%a1%e7%83%ad%e9%97%a8%e9%a1%b5%e9%9d%a2