Summary

Deduplication logic is widely used in business processing, which can be roughly divided into two categories: DISTINCT de-duplication and FIRST_VALUE primary key de-duplication. The difference between the two is that DISTINCT de-duplication is to deduplicate the whole line data, for example, the data in tt may be duplicated, we need to remove duplicate data; FIRST_VALUE is based on the primary key to de-duplicate, you can. Think of it as a kind of business-level de-emphasis, but the use of real business scenarios is also common, for example, a user has multiple clicks, business only needs to take the first. This paper focuses on the application of these two kinds of weight removal.

1. DISTINCT weight removal

blink sql supports DISTINNCT de-weighting of standard sql. Suppose we have the following input data and want to de-duplicate the same row.

sql can be written as follows: select distinct * from tt_source; complete blink sql is as follows.

create table tt_source( a varchar, b varchar )with( type='tt', topic='se_taobao_wireless_click', accessId='08061416466YCN3FIU', accessKey='xxxxx' lengthCheck='PAD' ); create table tt_output( a varchar, b varchar )with( type='tt', topic='blink_test_32_1', accessKey='xxxx' ); insert into tt_output select distinct * from tt_source;

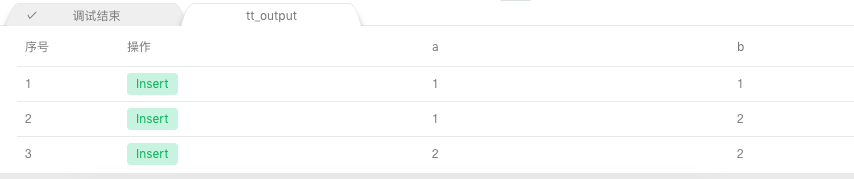

When output, the first line (1,1) and the second line (1,1) data are de-duplicated. The output is as follows

2. FIRST_VALUE udaf weight removal

Another case is to de-duplicate the data according to the primary key field, that is, if the two rows of data have the same primary key, even if the other non-primary key fields are different, only the first row of data is taken. In this case, we can use FIRST_VALUE udaf function to achieve the purpose of weight removal.

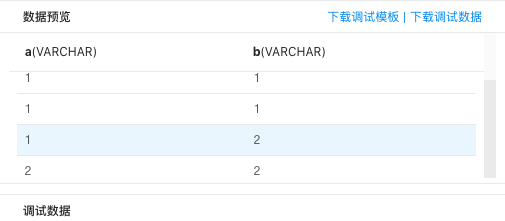

For the following input, and want to duplicate the data according to the primary key a:

sql can be written as follows:

INSERT INTO tt_output SELECT a, FIRST_VALUE(b) FROM tt_source GROUP BY a;

The complete blink sql is as follows.

CREATE TABLE tt_source( a VARCHAR, b VARCHAR )WITH( type='tt', topic='se_taobao_wireless_click', accessId='08061416466YCN3FIU', accessKey='xxx', lengthCheck='PAD' ); CREATE TABLE tt_output( a VARCHAR, b VARCHAR )WITH( type='tt', topic='blink_test_32_1', accessKey='xxx' ); INSERT INTO tt_output SELECT a, FIRST_VALUE(b) FROM tt_source GROUP BY a;

Output results:

You can see three rows of the same primary key a, with only the first row taken.

FIRST_VALUE also supports passing an order parameter to determine which line first is based on order, using FIRST_VALUE(b, c), but note that field C can only be BIGINT. If we have the following input, for the same primary key, we want to take the smallest record of C (the actual scene C is usually a time field).

The complete blink sql is as follows.

CREATE TABLE tt_source( a VARCHAR, b VARCHAR, c BIGINT )WITH( type='tt', topic='se_taobao_wireless_click', accessId='08061416466YCN3FIU', accessKey='xxx', lengthCheck='PAD' ); CREATE TABLE tt_output( a VARCHAR, b VARCHAR )WITH( type='tt', topic='blink_test_32_1', accessKey='xxx' ); INSERT INTO tt_output SELECT a, FIRST_VALUE(b, c) FROM tt_source GROUP BY a;

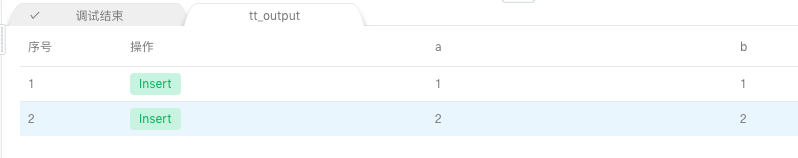

Output results:

You can see that when the output (1,1,1) comes back (1,2,0), 0 is smaller than 1, so the record of primary key 1 is updated, and the output (1,2)