For websites, user login is not a frequent business operation.

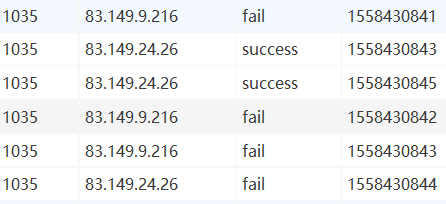

If a user fails to log in repeatedly within a short time, the program may be under a malicious attack such as password brute-forcing. We therefore count each user's login failures. Specifically, if the same user (possibly from different IPs) fails to log in twice in succession within 2 seconds, we treat it as a risk of malicious login and output an alert with the relevant information. This is a basic part of risk control for e-commerce sites and almost all other websites.

We will use Flink's CEP library to match patterns on the event stream. Of the three methods below, Method 3 (CEP) is the key one.

Add the CEP dependency to the pom file:

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-cep-scala_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

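</dependency>

Input data: each line of LoginLog.csv is a comma-separated record of user ID, IP, event type (a failed login has the type fail), and timestamp in seconds.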
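Going by the LoginEvent fields that the jobs below parse (user ID, IP, event type, timestamp in seconds), reading one line of the input could be sketched as follows. The LoginLogParser object and the sample line are illustrative assumptions, not part of the original project; LoginEvent is repeated here only so the block is self-contained.

```scala
// Same shape as the LoginEvent case class used by the jobs in this article.
case class LoginEvent(userId: Long, ip: String, eventType: String, timeStamp: Long)

// Hypothetical helper: parses one CSV line defensively.
object LoginLogParser {
  // Returns None for malformed lines instead of throwing,
  // so a single bad record does not fail the whole job.
  def parse(line: String): Option[LoginEvent] = {
    val arr = line.split(",").map(_.trim)
    if (arr.length != 4) None
    else
      try Some(LoginEvent(arr(0).toLong, arr(1), arr(2), arr(3).toLong))
      catch { case _: NumberFormatException => None }
  }
}
```

In a Flink job, something like inputStream.flatMap(line => LoginLogParser.parse(line).toList) would drop malformed lines, whereas the plain map used in this article fails on them.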

Method 1: state programming

Because time is involved, the simplest approach resembles the earlier hot-items statistics: key the stream by user ID, save each login failure event in ListState, and register a timer that fires 2 seconds later. When the timer fires, check the number of login failure events in state; if it is greater than or equal to 2, output an alert.

(This method can only check for multiple failed logins once the 2 seconds have elapsed; it cannot raise the alert immediately when one failed login is followed by another.)
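To make the timer behaviour concrete, here is a minimal plain-Scala simulation of the idea, with no Flink involved. It is a sketch under simplifying assumptions: events for a single user arrive in timestamp order, and "time" advances to each event's timestamp right after the event is processed (standing in for a watermark with zero delay). The names Event and TimerSketch are hypothetical.

```scala
import scala.collection.mutable.ListBuffer

case class Event(eventType: String, ts: Long) // ts in seconds

object TimerSketch {
  // Returns (time at which the alert is emitted, number of failures) pairs.
  def run(events: Seq[Event], failTimes: Int = 2, windowSec: Long = 2L): List[(Long, Int)] = {
    var fails = List.empty[Event]       // plays the role of the ListState
    var timer: Option[Long] = None      // plays the role of the timer timestamp state
    val alerts = ListBuffer.empty[(Long, Int)]

    // Fire the timer if simulated time has reached it, then clear state.
    def fireIfDue(now: Long): Unit = timer.foreach { t =>
      if (now >= t) {
        if (fails.size >= failTimes) alerts += ((t, fails.size))
        fails = Nil
        timer = None
      }
    }

    events.foreach { e =>
      if (e.eventType == "fail") {
        fails = fails :+ e
        if (timer.isEmpty) timer = Some(e.ts + windowSec)
      } else {
        // A successful login clears the failures and cancels the timer.
        fails = Nil
        timer = None
      }
      fireIfDue(e.ts) // advance simulated time after processing the event
    }
    fireIfDue(Long.MaxValue) // end of input: any pending timer fires
    alerts.toList
  }
}
```

With failures at 1558430842, 1558430843 and 1558430844, the sketch emits a single alert at 1558430844 covering all three, rather than one as soon as the second failure arrives. It also shows a second weakness of the approach: two failures followed by a success before the timer fires produce no alert at all, because the success clears the state.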

import org.apache.flink.api.common.state.{ListState, ListStateDescriptor, ValueState, ValueStateDescriptor}

import org.apache.flink.streaming.api.TimeCharacteristic

import org.apache.flink.streaming.api.functions.KeyedProcessFunction

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.util.Collector

import scala.collection.mutable.ListBuffer

// Input login event case class

case class LoginEvent(userId: Long, ip: String, eventType: String, timeStamp: Long)

// Output alert case class

case class LoginFailWarning(userId: Long, firstFailTime: Long, lastFailTime: Long, warnMsg: String)

object LoginFail {

def main(args: Array[String]): Unit = {

val env = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(1)

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

val inputStream: DataStream[String] = env.readTextFile("D:\\Mywork\\workspace\\Project_idea\\UserBehaviorAnalysis0903\\LoginFailDetect\\src\\main\\resources\\LoginLog.csv")

// Convert to the case class, then assign timestamps and watermarks

val loginEventStream = inputStream.map{data =>

val arr = data.split(",")

LoginEvent(arr(0).toLong, arr(1), arr(2), arr(3).toLong)

}.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor[LoginEvent](Time.seconds(5)) {

override def extractTimestamp(t: LoginEvent): Long = t.timeStamp * 1000L

})

// Detect consecutive login failures within 2 seconds and output an alert

val loginFailWarningStream = loginEventStream

.keyBy(_.userId)

.process(new LoginFailWarningResult(2))

loginFailWarningStream.print()

env.execute("login fail detect job")

}

}

class LoginFailWarningResult(failTimes: Int) extends KeyedProcessFunction[Long, LoginEvent, LoginFailWarning]{

// Define state: all login failure events so far, and the timestamp of the registered timer

lazy val loginFailListState: ListState[LoginEvent] = getRuntimeContext.getListState(new ListStateDescriptor[LoginEvent]("loginfail-list", classOf[LoginEvent]))

lazy val timerTsState: ValueState[Long] = getRuntimeContext.getState(new ValueStateDescriptor[Long]("timer-ts", classOf[Long]))

override def processElement(value: LoginEvent, ctx: KeyedProcessFunction[Long, LoginEvent, LoginFailWarning]#Context, out: Collector[LoginFailWarning]): Unit = {

// Judge whether the current login event is successful or failed

if( value.eventType == "fail" ){

loginFailListState.add(value)

// If there is no timer, register a timer after 2 seconds

if( timerTsState.value() == 0 ){

val ts = value.timeStamp * 1000L + 2000L

ctx.timerService().registerEventTimeTimer(ts)

timerTsState.update(ts)

}

} else {

// On a successful login, delete the timer, clear the state, and start over

ctx.timerService().deleteEventTimeTimer(timerTsState.value())

loginFailListState.clear()

timerTsState.clear()

}

}

override def onTimer(timestamp: Long, ctx: KeyedProcessFunction[Long, LoginEvent, LoginFailWarning]#OnTimerContext, out: Collector[LoginFailWarning]): Unit = {

val allLoginFailList: ListBuffer[LoginEvent] = new ListBuffer[LoginEvent]()

val iter = loginFailListState.get().iterator()

while(iter.hasNext){

allLoginFailList += iter.next()

}

// Judge the number of login failure events. If it exceeds the upper limit, an alarm will be given

if(allLoginFailList.length >= failTimes){

out.collect(

LoginFailWarning(

allLoginFailList.head.userId,

allLoginFailList.head.timeStamp,

allLoginFailList.last.timeStamp,

"login fail in 2s for " + allLoginFailList.length + " times."

))

}

// Clear state

loginFailListState.clear()

timerTsState.clear()

}

}

//Output results

LoginFailWarning(1035,1558430842,1558430844,login fail in 2s for 3 times.)

Method 2:

Instead of waiting for a timer, compare each incoming login failure directly with the previous login failure saved in state, so the check runs on every event and the alert is raised as soon as the second failure arrives.
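The comparison can be sketched in plain Scala, without Flink, as follows. This is only an illustration of the logic under the assumption that one user's events arrive in timestamp order; PairwiseSketch and its parameters are hypothetical names.

```scala
import scala.collection.mutable.ListBuffer

object PairwiseSketch {
  // events: (eventType, timestamp in seconds) for a single user, in arrival order.
  // Returns (firstFailTime, secondFailTime) for every pair that triggers an alert.
  def detect(events: Seq[(String, Long)], windowSec: Long = 2L): List[(Long, Long)] = {
    var lastFail: Option[Long] = None // plays the role of the saved failure event
    val alerts = ListBuffer.empty[(Long, Long)]
    events.foreach {
      case ("fail", ts) =>
        // Compare with the previous failure; alert if it is within the window.
        lastFail.foreach { prev => if (ts < prev + windowSec) alerts += ((prev, ts)) }
        lastFail = Some(ts) // the current failure becomes the new reference
      case _ =>
        lastFail = None     // any non-failure event resets the state
    }
    alerts.toList
  }
}
```

For failures at 1558430842, 1558430843 and 1558430844 this yields the pairs (1558430842, 1558430843) and (1558430843, 1558430844). Because only the previous failure is kept, the sketch depends on arrival order, so out-of-order events can produce wrong or missed alerts.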

import org.apache.flink.api.common.state.{ListState, ListStateDescriptor}

import org.apache.flink.streaming.api.TimeCharacteristic

import org.apache.flink.streaming.api.functions.KeyedProcessFunction

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.util.Collector

object LoginFailAdvance {

def main(args: Array[String]): Unit = {

val env = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(1)

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

val inputStream = env.readTextFile("D:\\Mywork\\workspace\\Project_idea\\UserBehaviorAnalysis0903\\LoginFailDetect\\src\\main\\resources\\LoginLog.csv")

val loginEventStream = inputStream.map { data =>

val arr = data.split(",")

LoginEvent(arr(0).toLong, arr(1), arr(2), arr(3).toLong)

}.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor[LoginEvent](Time.seconds(3)) {

override def extractTimestamp(t: LoginEvent): Long = {

t.timeStamp * 1000L

}

})

// Detect consecutive login failures within 2 seconds and output an alert

val loginFailWarningStream = loginEventStream

.keyBy(_.userId)

.process(new LoginFailWarningAdvanceResult())

loginFailWarningStream.print()

env.execute("login fail detect job")

}

}

class LoginFailWarningAdvanceResult() extends KeyedProcessFunction[Long, LoginEvent, LoginFailWarning] {

// Define state that saves the login failure events seen so far

lazy val loginFailListState: ListState[LoginEvent] = getRuntimeContext.getListState(new ListStateDescriptor[LoginEvent]("loginfail-list", classOf[LoginEvent]))

override def processElement(value: LoginEvent, ctx: KeyedProcessFunction[Long, LoginEvent, LoginFailWarning]#Context, out: Collector[LoginFailWarning]): Unit = {

// First determine the event type

if (value.eventType == "fail") {

val iter = loginFailListState.get().iterator()

if (iter.hasNext) { //The state iterator is not empty (that is, the second entry of the same Id)

val firstFailEvent: LoginEvent = iter.next()

if (value.timeStamp < firstFailEvent.timeStamp + 2) { //If the second fail login time is within 2 seconds of the first fail login time

out.collect(LoginFailWarning(value.userId, firstFailEvent.timeStamp, value.timeStamp, "login fail 2 times in 2s"))

}

loginFailListState.clear()

loginFailListState.add(value)

} else {

loginFailListState.add(value) //First wrong login record

}

} else {

loginFailListState.clear()

}

}

}

//Output results

LoginFailWarning(1035,1558430842,1558430843,login fail 2 times in 2s)

LoginFailWarning(1035,1558430843,1558430844,login fail 2 times in 2s)

Method 3: CEP (complex event processing) (key)

Flink provides the CEP (Complex Event Processing) library, which is used to pick out events in a stream that match a complex pattern.
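As a rough mental model of the pattern this method matches (one failure immediately followed by another, within 2 seconds), the plain-Scala sketch below sorts one user's events by timestamp and scans adjacent pairs. It is a conceptual illustration only, not how Flink CEP is implemented; the names Ev and ContiguitySketch, and the strict "less than" window check, are assumptions made for the example.

```scala
case class Ev(eventType: String, ts: Long) // ts in seconds

object ContiguitySketch {
  // Returns (firstFailTime, secondFailTime) for each adjacent pair of failures
  // whose timestamps are less than windowSec apart.
  def matches(events: Seq[Ev], windowSec: Long = 2L): List[(Long, Long)] =
    events
      .sortBy(_.ts) // order by event time, so arrival order does not matter
      .sliding(2)   // look at each event together with its direct successor
      .collect {
        case Seq(a, b)
            if a.eventType == "fail" && b.eventType == "fail" && b.ts - a.ts < windowSec =>
          (a.ts, b.ts)
      }
      .toList
}
```

Sorting by timestamp before matching is the part that the two hand-written methods lack: a failure that arrives late but carries an earlier timestamp is still paired correctly, and any other event between two failures breaks the match.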

import java.util

import org.apache.flink.cep.PatternSelectFunction

import org.apache.flink.cep.scala.CEP

import org.apache.flink.cep.scala.pattern.Pattern

import org.apache.flink.streaming.api.TimeCharacteristic

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.windowing.time.Time

// CEP (complex event processing) can also handle out-of-order events

object LoginFailWithCep {

def main(args: Array[String]): Unit = {

val env = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(1)

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

val inputStream: DataStream[String] = env.readTextFile("D:\\Mywork\\workspace\\Project_idea\\UserBehaviorAnalysis0903\\LoginFailDetect\\src\\main\\resources\\LoginLog.csv")

val loginEventBykeyStream: KeyedStream[LoginEvent, Long] = inputStream.map { data =>

val arr = data.split(",")

LoginEvent(arr(0).toLong, arr(1), arr(2), arr(3).toLong)

}.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor[LoginEvent](Time.seconds(3)) {

override def extractTimestamp(element: LoginEvent): Long = element.timeStamp * 1000L

}).keyBy(_.userId)

// 1. Define the pattern: one login failure immediately followed by another login failure, within 2 seconds

val loginFailPattern: Pattern[LoginEvent, LoginEvent] = Pattern

.begin[LoginEvent]("firstFail").where(_.eventType == "fail")

.next("secondFail").where(_.eventType == "fail")

.within(Time.seconds(2))

val patternStream = CEP.pattern(loginEventBykeyStream, loginFailPattern)

val loginFailWarningStream = patternStream.select(new LoginFailEventMatch())

loginFailWarningStream.print()

env.execute("login fail with cep job")

}

}

class LoginFailEventMatch() extends PatternSelectFunction[LoginEvent, LoginFailWarning] {

// The map is keyed by pattern name (firstFail, secondFail); each value is the list of matched LoginEvents

override def select(map: util.Map[String, util.List[LoginEvent]]): LoginFailWarning = {

val firstFailEvent = map.get("firstFail").iterator().next()

val secondFailEvent = map.get("secondFail").iterator().next()

LoginFailWarning(firstFailEvent.userId, firstFailEvent.timeStamp, secondFailEvent.timeStamp, "login fail")

}

}

//Output results

LoginFailWarning(1035,1558430841,1558430842,login fail)

LoginFailWarning(1035,1558430843,1558430844,login fail)