
Grid search

One way to find an optimal hyperparameter is to plot a validation curve, but a validation curve tunes only one hyperparameter at a time. When several hyperparameters must be tuned together and there are many possible combinations, grid search can be used to find the optimal hyperparameter combination.

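For every combination in the hyperparameter grid, grid search instantiates the given model and runs cv-fold cross-validation. The combination with the highest mean cross-validation score is taken as the best choice, and the model is refit with it. By default the score is the estimator's own score (accuracy for classifiers); pass scoring='f1_weighted' to rank the combinations by F1 score instead.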

Grid search API:

```python
import sklearn.model_selection as ms

# estimator: the model to tune; param_grid: list of hyperparameter grids; cv: number of folds
model = ms.GridSearchCV(estimator, param_grid, cv=5)
model.fit(train_x, train_y)
# Byproducts of training:
# every parameter combination that was tried
model.cv_results_['params']
# mean cross-validation score of each combination
model.cv_results_['mean_test_score']
# best combination, its mean score, and the model refit with it
model.best_params_
model.best_score_
model.best_estimator_
```

Case: modify the confidence-probability case and find the optimal hyperparameters with grid search.

```python
import sklearn.model_selection as ms
import sklearn.svm as svm

# train_x, train_y come from the earlier confidence-probability case
# Support vector machine classifier: search over the kernel type and its hyperparameters
params = [
    {'kernel': ['linear'], 'C': [1, 10, 100, 1000]},       # 4 combinations
    {'kernel': ['poly'], 'C': [1], 'degree': [2, 3]},       # 2 combinations
    {'kernel': ['rbf'], 'C': [1, 10, 100, 1000],
     'gamma': [1, 0.1, 0.01, 0.001]}]                       # 4 x 4 = 16 combinations
# Pass only the fixed (untuned) parameters to the estimator; the tuned ones go into
# the grid, and GridSearchCV trains every combination and keeps the best one
model = ms.GridSearchCV(svm.SVC(probability=True), params, cv=5)
model.fit(train_x, train_y)
# Cross-validation result of each parameter combination
for p, s in zip(model.cv_results_['params'],
                model.cv_results_['mean_test_score']):
    print(p, s)
# Best hyperparameter combination
print(model.best_params_)
# Best mean cross-validation score
print(model.best_score_)
# Best model, refit on the whole training set with the best combination
print(model.best_estimator_)
```
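The case above relies on data from an earlier case. As a self-contained sketch, the same kind of search can run on a synthetic dataset from make_classification (an assumption, standing in for the original training set). It also passes scoring='f1_weighted', so the candidates are ranked by weighted F1 instead of the default accuracy, and it scores the refit best_estimator_ on a held-out split.

```python
import sklearn.datasets as sd
import sklearn.model_selection as ms
import sklearn.svm as svm

# Synthetic two-class data (assumption: replaces the original training set)
x, y = sd.make_classification(n_samples=200, n_features=4, random_state=7)
train_x, test_x, train_y, test_y = ms.train_test_split(
    x, y, test_size=0.25, random_state=7)

params = [
    {'kernel': ['linear'], 'C': [1, 10, 100]},                       # 3 combinations
    {'kernel': ['rbf'], 'C': [1, 10, 100], 'gamma': [1, 0.1, 0.01]},  # 9 combinations
]
# Rank the 12 candidates by weighted F1 over 5 folds
model = ms.GridSearchCV(svm.SVC(), params, cv=5, scoring='f1_weighted')
model.fit(train_x, train_y)

for p, s in zip(model.cv_results_['params'], model.cv_results_['mean_test_score']):
    print(p, round(s, 3))
print('best:', model.best_params_, round(model.best_score_, 3))
# best_estimator_ was refit on all of train_x, so it can score the held-out data directly
print('test accuracy:', model.best_estimator_.score(test_x, test_y))
```

The exact best combination depends on the data; the point is that every candidate is trained and scored, and only the winner is refit.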

Case: event prediction

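Load event.txt and predict whether a special event will occur in a given time period. Numeric columns should not be label-encoded, because LabelEncoder would treat each distinct number as an unrelated category; the DigitEncoder below converts them to integers instead.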

```python
import numpy as np
import sklearn.preprocessing as sp
import sklearn.model_selection as ms
import sklearn.svm as svm


class DigitEncoder():
    """Encoder for numeric columns: converts numeric strings to ints and back."""

    def fit_transform(self, y):
        return y.astype(int)

    def transform(self, y):
        return y.astype(int)

    def inverse_transform(self, y):
        return y.astype(str)


# Classification
data = np.loadtxt('../data/event.txt', delimiter=',', dtype='U10')
# Transpose so that each row holds one column of the file, then drop the column at index 1
data = np.delete(data.T, 1, axis=0)
print(data)
encoders, x = [], []
for row in range(len(data)):
    # Numeric columns keep their values; text columns are label-encoded
    if data[row][0].isdigit():
        encoder = DigitEncoder()
    else:
        encoder = sp.LabelEncoder()
    if row < len(data) - 1:
        x.append(encoder.fit_transform(data[row]))
    else:
        # The last column is the label
        y = encoder.fit_transform(data[row])
    encoders.append(encoder)
x = np.array(x).T
train_x, test_x, train_y, test_y = \
    ms.train_test_split(x, y, test_size=0.25, random_state=5)
model = svm.SVC(kernel='rbf', class_weight='balanced')
print(ms.cross_val_score(model, train_x, train_y, cv=3, scoring='accuracy').mean())
model.fit(train_x, train_y)
pred_test_y = model.predict(test_x)
# Accuracy on the test set
print((pred_test_y == test_y).sum() / pred_test_y.size)
# Predict a new sample with the encoders fitted above
data = [['Tuesday', '13:30:00', '21', '23']]
data = np.array(data).T
x = []
for row in range(len(data)):
    encoder = encoders[row]
    x.append(encoder.transform(data[row]))
x = np.array(x).T
pred_y = model.predict(x)
print(encoders[-1].inverse_transform(pred_y))
```
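The column-by-column encoding is easier to follow on a few rows. The sketch below uses a tiny inline array (hypothetical values, not taken from event.txt) with the same DigitEncoder idea: numeric columns keep their values, text columns go through LabelEncoder, and the fitted encoders are kept so that predictions can be decoded later.

```python
import numpy as np
import sklearn.preprocessing as sp


class DigitEncoder:
    """Pass-through encoder for numeric columns: strings -> int and back."""
    def fit_transform(self, y):
        return y.astype(int)

    def transform(self, y):
        return y.astype(int)

    def inverse_transform(self, y):
        return y.astype(str)


# Hypothetical rows: weekday, a count, label
rows = np.array([['Monday', '12', 'no'],
                 ['Tuesday', '30', 'yes'],
                 ['Monday', '7', 'no']])
encoders, encoded = [], []
for col in rows.T:  # iterate over columns
    # numeric column -> DigitEncoder, text column -> LabelEncoder
    enc = DigitEncoder() if col[0].isdigit() else sp.LabelEncoder()
    encoded.append(enc.fit_transform(col))
    encoders.append(enc)
x = np.array(encoded[:-1]).T  # feature matrix, one row per sample
y = encoded[-1]               # encoded label column
print(x)
print(y, encoders[-1].inverse_transform(y))
```

LabelEncoder numbers the classes in sorted order, so 'Monday' becomes 0 and 'Tuesday' becomes 1, while the count column keeps its numeric meaning.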

Case: traffic flow forecast (regression)

Load traffic.txt and predict the traffic volume at an intersection in a given time period.

```python
import numpy as np
import sklearn.preprocessing as sp
import sklearn.model_selection as ms
import sklearn.svm as svm
import sklearn.metrics as sm


class DigitEncoder():
    """Encoder for numeric columns: converts numeric strings to ints and back."""

    def fit_transform(self, y):
        return y.astype(int)

    def transform(self, y):
        return y.astype(int)

    def inverse_transform(self, y):
        return y.astype(str)


# Regression
# dtype='U10' keeps at most 10 characters per field (hence 'San Franci' below)
data = np.loadtxt('../data/traffic.txt', delimiter=',', dtype='U10')
data = data.T
encoders, x = [], []
for row in range(len(data)):
    if data[row][0].isdigit():
        encoder = DigitEncoder()
    else:
        encoder = sp.LabelEncoder()
    if row < len(data) - 1:
        x.append(encoder.fit_transform(data[row]))
    else:
        # The last column (traffic volume) is the target
        y = encoder.fit_transform(data[row])
    encoders.append(encoder)
x = np.array(x).T
train_x, test_x, train_y, test_y = \
    ms.train_test_split(x, y, test_size=0.25, random_state=5)
# Support vector machine regressor
model = svm.SVR(kernel='rbf', C=10, epsilon=0.2)
model.fit(train_x, train_y)
pred_test_y = model.predict(test_x)
print(sm.r2_score(test_y, pred_test_y))
# Predict a new sample with the encoders fitted above
data = [['Tuesday', '13:35', 'San Franci', 'yes']]
data = np.array(data).T
x = []
for row in range(len(data)):
    encoder = encoders[row]
    x.append(encoder.transform(data[row]))
x = np.array(x).T
pred_y = model.predict(x)
# pred_y has shape (1,); take its single element
print(int(pred_y[0]))
```

Regression:

Linear regression, ridge regression, polynomial regression, SVM SVR(), decision tree, AdaBoost (boosting), random forest... Evaluation: r2_score()

Classification:

Logistic regression, naive Bayes, decision tree, random forest, SVM SVC()

Model selection: cross-validation, validation curve, learning curve, train/test split, grid search

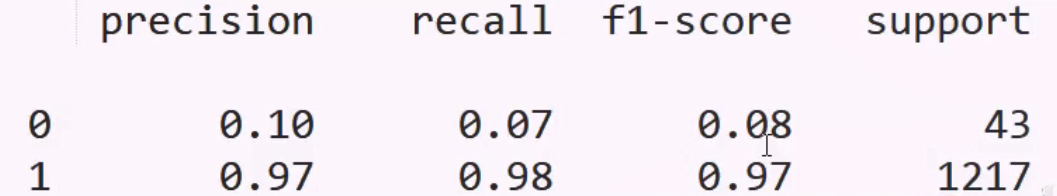

Classification evaluation metrics: precision, recall, and F1 score; a confusion matrix and a classification report can also be used.
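A small sketch of these metrics with scikit-learn, on hypothetical labels for a three-class problem. With average='macro', each metric is computed per class and then averaged without weighting.

```python
import sklearn.metrics as sm

# Hypothetical true and predicted labels
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
precision = sm.precision_score(y_true, y_pred, average='macro')
recall = sm.recall_score(y_true, y_pred, average='macro')
f1 = sm.f1_score(y_true, y_pred, average='macro')
print(precision, recall, f1)
# Rows are true classes, columns are predicted classes
cm = sm.confusion_matrix(y_true, y_pred)
print(cm)
# Per-class precision, recall, F1, and support in one table
print(sm.classification_report(y_true, y_pred))
```

For class 1, for example, three samples are predicted as 1 and two of them are correct (precision 2/3), while both true class-1 samples are found (recall 1).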

Workflow for a specific business requirement:

- Understand the business requirement carefully and collect the sample data it needs.

- Before touching any algorithm, decide whether the task is a classification problem or a regression problem.

- Work out how a human would make the prediction for this business problem.

- Choose the most suitable model and implement it.

Clustering

Classification is different from clustering: classification is supervised learning, while clustering is unsupervised learning. Clustering uses an algorithm to divide the samples into n groups (clusters), and such algorithms usually need to compute the Euclidean distance between samples.

The Euclidean distance is the straight-line distance between two points. In one, two, and three dimensions:

$$
\begin{aligned}
P(x_1) - Q(x_2)&: \quad |x_1-x_2| = \sqrt{(x_1-x_2)^2} \\
P(x_1,y_1) - Q(x_2,y_2)&: \quad \sqrt{(x_1-x_2)^2+(y_1-y_2)^2} \\
P(x_1,y_1,z_1) - Q(x_2,y_2,z_2)&: \quad \sqrt{(x_1-x_2)^2+(y_1-y_2)^2+(z_1-z_2)^2}
\end{aligned}
$$

The Euclidean distance, the square root of the sum of the squared differences between corresponding feature values of two samples, expresses how similar the two samples are: the smaller the distance, the more similar they are.

For example, two users rate three movie genres:

| | Romance | Horror | Comedy |
|---|---|---|---|
| user1 | 5 | 5 | 3 |
| user2 | 4 | 5 | 4 |
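Applying the three-dimensional formula to the table, each user is treated as a point whose coordinates are the three ratings. A short NumPy check:

```python
import numpy as np

# Ratings from the table: romance, horror, comedy
user1 = np.array([5, 5, 3])
user2 = np.array([4, 5, 4])
# Square root of the sum of squared differences: sqrt(1 + 0 + 1)
d = np.sqrt(((user1 - user2) ** 2).sum())
print(d)
# np.linalg.norm of the difference gives the same value
print(np.linalg.norm(user1 - user2))
```

The distance is sqrt(2), about 1.41, on a scale where ratings differ by at most 4 per genre, so the two users have fairly similar tastes.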

K-means algorithm

Step 1: randomly select k samples as the centers of k clusters, compute the Euclidean distance from every sample to every cluster center, and assign each sample to the cluster of its nearest center.

Step 2: from the clusters obtained in step 1, compute the geometric center (mean) of each cluster and use it as the new cluster center. Repeat step 1 until the new geometric centers coincide, or nearly coincide, with the previous cluster centers.
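The two steps can be sketched in plain NumPy. This is a minimal illustration, assuming the initial centers are k distinct samples drawn at random; the function name kmeans and its parameters (max_iter, tol, seed) are hypothetical and not part of scikit-learn.

```python
import numpy as np


def kmeans(x, k, max_iter=100, tol=1e-6, seed=0):
    """Hypothetical helper: plain Lloyd-style K-means on an (n, d) array."""
    rng = np.random.default_rng(seed)
    # Step 1 (initialization): k distinct samples become the initial centers
    centers = x[rng.choice(len(x), size=k, replace=False)]
    for _ in range(max_iter):
        # Euclidean distance from every sample to every center, shape (n, k)
        dist = np.linalg.norm(x[:, None, :] - centers[None, :, :], axis=-1)
        labels = dist.argmin(axis=1)  # assign each sample to its nearest center
        # Step 2: the mean of each cluster becomes its new center
        # (an empty cluster keeps its old center)
        new_centers = np.array([x[labels == j].mean(axis=0) if np.any(labels == j)
                                else centers[j] for j in range(k)])
        moved = np.linalg.norm(new_centers - centers)
        centers = new_centers
        if moved < tol:  # centers (nearly) coincide: stop
            break
    return labels, centers


x = np.array([[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
              [8.0, 8.0], [8.2, 7.9], [7.8, 8.1]])
labels, centers = kmeans(x, 2)
print(labels)
print(centers)
```

With two well-separated groups, the three points near (1, 1) end up in one cluster and the three near (8, 8) in the other; which group gets label 0 depends on the random initialization.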

Notes:

- The number of clusters k must be known in advance; evaluation metrics can help select the best number of clusters.

- The choice of initial cluster centers affects the final clustering. The initial centers should be as far from one another as possible (scikit-learn's default k-means++ initialization follows this idea).

K-means API:

```python
import sklearn.cluster as sc

# n_clusters: number of clusters
model = sc.KMeans(n_clusters=4)
# Keep adjusting the cluster centers until they are stable
model.fit(x)
y = model.predict(x)
# Cluster centers found during training
centers = model.cluster_centers_
```

Case: load multiple3.txt and cluster the samples with the K-means algorithm.

```python
import numpy as np
import sklearn.cluster as sc
import matplotlib.pyplot as mp

x = np.loadtxt('../data/multiple3.txt', delimiter=',')
# K-means clustering
model = sc.KMeans(n_clusters=4)
model.fit(x)
centers = model.cluster_centers_
# Predict the cluster of every point on an n x n grid to draw the cluster regions
n = 500
l, r = x[:, 0].min() - 1, x[:, 0].max() + 1
b, t = x[:, 1].min() - 1, x[:, 1].max() + 1
grid_x = np.meshgrid(np.linspace(l, r, n),
                     np.linspace(b, t, n))
flat_x = np.column_stack((grid_x[0].ravel(), grid_x[1].ravel()))
flat_y = model.predict(flat_x)
grid_y = flat_y.reshape(grid_x[0].shape)
pred_y = model.predict(x)
mp.figure('K-Means Cluster', facecolor='lightgray')
mp.title('K-Means Cluster', fontsize=20)
mp.xlabel('x', fontsize=14)
mp.ylabel('y', fontsize=14)
mp.tick_params(labelsize=10)
mp.pcolormesh(grid_x[0], grid_x[1], grid_y, cmap='gray')
mp.scatter(x[:, 0], x[:, 1], c=pred_y, cmap='brg', s=80)
mp.scatter(centers[:, 0], centers[:, 1], marker='+', c='gold', s=1000, linewidth=1)
mp.show()
```

Image quantization

The K-means algorithm can be applied to image quantization. It clusters the color values in an image, replaces every value with the mean of its cluster, and regenerates the image. This reduces the number of distinct colors in the image; the process is called image quantization. Quantization preserves the contours of the image well and makes contour recognition easier.

Case: quantize the grayscale lily.jpg image to 4, 3, and 2 gray levels.

```python
import numpy as np
import sklearn.cluster as sc
import matplotlib.pyplot as mp


# Quantize the gray levels of an image with K-means clustering
def quant(image, n_clusters):
    x = image.reshape(-1, 1)  # one gray value per row
    model = sc.KMeans(n_clusters=n_clusters)
    model.fit(x)
    y = model.labels_
    centers = model.cluster_centers_.ravel()
    # Replace every pixel by the center (mean gray value) of its cluster
    return centers[y].reshape(image.shape)


# scipy.misc.imread has been removed from SciPy; read the file with matplotlib
# (JPEG support needs Pillow) and convert RGB to gray with the ITU-R 601-2 luma weights
original = mp.imread('../data/lily.jpg').astype(float)
if original.ndim == 3:
    original = original[..., :3] @ np.array([0.299, 0.587, 0.114])
quant4 = quant(original, 4)
quant3 = quant(original, 3)
quant2 = quant(original, 2)
mp.figure('Image Quant', facecolor='lightgray')
mp.subplot(221)
mp.title('Original', fontsize=16)
mp.axis('off')
mp.imshow(original, cmap='gray')
mp.subplot(222)
mp.title('Quant-4', fontsize=16)
mp.axis('off')
mp.imshow(quant4, cmap='gray')
mp.subplot(223)
mp.title('Quant-3', fontsize=16)
mp.axis('off')
mp.imshow(quant3, cmap='gray')
mp.subplot(224)
mp.title('Quant-2', fontsize=16)
mp.axis('off')
mp.imshow(quant2, cmap='gray')
mp.tight_layout()
mp.show()
```
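Without an image file at hand, the effect of quant can be checked on a synthetic image. The sketch below assumes a 64 x 64 horizontal gray gradient (not lily.jpg) and counts the distinct gray values before and after quantization.

```python
import numpy as np
import sklearn.cluster as sc


def quant(image, n_clusters):
    x = image.reshape(-1, 1)  # one gray value per row
    model = sc.KMeans(n_clusters=n_clusters, n_init=10, random_state=0)
    model.fit(x)
    centers = model.cluster_centers_.ravel()
    # Every pixel takes the mean gray value of its cluster
    return centers[model.labels_].reshape(image.shape)


# Synthetic gradient image (assumption): 64 distinct gray levels from 0 to 255
image = np.tile(np.linspace(0, 255, 64), (64, 1))
q = quant(image, 4)
print(len(np.unique(image)), '->', len(np.unique(q)))
```

The image keeps its shape, but its 64 gray levels collapse to as many levels as there are clusters.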

Mean shift algorithm

Mean shift first assumes that each cluster in the sample space follows some known probability distribution. It fits the statistical histogram of the samples with probability density functions and repeatedly shifts the center (mean) of each density function until the fit is best. The peaks of these density functions are the cluster centers; each sample is then assigned to the cluster whose center is nearest to it.

Features of the mean shift algorithm:

- The number of clusters need not be known in advance; the algorithm automatically identifies the number of peaks (centers) of the statistical histogram.

- The cluster centers do not depend on an initial guess, so the clustering result is relatively stable.

- The samples must follow some probability distribution; otherwise the accuracy of the algorithm drops sharply.
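To see that the number of clusters is found rather than given, here is a small sketch on three synthetic, well-separated blobs from make_blobs (an assumption, not multiple3.txt). MeanShift is never told how many clusters to look for.

```python
import numpy as np
import sklearn.cluster as sc
import sklearn.datasets as sd

# Three synthetic blobs (assumption: stands in for real data)
x, _ = sd.make_blobs(n_samples=300, centers=[[0, 0], [8, 8], [-8, 8]],
                     cluster_std=0.8, random_state=0)
# Larger quantile -> larger bandwidth -> fewer, broader clusters
bw = sc.estimate_bandwidth(x, n_samples=len(x), quantile=0.2)
model = sc.MeanShift(bandwidth=bw, bin_seeding=True)
model.fit(x)
# The number of clusters is read off the centers that were found
print(len(model.cluster_centers_))
print(np.round(model.cluster_centers_, 1))
```

If the blobs overlapped heavily or did not look like density peaks, the estimated number of centers would become much less reliable, which is the third point above.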

Mean shift API:

```python
import sklearn.cluster as sc

# Estimate the bandwidth (kernel radius) used when shifting the density function
# n_samples: number of samples used for the estimate
# quantile: larger values give a larger bandwidth and therefore fewer clusters
bw = sc.estimate_bandwidth(x, n_samples=len(x), quantile=0.1)
# Mean shift clustering
model = sc.MeanShift(bandwidth=bw, bin_seeding=True)
model.fit(x)
```

Case: load multiple3.txt and cluster the samples with the mean shift algorithm.

```python
import numpy as np
import sklearn.cluster as sc
import matplotlib.pyplot as mp

x = np.loadtxt('../data/multiple3.txt', delimiter=',')
# Estimate the bandwidth (kernel radius) used when shifting the density function
bw = sc.estimate_bandwidth(x, n_samples=len(x), quantile=0.2)
# Mean shift clustering
model = sc.MeanShift(bandwidth=bw, bin_seeding=True)
model.fit(x)
centers = model.cluster_centers_
# Predict the cluster of every point on an n x n grid to draw the cluster regions
n = 500
l, r = x[:, 0].min() - 1, x[:, 0].max() + 1
b, t = x[:, 1].min() - 1, x[:, 1].max() + 1
grid_x = np.meshgrid(np.linspace(l, r, n),
                     np.linspace(b, t, n))
flat_x = np.column_stack((grid_x[0].ravel(), grid_x[1].ravel()))
flat_y = model.predict(flat_x)
grid_y = flat_y.reshape(grid_x[0].shape)
pred_y = model.predict(x)
mp.figure('Mean Shift Cluster', facecolor='lightgray')
mp.title('Mean Shift Cluster', fontsize=20)
mp.xlabel('x', fontsize=14)
mp.ylabel('y', fontsize=14)
mp.tick_params(labelsize=10)
mp.pcolormesh(grid_x[0], grid_x[1], grid_y, cmap='gray')
mp.scatter(x[:, 0], x[:, 1], c=pred_y, cmap='brg', s=80)
mp.scatter(centers[:, 0], centers[:, 1], marker='+', c='gold', s=1000, linewidth=1)
mp.show()
```

Agglomerative hierarchical clustering

The algorithm first treats every sample as an independent cluster. While the number of clusters is greater than the expected number, it finds, for each cluster, the cluster closest to it and merges the two into a larger cluster, which reduces the total number of clusters. The process repeats until the number of clusters reaches the expected value.
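The merging loop can be sketched naively in NumPy. This sketch assumes single linkage (the distance between two clusters is the distance between their closest members), which is one reading of "closest"; scikit-learn's AgglomerativeClustering defaults to ward linkage instead. The function agglomerate is hypothetical and runs in roughly O(n^3) time, so it only suits tiny inputs.

```python
import numpy as np


def agglomerate(x, n_clusters):
    """Hypothetical helper: naive single-linkage agglomerative clustering."""
    clusters = [[i] for i in range(len(x))]  # every sample starts as its own cluster
    # Pairwise Euclidean distances between samples
    d = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
    while len(clusters) > n_clusters:
        best = (np.inf, 0, 1)
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Single linkage: distance between the closest members of a and b
                dist = d[np.ix_(clusters[a], clusters[b])].min()
                if dist < best[0]:
                    best = (dist, a, b)
        _, a, b = best
        merged = clusters.pop(b)  # b > a, so index a stays valid
        clusters[a].extend(merged)
    labels = np.empty(len(x), dtype=int)
    for k, members in enumerate(clusters):
        labels[members] = k
    return labels


x = np.array([[0.0, 0.0], [0.2, 0.1], [5.0, 5.0], [5.1, 4.8], [9.0, 0.0]])
labels = agglomerate(x, 3)
print(labels)
```

The two close pairs merge first, and the loop stops once three clusters remain, leaving the far point (9, 0) on its own.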

Characteristics of agglomerative hierarchical clustering:

- The number of clusters k must be known in advance; evaluation metrics can help select the best number of clusters.

- There is no concept of a cluster center, so the algorithm can only partition the training set; it cannot assign new samples outside the training set to a cluster (scikit-learn's AgglomerativeClustering provides fit_predict but no predict).

- Besides distance, the samples to merge can also be chosen by connectivity (continuity), so that clusters follow the shape of the data.

Agglomerative clustering API:

```python
import sklearn.cluster as sc

# Agglomerative hierarchical clustering
model = sc.AgglomerativeClustering(n_clusters=4)
pred_y = model.fit_predict(x)
```

Case: load multiple3.txt again and cluster it with agglomerative hierarchical clustering.

```python
import numpy as np
import sklearn.cluster as sc
import matplotlib.pyplot as mp

x = np.loadtxt('../data/multiple3.txt', delimiter=',')
# Agglomerative hierarchical clustering
model = sc.AgglomerativeClustering(n_clusters=4)
pred_y = model.fit_predict(x)
mp.figure('Agglomerative Cluster', facecolor='lightgray')
mp.title('Agglomerative Cluster', fontsize=20)
mp.xlabel('x', fontsize=14)
mp.ylabel('y', fontsize=14)
mp.tick_params(labelsize=10)
mp.scatter(x[:, 0], x[:, 1], c=pred_y, cmap='brg', s=80)
mp.show()
```

Case: besides distance, the samples to merge can also be chosen by connectivity. The following compares agglomerative clustering with and without a connectivity constraint on noisy sine-wave data.

```python
import numpy as np
import sklearn.cluster as sc
import sklearn.neighbors as nb
import matplotlib.pyplot as mp

# Noisy points along a sine curve
n_samples = 500
x = np.linspace(-1, 1, n_samples)
y = np.sin(x * 2 * np.pi)
n = 0.3 * np.random.rand(n_samples, 2)
x = np.column_stack((x, y)) + n
# Agglomerative hierarchical clustering without a connectivity constraint
model_nonc = sc.AgglomerativeClustering(linkage='average', n_clusters=3)
pred_y_nonc = model_nonc.fit_predict(x)
# Connectivity graph: link each sample to its 10 nearest neighbors
conn = nb.kneighbors_graph(x, 10, include_self=False)
# Agglomerative hierarchical clustering with the connectivity constraint
model_conn = sc.AgglomerativeClustering(
    linkage='average', n_clusters=3, connectivity=conn)
pred_y_conn = model_conn.fit_predict(x)
mp.figure('Nonconnectivity', facecolor='lightgray')
mp.title('Nonconnectivity', fontsize=20)
mp.xlabel('x', fontsize=14)
mp.ylabel('y', fontsize=14)
mp.tick_params(labelsize=10)
mp.scatter(x[:, 0], x[:, 1], c=pred_y_nonc, cmap='brg', alpha=0.5, s=30)
mp.figure('Connectivity', facecolor='lightgray')
mp.title('Connectivity', fontsize=20)
mp.xlabel('x', fontsize=14)
mp.ylabel('y', fontsize=14)
mp.tick_params(labelsize=10)
mp.scatter(x[:, 0], x[:, 1], c=pred_y_conn, cmap='brg', alpha=0.5, s=30)
mp.show()
```

Silhouette coefficient (contour coefficient)

Good clustering is dense inside and sparse outside: samples within the same cluster should be close enough together, and samples in different clusters should be far enough apart.

How the silhouette coefficient is computed: for a given sample, let a be its mean distance to the other samples in its own cluster, and b its mean distance to all samples in the nearest other cluster. The sample's silhouette coefficient is (b-a)/max(a, b). The arithmetic mean of the silhouette coefficients of all samples is used as the clustering performance metric s.

The silhouette coefficient lies in [-1, 1]: -1 means poor clustering, 1 means good clustering, and values near 0 mean the clusters overlap and are not well separated.
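The rule can be checked by computing it by hand and comparing with scikit-learn. In this sketch the function silhouette is hypothetical, and a sample that is alone in its cluster gets coefficient 0, which matches scikit-learn's convention; the five points and labels are made up.

```python
import numpy as np
import sklearn.metrics as sm


def silhouette(x, labels):
    """Hypothetical helper: mean silhouette coefficient, computed directly."""
    d = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
    s = np.zeros(len(x))
    for i in range(len(x)):
        same = labels == labels[i]
        same[i] = False
        if not same.any():  # singleton cluster: coefficient stays 0
            continue
        a = d[i, same].mean()  # mean distance within its own cluster
        # mean distance to the nearest other cluster
        b = min(d[i, labels == k].mean() for k in np.unique(labels) if k != labels[i])
        s[i] = (b - a) / max(a, b)
    return s.mean()


x = np.array([[0.0, 0.0], [0.0, 1.0], [4.0, 0.0], [4.0, 1.0], [8.0, 0.0]])
labels = np.array([0, 0, 1, 1, 1])
mine = silhouette(x, labels)
print(mine, sm.silhouette_score(x, labels))
```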

Silhouette API:

```python
import sklearn.metrics as sm

# v: mean silhouette coefficient
# pred_y: cluster label of each sample in x
# metric: distance measure; Euclidean distance is used here
v = sm.silhouette_score(x, pred_y, sample_size=len(x), metric='euclidean')
```

Case: print the silhouette score of the clusters produced by the KMeans algorithm.

```python
# Print the mean silhouette coefficient
print(sm.silhouette_score(
    x, pred_y, sample_size=len(x), metric='euclidean'))
```
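Because K-means needs k in advance, the silhouette score is a natural way to pick it: cluster with several values of k and keep the one with the highest score. A sketch on synthetic blobs from make_blobs (an assumption, not the case's data file):

```python
import sklearn.cluster as sc
import sklearn.datasets as sd
import sklearn.metrics as sm

# Synthetic data with 4 generated blobs (assumption)
x, _ = sd.make_blobs(n_samples=300, centers=4, cluster_std=0.6, random_state=0)
scores = {}
for k in range(2, 7):
    model = sc.KMeans(n_clusters=k, n_init=10, random_state=0)
    pred_y = model.fit_predict(x)
    scores[k] = sm.silhouette_score(x, pred_y, metric='euclidean')
# The k with the highest mean silhouette coefficient
best_k = max(scores, key=scores.get)
print({k: round(v, 3) for k, v in scores.items()}, best_k)
```

On well-separated blobs the best k usually matches the number of generated blobs, but on real data the scores for neighboring k can be close, so it is worth inspecting them rather than trusting the maximum blindly.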

DBSCAN algorithm

Pick any sample in the sample space and draw a circle of a preset radius around it; all samples inside the circle are considered to be in the same cluster as that sample. Then draw circles of the same radius around those samples, and keep repeating the process to expand the cluster until no new samples are added. This yields one cluster. Repeat the process on the remaining samples until every sample in the sample space has been processed.

Features of the DBSCAN algorithm:

- The preset radius affects the final clustering result; a better radius can be selected with the help of the silhouette coefficient.

- Based on how clusters form, samples fall into three categories:

  - Peripheral (border) samples: samples that are pulled into a cluster by other samples but cannot bring new samples into it themselves.

  - Isolated (noise) samples: samples that are not within the radius of any core sample and therefore belong to no cluster; their own circle contains fewer samples than the set minimum.

  - Core samples: samples other than peripheral and isolated samples, that is, samples whose circle contains at least the minimum number of samples, so they keep expanding the cluster.
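The three categories can be seen on a handful of hypothetical points: a dense square, one point just within the radius of one square corner, and one far-away point. In scikit-learn, core samples are listed in core_sample_indices_, isolated samples get label -1, and the remaining clustered samples are peripheral.

```python
import numpy as np
import sklearn.cluster as sc

# Hypothetical points: dense square + center, one edge point, one far point
x = np.array([[0.0, 0.0], [0.0, 0.5], [0.5, 0.0], [0.5, 0.5], [0.25, 0.25],
              [1.2, 0.5], [5.0, 5.0]])
model = sc.DBSCAN(eps=0.8, min_samples=3).fit(x)

core_mask = np.zeros(len(x), dtype=bool)
core_mask[model.core_sample_indices_] = True  # >= min_samples within eps (self included)
noise_mask = model.labels_ == -1               # isolated samples
border_mask = ~(core_mask | noise_mask)        # in a cluster but not core
print(model.labels_)
print(np.where(core_mask)[0], np.where(border_mask)[0], np.where(noise_mask)[0])
```

The point (1.2, 0.5) has only itself and (0.5, 0.5) within 0.8, fewer than min_samples=3, so it cannot extend the cluster; it still joins it because (0.5, 0.5) is a core sample. The point (5, 5) reaches nobody and is labeled -1.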

DBSCAN API:

```python
import sklearn.cluster as sc

# DBSCAN clustering
# eps: neighborhood radius
# min_samples: minimum number of samples (including the sample itself) within eps
#              for a sample to be a core sample
model = sc.DBSCAN(eps=epsilon, min_samples=5)
model.fit(x)
```

Case: modify the agglomerative hierarchical clustering case to cluster perf.txt with DBSCAN, and select the radius with the best silhouette score.

```python
import numpy as np
import sklearn.cluster as sc
import sklearn.metrics as sm
import matplotlib.pyplot as mp

x = np.loadtxt('../data/perf.txt', delimiter=',')
epsilons, scores, models = np.linspace(0.3, 1.2, 10), [], []
for epsilon in epsilons:
    # DBSCAN clustering
    model = sc.DBSCAN(eps=epsilon, min_samples=5)
    model.fit(x)
    score = sm.silhouette_score(
        x, model.labels_, sample_size=len(x), metric='euclidean')
    scores.append(score)
    models.append(model)
scores = np.array(scores)
best_index = scores.argmax()
best_epsilon = epsilons[best_index]
print(best_epsilon)
best_score = scores[best_index]
print(best_score)
best_model = models[best_index]
```

Case: get the core, peripheral, and isolated samples, and draw each kind with a different marker.

```python
# Continues the previous case: uses x, np, mp, and best_model
labels = best_model.labels_
# Core samples: indices reported by DBSCAN
core_mask = np.zeros(len(x), dtype=bool)
core_mask[best_model.core_sample_indices_] = True
# Isolated (offset) samples: label -1
offset_mask = labels == -1
# Peripheral samples: everything else
periphery_mask = ~(core_mask | offset_mask)
mp.figure('DBSCAN Cluster', facecolor='lightgray')
mp.title('DBSCAN Cluster', fontsize=20)
mp.xlabel('x', fontsize=14)
mp.ylabel('y', fontsize=14)
mp.tick_params(labelsize=10)
mp.scatter(x[core_mask][:, 0], x[core_mask][:, 1], c=labels[core_mask],
           cmap='brg', s=80, label='Core')
mp.scatter(x[periphery_mask][:, 0], x[periphery_mask][:, 1], alpha=0.5,
           c=labels[periphery_mask], cmap='brg', marker='s', s=80, label='Periphery')
mp.scatter(x[offset_mask][:, 0], x[offset_mask][:, 1],
           c=labels[offset_mask], cmap='brg', marker='x', s=80, label='Offset')
mp.legend()
mp.show()
```