1, Container technology

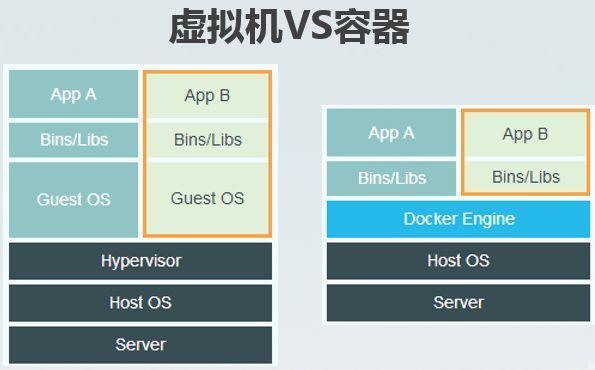

Before virtual machines (such as VMware) appeared, conflicting dependencies between applications meant that each new application usually had to be deployed on its own server, which left hardware resources badly underutilized. Virtual machines solved this problem well.

However, virtual machines have drawbacks too. First, each VM runs a complete guest OS, which consumes additional CPU, RAM and storage. Second, because each VM boots its own OS, startup is relatively slow. Finally, every guest OS needs its own patching and monitoring, and commercial operating systems also require licenses.

For these reasons, large Internet companies, led by Google, have explored container technology as a way to replace the virtual machine model and overcome its drawbacks.

In the virtual machine model, the hypervisor performs hardware virtualization: it divides physical hardware into virtual resources and packages them as VMs. The container model, by contrast, performs OS virtualization.

Containers share the host OS kernel and are isolated from each other at the process level. Compared with virtual machines, containers start quickly, consume few resources and are easy to migrate.

1.1 Linux container

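Modern container technology originated on Linux. Its core technologies are kernel namespaces, control groups (cgroups) and union file systems.

Kernel Namespace

- Responsible for isolation: each container gets its own view of process IDs, network, mount points, hostname and IPC

Control Group (cgroup)

- Responsible for resource limits: CPU, memory and I/O usage per container

Union File System

- Responsible for layering: read-only image layers stacked under a writable container layer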
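Namespaces can be observed directly on a Linux host. The sketch below assumes a Linux system with /proc mounted; list_namespaces is a hypothetical helper name. Each process has one link per namespace under /proc/<pid>/ns, and two processes share a namespace exactly when those links show the same number.

```shell
#!/usr/bin/env bash
# Print the namespaces a process belongs to, one per line, e.g. "pid:[4026531836]".
# Linux only; assumes /proc is mounted. Inspecting another user's process
# (for example PID 1 on the host) may require root.
list_namespaces() {
  local pid="${1:-self}"
  local ns
  for ns in /proc/"$pid"/ns/*; do
    readlink "$ns"
  done
}
```

Comparing the host's output with `docker container exec <containerId> ls -l /proc/self/ns` should show different pid, mnt, net, uts and ipc numbers inside the container, which is the isolation described above.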

Because these technologies are complex to use directly, containers were hard to adopt widely until Docker appeared and made container technology simple to use.

At present there are no native Mac containers. On Windows, Windows 10 and Windows Server 2016 already support Windows containers.

Note that because a container shares the host's operating system kernel, Windows containers cannot run on Linux. Docker for Mac and Docker for Windows, however, support Linux containers by starting a lightweight Linux VM.

On Windows, this requires 64-bit Windows 10 with the Hyper-V and Containers features enabled.

1.2 Docker installation

Omitted here; refer to the Docker installation guides for Mac and Windows.

1.3 Basic concepts

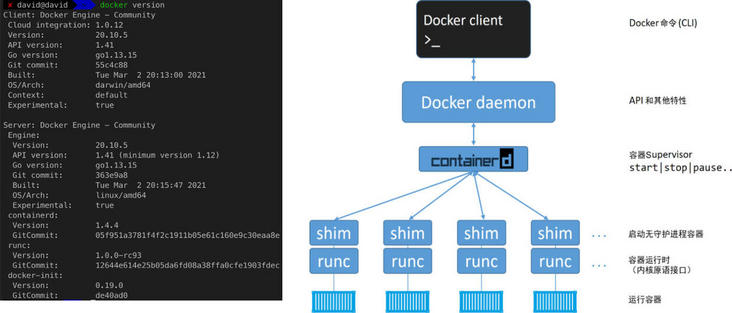

1.3.1 Docker engine architecture

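After installation, run docker version. The output has a Client section and a Server section, and the Server section lists the engine components described below, such as the Engine (daemon), containerd and runc.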

Docker daemon

- Image management, image builds, the API, authentication, core networking, etc.

The early Docker engine consisted of only LXC and the daemon, and the daemon bundled the client, API, runtime, image builds and other functions. After the daemon was broken up, its core responsibilities narrowed to image building and management and the API.

containerd

- Encapsulate the execution logic of the container and be responsible for the life cycle management of the container.

shim

- Used to decouple the running container from the daemon (the daemon can be upgraded independently)

- Keep all STDIN and STDOUT streams open

- Synchronize container exit status to daemon

runc (container runtime)

- Reference implementation of the OCI runtime specification (hence also called the OCI layer)

- A lightweight command-line wrapper around libcontainer

- The only purpose is to create containers

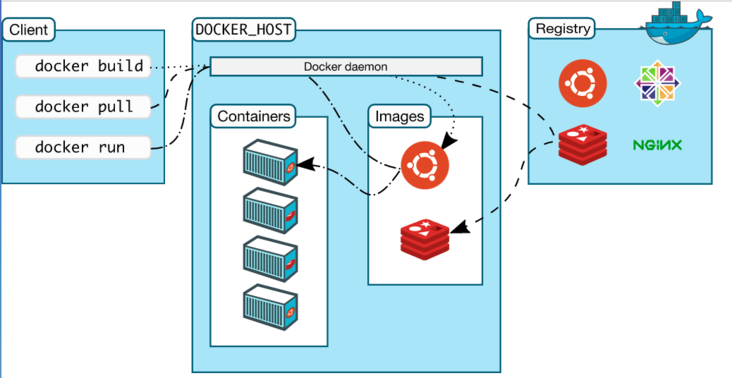

1.3.2 Images

An image is made up of multiple layers that together hold a trimmed-down OS plus the application and its dependencies as independent objects. Images are stored in image registries; Docker Hub is the official registry service.
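The layers are combined by a union file system: when the same file exists in several layers, the container sees the copy in the topmost layer. The bash sketch below models only that lookup rule. It assumes each layer is a plain directory (a simplification for illustration) and does not model deletions, which overlayfs records as "whiteout" files; resolve_in_layers is a hypothetical name.

```shell
#!/usr/bin/env bash
# Simplified union-file-system lookup: print the path in the first (top-most)
# layer that contains the file. Layers are passed top-most first.
# Assumption: layers are plain directories; whiteouts (deletions) are ignored.
resolve_in_layers() {
  local path="$1" layer
  shift
  for layer in "$@"; do
    if [[ -e "$layer/$path" ]]; then
      printf '%s\n' "$layer/$path"
      return 0
    fi
  done
  return 1  # the file is not present in any layer
}
```

For example, `resolve_in_layers etc/motd ./container-rw ./app-layer ./base-os-layer` (hypothetical directories) returns the writable layer's copy if the container changed the file, and falls back to lower layers otherwise.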

Images are pulled with docker image pull <image-name>. A fully qualified image name has the following form, where the registry defaults to Docker Hub (docker.io) and the tag defaults to latest:

[<ImageRegistryDomain>/][<UserId>/]<repository>[:<tag>]
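To make the default rules concrete, here is a simplified parser in bash. parse_image_ref is a hypothetical helper, not part of Docker. It approximates Docker's naming rules: the first component counts as a registry only if it contains a '.' or ':' or is localhost, and Docker Hub names without a user part belong to the library namespace. Digest references (name@sha256:...) are not handled.

```shell
#!/usr/bin/env bash
# Split an image name of the form [registry/][user/]repository[:tag]
# and fill in Docker Hub defaults. Simplified sketch: no digest support.
parse_image_ref() {
  local ref="$1" registry="docker.io" tag="latest" name first
  # A ':' in the last path component starts the tag; a ':' earlier on
  # belongs to a registry port, as in localhost:5000/app.
  if [[ "${ref##*/}" == *:* ]]; then
    tag="${ref##*:}"
    name="${ref%:*}"
  else
    name="$ref"
  fi
  # The first component is a registry if it looks like a host name:
  # it contains '.' or ':' or is exactly "localhost".
  first="${name%%/*}"
  if [[ "$name" == */* && ( "$first" == *.* || "$first" == *:* || "$first" == localhost ) ]]; then
    registry="$first"
    name="${name#*/}"
  fi
  # Official Docker Hub images without a user part live under "library/".
  if [[ "$registry" == docker.io && "$name" != */* ]]; then
    name="library/$name"
  fi
  printf 'registry=%s repository=%s tag=%s\n' "$registry" "$name" "$tag"
}

# parse_image_ref nginx
#   -> registry=docker.io repository=library/nginx tag=latest
```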

Related operations

- Pull an image: docker image pull repository:tag

- List local images: docker image ls

- View image details: docker image inspect repository:tag

- Delete an image: docker image rm repository:tag (an image that a container still uses cannot be deleted; remove the container first)

Two ways to build an image

- From a running container, with docker container commit

- From a Dockerfile, with docker image build

1.3.3 Containers

A container is a runtime instance of an image.

Run container

$ docker container run [OPTIONS] <IMAGE> [<APP>]

Note that by default a Linux container runs only the app's process. When that main process exits, the container exits too; in other words, killing the container's main process kills the container.
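Because a container lives exactly as long as its main process, startup scripts commonly end with exec, so the app replaces the script instead of running as its child and receives the signals sent by docker container stop. The bash sketch below (show_exec_keeps_pid is a hypothetical name) demonstrates that exec keeps the same PID.

```shell
#!/usr/bin/env bash
# A wrapper that ends with `exec <app>` replaces itself with the app instead of
# forking a child, so the app inherits the wrapper's PID. Inside a container,
# that PID is 1: the main process whose exit stops the container.
show_exec_keeps_pid() {
  # Line 1: PID of the wrapper shell; line 2: PID of the program it exec'd.
  bash -c 'echo "$$"; exec bash -c "echo \$\$"'
}
```

Both printed lines are identical, whereas running the second command without exec would print a different (child) PID.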

If the container was started with -it, pressing Ctrl+P followed by Ctrl+Q detaches from it and returns you to the host while the container keeps running in the background. You can then enter the container again with docker container exec -it <containerId> bash.

Running a container often involves many options, such as port mappings, mounted volumes, environment variables and networks, and this gets harder when several containers run together. For that reason, containers are usually orchestrated and managed with Docker Compose, which newer versions of Docker install by default.
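To show how these options pile up on a single container, here is a small bash wrapper that assembles one docker container run command. run_container is a hypothetical helper, and the port numbers, the /var/www mount target and the NODE_ENV variable are illustrative assumptions; the flags themselves (-d, --name, -p, -v, -e) are standard docker container run options. Setting DRY_RUN=1 prints the command instead of executing it.

```shell
#!/usr/bin/env bash
# Assemble and run `docker container run` with typical options.
# Ports, mount target and environment variable are hypothetical examples.
run_container() {
  local image="$1" name="$2" project_dir="$3"
  local cmd=(docker container run -d --name "$name")
  cmd+=(-p 8080:80)                   # host port 8080 -> container port 80
  cmd+=(-p 2222:22)                   # host port 2222 -> container port 22
  cmd+=(-v "$project_dir:/var/www")   # bind-mount the project directory
  cmd+=(-e NODE_ENV=development)      # set an environment variable
  cmd+=("$image")
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "${cmd[*]}"         # only show the command
  else
    "${cmd[@]}"                       # actually start the container
  fi
}
```

Usage: `DRY_RUN=1 run_container dev-env dev "$PWD"`. With several such containers, the same options are easier to keep in a Docker Compose file than in scripts like this.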

View running containers (add -a to include stopped ones)

$ docker container ls [-a]
$ docker ps [-a]

Start or stop the container

$ docker container start <containerId>
$ docker container stop <containerId>

2, Significance of containerization

2.1 Unified development environment

2.2 Automated testing

2.3 Deployment (CI)

The container is the application.

3, Building a base environment (image)

3.1 Containerization

Packaging an application so that it runs as a container is called containerization.

General steps of containerization:

- Develop the application code

- Write a Dockerfile

- Run docker image build in the build context (the directory containing the Dockerfile)

- Start a container from the image
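The first two steps can be sketched in bash: create a build context directory holding a tiny application and the Dockerfile that packages it. make_build_context, the one-line app.sh and the hello-demo tag are hypothetical examples; the base image follows this article's choice of centos:7.

```shell
#!/usr/bin/env bash
# Steps 1 and 2: write an application and its Dockerfile into a build context.
make_build_context() {
  local dir="$1"
  mkdir -p "$dir"
  # Step 1: the "application" is a one-line script here.
  cat > "$dir/app.sh" <<'EOF'
#!/bin/sh
echo "hello from a container"
EOF
  # Step 2: the Dockerfile that packages it.
  cat > "$dir/Dockerfile" <<'EOF'
FROM centos:7
COPY app.sh /app.sh
RUN chmod +x /app.sh
CMD ["/app.sh"]
EOF
}

# Steps 3 and 4 need a running Docker daemon:
#   make_build_context ./hello
#   docker image build -t hello-demo ./hello
#   docker container run --rm hello-demo
```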

Let's get down to business and use containerization to build a custom base environment for full-stack development.

3.2 Custom development image

3.2.1 Environment requirements

- CentOS 7.x

- Build tools and libraries: gcc, gcc-c++, pcre-devel, openssl-devel, make, perl, zlib, zlib-devel

- vim and openssh-server

- Node.js 12.9.1

- npm registry set to the team's private registry

- Project directory mounted into the container

- Ports 80 and 22 exposed

3.2.2 Prepare the Dockerfile

FROM centos:7
LABEL maintainer="xuhui120 <xuhui120@xxx.com>"
# Install build dependencies, vim and the SSH server, then generate host keys and configure sshd
RUN yum -y install \
    gcc \
    gcc-c++ \
    pcre-devel \
    openssl-devel \
    make \
    perl \
    zlib \
    vim \
    zlib-devel \
    openssh-server \
    && ssh-keygen -q -t rsa -N '' -f /etc/ssh/ssh_host_rsa_key \
    && ssh-keygen -q -t ecdsa -N '' -f /etc/ssh/ssh_host_ecdsa_key \
    && ssh-keygen -q -t ed25519 -N '' -f /etc/ssh/ssh_host_ed25519_key \
    && /bin/sed -i "s/#UsePrivilegeSeparation.*/UsePrivilegeSeparation no/g" /etc/ssh/sshd_config \
    && /bin/sed -i "s/#PermitRootLogin.*/PermitRootLogin yes/g" /etc/ssh/sshd_config \
    && /bin/sed -i 's/UsePAM yes/UsePAM no/g' /etc/ssh/sshd_config \
    && /bin/echo "cd /var/www" >> /root/.bashrc

#######################
# Install Node.js
#######################
USER root
ARG NODE_VERSION=12.9.1
RUN cd /usr/local/src \
    && curl -fsS -O https://nodejs.org/dist/v${NODE_VERSION}/node-v${NODE_VERSION}-linux-x64.tar.gz \
    && mkdir -p /usr/local/nodejs \
    && tar zxf node-v${NODE_VERSION}-linux-x64.tar.gz -C /usr/local/nodejs --strip-components=1 --no-same-owner \
    && ls -al /usr/local/nodejs \
    && ln -s /usr/local/nodejs/bin/* /usr/local/bin/ \
    && npm config set registry http://registry.xxx.com \
    && cd /usr/local/src \
    && rm -rf *.tar.gz

# Change the root password
ARG SSH_ROOT_PWD=root
RUN /bin/echo "${SSH_ROOT_PWD}" | passwd --stdin root

# Passwordless (key-based) SSH login
ARG LOCAL_SSH_KEY
RUN if [ -n "${LOCAL_SSH_KEY}" ]; then \
        mkdir -p /root/.ssh \
        && chmod 750 /root/.ssh \
        && echo "${LOCAL_SSH_KEY}" > /root/.ssh/authorized_keys \
        && sed -i 's/"//g' /root/.ssh/authorized_keys \
        && chmod 600 /root/.ssh/authorized_keys; \
    fi

# Exposed ports
EXPOSE 22
EXPOSE 80
COPY startup.sh /
RUN chmod +x /startup.sh
WORKDIR /var/www
CMD ["/startup.sh"]

When a container is created from this image, it runs the startup.sh script:

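#!/bin/bash
source /etc/profile
# exec makes sshd replace this shell, so it runs in the foreground as the container's main process
exec /usr/sbin/sshd -D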

Finally, run docker image build in the directory that contains the Dockerfile and startup.sh, for example docker image build -t <image-name> .
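The Dockerfile's LOCAL_SSH_KEY and SSH_ROOT_PWD build arguments are passed with --build-arg. The bash sketch below wraps the build with those arguments. build_dev_image, IMAGE_NAME, SSH_PUBKEY_FILE and the default tag dev-env:latest are hypothetical names chosen for illustration; DRY_RUN=1 prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Build the development image from a context holding the Dockerfile and startup.sh.
# IMAGE_NAME and SSH_PUBKEY_FILE are hypothetical settings with illustrative defaults.
build_dev_image() {
  local context="${1:-.}"
  local image="${IMAGE_NAME:-dev-env:latest}"
  local key_file="${SSH_PUBKEY_FILE:-$HOME/.ssh/id_rsa.pub}"
  local cmd=(docker image build -t "$image")
  # Pass the public key only when it exists; otherwise LOCAL_SSH_KEY stays
  # empty and the Dockerfile skips the authorized_keys step.
  if [[ -f "$key_file" ]]; then
    cmd+=(--build-arg "LOCAL_SSH_KEY=$(<"$key_file")")
  fi
  cmd+=("$context")
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "${cmd[*]}"   # only show the command
  else
    "${cmd[@]}"                 # run the build
  fi
}
```

The root password can be overridden the same way by adding `--build-arg SSH_ROOT_PWD=<password>`; since build arguments can be seen in the image history, key-based login is the safer of the two.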