Today we'll look at how to keep data consistent in highly concurrent business scenarios.

1. Scenario

1.1 Table: ConCurrency

    CREATE TABLE [dbo].[ConCurrency](
        [ID] [int] NOT NULL,
        [Total] [int] NULL
    )

1.2 Initial values: ID = 1, Total = 0

1.3 Requirement: every client request increments Total by 1

2. Single thread

    static void Main(string[] args)
    {
        ...
        new Thread(Run).Start();
        ...
    }

    public static void Run()
    {
        for (int i = 1; i <= 100; i++)
        {
            // Read the current total, then write back total + 1.
            var total = DbHelper.ExecuteScalar("Select Total from ConCurrency where Id = 1", null).ToString();
            var value = int.Parse(total) + 1;

            DbHelper.ExecuteNonQuery(string.Format("Update ConCurrency Set Total = {0} where Id = 1", value.ToString()), null);
            Thread.Sleep(1);
        }
    }

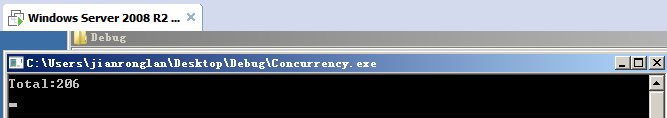

2.1 Expected output: 100

2.2 Result

The result matches the expectation, so there appears to be no problem.

3. Multithreaded concurrency

3.1 Changes to Main

    static void Main(string[] args)
    {
        ...
        // Two threads now run the same read-then-update loop.
        new Thread(Run).Start();
        new Thread(Run).Start();
        ...
    }

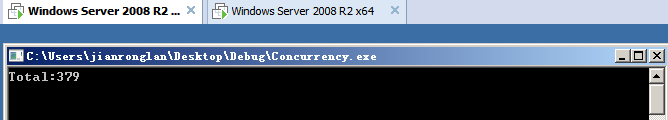

3.2 Expected output: 200

3.3 Result

Unfortunately, the result is 150. The reason is that T1 and T2 both read Total (say the value is 10), and T2 writes its update only after T1 has already updated one or more times, while still basing its write on the stale value 10.

T2's write therefore overwrites the updates that T1 has already committed.
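The interleaving described above can be replayed step by step without a database. This is only a minimal sketch, not the code used in the test: the local variable `total` is an assumed in-memory stand-in for the Total column, and the names `LostUpdateDemo` and `Replay` are hypothetical.

```csharp
using System;

static class LostUpdateDemo
{
    // Replays the interleaving one step at a time. `total` stands in for
    // the Total column of the row with Id = 1 (an assumption for illustration).
    public static int Replay()
    {
        int total = 10;

        // Both threads run their SELECT before either one runs its UPDATE.
        int readByT1 = total; // T1 reads 10
        int readByT2 = total; // T2 reads 10

        // T1 writes 11.
        total = readByT1 + 1;

        // T2 also writes 11, based on its stale read, and overwrites T1.
        total = readByT2 + 1;

        return total;
    }

    static void Main()
    {
        Console.WriteLine(Replay()); // prints 11, not 12
    }
}
```

Two increments ran, but Total only moved from 10 to 11. Every time this happens during the 200 iterations, one increment is lost, which is how the run ends below 200.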

3.4 How can we avoid this? The usual approach is to take a lock, for example by changing Run as follows:

    // Shared lock object; assumed to be declared as a static field like this.
    static readonly object resource = new object();

    public static void Run()
    {
        for (int i = 1; i <= 100; i++)
        {
            // Only one thread at a time may read and update Total.
            lock (resource)
            {
                var total = DbHelper.ExecuteScalar("Select Total from ConCurrency where Id = 1", null).ToString();
                var value = int.Parse(total) + 1;

                DbHelper.ExecuteNonQuery(string.Format("Update ConCurrency Set Total = {0} where Id = 1", value.ToString()), null);
            }

            Thread.Sleep(1);
        }
    }

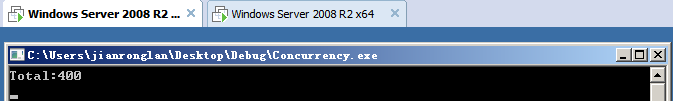

3.5 Run Again

4. Implementing with queues

4.1 Define the queue

    static ConcurrentQueue<int> queue = new ConcurrentQueue<int>();

    /// <summary>Producer</summary>
    public static void Produce()
    {
        for (int i = 1; i <= 100; i++)
        {
            queue.Enqueue(i);
        }
    }

    /// <summary>Consumer</summary>
    public static void Consume()
    {
        int times;
        // A single consumer handles the requests one by one.
        while (queue.TryDequeue(out times))
        {
            var total = DbHelper.ExecuteScalar("Select Total from ConCurrency where Id = 1", null).ToString();
            var value = int.Parse(total) + 1;

            DbHelper.ExecuteNonQuery(string.Format("Update ConCurrency Set Total = {0} where Id = 1", value.ToString()), null);
            Thread.Sleep(1);
        }
    }

4.2 Changes to Main

    static void Main(string[] args)
    {
        ...
        new Thread(Produce).Start();
        new Thread(Produce).Start();
        // Consume runs on the main thread and stops as soon as the queue is empty.
        Consume();
        ...
    }

4.3 Expected output: 200. See the result of the run.

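4.4 Testing in a cluster environment with two machines

Something went wrong! The machine that finished last reported 379, and the database also holds 379.

This is not what we expected. It seems that locking inside one process cannot solve every problem in high-concurrency scenarios: a lock or an in-memory queue only serializes the work within a single process, not across machines.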
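Why a per-process lock does not help across machines can be sketched in a few lines. The example below is an illustration under stated assumptions, not a reproduction of the cluster test: the two lock objects stand in for the lock held in each machine's own process, `total` stands in for the shared Total column, and the names `PerProcessLockDemo` and `Replay` are hypothetical.

```csharp
using System;
using System.Threading;

static class PerProcessLockDemo
{
    public static int Replay()
    {
        // Stand-in for the shared Total column (assumed starting value).
        int total = 10;

        // Each machine has its own lock object in its own process.
        object lockOnMachineA = new object();
        object lockOnMachineB = new object();

        // Both locks can be held at the same time, because they are
        // different objects and know nothing about each other.
        bool heldByA = Monitor.TryEnter(lockOnMachineA);
        bool heldByB = Monitor.TryEnter(lockOnMachineB);
        Console.WriteLine(heldByA + " " + heldByB); // prints True True

        // With both "critical sections" open, the same interleaving occurs.
        int readByA = total;  // machine A reads 10
        int readByB = total;  // machine B reads 10
        total = readByA + 1;  // machine A writes 11
        total = readByB + 1;  // machine B writes 11 and overwrites A

        Monitor.Exit(lockOnMachineB);
        Monitor.Exit(lockOnMachineA);
        return total;
    }

    static void Main()
    {
        Console.WriteLine(Replay()); // prints 11, not 12
    }
}
```

The lock has to live somewhere both machines can see it, otherwise each machine only excludes its own threads.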

5. Distributed queues

5.1 A distributed queue can solve the problem above. A Redis queue is used here.

    /// <summary>Producer</summary>
    public static void ProduceToRedis()
    {
        using (var client = RedisManager.GetClient())
        {
            for (int i = 1; i <= 100; i++)
            {
                client.EnqueueItemOnList("EnqueueName", i.ToString());
            }
        }
    }

    /// <summary>Consumer</summary>
    public static void ConsumeFromRedis()
    {
        using (var client = RedisManager.GetClient())
        {
            while (client.GetListCount("EnqueueName") > 0)
            {
                // Only the client that manages to create the "lock" key may proceed.
                if (client.SetValueIfNotExists("lock", "lock"))
                {
                    try
                    {
                        var item = client.DequeueItemFromList("EnqueueName");
                        var total = DbHelper.ExecuteScalar("Select Total from ConCurrency where Id = 1", null).ToString();
                        var value = int.Parse(total) + 1;

                        DbHelper.ExecuteNonQuery(string.Format("Update ConCurrency Set Total = {0} where Id = 1", value.ToString()), null);
                    }
                    finally
                    {
                        // Release the lock even if the database call throws.
                        client.Remove("lock");
                    }
                }

                Thread.Sleep(5);
            }
        }
    }

5.2 Main also needs to be changed

    static void Main(string[] args)
    {
        ...
        new Thread(ProduceToRedis).Start();
        new Thread(ProduceToRedis).Start();
        // Give the producers time to fill the list before consuming.
        Thread.Sleep(1000 * 10);

        ConsumeFromRedis();
        ...
    }

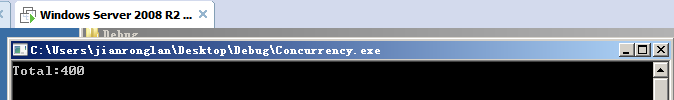

5.3 Run it again in the cluster: both are 400, which is correct (each machine runs two producer threads, so 2 × 2 × 100 = 400).

As you can see, the data is now correct!
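The result is correct this time because the queue and the lock key both live outside the two machines, so every consumer competes for the same lock. The sketch below imitates that in memory and is not a Redis client: a `ConcurrentDictionary` stands in for the Redis key space (its `TryAdd` plays the role of `SetValueIfNotExists`), a `ConcurrentQueue` stands in for the Redis list, and two threads stand in for the two machines. All names in it are hypothetical.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

static class SharedLockDemo
{
    // Stand-ins for state shared by both machines (assumptions for illustration):
    // the Redis list, the Redis key space, and the Total column.
    static readonly ConcurrentQueue<int> sharedQueue = new ConcurrentQueue<int>();
    static readonly ConcurrentDictionary<string, string> sharedKeys =
        new ConcurrentDictionary<string, string>();
    static int total;

    // One simulated machine; same loop shape as ConsumeFromRedis.
    static void Consume()
    {
        while (!sharedQueue.IsEmpty)
        {
            // TryAdd succeeds only if the key does not exist yet,
            // so at most one consumer is inside this block at a time.
            if (sharedKeys.TryAdd("lock", "lock"))
            {
                try
                {
                    int item;
                    if (sharedQueue.TryDequeue(out item))
                    {
                        int read = Volatile.Read(ref total);  // Select Total ...
                        Thread.Yield();                       // invite the other consumer to interleave
                        Volatile.Write(ref total, read + 1);  // Update ... Set Total = read + 1
                    }
                }
                finally
                {
                    string removed;
                    sharedKeys.TryRemove("lock", out removed); // release the lock
                }
            }
        }
    }

    public static int Run()
    {
        total = 0;
        for (int i = 1; i <= 400; i++) sharedQueue.Enqueue(i);

        var machineA = new Thread(Consume);
        var machineB = new Thread(Consume);
        machineA.Start();
        machineB.Start();
        machineA.Join();
        machineB.Join();
        return total;
    }

    static void Main()
    {
        Console.WriteLine(Run()); // prints 400
    }
}
```

Each of the 400 items is dequeued exactly once, and every read-then-write of the total happens while the shared lock key is held, so no increment is lost.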