Theory

Distributed file system

Components: the file system interface, the collection of software that manages objects, and the objects together with their attributes.

Function of a file system: it organizes and allocates file storage space and is responsible for storing files. It creates files for users; stores, reads, modifies, and dumps (transfers out) files; and controls access to them.

FUSE (Filesystem in Userspace): a pseudo file system that lets a file system run in user space instead of in the kernel.

Workflow

A client application issues a read or write request locally. The request first reaches the VFS (virtual file system) API in the kernel, which hands it to FUSE (the kernel pseudo file system).

FUSE forwards the request to the GlusterFS (GFS) client process through the virtual device file /dev/fuse. The GFS client processes the data according to its configuration file and sends it to the GFS server over a TCP, InfiniBand (IB), or RDMA network, and the server writes the data to its storage device.

Basic volumes and composite volumes

Basic volumes:

(1) distribute volume: distributed volume

(2) stripe volume: striped volume

(3) replica volume: replicated volume

Composite volumes:

(4) distribute stripe volume: distributed striped volume

(5) distribute replica volume: distributed replicated volume

(6) stripe replica volume: striped replicated volume

(7) distribute stripe replica volume: distributed striped replicated volume

Distributed volume (the default): files are spread across all brick servers by a hash algorithm, and each file is stored whole on exactly one brick. This volume type is the foundation of GlusterFS. Hashing files onto different bricks only expands disk space: if a disk fails, the files on it are lost. It is a file-level RAID 0 with no fault tolerance.
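To make the placement rule concrete, here is a toy sketch that maps each file name to one brick with a hash. It is only an illustration under stated assumptions: `pick_brick` is a hypothetical helper, and `cksum` modulo the brick count stands in for GlusterFS's real hashing scheme, which is different.

```shell
#!/bin/bash
# Illustration only: GlusterFS's distribute layer uses its own hash function
# and per-directory hash ranges, not cksum modulo N. The shared idea is that
# the file name alone decides which single brick stores the whole file.

# pick_brick NAME BRICK... : hypothetical helper, prints the brick chosen for NAME
pick_brick() {
    local name=$1
    shift
    local bricks=("$@")
    local hash
    # cksum prints "<checksum> <byte count>"; keep only the checksum
    hash=$(printf '%s' "$name" | cksum | cut -d' ' -f1)
    echo "${bricks[hash % ${#bricks[@]}]}"
}

for f in demo1.log demo2.log demo3.log demo4.log demo5.log; do
    echo "$f -> $(pick_brick "$f" node1:/e6 node2:/e6)"
done
```

The same name always lands on the same brick, and losing that brick loses the whole file, which is why this layout has no fault tolerance.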

Striped volume: similar to RAID 0, files are split into data blocks that are distributed to multiple brick servers in round-robin order. Storage is organized by data block, so large files are supported, and the larger the file, the higher the read efficiency. Like RAID 0, it has no redundancy.

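For example, on a two-brick striped volume a 100 MB file is stored as roughly 50 MB on each brick (100 = 50 + 50).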
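The 50 + 50 split can be checked with a small arithmetic sketch. It assumes a simplified model in which the file is cut into fixed-size blocks dealt round-robin to the bricks; `stripe_share` is a hypothetical helper and the 128 KiB block size is an assumed value, not one taken from this setup.

```shell
#!/bin/bash
# Simplified striping model (an assumption, not GlusterFS's exact on-disk layout):
# the file is cut into BLOCK-byte pieces that are dealt round-robin to the bricks.

# stripe_share SIZE BRICKS BLOCK INDEX : bytes stored on brick INDEX (0-based)
stripe_share() {
    local size=$1 bricks=$2 block=$3 index=$4
    local full=$(( size / block ))          # number of complete blocks
    local rest=$(( size % block ))          # bytes in the trailing partial block
    local share=$(( full / bricks * block ))
    local extra=$(( full % bricks ))        # leftover complete blocks go to bricks 0..extra-1
    if (( index < extra )); then
        share=$(( share + block ))
    fi
    if (( index == extra && rest > 0 )); then
        share=$(( share + rest ))           # the partial block follows the last complete one
    fi
    echo "$share"
}

# A 100 MiB file on a 2-brick striped volume with an assumed 128 KiB block size
for i in 0 1; do
    echo "brick $i: $(stripe_share $((100 * 1024 * 1024)) 2 $((128 * 1024)) "$i") bytes"
done
```

Both lines report 52428800 bytes (50 MiB), matching the 50 + 50 split. Because each brick holds only part of every file, losing either brick makes every file unreadable.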

Distributed striped volume: the number of brick servers must be a multiple of the stripe count (the number of bricks a file's data blocks are spread across). It combines the characteristics of distributed and striped volumes.

Distributed replicated volume: the number of brick servers must be a multiple of the replica count (the number of copies of the data). It combines the characteristics of distributed and replicated volumes.
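The multiple-of rule can be expressed as a quick check. The sketch below uses a hypothetical helper, `group_bricks`, that rejects a brick list whose length is not a multiple of the stripe or replica count and prints how consecutive bricks form sets, which mirrors how GlusterFS groups bricks in the order they are listed on the command line.

```shell
#!/bin/bash
# group_bricks COUNT BRICK... : hypothetical helper. Fails unless the number of
# bricks is a multiple of COUNT (the stripe or replica count), then prints
# each set of COUNT consecutive bricks in the order given.
group_bricks() {
    local count=$1
    shift
    local n=$#
    if (( count <= 0 || n == 0 || n % count != 0 )); then
        echo "error: brick count $n is not a multiple of $count" >&2
        return 1
    fi
    local bricks=("$@")
    local i
    for (( i = 0; i < n; i += count )); do
        echo "set $(( i / count + 1 )): ${bricks[*]:i:count}"
    done
}

group_bricks 2 node1:/c4 node2:/c4 node3:/c4 node4:/c4
```

For a replica count of 2 over node1 to node4, this prints node1/node2 as the first set and node3/node4 as the second, so each file has one copy on each node of its set.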

Experiment: GlusterFS cluster deployment

Step 1: experimental environment

Add four hard disks to each of the four servers and make sure the servers can reach one another over the network.

Step 2: prepare the environment by partitioning and formatting the new disks on all four servers

```
vim /opt/disk.sh
```

```bash
#!/bin/bash
echo "Existing disks:"
## List the disks in the system (fdisk prints "Disk /dev/sdX: ...")
fdisk -l | grep 'Disk /dev/sd[a-z]'
echo "=================================================="
PS3="Choose the disk to partition: "
## Offer the data disks (sdb-sdz) plus "quit" as a numbered menu
select VAR in $(ls /dev/sd* | grep -o 'sd[b-z]' | uniq) quit
do
    case $VAR in
    sda)
        ## The local system disk: only show it, then leave the menu
        fdisk -l /dev/sda
        break ;;
    sd[b-z])
        ## Create one primary partition with the default number, start, and end:
        ## n = new, p = primary, three empty lines accept the defaults, w = write
        printf 'n\np\n\n\n\nw\n' | fdisk /dev/$VAR
        ## Format the new partition as XFS
        mkfs.xfs -i size=512 /dev/${VAR}1 &> /dev/null
        ## Create the mount point
        mkdir -p /data/${VAR}1 &> /dev/null
        ## Add a permanent mount entry to /etc/fstab
        echo "/dev/${VAR}1 /data/${VAR}1 xfs defaults 0 0" >> /etc/fstab
        ## Mount everything listed in /etc/fstab now
        mount -a &> /dev/null
        break ;;
    quit)
        break ;;
    *)
        echo "Wrong disk, please check again" ;;
    esac
done
```

```
chmod +x /opt/disk.sh
bash -x /opt/disk.sh
```

Each run partitions, formats, and mounts one selected disk, so run the script once for every new disk.

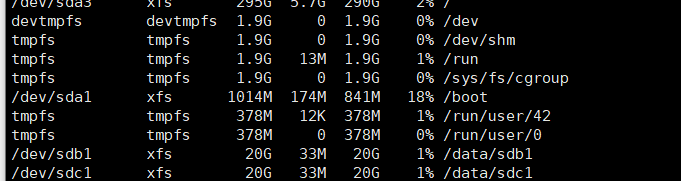

Check whether the mounts have completed:

```
df -Th
```

Step 3: set the host names and configure the hosts file

Append the cluster's address entries to /etc/hosts:

```
echo "192.168.17.10 node1" >> /etc/hosts
echo "192.168.17.20 node2" >> /etc/hosts
echo "192.168.17.30 node3" >> /etc/hosts
echo "192.168.17.40 node4" >> /etc/hosts
echo "192.168.17.50 client" >> /etc/hosts
```
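If this step is ever repeated, appending blindly leaves duplicate lines in /etc/hosts. A minimal sketch of an idempotent alternative: `add_hosts` is a hypothetical helper that appends an entry only when an identical line is not already in the file.

```shell
#!/bin/bash
# add_hosts FILE ENTRY... : hypothetical helper. Appends each "IP name" entry
# to FILE unless an identical line already exists, so re-running adds no duplicates.
add_hosts() {
    local file=$1 entry
    shift
    for entry in "$@"; do
        # -q quiet, -x match the whole line, -F treat the entry as a fixed string
        grep -qxF -- "$entry" "$file" 2>/dev/null || printf '%s\n' "$entry" >> "$file"
    done
}

# Usage: add_hosts /etc/hosts "192.168.17.10 node1" "192.168.17.20 node2" \
#     "192.168.17.30 node3" "192.168.17.40 node4" "192.168.17.50 client"
```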

Step 4: install and start GlusterFS

First configure the yum repository (a local repository in /opt/gfsrepo):

```
cd /etc/yum.repos.d/
mkdir repo.bak          # back up the existing repository files
mv *.repo repo.bak
vim /etc/yum.repos.d/glfs.repo
```

Contents of glfs.repo:

```
[glfs]
name=glfs
baseurl=file:///opt/gfsrepo
gpgcheck=0
enabled=1
```

```
yum clean all && yum makecache
```
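Instead of editing glfs.repo in vim, the same file can be written non-interactively, which is handy when preparing several servers. `write_glfs_repo` is a hypothetical helper; it writes exactly the repository definition shown above.

```shell
#!/bin/bash
# write_glfs_repo FILE : hypothetical helper that writes the local GlusterFS
# repository definition to FILE without opening an editor.
write_glfs_repo() {
    cat > "$1" <<'EOF'
[glfs]
name=glfs
baseurl=file:///opt/gfsrepo
gpgcheck=0
enabled=1
EOF
}

# Usage: write_glfs_repo /etc/yum.repos.d/glfs.repo
```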

Install the GlusterFS packages and start the management daemon:

```
yum -y install centos-release-gluster
yum -y install glusterfs glusterfs-server glusterfs-fuse glusterfs-rdma
systemctl start glusterd.service
```

Step 5: create the GlusterFS cluster

```
[root@node1 yum.repos.d]# gluster peer probe node1
peer probe: success. Probe on localhost not needed
[root@node1 yum.repos.d]# gluster peer probe node2
peer probe: success.
[root@node1 yum.repos.d]# gluster peer probe node3
peer probe: success.
[root@node1 yum.repos.d]# gluster peer probe node4
peer probe: success.
[root@node1 yum.repos.d]# gluster peer status
```

The other three nodes are handled the same way, so the steps are not repeated here.
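Because only the node name changes between probes, they can be run in a loop. A sketch with a hypothetical `probe_peers` helper; the `GLUSTER` variable is an assumption added so that `GLUSTER=echo` prints the commands for a dry run instead of executing them, and is not a gluster feature.

```shell
#!/bin/bash
# probe_peers NODE... : hypothetical helper that adds each node to the trusted
# storage pool from the current node, stopping at the first failure.
# GLUSTER defaults to the real gluster CLI; set GLUSTER=echo for a dry run.
probe_peers() {
    local node
    for node in "$@"; do
        "${GLUSTER:-gluster}" peer probe "$node" || return 1
    done
}

# Usage on node1: probe_peers node2 node3 node4 && gluster peer status
```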

Step 6: create the volumes

1. Create a distributed volume

```
[root@node4 yum.repos.d]# gluster volume create dis-volume node1:/e6 node2:/e6 force    # create the distributed volume
volume create: dis-volume: success: please start the volume to access data
[root@node4 yum.repos.d]# gluster volume info dis-volume    # view the volume information
[root@node4 yum.repos.d]# gluster volume start dis-volume    # start the volume
volume start: dis-volume: success
```

2. Create a striped volume

```
[root@node1 yum.repos.d]# gluster volume create stripe-volume stripe 2 node1:/d5 node2:/d5 force
volume create: stripe-volume: success: please start the volume to access data
[root@node1 yum.repos.d]# gluster volume info stripe-volume
[root@node1 yum.repos.d]# gluster volume start stripe-volume
volume start: stripe-volume: success
```

3. Create a replicated volume

```
[root@node1 yum.repos.d]# gluster volume create rep-volume replica 2 node3:/d5 node4:/d5 force
volume create: rep-volume: success: please start the volume to access data
[root@node1 yum.repos.d]# gluster volume info rep-volume
[root@node1 yum.repos.d]# gluster volume start rep-volume
volume start: rep-volume: success
```

4. Create a distributed striped volume

```
[root@node1 yum.repos.d]# gluster volume create dis-stripe stripe 2 node1:/b3 node2:/b3 node3:/b3 node4:/b3 force
volume create: dis-stripe: success: please start the volume to access data
[root@node1 yum.repos.d]# gluster volume info dis-stripe
[root@node1 yum.repos.d]# gluster volume start dis-stripe
```

5. Create a distributed replicated volume

```
[root@node1 yum.repos.d]# gluster volume create dis-rep replica 2 node1:/c4 node2:/c4 node3:/c4 node4:/c4 force
volume create: dis-rep: success: please start the volume to access data
[root@node1 yum.repos.d]# gluster volume info dis-rep
[root@node1 yum.repos.d]# gluster volume start dis-rep
volume start: dis-rep: success
```
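All five volumes follow the same create-then-start pattern, so it can be wrapped in one function. `make_volume` is a hypothetical helper that passes the type options and bricks straight through to `gluster volume create ... force` and then starts the volume; `GLUSTER` is an assumed override for dry runs with `echo`.

```shell
#!/bin/bash
# make_volume NAME [TYPE COUNT] BRICK... : hypothetical helper. Creates the volume
# with "force" (as in the commands above) and starts it only if creation succeeded.
# GLUSTER defaults to the real gluster CLI; set GLUSTER=echo for a dry run.
make_volume() {
    local name=$1
    shift
    "${GLUSTER:-gluster}" volume create "$name" "$@" force || return 1
    "${GLUSTER:-gluster}" volume start "$name"
}

# Usage: make_volume dis-rep replica 2 node1:/c4 node2:/c4 node3:/c4 node4:/c4
```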

Step 7: deploy the client and test

On the client, configure the yum repository and install the software in the same way as on the servers. Then create the mount points:

```
mkdir -p /test/{dis,stripe,rep,dis_stripe,dis_rep}
```

Configure the hosts file in the same way as in Step 3.

Mount the volumes temporarily:

```
mount.glusterfs node1:dis-volume /test/dis
mount.glusterfs node1:stripe-volume /test/stripe
mount.glusterfs node1:rep-volume /test/rep
mount.glusterfs node1:dis-stripe /test/dis_stripe
mount.glusterfs node1:dis-rep /test/dis_rep
```
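The five mounts differ only in volume name and directory. A sketch with a hypothetical `mount_volumes` helper that takes `VOLUME=DIR` pairs; `MOUNT_CMD` is an assumed override that allows a dry run with `echo`.

```shell
#!/bin/bash
# mount_volumes SERVER VOLUME=DIR... : hypothetical helper that mounts each
# GlusterFS volume from SERVER on its directory, stopping at the first failure.
# MOUNT_CMD defaults to mount.glusterfs; set MOUNT_CMD=echo for a dry run.
mount_volumes() {
    local server=$1 pair
    shift
    for pair in "$@"; do
        # "dis-volume=/test/dis" -> volume "dis-volume", directory "/test/dis"
        "${MOUNT_CMD:-mount.glusterfs}" "$server:${pair%%=*}" "${pair#*=}" || return 1
    done
}

# Usage: mount_volumes node1 dis-volume=/test/dis stripe-volume=/test/stripe \
#     rep-volume=/test/rep dis-stripe=/test/dis_stripe dis-rep=/test/dis_rep
```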

Write the test files:

```
[root@client yum.repos.d]# dd if=/dev/zero of=/demo1.log bs=1M count=40
40+0 records in
40+0 records out
41943040 bytes (42 MB) copied, 0.103023 s, 407 MB/s
[root@client yum.repos.d]# dd if=/dev/zero of=/demo2.log bs=1M count=40
40+0 records in
40+0 records out
41943040 bytes (42 MB) copied, 0.434207 s, 96.6 MB/s
[root@client yum.repos.d]# dd if=/dev/zero of=/demo3.log bs=1M count=40
40+0 records in
40+0 records out
41943040 bytes (42 MB) copied, 0.388875 s, 108 MB/s
[root@client yum.repos.d]# dd if=/dev/zero of=/demo4.log bs=1M count=40
40+0 records in
40+0 records out
41943040 bytes (42 MB) copied, 0.465817 s, 90.0 MB/s
[root@client yum.repos.d]# dd if=/dev/zero of=/demo5.log bs=1M count=40
40+0 records in
40+0 records out
41943040 bytes (42 MB) copied, 0.437829 s, 95.8 MB/s
```
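The listings in the next step show demo1.log to demo5.log inside the client mount points, so the files written to / were presumably copied into each mounted volume; the command for that step does not appear above. A minimal sketch of the assumed copy step, using a hypothetical `copy_demo_files` helper:

```shell
#!/bin/bash
# copy_demo_files SRC DIR... : hypothetical helper that copies demo1.log to
# demo5.log from SRC into each DIR, stopping at the first failed copy.
copy_demo_files() {
    local src=$1 dir
    shift
    for dir in "$@"; do
        cp "$src"/demo{1..5}.log "$dir"/ || return 1
    done
}

# Usage (assumed step): copy_demo_files / /test/dis /test/stripe /test/rep /test/dis_stripe /test/dis_rep
```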

Check the files and test fault tolerance

Shut down node2, then check on the client whether the files are still intact.

Distributed volume:

```
-rw-r--r--. 1 root root 41943040 2 June 17 22:55 demo1.log
-rw-r--r--. 1 root root 41943040 2 June 17 22:55 demo2.log
-rw-r--r--. 1 root root 41943040 2 June 17 22:55 demo3.log
-rw-r--r--. 1 root root 41943040 2 June 17 22:55 demo4.log
drwx------. 2 root root 4096 2 July 17-20:52 lost+found
```

demo5.log, which was stored on node2, has disappeared.

Striped volume:

```
[root@client stripe]# ll
total 0
```

Because each file's data is split across node1 and node2, all the files disappear once node2 is powered off.

Distributed striped volume:

```
[root@client dis_and_stripe]# ll
total 40964
-rw-r--r--. 1 root root 41943040 2 June 17 22:55 demo5.log
```

Only demo5.log is still visible: it was distributed to the stripe set on node3 and node4, which is unaffected by node2 going down.

Distributed replicated volume:

```
-rw-r--r--. 1 root root 41943040 2 June 17 22:55 demo1.log
-rw-r--r--. 1 root root 41943040 2 June 17 22:55 demo2.log
-rw-r--r--. 1 root root 41943040 2 June 17 22:55 demo3.log
-rw-r--r--. 1 root root 41943040 2 June 17 22:55 demo4.log
-rw-r--r--. 1 root root 41943040 2 June 17 22:55 demo5.log
drwx------. 2 root root 4096 2 July 17-20:59 lost+found
```

All five files are still present: shutting down node2 has no effect on the distributed replicated volume.
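The checks above can be repeated for every mount point with a small helper. `missing_demo_files` is hypothetical; it prints which of the five test files are absent from a directory, such as a client mount point after node2 has been shut down.

```shell
#!/bin/bash
# missing_demo_files DIR : hypothetical helper that prints each of demo1.log
# to demo5.log that is not present in DIR, one name per line.
missing_demo_files() {
    local dir=$1 i
    for i in 1 2 3 4 5; do
        [ -e "$dir/demo$i.log" ] || echo "demo$i.log"
    done
}

# Usage: for d in /test/dis /test/stripe /test/rep /test/dis_stripe /test/dis_rep; do
#     echo "$d: $(missing_demo_files "$d" | tr '\n' ' ')"; done
```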

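Summary: volumes that keep replicated data are relatively safe. Of the volumes checked above, only the distributed replicated volume kept every file after node2 was shut down.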