1. Introduction to concurrency

Processes and threads

A. A process is one execution of a program in the operating system. It is the system's independent unit of resource allocation and scheduling.

B. A thread is an execution entity within a process and the basic unit of CPU scheduling and dispatch. It is a smaller independently runnable unit than a process.

C. A process can create and destroy multiple threads, and multiple threads in the same process can execute concurrently.

Concurrency and parallelism

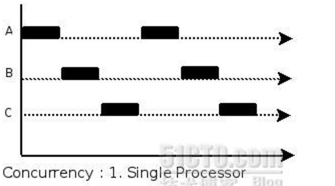

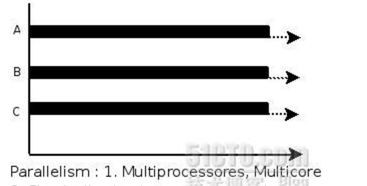

A. A multithreaded program running on a single-core CPU is concurrent.

B. A multithreaded program running on a multi-core CPU is parallel.

Concurrent

parallel

Coroutines and threads

Coroutine: has its own stack and shares the heap. Scheduling is controlled by the user program. In essence a coroutine resembles a user-level thread, whose scheduling is likewise implemented in user space.

Thread: one thread can run multiple coroutines. Coroutines are lightweight threads.

A goroutine can be thought of as an officially implemented super "thread pool".

Each goroutine starts with only a few kilobytes of stack, and the implementation greatly reduces creation and destruction overhead. These are the root reasons Go handles high concurrency well.

Concurrency is not parallelism:

Concurrency achieves the appearance of "simultaneous" execution mainly by switching time slices. Parallelism uses multiple cores directly to run several threads at the same instant. Go lets you set the number of cores in use so that a multi-core machine is fully exploited.

Goroutines follow the principle of sharing memory by communicating, rather than communicating by sharing memory.

Goroutine

When we write concurrent programs in Java or C++, we usually have to maintain a thread pool ourselves, wrap each task, schedule threads to execute the tasks, and handle context switching. All of this costs programmers a great deal of mental effort. Could there be a mechanism where programmers only define tasks and the system assigns them to CPUs for concurrent execution?

The goroutine in Go is exactly such a mechanism. A goroutine is conceptually similar to a thread, but it is scheduled and managed by the Go runtime, which distributes the work in goroutines sensibly across the CPUs. Go is called a modern programming language partly because scheduling and context switching are built in at the language level.

In Go you do not need to write your own processes, threads, or coroutines. There is only one skill in your toolkit: the goroutine. When a task needs to run concurrently, wrap it in a function and start a goroutine to execute that function. It is that simple and direct.

Use goroutine

Using goroutine in go language is very simple. You can create a goroutine for a function by adding the go keyword in front of the function call.

A goroutine must correspond to a function. You can create multiple goroutines to execute the same function.

Start a single goroutine

Starting a goroutine is simple: put the keyword go in front of a function call (the function may be named or anonymous).

For example:

func hello() {

fmt.Println("Hello Goroutine!")

}

func main() {

hello()

fmt.Println("main goroutine done!")

}

In this example, the hello function and the statement after it run serially: the program prints Hello Goroutine! and then main goroutine done!.

Next, we add the keyword go before calling the hello function, that is, start a goroutine to execute the hello function.

func main() {

go hello() // Start another goroutine to execute the hello function

fmt.Println("main goroutine done!")

}

This time the program prints only main goroutine done!, and Hello Goroutine! is not printed. Why?

When the program starts, the Go runtime creates a default goroutine for the main() function.

When main() returns, that goroutine ends and the program exits, so every goroutine that is still running is terminated with it. The main goroutine is like the Night King in Game of Thrones and the other goroutines are the wights: once the Night King dies, all the wights he raised go down with him.

So we need a way to make main wait for hello. The crudest way is time.Sleep.

func main() {

go hello() // Start another goroutine to execute the hello function

fmt.Println("main goroutine done!")

time.Sleep(time.Second)

}

Run the code above and you will find that this time main goroutine done! is printed first, followed by Hello Goroutine!.

Why is main goroutine done! usually printed first? Creating a new goroutine takes some time, and meanwhile the goroutine running main keeps executing.

Start multiple goroutines

Concurrency in Go really is that simple, and we can just as easily start multiple goroutines. Here is another example (sync.WaitGroup is used to synchronize the goroutines):

var wg sync.WaitGroup

func hello(i int) {

defer wg.Done() // Decrement the counter by 1 when the goroutine finishes

fmt.Println("Hello Goroutine!", i)

}

func main() {

for i := 0; i < 10; i++ {

wg.Add(1) // Increment the counter by 1 for each goroutine started

go hello(i)

}

wg.Wait() // Wait for all registered goroutines to finish

}

If you run the code above several times, you will find that the numbers are printed in a different order each time. This is because the 10 goroutines run concurrently and the order in which the scheduler runs them is not deterministic.

Note

- If the main goroutine exits, do the other goroutines keep running? Run the following code to find out:

package main

import (

"fmt"

"time"

)

func main() {

// Define and start the goroutine in one step with an anonymous function

go func() {

i := 0

for {

i++

fmt.Printf("new goroutine: i = %d\n", i)

time.Sleep(time.Second)

}

}()

i := 0

for {

i++

fmt.Printf("main goroutine: i = %d\n", i)

time.Sleep(time.Second)

if i == 2 {

break

}

}

}

1.1.1. goroutine and thread

Scalable stack

OS threads generally have a fixed-size stack (usually 2 MB). A goroutine starts its life with a very small stack (typically 2 KB), and that stack is not fixed: it grows and shrinks as needed. The maximum goroutine stack size can reach 1 GB, although this is rarely needed. This is why creating around 100,000 goroutines at once is feasible in Go.
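To make the claim about cheap goroutines concrete, here is a minimal sketch that starts 100,000 goroutines and waits for all of them. The helper name spawnAndCount is hypothetical, and the mutex and WaitGroup it relies on are covered in later sections. Because each goroutine finishes almost immediately, they are not necessarily all alive at the same instant.

```go
package main

import (
	"fmt"
	"sync"
)

// spawnAndCount (hypothetical helper) starts n goroutines that each add 1
// to a shared counter under a mutex, waits for all of them, and returns
// the final counter value.
func spawnAndCount(n int) int {
	var (
		wg    sync.WaitGroup
		mu    sync.Mutex
		count int
	)
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			mu.Lock()
			count++
			mu.Unlock()
		}()
	}
	wg.Wait()
	return count
}

func main() {
	fmt.Println(spawnAndCount(100000)) // 100000
}
```

Doing the same with one OS thread per task would reserve far more stack memory up front, which is the contrast this section draws.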

goroutine scheduling

GPM is the scheduling system implemented by the Go runtime itself. It is distinct from the operating system's scheduling of OS threads.

- 1. G is a goroutine. It holds the goroutine's own information as well as its binding to a P.

- 2. P manages a queue of goroutines and stores the context in which the current goroutine runs (function pointer, stack address and address bounds). P does some scheduling of the queue it manages, for example pausing a goroutine that has used the CPU for a long time so that later goroutines can run. When its own queue is empty it fetches work from the global queue, and if the global queue is also empty it steals work from the queues of other Ps.

- 3. M (machine) is the Go runtime's abstraction of an operating system kernel thread. M and kernel threads are generally mapped one to one, and a goroutine is ultimately executed on an M.

The number of Ps is set with runtime.GOMAXPROCS (older releases capped it at 256). Since Go 1.5 it defaults to the number of logical CPUs. Under heavy concurrency the runtime may create additional Ms, but not too many: if switching becomes too frequent, the cost outweighs the gain.

In terms of scheduling alone, Go's advantage over other languages is this: OS threads are scheduled by the OS kernel, whereas goroutines are scheduled by the Go runtime's own scheduler, which uses a technique called m:n scheduling (multiplexing m goroutines onto n OS threads). A key feature is that goroutine scheduling happens in user space, so it avoids frequent switches between kernel mode and user mode. The same applies to memory allocation and release: the runtime maintains a large memory pool in user space and does not call the system's malloc directly (unless the pool has to change), so the cost is much lower than scheduling OS threads. At the same time, the scheduler makes full use of multi-core hardware by distributing goroutines roughly evenly across physical threads, and because goroutines themselves are extremely lightweight, scheduling performance stays high.

2. runtime package

2.1.1. runtime.Gosched()

Yields the CPU time slice so that other goroutines can be scheduled; the current goroutine is resumed later. (Roughly: you planned a weekend barbecue, but your mother sends you on a blind date first. Either the date is quick and you are back at the barbecue with little delay, or it drags on and the barbecue is postponed. Either way you still crave it, so you return to the barbecue afterwards.)

package main

import (

"fmt"

"runtime"

)

func main() {

go func(s string) {

for i := 0; i < 2; i++ {

fmt.Println(s)

}

}("world")

// Main goroutine

for i := 0; i < 2; i++ {

// Yield the processor so that other goroutines can run

runtime.Gosched()

fmt.Println("hello")

}

}

2.1.2. runtime.Goexit()

Exits the current goroutine; deferred calls registered before the exit still run. (You are on a blind date in the middle of a barbecue, decide the date is spoiling the barbecue, and end it on the spot. Nothing after that point happens.)

package main

import (

"fmt"

"runtime"

)

func main() {

go func() {

defer fmt.Println("A.defer")

func() {

defer fmt.Println("B.defer")

// Terminate the current goroutine

runtime.Goexit()

defer fmt.Println("C.defer")

fmt.Println("B")

}()

fmt.Println("A")

}()

for {

}

}
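The example above keeps main alive with an empty loop, so the program never exits on its own. As a hedged alternative, the sketch below records the order of events in a slice and waits with sync.WaitGroup instead. The helper name runWithGoexit is hypothetical. It shows that deferred calls registered before runtime.Goexit still run, innermost first, while the statements after it do not.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// runWithGoexit (hypothetical helper) runs a goroutine that calls
// runtime.Goexit inside a nested function and returns the recorded steps.
func runWithGoexit() []string {
	var (
		wg    sync.WaitGroup
		steps []string
	)
	wg.Add(1)
	go func() {
		defer wg.Done() // registered first, so it runs last
		defer func() { steps = append(steps, "A.defer") }()
		func() {
			defer func() { steps = append(steps, "B.defer") }()
			runtime.Goexit()           // terminates this goroutine; registered defers still run
			steps = append(steps, "B") // never reached
		}()
		steps = append(steps, "A") // never reached
	}()
	wg.Wait()
	return steps
}

func main() {
	fmt.Println(runWithGoexit()) // [B.defer A.defer]
}
```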

2.1.3. runtime.GOMAXPROCS

The Go runtime scheduler uses the GOMAXPROCS setting to decide how many OS threads may execute Go code simultaneously. The default is the number of logical CPU cores on the machine. For example, on an 8-core machine the scheduler runs Go code on up to 8 OS threads at once (GOMAXPROCS is the n in m:n scheduling).

In Go you can call the runtime.GOMAXPROCS() function to set the number of logical CPU cores the program uses for concurrent execution.

Before Go 1.5, a single core was used by default. Since Go 1.5, all logical CPU cores are used by default.
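A small sketch of how the setting can be read and changed at run time. runtime.GOMAXPROCS returns the previous value, and calling it with an argument below 1 only queries the current value. The helper name setProcs is hypothetical, and the actual default depends on the machine and environment.

```go
package main

import (
	"fmt"
	"runtime"
)

// setProcs (hypothetical helper) sets GOMAXPROCS to n and returns the
// previous setting together with the setting now in effect.
func setProcs(n int) (prev, now int) {
	prev = runtime.GOMAXPROCS(n) // returns the previous setting
	now = runtime.GOMAXPROCS(0)  // an argument below 1 only queries
	return prev, now
}

func main() {
	fmt.Println("logical CPUs:", runtime.NumCPU())
	prev, now := setProcs(2)
	fmt.Println("before:", prev, "after:", now)
}
```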

We can achieve parallelism by spreading tasks across different logical CPU cores. Here is an example:

func a() {

for i := 1; i < 10; i++ {

fmt.Println("A:", i)

}

}

func b() {

for i := 1; i < 10; i++ {

fmt.Println("B:", i)

}

}

func main() {

runtime.GOMAXPROCS(1)

go a()

go b()

time.Sleep(time.Second)

}

With two tasks and only one logical core, the tasks take turns on a single thread, and in this short example one typically finishes before the other starts. Set the number of logical cores to 2 and the two tasks can execute in parallel. The code is as follows.

func a() {

for i := 1; i < 10; i++ {

fmt.Println("A:", i)

}

}

func b() {

for i := 1; i < 10; i++ {

fmt.Println("B:", i)

}

}

func main() {

runtime.GOMAXPROCS(2)

go a()

go b()

time.Sleep(time.Second)

}

The relationship between operating system threads and goroutine in Go language:

- 1. One operating system thread can run multiple user-space goroutines.

- 2. A Go program can use multiple operating system threads at the same time.

- 3. Goroutines and OS threads are in a many-to-many relationship, i.e. m:n.

3. Channel

3.1.1. channel

Merely running functions concurrently is of limited use. Functions need to exchange data with one another for concurrent execution to be meaningful.

Shared memory can be used for data exchange, but it is prone to race conditions when accessed from different goroutines. To keep the exchange correct, the memory must be protected with a mutex, which inevitably costs performance.

Go's concurrency model is CSP (Communicating Sequential Processes), which advocates sharing memory by communicating rather than communicating by sharing memory.

If goroutines are the concurrently executing bodies of a Go program, channels are the connections between them. A channel is a communication mechanism that lets one goroutine send a value to another goroutine.

A channel in Go is a special type. It works like a conveyor belt or queue: it always follows the first-in, first-out rule, which preserves the order in which data is sent and received. Each channel carries values of one specific type, so when you declare a channel you must specify its element type.

3.1.2. channel type

A channel is a type, specifically a reference type. A channel type is declared in the following form:

var variableName chan elementType

For example:

var ch1 chan int // Declare a channel that passes an integer

var ch2 chan bool // Declare a channel that passes Booleans

var ch3 chan []int // Declare a channel that passes int slices

3.1.3. Create channel

The channel is a reference type, and the zero value of a channel type is nil.

var ch chan int

fmt.Println(ch) // <nil>

A declared channel can be used only after it is initialized with the make function.

The format for creating a channel is:

make(chan elementType, [bufferSize])

The buffer size is optional.

For example:

ch4 := make(chan int)

ch5 := make(chan bool)

ch6 := make(chan []int)

3.1.4. channel operation

The channel has three operations: send, receive and close.

Both sending and receiving use the <- operator.

Now let's define a channel with the following statement:

ch := make(chan int)

Send

Send a value to the channel.

ch <- 10 // Send 10 to ch

Receive

Receive a value from the channel.

x := <-ch // Receive a value from ch and assign it to x

<-ch // Receive a value from ch and discard it

Close

We close the channel by calling the built-in close function.

close(ch)

One thing to note about closing: a channel needs to be closed only when the receiver must be told that the sender has finished sending all its data. Channels are reclaimed by the garbage collector, so unlike a file, which must be closed when you are done with it, closing a channel is not mandatory.

The closed channel has the following characteristics:

1. Sending a value on a closed channel causes a panic.

2. Receiving from a closed channel keeps returning the buffered values until the channel is empty.

3. Receiving from a closed channel that holds no values returns the zero value of the element type.

4. Closing an already closed channel causes a panic.
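The first three characteristics can be checked with a short sketch. The helper names drainClosed and sendPanics are hypothetical, and recover is used only so that the panic can be observed without crashing the program.

```go
package main

import "fmt"

// drainClosed (hypothetical helper) buffers two values, closes the
// channel, and then receives three times.
func drainClosed() (vals []int, oks []bool) {
	ch := make(chan int, 2)
	ch <- 1
	ch <- 2
	close(ch)
	for i := 0; i < 3; i++ {
		v, ok := <-ch // ok turns false once the closed channel is empty
		vals = append(vals, v)
		oks = append(oks, ok)
	}
	return vals, oks
}

// sendPanics (hypothetical helper) reports whether sending on a closed
// channel panicked.
func sendPanics() (panicked bool) {
	defer func() {
		if recover() != nil {
			panicked = true
		}
	}()
	ch := make(chan int, 1)
	close(ch)
	ch <- 1 // panics: send on closed channel
	return false
}

func main() {
	fmt.Println(drainClosed()) // [1 2 0] [true true false]
	fmt.Println(sendPanics())  // true
}
```

The third receive returns 0, the zero value of int, together with ok == false, which is how a receiver can distinguish a real 0 from a closed channel.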

3.1.5. Unbuffered channel

Unbuffered channels are also called blocking channels. Let's look at the following code:

func main() {

ch := make(chan int)

ch <- 10

fmt.Println("Sent successfully")

}

The code above compiles, but the following error occurs at run time:

fatal error: all goroutines are asleep - deadlock!

goroutine 1 [chan send]:

main.main()

.../src/github.com/pprof/studygo/day06/channel02/main.go:8 +0x54

Why is there a deadlock error?

Because ch := make(chan int) creates an unbuffered channel. A send on an unbuffered channel can complete only when a receiver is ready to take the value. It is as if your neighborhood had no parcel locker or collection point: the courier has to call you and hand the parcel to you in person. In short, an unbuffered channel needs a receiver before a send can complete.

The code above blocks on the line ch <- 10, and since no other goroutine exists to receive, the runtime reports a deadlock. How do we solve this?

One way is to start a goroutine that receives the value, for example:

func recv(c chan int) {

ret := <-c

fmt.Println("Received successfully", ret)

}

func main() {

ch := make(chan int)

go recv(ch) // Enable goroutine to receive values from the channel

ch <- 10

fmt.Println("Sent successfully")

}

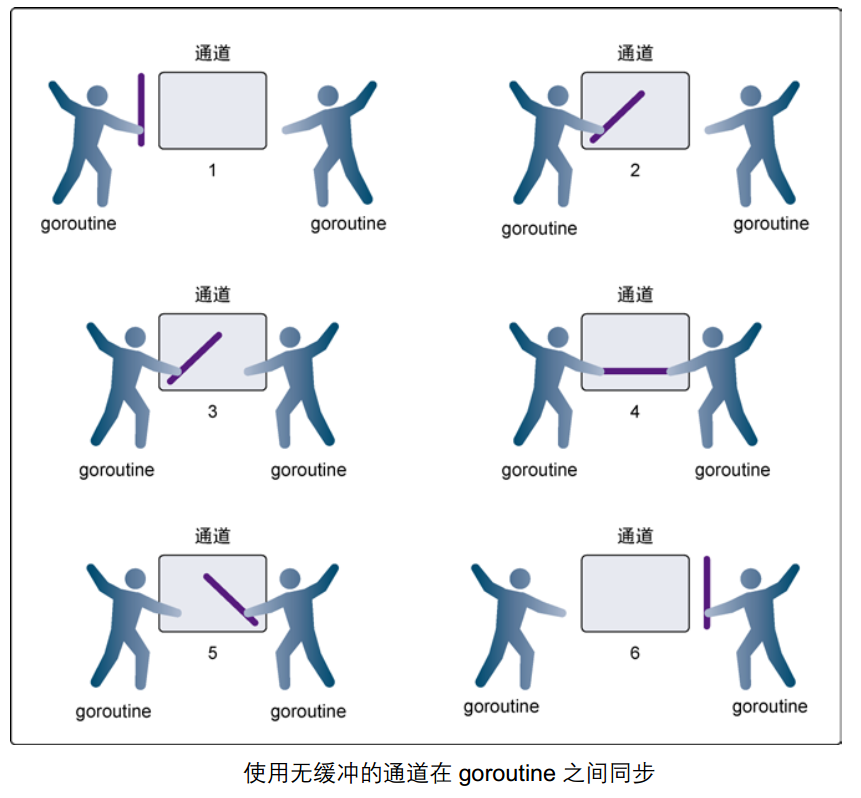

A send on an unbuffered channel blocks until another goroutine performs a receive on that channel. Only then does the send complete, and both goroutines continue. Conversely, if the receive happens first, the receiving goroutine blocks until another goroutine sends a value on the channel.

Communicating over an unbuffered channel therefore synchronizes the sending and receiving goroutines, which is why an unbuffered channel is also called a synchronous channel.

3.1.6. Buffered channel

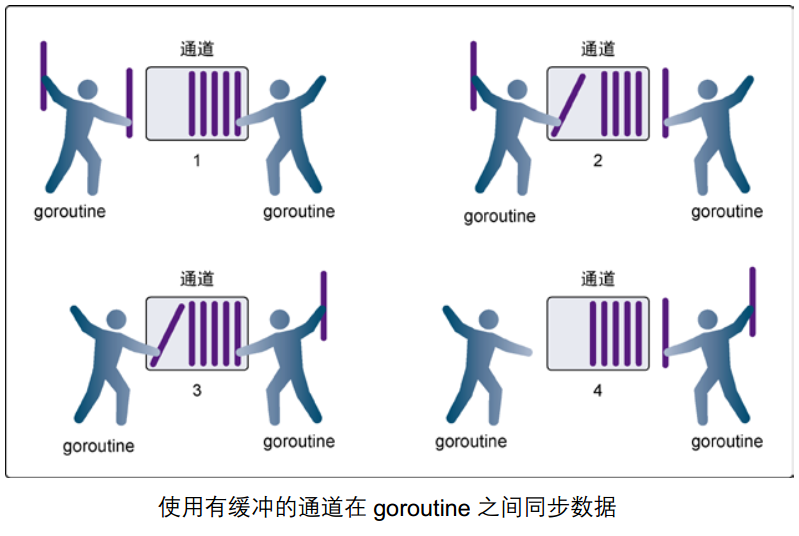

Another way to solve the above problem is to use channels with buffers.

We can specify the capacity of the channel when initializing the channel with the make function, for example:

func main() {

ch := make(chan int, 1) // Create a buffered channel with a capacity of 1

ch <- 10

fmt.Println("Sent successfully")

}

As long as the capacity is greater than zero, the channel is buffered. The capacity is the number of elements the channel can hold. It is like the parcel locker in your neighborhood, which has only so many compartments: when they are all full, nothing more fits and the courier has to wait. Once someone collects a parcel, the courier can put another one in.

We can use the built-in len function to get the number of elements currently in a channel and the cap function to get its capacity, although this is rarely needed.
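A brief sketch of len and cap on a buffered channel, which also shows the first-in, first-out order described earlier. The helper name fillAndInspect is hypothetical.

```go
package main

import "fmt"

// fillAndInspect (hypothetical helper) sends two values into a channel of
// capacity 3, records len and cap at that moment, then drains the channel.
func fillAndInspect() (length, capacity int, order []int) {
	ch := make(chan int, 3)
	ch <- 10
	ch <- 20
	length, capacity = len(ch), cap(ch) // 2 elements queued, room for 3
	close(ch)
	for v := range ch { // values come out in the order they were sent
		order = append(order, v)
	}
	return length, capacity, order
}

func main() {
	fmt.Println(fillAndInspect()) // 2 3 [10 20]
}
```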

3.1.7. close()

You can close a channel with the built-in close() function (once nothing more will be sent on a channel, remember to close it so that receivers stop waiting).

package main

import "fmt"

func main() {

c := make(chan int)

go func() {

for i := 0; i < 5; i++ {

c <- i

}

close(c)

}()

for {

if data, ok := <-c; ok {

fmt.Println(data)

} else {

break

}

}

fmt.Println("main end")

}

3.1.8. How to get values from the channel loop gracefully

When only a finite amount of data is sent over a channel, we can close it with close to tell the goroutines receiving from it to stop waiting. Once a channel is closed, sending on it panics, and receiving from it (after any buffered values are drained) always yields the zero value of the element type. So how can we tell whether a channel has been closed?

Let's look at the following example:

// channel exercise

func main() {

ch1 := make(chan int)

ch2 := make(chan int)

// Start a goroutine that sends the numbers 0 through 99 to ch1

go func() {

for i := 0; i < 100; i++ {

ch1 <- i

}

close(ch1)

}()

// Start a goroutine that receives values from ch1 and sends their squares to ch2

go func() {

for {

i, ok := <-ch1 // ok is false once the channel is closed and drained

if !ok {

break

}

ch2 <- i * i

}

close(ch2)

}()

// Receive values from ch2 in the main goroutine and print them

for i := range ch2 { // After the channel is closed, the for range loop will exit

fmt.Println(i)

}

}

The example above shows two ways to detect that a channel has been closed while receiving: the two-value receive (value, ok) and for range. We usually use for range.

3.1.9. Unidirectional channel

Sometimes we pass a channel as a parameter between several task functions, and often we want to restrict how each function uses it, for example allowing a function only to send on the channel or only to receive from it.

Go provides unidirectional channels for this. For example, we can rework the example above as follows:

func counter(out chan<- int) {

for i := 0; i < 100; i++ {

out <- i

}

close(out)

}

func squarer(out chan<- int, in <-chan int) {

for i := range in {

out <- i * i

}

close(out)

}

func printer(in <-chan int) {

for i := range in {

fmt.Println(i)

}

}

func main() {

ch1 := make(chan int)

ch2 := make(chan int)

go counter(ch1)

go squarer(ch2, ch1)

printer(ch2)

}

Here,

1. chan<- int is a send-only channel: values can be sent on it but not received from it;

2. <-chan int is a receive-only channel: values can be received from it but not sent on it.

A bidirectional channel can be converted to a unidirectional one when passed as a function argument or in any assignment, but the reverse conversion is not possible.
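The conversion rule can be illustrated with a small sketch that uses both an argument conversion and an explicit assignment. The function names produce, sum and pipeline are hypothetical, and the commented-out line shows the reverse conversion that the compiler rejects.

```go
package main

import "fmt"

// produce only sends; a receive on out would not compile.
func produce(out chan<- int, n int) {
	for i := 0; i < n; i++ {
		out <- i
	}
	close(out)
}

// sum only receives; a send or close on in would not compile.
func sum(in <-chan int) int {
	total := 0
	for v := range in {
		total += v
	}
	return total
}

// pipeline (hypothetical helper) wires the two functions together.
func pipeline(n int) int {
	ch := make(chan int)         // bidirectional
	go produce(ch, n)            // converted to chan<- int as an argument
	var recvOnly <-chan int = ch // converted to <-chan int by assignment
	// var back chan int = recvOnly // does not compile: no conversion back
	return sum(recvOnly)
}

func main() {
	fmt.Println(pipeline(5)) // 10
}
```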

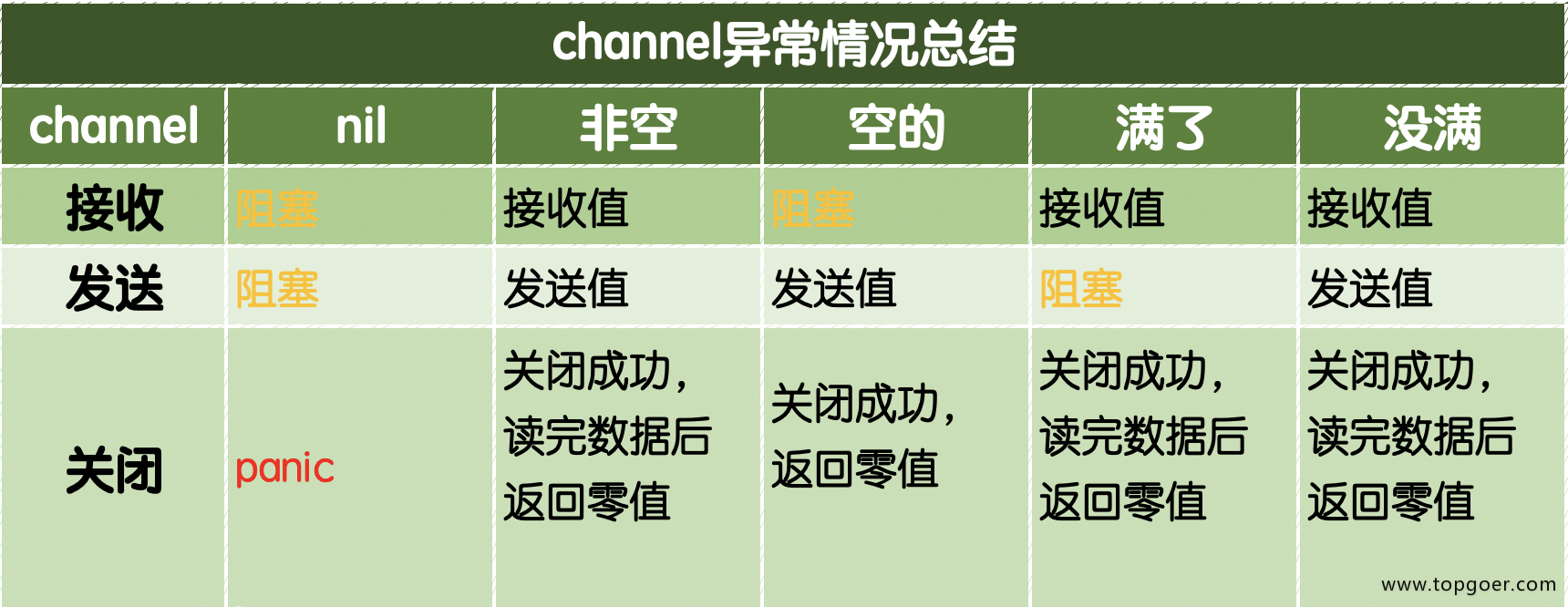

3.1.10. Channel summary

Common channel exceptions are summarized as follows:

Note: closing a closed channel will also cause panic.

4. Timer

4.1.1. timer

- Timer: fires once when the time is up

package main

import (

"fmt"

"time"

)

func main() {

// 1.timer basic usage

//timer1 := time.NewTimer(2 * time.Second)

//t1 := time.Now()

//fmt.Printf("t1:%v\n", t1)

//t2 := <-timer1.C

//fmt.Printf("t2:%v\n", t2)

// 2. Verify that a timer fires only once

//timer2 := time.NewTimer(time.Second)

//for {

// <-timer2.C

// fmt.Println("time is up")

//}

// 3. Use a timer to implement a delay

//(1)

//time.Sleep(time.Second)

//(2)

//timer3 := time.NewTimer(2 * time.Second)

//<-timer3.C

//fmt.Println("2 seconds elapsed")

//(3)

//<-time.After(2*time.Second)

//fmt.Println("2 seconds elapsed")

// 4. Stop timer

//timer4 := time.NewTimer(2 * time.Second)

//go func() {

// <-timer4.C

// fmt.Println("timer executed")

//}()

//b := timer4.Stop()

//if b {

// fmt.Println("timer4 has been closed")

//}

// 5. Reset timer

timer5 := time.NewTimer(3 * time.Second)

timer5.Reset(1 * time.Second)

fmt.Println(time.Now())

fmt.Println(<-timer5.C)

for {

}

}
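Since most of the program above is commented out, here is a compact sketch of the Stop and Reset cases that returns instead of looping forever. The helper names stopBeforeFire and resetFiresEarly are hypothetical, and the durations are arbitrary. Stop reports true when it prevents the timer from firing. Calling Stop before Reset follows the guidance for older Go releases.

```go
package main

import (
	"fmt"
	"time"
)

// stopBeforeFire (hypothetical helper) stops a timer long before it would
// fire and returns what Stop reported.
func stopBeforeFire() bool {
	t := time.NewTimer(time.Hour)
	return t.Stop() // true: the timer was stopped before it fired
}

// resetFiresEarly (hypothetical helper) re-arms a one-hour timer with a
// short duration and reports whether the wait ended well before the hour.
func resetFiresEarly() bool {
	start := time.Now()
	t := time.NewTimer(time.Hour)
	t.Stop()                       // the timer has not fired, so its channel is empty
	t.Reset(50 * time.Millisecond) // re-arm with a much shorter duration
	<-t.C                          // blocks until the re-armed timer fires
	return time.Since(start) < time.Minute
}

func main() {
	fmt.Println(stopBeforeFire())  // true
	fmt.Println(resetFiresEarly()) // true
}
```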

- Ticker: fires repeatedly, once per interval

package main

import (

"fmt"

"time"

)

func main() {

// 1. Get ticker object

ticker := time.NewTicker(1 * time.Second)

i := 0

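done := make(chan struct{})

// Child goroutine

go func() {

for {

i++

fmt.Println(<-ticker.C)

if i == 5 {

// Stop the ticker and leave the loop: after Stop no more ticks

// arrive, so another receive would block forever

ticker.Stop()

close(done)

return

}

}

}()

// Wait for the goroutine to finish instead of spinning in an empty loop

<-done

}

5. select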

5.1.1. select multiplexing

In some scenarios we need to receive data from several channels at once. A receive on a channel blocks when there is no data to receive. You might write code like the following, polling each channel in turn:

for {

// Attempt to receive a value from ch1

data, ok := <-ch1

// Attempt to receive a value from ch2

data, ok = <-ch2

...

}

This does receive values from multiple channels, but it performs poorly because a blocked receive on one channel holds up the others. For this scenario Go has the built-in select keyword, which can respond to operations on several channels at once.

select is used much like a switch statement: it has a series of case branches and an optional default branch. Each case corresponds to a communication (a receive or a send) on some channel. select waits until the communication of one case can proceed and then executes that case's statements. The general form is:

select {

case <-chan1:

// If chan1 successfully reads the data, the case processing statement will be executed

case chan2 <- 1:

// If the data is successfully written to chan2, the case processing statement is executed

default:

// If none of the above is successful, enter the default process

}

- select can listen on one or more channels at the same time until one of them is ready

package main

import (

"fmt"

"time"

)

func test1(ch chan string) {

time.Sleep(time.Second * 5)

ch <- "test1"

}

func test2(ch chan string) {

time.Sleep(time.Second * 2)

ch <- "test2"

}

func main() {

// Two channels

output1 := make(chan string)

output2 := make(chan string)

// Start two goroutines that write data

go test1(output1)

go test2(output2)

// Monitor with select

select {

case s1 := <-output1:

fmt.Println("s1=", s1)

case s2 := <-output2:

fmt.Println("s2=", s2)

}

}

- If several channels are ready at the same time, select picks one of them at random

package main

import (

"fmt"

)

func main() {

// Create two channels

int_chan := make(chan int, 1)

string_chan := make(chan string, 1)

go func() {

//time.Sleep(2 * time.Second)

int_chan <- 1

}()

go func() {

string_chan <- "hello"

}()

select {

case value := <-int_chan:

fmt.Println("int:", value)

case value := <-string_chan:

fmt.Println("string:", value)

}

fmt.Println("main end")

}

- select can be used to check whether a channel is full

package main

import (

"fmt"

"time"

)

// Check whether the channel is full

func main() {

// Create the channel

output1 := make(chan string, 10)

// Start a goroutine that writes data

go write(output1)

// Fetch data

for s := range output1 {

fmt.Println("res:", s)

time.Sleep(time.Second)

}

}

func write(ch chan string) {

for {

select {

// Write data

case ch <- "hello":

fmt.Println("write hello")

default:

fmt.Println("channel full")

}

time.Sleep(time.Millisecond * 500)

}

}

6. Concurrency safety and locks

Sometimes several goroutines in Go code operate on the same resource (a critical section) at the same time, which leads to a race condition (data race). Real-life analogies: an intersection is contended for by cars from every direction, and the toilet on a train is contended for by the passengers in the carriage.

For example:

var x int64

var wg sync.WaitGroup

func add() {

for i := 0; i < 5000; i++ {

x = x + 1

}

wg.Done()

}

func main() {

wg.Add(2)

go add()

go add()

wg.Wait()

fmt.Println(x)

}

In the code above we start two goroutines that each increment the variable x. When both goroutines read and modify x there is a data race, so the final result may differ from the expected 10000.

6.1.1. Mutex

A mutex is a common way to control access to a shared resource: it guarantees that only one goroutine accesses the resource at a time. Go implements mutual exclusion with the Mutex type in the sync package. Using a mutex to fix the code above:

var x int64

var wg sync.WaitGroup

var lock sync.Mutex

func add() {

for i := 0; i < 5000; i++ {

lock.Lock() // Lock

x = x + 1

lock.Unlock() // Unlock

}

wg.Done()

}

func main() {

wg.Add(2)

go add()

go add()

wg.Wait()

fmt.Println(x)

}

A mutex guarantees that only one goroutine is inside the critical section at a time while the others wait for the lock. When the mutex is released, a waiting goroutine can acquire it and enter the critical section. When several goroutines wait for the same lock, which one is woken is not specified.

6.1.2. Read-write mutex

A mutex is fully exclusive, but many real workloads read far more often than they write. When goroutines only read a resource concurrently and do not modify it, exclusive locking is unnecessary, and a read-write lock is the better choice. In Go a read-write lock is the RWMutex type in the sync package.

A read-write lock has two modes: read lock and write lock. Once a goroutine holds the read lock, other goroutines requesting the read lock acquire it immediately, while those requesting the write lock wait. Once a goroutine holds the write lock, other goroutines wait whether they request the read lock or the write lock.

Example of read / write lock:

var (

x int64

wg sync.WaitGroup

lock sync.Mutex

rwlock sync.RWMutex

)

func write() {

// lock.Lock() // Acquire the mutex

rwlock.Lock() // Acquire the write lock

x = x + 1

time.Sleep(10 * time.Millisecond) // Assume the write operation takes 10 milliseconds

rwlock.Unlock() // Release the write lock

// lock.Unlock() // Release the mutex

wg.Done()

}

func read() {

// lock.Lock() // Acquire the mutex

rwlock.RLock() // Acquire the read lock

time.Sleep(time.Millisecond) // Assume the read operation takes 1 millisecond

rwlock.RUnlock() // Release the read lock

// lock.Unlock() // Release the mutex

wg.Done()

}

func main() {

start := time.Now()

for i := 0; i < 10; i++ {

wg.Add(1)

go write()

}

for i := 0; i < 1000; i++ {

wg.Add(1)

go read()

}

wg.Wait()

end := time.Now()

fmt.Println(end.Sub(start))

}

Note that a read-write lock suits read-heavy, write-light workloads. If reads and writes occur at similar rates, its advantage does not show.

7. sync

7.1.1. sync.WaitGroup

Blindly using time.Sleep in code is certainly not appropriate. In Go, sync.WaitGroup can be used to synchronize concurrent tasks. sync.WaitGroup has the following methods:

| Method | Function |
|---|---|
| (wg *WaitGroup) Add(delta int) | Counter + delta |
| (wg *WaitGroup) Done() | Counter - 1 |
| (wg *WaitGroup) Wait() | Block until the counter reaches 0 |

sync.WaitGroup internally maintains a counter whose value can be increased and decreased. For example, when we start N concurrent tasks, we increase the counter value by N. When each task is completed, the counter is decremented by 1 by calling the Done() method. Wait for the execution of concurrent tasks by calling Wait(). When the counter value is 0, it indicates that all concurrent tasks have been completed.

We can use sync.WaitGroup to improve the earlier code:

var wg sync.WaitGroup

func hello() {

defer wg.Done()

fmt.Println("Hello Goroutine!")

}

func main() {

wg.Add(1)

go hello() // Start another goroutine to execute the hello function

fmt.Println("main goroutine done!")

wg.Wait()

}

Note that sync.WaitGroup is a struct, so pass a pointer to it when handing it to a function.
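A minimal sketch of passing the WaitGroup by pointer. The function names worker and runWorkers are hypothetical. If worker took a sync.WaitGroup by value, Done would decrement a copy and Wait in the caller would never return.

```go
package main

import (
	"fmt"
	"sync"
)

// worker receives the WaitGroup by pointer so that Done acts on the
// caller's counter rather than on a copy.
func worker(id int, wg *sync.WaitGroup, results []int) {
	defer wg.Done()
	results[id] = id * id // each goroutine writes only its own slot
}

// runWorkers (hypothetical helper) starts n workers and waits for them.
func runWorkers(n int) []int {
	var wg sync.WaitGroup
	results := make([]int, n)
	for i := 0; i < n; i++ {
		wg.Add(1)
		go worker(i, &wg, results)
	}
	wg.Wait()
	return results
}

func main() {
	fmt.Println(runWorkers(5)) // [0 1 4 9 16]
}
```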

7.1.2. sync.Once

A note up front: this is an advanced topic.

In many programs we need to guarantee that an operation runs only once even under high concurrency, such as loading a configuration file only once or closing a channel only once.

The sync package provides a solution for such run-once scenarios: sync.Once.

sync.Once has a single method, Do, with the following signature:

func (o *Once) Do(f func())

Note: if the function f needs parameters, wrap the call in a closure.
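As a hedged illustration of the closure note, the sketch below wraps an initializer that takes an argument and then calls it from many goroutines. The names loadConfig, getConfig and callConcurrently, and the path "app.conf", are all hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

var (
	once   sync.Once
	config string
	loads  int // how many times loadConfig actually ran
)

// loadConfig is a hypothetical initializer that needs an argument.
func loadConfig(path string) {
	loads++
	config = "loaded from " + path
}

// getConfig wraps loadConfig in a closure so that Do receives a func().
func getConfig(path string) string {
	once.Do(func() { loadConfig(path) })
	return config
}

// callConcurrently (hypothetical helper) calls getConfig from n goroutines
// and returns how many times loadConfig ran.
func callConcurrently(n int) int {
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			getConfig("app.conf")
		}()
	}
	wg.Wait()
	return loads
}

func main() {
	fmt.Println(callConcurrently(100)) // 1
	fmt.Println(getConfig("app.conf")) // loaded from app.conf
}
```

Only the first call to Do runs the closure; later calls, including ones with a different path, return without running it again.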

Configuration loading example

Deferring an expensive initialization until it is actually needed is good practice. Initializing a variable up front (for example in an init function) lengthens program startup, and if the variable is never used during execution the initialization was unnecessary. Consider this example:

var icons map[string]image.Image

func loadIcons() {

icons = map[string]image.Image{

"left": loadIcon("left.png"),

"up": loadIcon("up.png"),

"right": loadIcon("right.png"),

"down": loadIcon("down.png"),

}

}

// Icon is not concurrency-safe when called by multiple goroutines

func Icon(name string) image.Image {

if icons == nil {

loadIcons()

}

return icons[name]

}

Calling Icon concurrently from multiple goroutines is not safe. Modern compilers and CPUs may freely reorder memory accesses as long as each goroutine, viewed on its own, still behaves sequentially consistently. The loadIcons function might be reordered into the following:

func loadIcons() {

icons = make(map[string]image.Image)

icons["left"] = loadIcon("left.png")

icons["up"] = loadIcon("up.png")

icons["right"] = loadIcon("right.png")

icons["down"] = loadIcon("down.png")

}

In this case, finding icons to be non-nil does not mean that initialization has finished. The remedy that comes to mind is a mutex that keeps other goroutines away from icons while it is being initialized, but that introduces a performance cost.

The example rewritten with sync.Once:

var icons map[string]image.Image

var loadIconsOnce sync.Once

func loadIcons() {

icons = map[string]image.Image{

"left": loadIcon("left.png"),

"up": loadIcon("up.png"),

"right": loadIcon("right.png"),

"down": loadIcon("down.png"),

}

}

// Icon is concurrency safe

func Icon(name string) image.Image {

loadIconsOnce.Do(loadIcons)

return icons[name]

}

Internally sync.Once holds a mutex and a done flag. The mutex protects the flag and the initialized data, and the flag records whether initialization has completed. This design makes the initialization concurrency-safe and guarantees that it does not run more than once.

7.1.3. sync.Map

The built-in map in Go language is not concurrency safe. See the following example:

var m = make(map[string]int)

func get(key string) int {

return m[key]

}

func set(key string, value int) {

m[key] = value

}

func main() {

wg := sync.WaitGroup{}

for i := 0; i < 20; i++ {

wg.Add(1)

go func(n int) {

key := strconv.Itoa(n)

set(key, n)

fmt.Printf("k=:%v,v:=%v\n", key, get(key))

wg.Done()

}(i)

}

wg.Wait()

}

The code above may run without trouble with only a few goroutines. With more concurrency it fails with fatal error: concurrent map writes.

In such cases the map must be locked to make it concurrency-safe, or you can use the ready-made concurrency-safe map in the sync package: sync.Map. Ready-made means it can be used directly, without initializing it with make as the built-in map requires. sync.Map also has built-in methods such as Store, Load, LoadOrStore, Delete and Range.

var m = sync.Map{}

func main() {

wg := sync.WaitGroup{}

for i := 0; i < 20; i++ {

wg.Add(1)

go func(n int) {

key := strconv.Itoa(n)

m.Store(key, n)

value, _ := m.Load(key)

fmt.Printf("k=:%v,v:=%v\n", key, value)

wg.Done()

}(i)

}

wg.Wait()

}
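The example above uses only Store and Load. The following sketch exercises LoadOrStore, Delete and Range on a single goroutine so that the results are deterministic. The helper name mapDemo is hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

// mapDemo (hypothetical helper) exercises LoadOrStore, Delete and Range.
func mapDemo() (first, second interface{}, loaded bool, remaining int) {
	var m sync.Map // the zero value is ready to use; no make required

	first, _ = m.LoadOrStore("a", 1)       // key absent: stores 1 and returns it
	second, loaded = m.LoadOrStore("a", 2) // key present: returns the existing 1

	m.Store("b", 2)
	m.Delete("a")

	// Range calls the function for each entry until it returns false.
	m.Range(func(key, value interface{}) bool {
		remaining++
		return true
	})
	return first, second, loaded, remaining
}

func main() {
	fmt.Println(mapDemo()) // 1 1 true 1
}
```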