Main contents:

- Run programs using goroutines

- Detect and fix race conditions

- Share data using channels

1. Concurrency basics

1.1 Concurrency and parallelism

Concurrency and parallelism are two different concepts:

- Parallelism means that programs run simultaneously at any given instant;

- Concurrency means that programs make progress within the same period of time.

Parallelism is the ability to execute simultaneously at any granularity of time. The simplest form of parallelism is multiple machines processing in parallel.

Concurrency means that multiple requests are executed and processed within a given period of time. It emphasizes how things appear to the outside world; internally, the work may be time-sliced. Concurrency focuses on avoiding blocking. A typical application of concurrency is the time-sharing operating system, which is a concurrent design.

Parallelism is instantaneous, while concurrency is a process; concurrency is about structure, while parallelism is about execution.

- What are the threads and processes of an operating system?

- The process can be regarded as a container containing various resources that the application needs to use and maintain in operation. These resources include, but are not limited to, memory address spaces, handles to files and devices, and threads.

- A thread is a path of execution that the operating system schedules to run the code written in functions.

- Each process contains at least one thread, and the initial thread of each process is called the main thread. Because the main thread is the path of execution of the application itself, the application terminates when the main thread terminates.

- The operating system schedules threads to run on physical processors, while the Go runtime schedules goroutines to run on logical processors.

- Since version 1.5, the Go runtime assigns one logical processor to each available physical processor by default.

- Concurrency in Go refers to the ability to have a function run independently of other functions.

- The concurrent synchronization model of Go comes from a paradigm called Communicating Sequential Processes (CSP).

- CSP is a message-passing model: goroutines synchronize by passing data to one another, rather than by locking the data for synchronized access.
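As a minimal sketch of this message-passing style (the helper name sumTo is hypothetical and not part of the original notes), the goroutine below hands its result to the caller over a channel instead of writing it to a shared variable protected by a lock:

```go
package main

import "fmt"

// sumTo is a hypothetical helper: it computes 0+1+...+(n-1) in a separate
// goroutine and delivers the result over a channel rather than through
// shared memory.
func sumTo(n int) int {
	result := make(chan int) // unbuffered: the send and the receive synchronize

	go func() {
		sum := 0
		for i := 0; i < n; i++ {
			sum += i
		}
		result <- sum // pass the value to whoever is receiving
	}()

	return <-result // blocks until the goroutine sends its result
}

func main() {
	fmt.Println(sumTo(10000))
}
```

The receive in sumTo doubles as the synchronization point, so no lock and no sleep are needed to wait for the goroutine.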

1.2 goroutine

The unit of concurrent execution in Go is called a goroutine. A goroutine is started with the go keyword. Note: the go keyword must be followed by a function or method call, not by any other kind of statement or expression. The return value of the function is discarded.

- Start a goroutine using go + an anonymous function

package main

import (

"runtime"

"time"

)

func main() {

go func() { // go + anonymous function

sum := 0

for i := 0; i < 10000; i++ {

sum += i

}

println(sum)

time.Sleep(1 * time.Second)

}()

// NumGoroutine can return the number of goroutines of the current program

println("NumGoroutine=", runtime.NumGoroutine())

//main goroutine deliberately "sleep" for 5 seconds to prevent it from exiting in advance

time.Sleep(5 * time.Second)

}

//The results are as follows:

NumGoroutine= 2

49995000

- Start a goroutine using go + a named function

package main

import (

"runtime"

"time"

)

func sum() {

sum := 0

for i := 0; i < 10000; i++ {

sum += i

}

println(sum)

time.Sleep(1 * time.Second)

}

func main() {

go sum() // go + named function

// NumGoroutine can return the number of goroutines of the current program

println("NumGoroutine=", runtime.NumGoroutine())

//main goroutine deliberately "sleep" for 5 seconds to prevent it from exiting in advance

time.Sleep(5 * time.Second)

}

Goroutines have the following features:

- The go statement is non-blocking; it does not wait for the goroutine to finish.

- The return value of the function after go is discarded.

- The scheduler does not guarantee the execution order of multiple goroutines.

- There is no parent-child relationship between goroutines; all goroutines are scheduled and executed as equals.

- When a Go program starts, the runtime creates a goroutine for the main function; other goroutines are created whenever a go statement is executed.

- Go does not expose goroutine IDs to the user, so one goroutine cannot explicitly operate on another. However, the runtime package provides functions for querying and configuring goroutine-related settings:

- func GOMAXPROCS

func GOMAXPROCS(n int) int sets the maximum number of CPUs that can execute goroutines simultaneously and returns the previous setting. If n is less than 1, the current setting is not changed, so GOMAXPROCS(0) simply queries the current value. For example:

// Gets the current GOMAXPROCS value

println("GOMAXPROCS=", runtime.GOMAXPROCS(0))

// Set GOMAXPROCS value

runtime.GOMAXPROCS(2)

// Gets the current GOMAXPROCS value

println("GOMAXPROCS=", runtime.GOMAXPROCS(0))

- func Goexit

func Goexit() terminates the goroutine that calls it. Before the goroutine ends, Goexit runs all of the deferred calls registered by that goroutine. Goexit is not a panic, so any recover call in those deferred functions returns nil.

- func Gosched

func Gosched() yields the processor: the current goroutine gives up its turn, is placed back in the run queue, and waits to be scheduled again.
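The behavior of Goexit described above can be sketched as follows. The helper runWithGoexit and the event strings are hypothetical names chosen for illustration; only runtime.Goexit itself comes from the standard library.

```go
package main

import (
	"fmt"
	"runtime"
)

// runWithGoexit is a hypothetical helper: it starts a goroutine that calls
// runtime.Goexit and returns, in order, the events the goroutine recorded.
func runWithGoexit() []string {
	events := make(chan string, 3) // buffered so the goroutine never blocks
	done := make(chan struct{})

	go func() {
		defer close(done) // registered first, so it runs last
		defer func() {
			// Goexit is not a panic, so recover returns nil here.
			if r := recover(); r == nil {
				events <- "deferred, recover() == nil"
			}
		}()
		events <- "before Goexit"
		runtime.Goexit()
		events <- "after Goexit" // never reached
	}()

	<-done // wait until the goroutine has run its deferred calls
	close(events)

	var got []string
	for e := range events {
		got = append(got, e)
	}
	return got
}

func main() {
	fmt.Println(runWithGoexit())
}
```

Only the two events recorded before and during the deferred call are returned; the statement after runtime.Goexit() never runs.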

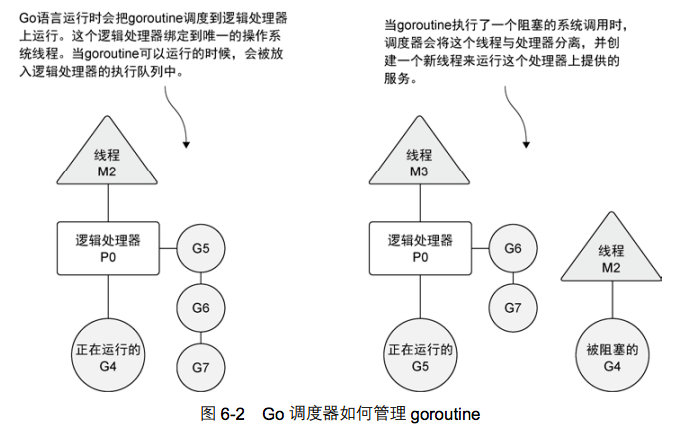

How does the Go scheduler manage goroutines?

- When a goroutine is created and ready to run, it is placed in the scheduler's global run queue. The scheduler then assigns the goroutines in this queue to a logical processor and places them in that logical processor's local run queue. A goroutine in the local run queue waits until it is executed by its assigned logical processor.

- If a goroutine needs to make a network I/O call, the process is somewhat different. In this case, the goroutine is detached from the logical processor and handed to the network poller that is integrated into the runtime. Once the poller indicates that a network read or write operation is ready, the goroutine is assigned back to a logical processor to complete the operation.

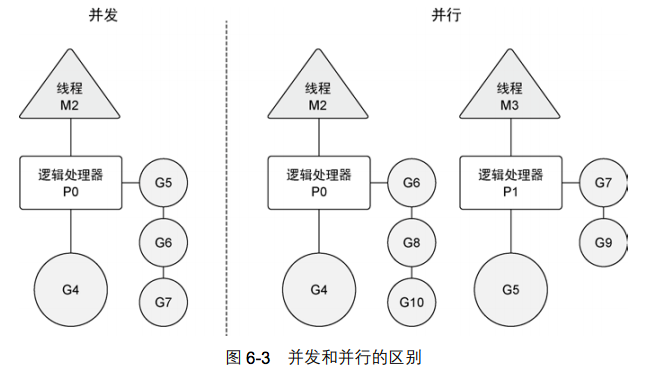

- If you want goroutines to run in parallel, you must use more than one logical processor. When there are multiple logical processors, the scheduler distributes goroutines evenly among them, so that goroutines run on different threads.

- However, to truly achieve parallelism, the program must run on a machine with multiple physical processors. Otherwise, even if the Go runtime uses multiple threads, the goroutines still run concurrently on the same physical processor and do not run in parallel.

- To assign a logical processor to each available core, call runtime.GOMAXPROCS(runtime.NumCPU()).

- Goroutines run in parallel only when there are multiple logical processors and each goroutine can run on an available physical processor at the same time.

- If two or more goroutines access a shared resource without synchronizing with each other and try to read and write the resource at the same time, they compete with each other. This situation is called a race condition.

- Reads and writes of a shared resource must be atomic. In other words, only one goroutine may read or write the shared resource at any one time.

- To make the current goroutine leave its thread and go back into the run queue, call runtime.Gosched().

- Go has a special tool that can detect race conditions in code. It is very useful when looking for such errors, especially when the race is not obvious. To use it, build the program with go build -race.

- One way to change the code and eliminate a race condition is to use the locking mechanisms provided by Go to lock shared resources, so that the goroutines are synchronized.
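To make the race condition described above concrete, here is a deliberately incorrect sketch. The helper racyCount is a hypothetical name, and the call to runtime.Gosched() is there only to make the unlucky interleaving more likely. Building or running it with the -race flag (for example go run -race) should make the race detector report the unsynchronized accesses to counter.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// racyCount is a hypothetical helper that DELIBERATELY contains a data race:
// every goroutine reads and writes counter without any synchronization.
func racyCount(goroutines, increments int) int {
	var (
		counter int
		wg      sync.WaitGroup
	)
	for g := 0; g < goroutines; g++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < increments; i++ {
				value := counter  // unsynchronized read
				runtime.Gosched() // yield so that another goroutine can interleave
				value++
				counter = value // unsynchronized write, may overwrite another update
			}
		}()
	}
	wg.Wait()
	return counter
}

func main() {
	// With 2 goroutines and 2 increments each, the intended result is 4,
	// but updates can be lost, so the printed value may be smaller.
	fmt.Println(racyCount(2, 2))
}
```

The result depends on scheduling, which is exactly why such bugs are hard to find by reading the output alone.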

Locking shared resources

- If you need to serialize access to an integer variable or a piece of code, the functions in the atomic and sync packages provide a good solution.

- Atomic functions synchronize access to integer variables and pointers with a very low-level locking mechanism.

- The AddInt64 function of the atomic package synchronizes the addition of integer values by ensuring that only one goroutine at a time can perform and complete the addition. When goroutines call any atomic function, they are automatically synchronized on the variable being referenced.

- Two other useful atomic functions are LoadInt64 and StoreInt64. These two functions provide a safe way to read and write an integer value.

- Atomic functions synchronize these reads and writes with each other, so the operations are safe and do not cause a race condition.

- Another way to synchronize access to a shared resource is to use a mutex.

- A mutex creates a critical section in the code, ensuring that only one goroutine at a time can execute the code in that section.

- Only one goroutine can be inside the critical section at a time. Other goroutines cannot enter it until Unlock() is called.
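The two approaches above can be sketched side by side. The helper names atomicCount and mutexCount are hypothetical; the calls atomic.AddInt64, atomic.LoadInt64, and sync.Mutex are the standard-library facilities mentioned in the list.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// atomicCount increments a shared int64 with atomic.AddInt64.
func atomicCount(goroutines, increments int) int64 {
	var (
		counter int64
		wg      sync.WaitGroup
	)
	for g := 0; g < goroutines; g++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < increments; i++ {
				atomic.AddInt64(&counter, 1) // safe concurrent increment
			}
		}()
	}
	wg.Wait()
	return atomic.LoadInt64(&counter) // safe read
}

// mutexCount protects the same kind of counter with a sync.Mutex.
func mutexCount(goroutines, increments int) int {
	var (
		counter int
		mu      sync.Mutex
		wg      sync.WaitGroup
	)
	for g := 0; g < goroutines; g++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < increments; i++ {
				mu.Lock() // enter the critical section
				counter++
				mu.Unlock() // leave it so the next goroutine can enter
			}
		}()
	}
	wg.Wait()
	return counter
}

func main() {
	fmt.Println(atomicCount(4, 1000), mutexCount(4, 1000))
}
```

Atomic functions are a good fit for a single integer or pointer; a mutex is the more general choice when the critical section covers several statements or several variables.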

1.3 chan

- chan is a keyword in Go; it is short for channel. Goroutines are the units of concurrent execution in Go, and channels are the key mechanism for communication and synchronization between goroutines.

- The key data type used to synchronize and transfer data between goroutines is called a channel. Using channels makes concurrent programs easier to write and less error-prone.

- When a resource needs to be shared between goroutines, the channel sets up a pipeline between them and provides a mechanism that ensures synchronized data exchange. When declaring a channel, you need to specify the type of data to be shared.

- Channels are typed. A channel type is written chan dataType. Go provides the built-in function make to create channels, as follows:

// Create an unbuffered channel whose element type is dataType

make(chan dataType)

// Create a buffered channel with a capacity of 10

make(chan dataType, 10)

- You can share values or pointers of built-in types, named types, structure types, and reference types through channels.

- Channels are divided into unbuffered channels and buffered channels. Go provides the built-in functions len and cap. For an unbuffered channel, len and cap are both 0. For a buffered channel, len is the number of elements that have not yet been read, and cap is the capacity of the whole channel.

- Unbuffered channels can be used for both communication and synchronization between two goroutines. Buffered channels are mainly used for communication.

- Sending a value or pointer to a channel requires the <- operator.

- An unbuffered channel is a channel that cannot hold any value before it is received. It requires the sending goroutine and the receiving goroutine to be ready at the same time for a send and receive to complete. If the two goroutines are not ready at the same time, the channel makes whichever goroutine performs its send or receive first block and wait. Sending and receiving on such a channel is inherently synchronous: neither operation can happen without the other.

package main

import "runtime"

func main() {

c := make(chan struct{})

go func(done chan struct{}) {

sum := 0

for i := 0; i < 10000; i++ {

sum += i

}

println(sum)

// Send on the channel to signal completion

done <- struct{}{}

}(c)

//NumGoroutine can return the number of goroutines of the current program

println("NumGoroutine=", runtime.NumGoroutine())

//Read channel c and wait synchronously through the read channel

<-c

}

- A buffered channel is a channel that can store one or more values before they are received. This type of channel does not require the goroutines to send and receive at the same time. The conditions under which a send or a receive blocks are also different: a receive blocks only when there is no value in the channel to receive, and a send blocks only when the channel has no free buffer space to hold the value.

- There is a big difference between buffered and unbuffered channels: an unbuffered channel guarantees that the sending and receiving goroutines exchange data at the same moment; a buffered channel gives no such guarantee.

- After a channel is closed, goroutines can still receive data from it but can no longer send data to it. Being able to receive from a closed channel is very important, because it allows all buffered values to be taken out after the channel is closed, without data loss. Receiving from a closed, empty channel always returns immediately with the zero value of the channel's element type.

- Data written to a buffered channel does not disappear when the sending goroutine exits. A buffered channel can absorb differences in the processing rates of two goroutines. It is similar to a message queue and helps smooth out peaks and increase throughput.

package main

import "runtime"

func main() {

c := make(chan struct{})

ci := make(chan int, 10)

go func(done chan struct{}, out chan int) {

for i := 0; i < 10; i++ {

out <- i

}

close(out)

// Send on the channel to signal completion

done <- struct{}{}

}(c, ci)

//NumGoroutine can return the number of goroutines of the current program

println("NumGoroutine=", runtime.NumGoroutine())

//Read channel c and wait synchronously through the read channel

<-c

// At this point ci has been closed and the goroutine started by the anonymous function has finished its work (it may still be in the process of exiting)

println("NumGoroutine=", runtime.NumGoroutine())

// However, the values buffered in ci can still be read

for v := range ci {

println(v)

}

}
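The len and cap behavior described earlier for unbuffered and buffered channels can be checked with a short sketch. The helper bufferState is a hypothetical name; it assumes n does not exceed size, because otherwise the send would block forever with no receiver.

```go
package main

import "fmt"

// bufferState is a hypothetical helper: it sends n values into a channel
// with the given buffer size and reports the channel's len and cap.
// It assumes n <= size, so that no send blocks.
func bufferState(size, n int) (length, capacity int) {
	ch := make(chan int, size)
	for i := 0; i < n; i++ {
		ch <- i // does not block while the buffer has free space
	}
	return len(ch), cap(ch)
}

func main() {
	unbuffered := make(chan int)
	fmt.Println(len(unbuffered), cap(unbuffered)) // 0 0

	length, capacity := bufferState(10, 3)
	fmt.Println(length, capacity) // 3 10
}
```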

- Operating on a chan in different states leads to one of three behaviors:

  - Panic

    - (1) Writing to a closed channel causes a panic. The best practice is for the writer to be the one that closes the channel.

    - (2) Closing a channel that is already closed causes a panic.

  - Blocking

    - (1) Writing to or reading from an uninitialized (nil) channel blocks the current goroutine forever.

    - (2) Writing to a channel whose buffer is full blocks the goroutine.

    - (3) Reading from a channel that contains no data blocks the goroutine.

  - Non-blocking

    - (1) Reading from a closed channel does not block; it immediately returns the zero value of the channel's element type. You can use the comma-ok form to tell whether the channel has been closed.

    - (2) Reading from a buffered channel that is not empty, or writing to a buffered channel that is not full, does not block.

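Two of the behaviors listed above, the non-blocking read from a closed channel and the panic on sending to a closed channel, can be sketched as follows. The helper names readClosed and sendPanics are hypothetical.

```go
package main

import "fmt"

// readClosed is a hypothetical helper: it closes a buffered channel that
// still holds one value, then reads from it twice using the comma-ok form.
func readClosed() (first int, firstOK bool, second int, secondOK bool) {
	ch := make(chan int, 1)
	ch <- 42
	close(ch)

	first, firstOK = <-ch   // the buffered value is still delivered
	second, secondOK = <-ch // closed and drained: zero value, ok is false
	return
}

// sendPanics reports whether sending on a closed channel panicked.
func sendPanics() (panicked bool) {
	defer func() {
		if r := recover(); r != nil {
			panicked = true
		}
	}()
	ch := make(chan int, 1)
	close(ch)
	ch <- 1 // panics: send on closed channel
	return false
}

func main() {
	fmt.Println(readClosed()) // 42 true 0 false
	fmt.Println(sendPanics()) // true
}
```

In real code the panic is avoided by design, for example by letting only the writer close the channel, rather than caught with recover as in this demonstration.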

1.4 WaitGroup

- The sync package provides synchronization mechanisms for multiple goroutines, mainly through WaitGroup.

- WaitGroup is a counting semaphore that can be used to keep track of running goroutines.

- If the value of the WaitGroup counter is greater than 0, the Wait method blocks.

- The defer keyword changes when a function call is executed: a deferred function is called only when the function that is currently executing returns.

- When a goroutine runs for too long, the scheduler stops it and gives other runnable goroutines a chance to run.

The main data structure and methods, as they appear in the Go source code, are as follows (the struct layout shown is from an older Go release and differs in newer versions):

// src/sync/waitgroup.go

// A WaitGroup waits for a collection of goroutines to finish.

// The main goroutine calls Add to set the number of

// goroutines to wait for. Then each of the goroutines

// runs and calls Done when finished. At the same time,

// Wait can be used to block until all goroutines have finished.

//

// A WaitGroup must not be copied after first use.

type WaitGroup struct {

noCopy noCopy

// 64-bit value: high 32 bits are counter, low 32 bits are waiter count.

// 64-bit atomic operations require 64-bit alignment, but 32-bit

// compilers do not ensure it. So we allocate 12 bytes and then use

// the aligned 8 bytes in them as state, and the other 4 as storage

// for the sema.

state1 [3]uint32

}

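// Add adds delta, which may be negative, to the WaitGroup counter

func (wg *WaitGroup) Add(delta int)

// Done decrements the WaitGroup counter by one

func (wg *WaitGroup) Done()

// Wait blocks until the WaitGroup counter is zero

func (wg *WaitGroup) Wait()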

WaitGroup is used to wait for multiple goroutines to complete. The main goroutine calls Add to set the number of goroutines to wait for. When each goroutine finishes, it calls Done(). The main goroutine calls Wait() to block until all goroutines have completed.

package main

import (

"net/http"

"sync"

)

var wg sync.WaitGroup

var urls = []string{

"http://www.baidu.com",

"http://www.bing.com",

"http://www.bilibili.com",

}

func main() {

for _, url := range urls {

//Start a goroutine for each URL and add 1 to wg at the same time

wg.Add(1)

//Launch a goroutine to fetch the URL

go func(url string) {

// When the current goroutine finishes, decrement the wg counter by 1; wg.Done() is equivalent to wg.Add(-1)

defer wg.Done()

// Send an HTTP GET request and print the HTTP status

resp, err := http.Get(url)

if err != nil {

return

}

// Close the response body to release the resources held by the connection

defer resp.Body.Close()

println(resp.Status)

}(url)

}

//Wait for all requests to end

wg.Wait()

}

1.5 select

- select is an I/O multiplexing system call provided by UNIX-like systems. Borrowing the idea of multiplexing, Go provides the select keyword to monitor multiple channels.

- When none of the monitored channels is ready to be read or written, select blocks. As soon as one of them is ready, select stops blocking and enters the branch that handles the ready channel. If several monitored channels are ready at the same time, select picks one of them at random.

package main

func main() {

ch := make(chan int, 1)

go func(chan int) {

for {

select {

// Whether 0 or 1 is written is chosen at random

case ch <- 0:

case ch <- 1:

}

}

}(ch)

for i := 0; i < 10; i++ {

println(<-ch)

}

}

1.6 Fan-in and fan-out

- Fan-in means merging multiple channels into one channel for processing. The simplest way to fan in with Go is to use select to aggregate multiple channels;

- Fan-out means distributing the work from one channel to multiple channels for processing. It is implemented by using the go keyword to start multiple goroutines that process the data concurrently.
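A minimal sketch of both patterns, assuming a trivial squaring workload. The names square, merge, and sumOfSquares are hypothetical and chosen only for this illustration. Two worker goroutines read from one input channel (fan-out), and a select loop merges their output channels into one (fan-in).

```go
package main

import "fmt"

// square is a hypothetical worker: it reads numbers from in and sends their
// squares on its own output channel, closing it once in is drained.
func square(in <-chan int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for n := range in {
			out <- n * n
		}
	}()
	return out
}

// merge fans two channels into one using select. A channel that has been
// closed is set to nil so that its case is never selected again.
func merge(a, b <-chan int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for a != nil || b != nil {
			select {
			case v, ok := <-a:
				if !ok {
					a = nil
					continue
				}
				out <- v
			case v, ok := <-b:
				if !ok {
					b = nil
					continue
				}
				out <- v
			}
		}
	}()
	return out
}

// sumOfSquares fans the inputs out to two workers and fans the results in.
func sumOfSquares(nums []int) int {
	in := make(chan int)
	go func() {
		defer close(in)
		for _, n := range nums {
			in <- n
		}
	}()

	// Fan-out: two goroutines read from the same input channel.
	w1, w2 := square(in), square(in)

	// Fan-in: merge both result channels and accumulate the values.
	total := 0
	for v := range merge(w1, w2) {
		total += v
	}
	return total
}

func main() {
	fmt.Println(sumOfSquares([]int{1, 2, 3, 4})) // 30
}
```

The order in which results arrive is not deterministic, which is why the sketch aggregates them with an order-independent sum.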

1.7 Exit notification mechanism

- Closing a channel that a select is listening on lets the select notice the notification immediately and handle it accordingly. This is the so-called close-channel-to-broadcast mechanism.

- The exit notification mechanism is the basis for learning to use the context library.

package main

import (

"fmt"

"math/rand"

"runtime"

)

// GenerateIntA is a random number generator

func GenerateIntA(done chan struct{}) chan int {

ch := make(chan int)

go func() {

Label:

for {

select {

case ch <- rand.Int():

// Also listen for the exit notification signal on done

case <-done:

break Label

}

}

//Close ch after receiving notification

close(ch)

}()

return ch

}

func main() {

done := make(chan struct{})

ch := GenerateIntA(done)

fmt.Println(<-ch)

fmt.Println(<-ch)

//Send a notification telling the producer to stop the generation

close(done)

fmt.Println(<-ch)

fmt.Println(<-ch)

// The producer has exited or is about to exit

println("NumGoroutine=", runtime.NumGoroutine())

}
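Since the exit notification mechanism is described above as the basis for the context library, here is a hedged sketch of the same producer written with the standard context package. The names generate and firstN are hypothetical, and the producer emits a simple counter instead of random numbers so that the result is predictable.

```go
package main

import (
	"context"
	"fmt"
)

// generate is a hypothetical counterpart of GenerateIntA that listens on
// ctx.Done() instead of a hand-made done channel.
func generate(ctx context.Context) <-chan int {
	ch := make(chan int)
	go func() {
		defer close(ch) // close ch once the producer stops
		for i := 0; ; i++ {
			select {
			case ch <- i:
			case <-ctx.Done(): // this channel is closed when cancel is called
				return
			}
		}
	}()
	return ch
}

// firstN reads n values, cancels the producer, and then drains the channel
// until the producer closes it.
func firstN(n int) []int {
	ctx, cancel := context.WithCancel(context.Background())
	ch := generate(ctx)

	got := make([]int, 0, n)
	for i := 0; i < n; i++ {
		got = append(got, <-ch)
	}

	cancel() // broadcast the exit notification
	for range ch {
		// discard any values sent before the producer noticed the cancellation
	}
	return got
}

func main() {
	fmt.Println(firstN(3)) // [0 1 2]
}
```

Calling cancel closes the channel returned by ctx.Done(), which is the same close-to-broadcast idea as close(done) in the example above.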