Theoretical knowledge points

1. Meaning of a cluster

A cluster is made up of multiple hosts but appears to the outside as a single whole: it provides one access entry point, and the hosts behind it work together.

2. Classification

① Load balancing cluster

② High availability cluster

③ High performance computing cluster

3. Load balancing cluster

Improves the responsiveness of the system: it handles as many access requests as possible with as little delay as possible, to achieve high overall concurrency and load capacity.

The load distribution of an LB (load balancing) cluster depends on the scheduling (traffic-splitting) algorithm, which shares the requests among multiple service nodes.

4. High availability cluster

Improves the reliability of the system and minimizes interruption time. Working modes include duplex (active-active) and master-slave (active-standby): in duplex mode all nodes are online at the same time; in master-slave mode only the master node is online, and when it fails the service switches to a slave node.

5. High performance computing cluster

Improves the computing (CPU) speed of the system, expands hardware resources and analysis capability, and achieves performance comparable to a supercomputer. It relies on distributed and parallel computing, combining the CPU, memory and other resources of many individual servers.

Load balancing cluster architecture

Layer 1: load scheduler, the only entry point for accessing the cluster; it uses the VIP (virtual IP address).

Layer 2: server pool; each node has its own RIP (real IP address).

Layer 3: shared storage, which provides stable and consistent file access for all nodes.

2. Three modes

NAT mode

TUN mode

DR mode

Summary:

LVS is not suitable for small clusters.

The three working modes, NAT, TUN and DR, compare as follows (the modes other than NAT are more complex to configure):

| Real server (node server) | NAT | TUN | DR |
|---|---|---|---|
| Number of nodes | Low (10-20) | High (100) | High (100) |
| Gateway of the real servers | Load scheduler | Own router | Own router |
| IP addresses | Public + private | Public | Private |
| Advantages | High security | High speed | Best performance |
| Disadvantages | Low efficiency, high pressure on the scheduler | Tunnel support required | Cannot cross a LAN |

3. Four load scheduling algorithms

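Round robin (rr): requests are handed to each node in turn.

Weighted round robin (wrr): nodes with a higher weight receive proportionally more requests.

Least connections (lc): each request goes to the node with the fewest active connections.

Weighted least connections (wlc): each request goes to the node with the lowest ratio of connections to weight.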
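The four algorithms can be illustrated with a small bash model. This is a simplified sketch, not the kernel's ip_vs code: the node list, weights and connection counts are hypothetical, wrr here simply repeats each node "weight" times per cycle (the kernel interleaves them), lc and wlc count only active connections, and rr and lc ignore weights.

```bash
#!/usr/bin/env bash
# Simplified model of the four LVS scheduling algorithms (not the kernel implementation).
# NODES, WEIGHTS and CONNS are hypothetical example values.
NODES=(192.168.17.20 192.168.17.30)
WEIGHTS=(1 3)   # weights as they would be set with "ipvsadm -w"
CONNS=(5 9)     # current active connections per node

# rr: request number n (counting from 0) goes to node n mod N; weights are ignored.
rr_pick() {
  local n=$1 count=${#NODES[@]}
  echo "${NODES[$(( n % count ))]}"
}

# wrr: each node appears WEIGHT times in one cycle; weight 0 removes it from the cycle.
wrr_pick() {
  local n=$1 i w cycle=()
  for i in "${!NODES[@]}"; do
    for (( w = 0; w < WEIGHTS[i]; w++ )); do
      cycle+=("${NODES[i]}")
    done
  done
  (( ${#cycle[@]} > 0 )) || return 1   # every node is paused
  echo "${cycle[$(( n % ${#cycle[@]} ))]}"
}

# lc: pick the node with the fewest active connections; weights are ignored.
lc_pick() {
  local i best=0
  for i in "${!NODES[@]}"; do
    if (( CONNS[i] < CONNS[best] )); then
      best=$i
    fi
  done
  echo "${NODES[best]}"
}

# wlc: pick the node with the lowest CONNS/WEIGHT ratio. The ratios are compared by
# cross-multiplying so integer arithmetic is enough; weight 0 nodes are skipped.
wlc_pick() {
  local i best=-1
  for i in "${!NODES[@]}"; do
    (( WEIGHTS[i] > 0 )) || continue
    if (( best < 0 )) || (( CONNS[i] * WEIGHTS[best] < CONNS[best] * WEIGHTS[i] )); then
      best=$i
    fi
  done
  (( best >= 0 )) || return 1
  echo "${NODES[best]}"
}

for n in 0 1 2 3 4; do
  echo "request $n: rr -> $(rr_pick "$n"), wrr -> $(wrr_pick "$n")"
done
echo "lc -> $(lc_pick), wlc -> $(wlc_pick)"
```

With these example values, lc picks 192.168.17.20 (5 connections) while wlc picks 192.168.17.30, because 9 connections at weight 3 is a lower load ratio than 5 connections at weight 1.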

LVS cluster creation and management

1. Create a virtual server

2. Add and delete server nodes

3. View cluster and node status

4. Save load distribution policy

The management tool of LVS is ipvsadm

② Description of ipvsadm options

-A add virtual server

-D delete the entire virtual server

-s specify the load scheduling algorithm (round robin: rr, weighted round robin: wrr, least connections: lc, weighted least connections: wlc)

-a add a real server (node server)

-d delete a node

-t specify the VIP address and TCP port

-r specify RIP address and TCP port

-m indicates that NAT cluster mode is used

-g means DR mode is used

-i means using TUN mode

-w set the weight (when the weight is 0, it means to pause the node)

-p 60 keep a persistent connection for 60 seconds (requests from the same client go to the same node during that time)

-l view LVS virtual server list (view all by default)

-n display addresses, ports and other information numerically; it is often combined with the -l option, as in ipvsadm -ln
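The options above combine into a short sequence for a NAT-mode virtual service. The helper below is a sketch: `run` and the `DRY_RUN` switch are hypothetical conveniences (by default the commands are only printed, not executed), and the addresses are the ones used in the NAT experiment that follows.

```bash
#!/usr/bin/env bash
# Sketch: create a NAT-mode virtual service with the ipvsadm options listed above.
# run() and DRY_RUN are hypothetical helpers: with DRY_RUN=1 (the default) the
# commands are only printed; with DRY_RUN=0 they are executed (root required).

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# setup_nat_service VIP:PORT SCHEDULER RIP:PORT [RIP:PORT ...]
setup_nat_service() {
  local vip=$1 sched=$2 rip
  shift 2
  run ipvsadm -A -t "$vip" -s "$sched"            # -A add the virtual server, -s scheduler
  for rip in "$@"; do
    run ipvsadm -a -t "$vip" -r "$rip" -m -w 1    # -a add a node, -m NAT mode, -w weight
  done
  run ipvsadm -ln                                  # -l list, -n numeric output
}

setup_nat_service 192.168.100.129:80 rr 192.168.17.20:80 192.168.17.30:80
```

Printing first makes it easy to review the exact commands; rerunning with `DRY_RUN=0` as root applies them.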

Experiment

NAT mode LVS load balancing cluster deployment

Step 1: environment

LVS scheduler

Two web nodes

NFS server

Client

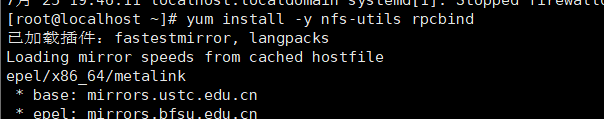

Step 2: deploy NFS

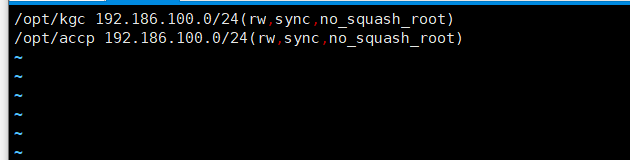

[root@localhost ~]# systemctl start nfs.service

[root@localhost ~]# systemctl start rpcbind.service

[root@localhost ~]# mkdir /opt/kgc /opt/accp

[root@localhost ~]# chmod 777 /opt/kgc /opt/accp/

[root@localhost ~]# vim /etc/exports

[root@localhost ~]# exportfs -rv

exportfs: /etc/exports:1: unknown keyword "no_squash_root"

exportfs: No file systems exported!

[root@localhost ~]# showmount -e

Export list for localhost.localdomai

The error comes from a typo in /etc/exports: the option is spelled no_root_squash. After correcting it, run exportfs -rv again so that the directories are exported.
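The exports line itself is not shown in the session above, so the sketch below assumes one: both directories shared read-write with the 192.168.17.0/24 node network, using the correctly spelled `no_root_squash`. The function name `write_exports` is hypothetical, and it overwrites the target file.

```bash
#!/usr/bin/env bash
# Sketch: write export entries for the two shared directories created above.
# Assumptions (the original exports line is not shown): read-write access for the
# 192.168.17.0/24 node network. The target file is overwritten.

# write_exports FILE [NETWORK]
write_exports() {
  local file=$1 net=${2:-192.168.17.0/24} dir
  : > "$file"                                   # start from an empty file
  for dir in /opt/kgc /opt/accp; do
    # note the spelling: no_root_squash, not no_squash_root
    echo "$dir $net(rw,sync,no_root_squash)" >> "$file"
  done
}
```

On the NFS server this would be used as `write_exports /etc/exports && exportfs -rv && showmount -e`.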

Step 3: configure the node servers

192.168.17.30

192.168.17.20

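yum install -y httpd

systemctl start httpd.service

systemctl enable httpd.service

In NAT mode the replies from the nodes must pass back through the scheduler, so set the default gateway of each node to the scheduler's internal address, 192.168.17.50.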

echo 'this is benet' > /var/www/html/index.html

Step 4: configure the LVS scheduler

Configure two network cards: internal 192.168.17.50

and external (ens37) 192.168.100.129

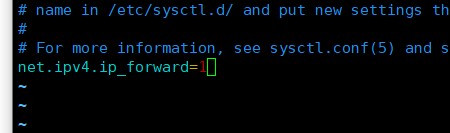

vim /etc/sysctl.conf

net.ipv4.ip_forward=1

iptables -t nat -F

iptables -F

[root@lvs ~]# iptables -t nat -nL

[root@manager network-scripts]# iptables -t nat -A POSTROUTING -s 192.168.17.0/24 -o ens37 -j SNAT --to-source 192.168.100.129

sysctl -p

[root@manager network-scripts]# modprobe ip_vs

[root@manager network-scripts]# cat /proc/net/ip_vs

IP Virtual Server version 1.2.1 (size=4096)

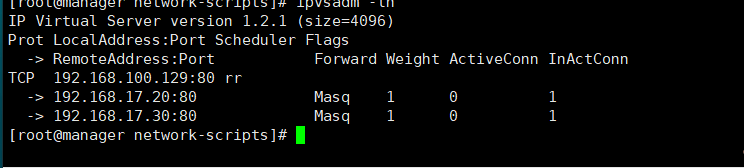

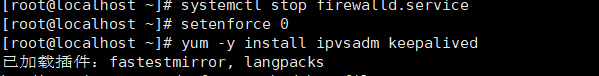

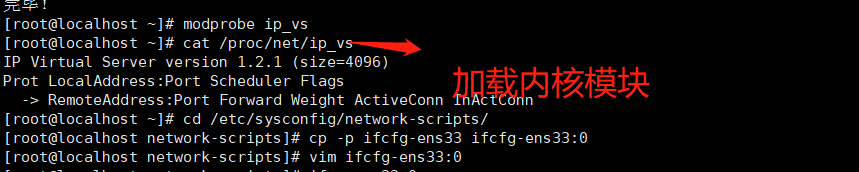
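Before adding virtual servers, it can help to confirm the two prerequisites from this step: IPv4 forwarding is on and the ip_vs module is loaded. The sketch below reads the standard /proc files by default; the function names are hypothetical, and the optional path arguments exist only so the logic can be tried on sample files.

```bash
#!/usr/bin/env bash
# Sketch: sanity checks for the NAT scheduler after Step 4.
# Paths default to the standard /proc locations; other files can be passed for testing.

# forwarding_enabled [FILE]: true when net.ipv4.ip_forward = 1
forwarding_enabled() {
  local file=${1:-/proc/sys/net/ipv4/ip_forward}
  [ "$(cat "$file" 2>/dev/null)" = "1" ]
}

# module_loaded NAME [FILE]: true when the kernel module appears in the module list
module_loaded() {
  local name=$1 file=${2:-/proc/modules}
  grep -q "^${name} " "$file" 2>/dev/null
}
```

For example, `forwarding_enabled || echo "set net.ipv4.ip_forward=1 and run sysctl -p"` and `module_loaded ip_vs || modprobe ip_vs`.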

Step 5: load the kernel module and install ipvsadm

yum install -y ipvsadm

modprobe ip_vs # manually load the ip_vs module

cat /proc/net/ip_vs # view ip_vs version information

ipvsadm --save > /etc/sysconfig/ipvsadm

[root@manager network-scripts]# systemctl start ipvsadm.service

[root@manager network-scripts]# ipvsadm -C

[root@manager network-scripts]# ipvsadm -A -t 192.168.100.129:80 -s rr

[root@manager network-scripts]# ipvsadm -a -t 192.168.100.129:80 -r 192.168.17.20:80 -m -w 1

[root@manager network-scripts]# ipvsadm -a -t 192.168.100.129:80 -r 192.168.17.30:80 -m -w 1

[root@manager network-scripts]# ipvsadm

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP manager:http rr

-> 192.168.17.20:http Masq 1 0 0

-> www.cat.com:http Masq 1 0 0

Without -n, ipvsadm shows host and service names instead of numbers, which is why the VIP appears as manager:http and 192.168.17.30 as www.cat.com; ipvsadm -ln shows the numeric addresses.

DR mode

1. The client sends a request to the VIP. The load balancer selects a back-end server according to the scheduling algorithm. It does not modify the IP packet; it only rewrites the MAC addresses of the data frame so that the destination MAC is the back-end server's MAC, then sends the frame on the LAN. The back-end server decapsulates the frame and processes the request, and its response passes from the lo interface (which holds the VIP) to the physical network card and goes directly to the client.

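2. In LVS-DR, the load balancer and every node are configured with the same VIP. Each node carries the VIP on lo:0 and sets the kernel parameter arp_ignore=1, so that it answers only ARP requests for addresses configured on the receiving interface and therefore does not answer ARP requests for the VIP.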

3. Traffic analysis: the client sends the request to the load balancer. The packet reaches kernel space, which checks whether its destination IP is the local VIP and, if so, whether the requested service is a cluster service. If it is, the kernel rewrites the source MAC to the scheduler's MAC and the destination MAC to the real server's MAC, leaves the source and destination IP addresses unchanged, and sends the packet to the real server. When the frame arrives, its destination MAC is the real server's own MAC, so the server accepts it, processes the request, and re-encapsulates the response.

4. Characteristics

The Director Server and the real servers must be in the same physical network. The real servers can use private or public addresses; with public addresses, the RIP can be accessed directly. The Director Server is the access entry of the cluster but not its gateway, so requests pass through the Director while replies go directly to the client without passing through it.

5. keepalived

It provides automatic failover and health checks: it monitors the availability of the LVS load scheduler and the node servers, and when the master fails it switches to the backup node so that the business keeps running.

6. Principle

keepalived uses the VRRP hot standby protocol to provide multi-machine hot standby for Linux services. VRRP was originally designed as a backup solution for routers.

SNMP is a protocol for managing servers, switches, routers and other devices.
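The core idea of VRRP hot standby, that the highest-priority live member holds the VIP, can be modelled in a few lines. This is a deliberately simplified sketch rather than the real protocol state machine: the name `elect_master`, the NAME:PRIORITY:STATE input format, and the backup name lvs_02 with priority 100 are hypothetical, and ties are not handled (real VRRP breaks them by the highest primary IP address).

```bash
#!/usr/bin/env bash
# Simplified model of VRRP master election (not the real protocol state machine):
# among routers that are still up, the one with the highest priority becomes MASTER
# and holds the VIP. Ties are not handled here.

# elect_master NAME:PRIORITY:STATE ...   (STATE is "up" or "down")
elect_master() {
  local entry name prio state best="" best_prio=-1
  for entry in "$@"; do
    IFS=: read -r name prio state <<< "$entry"
    [ "$state" = "up" ] || continue
    if (( prio > best_prio )); then
      best=$name best_prio=$prio
    fi
  done
  [ -n "$best" ] && echo "$best"
}

echo "both up:     $(elect_master lvs_01:110:up lvs_02:100:up)"
echo "master down: $(elect_master lvs_01:110:down lvs_02:100:up)"
```

When lvs_01 stops advertising, the VIP moves to lvs_02, which is the failover tested at the end of the experiment.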

LVS-DR + keepalived experiment

1. Environment

Master scheduler: 192.168.17.30 (ipvsadm, keepalived)

Backup scheduler: 192.168.17.10

Web node 1: 192.168.17.20

Web node 2: 192.168.17.40

Client

2. LVS scheduler configuration

systemctl stop firewalld.service

setenforce 0

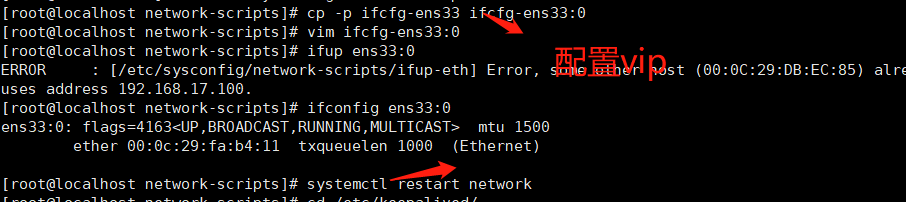

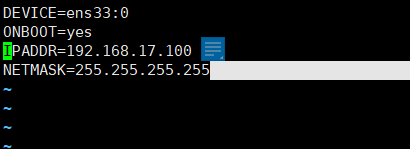

Configure the VIP

Bring up the network interface

3. Because the LVS load balancer and each node share a VIP, turn off the Linux kernel's redirect parameters (send_redirects); the scheduler does not act as a router here, so forwarding is turned off as well.

[root@localhost keepalived]# vim /etc/sysctl.conf

[root@localhost keepalived]# sysctl -p

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0
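The four settings shown in the `sysctl -p` output can be written from a script instead of by hand in vim. A minimal sketch follows; the function name is hypothetical, the interface defaults to ens33 as in this experiment, and it appends, so running it twice duplicates the lines.

```bash
#!/usr/bin/env bash
# Sketch: append the DR scheduler's kernel settings shown above to a sysctl file.
# Forwarding off and ICMP redirects off, since the scheduler is not a router here.

# write_director_sysctl FILE [INTERFACE]
write_director_sysctl() {
  local file=$1 iface=${2:-ens33}
  cat >> "$file" <<EOF
net.ipv4.ip_forward = 0
net.ipv4.conf.all.send_redirects = 0
net.ipv4.conf.default.send_redirects = 0
net.ipv4.conf.${iface}.send_redirects = 0
EOF
}
```

On the scheduler: `write_director_sysctl /etc/sysctl.conf && sysctl -p`.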

Load ip_vs, save the current policy, and start the ipvsadm service:

[root@localhost keepalived]# modprobe ip_vs

[root@localhost keepalived]# ipvsadm-save > /etc/sysconfig/ipvsadm

[root@localhost keepalived]# systemctl start ipvsadm

[root@localhost keepalived]# ipvsadm -Lnc

Configure routing rules

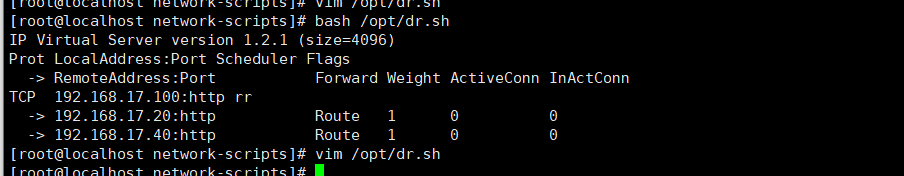

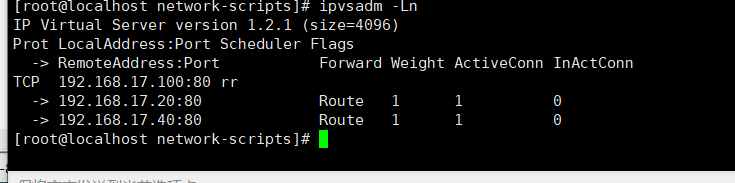

Contents of /opt/dr.sh:

#!/bin/bash

ipvsadm -C

ipvsadm -A -t 192.168.17.100:80 -s rr

ipvsadm -a -t 192.168.17.100:80 -r 192.168.17.20:80 -g

ipvsadm -a -t 192.168.17.100:80 -r 192.168.17.40:80 -g

ipvsadm

ipvsadm -Lnc

bash /opt/dr.sh

4. Web site configuration

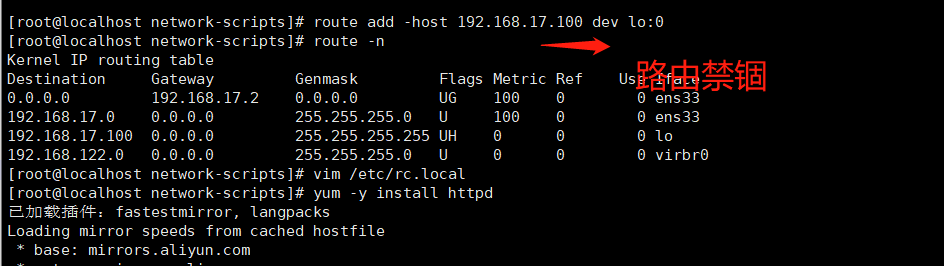

First create the lo:0 interface:

cd /etc/sysconfig/network-scripts/

cp -p ifcfg-lo ifcfg-lo:0

vim ifcfg-lo:0

DEVICE=lo:0

ONBOOT=yes

IPADDR=192.168.17.100

NETMASK=255.255.255.255

Bring it up:

ifup lo:0

ifconfig lo:0
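The ifcfg-lo:0 file above can also be generated rather than edited by hand. The sketch below (hypothetical function name) writes the same four lines; the /32 netmask means the node holds only the VIP itself on the loopback interface, not a whole subnet. It overwrites any existing ifcfg-lo:0 in the target directory.

```bash
#!/usr/bin/env bash
# Sketch: generate the ifcfg-lo:0 file used on each DR web node.

# write_lo0_config DIR [VIP]
write_lo0_config() {
  local dir=$1 vip=${2:-192.168.17.100}
  # /32 netmask: lo:0 carries only the VIP itself
  cat > "$dir/ifcfg-lo:0" <<EOF
DEVICE=lo:0
ONBOOT=yes
IPADDR=$vip
NETMASK=255.255.255.255
EOF
}
```

On a web node: `write_lo0_config /etc/sysconfig/network-scripts && ifup lo:0`.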

Then add a host route that restricts the VIP to lo:0, for example route add -host 192.168.17.100 dev lo:0

Install httpd

yum -y install httpd

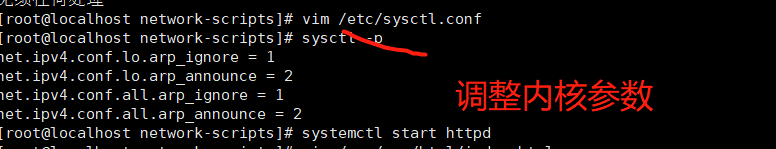

Adjust the kernel's ARP response parameters so that the node neither answers ARP requests for the VIP nor announces its own MAC address for the VIP, which avoids conflicts with the scheduler.
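The exact values are only in the screenshot, so the sketch below assumes the ones commonly used on LVS-DR real servers: arp_ignore=1 (answer ARP only for addresses configured on the receiving interface) and arp_announce=2 (use the best address of the outgoing interface as the ARP source instead of the packet's source IP, so the VIP is not advertised). The function names are hypothetical; `vip_route_cmd` only prints the host-route command mentioned earlier rather than running it.

```bash
#!/usr/bin/env bash
# Sketch for each DR web node (assumed, commonly used LVS-DR values):
#   arp_ignore=1   answer ARP only for addresses configured on the receiving interface
#   arp_announce=2 use the outgoing interface's best address as the ARP source
# write_node_arp_sysctl appends to FILE; vip_route_cmd only prints a command.

write_node_arp_sysctl() {
  local file=$1
  cat >> "$file" <<'EOF'
net.ipv4.conf.lo.arp_ignore = 1
net.ipv4.conf.lo.arp_announce = 2
net.ipv4.conf.all.arp_ignore = 1
net.ipv4.conf.all.arp_announce = 2
EOF
}

# vip_route_cmd [VIP]: host route that keeps traffic for the VIP on lo:0
vip_route_cmd() {
  echo "route add -host ${1:-192.168.17.100} dev lo:0"
}

vip_route_cmd
```

On a web node this would be `write_node_arp_sysctl /etc/sysctl.conf && sysctl -p`, followed by running the printed route command as root.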

Start the service and give each site different content so that the nodes can be told apart

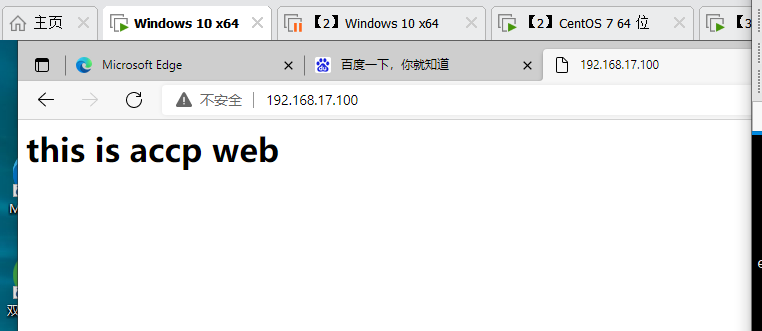

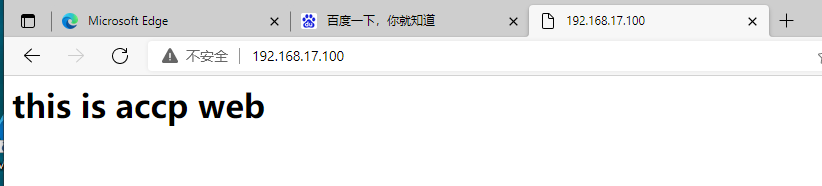

Access successful

5. Configure keepalived

[root@localhost network-scripts]# cd /etc/keepalived/

[root@localhost keepalived]# cp keepalived.conf keepalived.conf.bak

[root@localhost keepalived]# vim keepalived.conf

[root@localhost keepalived]# systemctl restart keepalived

[root@localhost keepalived]# vim keepalived.conf

[root@localhost keepalived]# systemctl restart keepalived

global_defs { # defines global parameters

router_id lvs_01 # device name; must be different on each scheduler in the hot standby group

}

vrrp_instance vi_1 { # defines the VRRP hot standby instance parameters

state MASTER # hot standby state: MASTER on the primary, BACKUP on the standby

interface ens33 # specifies the physical interface that hosts the vip address

virtual_router_id 51 # virtual router ID; must be the same for every member of the hot standby group

priority 110 # specifies the priority. The higher the value, the higher the priority

advert_int 1

authentication {

auth_type PASS

auth_pass 6666

}

virtual_ipaddress { # specifies the cluster VIP address

192.168.17.100

}

}

#Specify virtual server address, vip, port, and define virtual server and web server pool parameters

virtual_server 192.168.17.100 80 {

lb_algo rr # scheduling algorithm: round robin (rr)

lb_kind DR # cluster working mode: DR (direct routing)

persistence_timeout 6 # connection persistence timeout in seconds: requests from the same client go to the same node within this time

protocol TCP # application service adopts TCP protocol

#Specify the address and port of the first web node

real_server 192.168.17.20 80 {

weight 1 # node weight

TCP_CHECK {

connect_port 80 # port to check

connect_timeout 3 # connection timeout in seconds

nb_get_retry 3 # number of retries

delay_before_retry 3 # interval between retries in seconds

}

}

#Specify the address and port of the second web node

real_server 192.168.17.40 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
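The backup scheduler needs almost the same file: router_id, state and priority must differ, while virtual_router_id, auth_pass and the VIP must match. The sketch below generates only the global_defs and vrrp_instance parts from those three values (the virtual_server part is the same on both schedulers and is left out). The function name, the backup's router_id lvs_02 and its priority 100 are hypothetical; the other values come from the configuration above.

```bash
#!/usr/bin/env bash
# Sketch: generate the global_defs and vrrp_instance sections of keepalived.conf
# for the master or the backup scheduler.

# vrrp_config ROUTER_ID STATE PRIORITY
vrrp_config() {
  local router_id=$1 state=$2 priority=$3
  case $state in
    MASTER|BACKUP) ;;
    *) echo "state must be MASTER or BACKUP" >&2; return 1 ;;
  esac
  cat <<EOF
global_defs {
    router_id $router_id
}

vrrp_instance vi_1 {
    state $state
    interface ens33
    virtual_router_id 51
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 6666
    }
    virtual_ipaddress {
        192.168.17.100
    }
}
EOF
}

vrrp_config lvs_02 BACKUP 100
```

Keeping the shared values in one template avoids the most common mistake in hot standby pairs: a mismatched virtual_router_id or password, which makes both schedulers claim the VIP.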

Empty original content

Take the master down:

[root@localhost network-scripts]# systemctl stop ipvsadm

Check whether the web page can still be accessed

Summary:

1. What is LVS load balancing for, and what modes does it have?

LVS is built into the Linux kernel and performs layer-4 load balancing, which suits medium and large-scale environments. It has three modes, NAT, TUN and DR; DR is the most commonly used.

It has four scheduling algorithms: round robin, weighted round robin, least connections, and weighted least connections.

2. How do you configure DR mode and keepalived, and what are the key steps?

LVS can be combined with keepalived to provide hot standby redundancy, health checks, and failover.

The VRRP hot standby protocol was designed mainly for routers. Devices in the same hot standby group share one virtual router ID, and different groups use different IDs.

The VIP, the address that provides the service, floats to the device with the highest priority. Each device is given a hot standby priority that decides which is primary and which is standby; when the primary goes down, the standby takes over.

3. What is keepalived for?