Grain mall advanced level chapter

Content directory:

1, ElasticSearch

1.1 introduction to elasticserch:

Full text search is the most common requirement. Open source Elasticsearch is the first choice of full-text search engines. It can quickly store, search and analyze massive data. Wikipedia, Stack Overflow and Github all use it. The bottom layer of elastic is the open source library Lucene. However, you can't use Lucene directly. You must write your own code to call its interface. Elastic is the package of Lucene and provides the operation interface of REST API, which can be used out of the box. REST API: natural cross platform.

Official documents: https://www.elastic.co/guide/en/elasticsearch/reference/current/index.html

Official Chinese: https://www.elastic.co/guide/cn/elasticsearch/guide/current/foreword_id.html

Community Chinese: https://es.xiaoleilu.com/index.html http://doc.codingdict.com/elasticsearch/0

1.2 related concepts

1.2.1 Index

Verb, equivalent to insert in MySQL; Noun, equivalent to Database in MySQL

1.2.2 Type

In Index, you can define one or more types. Similar to the Table in MySQL; Put each type of data together;

1.2.3 Document

A data (Document) of a certain Type saved under an Index. The Document is in JSON format, and the Document t is like the content in a Table in MySQL.

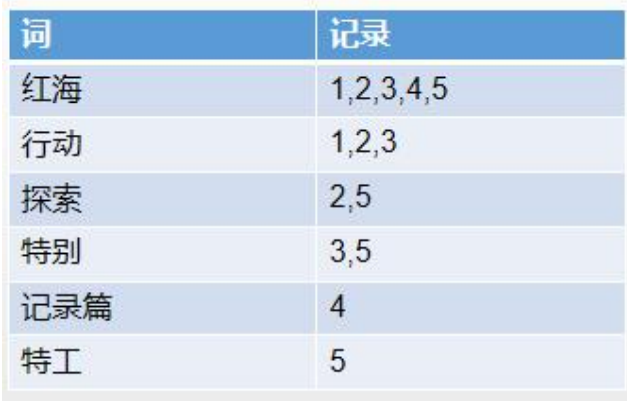

1.2.4 inverted index mechanism

Inverted index comes from the need to find records according to the value of attributes in practical applications. lucene is implemented based on inverted index.

Simply put, it is to get the index value according to the attribute value.

1.3 installation

To install using docker, you need to install ElasticSearch and Kibana images at the same time.

Please refer to the official documentation for more details.

ElasticSearch is RestFul

1.4 basic operation

Refer to relevant documents

It's like using RestFul style to manipulate the database with url.

1.5 combined with JAVA

1.5.1 introducing related dependencies

<dependency> <groupId>org.elasticsearch.client</groupId> <artifactId>elasticsearch-rest-high-level-client</artifactId> <version>7.4.2</version> </dependency>

1.5.2 write configuration class

//Exclude data source dependencies introduced into the common project

//@SpringBootApplication(exclude = DataSourceAutoConfiguration.class)

@Configuration

public class ElasticSearchConfig {

@Bean

public RestHighLevelClient restHighLevelClient() {

RestHighLevelClient client = new RestHighLevelClient(

RestClient.builder(

new HttpHost("101.200.45.111", 9200, "http")));//You need to fill in the domain name and port number of the server where es is located

return client;

}

}

1.5.3 create index (add data)

//Store data to ES (add update in one)

@Test

public void addIndex() throws Exception{

//(the request to create the index specifies the index name (users))

IndexRequest indexRequest = new IndexRequest("users");

//Specify id

indexRequest.id("1");

//This method can also write json strings directly

// indexRequest. Source ("username", "Zhan", "age", 18, "gender", "male");

User user =new User();

user.setUsername("Zhan San");

user.setGender("f");

user.setAge(123);

//Parses an object into a JSON string

String s = JSON.toJSONString(user);

indexRequest.source(s,XContentType.JSON);

//Get the response result after saving

IndexResponse index = restHighLevelClient.index(indexRequest,COMMON_OPTIONS);

System.out.println(index);

}

@Data

class User{

private String username;

private String gender;

private Integer age;

}

1.5.4 query

@Test

public void searchData() throws Exception {

//1. Create index request

SearchRequest searchRequest = new SearchRequest();

//2. Specify index

searchRequest.indices("xxx");

//3. Specify DSL search criteria

//Searchsourcebuilder (query criteria encapsulated inside)

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

//3.1 build search conditions

// searchSourceBuilder.query();

// searchSourceBuilder.from();

// searchSourceBuilder.size();

// searchSourceBuilder.aggregation();

searchSourceBuilder.query(QueryBuilders.matchQuery("field", "xxx"));

//Create aggregation condition

//1. View value distribution aggregation

TermsAggregationBuilder agg1 = AggregationBuilders.terms("Aggname").field("AggField").size(10);

//Add aggregation criteria to query criteria

searchSourceBuilder.aggregation(agg1);

searchRequest.source(searchSourceBuilder);

//4. Retrieve the data

SearchResponse searchResponse = restHighLevelClient.search(searchRequest, ElasticSearchConfig.COMMON_OPTIONS);

//5. Analysis results (Json string)

//Get all the data found

SearchHits hits = searchResponse.getHits();

SearchHit[] searchHits = hits.getHits();

for (SearchHit hit : searchHits) {

String string = hit.getSourceAsString();

XXClass xxClass = JSON.parseObject(string,XXClass.class);

System.out.println("xxClass"+xxClass);

}

// }

//Get the retrieved analysis information

Aggregations aggregations = searchResponse.getAggregations();

Terms aggName = aggregations.get("AggName");

for (Terms.Bucket bucket : aggName.getBuckets()) {

String keyAsString = bucket.getKeyAsString();

System.out.println("Age"+keyAsString+bucket.getDocCount());

}

}

1.6 combined with mall business

1.6.1 create a micro service gulimall search

Related configuration files:

application.properties

spring.cloud.nacos.discovery.server-addr=127.0.0.1:8848 spring.cloud.nacos.config.server-addr=127.0.0.1:8848 spring.application.name=gulimall-search server.port=12000

1.6.2 writing configuration classes

@Configuration

public class GulimallElasticSearchConfig {

//Configure es request OPTIONS

public static final RequestOptions COMMON_OPTIONS;

static {

RequestOptions.Builder builder = RequestOptions.DEFAULT.toBuilder();

COMMON_OPTIONS = builder.build();

}

//Configure es connection

@Bean

RestHighLevelClient client() {

RestClientBuilder builder = RestClient.builder(new HttpHost("192.168.190.131", 9200, "http"));

return new RestHighLevelClient(builder);

}

}

1.6.3 Controller layer

@RequestMapping("/search")

@RestController

@Slf4j

public class ElasticSaveController {

@Autowired

ProductSaveService productSaveService;

// Goods on the shelves

@PostMapping("/product")

public R productStatusUp(@RequestBody List<SkuEsModel> skuEsModels) {

boolean flag = false;

try {

flag = productSaveService.productStatusUp(skuEsModels);

} catch (IOException e) {

log.error("ElasticSaveController Goods on the shelf error: {}", e);

return R.error(BizCodeEnume.PRODUCT_UP_EXCEPTION.getCode(), BizCodeEnume.PRODUCT_UP_EXCEPTION.getMsg());

}

if (flag) {

return R.ok();

} else {

return R.error(BizCodeEnume.PRODUCT_UP_EXCEPTION.getCode(), BizCodeEnume.PRODUCT_UP_EXCEPTION.getMsg());

}

}

}

1.6.4 Service layer

Note: use RestHighLevelClient for operation

@Slf4j

@Service

public class ProductSaveServiceImpl implements ProductSaveService {

@Autowired

RestHighLevelClient restHighLevelClient;

@Override

public boolean productStatusUp(List<SkuEsModel> skuEsModels) throws IOException {

// Save to es

//1. Index es. product, and establish the mapping relationship.

//2. Save this data to es

//BulkRequest bulkRequest, RequestOptions options

BulkRequest bulkRequest = new BulkRequest();

for (SkuEsModel model : skuEsModels) {

// Construct save request

IndexRequest indexRequest = new IndexRequest(EsConstant.PRODUCT_INDEX);

//Specify the data id

indexRequest.id(model.getSkuId().toString());

//Convert the data object to be saved to JSON format

String s = JSON.toJSONString(model);

//Insert data and indicate that the data type is JSON

indexRequest.source(s, XContentType.JSON);

//Add the save request (indexRequest) to the batch save request

bulkRequest.add(indexRequest);

}

//Create batch execution object

//Use restHighLevelClient client to save the response results

//The first parameter is the request for batch saving, and the second parameter is the request for OPTIONS

BulkResponse bulk = restHighLevelClient.bulk(bulkRequest, GulimallElasticSearchConfig.COMMON_OPTIONS);

//Analyze and save results

boolean b = bulk.hasFailures();

List<String> collect = Arrays.stream(bulk.getItems()).map(item -> {

return item.getId();

}).collect(Collectors.toList());

log.info("Goods on the shelf successfully: {}", collect);

//Return Boolean data. If true, there is an error. If false, there is no error

return b;

}

}

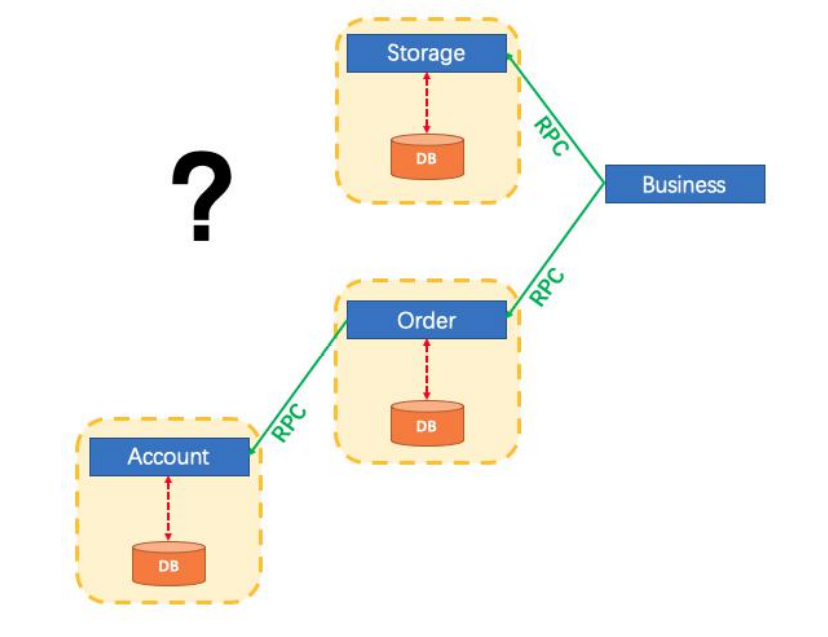

2, Mall business

2.1 using Nginx reverse proxy

2.1.1 differences between nginx and gateway

nginx:

C language, using server to achieve load balancing, high-performance HTTP and reverse proxy web server.

Gateway:

It is a microservice gateway developed by springcloud and built based on spring 5. It can realize responsive and non blocking Api and support long connection.

Asynchronous is supported.

More powerful functions, internal current limiting and load balancing, and stronger scalability. Spring Cloud Gateway clearly distinguishes between Router and Filter, and a great feature is that it has built-in many out of the box functions, which can be used through SpringBoot configuration or manual coding chain call.

It depends on spring weblux and is only suitable for Spring Cloud suite.

Complex code and few comments.

difference:

Nginx is suitable for server-side load balancing. Zuul and gateway are local load balancing and are suitable for implementing gateways in microservices. Spring Cloud Gateway is naturally suitable for Spring Cloud ecology.

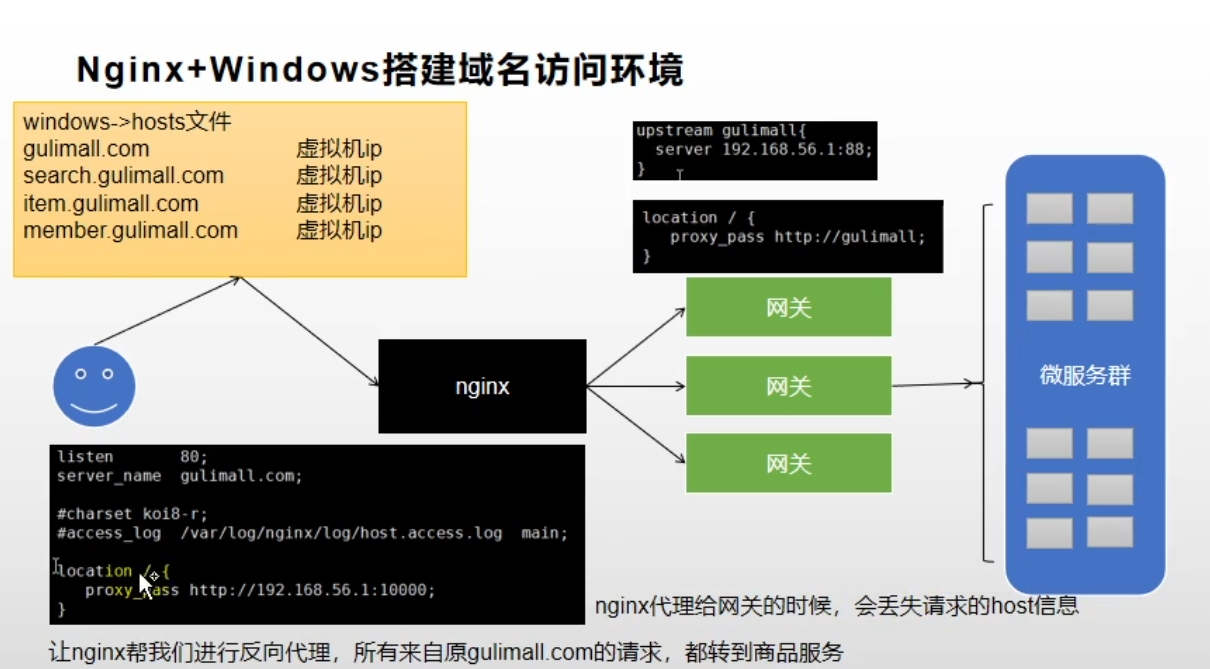

Simulation test diagram:

Configure gulimall. In the host file of the host COM and virtual machine ip binding.

2.1.2 process description

Visit gulimall.com through the browser Com will access the virtual machine and be processed by nginx of the virtual machine. Nginx forwards the request to our gateway, and then we configure the routing rules in the gateway, and finally transfer our request to the gulimall product service.

2.1.3 pit in nginx

When nginx performs load balancing, the request header will be lost. Therefore, we need to configure it in the relevant configuration files of nginx and add our request header information.

3, Performance stress testing & Performance Optimization

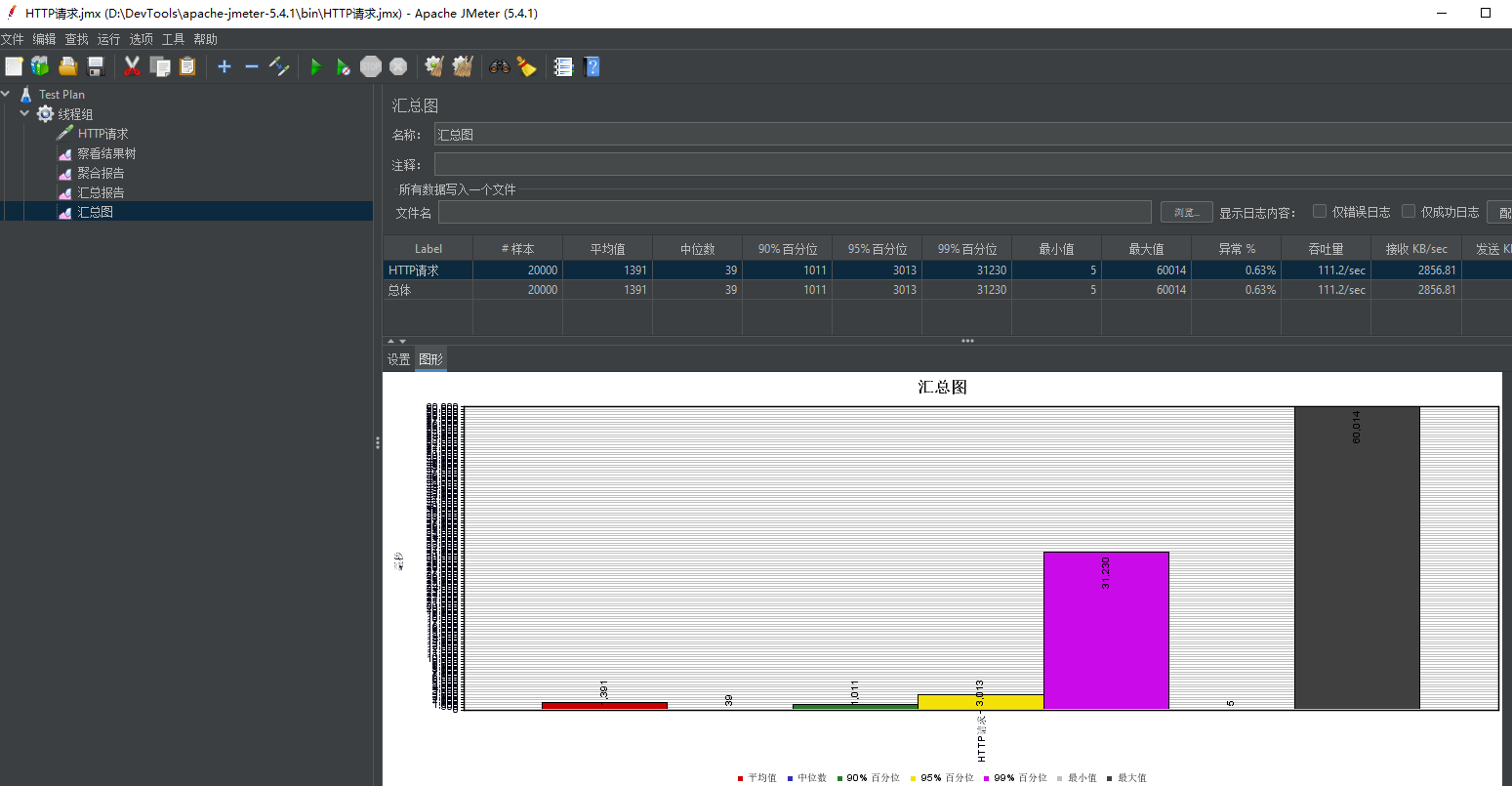

3.1 use of JMeter

1. Official website now

2. Click JMeter Bat usage

Use screenshot:

3.2 JVM optimization

The optimization tool JVISUALVM can be used to test the optimization.

cmd window input command: using jvisualvm

Optimized points:

- JVM memory

- View rendering

- database

3.3 Nginx dynamic and static separation

Put static pages, css, js, etc. into nginx, and set paths in nginx to realize dynamic and static separation.

Every time you visit the home page, the static page is provided by Nginx, and the data is provided by the local service.

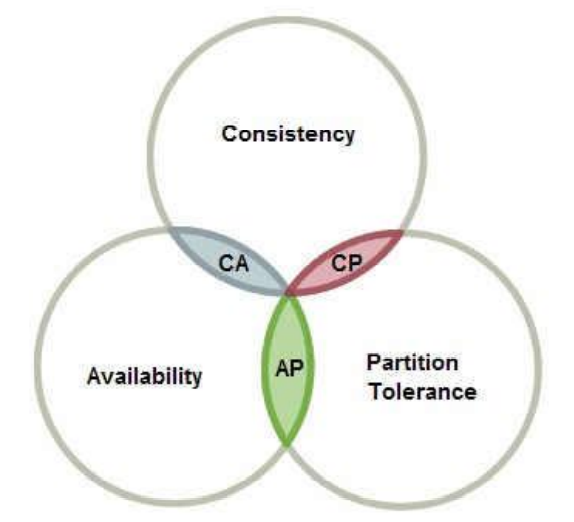

4, Cache and distributed lock

4.1 cache usage

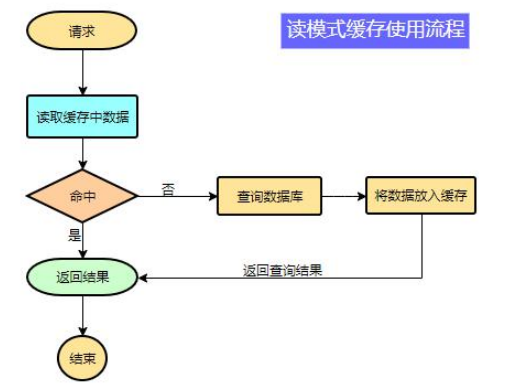

In order to improve the system performance, we usually put some data into the cache to speed up access. db is responsible for data dropping

What data is suitable for caching?

- The requirements for immediacy and data consistency are not high

- Data with large access and low update frequency (read more and write less)

For example, e-commerce applications, commodity classification, commodity list, etc. are suitable for caching and adding an expiration time (depending on the data update frequency). If a commodity is published in the background, the buyer needs 5 minutes to see the new commodity, which is generally acceptable.

flow chart:

Strong consistency is not required, and final consistency is required

Note: during development, we should specify the expiration time for all data put into the cache, so that it can automatically trigger the process of data loading into the cache even if the system does not actively update the data. Avoid permanent data inconsistency caused by business collapse.

4.2 integrating redis

4.2.1 environment configuration

1) Introduce dependency

<!--introduce redis-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

2) Configure the ip address and port number of redis in the configuration file (optional, because the default port number is 6379)

4.2.2 use of redis

Redis's configuration class has configured two kinds of bean s for us

RedisTemplate and StringRedisTemplate

@Configuration

@ConditionalOnClass(RedisOperations.class)

@EnableConfigurationProperties(RedisProperties.class)

@Import({ LettuceConnectionConfiguration.class, JedisConnectionConfiguration.class })

public class RedisAutoConfiguration {

@Bean

@ConditionalOnMissingBean(name = "redisTemplate")

public RedisTemplate<Object, Object> redisTemplate(RedisConnectionFactory redisConnectionFactory)

throws UnknownHostException {

RedisTemplate<Object, Object> template = new RedisTemplate<>();

template.setConnectionFactory(redisConnectionFactory);

return template;

}

@Bean

@ConditionalOnMissingBean

public StringRedisTemplate stringRedisTemplate(RedisConnectionFactory redisConnectionFactory)

throws UnknownHostException {

StringRedisTemplate template = new StringRedisTemplate();

template.setConnectionFactory(redisConnectionFactory);

return template;

}

}

It is used by injecting IOC container.

4.2.3 test use

@Autowired

StringRedisTemplate stringRedisTemplate;

@Test

public void testStringRedisTemplate() {

ValueOperations<String, String> ops = stringRedisTemplate.opsForValue();

ops.set("hello", "world_" + UUID.randomUUID().toString());

String hello = ops.get("hello");

System.out.println("Previously stored data: " + hello);

}

Actual business use:

//TODO generates external memory overflow: outofdirectoryerror

@Override

public Map<String, List<Catelog2Vo>> getCatalogJson() {

//First judge whether there is data in Redis

String catalogJSON = redisTemplate.opsForValue().get("catalogJSON");

if (StringUtils.isEmpty(catalogJSON)) {

// No data in cache, get data from database

Map<String, List<Catelog2Vo>> catalogJsonFromDb = getCatalogJsonFromDb();

// After obtaining the data, you need to store the obtained data in the redis cache

// Because both key and value in redis are strings, if you want to store data in redis, you must first convert the data object into Json format and then save it in redis

String value = JSON.toJSONString(catalogJsonFromDb);

redisTemplate.opsForValue().set("catalogJSON", value);

return catalogJsonFromDb;

}

//Logic when there is corresponding data in redis

// You need to get data from redis, convert json data into objects, and then return

Map<String, List<Catelog2Vo>> result = JSON.parseObject(catalogJSON, new TypeReference<Map<String, List<Catelog2Vo>>>() {

});

return result;

}

4.2.4 generated BUG

springboot2.0 using lettuce will cause out of heap memory overflow, so we need to temporarily use another redis client jedis, but we use redisTemplate regardless of whether we use jedis or lettuce, because springboot encapsulates the two redis clients.

4.2.5 cache invalidation of high parallel delivery

(1) Cache penetration

The most straightforward meaning of cache penetration is that our business system does not find data in the cache when receiving the request, so it penetrates into the process of checking data in the back-end database.

Or it refers to a large number of external accesses to query the values that do not exist in the cache, which eventually leads to the need to constantly query the database, increasing the pressure on the database, and finally leading to program exceptions.

- Cache penetration refers to querying a certain nonexistent data. Due to the cache miss, we will query the database, but the database does not have this record. We do not write the null of this query into the cache, which will cause the nonexistent data to be queried in the storage layer every request, losing the significance of caching.

- When the traffic is heavy, the DB may hang up. If someone uses a non-existent key to frequently attack our application, this is a vulnerability.

solve:

Cache empty results and set a short expiration time.

(2) Cache avalanche

In short, it means that the key in the cache fails in a large area. At the same time, a large number of requests come to obtain data and view the cache, but the data in the cache has failed, so they go back to access the database, resulting in increased pressure on the database.

- Cache avalanche refers to an avalanche in which the same expiration time is used when we set the cache, resulting in the cache invalidation at the same time at a certain time, all requests are forwarded to the DB, and the DB is under excessive instantaneous pressure.

solve:

Add a random value based on the original expiration time, such as 1-5 minutes, so that the repetition rate of the expiration time of each cache will be reduced, and it is difficult to cause collective failure events

(3) Buffer breakdown

Cache breakdown means that there is no data in the cache but there is some data in the database. When a key is very hot (similar to a hot payment), it is constantly carrying large concurrency, and the large concurrency focuses on accessing this point; When the key fails, the continuous large concurrency breaks through the cache and directly requests the database, which is like cutting a hole in a barrier.

- For some keys with expiration time set, if these keys may be accessed in high concurrency at some time points, they are very "hot" data.

- At this time, we need to consider a problem: if the key fails just before a large number of requests come in at the same time, all data queries on the key will fall to db, which is called cache breakdown

solve:

Add mutex

① Use local lock (synchronized) monomer application

When a large number of requests access this data and find that it is not in the cache, they will access the database for query. If the method of accessing the database operation is locked with synchronized, these requests will be queued for access. After the first request completes the synchronization operation, the lock will be released. Before releasing the lock, the queried data will be stored in the cache. If other requests enter the synchronization operation, they will first judge whether there is corresponding data in the cache, so as to avoid the problem of multiple database searches.

//TODO generates external memory overflow: outofdirectoryerror

@Override

public Map<String, List<Catelog2Vo>> getCatalogJson() {

//First judge whether there is data in Redis

String catalogJSON = redisTemplate.opsForValue().get("catalogJSON");

if (StringUtils.isEmpty(catalogJSON)) {

// No data in cache, get data from database

//Call the following method

Map<String, List<Catelog2Vo>> catalogJsonFromDb = getCatalogJsonFromDb();

return catalogJsonFromDb;

}

//Logic when there is corresponding data in redis

// You need to get data from redis, convert json data into objects, and then return

Map<String, List<Catelog2Vo>> result = JSON.parseObject(catalogJSON, new TypeReference<Map<String, List<Catelog2Vo>>>() {

});

return result;

}

//Query and encapsulate data from database

public Map<String, List<Catelog2Vo>> getCatalogJsonFromDb() {

synchronized (this) {

//Change the query of the database to one time

List<CategoryEntity> selectList = baseMapper.selectList(null);

// Find out all primary classifications

List<CategoryEntity> level1Categorys = getParent_cid(selectList, 0L);

// Encapsulate data

Map<String, List<Catelog2Vo>> parent_cid = level1Categorys.stream().collect(Collectors.toMap(k -> k.getCatId().toString(), v -> {

.................Encapsulate data operations..................

}));

// After obtaining the data, you need to store the obtained data in the redis cache

// Because both key and value in redis are strings, if you want to store data in redis, you must first convert the data object into Json format and then save it in redis

String value = JSON.toJSONString(parent_cid);

redisTemplate.opsForValue().set("catalogJSON", value);

return parent_cid;

}

}

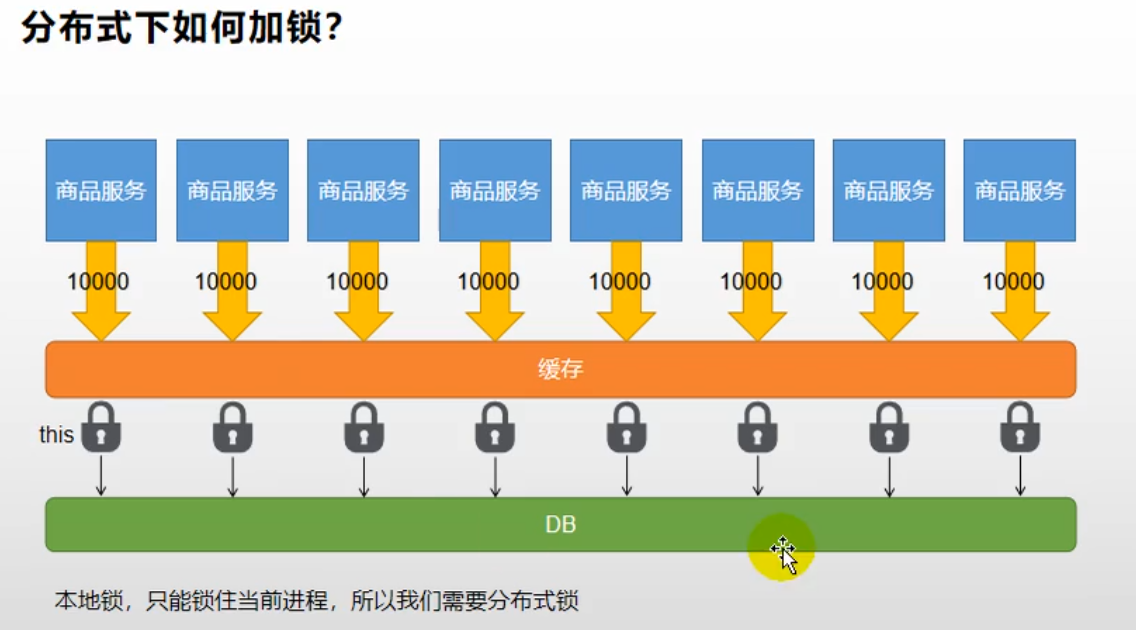

However, using local locks can cause problems in distributed situations: each service locks the current process and cannot lock other processes.

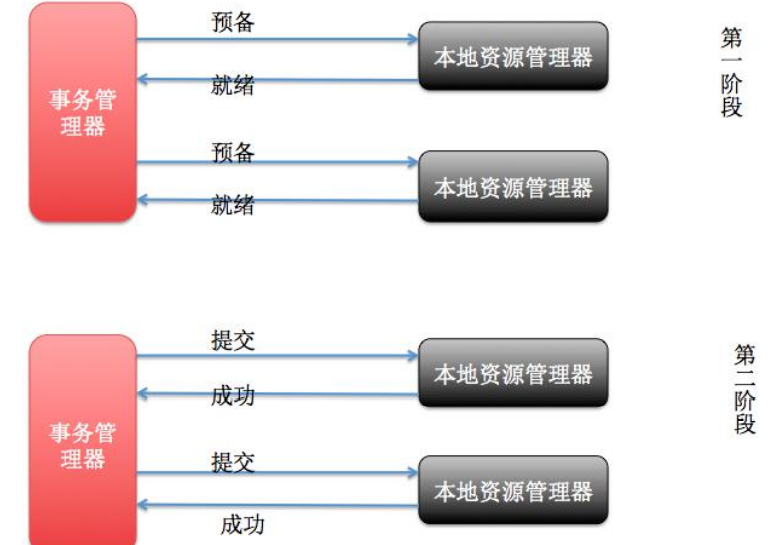

4.3 distributed lock

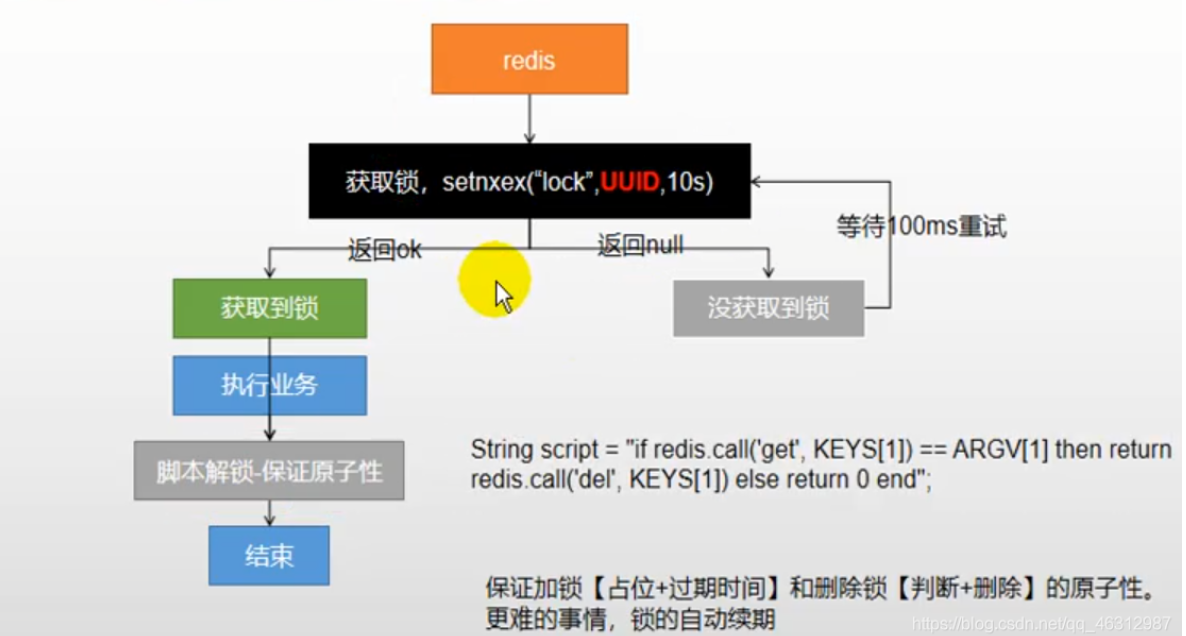

4.3.1 primary

The principle is to use the setnx command in redis according to the official document:

The Redis Setnx (SET if Not eXists) command sets the specified value for the specified key when the specified key does not exist. In this case, it is equivalent SET Command. When the key exists, do nothing.

Return value

- 1 if the key is set

- 0 if the key is not set

In Java, the corresponding method is:

public Boolean setIfAbsent(K key, V value) {

byte[] rawKey = this.rawKey(key);

byte[] rawValue = this.rawValue(value);

return (Boolean)this.execute((connection) -> {

return connection.setNX(rawKey, rawValue);

}, true);

}

// This method can set the expiration time and time type of the key

public Boolean setIfAbsent(K key, V value, long timeout, TimeUnit unit) {

byte[] rawKey = this.rawKey(key);

byte[] rawValue = this.rawValue(value);

Expiration expiration = Expiration.from(timeout, unit);

return (Boolean)this.execute((connection) -> {

return connection.set(rawKey, rawValue, expiration, SetOption.ifAbsent());

}, true);

}

Final form:

4.3.2 advanced

(1) Redisson Introduction & collection

1)Redisson is a more powerful Redis client than Jedis.

2) The operation of locking with Redisson is similar to the API under the JUC package. You can also refer to the relevant documents of JUC.

① Introduce dependency

<!--use redssion As a distributed lock-->

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson</artifactId>

<version>3.12.0</version>

</dependency>

② Configure redsession

Refer to the official documentation for more details

Write configuration class

@Configuration

public class MyRedissonConfig {

/**

* All use of Redisson is through the redissoclient object

* @return

*/

//Configure destruction in the specified way

@Bean(destroyMethod = "shutdown")

public RedissonClient redissonClient() {

Config config = new Config();

config.useSingleServer().setAddress("redis://192.168.190.131:6379");

RedissonClient redissonClient = Redisson.create(config);

return redissonClient;

}

}

③ Redisson lock test

After redissoclient is configured, you can operate redissoclient instances to perform various locking operations.

1) Test (watchdog principle)

@ResponseBody

@GetMapping("/hello")

public String hello(){

//1. Get a lock. As long as the name of the lock is the same, it is the same lock

RLock lock = redissonClient.getLock("my-lock");

//2. Lock

//This method does not specify an expiration time

lock.lock();//Blocking wait, the lock will not be released until the previous thread is executed

//1) The automatic lock renewal test found that if a business is too long, the lock will be automatically renewed for 30s during operation. There is no need to worry about the long business time and the lock will be deleted because it expires

//2) As long as the operation of the lock adding business is completed, the lock will not be renewed. Even if it is not manually unlocked, the lock will be automatically deleted after 30s. Therefore, in the test, the service will be closed if the first request to obtain the lock is not unlocked, and the second thread can still obtain the lock

//**********************************************************************************

//lock.lock(10,TimeUnit.SECONDS); Specifies the timeout for the lock

//Question: lock lock(1,fimeUnit.SECONDS); After the lock time expires, it will not be automatically renewed.

//1. If we pass the lock timeout, we will send it to redis to execute the script to occupy the lock. The default timeout is the time we specify

//2. If we do not specify the lock timeout, we use 30 * 100 [LockWatchdogTimeout watchdog default time];

//As long as the lock is occupied successfully, a scheduled task will be started [reset the expiration time for the lock, and the new expiration time is the default time of the watchdog], which will be automatically activated every 10s

//internalLockLeaseTime [watchdog time] / 3,10s

//Best practice

// lock.Lock(30, TimeUnit.SECONDS); The whole renewal operation is omitted, and the specified expiration time is as long as possible than the business time

//**********************************************************************************

try {

System.out.println("Lock successfully executed business"+Thread.currentThread().getId());

Thread.sleep(5000);

}catch (Exception e){

}finally {

//3. Unlock

System.out.println("Release lock"+Thread.currentThread().getId());

lock.unlock();

}

return "hello";

}

④ Read lock and write lock

In order to ensure that the data can be read, during modification, the write lock is an exclusive lock (mutually exclusive lock and exclusive lock), while the read lock is a shared lock.

If the write lock is not released, you must wait

| Read / write operation | Effect description |

|---|---|

| Read + read | It is equivalent to unlocked and concurrent reads. It will only be recorded in Redis. All current read locks are successfully locked or locked at the same time. |

| Write + read | Wait for write lock release |

| Write + Write | Blocking mode |

| Read + Write | Lock the read operation first, and wait for the write operation |

Conclusion: as long as there is writing, we need to wait.

Test code:

@GetMapping("/write")

@ResponseBody

public String write() {

RReadWriteLock lock = redissonClient.getReadWriteLock("rw-lock");

String s = "";

RLock rLock = lock.writeLock();

try {

rLock.lock();

System.out.println("Write lock and lock successfully..." + Thread.currentThread().getName());

s = UUID.randomUUID().toString();

redisTemplate.opsForValue().set("writeValue", s);

Thread.sleep(30000);

} catch (Exception e) {

}finally {

rLock.unlock();

System.out.println("Write lock release..." + Thread.currentThread().getName());

}

return s;

}

@GetMapping("/read")

@ResponseBody

public String read() {

RReadWriteLock lock = redissonClient.getReadWriteLock("rw-lock");

String s = "";

RLock rLock = lock.readLock();

try {

rLock.lock();

System.out.println("Lock reading and locking succeeded..." + Thread.currentThread().getName());

s = UUID.randomUUID().toString();

redisTemplate.opsForValue().set("writeValue", s);

Thread.sleep(30000);

} catch (Exception e) {

}finally {

rLock.unlock();

System.out.println("Read lock release..." + Thread.currentThread().getName());

}

return s;

}

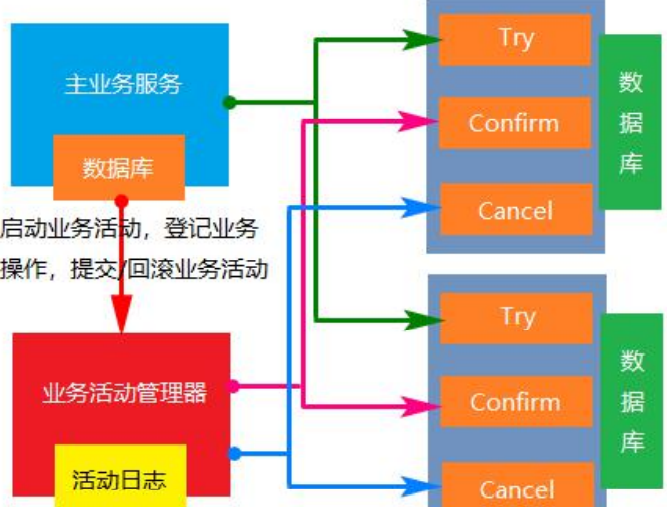

4.4 cache data consistency

Ensure consistency mode

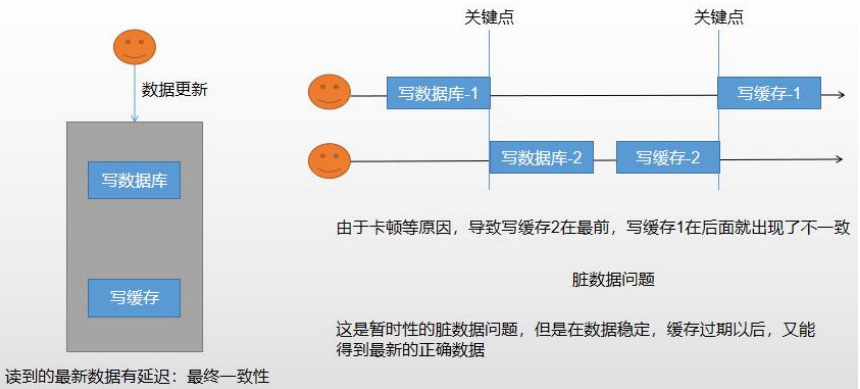

4.4.1 dual write mode

Write the data to the database before modifying the cache.

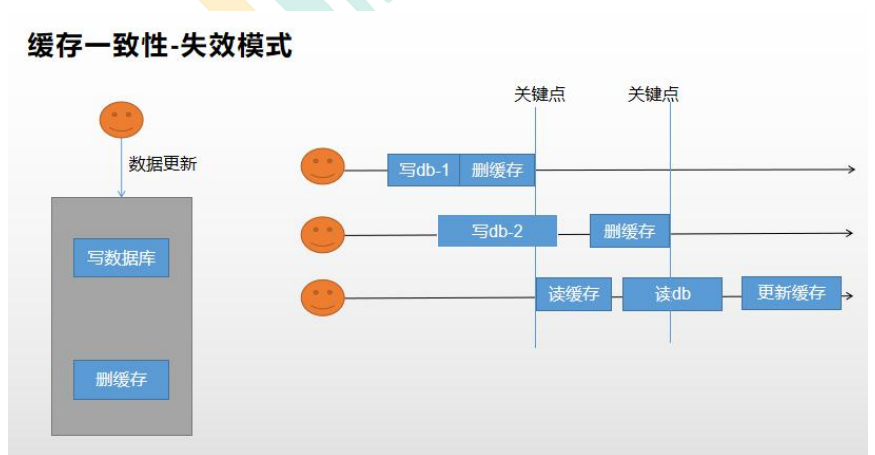

4.4.2 failure mode

As long as the database data is updated, the data in the cache will be deleted after the update, making the cache invalid.

But both of them will produce inconsistent data.

4.4.3 improvement methods

(1) Distributed read / write lock

Distributed read-write lock. Read data and wait for the whole operation of writing data to complete

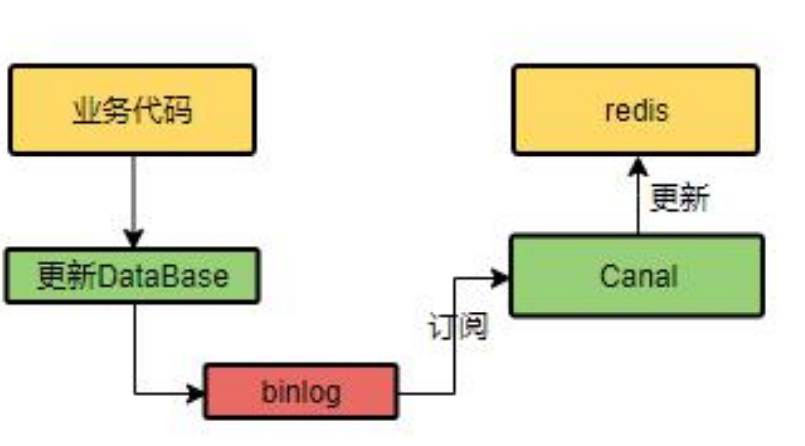

(2) cananl using alibaba

Cananl will record the update of the database, record the changed information in cananl, and then update redis.

4.5 SpringCache

Summarize the previous business logic:

When reading data, we need to check whether there is any data we want in the cache. If there is any data, we need to get the data directly from the cache and return it. If not, we need to query the database, place a copy in the cache and return it.

The read-write lock needs to be set for write and read operations, but each method needs to be operated manually, which is very troublesome. Therefore, we need to introduce SpringCache, which has encapsulated the relevant operations for us.

4.5.1 introduction

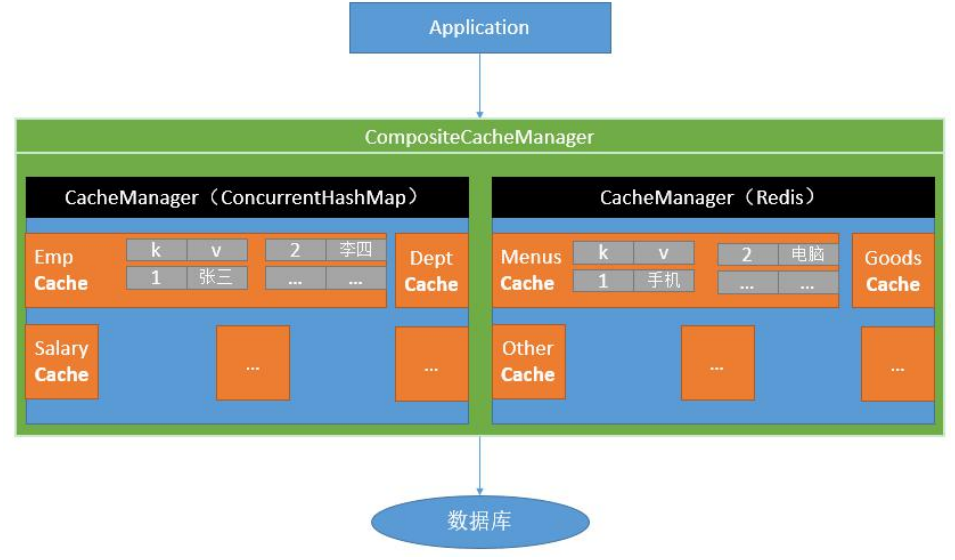

- Spring has defined org. Net since 3.1 springframework. cache. Cache and org springframework. cache. CacheManager interface to unify different caching technologies; It also supports the use of JCache (JSR-107) annotations to simplify our development.

Note: jsr is the abbreviation of Java Specification Requests, which means Java specification proposal.

-

The Cache interface is defined by the component specification of the Cache, including various operation sets of the Cache; Spring provides various Xcache implementations under the Cache interface; Such as RedisCache, ehcachecache and concurrentmapcache

-

Each time a method requiring caching is called, Spring will check whether the specified target method of the specified parameter has been called; If yes, get the result of the method call directly from the cache. If not, call the method and cache the result and return it to the user. The next call is taken directly from the cache.

When using Spring cache abstraction, we need to pay attention to the following two points:

(1) determine the methods that need to be cached and their caching strategies

(2) read the data stored in the previous cache from the cache

4.5.2 basic concepts

4.5.3 Introduction & Configuration

(1) Import dependency:

spring-boot-starter-cache,spring-boot-starter-data-redis

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-cache</artifactId>

</dependency>

<!--introduce redis-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

<exclusions>

<!--exclude lettuce-->

<exclusion>

<groupId>io.lettuce</groupId>

<artifactId>lettuce-core</artifactId>

</exclusion>

</exclusions>

</dependency>

(2) Write configuration

Configure to use redis as cache

spring.cache.type=redis

4.5.4 notes

- @Cacheable triggers cache filling, (triggers the operation of saving data to the cache)

- @CacheEvict triggers eviction from the cache (triggers the deletion of data from the cache)

- @CachePut updates the cache without interfering with the execution of the method

- @Caching recombines multiple cache operations to be applied on a method (combining more than one operation)

- @CacheConfig shares some common cache related settings at the class level

4.5.5 use & details

(1) Enable caching @ EnableCaching

Example:

@Cacheable({"category"})

@Override

public List<CategoryEntity> getLevel1Categorys() {

List<CategoryEntity> categoryEntities = baseMapper.selectList(new QueryWrapper<CategoryEntity>().eq("parent_cid", 0));

return categoryEntities;

}

1. For each data to be cached, we need to specify the cache to be put into that name. [cache partition (by business type)]

2,@Cacheable

The results representing the current method need to be cached. If there are in the cache, the method will not be called.

If there is no in the cache, the method will be called, and the result of the method will be put into the cache in the left back.

3. Default behavior

1) If there is data in the cache, the method will not be called

2) key is automatically generated by default: category::SimpleKey []

3) The cached value uses jdk serialization mechanism by default to store the serialized data in redis

4) TTL (default lifetime): - 1 never expires

4. Custom

1) specify the key: key attribute used by the generated cache, and accept a spiel expression

2) specify the survival time of data in the cache: modify ttl in the configuration file

3) save the data in json format

If we do not specify our own configuration, we will use the default configuration

Write a custom configuration class:

//Enable the function of loading preconditions

@EnableConfigurationProperties(CacheProperties.class)

@Configuration

@EnableCaching

public class MyCacheConfig {

// @Autowired

// public CacheProperties cacheProperties;

/**

* The configuration of the configuration file is not used

* @return

*/

@Bean

public RedisCacheConfiguration redisCacheConfiguration(CacheProperties cacheProperties) {

RedisCacheConfiguration config = RedisCacheConfiguration.defaultCacheConfig();

// config = config.entryTtl();

config = config.serializeKeysWith(RedisSerializationContext.SerializationPair.fromSerializer(new StringRedisSerializer()));

config = config.serializeValuesWith(RedisSerializationContext.SerializationPair.fromSerializer(new GenericJackson2JsonRedisSerializer()));

CacheProperties.Redis redisProperties = cacheProperties.getRedis();

//All configurations in the configuration file will take effect

if (redisProperties.getTimeToLive() != null) {

config = config.entryTtl(redisProperties.getTimeToLive());

}

if (redisProperties.getKeyPrefix() != null) {

config = config.prefixKeysWith(redisProperties.getKeyPrefix());

}

if (!redisProperties.isCacheNullValues()) {

config = config.disableCachingNullValues();

}

if (!redisProperties.isUseKeyPrefix()) {

config = config.disableKeyPrefix();

}

return config;

}

}

spring.cache.type=redis spring.cache.redis.time-to-live=3600000 #Set whether it is null to prevent cache penetration spring.cache.redis.cache-null-values=true #If a prefix is specified, the prefix we specified will be used. If not, the name of the cache will be used as the prefix by default #spring.cache.redis.key-prefix=CACHE_ #Use prefix spring.cache.redis.use-key-prefix=true

(2) @ cacheput (update a data and update the specified cache)

It can be used to solve the double write mode in cache consistency. It is required to return the latest data after updating the data. However, the return value of the general update operation is void, so it is generally not used

(3)@CacheEvict

According to the business logic, to enter the background management system for update operation, you need to modify the database first and then delete the cache.

However, the cache to be deleted involves two caches in the category created in redis: CACHE_getCatalogJson and CACHE_getLevel1Categorys

Deletion method 1:

@Caching(evict = {

@CacheEvict(value = "category",key = "'getLevel1Categorys'"),

@CacheEvict(value = "category",key = "'getCatalogJson'")

})

/**

* Cascade updates all associated data

* @CacheEvict:failure mode

* @CachePut:Double write mode, return value required

* 1,Multiple cache operations at the same time: @ Caching

* 2,Specify to delete all data @ cacheevict under a partition (value = "category", allentries = true)

* 3,Data of the same type can be specified as the same partition

* @param category

*/

// @Caching(evict = {

// @CacheEvict(value = "category",key = "'getLevel1Categorys'"),

// @CacheEvict(value = "category",key = "'getCatalogJson'")

// })

@CacheEvict(value = "category",allEntries = true) //Delete all data under a partition

@Transactional(rollbackFor = Exception.class)

@Override

public void updateCascade(CategoryEntity category) {

RReadWriteLock readWriteLock = redissonClient.getReadWriteLock("catalogJson-lock");

//Create write lock

RLock rLock = readWriteLock.writeLock();

try {

rLock.lock();

this.baseMapper.updateById(category);

categoryBrandRelationService.updateCategory(category.getCatId(), category.getName());

} catch (Exception e) {

e.printStackTrace();

} finally {

rLock.unlock();

}

//Modify the data in the cache at the same time

//Delete the cache and wait for the next active query to update

}

4.5.6 shortcomings of spring cache

(1) Read mode

-

Cache penetration: query a null data. Solution: cache empty data

-

Cache breakdown: a large number of concurrent queries come in and query an expired data at the same time. Solution: lock? It is unlocked by default; Use sync = true to solve the breakdown problem

-

Cache avalanche: a large number of Keys expire at the same time. Solution: add random time. Plus expiration time

(2) Write mode: (CACHE consistent with database)

- Read write lock.

- Introduce Canal and sense the update of MySQL to update Redis

- Read more and write more. Just go to the database to query

4.5.7 summary

General data (spring cache can be used for data with more reads and less writes, instant, and low consistency requirements): write mode (as long as the cached data has an expiration time is enough)

Special data: special design

Principle:

CacheManager (rediscachemanager) - > cache (rediscache) - > cache is responsible for reading and writing the cache

5, Asynchronous & thread pool

5.1 thread review

5.1.1 method of initializing thread

(1) Inherit Thread class

class Thread01 extends Thread {

@Override

public void run() {

for (int i = 0; i < 5; i++) {

System.out.println("Thread name: " + Thread.currentThread().getName() + ", Thread number: " + Thread.currentThread().getId() + " : " + i);

}

}

}

public class ThreadTest {

public static void main(String[] args) throws ExecutionException, InterruptedException {

Thread01 t1 = new Thread01();

t1.start();

}

}

(2) Implement Runnable interface

class Thread03 implements Callable<Integer> {

@Override

public Integer call() throws Exception {

int sum = 0;

for (int i = 0; i < 5; i++) {

System.out.println("Thread name: " + Thread.currentThread().getName() + ", Thread number: " + Thread.currentThread().getId() + " : " + i);

sum += i;

}

return sum;

}

}

public class ThreadTest {

public static void main(String[] args) throws ExecutionException, InterruptedException {

Thread02 thread02 = new Thread02();

new Thread(thread02).start();

}

}

(3) Implement Callable interface + FutureTask (you can get the returned results and handle exceptions)

class Thread03 implements Callable<Integer> {

@Override

public Integer call() throws Exception {

int sum = 0;

for (int i = 0; i < 5; i++) {

System.out.println("Thread name: " + Thread.currentThread().getName() + ", Thread number: " + Thread.currentThread().getId() + " : " + i);

sum += i;

}

return sum;

}

}

public class ThreadTest {

public static void main(String[] args) throws ExecutionException, InterruptedException {

Thread03 thread03 = new Thread03();

FutureTask<Integer> futureTask = new FutureTask<>(thread03);

new Thread(futureTask).start();

Integer integer = futureTask.get();

System.out.println("sum = " + integer);

}

}

(4) Thread pool

class Thread03 implements Callable<Integer> {

@Override

public Integer call() throws Exception {

int sum = 0;

for (int i = 0; i < 5; i++) {

System.out.println("Thread name: " + Thread.currentThread().getName() + ", Thread number: " + Thread.currentThread().getId() + " : " + i);

sum += i;

}

return sum;

}

}

public class ThreadTest {

public static ExecutorService service = Executors.newFixedThreadPool(10);

public static void main(String[] args) throws ExecutionException, InterruptedException {

Thread03 thread03 = new Thread03();

FutureTask<Integer> futureTask = new FutureTask<>(thread03);

Future<Integer> submit = service.submit(thread03);

System.out.println(submit.get());

service.shutdown();

}

}

5.1.2 differences

- 1. 2 cannot get the return value after task execution, 3 can return the value

- 1. 2 and 3 cannot control resources. A new thread must be created whenever it is used

- 4 can control resources, performance, etc

5.2 completable future asynchronous orchestration

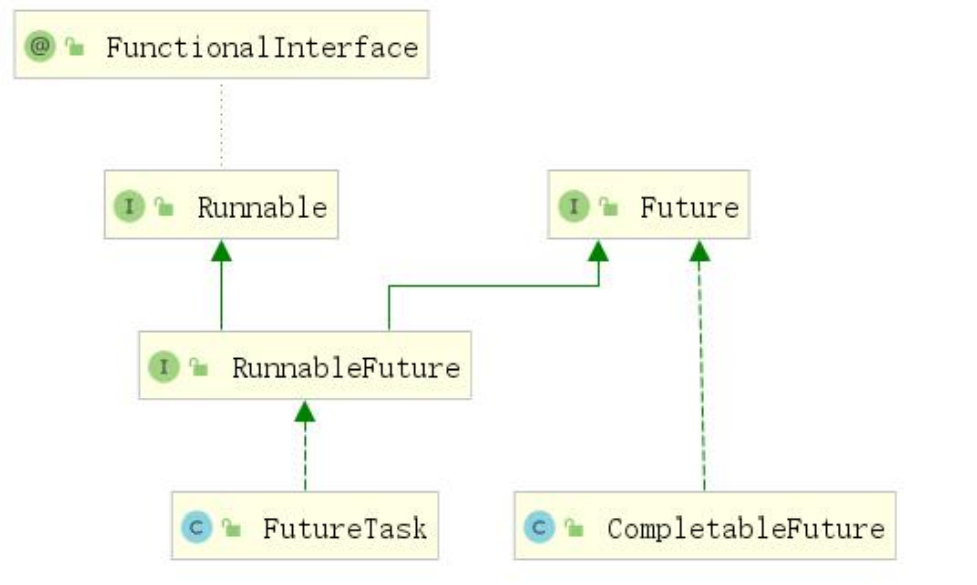

Future is a class added in Java 5 to describe the results of an asynchronous calculation.

You can use the isDone method to check whether the calculation is completed, or use get to block the calling thread until the calculation returns the result. You can also use the cancel method to stop the execution of the task.

Although Future and related usage methods provide the ability to execute tasks asynchronously, it is very inconvenient to obtain the results. The results of tasks can only be obtained by blocking or polling. The blocking method is obviously contrary to the original intention of our asynchronous programming. The polling method will consume unnecessary CPU resources and can not get the calculation results in time. Why not use the observer design mode to inform the listener when the calculation results are completed?

Many languages, such as node JS, using callback to realize asynchronous programming. Some Java frameworks, such as Netty, extend the Future interface of Java and provide multiple extension methods such as addListener; Google guava also provides a common extension Future; Scala also provides an easy-to-use and powerful Future/Promise asynchronous programming mode.

As an orthodox Java class library, should we do something to strengthen the functions of our own library?

In Java 8, a new class containing about 50 methods is added: completable Future, which provides a very powerful extension function of Future, helps us simplify the complexity of asynchronous programming, provides the ability of functional programming, processes calculation results through callback, and provides methods for converting and combining completable Future. The completabilefuture class implements the Future interface, so you can still get results by blocking or polling the get method as before, but this method is not recommended.

Completabilefuture and FutureTask belong to the implementation class of the Future interface. They can get the execution results of threads.

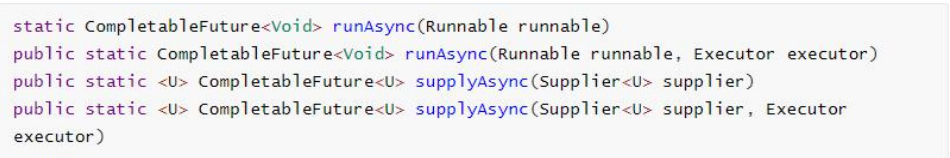

5.2.1 creating asynchronous objects

Completable future provides four static methods to create an asynchronous operation

- runXxxx does not return results, and supplyxx can obtain the returned results

- You can pass in a custom thread pool, otherwise the default thread pool will be used

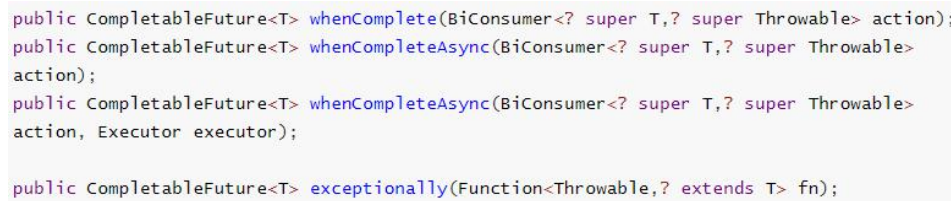

5.2.2 callback method upon completion of calculation

whenComplete can handle normal and abnormal calculation results, and exceptionally handle abnormal situations.

The difference between whenComplete and whenCompleteAsync:

- whenComplete: the thread executing the current task executes the task that continues to execute whenComplete.

- whenCompleteAsync: the task of submitting whenCompleteAsync to the thread pool for execution

The method does not end with Async, which means that the Action uses the same thread to execute, while Async may use other threads to execute (if using the same thread pool, it may also be selected by the same thread to execute)

Example code:

public class CompletableFutureDemo {

public static void main(String[] args) throws ExecutionException, InterruptedException {

CompletableFuture future = CompletableFuture.supplyAsync(new Supplier<Object>() {

@Override

public Object get() {

System.out.println(Thread.currentThread().getName() + "\t

completableFuture");

int i = 10 / 0;

return 1024;

}

}).whenComplete(new BiConsumer<Object, Throwable>() {

@Override

public void accept(Object o, Throwable throwable) {

System.out.println("-------o=" + o.toString());

System.out.println("-------throwable=" + throwable);

}

}).exceptionally(new Function<Throwable, Object>() {

@Override

public Object apply(Throwable throwable) {

System.out.println("throwable=" + throwable);

return 6666;

}

});

System.out.println(future.get());

}

}

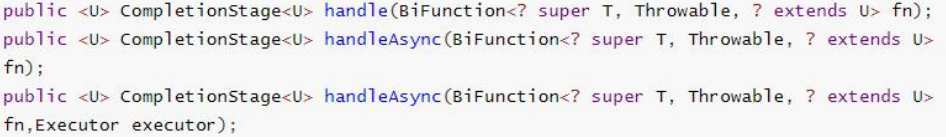

5.2.3 handle method

Like complete, the result can be finally processed (exceptions can be handled) and the return value can be changed.

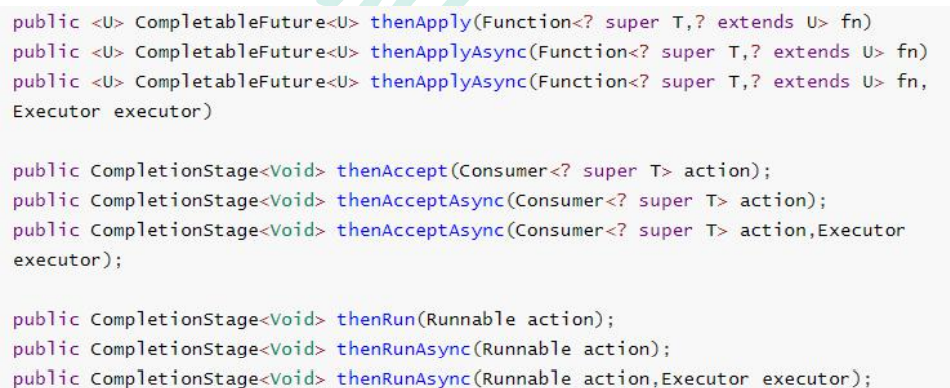

5.2.4 thread serialization method

thenApply method: when a thread depends on another thread, it obtains the result returned by the previous task and returns the return value of the current task.

thenAccept method: consume the processing result. Receive the processing result of the task and consume it. No result is returned.

thenRun method: execute thenRun as long as the above task is completed, but execute the subsequent operations of thenRun after processing the task.

With Async, it is executed asynchronously by default. Same as before. All the above tasks must be completed successfully.

Function <? super , ? extends U>

T: The type of result returned by the previous task

U: Return value type of the current task

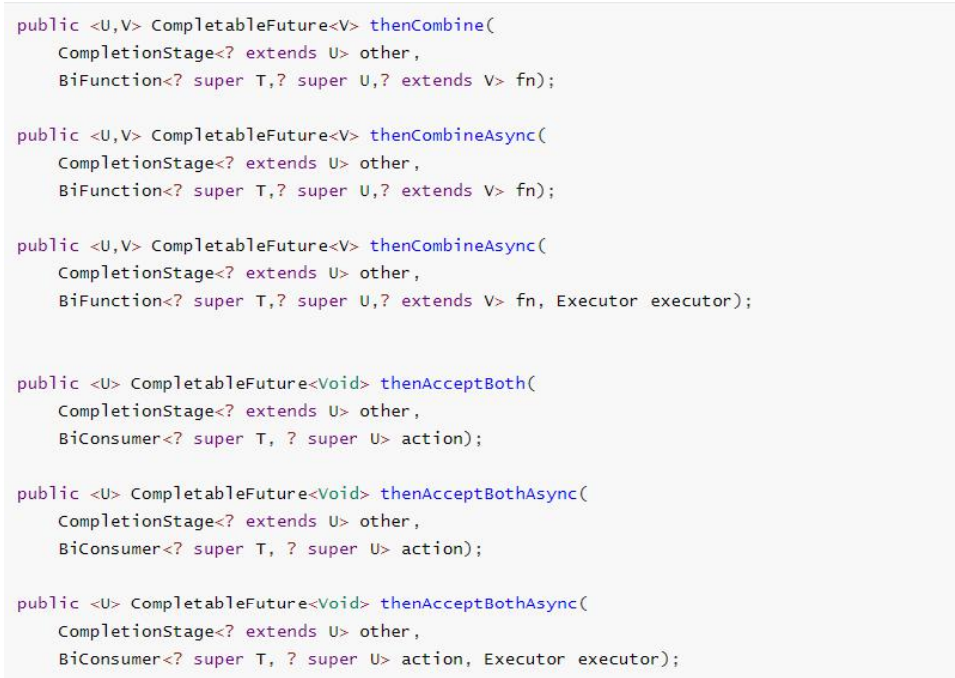

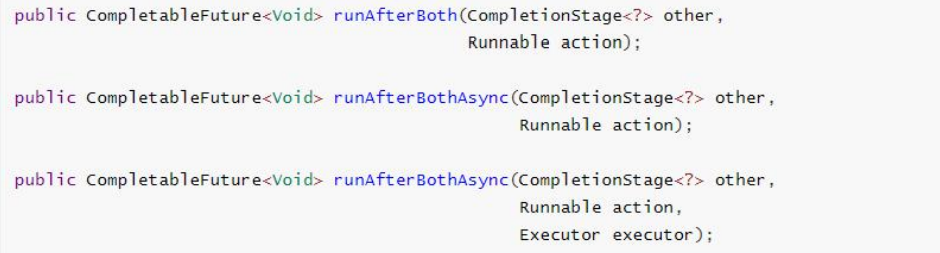

5.2.5 combination of two tasks - both to be completed

Both tasks must be completed to trigger the task.

thenCombine: combine two futures, obtain the return results of the two futures, and return the return value of the current task

Then accept both: combine the two future tasks, obtain the return results of the two future tasks, and then process the tasks without return value.

runAfterBoth: combine two futures. You don't need to obtain the results of the future. You only need to process the task after the two futures process the task

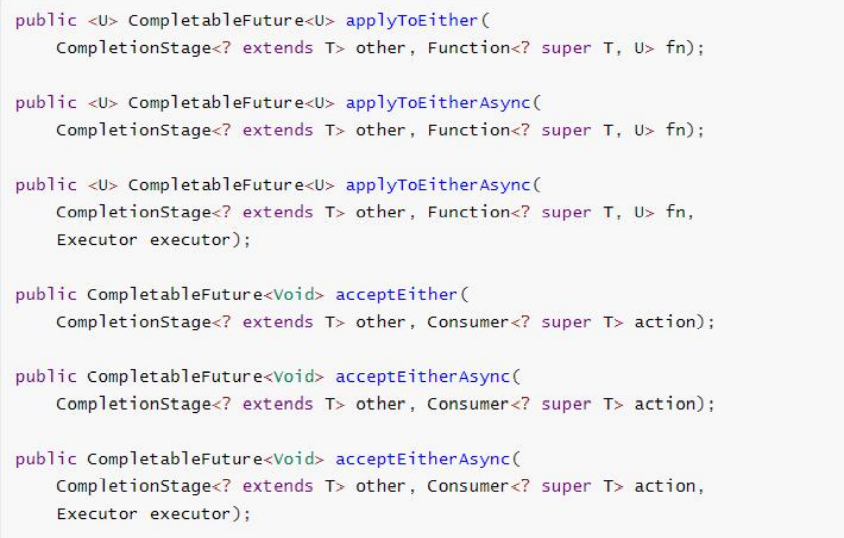

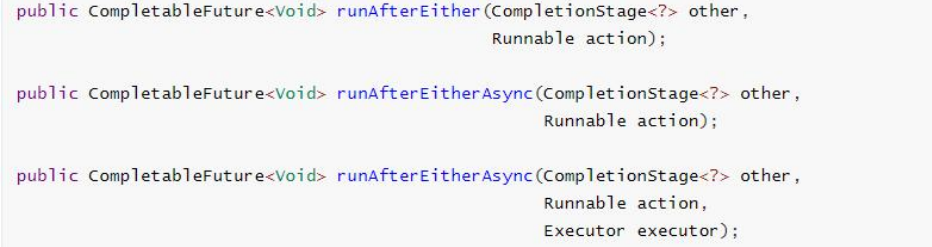

5.2.6 combination of two tasks - one completed

When either of the two future tasks is completed, execute the task.

applyToEither: when one of the two tasks is completed, get its return value, process the task and have a new return value.

acceptEither: one of the two tasks is completed. Get its return value and process the task. There is no new return value.

runAfterEither: one of the two tasks is completed. There is no need to obtain the future results, process the task, and there is no return value.

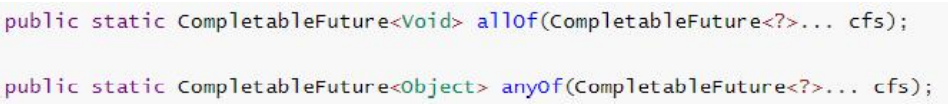

5.2.7 multi task combination

allOf: wait for all tasks to complete

anyOf: as long as one task is completed

5.3 asynchronous orchestration optimization cases

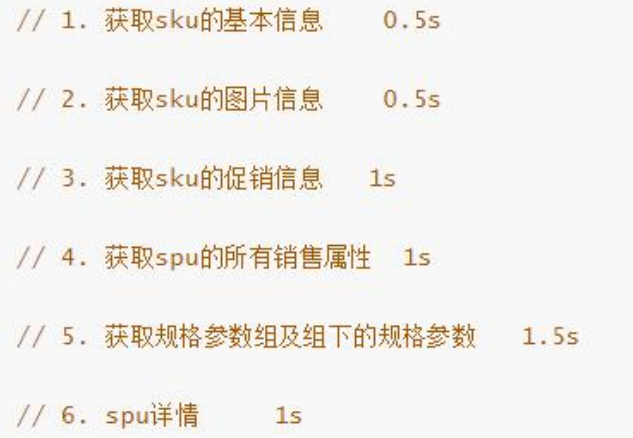

Business scenario:

The logic of querying the product details page is complex. Some data needs to be called remotely, which will inevitably take more time.

If each query on the product details page can be completed within the time indicated below, the user needs 5.5s to see the content of the product details page. Obviously, it is unacceptable. If multiple threads complete these six steps at the same time, it may take only 1.5s to complete the response.

Business Code:

@Override

public SkuItemVo item(Long skuId) throws ExecutionException, InterruptedException {

SkuItemVo skuItemVo = new SkuItemVo();

CompletableFuture<SkuInfoEntity> infoFuture = CompletableFuture.supplyAsync(() -> {

//1. Set sku basic information

SkuInfoEntity info = getById(skuId);

skuItemVo.setInfo(info);

return info;

}, executor);

CompletableFuture<Void> saleAttrFuture = infoFuture.thenAcceptAsync((res) -> {

//3. Get sku's sales attribute combination

List<SkuItemSaleAttrVo> saleAttrVos = skuSaleAttrValueService.getSaleAttrsBySpuId(res.getSpuId());

skuItemVo.setSaleAttr(saleAttrVos);

}, executor);

CompletableFuture<Void> descFuture = infoFuture.thenAcceptAsync((res) -> {

//4 get the introduction PMS of SPU_ spu_ info_ desc

SpuInfoDescEntity spuInfoDescEntity = spuInfoDescService.getById(res.getSpuId());

skuItemVo.setDesc(spuInfoDescEntity);

}, executor);

CompletableFuture<Void> attrFuture = infoFuture.thenAcceptAsync((res) -> {

//5. Get the specification parameter information of spu

List<SpuItemAttrGroupVo> attrGroupVos = attrGroupService.getAttrGroupWithAttrsBySpuId(res.getSpuId(), res.getCatalogId());

skuItemVo.setGroupAttrs(attrGroupVos);

}, executor);

// The above future is associated. All need to share the same future object, but the following obtaining sku picture information is not associated with other tasks, so you can use a new future to execute

CompletableFuture<Void> imageFuture = CompletableFuture.runAsync(() -> {

//2 sku picture information

List<SkuImagesEntity> images = skuImagesService.getImagesBySkuId(skuId);

skuItemVo.setImages(images);

}, executor);

// Wait for all asynchronous tasks to complete

CompletableFuture.allOf(infoFuture, saleAttrFuture, descFuture, attrFuture, imageFuture).get();

return skuItemVo;

}

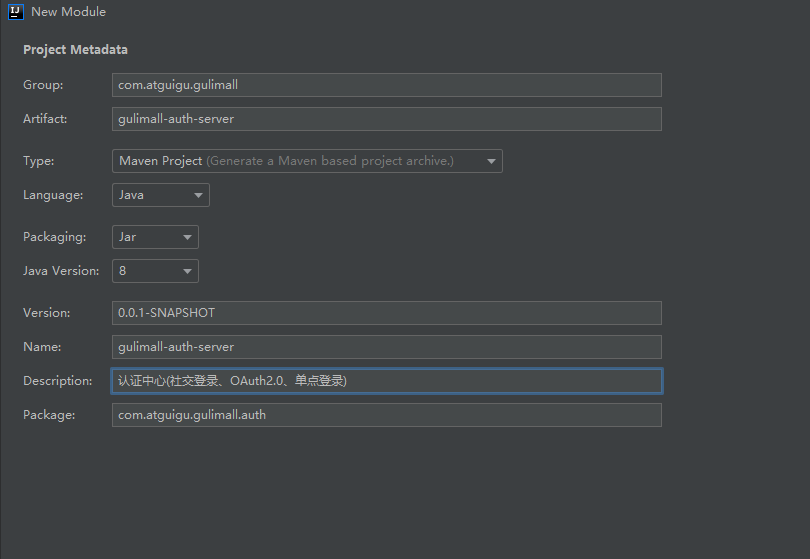

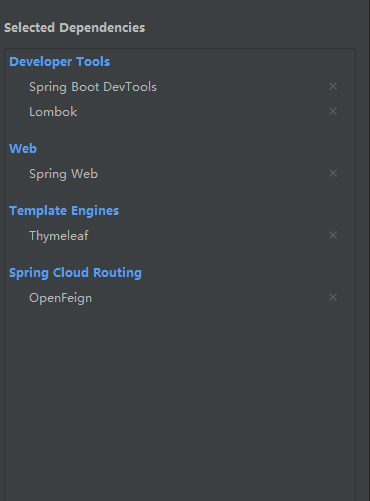

6, Certification services

6.1 environment construction

Create a new module

6.2 Integrated SMS verification code

6.2.1 use of webmvcconfigurer

More details:

SpringBoot - webmvcconfigurer details_ zhangpower1993 blog - CSDN blog_ webmvcconfigurer

In the past, whenever you want to jump to a page, you have to write a method in the controller layer to jump to the page, which is very troublesome, so you can use WebMvcConfigurer

(1) Write configuration class

Put our jump page operations into the configuration class, which greatly simplifies the development.

@Configuration

public class GulimallWebConfig implements WebMvcConfigurer {

@Override

public void addViewControllers(ViewControllerRegistry registry) {

registry.addViewController("/login.html").setViewName("login");

registry.addViewController("/reg.html").setViewName("reg");

}

}

6.2.2 introduction of Alibaba cloud SMS service

-

Step 1: first purchase Alibaba cloud's SMS service

-

Step 2: write the SMS sending component gulimall third party (configuration class) in the module of the third-party service

@ConfigurationProperties(prefix = "spring.cloud.alicloud.sms")

@Data

@Component

public class SmsComponent {

private String host;

private String path;

private String appcode;

public void sendSmsCode(String phone,String code) {

// String host = "https://dfsns.market.alicloudapi.com";

// String path = "/data/send_sms";

String method = "POST";

// String appcode = "809227c6f6c043319ecd98f03ca61bed";

Map<String, String> headers = new HashMap<String, String>();

//Finally, the format in the header (English space in the middle) is authorization: appcode 83359fd73fe948385f570e3c139105

headers.put("Authorization", "APPCODE " + appcode);

//Define the corresponding content type according to API requirements

headers.put("Content-Type", "application/x-www-form-urlencoded; charset=UTF-8");

Map<String, String> querys = new HashMap<String, String>();

Map<String, String> bodys = new HashMap<String, String>();

bodys.put("content", "code:" + code);

bodys.put("phone_number", phone);

bodys.put("template_id", "TPL_0000");

try {

/**

* Important tips are as follows:

* HttpUtils Please from

* https://github.com/aliyun/api-gateway-demo-sign-java/blob/master/src/main/java/com/aliyun/api/gateway/demo/util/HttpUtils.java

* download

*

* For corresponding dependencies, please refer to

* https://github.com/aliyun/api-gateway-demo-sign-java/blob/master/pom.xml

*/

HttpResponse response = HttpUtils.doPost(host, path, method, headers, querys, bodys);

System.out.println(response.toString());

//Get the body of the response

//System.out.println(EntityUtils.toString(response.getEntity()));

} catch (Exception e) {

e.printStackTrace();

}

}

}

Corresponding profile:

spring:

cloud:

alicloud:

sms:

host: https://dfsns.market.alicloudapi.com

path: /data/send_sms

appcode: 809227c6f6c043319ecd98f03ca61bed

For more operations, please refer to the help documents related to SMS service.

6.2.3 write SMS service controller

Process of sending verification code

First, the foreground page sends a request to the micro service related to registration, then the service calls the third micro service that sends SMS, and then the SMS service sends SMS.

At the same time, when sending the verification code, the interface sending the verification code is exposed, so redis should be added to realize the interface anti brushing function.

preparation:

- Introducing Redis dependency

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

- Add the configuration in the application configuration file, and specify the redis address and port number

spring.redis.host=192.168.190.131 spring.redis.port=6379

SMS controller

@ResponseBody

@GetMapping("/sms/sendcode")

public R sendCode(@RequestParam("phone") String phone) {

// Obtain the verification code from redis through the mobile phone number

String redisCode = redisTemplate.opsForValue().get(AuthServerConstant.SMS_CODE_CACHE_PREFIX + phone);

// Judge whether redis has saved the verification code

if (!StringUtils.isEmpty(redisCode) || !(redisCode == null)) {

long l = Long.parseLong(redisCode.split("_")[1]);

// SMS verification code cannot be sent within 60 seconds

if (System.currentTimeMillis() - l < 60000) {

return R.error(BizCodeEnume.SMS_CODE_EXCEPTION.getCode(), BizCodeEnume.SMS_CODE_EXCEPTION.getMsg());

}

}

// If the verification code has not been set or the time for sending the verification code has passed 60s, the verification code will be regenerated

String code = UUID.randomUUID().toString().substring(0, 5) + "_" + System.currentTimeMillis();

//Set key value key: SMS: codemobile number value: verification code in redis

redisTemplate.opsForValue().set(AuthServerConstant.SMS_CODE_CACHE_PREFIX + phone, code, 10, TimeUnit.MINUTES);

// Use OpenFeign to remotely call the interface of the third-party service and send the verification code

thirdPartFeignService.sendCode(phone, code.split("_")[0]);

return R.ok();

}

Third party related service controller

@Autowired

SmsComponent smsComponent;

@ResponseBody

@GetMapping("/sendcode")

public R sendCode(@RequestParam("phone") String phone, @RequestParam("code") String code) {

smsComponent.sendSmsCode(phone, code);

return R.ok();

}

6.2.4 realization of registration function

First consider our registration process:

First, the form data from the front end is encapsulated, so create a UserRegistVo to encapsulate it (the data in it should be verified at the same time of encapsulation), and then judge whether there is an error in the verification information. If there is an error, return the error information, and request redirection to register the page. If there is no error, first obtain the verification code from redis according to the mobile phone number. If the obtained result is null, it indicates that the verification code has expired and needs to be redirected to the login page. If the obtained is not null, judge whether the verification code is correct, call the remote service for registration, and delete the verification code (token mechanism), Judge whether the registration is successful according to the information returned by the remote service interface. If successful, request to redirect to the login page, otherwise redirect to the registration page.

(1) Registration form data verification

Form to submit when registering:

The data submitted according to the form is encapsulated into a Vo:

UserRegistVo

Not only the front-end part needs to be verified, but also the back-end part needs to verify the form data, so JSR303 verification rules are added.

@Data

public class UserRegistVo {

@NotEmpty(message = "User name must be submitted")

@Length(min = 6, max = 18 , message = "User name must be 6-18 Bit character")

private String username;

@NotEmpty(message = "Password must be submitted")

@Length(min = 6, max = 18 , message = "Password must be 6-18 Bit character")

private String password;

@NotEmpty(message = "Mobile phone number must be filled in")

@Pattern(regexp = "^[1]([3-9])[0-9]{9}$", message = "Incorrect mobile phone number format")

private String phone;

@NotEmpty(message = "Verification code must be filled in")

private String code;

}

At the same time, you need to add the @ Valid annotation to the relevant controller that accepts the data and the bindingresult parameter to the parameter to obtain the exception information in the verification.

(2) MD5 & salt value & bcrypt

(1) MD5

The full name of MD5 is message digest algorithm 5

MD5 message digest algorithm belongs to Hash algorithm. MD5 algorithm runs the input message of any length to generate a 128 bit message summary (32-bit alphanumeric mixed code).

In the business logic registered in the grain mall, we use org springframework. security. crypto. bcrypt. BCryptPasswordEncoder in BCryptPasswordEncoder package

BCryptPasswordEncoder will generate random salt values for us and encrypt them.

Easy to use:

//Encrypted storage of passwords // Encryption operation BCryptPasswordEncoder bCryptPasswordEncoder = new BCryptPasswordEncoder(); memberEntity.setPassword(bCryptPasswordEncoder.encode(vo.getPassword())); // Decryption operation String password = vo.getPassword(); BCryptPasswordEncoder passwordEncoder = new BCryptPasswordEncoder(); //For the maches method, the first parameter is plaintext and the second parameter is ciphertext. After calling the maches side, a bool value will be returned boolean matches = passwordEncoder.matches(password, memberEntity.getPassword());

(3) controller layer method writing

The controller of the authentication service (gulimall auth server):

@PostMapping("/regist")

public String regist(@Valid UserRegistVo vo, BindingResult result,

RedirectAttributes redirectAttributes) {

// Determine whether there are errors in form data verification

if (result.hasErrors()) {

// Encapsulate the error attribute and error information one by one

Map<String, String> errors = result.getFieldErrors().stream().collect(Collectors.toMap(FieldError::getField,

fieldError -> fieldError.getDefaultMessage(),

(fieldError1, fieldError2) -> {

return fieldError2;

}));

// The addFlashAttribute data is retrieved only once

redirectAttributes.addFlashAttribute("errors", errors);

return "redirect:http://auth.gulimall.com/reg.html";

}

//Check whether the verification code is correct

String redisCode = redisTemplate.opsForValue().get(AuthServerConstant.SMS_CODE_CACHE_PREFIX + vo.getPhone());

if (!StringUtils.isEmpty(redisCode)) {

if (vo.getCode().equalsIgnoreCase(redisCode.split("_")[0])) {

// The verification code is correct

//Delete verification code, token mechanism

redisTemplate.delete(AuthServerConstant.SMS_CODE_CACHE_PREFIX + vo.getPhone());

//If the verification code passes, call the remote service for registration

R r = memberFeignService.regist(vo);

if (r.getCode() == 0) {

//success

// Registration succeeded, request directed from to login page

return "redirect:http://auth.gulimall.com/login.html";

} else {

//fail

Map<String, String> errors = new HashMap<>();

errors.put("msg", r.getData(new TypeReference<String>() {

}));

redirectAttributes.addFlashAttribute("errors", errors);

return "redirect:http://auth.gulimall.com/reg.html";

}

} else {

Map<String, String> errors = new HashMap<>();

errors.put("code", "Verification code error");

redirectAttributes.addFlashAttribute("errors", errors);

return "redirect:http://auth.gulimall.com/reg.html";

}

} else {

Map<String, String> errors = new HashMap<>();

errors.put("code", "Verification code expired");

redirectAttributes.addFlashAttribute("errors", errors);

return "redirect:http://auth.gulimall.com/reg.html";

}

}

controller of gulimall member:

@Transactional

@Override

public void regist(MemberRegistVo vo) {

MemberEntity memberEntity = new MemberEntity();

//Set default level

MemberLevelEntity levelEntity = this.baseMapper.getDefaultLevel();

memberEntity.setLevelId(levelEntity.getId());

//Set phone number

//Check whether the user name and mobile phone number are unique in advance. If not, an exception will be thrown

checkPhoneUnique(vo.getPhone());

checkUsernameUnique(vo.getUsername());

memberEntity.setMobile(vo.getPhone());

memberEntity.setUsername(vo.getUsername());

//Encrypted storage of passwords

BCryptPasswordEncoder bCryptPasswordEncoder = new BCryptPasswordEncoder();

memberEntity.setPassword(bCryptPasswordEncoder.encode(vo.getPassword()));

save(memberEntity);

}

If the operation is successful, a new piece of data will be found in the database and redirected from the registration page to the login page.

6.2.5 realization of login function

Sorting process:

First obtain the login form data from the front page, and then remotely call the member service to judge the login logic.

When using OpenFeign for remote calls, data in json format is transmitted, so a Vo object is encapsulated for data transmission. At the same time, pay attention to the Post request mode.

Finally, the logic of the member service layer is to query the data in the database according to the transmitted data, and then judge the controller layer. If the obtained data is null, the error information will be encapsulated and returned.

(1) Registration form data verification

Method of controller layer:

@PostMapping("/login")

public R login(@RequestBody MemberLoginVo vo) {

MemberEntity memberEntity = memberService.login(vo);

if (memberEntity != null) {

return R.ok();

} else {

return R.error(BizCodeEnume.LOGINACCT_PASSWORD_EXCEPTION.getCode(), BizCodeEnume.LOGINACCT_PASSWORD_EXCEPTION.getMsg());

}

}

service layer method:

@Override

public MemberEntity login(MemberLoginVo vo) {

String loginacct = vo.getLoginacct();

// Query to database

MemberEntity memberEntity = this.baseMapper.selectOne(new QueryWrapper<MemberEntity>()

.eq("username", loginacct)

.or().eq("email", loginacct)

.or().eq("mobile", loginacct));

if (memberEntity == null) {

return null;

} else {

String password = vo.getPassword();

BCryptPasswordEncoder passwordEncoder = new BCryptPasswordEncoder();

boolean matches = passwordEncoder.matches(password, memberEntity.getPassword());

if (matches) {

return memberEntity;

} else {

return null;

}

}

}

6.3 social login

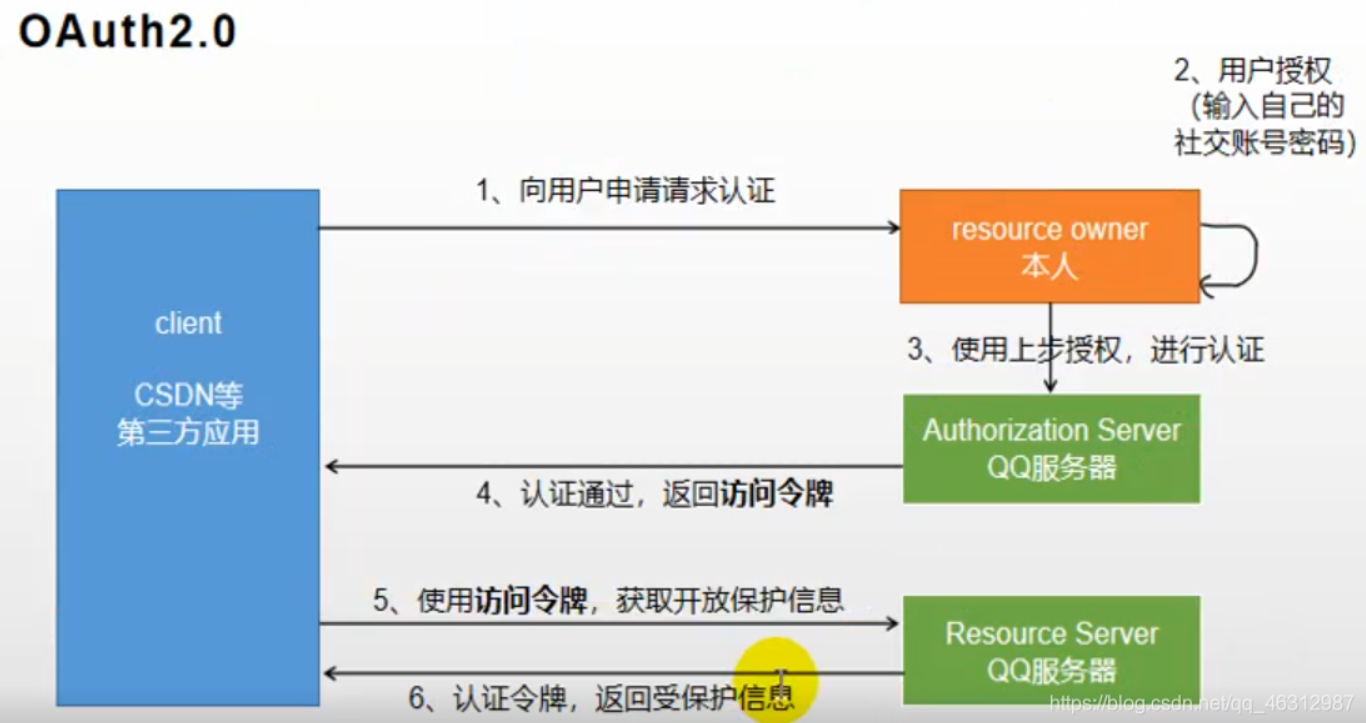

6.3.1 Oauth2.0

- brief introduction

OAuth is simply an authorization protocol. As long as the authorizer and the authorized party abide by this agreement to write code and provide services, both parties have realized OAuth mode. OAuth2.0 is a continuation of OAuth protocol. OAuth 2.0 focuses on the simplicity of client developers. Either organize the approved interaction between the resource owner and the HTTP service provider to represent the user, or allow the third-party application to obtain access rights on behalf of the user. At the same time, it provides a special certification process for Web applications, desktop applications and mobile phones, and living room equipment.

Let's say: we want to log in to CSDN, but we still don't register an account. Because it's troublesome to register an account, we want a faster way to log in, so we can choose QQ or microblog.

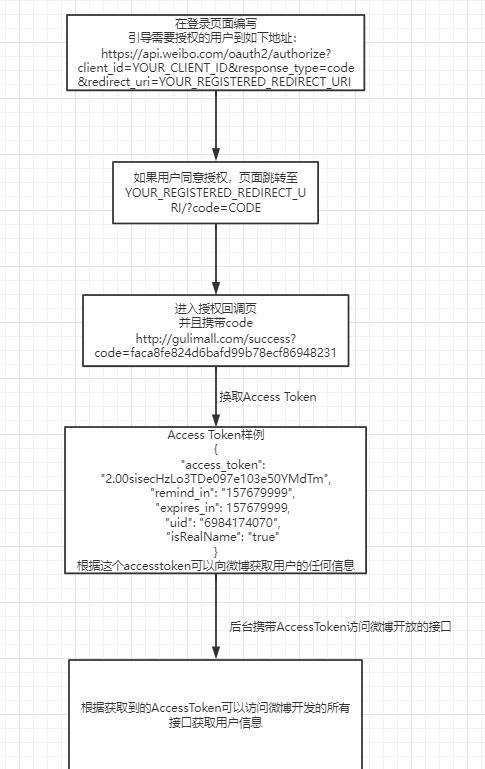

- flow chart

6.3.2 microblog authentication login

(1) Process

Microblog authentication flow chart:

The general process is to visit the login page of Weibo through our page. After successful login, Weibo will call back the page according to the authentication and authorization filled in by us, such as: http://gulimall.com/success Request redirection will be performed, and a code will be carried in the request parameters. Our own server backend will obtain the AccessToken from the microblog according to this code, plus App Key and App Secret. Then, we can view the relevant interfaces provided by the microblog through the official documents of the microblog and use the AccessToken to obtain user information.

At the same time, it should be noted that the code will expire and become invalid after use.

(2) Authentication service Controller

package com.atguigu.gulimall.auth.controller;

/**

* Third party authentication login controller

*/

@Slf4j

@Controller

public class OAuth2Controller {

@Autowired

MemberFeignService memberFeignService;

@GetMapping("/oauth2.0/weibo/success")

public String weibo(@RequestParam("code") String code) throws Exception {

Map<String, String> map = new HashMap<String, String>();

map.put("client_id", "3182348399");

map.put("client_secret", "d3ca776d45c0c4158f4b200d85cd213e");

map.put("grant_type", "authorization_code");

map.put("redirect_uri", "http://auth.gulimall.com/oauth2.0/weibo/success");

map.put("code", code);

// Get AccessToken

//Using HttpUtils

HttpResponse response = HttpUtils.doPost("https://api.weibo.com", "/oauth2/access_token", "post", new HashMap<String, String>(), new HashMap<String, String>(), map);

if (response.getStatusLine().getStatusCode() == 200) {

String json = EntityUtils.toString(response.getEntity());

// Encapsulate the obtained AccessToken and other related information into the SocialUser object

SocialUser socialUser = JSON.parseObject(json, SocialUser.class);

// If the social account is logged in for the first time, register it and call the member service remotely

R r = memberFeignService.oauth2Login(socialUser);

if (r.getCode() == 0) {

MemberRespVo memberRespVo = r.getData("data", new TypeReference<MemberRespVo>() {

});

log.info("Returned user information: {}", memberRespVo);

return "redirect:http://gulimall.com";

} else {

return "redirect:http://auth.gulimall.com/login.html";

}

} else {

return "redirect:http://auth.gulimall.com/login.html";

}

}

}

(3) Authentication login method for remote call

/**

* Social account login method

*

* @param socialUser

* @return

*/

@Override

public MemberEntity login(SocialUser socialUser) {

// Login and registration merge logic

String uid = socialUser.getUid();

MemberEntity memberEntity = this.baseMapper.selectOne(new QueryWrapper<MemberEntity>().eq("social_uid", uid));

// Judge whether the current social user has logged in to the system

if (memberEntity != null) {

MemberEntity update = new MemberEntity();

update.setId(memberEntity.getId());

update.setAccessToken(socialUser.getAccess_token());

update.setExpiresIn(socialUser.getExpires_in());

this.baseMapper.updateById(update);

memberEntity.setAccessToken(socialUser.getAccess_token());

memberEntity.setExpiresIn(socialUser.getExpires_in());

return memberEntity;

} else {

//If you don't find the user information, you have to register one yourself

MemberEntity regist = new MemberEntity();

// Relevant user information can be obtained by accessing the interface provided by HttpUtils microblog

try {

Map<String, String> query = new HashMap<String, String>();

query.put("access_token", socialUser.getAccess_token());

query.put("uid", socialUser.getUid());

//https://api.weibo.com/2/users/show.json

HttpResponse response = HttpUtils.doGet("https://api.weibo.com", "/2/users/show.json", "get", new HashMap<String, String>(), query);

// Judge whether the remote acquisition of social user information is successful

if (response.getStatusLine().getStatusCode() == 200) {

String json = EntityUtils.toString(response.getEntity());

JSONObject jsonObject = JSON.parseObject(json);

String name = jsonObject.getString("name");

String gender = jsonObject.getString("gender");

regist.setNickname(name);

regist.setGender("m".equals(gender) ? 1 : 0);

// TODO can also set more information

}

regist.setAccessToken(socialUser.getAccess_token());

regist.setExpiresIn(socialUser.getExpires_in());

regist.setSocialUid(uid);

int insert = this.baseMapper.insert(regist);

} catch (Exception e) {

e.printStackTrace();

}

return regist;

}

}

**Summary: * * first send a request to the microblog to obtain the information of the authenticated user. The obtained information has the user's unique uid. After obtaining the user information, you need to remotely call the authentication login method of the member service. In the member authentication login method, you need to judge whether the current authenticated user logs in for the first time, If you log in for the first time, you need to obtain the information of the authenticated user according to the AccessToken, and create a new MemberEntity object. This object is used to encapsulate the relevant information of the authenticated user, save it to the database, and finally return the MemberEntity. If it is not the first login, the AccessToken and expires corresponding to this user in the database must be updated_ In (AccessToken expiration time), and finally return the relevant information of the user (through the MemberEntity object).

Problem: after successful authentication login, the login information needs to be echoed on the home page of the mall, but the domain name at the time of authentication login is auth gulimall.com, the domain name on the home page of the mall is gulimall COM, which involves the problem that cross domain data cannot be shared.

6.4 distributed Session

6.4.1 Session principle

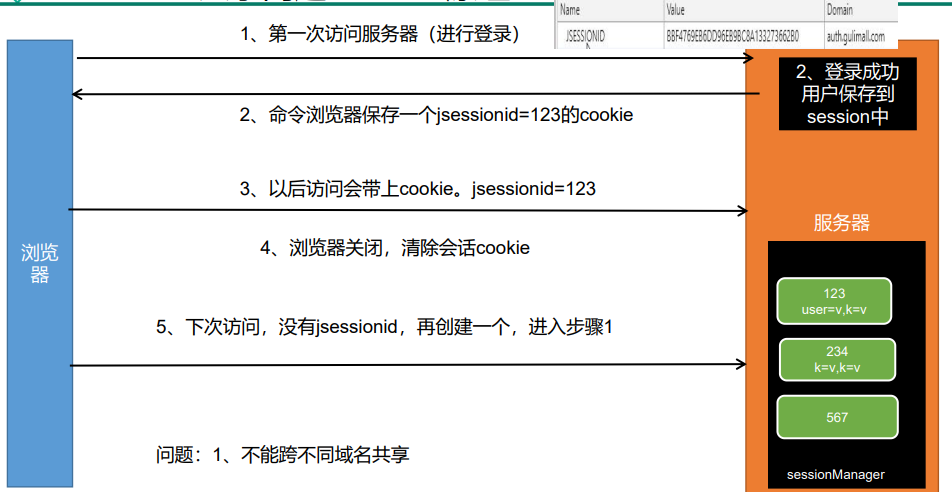

The essence of a session is an object in the server memory. You can regard a session as a Map collection. All sessions in the server are managed in the sessionManager.

6.4.2 two major problems of distributed Session

After the login operation, it will jump to the home page. However, the information of successful login needs to be echoed, so we need to use the session to store the information we need.

Session is based on cookies. When the client accesses the server, the server will issue a cookie to the client. A JSSESSIONID will be saved in the cookie. When logging in for the second time, the browser will take this JSSESSIONID to find the response session in the server's memory, so as to obtain relevant data information.

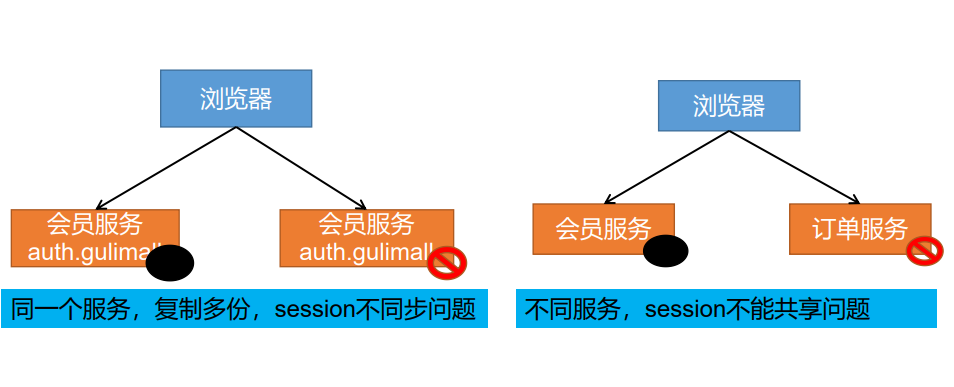

However, problems may occur in distributed scenarios:

- Problem 1: session s will not cross domains

- Problem 2: the session will fail in a cluster

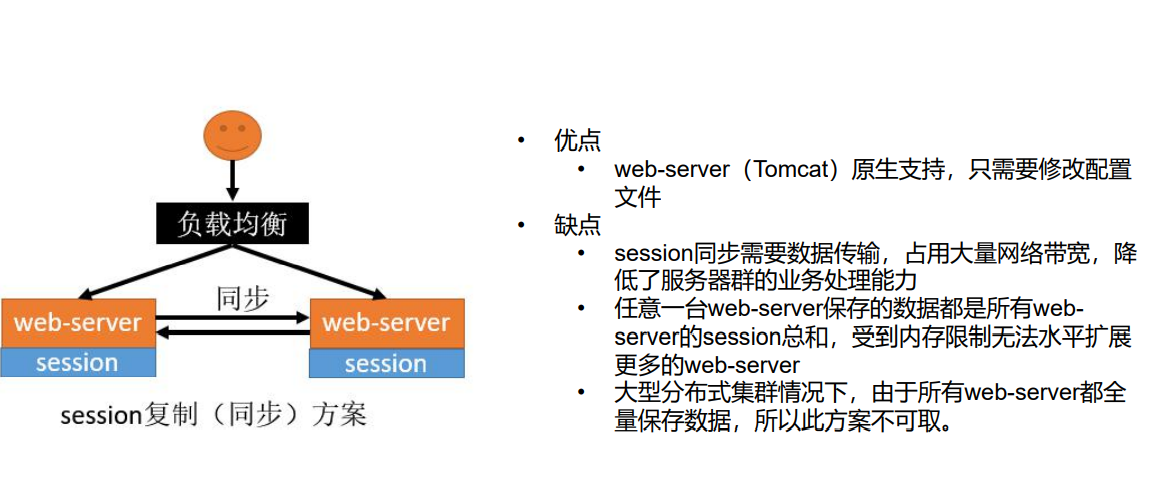

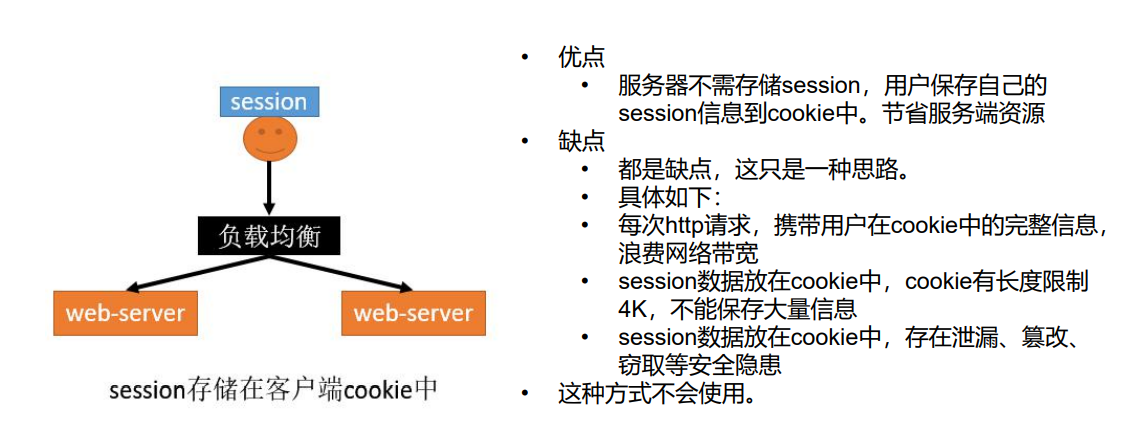

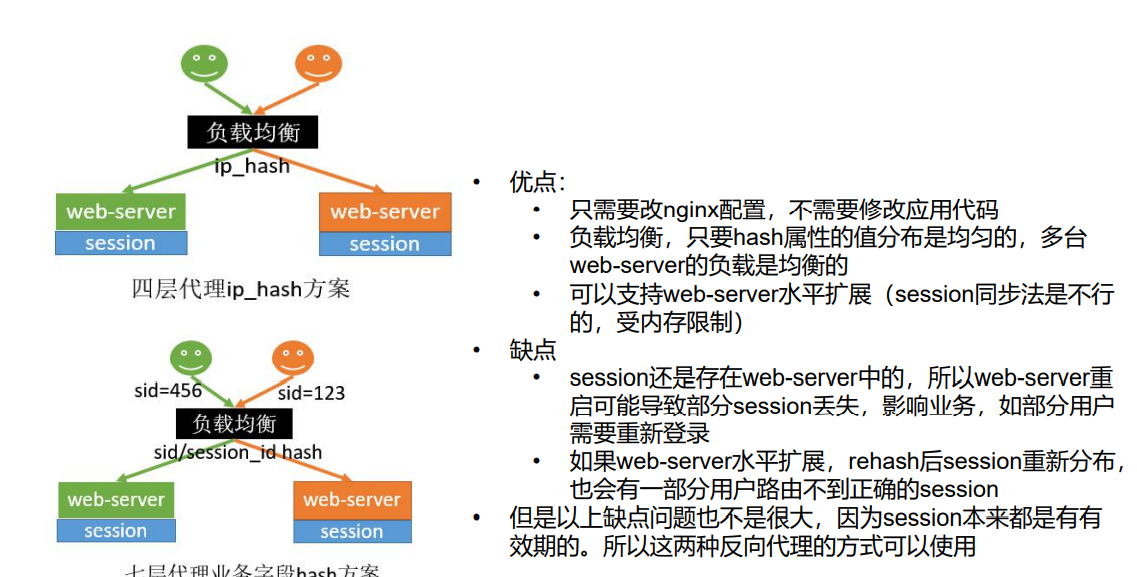

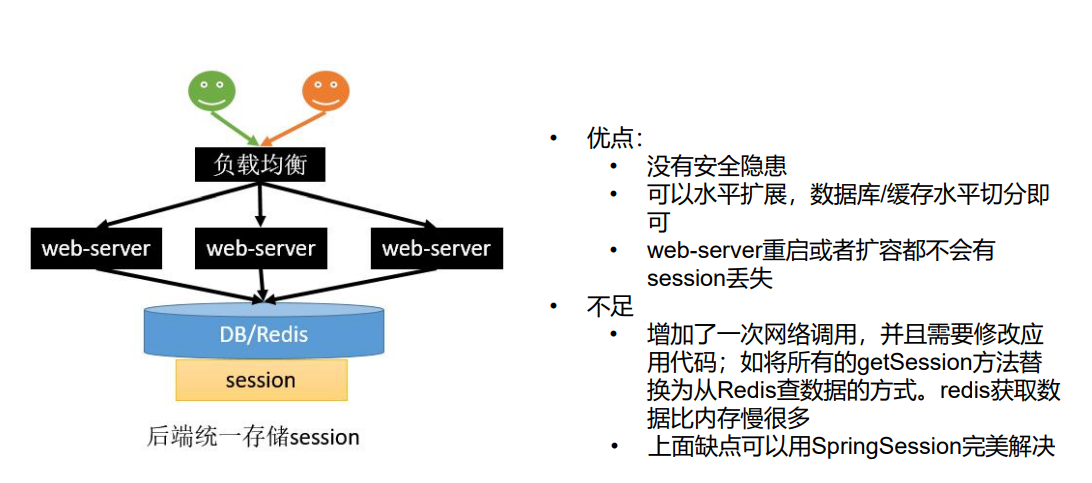

Solution 1: session replication

Solution 2: client storage

Solution 3: Hash consistency

Solution 4: use SpringSession

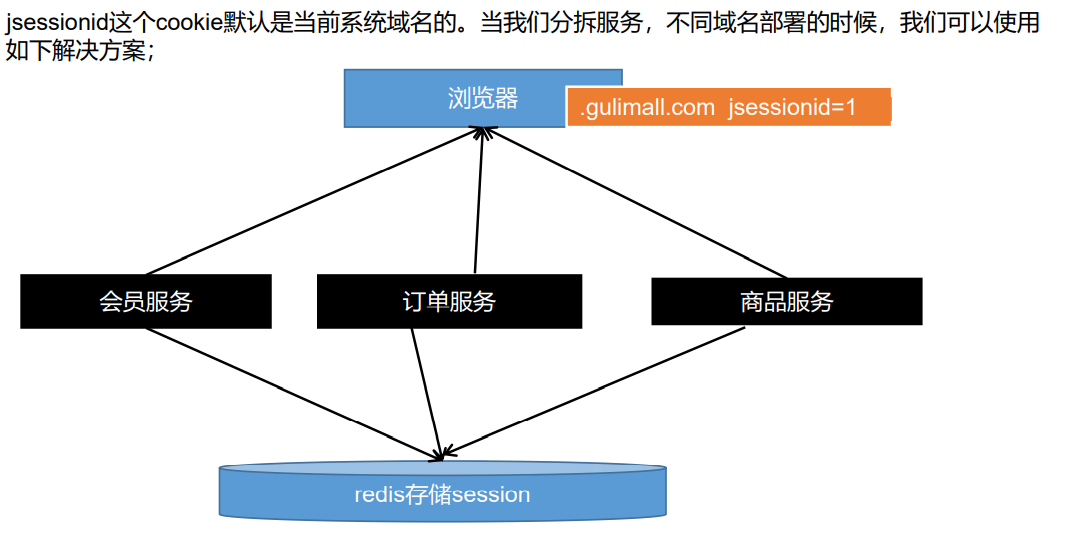

- Problem 3: sub domains are not shared

Set the jsessionid in the cookie to gulimall.com

So, auto gulimall. COM and other subdomains can share the data in the session

The browser's cookie is issued by our server by default. To customize the issued cookie content, we need to modify it manually, but this problem can be solved by introducing spring session

6.4.3 SpringSession

Refer to the blog: https://blog.csdn.net/qq_43371556/article/details/100862785

For more details, please refer to the official Spring documentation.

(1) Configure SpringSession

- Introduce dependency

<!-- integration SpringSession solve session Sharing problem -->

<dependency>

<groupId>org.springframework.session</groupId>

<artifactId>spring-session-data-redis</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

- Configure application properties

spring.session.store-type=redis # Session store type.

- Mark the annotation @ enablereredishttpsession on the configuration class

@EnableRedisHttpSession

@EnableDiscoveryClient

@EnableFeignClients

@SpringBootApplication

public class GulimallAuthServerApplication {

public static void main(String[] args) {

SpringApplication.run(GulimallAuthServerApplication.class, args);

}

}

- Write configuration class

@Configuration

public class SessionConfig {

/**

* Configure the Cookie serialization mechanism, convert the session object to JSON format, and set the domain name

* @return

*/

@Bean

public CookieSerializer cookieSerializer() {

DefaultCookieSerializer cookieSerializer = new DefaultCookieSerializer();

cookieSerializer.setDomainName("xxx.com");

cookieSerializer.setCookieName("YOUR_SESSION_NAME");

return cookieSerializer;

}

/**

* Configure serialization mechanism

* @return

*/

@Bean

public RedisSerializer<Object> springSessionDefaultRedisSerializer() {

return new GenericJackson2JsonRedisSerializer();

}

}

(2) SpringSession principle

The @ enablereredishttpsession annotation is marked on the main startup class of the service to use SpringSession

@EnableRedisHttpSession

@Retention(RetentionPolicy.RUNTIME)

@Target(ElementType.TYPE)

@Documented

@Import(RedisHttpSessionConfiguration.class)// Import configuration

@Configuration

public @interface EnableRedisHttpSession {

@The configuration class RedisHttpSessionConfiguration is imported into enablereredishttpsession

In the RedisHttpSessionConfiguration configuration class, a component is added to the IOC container: RedisOperationsSessionRepository is used to use redis operation sessions, that is, the addition, deletion, modification and query encapsulation class of sessions.

public class RedisHttpSessionConfiguration extends SpringHttpSessionConfiguration

implements BeanClassLoaderAware, EmbeddedValueResolverAware, ImportAware,

SchedulingConfigurer {

RedisHttpSessionConfiguration inherits SpringHttpSessionConfiguration,

Spring httpsessionconfiguration adds another important component to the container: SessionRepositoryFilter

SessionRepositoryFilter is a filter for session storage. SessionRepositoryFilter inherits OncePerRequestFilter, which implements the filter interface, so SessionRepositoryFilter is a servlet filter, and all requests need to pass through the filter.

The most core method, doFilterInternal, is rewritten in SessionRepositoryFilter. This method is referenced in doFilter in the parent class of SessionRepositoryFilter, that is, OncePerRequestFilter rewrites doFilter of Fliter interface, and doFilterInternal is called in doFilter to realize core functions.

doFilterInternal method:

@Override

protected void doFilterInternal(HttpServletRequest request,

HttpServletResponse response, FilterChain filterChain)

throws ServletException, IOException {

request.setAttribute(SESSION_REPOSITORY_ATTR, this.sessionRepository);

// Wrap the native request with a wrapper class SessionRepositoryRequestWrapper

SessionRepositoryRequestWrapper wrappedRequest = new SessionRepositoryRequestWrapper(

request, response, this.servletContext);

// Pack the native response with a wrapper class package SessionRepositoryResponseWrapper

SessionRepositoryResponseWrapper wrappedResponse = new SessionRepositoryResponseWrapper(

wrappedRequest, response);

try {

//Finally, the packaged request and response are released in the filter chain

filterChain.doFilter(wrappedRequest, wrappedResponse);

}

finally {

wrappedRequest.commitSession();

}

}