Download and install

Official website: https://hadoop.apache.org/

Operating system: CentOS 7

Download hadoop-3.1.3.tar.gz.

Extract it to /opt/module.

Then configure the environment variables:

```bash
#HADOOP_HOME
export HADOOP_HOME=/opt/module/hadoop-3.1.3
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin
```
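Here is a minimal sketch of this step. It assumes the variables go into a separate script under `/etc/profile.d/`. The file name `my_env.sh` is a hypothetical choice, and writing there needs root. `write_hadoop_env` is an illustrative helper, not part of Hadoop. It escapes `$PATH` and `$HADOOP_HOME` in the `PATH` lines so they are expanded when the profile is loaded, not when the file is written.

```bash
#!/bin/bash
# Hypothetical helper: append the HADOOP_HOME settings to a profile script.
# Usage: write_hadoop_env [ENV_FILE] [HADOOP_DIR]
write_hadoop_env() {
    local env_file="${1:-/etc/profile.d/my_env.sh}"   # assumed location
    local hadoop_dir="${2:-/opt/module/hadoop-3.1.3}"
    # Unquoted EOF: $hadoop_dir is expanded now, while \$PATH and
    # \$HADOOP_HOME are written literally and expanded at login.
    cat >> "$env_file" <<EOF
#HADOOP_HOME
export HADOOP_HOME=$hadoop_dir
export PATH=\$PATH:\$HADOOP_HOME/bin
export PATH=\$PATH:\$HADOOP_HOME/sbin
EOF
}
```

For example, run `write_hadoop_env` as root, then run `source /etc/profile` and check the result with `hadoop version`.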

This guide uses three servers with the hostnames hadoop102, hadoop103 and hadoop104.

Cluster configuration

1) Cluster deployment planning

Note:

- Do not install the NameNode and the SecondaryNameNode on the same server.

- The ResourceManager also uses a lot of memory, so do not put it on the same machine as the NameNode or the SecondaryNameNode.

| | hadoop102 | hadoop103 | hadoop104 |
|---|---|---|---|
| HDFS | NameNode, DataNode | DataNode | SecondaryNameNode, DataNode |
| YARN | NodeManager | ResourceManager, NodeManager | NodeManager |
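The two placement rules in the note can be checked with a script. Here is a minimal sketch. `check_plan` is a hypothetical helper that takes the hosts chosen for the NameNode, the SecondaryNameNode and the ResourceManager, in that order. It rejects any plan that puts two of them on the same machine.

```bash
#!/bin/bash
# Hypothetical helper: check the placement rules from the note above.
# Usage: check_plan NN_HOST SNN_HOST RM_HOST
check_plan() {
    local nn="$1" snn="$2" rm_host="$3"
    if [ "$nn" = "$snn" ]; then
        echo "NameNode and SecondaryNameNode both on $nn" >&2
        return 1
    fi
    if [ "$rm_host" = "$nn" ] || [ "$rm_host" = "$snn" ]; then
        echo "ResourceManager shares $rm_host with a NameNode role" >&2
        return 1
    fi
    echo "plan OK"
}

# The plan from the table above
check_plan hadoop102 hadoop104 hadoop103
```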

2) Configuration files

Hadoop configuration files come in two types: default configuration files and custom configuration files. You only need to edit a custom configuration file when you want to change a default value. Set the corresponding property there, and it overrides the default.

(1) Default configuration files:

| Default file | Location inside the Hadoop jars |
|---|---|
| core-default.xml | hadoop-common-3.1.3.jar/core-default.xml |
| hdfs-default.xml | hadoop-hdfs-3.1.3.jar/hdfs-default.xml |
| yarn-default.xml | hadoop-yarn-common-3.1.3.jar/yarn-default.xml |
| mapred-default.xml | hadoop-mapreduce-client-core-3.1.3.jar/mapred-default.xml |

(2) Custom configuration files:

The four files core-site.xml, hdfs-site.xml, yarn-site.xml and mapred-site.xml are stored in $HADOOP_HOME/etc/hadoop. Edit them there to adjust the configuration to your project's requirements.

3) Configure the cluster

(1) Core configuration file

Configure core-site.xml:

```bash
cd $HADOOP_HOME/etc/hadoop
vim core-site.xml
```

The file contents are as follows:

```xml
<?xml version="1.0" encoding="utf-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <!-- NameNode address -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop102:8020</value>
    </property>
    <!-- Hadoop data storage directory -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/module/hadoop-3.1.3/data</value>
    </property>
    <!-- Static user for logging in to the HDFS web UI: username -->
    <property>
        <name>hadoop.http.staticuser.user</name>
        <value>username</value>
    </property>
</configuration>
```

PS: Replace username with the user name you use to log in to CentOS 7.
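The `username` placeholder must match your login user, so you can generate the file instead of editing it by hand. Here is a minimal sketch, assuming you run it as the user who will operate the cluster. `write_core_site` is a hypothetical helper, and it overwrites any existing `core-site.xml` in the target directory.

```bash
#!/bin/bash
# Hypothetical helper: write core-site.xml with the login user filled in.
# Usage: write_core_site CONF_DIR [USER]
write_core_site() {
    local conf_dir="$1"
    local user="${2:-$(whoami)}"   # defaults to the current login user
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="utf-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <!-- NameNode address -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop102:8020</value>
    </property>
    <!-- Hadoop data storage directory -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/module/hadoop-3.1.3/data</value>
    </property>
    <!-- Static user for logging in to the HDFS web UI -->
    <property>
        <name>hadoop.http.staticuser.user</name>
        <value>$user</value>
    </property>
</configuration>
EOF
}
```

For example: `write_core_site "$HADOOP_HOME/etc/hadoop"`.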

(2) HDFS configuration file

Configure hdfs-site.xml:

```bash
vim hdfs-site.xml
```

The file contents are as follows:

```xml
<?xml version="1.0" encoding="utf-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <!-- NameNode (nn) web UI address -->
    <property>
        <name>dfs.namenode.http-address</name>
        <value>hadoop102:9870</value>
    </property>
    <!-- SecondaryNameNode (2nn) web UI address -->
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>hadoop104:9868</value>
    </property>
</configuration>
```

(3) YARN configuration file

Configure yarn-site.xml:

```bash
vim yarn-site.xml
```

The file contents are as follows:

```xml
<?xml version="1.0" encoding="utf-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <!-- Use the MapReduce shuffle service -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <!-- ResourceManager host -->
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop103</value>
    </property>
    <!-- Environment variables inherited by containers -->
    <property>
        <name>yarn.nodemanager.env-whitelist</name>
        <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
    </property>
</configuration>
```

(4) MapReduce configuration file

Configure mapred-site.xml:

```bash
vim mapred-site.xml
```

The file contents are as follows:

```xml
<?xml version="1.0" encoding="utf-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <!-- Run MapReduce programs on YARN -->
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>
```

4) Distribute the Hadoop configuration files to the cluster

```bash
xsync /opt/module/hadoop-3.1.3/etc/hadoop/
```
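`xsync` is not a Hadoop command. This guide uses it as a custom distribution script without defining it. If you do not have one, here is a minimal sketch that assumes passwordless SSH and `rsync` on all three hosts. The function name matches the command used above, but `XSYNC_HOSTS` and `DRY_RUN` are hypothetical settings. With `DRY_RUN=1`, the script only prints the commands it would run.

```bash
#!/bin/bash
# Hypothetical xsync: copy each given path to the same absolute location on
# every host using rsync over SSH. Assumes passwordless SSH and rsync on all
# hosts. XSYNC_HOSTS and DRY_RUN are illustrative settings, not standard ones.
XSYNC_HOSTS="${XSYNC_HOSTS:-hadoop102 hadoop103 hadoop104}"

xsync() {
    if [ $# -lt 1 ]; then
        echo "usage: xsync PATH..." >&2
        return 1
    fi
    local host path dir name
    for host in $XSYNC_HOSTS; do          # unquoted on purpose: split the host list
        for path in "$@"; do
            if [ ! -e "$path" ]; then
                echo "$path does not exist" >&2
                continue
            fi
            # Absolute parent directory (symlinks resolved) and the entry name
            dir=$(cd -P "$(dirname "$path")" && pwd)
            name=$(basename "$path")
            if [ "${DRY_RUN:-0}" = 1 ]; then
                echo "ssh $host mkdir -p $dir && rsync -av $dir/$name $host:$dir"
            else
                ssh "$host" "mkdir -p '$dir'" && rsync -av "$dir/$name" "$host:$dir"
            fi
        done
    done
}
```

To use it as a command, put it in an executable script on your PATH and add a final line `xsync "$@"`.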

5) On hadoop103 and hadoop104, check that the files were distributed

```bash
cat /opt/module/hadoop-3.1.3/etc/hadoop/core-site.xml
```

Start the whole cluster

1) Configure workers

```bash
vim /opt/module/hadoop-3.1.3/etc/hadoop/workers
```

Add the following content to the file:

```
hadoop102
hadoop103
hadoop104
```

Note: Lines in this file must not end with spaces, and the file must not contain empty lines.
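Stray spaces and blank lines are easy to miss in an editor, so you can write and check the file from the shell. Here is a minimal sketch. `write_workers` and `check_workers` are hypothetical helpers, not Hadoop tools.

```bash
#!/bin/bash
# Hypothetical helper: write one hostname per line, with no trailing spaces.
# Usage: write_workers FILE HOST...
write_workers() {
    local file="$1"; shift
    printf '%s\n' "$@" > "$file"
}

# Hypothetical helper: fail if any line ends in whitespace (including a
# Windows \r) or the file contains an empty line. Offending lines are printed.
check_workers() {
    local file="$1"
    if grep -nE '[[:space:]]+$' "$file"; then
        echo "trailing whitespace found in $file" >&2
        return 1
    fi
    if grep -nE '^$' "$file"; then
        echo "empty line found in $file" >&2
        return 1
    fi
    return 0
}
```

For example: `write_workers /opt/module/hadoop-3.1.3/etc/hadoop/workers hadoop102 hadoop103 hadoop104 && check_workers /opt/module/hadoop-3.1.3/etc/hadoop/workers`.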

Synchronize the configuration files to all nodes:

```bash
xsync /opt/module/hadoop-3.1.3/etc
```

2) Start the cluster

(1) If this is the first time the cluster is started, format the NameNode on hadoop102. Note: Formatting the NameNode generates a new cluster ID. If the DataNodes still hold data with the old ID, the NameNode and DataNode cluster IDs no longer match, and the cluster cannot find its previous data. If the cluster reports errors and you have to reformat the NameNode, first stop the NameNode and DataNode processes. Then delete the data and logs directories on all machines, and only then format.

```bash
hdfs namenode -format
```
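Reformatting over old data breaks the cluster ID, so you can run a small guard before this command. Here is a minimal sketch. It assumes that `data/` (the `hadoop.tmp.dir` set in core-site.xml) and `logs/` sit directly under the Hadoop installation directory, as in this guide. `safe_format_check` is a hypothetical helper, and it only inspects the machine it runs on.

```bash
#!/bin/bash
# Hypothetical guard: refuse to continue if old data/ or logs/ directories
# still exist under the Hadoop directory, since their cluster ID would no
# longer match a freshly formatted NameNode. Checks the local machine only.
# Usage: safe_format_check [HADOOP_DIR]
safe_format_check() {
    local home="${1:-$HADOOP_HOME}"
    local d
    if [ -z "$home" ]; then
        echo "HADOOP_HOME is not set" >&2
        return 1
    fi
    for d in data logs; do
        if [ -e "$home/$d" ]; then
            echo "refusing: $home/$d exists; stop HDFS and delete data/ and logs/ on every node first" >&2
            return 1
        fi
    done
    echo "ok to run: hdfs namenode -format"
}
```

For example, on hadoop102: `safe_format_check /opt/module/hadoop-3.1.3 && hdfs namenode -format`.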

(2) Start HDFS from the Hadoop installation directory

```bash
sbin/start-dfs.sh
```

(3) Start YARN on the node where the ResourceManager is configured (hadoop103)

```bash
sbin/start-yarn.sh
```

(4) View the HDFS NameNode web UI

(a) Open http://hadoop102:9870 in a browser

(b) Browse the data stored on HDFS

(5) View the YARN ResourceManager web UI

(a) Open http://hadoop103:8088 in a browser

(b) View the jobs running on YARN

3) Basic cluster test

(1) Upload files to the cluster

Upload a small file:

```bash
hadoop fs -mkdir /input
hadoop fs -put $HADOOP_HOME/wcinput/word.txt /input
```

Upload a large file:

```bash
hadoop fs -put /opt/software/jdk-8u212-linux-x64.tar.gz /
```

(2) Run the wordcount program from the Hadoop installation directory

```bash
hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /input /output
```
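To sanity-check the job's result, you can compute the same counts locally. Here is a minimal sketch using standard text tools, not Hadoop. It splits on whitespace, which is close to but not necessarily identical to the example job's tokenizer. It prints `word<TAB>count` sorted by word, the same layout as the job's output. `local_wordcount` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: count whitespace-separated words in a local file.
# Usage: local_wordcount FILE
local_wordcount() {
    # One word per line (runs of whitespace collapse), drop empty lines,
    # sort bytewise, count duplicates, then print "word<TAB>count".
    tr -s '[:space:]' '\n' < "$1" \
        | grep -v '^$' \
        | LC_ALL=C sort \
        | uniq -c \
        | awk '{ print $2 "\t" $1 }'
}
```

For example, compare `local_wordcount $HADOOP_HOME/wcinput/word.txt` with `hadoop fs -cat /output/part-r-00000`. `part-r-00000` is the file name a single-reducer job typically writes.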

Configure history server

To view the history of completed programs, configure the history server. The steps are as follows:

1) Configure mapred-site.xml

```bash
vim mapred-site.xml
```

Add the following properties inside the `<configuration>` element:

```xml
<!-- History server address -->
<property>
    <name>mapreduce.jobhistory.address</name>
    <value>hadoop102:10020</value>
</property>
<!-- History server web UI address -->
<property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>hadoop102:19888</value>
</property>
```

2) Distribute the configuration

```bash
xsync $HADOOP_HOME/etc/hadoop/mapred-site.xml
```

3) Start the history server on hadoop102

```bash
mapred --daemon start historyserver
```

4) Check whether the history server has started

```bash
jps
```

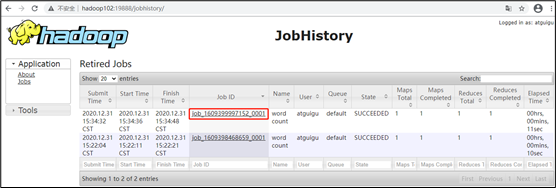
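On several nodes, it helps to check the jps output in a script rather than by eye. Here is a minimal sketch. `has_daemon` is a hypothetical helper that reads jps-style output (`PID ClassName` per line) on stdin and succeeds if the named process is listed. The history server appears as `JobHistoryServer`.

```bash
#!/bin/bash
# Hypothetical helper: succeed if the daemon named in $1 appears in
# jps-style output ("PID ClassName" per line) read from stdin.
# Usage: jps | has_daemon JobHistoryServer
has_daemon() {
    awk -v want="$1" '$2 == want { found = 1 } END { exit !found }'
}
```

For example: `jps | has_daemon JobHistoryServer && echo "history server is running"`.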

5) View JobHistory

Open http://hadoop102:19888/jobhistory in a browser.

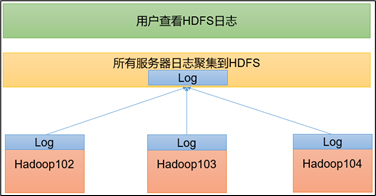

Configure log aggregation

Log aggregation: after an application finishes, its run logs are uploaded to HDFS.

Benefit: you can easily view the details of a program run, which helps with development and debugging.

Note: After enabling log aggregation, you need to restart the NodeManager, ResourceManager and HistoryServer.

The specific steps to enable log aggregation are as follows:

1) Configure yarn-site.xml

```bash
vim yarn-site.xml
```

Add the following properties inside the `<configuration>` element:

```xml
<!-- Enable log aggregation -->
<property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
</property>
<!-- Log aggregation server address -->
<property>
    <name>yarn.log.server.url</name>
    <value>http://hadoop102:19888/jobhistory/logs</value>
</property>
<!-- Keep aggregated logs for 7 days -->
<property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>604800</value>
</property>
```
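`yarn.log-aggregation.retain-seconds` is given in seconds, so 7 days is 7 × 24 × 60 × 60 = 604800. This tiny illustrative helper computes the value for other retention periods:

```bash
#!/bin/bash
# Convert a number of days to seconds for yarn.log-aggregation.retain-seconds.
days_to_seconds() {
    echo $(( $1 * 24 * 60 * 60 ))
}

days_to_seconds 7    # prints 604800
```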

2) Distribute the configuration

```bash
xsync $HADOOP_HOME/etc/hadoop/yarn-site.xml
```

3) Stop the NodeManager, ResourceManager and HistoryServer

On hadoop103, from the Hadoop installation directory:

```bash
sbin/stop-yarn.sh
```

On hadoop102, where the history server runs:

```bash
mapred --daemon stop historyserver
```

4) Start the NodeManager, ResourceManager and HistoryServer

On hadoop103:

```bash
start-yarn.sh
```

On hadoop102:

```bash
mapred --daemon start historyserver
```

5) Delete the existing output directory on HDFS

On hadoop102:

```bash
hadoop fs -rm -r /output
```

6) Run the WordCount program

On hadoop102, from the Hadoop installation directory:

```bash
hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /input /output
```

7) View log

(1) historical server address

http://hadoop102:19888/jobhistory

(2) historical task list

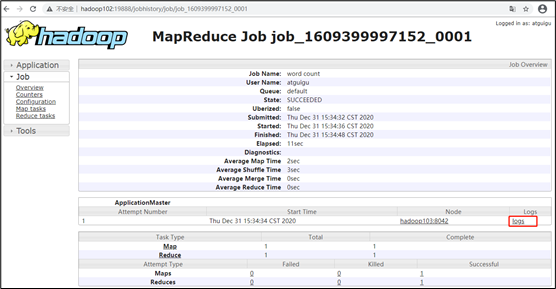

(3) view the task running log

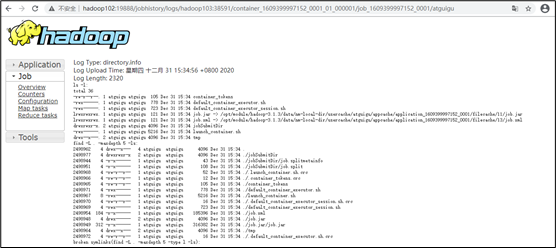

(4) details of operation log

Cluster start / stop mode summary

1) Start/stop each module as a whole (requires passwordless SSH between the nodes)

(1) Start/stop HDFS as a whole

```bash
start-dfs.sh
stop-dfs.sh
```

(2) Start/stop YARN as a whole (on hadoop103, where the ResourceManager runs)

```bash
start-yarn.sh
stop-yarn.sh
```

2) Start/stop each service component individually

(1) Start/stop HDFS components (choose start or stop and one component)

```
hdfs --daemon start|stop namenode|datanode|secondarynamenode
```

(2) Start/stop YARN components

```
yarn --daemon start|stop resourcemanager|nodemanager
```

Write common scripts for Hadoop cluster

1) Hadoop cluster start/stop script (covers HDFS, YARN and the history server): myhadoop.sh

```bash
cd /home/username/bin    # replace username with your login user
vim myhadoop.sh
```

Enter the following:

```bash
#!/bin/bash

if [ $# -lt 1 ]
then
    echo "No Args Input..."
    exit 1
fi

case $1 in
"start")
    echo " =================== starting hadoop cluster ==================="

    # HDFS and the history server run on hadoop102, YARN's ResourceManager on hadoop103
    echo " --------------- starting hdfs ---------------"
    ssh hadoop102 "/opt/module/hadoop-3.1.3/sbin/start-dfs.sh"
    echo " --------------- starting yarn ---------------"
    ssh hadoop103 "/opt/module/hadoop-3.1.3/sbin/start-yarn.sh"
    echo " --------------- starting historyserver ---------------"
    ssh hadoop102 "/opt/module/hadoop-3.1.3/bin/mapred --daemon start historyserver"
;;
"stop")
    echo " =================== stopping hadoop cluster ==================="

    # Stop in the reverse order of starting
    echo " --------------- stopping historyserver ---------------"
    ssh hadoop102 "/opt/module/hadoop-3.1.3/bin/mapred --daemon stop historyserver"
    echo " --------------- stopping yarn ---------------"
    ssh hadoop103 "/opt/module/hadoop-3.1.3/sbin/stop-yarn.sh"
    echo " --------------- stopping hdfs ---------------"
    ssh hadoop102 "/opt/module/hadoop-3.1.3/sbin/stop-dfs.sh"
;;
*)
    echo "Input Args Error..."
;;
esac
```

Save and exit, then make the script executable:

```bash
chmod +x myhadoop.sh
```

2) Script to list the Java processes on all three servers: jpsall

```bash
cd /home/username/bin
vim jpsall
```

Enter the following:

```bash
#!/bin/bash

# Show the Java processes on each node, hiding the Jps process itself
for host in hadoop102 hadoop103 hadoop104
do
    echo =============== $host ===============
    ssh $host jps "$@" | grep -v Jps
done
```

Save and exit, then make the script executable:

```bash
chmod +x jpsall
```

3) Distribute the /home/username/bin directory so the custom scripts can be used on all three machines

```bash
xsync /home/username/bin/
```

Common port numbers

| Port | Hadoop 2.x | Hadoop 3.x |
|---|---|---|
| NameNode internal communication (RPC) port | 8020 / 9000 | 8020 / 9000 / 9820 |
| NameNode HTTP UI | 50070 | 9870 |
| YARN web UI for viewing job execution | 8088 | 8088 |
| History server web UI | 19888 | 19888 |