1. copyFromLocal

$ hadoop fs -help copyFromLocal

-copyFromLocal [-f] [-p] [-l] [-d] [-t <thread count>] <localsrc> ... <dst> :

Copy files from the local file system into fs. Copying fails if the file already exists, unless the -f flag is given.

Flags:

-p Preserves access and modification times, ownership and the mode.

-f Overwrites the destination if it already exists.

-t <thread count> Number of threads to be used, default is 1.

-l Allow DataNode to lazily persist the file to disk. Forces replication factor of 1. This flag will result in reduced durability. Use with care.

-d Skip creation of temporary file(<dst>._COPYING_).

Copies local files to HDFS. If the destination file already exists, the copy fails unless you specify the -f option.

The following commands create a local directory containing a .txt file, create a directory on HDFS (similar to the Linux mkdir command), and then upload the file to that directory:

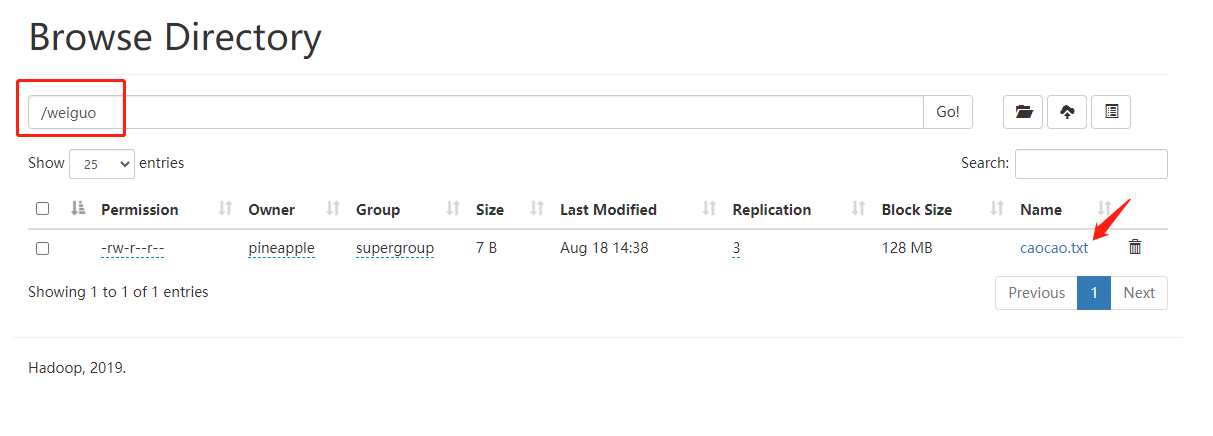

mkdir weiguo

echo caocao >> weiguo/caocao.txt

hadoop fs -mkdir /weiguo

hadoop fs -copyFromLocal weiguo/caocao.txt /weiguo

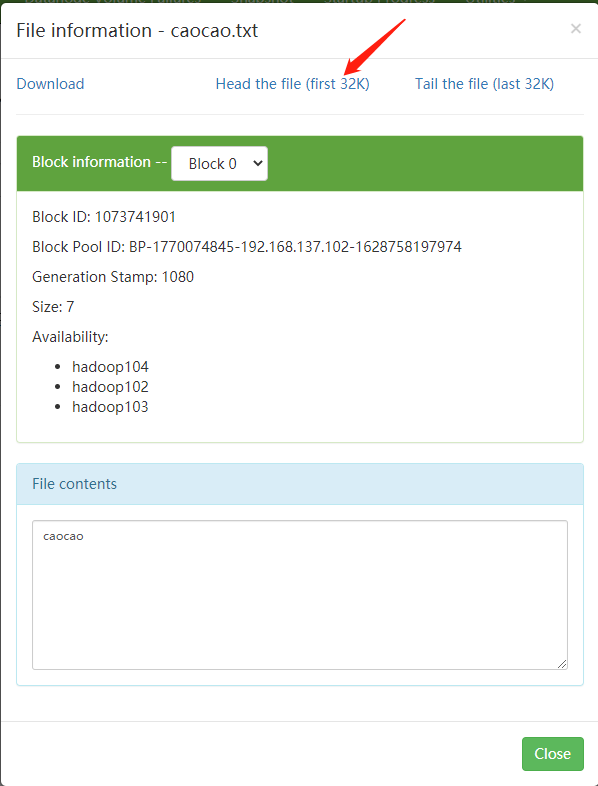

In the HDFS web UI, browse to the /weiguo directory, click caocao.txt, and then click "Head the file" to view the first 32K of the file's contents.

By default, the file's access permissions, owner, group, modification time, and other attributes are not preserved. To keep them, use the -p option (p stands for preserve):

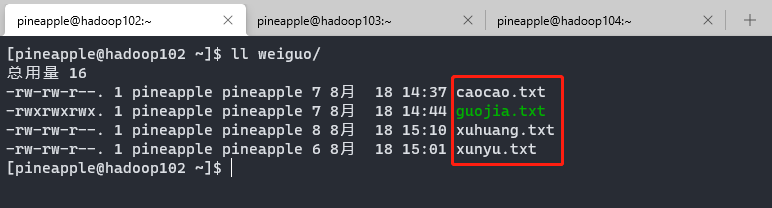

echo guojia >> weiguo/guojia.txt

chmod 777 weiguo/guojia.txt

hadoop fs -copyFromLocal -p weiguo/guojia.txt /weiguo

If the file already exists on HDFS, you can overwrite it with the -f option:

hadoop fs -copyFromLocal -p -f weiguo/guojia.txt /weiguo

The -t option specifies the number of threads to use (default: 1). It is most useful when uploading a directory that contains many files; the example below uploads a single file, so it mainly shows the syntax.

hadoop fs -copyFromLocal -p -f -t 5 weiguo/guojia.txt /weiguo
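The note above says -t pays off mainly for directories with many files. Assuming the threads split the work per file (so one file is still copied by one thread), the idea can be modeled locally with xargs -P. This is only an analogy: parallel_copy is a hypothetical helper that uses cp on the local file system, not HDFS, and xargs -P, xargs -0 and find's -maxdepth/-print0 are GNU/BSD extensions rather than POSIX.

```shell
#!/bin/sh
# Hypothetical local analogy for copyFromLocal -t (not Hadoop code).
# Copies every regular file directly inside SRC_DIR into DST_DIR,
# running up to THREADS cp processes at the same time.
# Usage: parallel_copy THREADS SRC_DIR DST_DIR
parallel_copy() {
  threads=$1
  src_dir=$2
  dst_dir=$3
  mkdir -p "$dst_dir" || return 1
  # Work is split per file: each cp handles one file, and xargs -P runs up
  # to $threads of them concurrently. A single file still uses one process.
  find "$src_dir" -maxdepth 1 -type f -print0 |
    xargs -0 -P "$threads" -I{} cp {} "$dst_dir"/
}
```

With three files and parallel_copy 3 weiguo out, all three copies can run at once; with one file, the thread count makes no difference.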

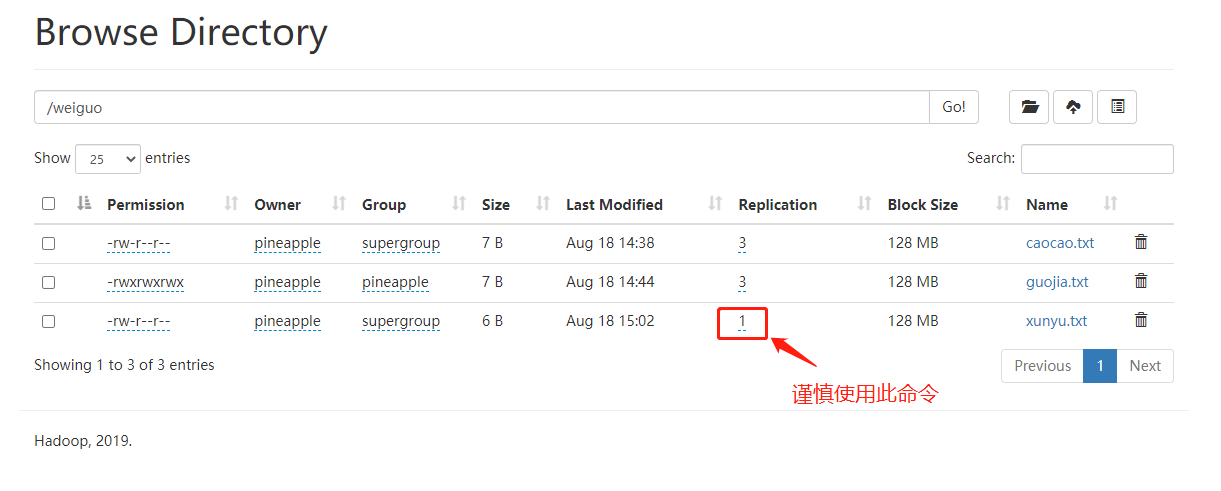

The -l option allows the DataNode (DN) to lazily persist the file to disk and forces a replication factor of 1. This reduces the file's durability, so use it with caution.

echo xunyu >> weiguo/xunyu.txt

hadoop fs -copyFromLocal -l weiguo/xunyu.txt /weiguo

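The -d option skips creating a temporary file. According to the help text, this file is the destination path with ._COPYING_ appended (for example, /weiguo/xuhuang.txt._COPYING_), so it is created next to the destination. I don't fully understand its purpose yet.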

echo xuhuang >> weiguo/xuhuang.txt

hadoop fs -copyFromLocal -d weiguo/xuhuang.txt /weiguo
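Going by the help text, the temporary file is the destination path plus the suffix ._COPYING_. A common reason for such a file is the write-then-rename pattern: data is written under a temporary name and renamed to the final name only after the copy finishes, so nobody sees a half-written destination. The sketch below is an assumption-based local model of that idea, together with the -f existence check, written in POSIX sh with cp and mv. local_copy is a hypothetical name, and this is not Hadoop's implementation.

```shell
#!/bin/sh
# Hypothetical local model of copyFromLocal's -f and -d flags (not Hadoop code).
# Usage: local_copy [-f] [-d] SRC DST
local_copy() {
  force=0
  direct=0
  # Consume options until only SRC and DST remain.
  while [ "$#" -gt 2 ]; do
    case "$1" in
      -f) force=1 ;;
      -d) direct=1 ;;
      *) echo "local_copy: unknown option: $1" >&2; return 2 ;;
    esac
    shift
  done
  if [ "$#" -ne 2 ]; then
    echo "usage: local_copy [-f] [-d] SRC DST" >&2
    return 2
  fi
  src=$1
  dst=$2
  # Without -f, refuse to replace an existing destination.
  if [ -e "$dst" ] && [ "$force" -ne 1 ]; then
    echo "local_copy: $dst: File exists" >&2
    return 1
  fi
  if [ "$direct" -eq 1 ]; then
    # -d: write straight to DST; a partially written file would be visible.
    cp "$src" "$dst"
  else
    # Default: write to DST._COPYING_ (same directory as DST), then rename it.
    tmp="$dst._COPYING_"
    if cp "$src" "$tmp"; then
      mv -f "$tmp" "$dst"
    else
      rm -f "$tmp"
      return 1
    fi
  fi
}
```

For example, running local_copy a.txt b.txt a second time fails because b.txt exists, while local_copy -f -d a.txt b.txt overwrites b.txt without ever creating b.txt._COPYING_.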

The summary is as follows:

| Option | Description |
|---|---|
| -p | Preserves access and modification times, ownership, and mode |
| -f | Overwrites the destination if it already exists |
| -t | Number of threads to use (default: 1) |
| -l | Allows the DataNode to lazily persist the file to disk and forces a replication factor of 1. Reduces durability, so use with caution. |
| -d | Skips creating the temporary file (dst._COPYING_) |

2. put

$ hadoop fs -help put

-put [-f] [-p] [-l] [-d] <localsrc> ... <dst> :

Copy files from the local file system into fs. Copying fails if the file already exists, unless the -f flag is given.

Flags:

-p Preserves access and modification times, ownership and the mode.

-f Overwrites the destination if it already exists.

-l Allow DataNode to lazily persist the file to disk. Forces replication factor of 1. This flag will result in reduced durability. Use with care.

-d Skip creation of temporary file(<dst>._COPYING_).

It is similar to copyFromLocal above, except that the help output shown here has no -t option, so put cannot upload with multiple threads. In practice, put is the one more commonly used in everyday shell operations.

3. moveFromLocal

$ hadoop fs -help moveFromLocal

-moveFromLocal <localsrc> ... <dst> :

Same as -put, except that the source is deleted after it's copied.

Like put and copyFromLocal, this command uploads local files to HDFS. The difference is that the local files are deleted after they are copied.

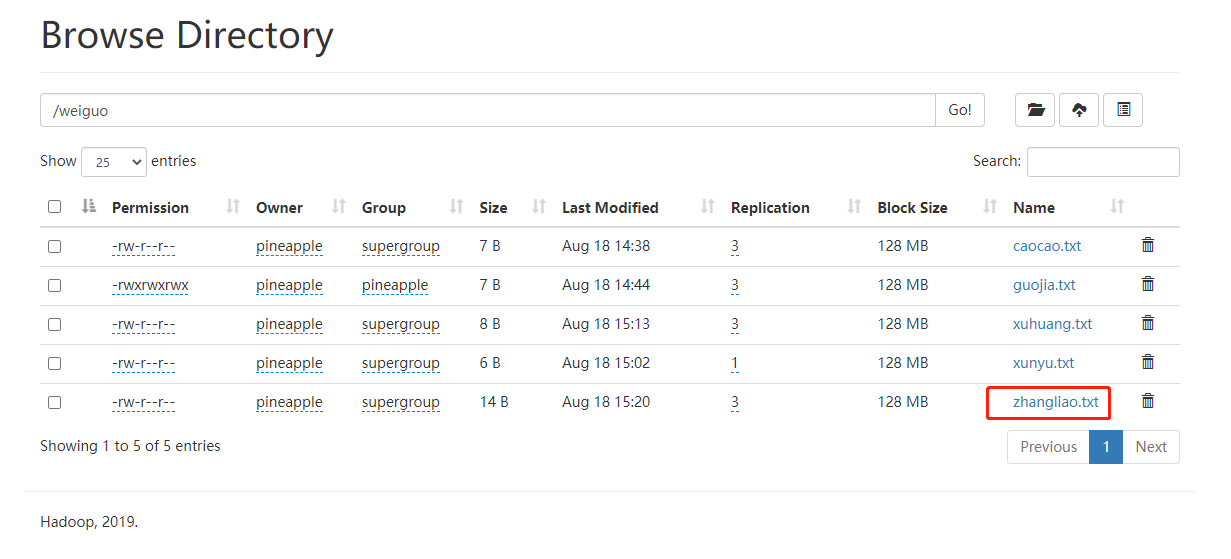

echo zhangliao.txt >> weiguo/zhangliao.txt

hadoop fs -moveFromLocal weiguo/zhangliao.txt /weiguo
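The help text's rule "the source is deleted after it's copied" can be modeled locally in one line: delete the source only if the copy succeeded, so a failed upload never loses the local file. move_after_copy is a hypothetical helper that uses cp and rm on the local file system; it is an illustration of the ordering, not how Hadoop implements moveFromLocal.

```shell
#!/bin/sh
# Hypothetical local model of moveFromLocal (not Hadoop code):
# copy SRC to DST, then delete SRC only if the copy succeeded.
# Usage: move_after_copy SRC DST
move_after_copy() {
  src=$1
  dst=$2
  cp "$src" "$dst" && rm -f "$src"
}
```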

4. appendToFile

$ hadoop fs -help appendToFile

-appendToFile <localsrc> ... <dst> :

Appends the contents of all the given local files to the given dst file. The dst file will be created if it does not exist. If <localSrc> is -, then the input is read from stdin.

Appends all specified local files to the end of the specified HDFS file.
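On a local file system, the semantics in the help text (append every source in order, create dst if it is missing, treat - as stdin) map closely onto cat with >>. The hypothetical append_to_file helper below is only an analogy for intuition, not Hadoop code; unlike appendToFile, it takes the destination first, which keeps the POSIX sh simple.

```shell
#!/bin/sh
# Hypothetical local analogy for appendToFile (not Hadoop code).
# Usage: append_to_file DST SRC...   (note: DST comes first here)
append_to_file() {
  dst=$1
  shift
  # cat writes every SRC in order; a SRC of "-" means standard input.
  # ">>" appends to DST and creates it if it does not exist yet.
  cat "$@" >> "$dst"
}
```

For instance, echo zhaoyun | append_to_file zhaoyun.txt - creates zhaoyun.txt from standard input, much like the stdin example further down.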

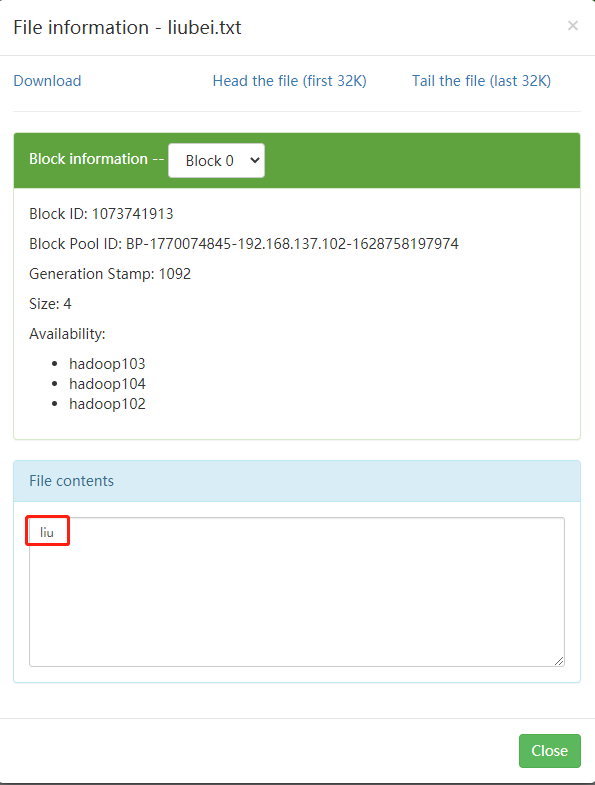

mkdir shuguo

echo liu >> shuguo/liu.txt

echo bei >> shuguo/bei.txt

hadoop fs -mkdir /shuguo

hadoop fs -put shuguo/liu.txt /shuguo/liubei.txt

The original liubei.txt file:

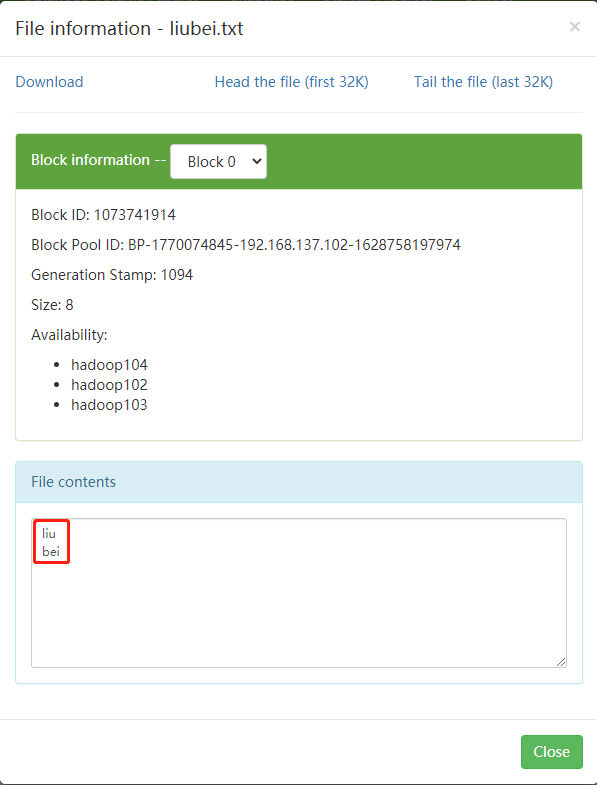

hadoop fs -appendToFile shuguo/bei.txt /shuguo/liubei.txt

liubei.txt after appending:

If the HDFS file does not exist, it is created. If the local source is given as -, the input is read from standard input (stdin).

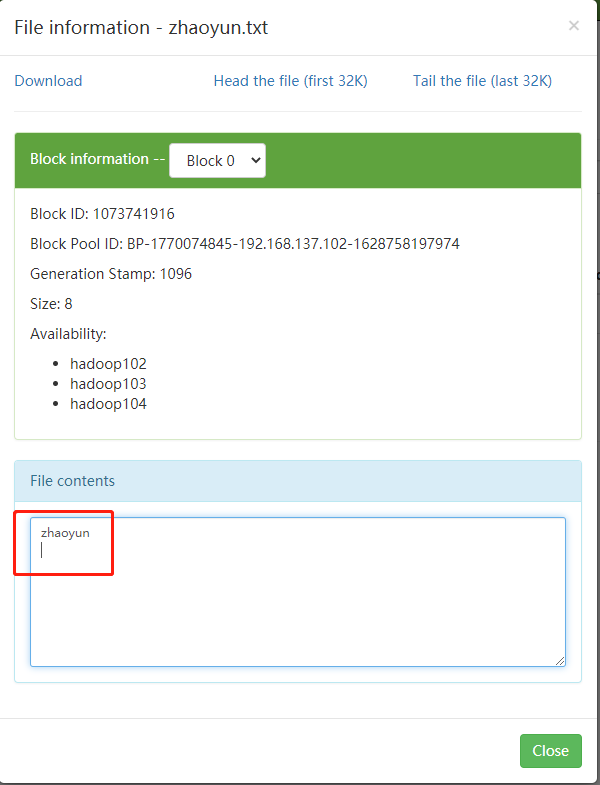

hadoop fs -appendToFile - /shuguo/zhaoyun.txt

zhaoyun

Type the input (here, zhaoyun), then press CTRL + C to exit.

File contents: