Contents

Blocking and wakeup of MessageQueue

The inspiration of Handler to our developers

Handler common interview questions

Preface

For a developer, reading source code is a required course. While studying source code, we learn the design patterns and the development habits of its authors. When I read source code, I follow a saying of Guo Lin: "unravel it thread by thread, and stop once the point is made". We don't need to go deep into every line of code. Usually it is enough to know what a line does.

Have you noticed that we rarely run into multithreaded concurrency problems in Android development? This is thanks to Handler, the inter-thread communication tool provided by Android, so we need to know how it achieves cross-thread communication.

Use of Handler

Let's first look at how to use Handler, and then analyze its core source code.

import androidx.annotation.NonNull;

import androidx.appcompat.app.AppCompatActivity;

import android.annotation.SuppressLint;

import android.os.Bundle;

import android.os.Handler;

import android.os.Message;

import android.util.Log;

import android.widget.TextView;

public class MainActivity extends AppCompatActivity {

private static final String TAG = "MainActivity";

private TextView mTvMain;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

initView();

}

@Override

protected void onStart() {

super.onStart();

new Thread(() -> {

try {

// Simulate time-consuming operations

Thread.sleep(5000);

// Get Message instance object

Message msg = Message.obtain();

// Set the identifier (what) of the Message object

msg.what = Constants.MSG_UPDATE_TEXT;

// Send the message through the Handler

handler.sendMessage(msg);

} catch (InterruptedException e) {

Log.e(TAG, "onStart: InterruptedException");

}

}).start();

}

private void initView() {

mTvMain = findViewById(R.id.tv_main);

}

@SuppressLint("HandlerLeak")

private Handler handler = new Handler() {

@Override

public void handleMessage(@NonNull Message msg) {

switch (msg.what) {

case Constants.MSG_UPDATE_TEXT:

mTvMain.setText("Update operation completed");

}

}

};

}

Next, we will analyze the Handler source code based on the example above, starting with Handler initialization.

Handler initialization

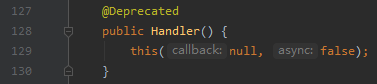

Eventually, the following constructor is called:

/*

* Set this flag to true to detect anonymous, local, or member classes that extend this Handler class. Such classes may cause leaks.

*/

private static final boolean FIND_POTENTIAL_LEAKS = false;

// If Looper.prepare() has not been called, sThreadLocal.get() returns null

// @UnsupportedAppUsage: not available to us app developers

static final ThreadLocal<Looper> sThreadLocal = new ThreadLocal<Looper>();

final Looper mLooper;

final MessageQueue mQueue;

// @UnsupportedAppUsage: not available to us app developers

final Handler.Callback mCallback;

final boolean mAsynchronous;

public Handler(@Nullable Handler.Callback callback, boolean async) {

if (FIND_POTENTIAL_LEAKS) {

final Class<? extends Handler> klass = getClass();

// If the Handler subclass we create is an anonymous class, a member class (inner class), or a local class (declared inside a method), and it is not static,

// there is a risk of a memory leak.

if ((klass.isAnonymousClass() || klass.isMemberClass() || klass.isLocalClass()) &&

(klass.getModifiers() & Modifier.STATIC) == 0) {

Log.w(TAG, "The following Handler class should be static or leaks might occur: " +

klass.getCanonicalName());

}

}

mLooper = Looper.myLooper();

if (mLooper == null) {

throw new RuntimeException(

"Can't create handler inside thread " + Thread.currentThread()

+ " that has not called Looper.prepare()");

}

mQueue = mLooper.mQueue;

mCallback = callback;

mAsynchronous = async;

}

/**

* Returns the Looper object associated with the current thread. null if the calling thread is not associated with Looper.

*

* @return Looper object

*/

public static @android.annotation.Nullable

Looper myLooper() {

return sThreadLocal.get();

}

The line to focus on here is mLooper = Looper.myLooper(); myLooper() is implemented as sThreadLocal.get(), so we need to talk about ThreadLocal. For details, please refer to another blog post: Fundamentals of concurrent programming (II) -- Analysis of the basic principles of ThreadLocal and CAS

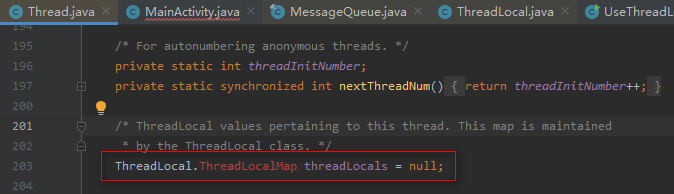

ThreadLocal is just what its name suggests: a thread-local copy of a variable. Every Thread holds a member of type ThreadLocal.ThreadLocalMap.

Let's look at the get method itself:

/**

* Returns the value stored in the current thread's ThreadLocalMap under this ThreadLocal as the key. If the map or the entry does not exist,

* the value is first initialized by calling {@link #initialValue}.

*

* @return Returns the value corresponding to ThreadLocal in the current thread

*/

public T get() {

// Get current thread

Thread t = Thread.currentThread();

// Get the current thread's ThreadLocal.ThreadLocalMap member

ThreadLocalMap map = getMap(t);

// If the map is not empty

if (map != null) {

// Get Entry through ThreadLocal

ThreadLocalMap.Entry e = map.getEntry(this);// 'this' is the ThreadLocal instance, which serves as the key

// If the Entry is not empty, return the value of its value attribute

if (e != null) {

@SuppressWarnings("unchecked")

T result = (T) e.value;

return result;

}

}

//Otherwise, set the initial value

return setInitialValue();

}

The code above uses ThreadLocalMap.Entry:

The constructor of Entry takes a ThreadLocal and an Object, and Entry is an inner class of ThreadLocalMap. We can therefore understand ThreadLocalMap as a key-value structure (a Map) with the ThreadLocal as the key and an arbitrary Object as the value, in one-to-one correspondence.
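The per-thread isolation that this key-value structure gives can be seen with plain java.lang.ThreadLocal, without any Android classes. The following is only a minimal sketch; the class name ThreadLocalIsolationDemo and the helper readOnWorker are made up for illustration.

```java
import java.util.concurrent.atomic.AtomicReference;

public class ThreadLocalIsolationDemo {
    // One ThreadLocal instance shared by all threads; it acts as the key.
    static final ThreadLocal<String> NAME = new ThreadLocal<>();

    /** Sets a value on a worker thread and returns what that worker read back. */
    static String readOnWorker(String value) {
        AtomicReference<String> seen = new AtomicReference<>();
        Thread worker = new Thread(() -> {
            NAME.set(value);       // stored in the worker thread's own ThreadLocalMap
            seen.set(NAME.get());  // read back from the same map
        });
        worker.start();
        try {
            worker.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return seen.get();
    }

    public static void main(String[] args) {
        NAME.set("main");
        System.out.println(readOnWorker("worker")); // worker
        System.out.println(NAME.get());             // main
    }
}
```

The worker's set() does not disturb the value seen by the main thread, because each thread looks the same key up in its own map.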

Since there is a get method, there is also a set method. We find that set is called in Looper.prepare():

static final ThreadLocal<Looper> sThreadLocal = new ThreadLocal<Looper>();

private static void prepare(boolean quitAllowed) {

// If a Looper has already been set for the current thread, throw an exception; this ensures that a thread has only one Looper

if (sThreadLocal.get() != null) {

throw new RuntimeException("Only one Looper may be created per thread");

}

// Otherwise, new a Looper object and set it to the ThreadLocal of the current thread

sThreadLocal.set(new Looper(quitAllowed));

}

// Construction method of Looper

private Looper(boolean quitAllowed) {

mQueue = new MessageQueue(quitAllowed);

mThread = Thread.currentThread();

}

The code above first checks sThreadLocal.get(). If a Looper has already been set for the current thread, an exception is thrown; otherwise a new Looper is stored. The value can therefore be assigned only once per thread, which shows that Thread and Looper correspond one-to-one. Moreover, a global search of the Android source code shows that the MessageQueue constructor is invoked in only one place, the Looper constructor: new MessageQueue(quitAllowed). The MessageQueue constructor is package-private, so we ordinary developers cannot call it. Each Looper therefore creates exactly one MessageQueue, which gives the correspondence between Looper and MessageQueue. Combined with our study of ThreadLocal above, where each Thread has its own ThreadLocalMap and sThreadLocal is a single static key, this shows that in Android's Handler mechanism Thread, Looper and MessageQueue correspond one-to-one.
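The "set only once per thread" rule can be modelled in plain Java. This is a simplified sketch and not the Android class: MiniLooper is a hypothetical name, and it keeps only the ThreadLocal check from prepare().

```java
public class MiniLooper {
    // Same idea as Looper.sThreadLocal: one static key, one value per thread.
    private static final ThreadLocal<MiniLooper> sThreadLocal = new ThreadLocal<>();

    final Thread thread = Thread.currentThread();

    private MiniLooper() { }

    /** Binds a new MiniLooper to the calling thread; a second call on the same thread fails. */
    public static void prepare() {
        if (sThreadLocal.get() != null) {
            throw new RuntimeException("Only one MiniLooper may be created per thread");
        }
        sThreadLocal.set(new MiniLooper());
    }

    /** Returns the calling thread's MiniLooper, or null if prepare() was never called on it. */
    public static MiniLooper myLooper() {
        return sThreadLocal.get();
    }

    public static void main(String[] args) {
        prepare();
        System.out.println(myLooper().thread == Thread.currentThread()); // true
        try {
            prepare(); // second call on the same thread
        } catch (RuntimeException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Because the constructor is private and prepare() refuses a second call, a thread can never end up with two instances.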

Sending messages

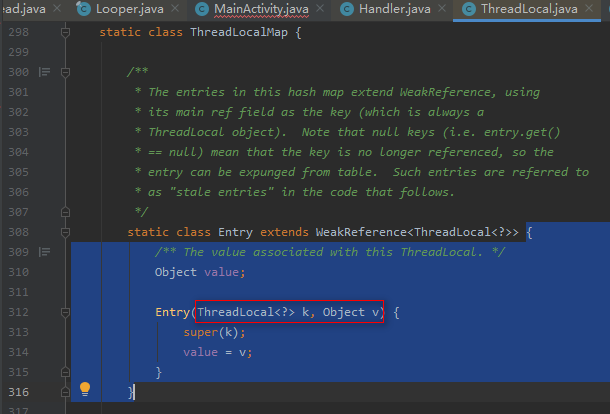

Main methods of Handler

Call process:

Handler.sendMessage() --> Handler.sendMessageDelayed()--> Handler.sendMessageAtTime()--> Handler.enqueueMessage()--> MessageQueue.enqueueMessage()

As you can see, MessageQueue.enqueueMessage() is eventually called to insert the Message into the queue.
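One detail of this call chain is worth spelling out: the delay passed to sendMessageDelayed() is turned into an absolute time on the uptime clock before the message reaches the queue, and a negative delay is treated as 0. A minimal plain-Java sketch of that arithmetic follows; computeWhen is a hypothetical helper, and the current uptime is passed in as a parameter instead of being read from SystemClock.

```java
public class WhenSketch {
    /** Computes the absolute delivery time that ends up in msg.when. */
    static long computeWhen(long nowUptimeMillis, long delayMillis) {
        if (delayMillis < 0) {
            delayMillis = 0; // negative delays are clamped to "now"
        }
        return nowUptimeMillis + delayMillis;
    }

    public static void main(String[] args) {
        System.out.println(computeWhen(10_000, 0));   // 10000
        System.out.println(computeWhen(10_000, 500)); // 10500
        System.out.println(computeWhen(10_000, -50)); // 10000
    }
}
```

This is why the queue can order messages simply by comparing their when values.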

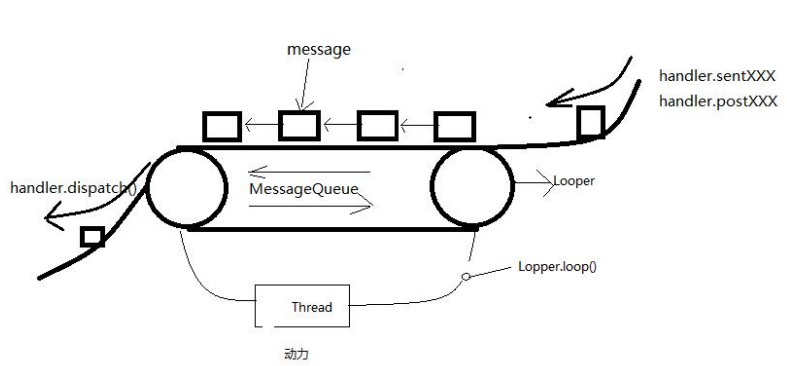

Handler workflow diagram


Next, let's analyze the enqueueMessage method:

Message next; // Member of Message: the next node in the list

Message mMessages;// Member of MessageQueue: the head of the message queue

boolean enqueueMessage(Message msg, long when) {

// Omit code that is not very important

// Locking ensures thread safety and prevents multiple threads from inserting messages into MessageQueue at the same time

synchronized (this) {

// Omit code that is not very important

// Record when the message should be processed: an absolute time on the uptime clock, not a relative delay

msg.when = when;

// Assign the queue head of the message queue to p

Message p = mMessages;

// Whether the message queue needs to be woken up

boolean needWake;

// At first the message queue is empty. If there is no message in the queue, or the time of the new message is 0, the new message becomes the head

// and its next points to the old head (possibly null);

// likewise, if the new message is due earlier than the current head, it is placed in front of it.

if (p == null || when == 0 || when < p.when) {

// The next message of the inserted message points to p, which may be null

msg.next = p;

// Assign the message we want to insert to the queue head of the message queue to realize the operation of inserting the queue

mMessages = msg;

// The new message is now the head. If the queue is blocked (mBlocked is true, e.g. because it was empty), it must be woken up

needWake = mBlocked;

}

// Otherwise the queue already holds messages and the new one belongs somewhere after the head

else {

// Insert in the middle of the queue. Usually, we don't have to wake up the message queue unless there is a barrier at the head of the queue and the message is the earliest asynchronous message in the queue.

needWake = mBlocked && p.target == null && msg.isAsynchronous();

Message prev;

// Walk the list, comparing the message to insert with the messages already in the queue

for (;;) {

// Remember the message just compared as the previous message

prev = p;

// Advance p to the next message

p = p.next;

// Stop when the end of the queue is reached (p is null),

// or as soon as the message to insert is due earlier than the message being compared.

if (p == null || when < p.when) {

break;

}

}

// Insert message

msg.next = p; // invariant: p == prev.next

prev.next = msg;

}

}

return true;

}

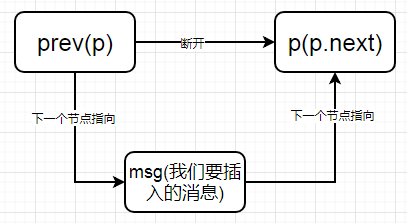

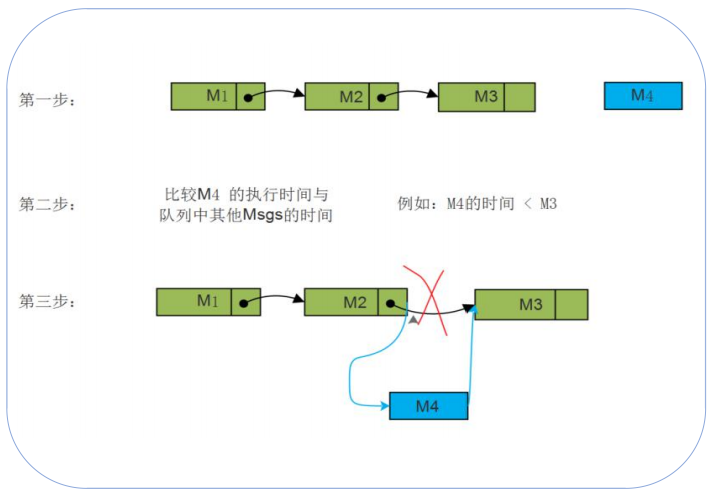

Message queue diagram


Processing messages

That covers enqueuing. Now let's look at dequeuing, which is implemented by Looper.loop():

/**

* Runs the message queue in the current thread. Be sure to call {@link #quit()} to end the loop. Code that is not needed for this analysis (or is hard to follow) has been omitted

*/

public static void loop() {

// Gets the Looper object of the current thread

final Looper me = myLooper();

if (me == null) {

// Beginners often run into this exception because they forget to call Looper.prepare() in a child thread

throw new RuntimeException("No Looper; Looper.prepare() wasn't called on this thread.");

}

// Gets the MessageQueue object of the current thread

final MessageQueue queue = me.mQueue;

for (; ; ) {

// Take out the message - out of the team

Message msg = queue.next(); // May block. When there is no message in the MessageQueue, nativePollOnce(ptr, -1) is called and the thread waits indefinitely

if (msg == null) {

// A null message means the message queue is quitting

return;

}

try {

// target is the Handler member in the Message

msg.target.dispatchMessage(msg);

} catch (Exception exception) {

throw exception; // simplified; the exception propagates to the caller rather than being swallowed

} finally { }

// Recycle messages that may be in use

msg.recycleUnchecked();

}

}

The loop method contains an endless loop that keeps fetching messages through MessageQueue.next():

/**

* Dequeues a message. Again, N lines of less important (hard to follow) code are omitted

*

* Try to retrieve the next message and return if found.

*

* @return Returns the message to be processed

*/

private final ArrayList<IdleHandler> mIdleHandlers = new ArrayList<IdleHandler>();

Message next() {

int pendingIdleHandlerCount = -1; // -1 only during first iteration

int nextPollTimeoutMillis = 0;

for (;;) {

// Call the native method to sleep for nextPollTimeoutMillis milliseconds. If the value is -1, it waits indefinitely

nativePollOnce(ptr, nextPollTimeoutMillis);

synchronized (this) {

// Milliseconds since boot, not counting time spent in deep sleep.

final long now = SystemClock.uptimeMillis();

Message prevMsg = null;

Message msg = mMessages;// mMessages can be regarded as the queue head of the message queue

if (msg != null && msg.target == null) {

// Blocked by a barrier, find the next asynchronous message in the queue.

do {

prevMsg = msg;

msg = msg.next;

} while (msg != null && !msg.isAsynchronous());

}

if (msg != null) {

if (now < msg.when) {

// The next message is not ready yet, set the delay time to wake up when it is ready.

nextPollTimeoutMillis = (int) Math.min(msg.when - now, Integer.MAX_VALUE);

} else {

// The next message is ready for processing and mBlocked is set to false

mBlocked = false;

if (prevMsg != null) {

prevMsg.next = msg.next;

} else {

mMessages = msg.next;

}

msg.next = null;

// Return the message that is ready to be processed

return msg;

}

} else {

// No message

nextPollTimeoutMillis = -1;

}

if (pendingIdleHandlerCount < 0

&& (mMessages == null || now < mMessages.when)) {

// mIdleHandlers is an ArrayList that is filled through addIdleHandler(). We usually do not call that method,

// so in most cases mIdleHandlers.size() is 0

pendingIdleHandlerCount = mIdleHandlers.size();

}

if (pendingIdleHandlerCount <= 0) {

// No idle handlers to run. Loop and wait some more. In most cases the thread stays blocked here, which explains why,

// once our Activity is displayed, the app does not exit: the main method of ActivityThread calls Looper.loop(),

// which keeps the main thread waiting for messages.

mBlocked = true;

continue;

}

}

}

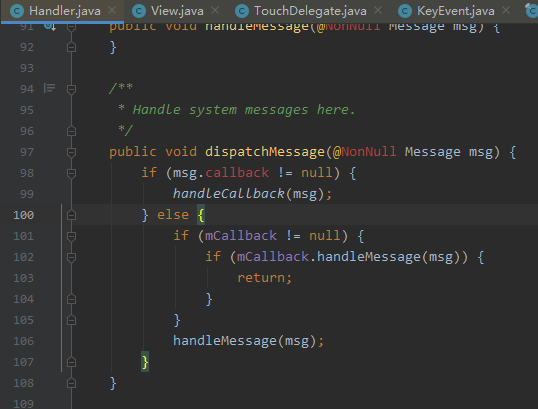

}Finally, call} handler Dispatchmessage(), and handler Dispatchmessage() will call back handleMessage().

From the source code analysis of MessageQueue, we can conclude that it is a priority queue built on a singly linked list. Why?

Singly linked list: the next member of Message is itself a Message, so the messages form a singly linked list.

Priority: every message enters the queue through enqueueMessage(Message msg, long when). Here, when is the time at which the message is due (the send time plus any delay), and messages are kept and processed in ascending order of when.

Queue: messages with the same when follow the first in, first out rule.
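The three properties above can be reproduced with a small plain-Java list. This is a sketch of the insertion logic only, under stated simplifications: SortedMessageList and Node are hypothetical names, and the when == 0 shortcut, barriers and wakeups of the real MessageQueue are left out.

```java
import java.util.ArrayList;
import java.util.List;

public class SortedMessageList {
    static final class Node {
        final String name;
        final long when;
        Node next; // singly linked, like Message.next

        Node(String name, long when) {
            this.name = name;
            this.when = when;
        }
    }

    private Node head; // plays the role of mMessages

    /** Inserts in ascending order of 'when'; equal times keep insertion order (FIFO). */
    public synchronized void enqueue(String name, long when) {
        Node msg = new Node(name, when);
        Node p = head;
        if (p == null || when < p.when) {
            // Empty queue or earlier than the head: the new node becomes the head.
            msg.next = p;
            head = msg;
            return;
        }
        Node prev;
        for (;;) {
            prev = p;
            p = p.next;
            if (p == null || when < p.when) {
                break;
            }
        }
        msg.next = p; // invariant: p == prev.next
        prev.next = msg;
    }

    /** Returns the names from head to tail. */
    public synchronized List<String> names() {
        List<String> out = new ArrayList<>();
        for (Node n = head; n != null; n = n.next) {
            out.add(n.name);
        }
        return out;
    }

    public static void main(String[] args) {
        SortedMessageList q = new SortedMessageList();
        q.enqueue("a", 300);
        q.enqueue("b", 100);
        q.enqueue("c", 300);
        q.enqueue("d", 200);
        System.out.println(q.names()); // [b, d, a, c]
    }
}
```

Note that "c" lands behind "a" although both are due at 300: the strict comparison when < p.when is what preserves first in, first out among equal times.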

Blocking and wakeup of MessageQueue

Blocking

As already mentioned in the code above, MessageQueue.next() calls nativePollOnce(ptr, nextPollTimeoutMillis) to block. When nextPollTimeoutMillis is -1, it blocks indefinitely. If this is unclear, take another good look at the code above (be sure to read the comments).

Wakeup

MessageQueue.enqueueMessage() contains the following logic (the last fragment is taken from MessageQueue.next()):

// Whether a wakeup is needed

boolean needWake;

// If there is no message in the message queue, mBlocked is true, so the queue has to be woken up when a message is inserted

if (p == null || when == 0 || when < p.when) {

// New head, wake up the event queue if blocked.

msg.next = p;

mMessages = msg;

// If mBlocked is true, that is, the queue is blocked, you need to wake up

needWake = mBlocked;

}

if (needWake) {

// Call the native method to wake up the queue

nativeWake(mPtr);

}

// In next(): when no message is ready and there are no idle handlers to run, the message queue is marked as blocked

if (pendingIdleHandlerCount <= 0) {

// No idle handlers to run. Loop and wait some more.

mBlocked = true;

continue;

}

The code above means that when there is no Message in the MessageQueue, the queue blocks. When we then insert a Message, the queue is woken up.
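The same block-then-wake handshake can be sketched in plain Java with wait() and notifyAll() standing in for nativePollOnce() and nativeWake(). This is an analogy under stated assumptions, not how MessageQueue is implemented: BlockingMailbox is a hypothetical class, and it assumes a single consumer thread, as is the case for a Looper.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.atomic.AtomicReference;

public class BlockingMailbox {
    private final Deque<String> messages = new ArrayDeque<>();
    private boolean blocked; // plays the role of mBlocked

    /** Called by the single consumer thread; waits while the queue is empty. */
    public synchronized String next() throws InterruptedException {
        while (messages.isEmpty()) {
            blocked = true;
            wait(); // stands in for nativePollOnce(ptr, -1)
        }
        blocked = false;
        return messages.removeFirst();
    }

    /** Called by any producer thread; wakes the consumer only if it is blocked. */
    public synchronized void enqueue(String msg) {
        messages.addLast(msg);
        if (blocked) {
            notifyAll(); // stands in for nativeWake(mPtr)
        }
    }

    /** Starts a consumer, posts one message, and returns what the consumer received. */
    static String demo() {
        BlockingMailbox box = new BlockingMailbox();
        AtomicReference<String> received = new AtomicReference<>();
        Thread consumer = new Thread(() -> {
            try {
                received.set(box.next());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        box.enqueue("hello");
        try {
            consumer.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return received.get();
    }

    public static void main(String[] args) {
        System.out.println(demo()); // hello
    }
}
```

Whether the consumer starts waiting before or after enqueue() runs, it ends up with the message: either it finds the queue non-empty, or it is woken because blocked was true.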

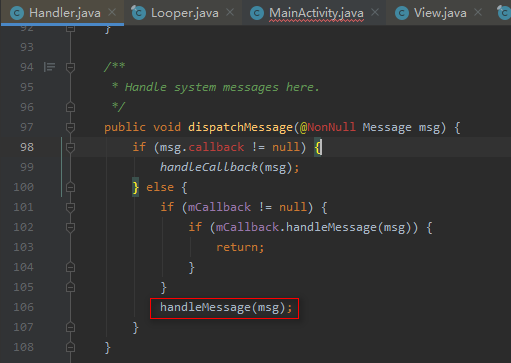

The inspiration of Handler to our developers

Highlight 1

/**

* Process system messages here.

*/

public void dispatchMessage(@NonNull Message msg) {

// If the Message has its own callback, call message.callback.run()

if (msg.callback != null) {

handleCallback(msg);

} else {

// If a Callback was passed in when the Handler was created, this branch is taken

if (mCallback != null) {

// After the Callback has processed the message, its return value decides whether the final handleMessage(msg) is still called.

// This is similar to our View event distribution mechanism. It determines whether to continue to distribute events according to the return value

if (mCallback.handleMessage(msg)) {

return;

}

}

handleMessage(msg);

}

}

private static void handleCallback(Message message) {

message.callback.run();

}

/**

* You can use the callback interface when instantiating the Handler to avoid having to implement your own Handler subclass.

*/

public interface Callback {

/**

* @param msg An instance of {@link android.os.Message Message}

* @return true if no further processing is required

*/

boolean handleMessage(@NonNull Message msg);

}

The code above reflects the object-oriented idea of encapsulation. A Message encapsulates its own callback: if the Message has a callback set, its callback.run() is invoked. Similarly, the Handler encapsulates its own Callback: if the Handler has a Callback set, that Callback's handleMessage() is invoked, and its return value decides whether the handleMessage() overridden by the Handler subclass is executed as well. This makes the program very flexible, somewhat like the Chain of Responsibility pattern.
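The dispatch order can be traced with a small plain-Java model. It is a sketch only: Msg, MiniHandler and trace are hypothetical names that mirror the shape of dispatchMessage() shown above, and the Callback in the demo arbitrarily consumes messages whose what is 1.

```java
public class DispatchSketch {
    interface Callback {
        boolean handleMessage(Msg msg);
    }

    static final class Msg {
        int what;
        Runnable callback;
    }

    static class MiniHandler {
        private final Callback mCallback;

        MiniHandler(Callback callback) {
            mCallback = callback;
        }

        void handleMessage(Msg msg) { }

        void dispatchMessage(Msg msg) {
            if (msg.callback != null) {
                msg.callback.run();      // 1. the message's own callback wins
                return;
            }
            if (mCallback != null && mCallback.handleMessage(msg)) {
                return;                  // 2. the handler's Callback consumed it
            }
            handleMessage(msg);          // 3. fall through to the subclass override
        }
    }

    /** Dispatches one message and returns which stages ran, in order. */
    static String trace(int what, boolean withRunnable) {
        StringBuilder log = new StringBuilder();
        Msg msg = new Msg();
        msg.what = what;
        if (withRunnable) {
            msg.callback = () -> log.append("runnable");
        }
        MiniHandler handler = new MiniHandler(m -> {
            log.append("callback ");
            return m.what == 1;
        }) {
            @Override
            void handleMessage(Msg m) {
                log.append("handleMessage");
            }
        };
        handler.dispatchMessage(msg);
        return log.toString().trim();
    }

    public static void main(String[] args) {
        System.out.println(trace(0, true));  // runnable
        System.out.println(trace(1, false)); // callback
        System.out.println(trace(2, false)); // callback handleMessage
    }
}
```

Each stage can stop the chain, which is the property that makes the comparison with the Chain of Responsibility pattern reasonable.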

Highlight 2

Have you ever wondered what happens to a message after the Handler's dispatchMessage() has delivered it? How is the message recycled?

In Looper.loop(), msg.recycleUnchecked() is eventually called to perform the so-called message recycling (the message is not actually freed; it is reset and put back into a pool). Let's look at the source code:

public static final Object sPoolSync = new Object();

/**

* Recycle messages that may be in use. When processing queued messages, it is used internally by MessageQueue and Looper.

*/

@UnsupportedAppUsage

void recycleUnchecked() {

// Mark the message as in use and keep it in the recycled object pool. Clear all other details.

flags = FLAG_IN_USE;

what = 0;

arg1 = 0;

arg2 = 0;

obj = null;

replyTo = null;

sendingUid = UID_NONE;

workSourceUid = UID_NONE;

when = 0;

target = null;

callback = null;

data = null;

// Lock to avoid message confusion in the message pool when multiple threads recycle messages

synchronized (sPoolSync) {

if (sPoolSize < MAX_POOL_SIZE) {

// Link this message in front of the current head of the message pool

next = sPool;

// Make the message just processed the new head of the pool

sPool = this;

// The size of the message pool increases by one

sPoolSize++;

}

}

}

Then look at Message.obtain():

/**

* Returns a Message instance from the Message pool. Avoid creating new Message objects in many cases.

*/

public static Message obtain() {

synchronized (sPoolSync) {

// Message pool queue header is not empty

if (sPool != null) {

// The queue header is assigned to the message object to be returned

Message m = sPool;

// The queue head points to the next node of m

sPool = m.next;

// The next node of m is empty

m.next = null;

m.flags = 0; // clear in-use flag

sPoolSize--;

return m;

}

}

return new Message();

}

This code is very similar to the security check before boarding a plane. A Message is like the tray for luggage, and its members what, arg1, arg2 and obj are the luggage. After we pass the security check, the tray is not thrown away but put back at the checkpoint for the next passenger; that stack of trays is our message pool. What is used here is the Flyweight pattern.

From the perspective of memory optimization, what are the benefits of doing so? Obtaining messages with obtain() greatly reduces calls to new Message(), which reduces fragmentation of the heap: more contiguous free space remains in memory, so OOM becomes less likely. Some may ask what the GC is for. But have you ever wondered why OOM still occurs even though the GC recycles garbage and frees memory for us? Even if the GC uses a mark-compact algorithm to make the memory space contiguous, GC work involves stop-the-world (STW) pauses, during which all other threads are suspended. Moreover, if we keep creating new objects and triggering GC, memory churn occurs and causes jank. Therefore, from the perspective of performance optimization, we try to avoid unnecessary memory overhead.
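The pooling behaviour of obtain() and recycleUnchecked() can be modelled in a few lines of plain Java. This is a simplified sketch: PooledMsg is a hypothetical class with a single payload field, and the pool limit of 50 is simply a value chosen for the sketch.

```java
public class PooledMsg {
    private static final Object sPoolSync = new Object();
    private static final int MAX_POOL_SIZE = 50; // limit chosen for this sketch
    private static PooledMsg sPool;              // head of the free list
    private static int sPoolSize;

    public int what;
    private PooledMsg next;

    private PooledMsg() { }

    /** Returns a recycled instance if one is available, otherwise a new one. */
    public static PooledMsg obtain() {
        synchronized (sPoolSync) {
            if (sPool != null) {
                PooledMsg m = sPool;
                sPool = m.next;
                m.next = null;
                sPoolSize--;
                return m;
            }
        }
        return new PooledMsg();
    }

    /** Clears the payload and pushes this instance onto the free list if there is room. */
    public void recycle() {
        what = 0;
        synchronized (sPoolSync) {
            if (sPoolSize < MAX_POOL_SIZE) {
                next = sPool;
                sPool = this;
                sPoolSize++;
            }
        }
    }

    public static void main(String[] args) {
        PooledMsg a = obtain();
        a.what = 7;
        a.recycle();
        PooledMsg b = obtain();
        System.out.println(a == b);  // true
        System.out.println(b.what);  // 0
    }
}
```

After recycle(), the next obtain() hands back the very same object with its payload cleared, so no new allocation is needed.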

When does Looper exit

If we create a Looper in a child thread, memory leaks are a common problem, because most developers never release the Looper. How do we release it? By calling Looper.quitSafely() or Looper.quit():

/**

* Exit Looper safely.

* Terminates the {@link #loop} method as soon as all messages in the message queue that are already due have been handled. However, pending delayed messages that are due in the future

* will not be delivered before the loop terminates. After the Looper has been asked to quit, any attempt to post a message to the queue will fail. For example, the {@link Handler#sendMessage(Message)} method

* will return false.

*/

public void quitSafely() {

mQueue.quit(true);// Safe exit passes true, while Looper.quit() passes false

}

This eventually calls MessageQueue.quit():

/**

* Exit Looper

*

* @param safe Do you need to exit safely

*/

void quit(boolean safe) {

// If the exit is not allowed, an exception will be thrown, and the Looper in the ActivityThread is not allowed to exit.

if (!mQuitAllowed) {

throw new IllegalStateException("Main thread not allowed to quit.");

}

synchronized (this) {

// If the queue is already quitting, return

if (mQuitting) {

return;

}

mQuitting = true;

if (safe) {

// Safe exit

removeAllFutureMessagesLocked();

} else {

// Unsafe exit

removeAllMessagesLocked();

}

// We can assume mPtr != 0 because mQuitting was previously false.

nativeWake(mPtr);

}

}

Implementation of safe exit:

private void removeAllFutureMessagesLocked() {

// Get current time

final long now = SystemClock.uptimeMillis();

// The queue head of the message queue is assigned to p

Message p = mMessages;

// If the queue is not empty

if (p != null) {

// If even the queue head is due later than now, every message is in the future, so remove them all

if (p.when > now) {

removeAllMessagesLocked();

} else {// Otherwise, find the first message in the queue that is due later than now

Message n;

for (;;) {

n = p.next;

if (n == null) {

return;

}

if (n.when > now) {

break;

}

p = n;

}

p.next = null;

// Recycle all messages that are due later than now. Messages that are already due stay in the queue and are still taken out and processed even though the queue is quitting

do {

p = n;

n = p.next;

p.recycleUnchecked();

} while (n != null);

}

}

}

Implementation of unsafe exit:

/**

* Remove all messages from the message queue, including those that are already due

*/

private void removeAllMessagesLocked() {

Message p = mMessages;

// Walk the queue and put every Message object into the message pool, one by one

while (p != null) {

Message n = p.next;

// Cache to message pool

p.recycleUnchecked();

p = n;

}

// Clear the queue head

mMessages = null;

}

Handler common interview questions

1. How many Handlers can a thread have?

We can create multiple Handlers in one thread.

2. How many Loopers can a thread have? How is this guaranteed?

A thread has only one Looper.

Before using a Looper in a thread, we must call Looper.prepare(), and prepare() uses ThreadLocal. ThreadLocal provides thread-local copies of data: each Thread has its own ThreadLocalMap member, which isolates data between threads, and the values are stored in the ThreadLocalMap through its inner class Entry. The Entry constructor takes two arguments, a ThreadLocal and an Object, so in the Handler mechanism ThreadLocalMap can be understood as a key-value structure with the ThreadLocal as the key and the Looper as the value. The ThreadLocal member of Looper is declared static final: it is initialized when the Looper class is loaded and can never be reassigned, which guarantees that the key is unique. In the prepare method, we first check whether a value has already been set for the ThreadLocal on the current thread. If not, a new Looper is stored; otherwise an exception is thrown. Each thread can therefore hold at most one Looper, which shows that in the Handler mechanism Thread and Looper correspond one-to-one.

3. Why does Handler leak memory? Why is this problem not mentioned for other inner classes?

4. Why can the main thread use new Handler() directly? What must be done before creating a new Handler in a child thread?

5. How should a Looper maintained in a child thread be handled when there is no message in its message queue? What is that for?

6. Since multiple Handlers can add data to the MessageQueue (each Handler may send messages from a different thread), how is thread safety ensured internally? What about when taking messages out?

It's simple: a synchronized lock. Both enqueueMessage() and next() synchronize on the MessageQueue.

7. How should we create a Message when we need one?

Use Message.obtain(). Because it uses the Flyweight pattern and reuses recycled messages, it greatly reduces calls to new Message(). From the perspective of memory optimization, it reduces unnecessary memory overhead and helps avoid excessive memory fragmentation, which lowers the probability of OOM.

8. Why doesn't Looper.loop() cause the application to freeze?

The answers to the remaining questions will be published later. Think them over first. You are welcome to discuss them with me in the comment area. If there are inaccuracies in the article, please point them out ヾ ( ̄ ー) X(^ ▽ ^)