KNN nearest neighbor classification algorithm:

(nearest neighbor sampling) Proximity algorithm, or K-nearest neighbor (kNN) classification algorithm, is one of the simplest methods in machine learning classification technology. The so-called k nearest neighbors means k nearest neighbors, which means that each sample can be represented by its nearest K neighbors.

It belongs to supervised learning with category mark, and KNN is inert learning. It is called memory based learning, also known as instance based learning. It has no obvious pre training process. After the program runs and loads the data into memory, it can be classified without training.

Algorithm implementation: 1. Calculate the distance between each sample point and the test point 2. Select the nearest K samples and obtain their label s 3. Then find the label with the largest number in the K samples and return the label

The essence of KNN is based on a method of data statistics.

The following is the application of KNN case: handwritten numeral recognition. My case here is in text format. There are no picture conversion steps. Material model: (source code + material will be pasted with githup link at the end)

KNN handwritten numeral recognition

Implementation idea:

- The test data is converted into a 0-1 matrix with only one column, and all (L) training data are also converted into a 0-1 matrix with only one column by the above method

- Store L single column data into the new matrix A - each column of matrix A stores all the information of a word

- Calculate the distance between the test data and each column in matrix A, and the obtained L distances are stored in the distance array

- Take the index of the training set corresponding to the smallest K distances from the distance array, and the value with the most indexes is the predicted value

Step 1: Import module:

import os,time,operator #Import the os built-in library to read the file name and import time to test the efficiency import pandas as pd #Import data processing library pandas installation method pip install pandas import numpy as np #Import scientific computing library numpy installation method pip install numpy import matplotlib.pyplot as plt #Import drawing library matplotlib installation method pip install matplotlib

Step 2: Introduce a file to define a function to read data and convert data

## print(len(tarining)) #1934 Training sets ## print(len(test)) #945 test sets

trainingDigits =r'D:\work\Daily task 6 Machine Learning\day2 Handwritten numeral recognition\trainingDigits'

testDigits = r'D:\work\Daily task 6 Machine Learning\day2 Handwritten numeral recognition\testDigits'

## ↑ data path

tarining = (os.listdir(trainingDigits)) ## Read training set

test = (os.listdir(testDigits)) ## Read test set

def read_file(doc_name): ## Define a function that converts 32x32 format to 1 line

data=np.zeros((1,1024)) ## Create a zero array

f=open(doc_name) ## Open file

for i in range(32): ## It is known that there are 32 rows and 32 columns in each file

hang=f.readline() ## Take line

for j in range(32): ## Take each column in each row

data[0,32*i+j]=int(hang[j]) ## Give data value

# print(pd.DataFrame(data)) ## Do not convert to DataFrame here.

return data ## Otherwise, the test set efficiency will be reduced by 7 times

## The efficiency of reading training set will be reduced by 12 timesStep 3: Define a function to convert a field to a list, which will be used later. Because I didn't use the Dataframe in pandas to operate data in order to improve efficiency.

def dict_list(dic:dict): ## Defines a function that converts a dictionary to a list

keys = dic.keys() ## dic.keys() is the k of the dictionary

values = dic.values() ## dic.values() is the V of the dictionary

lst = [(key,val) for key,val in zip(keys, values)] ## for k,v in zip(k,v)

return lst ## zip is an iteratable object

## Returns a listStep 4: Define similarity function:

def xiangsidu(tests,xunlians,labels,k): ## tests:Test set # xulians:Training sample set # labels:label # k: Number of adjacent

data_hang=xunlians.shape[0] ## Get row number data of training set_ hang

zu=np.tile(tests,(data_hang,1))-xunlians ## Use tile to reconstruct the test set tests into a data_hang 1-dimensional array of rows and columns

q=np.sqrt((zu**2).sum(axis=1)).argsort() ## After calculating the distance, sort from low to high, and argsort returns the index

my_dict = {} ## Set a dict

for i in range(k): ## According to our k to count the occurrence frequency and sample category

votelabel=labels[q[i]] ## q[i] is the index value, and the corresponding label is obtained through labels

my_dict[votelabel] = my_dict.get(votelabel,0)+1 ## Count the number of times per label

sortclasscount=sorted(dict_list(my_dict),key=operator.itemgetter(1),reverse=True)

## Get the value corresponding to the votelabel key. If there is no, return to the default value

return sortclasscount[0][0] ## Returns the most frequent categoryStep 5: Write recognition function:

def shibie(): ## Define a function that recognizes handwritten digits

label_list = [] ## Store the training set in a matrix and store its label

train_length = len(tarining) ## Directly obtain the length of training set at one time

train_zero = np.zeros((train_length,1024)) ## Create a zeros array of (training set length, 1024) dimensions

for i in range(train_length): ## By traversing the length of the training set

doc_name = tarining[i] ## Get all file names

file_label = int(doc_name[0]) ## Take the label of the first file in the file name

label_list.append(file_label) ## Add labels to handlabel

train_zero[i,:] = read_file(r'%s\%s'%(trainingDigits,doc_name))## Array converted to 1024

## Here is the test set

errornum = 0 ## Record the initial value of error

testnum = len(test) ## Get the length of the test set as above

errfile = [] ## Define an empty list

for i in range(testnum): ## Put each test sample into the training set and test with KNN

testdoc_name = test[i] ## Obtain the files in the test set by using i as a subscript

test_label = int(testdoc_name[0]) ## Get the name of the test file and get our digital label

testdataor = read_file(r'%s\%s' %(testDigits,testdoc_name)) ## Call read_file operation test set

result = xiangsidu(testdataor, train_zero, label_list, 3) ## The call to xiangsidu returned result

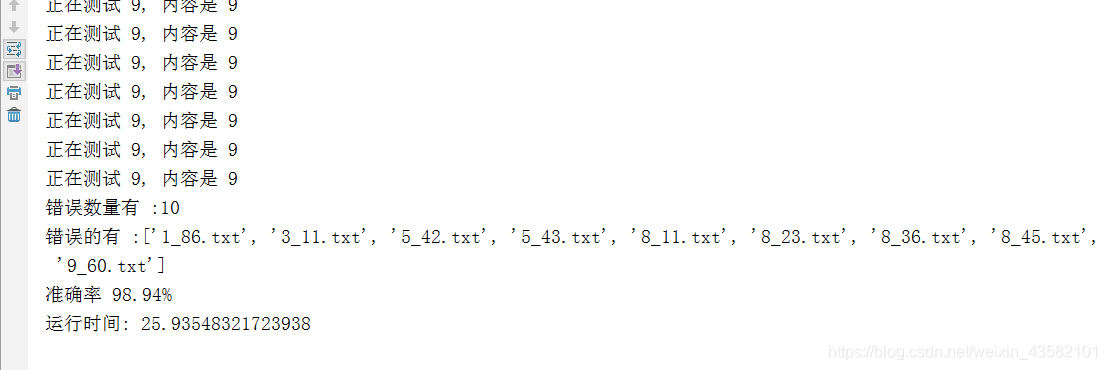

print("Testing %d, The content is %d" % (test_label,result)) ## Output result and label

if (result != test_label): ## Determine whether the label is equal to the test name

errornum += 1 ## If not, + 1 record times

errfile.append(testdoc_name) ## And add the wrong file name to the error list

print("Number of errors :%d" % errornum) ## Number of output errors

print("Wrong are :%s"%[i for i in errfile]) ## Output the name in the wrong list

print("Accuracy %.2f%%" % ((1 - (errornum / float(testnum))) * 100)) ## Calculation accuracyLast call:

if __name__ == '__main__': ## Declare main function

a = time.time() ## Set start time

shibie() ## Call test function

b= time.time() - a ## Calculate run time

print("Running time:",b) ## Output run timeThere's nothing to say. In order to improve efficiency, many skilled operations have been carried out in the middle, although it's still a pile of for loops. But the notes of each step are very clear. I believe you can understand it. If you don't understand it, please leave a message.

Github full link: https://github.com/lixi5338619/KNN_Distinguish/tree/master