Harbor edge deployment document

Generate certificates

Generate CA certificate

1. Generate CA certificate private key

```shell
$ openssl genrsa -out ca.key 4096
```

2. Generate CA certificate

```shell
# Replace yourdomain.com with the domain name to be configured (this article uses cedhub.com)
$ openssl req -x509 -new -nodes -sha512 -days 3650 \
  -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=yourdomain.com" \
  -key ca.key \
  -out ca.crt
```

Generate server certificate

1. Generate the server private key

```shell
$ openssl genrsa -out tls.key 4096
```

2. Generate CSR (Certificate signing request)

```shell
# Replace yourdomain.com with the domain name to be configured (this article uses cedhub.com)
$ openssl req -sha512 -new \
  -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=yourdomain.com" \
  -key tls.key \
  -out tls.csr
```

3. Generate an x509 v3 extension file

```shell
# Replace yourdomain.com with the domain name to be configured (this article uses cedhub.com)
$ cat > v3.ext <<-EOF
authorityKeyIdentifier=keyid,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names

[alt_names]
DNS.1=yourdomain.com
EOF
```

4. Use the v3 extension file to generate the certificate for Harbor

```shell
$ openssl x509 -req -sha512 -days 3650 \
  -extfile v3.ext \
  -CA ca.crt -CAkey ca.key -CAcreateserial \
  -in tls.csr \
  -out tls.crt
```

Download the specified version of the chart

```shell
$ helm repo add harbor https://helm.goharbor.io
# List the chart versions that can be installed (including historical versions)
$ helm search repo harbor -l
# Download and unpack chart version 1.8.1 (Harbor 2.4.1)
$ helm fetch harbor/harbor --untar --version 1.8.1
```
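`helm fetch --untar` unpacks the chart into a local `harbor/` directory. Before installing, it can be worth confirming that the unpacked chart really is 1.8.1 (Harbor 2.4.1). A minimal sketch, assuming the usual top-level `version:` and `appVersion:` lines in `Chart.yaml`; `chart_field` is a hypothetical helper, not part of Helm:

```shell
#!/usr/bin/env bash
# Sketch: read a top-level "key: value" field from a Helm Chart.yaml.
# chart_field is a hypothetical helper; it does not parse nested YAML.
set -euo pipefail

chart_field() {
  local chart_yaml="$1" key="$2"
  # q holds a single quote so the awk program can strip quoted values.
  awk -v k="$key" -v q="'" '
    $0 ~ ("^" k ":") {
      sub("^" k ":[ \t]*", "")   # drop "key:" and following blanks
      gsub(/"/, "")              # drop double quotes
      gsub(q, "")                # drop single quotes
      sub(/[ \t\r]+$/, "")       # trim trailing whitespace
      print
      exit
    }' "$chart_yaml"
}

# Usage, after: helm fetch harbor/harbor --untar --version 1.8.1
if [ -f harbor/Chart.yaml ]; then
  echo "chart version: $(chart_field harbor/Chart.yaml version)"
  echo "app version:   $(chart_field harbor/Chart.yaml appVersion)"
fi
```

`helm show chart harbor/harbor --version 1.8.1` prints the same Chart.yaml straight from the repository, without unpacking anything.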

Configure the values.yaml file

Create a separate namespace

```shell
$ kubectl create namespace harbor
```

Configure the expose mode

Serving Harbor over HTTP would require changing the Docker daemon configuration on every client (adding Harbor as an insecure registry) and restarting Docker, so this deployment serves Harbor over HTTPS, which means TLS must be configured.

```shell
# Create a secret containing the certificates generated in step 1
$ kubectl create secret generic cedhub-tls --from-file=tls.crt --from-file=tls.key --from-file=ca.crt -n harbor
```
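Harbor only fails later, at pull/push time, if the files in the secret do not belong together. A minimal sketch of a pre-flight check, assuming the file names from step 1 (`ca.crt`, `tls.crt`, `tls.key`); `check_tls_files` is a hypothetical helper that only uses standard `openssl verify`, `x509` and `pkey` subcommands:

```shell
#!/usr/bin/env bash
# Sketch: sanity-check ca.crt, tls.crt and tls.key before creating the secret.
# check_tls_files is a hypothetical helper, not part of Harbor or kubectl.
set -euo pipefail

# check_tls_files DIR DOMAIN
check_tls_files() {
  local dir="$1" domain="$2" text cert_pub key_pub

  # 1) tls.crt must be signed by ca.crt
  if ! openssl verify -CAfile "$dir/ca.crt" "$dir/tls.crt" >/dev/null 2>&1; then
    echo "tls.crt does not verify against ca.crt" >&2
    return 1
  fi

  # 2) the domain must appear in subjectAltName (DNS.1 in v3.ext)
  text=$(openssl x509 -in "$dir/tls.crt" -noout -text)
  case "$text" in
    *"DNS:$domain"*) ;;
    *) echo "DNS:$domain not found in tls.crt" >&2; return 1 ;;
  esac

  # 3) tls.key must belong to tls.crt: compare the two public keys
  cert_pub=$(openssl x509 -in "$dir/tls.crt" -noout -pubkey)
  key_pub=$(openssl pkey -in "$dir/tls.key" -pubout)
  if [ "$cert_pub" != "$key_pub" ]; then
    echo "tls.key does not match tls.crt" >&2
    return 1
  fi

  echo "ok"
}

# Usage, from the directory holding the files generated in step 1:
#   check_tls_files . cedhub.com
```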

```yaml
# Configure values.yaml (the tls block sits under "expose")
expose:
  # ...
  tls:
    # Enable the tls or not.
    # Delete the "ssl-redirect" annotations in "expose.ingress.annotations" when TLS is disabled and "expose.type" is "ingress"
    # Note: if the "expose.type" is "ingress" and the tls
    # is disabled, the port must be included in the command when pull/push
    # images. Refer to https://github.com/goharbor/harbor/issues/5291
    # for the detail.
    enabled: true
    # The source of the tls certificate. Set it as "auto", "secret"
    # or "none" and fill the information in the corresponding section
    # 1) auto: generate the tls certificate automatically
    # 2) secret: read the tls certificate from the specified secret.
    # The tls certificate can be generated manually or by cert manager
    # 3) none: configure no tls certificate for the ingress. If the default
    # tls certificate is configured in the ingress controller, choose this option
    certSource: secret
    auto:
      # The common name used to generate the certificate, it's necessary
      # when the type isn't "ingress"
      commonName: ""
    secret:
      # The name of secret which contains keys named:
      # "tls.crt" - the certificate
      # "tls.key" - the private key
      secretName: "cedhub-tls"
      # The name of secret which contains keys named:
      # "tls.crt" - the certificate
      # "tls.key" - the private key
      # Only needed when the "expose.type" is "ingress".
      notarySecretName: "cedhub-tls"
  # ...
```

The edge Harbor is only used inside the cluster, so the service is exposed with type clusterIP:

```yaml
expose:
  # Set the way how to expose the service. Set the type as "ingress",
  # "clusterIP", "nodePort" or "loadBalancer" and fill the information
  # in the corresponding section
  type: clusterIP
  # ...
```

Configure externalURL

externalURL is the address clients use to log in to Harbor. Harbor uses it to generate the docker/helm commands displayed in the portal and the token service URL returned to docker/notary clients.

```yaml
# The external URL for Harbor core service. It is used to
# 1) populate the docker/helm commands showed on portal
# 2) populate the token service URL returned to docker/notary client
#
# Format: protocol://domain[:port]. Usually:
# 1) if "expose.type" is "ingress", the "domain" should be
# the value of "expose.ingress.hosts.core"
# 2) if "expose.type" is "clusterIP", the "domain" should be
# the value of "expose.clusterIP.name"
# 3) if "expose.type" is "nodePort", the "domain" should be
# the IP address of k8s node
#
# If Harbor is deployed behind the proxy, set it as the URL of proxy
externalURL: https://cedhub.com
```
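With `expose.type: clusterIP`, the chart comment above suggests using the clusterIP service name as the domain. If you keep a custom domain such as cedhub.com instead, the nodes that pull images still have to resolve it to the Harbor service. One option, which is our assumption and not part of the original setup, is a hosts entry pointing at the service's ClusterIP (on typical kube-proxy setups nodes can reach ClusterIP addresses). The sketch below sets such an entry idempotently; `upsert_hosts_entry` is hypothetical, and the service name `harbor` (the chart's default `expose.clusterIP.name`) is also assumed:

```shell
#!/usr/bin/env bash
# Sketch: add or replace an "<ip> <domain>" line in a hosts file.
# upsert_hosts_entry is a hypothetical helper; run it as root on /etc/hosts.
set -euo pipefail

# upsert_hosts_entry HOSTS_FILE IP DOMAIN
upsert_hosts_entry() {
  local hosts_file="$1" ip="$2" domain="$3" tmp
  tmp=$(mktemp)
  # Drop every line that lists $domain as one of its host names
  # (the whole line goes, including any other aliases on it)...
  awk -v d="$domain" '{
      for (i = 2; i <= NF; i++) if ($i == d) next
      print
    }' "$hosts_file" > "$tmp"
  # ...then append the fresh mapping.
  printf '%s %s\n' "$ip" "$domain" >> "$tmp"
  # Rewrite in place so the original file keeps its inode and permissions.
  cat "$tmp" > "$hosts_file"
  rm -f "$tmp"
}

# Usage on each node (assumed service name "harbor" in namespace "harbor"):
#   ip=$(kubectl get svc harbor -n harbor -o jsonpath='{.spec.clusterIP}')
#   upsert_hosts_entry /etc/hosts "$ip" cedhub.com
```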

Configure persistent storage

Using hostPath

Create folder on Node

```shell
$ mkdir -p /data/disks/{disk1-5G,disk2-5G,disk3-1G,disk4-1G,disk5-1G,disk6-5G}
```

If no StorageClass is available to provision persistent storage dynamically, allocate it manually by creating PersistentVolumes of type hostPath:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: disk1
spec:
  storageClassName: manual
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  hostPath:
    path: "/data/disks/disk1-5G"
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - cvm-lrxdi7pm
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: disk2
spec:
  storageClassName: manual
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  hostPath:
    path: "/data/disks/disk2-5G"
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - cvm-lrxdi7pm
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: disk3
spec:
  storageClassName: manual
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  hostPath:
    path: "/data/disks/disk3-1G"
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - cvm-lrxdi7pm
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: disk4
spec:
  storageClassName: manual
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  hostPath:
    path: "/data/disks/disk4-1G"
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - cvm-lrxdi7pm
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: disk5
spec:
  storageClassName: manual
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  hostPath:
    path: "/data/disks/disk5-1G"
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - cvm-lrxdi7pm
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: disk6
spec:
  storageClassName: manual
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  hostPath:
    path: "/data/disks/disk6-5G"
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - cvm-lrxdi7pm
```

Create PVC

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: disk1-pv-claim
  namespace: harbor
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: disk2-pv-claim
  namespace: harbor
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: disk3-pv-claim
  namespace: harbor
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: disk4-pv-claim
  namespace: harbor
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: disk5-pv-claim
  namespace: harbor
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: disk6-pv-claim
  namespace: harbor
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
```

Configure the persistent storage section of values.yaml

```yaml
# ...
# For storing images and charts, you can also use "azure", "gcs", "s3",
# "swift" or "oss". Set it in the "imageChartStorage" section
persistence:
  enabled: true
  # Setting it to "keep" to avoid removing PVCs during a helm delete
  # operation. Leaving it empty will delete PVCs after the chart deleted
  # (this does not apply for PVCs that are created for internal database
  # and redis components, i.e. they are never deleted automatically)
  resourcePolicy: "keep"
  persistentVolumeClaim:
    registry:
      # Use the existing PVC which must be created manually before bound,
      # and specify the "subPath" if the PVC is shared with other components
      existingClaim: "disk1-pv-claim"
      # Specify the "storageClass" used to provision the volume. Or the default
      # StorageClass will be used(the default).
      # Set it to "-" to disable dynamic provisioning
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
    chartmuseum:
      existingClaim: "disk2-pv-claim"
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
    jobservice:
      existingClaim: "disk3-pv-claim"
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    # If external database is used, the following settings for database will
    # be ignored
    database:
      existingClaim: "disk4-pv-claim"
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    # If external Redis is used, the following settings for Redis will
    # be ignored
    redis:
      existingClaim: "disk5-pv-claim"
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    trivy:
      existingClaim: "disk6-pv-claim"
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
# ...
```

Using storageClass

Configure the persistent storage section of values.yaml

```yaml
# ...
# For storing images and charts, you can also use "azure", "gcs", "s3",
# "swift" or "oss". Set it in the "imageChartStorage" section
persistence:
  enabled: true
  # Setting it to "keep" to avoid removing PVCs during a helm delete
  # operation. Leaving it empty will delete PVCs after the chart deleted
  # (this does not apply for PVCs that are created for internal database
  # and redis components, i.e. they are never deleted automatically)
  resourcePolicy: "keep"
  persistentVolumeClaim:
    registry:
      # Use the existing PVC which must be created manually before bound,
      # and specify the "subPath" if the PVC is shared with other components
      existingClaim: ""
      # Specify the "storageClass" used to provision the volume. Or the default
      # StorageClass will be used(the default).
      # Set it to "-" to disable dynamic provisioning
      # Replace "storageClassName" with the name of your StorageClass
      storageClass: "storageClassName"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
    chartmuseum:
      existingClaim: ""
      storageClass: "storageClassName"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
    jobservice:
      existingClaim: ""
      storageClass: "storageClassName"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    # If external database is used, the following settings for database will
    # be ignored
    database:
      existingClaim: ""
      storageClass: "storageClassName"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    # If external Redis is used, the following settings for Redis will
    # be ignored
    redis:
      existingClaim: ""
      storageClass: "storageClassName"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    trivy:
      existingClaim: ""
      storageClass: "storageClassName"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
# ...
```

Install / uninstall Harbor

Install Harbor

```shell
# Run inside the unpacked harbor chart directory
$ helm install --namespace harbor my-harbor .
```

Uninstall Harbor

```shell
$ helm uninstall my-harbor -n harbor
```

High availability configuration

An active/standby high-availability scheme is used: the Harbor in the edge cluster serves requests, while TCR (Tencent Container Registry) in the cloud stays on standby.

private_key.pem and root.crt files

During client authentication, Harbor uses a private key and certificate pair for the Bearer tokens carried in requests: Harbor core signs tokens with private_key.pem, and Distribution (the registry) verifies them with root.crt. In a multi-instance HA deployment, a Bearer token issued by any instance must be recognized and verified by every other instance, so all instances must use the same private_key.pem and root.crt.

csrf_key

To prevent cross-site request forgery (CSRF), Harbor enables CSRF token verification. Harbor generates a random value as the CSRF token and attaches it to a cookie; when the user submits a request, the client reads the value from the cookie and submits it as the CSRF token, and Harbor rejects the request if the token is empty or invalid. Across multiple instances, a token created by any instance must be verifiable by every other instance, so all instances must share the same CSRF key.

This HA configuration has not been implemented yet.
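Although this HA setup is not implemented, the shared material described above could be generated once and handed to every instance. A minimal sketch, assuming the harbor-helm values `core.xsrfKey` (a 32-character string) and `core.secretName` (a secret holding the token-signing `tls.crt`/`tls.key`) are where these plug in; check both keys against the values.yaml of the chart version you fetched. The helper names, the CN `harbor-token-issuer` and the secret name `harbor-token-cert` are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: generate, once, the material every Harbor instance must share.
# gen_xsrf_key and gen_token_keypair are hypothetical helpers.
set -euo pipefail

# 16 random bytes printed as 32 hex characters, used as the CSRF (XSRF) key.
gen_xsrf_key() {
  openssl rand -hex 16
}

# Self-signed key pair: the private key signs Bearer tokens and the
# certificate verifies them, so every instance needs the same pair.
gen_token_keypair() {
  local out_dir="$1"
  mkdir -p "$out_dir"
  openssl req -x509 -newkey rsa:4096 -nodes -sha256 -days 3650 \
    -subj "/CN=harbor-token-issuer" \
    -keyout "$out_dir/tls.key" -out "$out_dir/tls.crt" 2>/dev/null
}

# Usage: generate once, then reuse the same values for every instance.
#   gen_token_keypair ./token
#   kubectl create secret generic harbor-token-cert -n harbor \
#     --from-file=tls.crt=./token/tls.crt --from-file=tls.key=./token/tls.key
#   # values.yaml of every instance (assumed keys):
#   #   core:
#   #     secretName: harbor-token-cert
#   #     xsrfKey: <output of gen_xsrf_key>
```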

Configure the certificate for Docker on the nodes

The Docker daemon interprets .crt files as CA certificates and .cert files as client certificates, so convert tls.crt to tls.cert:

```shell
$ openssl x509 -inform PEM -in tls.crt -out tls.cert
```

Create Docker certificate store directory

```shell
# Replace yourdomain.com with the domain name to be configured (this article uses cedhub.com).
# If the default port 443 is mapped to another port, create
# /etc/docker/certs.d/yourdomain.com:port or /etc/docker/certs.d/harbor_IP:port instead.
$ mkdir -p /etc/docker/certs.d/yourdomain.com/
```

Copy the certificate to the Docker certificate store directory

```shell
# Replace yourdomain.com with the domain name to be configured (this article uses cedhub.com)
$ cp tls.cert /etc/docker/certs.d/yourdomain.com/
$ cp tls.key /etc/docker/certs.d/yourdomain.com/
$ cp ca.crt /etc/docker/certs.d/yourdomain.com/
```
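The conversion, directory creation and copies above have to be repeated on every node, and the directory name changes when Harbor is not on port 443. A minimal sketch that bundles these steps; `install_docker_certs` is a hypothetical helper, and `certs_root` would normally be `/etc/docker/certs.d` (writing there needs root):

```shell
#!/usr/bin/env bash
# Sketch: install the Harbor certificates into Docker's per-registry directory.
# install_docker_certs is a hypothetical helper, not a Docker command.
set -euo pipefail

# install_docker_certs CERTS_ROOT DOMAIN [PORT] [SRC_DIR]
install_docker_certs() {
  local certs_root="$1" domain="$2" port="${3:-443}" src="${4:-.}"
  local dir="$certs_root/$domain"
  # Docker looks for "<host>:<port>" when the registry is not on 443.
  if [ "$port" != "443" ]; then
    dir="$certs_root/$domain:$port"
  fi
  mkdir -p "$dir"
  # .crt files are read as CA certificates; a .cert/.key pair with the
  # same base name is read as a client certificate.
  openssl x509 -inform PEM -in "$src/tls.crt" -out "$dir/tls.cert"
  cp "$src/tls.key" "$dir/tls.key"
  cp "$src/ca.crt" "$dir/ca.crt"
  echo "$dir"
}

# Usage, as root on each node, from the directory holding the step 1 files:
#   install_docker_certs /etc/docker/certs.d cedhub.com
```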

Deploying workloads using edge Harbor

Add registry secret

```shell
# --docker-server is the domain name of the Harbor service
$ kubectl create secret docker-registry registry-secret --namespace=default \
  --docker-server=cedhub.com \
  --docker-username=admin \
  --docker-password=Harbor12345
```

Configure Deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    appname: nginx
spec:
  selector:
    matchLabels:
      appname: nginx
  replicas: 3
  template:
    metadata:
      labels:
        appname: nginx
    spec:
      containers:
        - name: nginx
          image: cedhub.com/preheat/nginx:latest # Image address in the edge Harbor
          ports:
            - containerPort: 80
      imagePullSecrets: # Pull secret for the edge Harbor
        - name: registry-secret
```

Configure Service

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    appname: nginx
  type: NodePort
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
      nodePort: 30080
```

Handling exceptional cases

1. There is no StorageClass at the edge, so when hostPath persistent storage is used, the data must be backed up.

Use pgcli to back up metadata to other nodes

Configuring image synchronization with the Harbor API

Registry endpoint API

1. Test registry connectivity

```shell
# -k  skip TLS certificate verification
# -v  verbose output, including request/response headers
# -i  include the response headers in the output
# authorization: Basic <base64-encoded username:password>
$ curl -k -v -i -X 'POST' 'https://cedhub.com/api/v2.0/registries/ping' \
  -H 'accept: application/json' \
  -H 'authorization: Basic YWRtaW46SGFyYm9yMTIzNDU=' \
  -H 'Content-Type: application/json' \
  -d '{
    "access_key": "AKIDNa7NM5WgZBWaNvEH3XliOoywd3D6i37v",
    "access_secret": "YpujHYYHkwrUqvjGWVDdngj6LL4WhJgm",
    "description": "",
    "insecure": false,
    "name": "test",
    "type": "tencent-tcr",
    "url": "https://preheat-test.tencentcloudcr.com"
  }'
```

2. Add a remote registry

```shell
# -k  skip TLS certificate verification
# -v  verbose output, including request/response headers
# -i  include the response headers in the output
# authorization: Basic <base64-encoded username:password>
$ curl -k -v -i -X 'POST' 'https://cedhub.com/api/v2.0/registries' \
  -H 'accept: application/json' \
  -H 'authorization: Basic YWRtaW46SGFyYm9yMTIzNDU=' \
  -H 'Content-Type: application/json' \
  -d '{
    "credential": {
      "access_key": "AKIDNa7NM5WgZBWaNvEH3XliOoywd3D6i37v",
      "access_secret": "YpujHYYHkwrUqvjGWVDdngj6LL4WhJgm",
      "type": "basic"
    },
    "description": "",
    "insecure": false,
    "name": "test",
    "type": "tencent-tcr",
    "url": "https://preheat-test.tencentcloudcr.com"
  }'
```

3. Get the list of remote registries

```shell
# -k  skip TLS certificate verification
# -v  verbose output, including request/response headers
# -i  include the response headers in the output
# authorization: Basic <base64-encoded username:password>
$ curl -k -v -i -X 'GET' 'https://cedhub.com/api/v2.0/registries?page=1&page_size=10' \
  -H 'accept: application/json' \
  -H 'authorization: Basic YWRtaW46SGFyYm9yMTIzNDU='
```
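The `authorization: Basic ...` header in these calls is simply `username:password` encoded in base64; `YWRtaW46SGFyYm9yMTIzNDU=` decodes to `admin:Harbor12345`. A minimal sketch for building the header for other accounts; `basic_auth_header` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: build the "authorization: Basic ..." header used in this section.
# basic_auth_header is a hypothetical helper.
set -euo pipefail

basic_auth_header() {
  local user="$1" password="$2"
  # printf (not echo) keeps a trailing newline out of the encoded text;
  # tr removes the line wrapping some base64 tools add to long output.
  printf 'authorization: Basic %s' \
    "$(printf '%s:%s' "$user" "$password" | base64 | tr -d '\n')"
}

basic_auth_header admin Harbor12345
# prints: authorization: Basic YWRtaW46SGFyYm9yMTIzNDU=
```

curl's own `-u admin:Harbor12345` option sends the same header without encoding it by hand.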

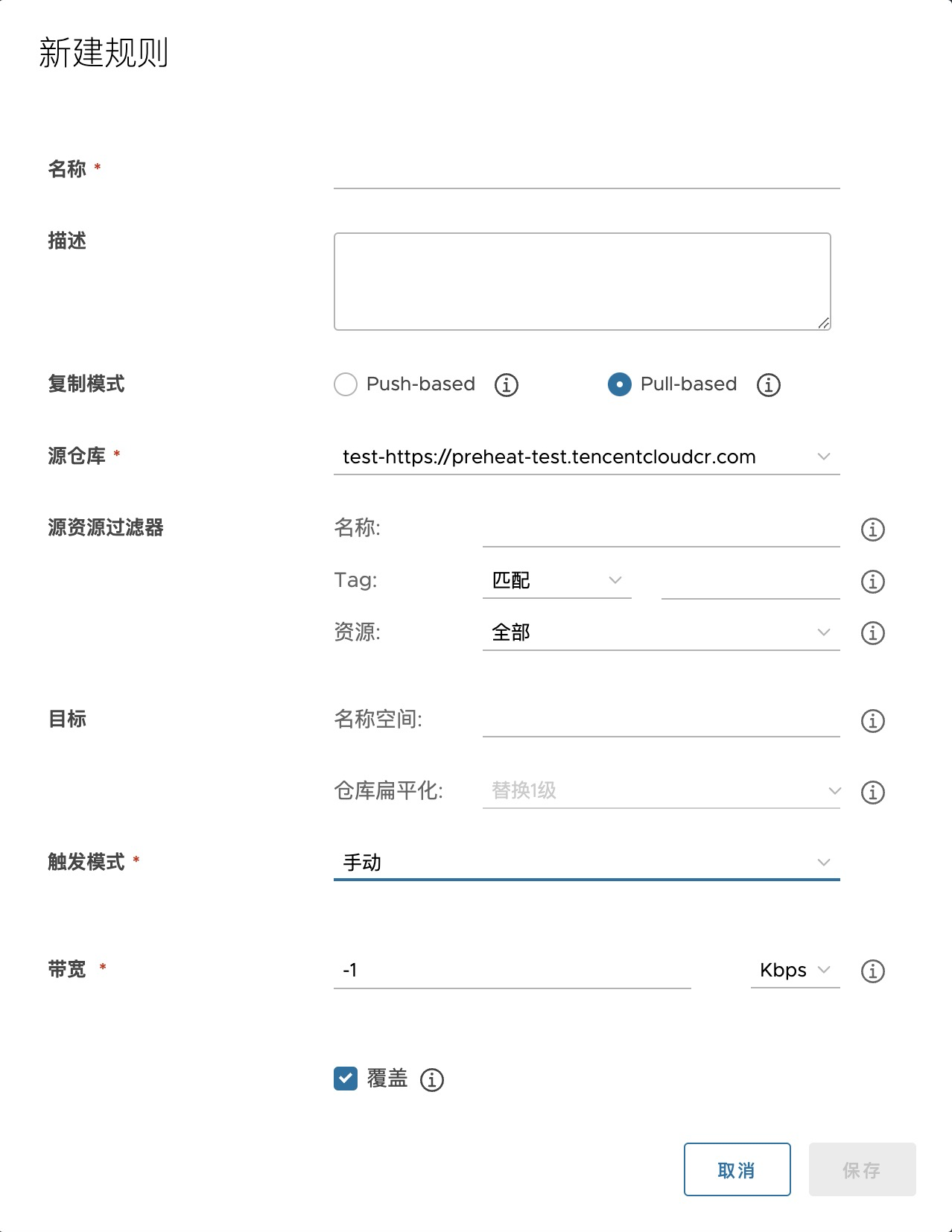

Replication rule description

Pull-based replication rules

Pull resources from the remote registry into the local Harbor.

```json
{
  "name": "sync_test",
  "description": "",
  "src_registry": {
    "creation_time": "2022-02-23T04:01:40.344Z",
    "credential": {
      "access_key": "AKIDNa7NM5WgZBWaNvEH3XliOoywd3D6i37v",
      "access_secret": "*****",
      "type": "basic"
    },
    "id": 1,
    "name": "test",
    "status": "healthy",
    "type": "tencent-tcr",
    "update_time": "2022-02-23T04:01:40.344Z",
    "url": "https://preheat-test.tencentcloudcr.com"
  },
  "dest_registry": null,
  "dest_namespace": "library",
  "dest_namespace_replace_count": 1,
  "trigger": {
    "type": "manual",
    "trigger_settings": {
      "cron": ""
    }
  },
  "enabled": true,
  "deletion": false,
  "override": false,
  "speed": -1,
  "filters": [
    {
      "type": "name",
      "value": "preheat/**"
    },
    {
      "type": "tag",
      "decoration": "matches",
      "value": "latest"
    },
    {
      "type": "resource",
      "value": "image"
    }
  ]
}
```

Parameter description

| Parameter | Description |
|---|---|
| name | Replication rule name |
| description | Replication rule description |
| src_registry | Source registry; obtain this object from the list-registries API |
| filters | List of filters applied to source resources |
| dest_registry | Destination registry; `null` in pull-based mode |
| dest_namespace | Destination namespace. If left empty, resources are placed in a namespace with the same name as the source namespace (created automatically if it does not exist) |
| dest_namespace_replace_count | Flattening level applied to the source repository path |
| trigger | Trigger mode |
| enabled | Whether the rule is enabled |
| deletion | Whether deletion operations are replicated |
| override | Whether to overwrite resources with the same name at the destination |
| speed | Bandwidth limit in Kbps; -1 means unlimited |
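Where an image lands depends on `dest_namespace` and `dest_namespace_replace_count` together. Our reading of Harbor's replication documentation (verify against your Harbor version) is: -1 keeps the full source path, 0 flattens all levels, and N removes the first N levels of the source repository path before it is placed under `dest_namespace`. The hypothetical helper below applies that rule to predict the destination path; it assumes a non-empty `dest_namespace`:

```shell
#!/usr/bin/env bash
# Sketch: predict the destination repository of a pull-based replication.
# dest_repo_path is a hypothetical helper implementing our reading of
# Harbor's flattening rule; it is not a Harbor API.
set -euo pipefail

# dest_repo_path SRC_REPO DEST_NAMESPACE REPLACE_COUNT
dest_repo_path() {
  local src="$1" dest_ns="$2" count="$3"
  local -a parts
  IFS='/' read -r -a parts <<< "$src"
  local n=${#parts[@]} drop rest
  if [ "$count" -eq -1 ]; then
    drop=0                   # no flattening: keep the whole source path
  elif [ "$count" -eq 0 ] || [ "$count" -ge "$n" ]; then
    drop=$((n - 1))          # flatten all levels: keep only the last component
  else
    drop=$count              # flatten N levels: remove the first N components
  fi
  rest=$(IFS='/'; echo "${parts[*]:drop}")
  echo "$dest_ns/$rest"
}

dest_repo_path preheat/nginx library 1
# prints: library/nginx
```

With the rule above, the example rule in this section (`preheat/**`, `dest_namespace: library`, count 1) would place `preheat/nginx` at `library/nginx`.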

Description of src_registry

```json
"src_registry": {
  "creation_time": "2022-02-23T03:49:16.652Z",
  "credential": {
    "access_key": "AKIDNa7NM5WgZBWaNvEH3XliOoywd3D6i37v",
    "access_secret": "*****",
    "type": "basic"
  },
  "id": 7,
  "name": "test",
  "status": "healthy",
  "type": "tencent-tcr",
  "update_time": "2022-02-23T03:49:16.652Z",
  "url": "https://preheat-test.tencentcloudcr.com"
},
```

| Parameter | Description |
|---|---|
| creation_time | Registry creation time |
| credential.access_key | Registry access_key |
| credential.access_secret | Registry access_secret (masked as `*****` in responses) |
| credential.type | Registry credential type |
| id | Unique ID of the registry in Harbor (assigned automatically) |
| name | Registry name |
| status | Registry status |
| type | Registry type |
| update_time | Registry update time |
| url | Registry URL |

Description of filters

```json
"filters": [
  {
    "type": "name",
    "value": "preheat/**"
  },
  {
    "type": "tag",
    "decoration": "matches",
    "value": "latest"
  },
  {
    "type": "resource",
    "value": "image"
  }
]
```

- Resource name filter

| Parameter | Description |
|---|---|
| type | `name`: resource name filter |
| value | Resource name pattern. Leave empty or use `**` to match all resources; `library/**` matches only resources under library |

- Tag filter

| Parameter | Description |
|---|---|
| type | `tag`: tag filter |
| decoration | `matches` or `excludes` |
| value | Tag/version pattern. Leave empty or use `**` to match all; `1.0*` matches only tags/versions starting with `1.0` |

- Resource type filter

| Parameter | Description |
|---|---|
| type | `resource`: resource type filter |
| value | `image`, `chart`, or empty (images, Helm charts, or all resources) |

Trigger (trigger mode)

| Parameter | Description |
|---|---|
| type | `manual` or `scheduled` |
| trigger_settings.cron | Cron expression (used when `type` is `scheduled`) |
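For `type: scheduled`, `trigger_settings.cron` holds the schedule. As far as we know, Harbor's scheduler expects six-field cron expressions with a leading seconds field (for example `0 0 2 * * *` for 02:00 every day); confirm this with your Harbor version. A sketch of a hypothetical helper that emits the `trigger` object and rejects expressions without six fields:

```shell
#!/usr/bin/env bash
# Sketch: build the "trigger" object of a replication policy.
# trigger_json is a hypothetical helper; the six-field cron format is an
# assumption about Harbor's scheduler.
set -euo pipefail

# trigger_json [CRON]: manual trigger without CRON, scheduled trigger with it
trigger_json() {
  local cron="${1:-}"
  if [ -z "$cron" ]; then
    printf '{"type": "manual", "trigger_settings": {"cron": ""}}\n'
    return 0
  fi
  local -a fields
  # read splits on whitespace and does not expand the "*" fields.
  read -r -a fields <<< "$cron"
  if [ "${#fields[@]}" -ne 6 ]; then
    echo "expected 6 cron fields (sec min hour day month weekday), got ${#fields[@]}" >&2
    return 1
  fi
  # Cron strings contain no quotes, so no JSON escaping is done here.
  printf '{"type": "scheduled", "trigger_settings": {"cron": "%s"}}\n' "$cron"
}

trigger_json '0 0 2 * * *'
# prints: {"type": "scheduled", "trigger_settings": {"cron": "0 0 2 * * *"}}
```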

Remote replication API

1. Create a remote replication policy

- Synchronize all repositories of a remote namespace to the local Harbor

```shell
# -k  skip TLS certificate verification
# -v  verbose output, including request/response headers
# -i  include the response headers in the output
# authorization: Basic <base64-encoded username:password>
# Obtain the src_registry object from the list-registries API
$ curl -k -v -i -X 'POST' \
  'https://cedhub.com/api/v2.0/replication/policies' \
  -H 'accept: application/json' \
  -H 'authorization: Basic YWRtaW46SGFyYm9yMTIzNDU=' \
  -H 'Content-Type: application/json' -d '
{
  "name": "test_sync",
  "description": "",
  "src_registry": {
    "creation_time": "2022-02-23T04:01:40.344Z",
    "credential": {
      "access_key": "AKIDNa7NM5WgZBWaNvEH3XliOoywd3D6i37v",
      "access_secret": "*****",
      "type": "basic"
    },
    "id": 1,
    "name": "test",
    "status": "healthy",
    "type": "tencent-tcr",
    "update_time": "2022-02-23T04:01:40.344Z",
    "url": "https://preheat-test.tencentcloudcr.com"
  },
  "dest_registry": null,
  "dest_namespace": "",
  "dest_namespace_replace_count": 1,
  "trigger": {
    "type": "manual",
    "trigger_settings": {
      "cron": ""
    }
  },
  "enabled": true,
  "deletion": false,
  "override": true,
  "speed": -1,
  "filters": [
    {
      "type": "name",
      "value": "preheat/**"
    }
  ]
}'
```

2. List remote replication policies

```shell
# -k  skip TLS certificate verification
# -v  verbose output, including request/response headers
# -i  include the response headers in the output
# authorization: Basic <base64-encoded username:password>
$ curl -k -v -i -X 'GET' \
  'https://cedhub.com/api/v2.0/replication/policies?page=1&page_size=10' \
  -H 'accept: application/json' \
  -H 'authorization: Basic YWRtaW46SGFyYm9yMTIzNDU='
```

3. Manually trigger the replication rule

```shell
# -k  skip TLS certificate verification
# -v  verbose output, including request/response headers
# -i  include the response headers in the output
# authorization: Basic <base64-encoded username:password>
# Take policy_id from the list-policies API
$ curl -k -v -i -X 'POST' \
  'https://cedhub.com/api/v2.0/replication/executions' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -H 'authorization: Basic YWRtaW46SGFyYm9yMTIzNDU=' \
  -d '{
    "policy_id": 1
  }'
```

4. List the execution records of remote replication tasks

```shell
# -k  skip TLS certificate verification
# -v  verbose output, including request/response headers
# -i  include the response headers in the output
# authorization: Basic <base64-encoded username:password>
# Take policy_id from the list-policies API
$ curl -k -v -i -X 'GET' \
  'https://cedhub.com/api/v2.0/replication/executions?page=1&page_size=10&policy_id=1' \
  -H 'accept: application/json' \
  -H 'authorization: Basic YWRtaW46SGFyYm9yMTIzNDU='
```
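Triggering an execution returns before the replication finishes, so scripts usually poll the execution list. A sketch with a hypothetical `wait_for_status` helper: it repeatedly runs a command that prints the latest status and stops once that status is no longer `InProgress`. The status names (`InProgress`, `Succeed`, `Failed`, `Stopped`), the response shape and the ordering of the list are assumptions about Harbor v2.x:

```shell
#!/usr/bin/env bash
# Sketch: poll until a replication execution leaves the InProgress state.
# wait_for_status is a hypothetical helper; status names are assumptions.
set -euo pipefail

# wait_for_status FETCH_CMD [INTERVAL_SECONDS] [MAX_TRIES]
# FETCH_CMD is evaluated each round and must print a single status word.
# Returns 0 on Succeed, 1 on any other final status, 2 on timeout.
wait_for_status() {
  local fetch_cmd="$1" interval="${2:-5}" max_tries="${3:-60}" status i
  for ((i = 1; i <= max_tries; i++)); do
    # A failed fetch (e.g. a network error) is treated as "still running".
    status=$(eval "$fetch_cmd") || status="InProgress"
    if [ "$status" != "InProgress" ]; then
      echo "$status"
      if [ "$status" = "Succeed" ]; then
        return 0
      fi
      return 1
    fi
    sleep "$interval"
  done
  echo "timed out after $max_tries tries" >&2
  return 2
}

# Usage (assumes jq is installed and the first entry is the newest run):
#   latest() {
#     curl -sk 'https://cedhub.com/api/v2.0/replication/executions?page=1&page_size=1&policy_id=1' \
#       -H 'authorization: Basic YWRtaW46SGFyYm9yMTIzNDU=' | jq -r '.[0].status'
#   }
#   wait_for_status latest 10 90
```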
