1. Install pacemaker and corosync on server4 and server5

yum install pacemaker corosync -y

2. Modify the configuration file

cd /etc/corosync/

cp corosync.conf.example corosync.conf

vim corosync.conf

# Please read the corosync.conf.5 manual page

compatibility: whitetank

totem {

version: 2

secauth: off

threads: 0

interface {

ringnumber: 0

bindnetaddr: 172.25.70.0 # Network segment for cluster work

mcastaddr: 226.94.1.3 # Multicast ip

mcastport: 5405 # Multicast port number

ttl: 1

}

}

logging {

fileline: off

to_stderr: no

to_logfile: yes

to_syslog: yes

logfile: /var/log/cluster/corosync.log

debug: off

timestamp: on

logger_subsys {

subsys: AMF

debug: off

}

}

amf {

mode: disabled

}

service { #After starting corosync, start pacemaker

name: pacemaker

ver: 0

}

3. Send the modified configuration file to the other node

scp corosync.conf server5:/etc/corosync/

Start corosync on server5 and server4

/etc/init.d/corosync start
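If the two nodes do not find each other, one thing worth checking is that bindnetaddr in corosync.conf is the network address of the cluster subnet, not a host address. The following is a minimal sketch of that calculation; the function name network_addr is made up for illustration, and the example reuses the 172.25.70.100 address with a 24-bit netmask that appears later in this walkthrough.

```shell
#!/bin/sh
# network_addr IP PREFIX
# Hypothetical helper: print the IPv4 network address for IP/PREFIX.
network_addr() {
    ip=$1
    prefix=$2

    # Split the dotted quad into its four octets.
    a=${ip%%.*}; rest=${ip#*.}
    b=${rest%%.*}; rest=${rest#*.}
    c=${rest%%.*}
    d=${rest#*.}

    ip_int=$(( (a << 24) | (b << 16) | (c << 8) | d ))

    # Build the netmask; a prefix of 0 matches every address.
    if [ "$prefix" -eq 0 ]; then
        mask=0
    else
        mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    fi

    # Clear the host bits and print the result as a dotted quad.
    net=$(( ip_int & mask ))
    printf '%d.%d.%d.%d\n' \
        $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
        $(( (net >> 8) & 255 )) $(( net & 255 ))
}

network_addr 172.25.70.100 24   # prints 172.25.70.0
```

The printed value matches the bindnetaddr used in the configuration file above.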

4. Check the logs on both nodes to see if there are any errors
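One possible way to do this check is to filter the log file for suspicious lines. The sketch below assumes the logfile path set in corosync.conf above; the function name scan_log_errors and the keyword list (error, fail, crit) are assumptions for illustration, not an official list of corosync messages.

```shell
#!/bin/sh
# scan_log_errors [FILE]
# Hypothetical helper: print numbered log lines that mention an error.
# FILE defaults to the logfile configured in corosync.conf.
scan_log_errors() {
    logfile=${1:-/var/log/cluster/corosync.log}

    if [ ! -r "$logfile" ]; then
        echo "cannot read $logfile" >&2
        return 2
    fi

    # -i: ignore case, -n: prefix line numbers, -E: extended regex.
    # The keyword list is an assumption; adjust it as needed.
    grep -inE 'error|fail|crit' "$logfile"
}
```

Running scan_log_errors on each node returns exit status 1 and prints nothing when no matching line is found, which is the result you want here.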

5. Install crmsh and pssh on server4 and server5

yum install crmsh-1.2.6-0.rc2.2.1.x86_64.rpm pssh-2.3.1-2.1.x86_64.rpm

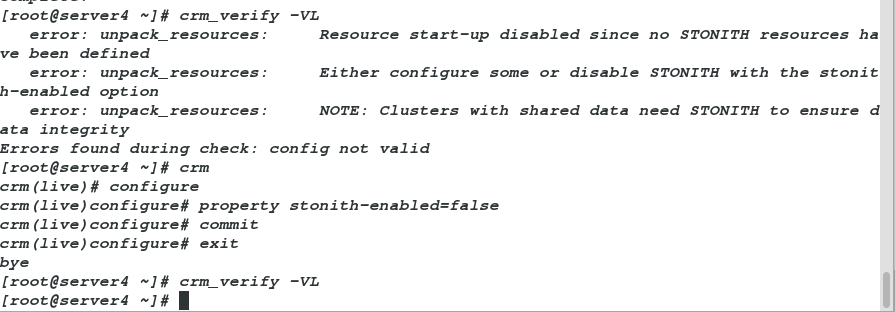

6. Run crm_verify -LV on both nodes. It reports an error at first; once the property below is added, the check passes

7. On server4

[root@server4 ~]# crm

crm(live)# configure

crm(live)configure# show

node server1

node server4

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2"

crm(live)configure# property stonith-enabled=false

# STONITH ("shoot the other node in the head") is a component of the Heartbeat package.

# It lets the cluster automatically reset a failed server through a remotely controlled power supply connected to a healthy server.

# A STONITH device is one that switches power off in response to software commands.

crm(live)configure# commit

crm(live)configure# show

node server1

node server4

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false"

On server4:

# crm

crm(live)# configure

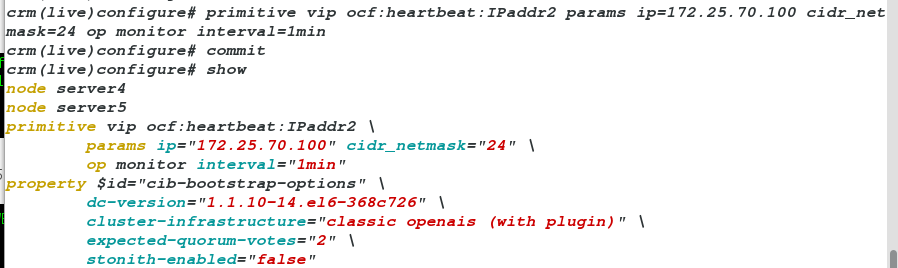

crm(live)configure# primitive vip ocf:heartbeat:IPaddr2 params ip=172.25.70.100 cidr_netmask=24 op monitor interval=1min ### Add the VIP resource, monitored every minute

crm(live)configure# commit

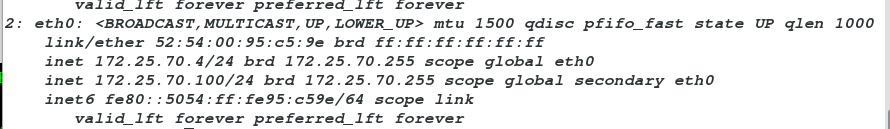

View the virtual IP, then add the following property

crm(live)configure# property no-quorum-policy=ignore

### Ignore loss of quorum. When one host goes down, the other takes over immediately

# This turns off the check on the number of nodes in the cluster. If node server1 fails, node server4 stops receiving its heartbeat and takes over the resources directly, so the failure of one node does not bring down the whole cluster

crm(live)configure# commit

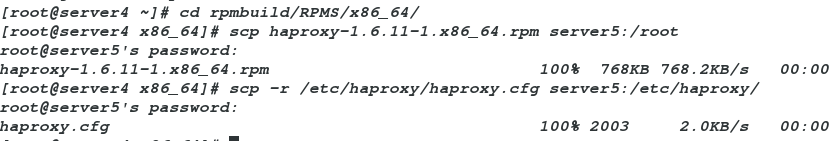

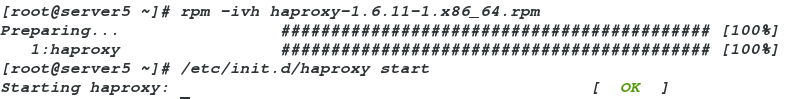

Configure the haproxy service on server4

scp rpmbuild/RPMS/x86_64/haproxy-1.6.11-1.x86_64.rpm server5:/root

rpm -ivh haproxy-1.6.11-1.x86_64.rpm ### Install on server1 and server4

scp /etc/haproxy/haproxy.cfg server5:/etc/haproxy ### Send the configuration file

This rpm package is in /root/haproxy-1.6.11/rpmbuild/rpms/x86_

Start haproxy on both nodes: /etc/init.d/haproxy start

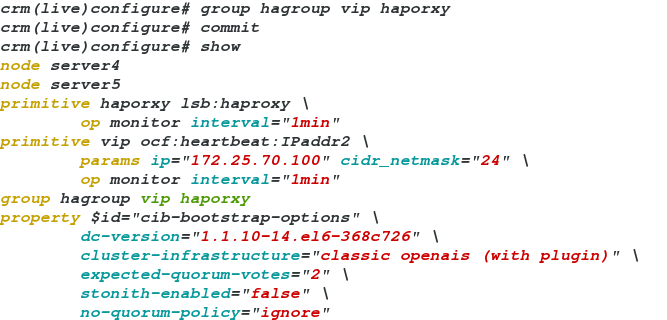

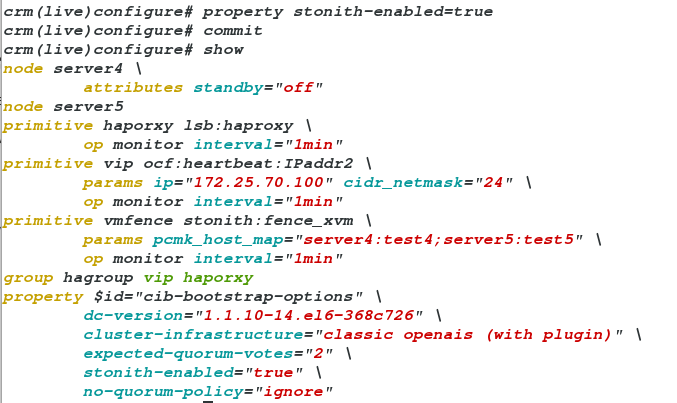

crm(live)configure# primitive haporxy lsb:haproxy op monitor interval=1min

crm(live)configure# commit

crm(live)configure# group hagroup vip haporxy ###Add resource management group

crm(live)configure# commit

crm(live)configure# exit

bye

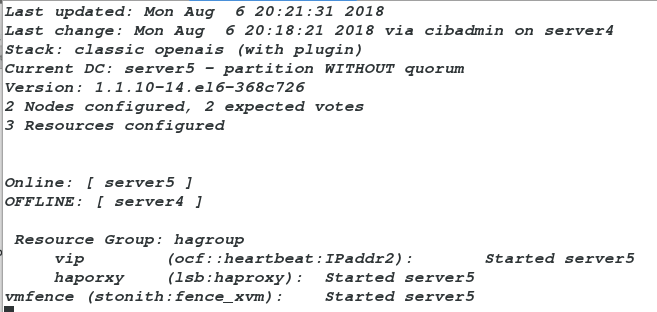

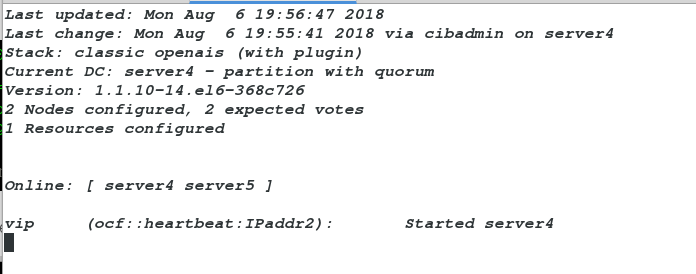

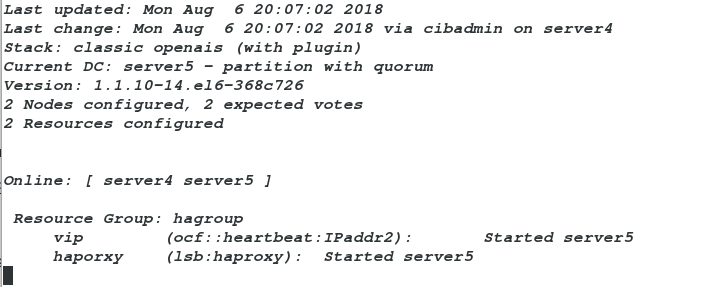

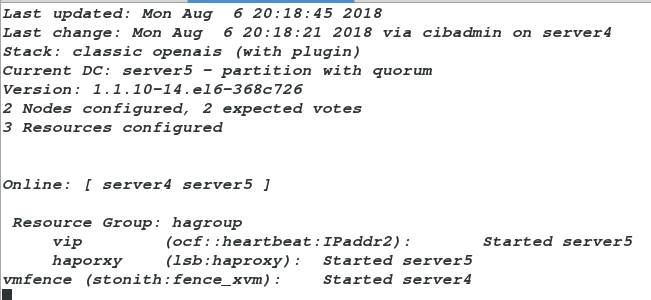

9. Monitor the cluster with the crm_mon command

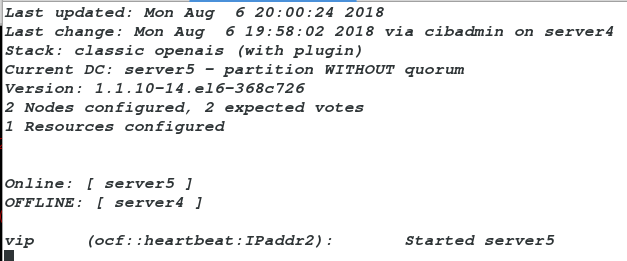

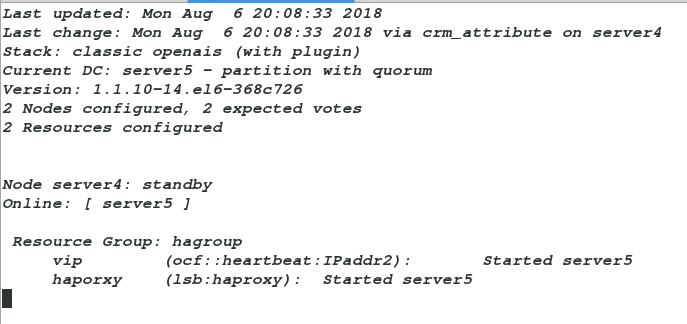

10. Running crm node standby on server1 or server4 stops the services on that node. Check with the monitoring command on the other node and you will see that the node has gone offline.

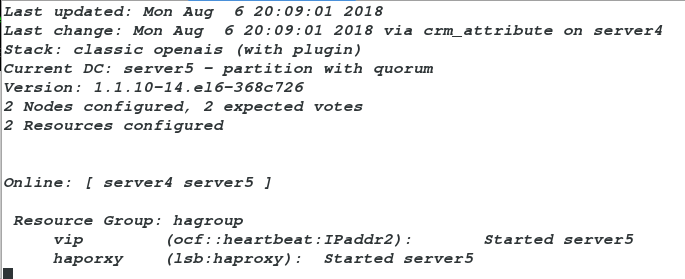

11. Running crm node online on server1 or server4 starts the services on that node again
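To confirm where a resource ended up after a standby or online switch, the one-shot output of crm_mon -1 can be filtered. The sketch below assumes that resources are listed in the form `name (agent): Started node`, as in the Pacemaker 1.1 releases used here; the function name resource_node is made up for illustration, and other versions may print a different layout.

```shell
#!/bin/sh
# resource_node NAME
# Hypothetical helper: read crm_mon -1 output on stdin and print the
# node on which resource NAME is reported as Started.
# Assumes lines shaped like:  vip (ocf::heartbeat:IPaddr2): Started server4
resource_node() {
    awk -v res="$1" '
        # Need at least: name, agent, state (the node follows "Started").
        NF >= 3 && $1 == res && $(NF-1) == "Started" { print $NF }
    '
}

# Usage on a cluster node:
#   crm_mon -1 | resource_node vip
```

After crm node standby on the active node, the same call should print the name of the other node once the resources have moved.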

Add fence device

On the physical machine:

systemctl status fence_virtd ### Check whether the fence service is running

On server4:

stonith_admin -I

### Check whether fence_xvm is in the list. If not, install fence-virt-0.2.3-15.el6.x86_64

fence_xvm

fence_wti

fence_vmware_soap

fence_vmware_helper

fence_vmware

fence_virt

stonith_admin -M -a fence_xvm ### Check whether the fence agent set up earlier works normally

crm(live)configure# primitive vmfence stonith:fence_xvm params pcmk_host_map="server1:test1;server4:test4" op monitor interval=1min

# Add the fence resource. In server1:test1, server1 is the host name and test1 is the real virtual machine name

crm(live)configure# commit

crm(live)configure# property stonith-enabled=true ### Turn the previously disabled stonith back on

crm(live)configure# commit ### Commit after every change

crm(live)configure# exit
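The pcmk_host_map value used for the fence resource is a list of host:vm pairs separated by semicolons. As a small illustration of that format only (Pacemaker parses the value itself), the sketch below looks up the virtual machine name for a host; the function name vm_for_host is made up for this example.

```shell
#!/bin/sh
# vm_for_host MAP HOST
# Hypothetical helper: print the virtual machine name that MAP assigns
# to HOST, where MAP looks like "server1:test1;server4:test4".
# Returns non-zero when HOST is not in the map.
vm_for_host() {
    map=$1
    host=$2

    # One entry per line, then split each entry on ':'.
    printf '%s\n' "$map" | tr ';' '\n' |
        awk -F: -v h="$host" '
            $1 == h { print $2; found = 1 }
            END     { exit !found }
        '
}

vm_for_host "server1:test1;server4:test4" server4   # prints test4
```

If a host name is missing from the map, the lookup fails, which mirrors the situation in which the fence agent would not know which virtual machine to reset.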

Monitor with crm_mon

If the resource was added successfully, the fence device will run on the peer node, server4

[root@server4 ~]# echo c > /proc/sysrq-trigger ### Crash the system on server4; server4 will then restart automatically

The fence device switches automatically to server1. At this point the high-availability configuration is working