Author: duktig

Blog: https://duktig.cn (first article)

Excellent still work hard. May you enjoy what you give and enjoy what you get.

See github knowledge base for more articles: https://github.com/duktig666/knowledge

background

After learning Hadoop, do you feel that writing a MapReduce program is very complex, and it requires a lot of development cost to conduct analysis and statistics. Then let's learn about Hive, another member of the Hadoop ecosystem. Let's learn how to use SQL like language to quickly query and analyze data.

In the last article, we learned about Hive's overview. In this article, we'll learn about Hive's DDL statements and DML statements (key points).

Hive series articles are as follows:

DDL

Create database

CREATE DATABASE [IF NOT EXISTS] database_name [COMMENT database_comment] [LOCATION hdfs_path] [WITH DBPROPERTIES (property_name=property_value, ...)];

matters needing attention:

- To avoid existing errors in the database to be created, add the if not exists judgment

- COMMENT: database COMMENT

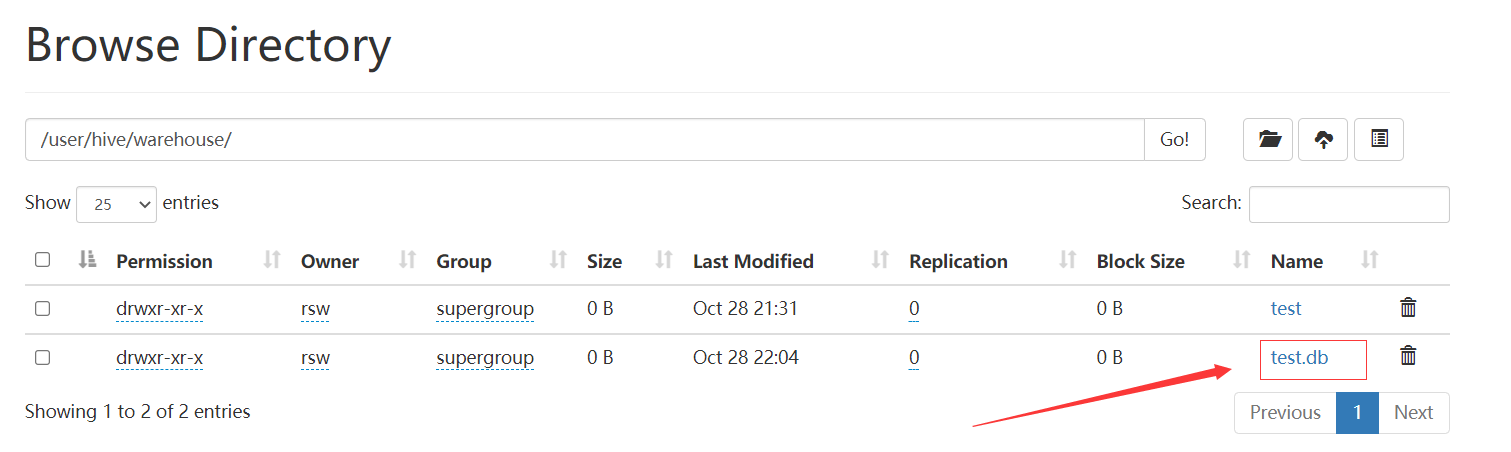

- LOCATION: the storage path of the database on HDFS (the default storage path is / user/hive/warehouse/*.db).

- WITH: specify database parameters (not commonly used)

example:

create database if not exists test;

result:

query data base

# show database show databases; # Filter the database that displays the query show databases like 'db_hive*'; # Display database information desc database test; # Display database details, extended desc database extended test;

Switch database

use test;

modify the database

Users can use the ALTER DATABASE command to set key value pair attribute values for DBPROPERTIES of a database to describe the attribute information of the database.

alter database test set dbproperties('createtime'='20170830');

Delete database

# Delete empty database drop database test; # If the deleted database does not exist, it is best to use if exists to judge whether the database exists drop database if exists test; # If the database is not empty, you can use the cascade command to forcibly delete it drop database test cascade;

Create table

CREATE [EXTERNAL] TABLE [IF NOT EXISTS] table_name [(col_name data_type [COMMENT col_comment], ...)] [COMMENT table_comment] [PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)] [CLUSTERED BY (col_name, col_name, ...) [SORTED BY (col_name [ASC|DESC], ...)] INTO num_buckets BUCKETS] [ROW FORMAT row_format] [STORED AS file_format] [LOCATION hdfs_path] [TBLPROPERTIES (property_name=property_value, ...)] [AS select_statement]

Field interpretation:

- EXTERNAL: allows the user to create an EXTERNAL table. When deleting a table, the metadata and data of the internal table will be deleted together, while the EXTERNAL table only deletes the metadata and does not delete the data.

- LOCATION: Specifies the LOCATION information of the table

- COMMENT: adds comments to tables and columns

- PARTITIONED BY: creates a partitioned table

- CLUSTERED BY: creates a bucket table

- ROW FORMAT row_format: defines the format of the row

- [TBLPROPERTIES (property_name=property_value,...)]: additional properties

- AS: followed by a query statement to create a table according to the query results

- SORTED BY: not commonly used. Sort one or more columns in the bucket separately

- STORED AS: Specifies the storage file type

- Common storage file types: SEQUENCEFILE (binary sequence file), TEXTFILE (text), RCFILE (column storage format file)

- If the file data is plain text, you can use STORED AS TEXTFILE. If the data needs to be compressed, use stored as sequence file.

Management table

The tables created by default are so-called management tables, sometimes referred to as internal tables. Because of this table, Hive controls (more or less) the life cycle of the data. By default, Hive stores the data of these tables in a subdirectory of the directory defined by the configuration item Hive.metastore.warehouse.dir (for example, / user/hive/warehouse).

When we delete a management table, Hive will also delete the data in the table. Management tables are not suitable for sharing data with other tools.

External table

Because the table is an external table, Hive does not think it has the data completely. Deleting the table will not delete the data, but the metadata information describing the table will be deleted.

Manage usage scenarios for tables and external tables

The collected website logs are regularly flowed into HDFS text files every day. Do a lot of statistical analysis based on the external table (original log table). The intermediate table and result table used are stored in the internal table, and the data is entered into the internal table through SELECT+INSERT.

Manage the conversion between tables and external tables

# Type of query table MANAGED_TABLE

desc formatted test;

# Modify internal table test to external table

alter table test set tblproperties('EXTERNAL'='TRUE');

Note: ('EXTERNAL'='TRUE') and ('EXTERNAL'='FALSE') are fixed and case sensitive!

Modify table

# rename table ALTER TABLE table_name RENAME TO new_table_name; # Update column ALTER TABLE table_name CHANGE [COLUMN] col_old_name col_new_name column_type [COMMENT col_comment] [FIRST|AFTER column_name] # Add and replace columns ALTER TABLE table_name ADD|REPLACE COLUMNS (col_name data_type [COMMENT col_comment], ...)

Note:

- ADD means to ADD a new field. The field position is after all columns (before the partition column)

- REPLACE means to REPLACE all fields in the table.

Delete table

drop table test;

DML

Data import

Load data into a table (load)

load data [local] inpath 'Data path' [overwrite] into table test [partition (partcol1=val1,...)];

Field interpretation:

- load data: indicates loading data

- Local: indicates loading data from local to hive table; Otherwise, load data from HDFS to hive table

- inpath: indicates the path to load data

- Overwrite: it means to overwrite the existing data in the table, otherwise it means to append

- into table: indicates which table to load

- test: indicates the specific table

- Partition: indicates to upload to the specified partition

Insert data into a table through a query statement (insert)

# Create a table create table student_par(id int, name string) row format delimited fields terminated by '\t'; # Basic insert data insert into table student_par values(1,'wangwu'),(2,'zhaoliu'); # Basic mode insertion (based on the query results of a single table) insert overwrite table student_par select id, name from student where month='201709'; # Multi table (multi partition) insertion mode (based on query results of multiple tables) from student insert overwrite table student partition(month='201707') select id, name where month='201709' insert overwrite table student partition(month='201706') select id, name where month='201709';

Insert into: insert into a table or partition by appending data. The original data will not be deleted

insert overwrite: it will overwrite the existing data in the table

Note: insert does not support inserting partial fields

Create tables and load data in query statements (As Select)

create table if not exists student3 as select id, name from student;

Create a table based on the query results (the query results are added to the newly created table)

Import data into the specified Hive table

Note: export first, and then import the data.

import table student2 from '/user/hive/warehouse/export/student';

Data export

Insert export

# Export query results to local insert overwrite local directory '/opt/module/hive/data/export/student' select * from student; # Format and export query results to local insert overwrite local directory '/opt/module/hive/data/export/student1' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' select * from student; # Export the query results to HDFS (no local) insert overwrite directory '/user/atguigu/student2' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' select * from student;

Hadoop command export to local

dfs -get /user/hive/warehouse/student/student.txt /opt/module/data/export/student3.txt;

Hive Shell command export

bin/hive -e 'select * from default.student;' > /opt/module/hive/data/export/student4.txt;

Export to HDFS

export table default.student to '/user/hive/warehouse/export/student';

Clear data in table (Truncate)

Note: Truncate can only delete management tables, not data in external tables

truncate table student;

Basic query

Data preparation (auxiliary query)

Raw data preparation

dept.txt:

10 ACCOUNTING 1700 20 RESEARCH 1800 30 SALES 1900 40 OPERATIONS 1700

emp.txt:

7369 SMITH CLERK 7902 1980-12-17 800.00 20 7499 ALLEN SALESMAN 7698 1981-2-20 1600.00 300.00 30 7521 WARD SALESMAN 7698 1981-2-22 1250.00 500.00 30 7566 JONES MANAGER 7839 1981-4-2 2975.00 20 7654 MARTIN SALESMAN 7698 1981-9-28 1250.00 1400.00 30 7698 BLAKE MANAGER 7839 1981-5-1 2850.00 30 7782 CLARK MANAGER 7839 1981-6-9 2450.00 10 7788 SCOTT ANALYST 7566 1987-4-19 3000.00 20 7839 KING PRESIDENT 1981-11-17 5000.00 10 7844 TURNER SALESMAN 7698 1981-9-8 1500.00 0.00 30 7876 ADAMS CLERK 7788 1987-5-23 1100.00 20 7900 JAMES CLERK 7698 1981-12-3 950.00 30 7902 FORD ANALYST 7566 1981-12-3 3000.00 20 7934 MILLER CLERK 7782 1982-1-23 1300.00 10

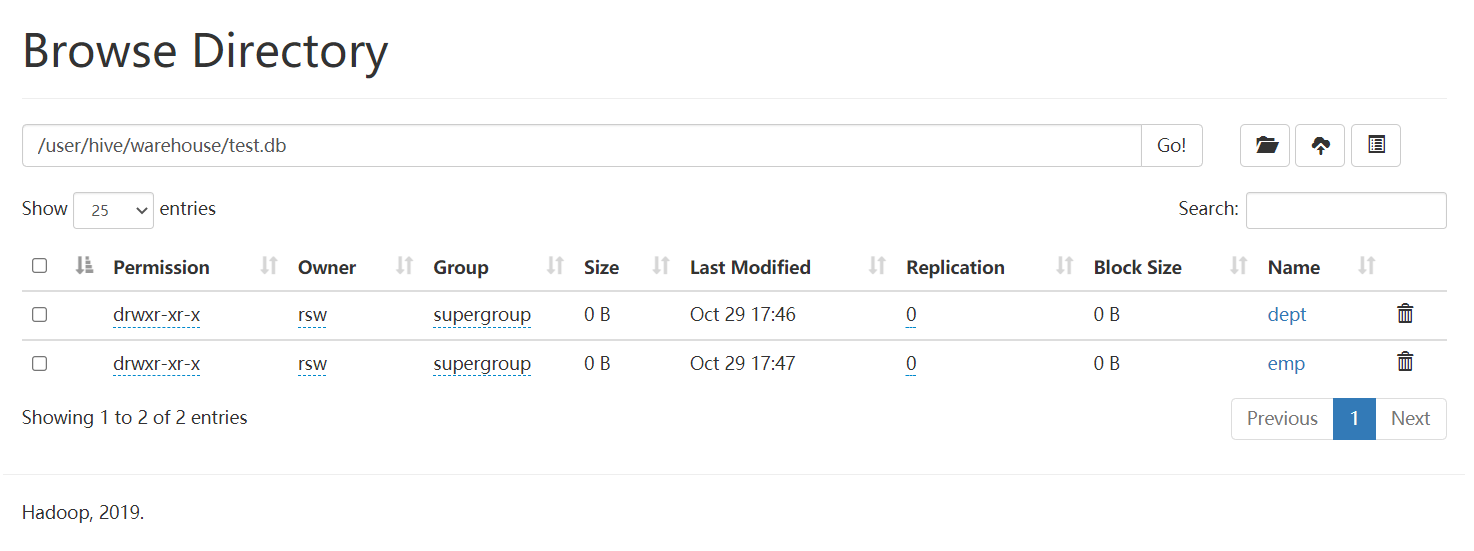

Using test database

use test;

Create department table

create table if not exists dept( deptno int, dname string, loc int ) row format delimited fields terminated by ' ';

Create employee table

create table if not exists emp( empno int, ename string, job string, mgr int, hiredate string, sal double, comm double, deptno int ) row format delimited fields terminated by ' ';

Import data

load data local inpath './dept.txt' overwrite into table dept; load data local inpath './emp.txt' overwrite into table emp;

Full table and specific column queries

# Full table query select * from emp; select empno,ename,job,mgr,hiredate,sal,comm,deptno from emp ; # Select a specific column query select empno, ename from emp;

matters needing attention:

- SQL language is case insensitive.

- SQL can be written on one or more lines

- Keywords cannot be abbreviated or broken

- Each clause should be written separately.

- Use indentation to improve the readability of statements.

alias

Rename a column / table to facilitate calculation, followed by the column name, or add the keyword 'AS' between the column name and alias.

select ename AS name, deptno dn from emp;

Some query keywords that need special attention

Most of the keywords in Hive are the same as mysql, so they are not listed one by one. Here are some special keywords

RLIKE

RLIKE clause is an extension of this function in Hive, which can specify matching conditions through the more powerful language of Java regular expression.

# Find employee information with A in name select * from emp where ename RLIKE '[A]';

Group By group statement

The GROUP BY statement is usually used with an aggregate function to group one or more queued results, and then aggregate each group.

# Calculate the average salary of each department in emp table select t.deptno, avg(t.sal) avg_sal from emp t group by t.deptno; # Calculate emp the maximum salary for each position in each department select t.deptno, t.job, max(t.sal) max_sal from emp t group by t.deptno, t.job;

Having statement

Differences between having and where

- Grouping functions cannot be written after where, but can be used after having.

- having is only used for group by group statistics statements.

# Find the average salary of each department select deptno, avg(sal) from emp group by deptno; # The average salary of each department is greater than 2000 select deptno, avg(sal) avg_sal from emp group by deptno having avg_sal > 2000;

join query

The same as mysql, especially full external connection

Full outer join: all records in all tables that meet the conditions of WHERE statement will be returned. If the specified field of any table does not have a qualified value, NULL value is used instead.

select e.empno, e.ename, d.deptno from emp e full join dept d on e.deptno = d.deptno;

sort

Global sorting

Order By (default ASC ascending): Global sorting, with only one Reducer

select * from emp order by sal; select * from emp order by sal desc;

Sort by alias

# Sort by twice the employee's salary select ename, sal*2 twosal from emp order by twosal;

Multiple column sorting

# Sort by department and salary in ascending order select ename, deptno, sal from emp order by deptno, sal;

Sort By within each Reduce

sort by: for large-scale data sets, order by is very inefficient. In many cases, global sorting is not required. In this case, sort by can be used.

Sort by generates a sort file for each reducer. Each reducer is sorted internally, not for the global result set.

The example operation is as follows:

-

Set the number of reduce

set mapreduce.job.reduces=3;

-

View and set the number of reduce

set mapreduce.job.reduces;

-

View employee information in descending order according to department number

select * from emp sort by deptno desc;

-

Import the query results into the file (sorted by department number in descending order)

insert overwrite local directory './sortby-result' select * from emp sort by deptno desc;

Distribution by

Distribution by: in some cases, we need to control which reducer a particular row should go to, usually for subsequent aggregation operations. The distribute by clause can do this. distribute by is similar to partition (custom partition) in MR. it is used in combination with sort by.

For the distribution by test, you must allocate multiple reduce for processing, otherwise you can't see the effect of distribution by.

The example operation is as follows:

First, it is divided by department number, and then sorted in descending order by employee number.

set mapreduce.job.reduces=3; insert overwrite local directory './distribute-result' select * from emp distribute by deptno sort by empno desc;

matters needing attention:

- The partition rule of distribution by is to divide the hash code of the partition field by the number of reduce, and then divide the same remainder into a region.

- Hive requires that the DISTRIBUTE BY statement be written before the SORT BY statement.

Cluster By

When the distribution by and sorts by fields are the same, the cluster by method can be used.

In addition to the function of distribute by, cluster by also has the function of sort by. However, sorting can only be in ascending order, and the sorting rule cannot be ASC or DESC.

# The following two expressions are equivalent select * from emp cluster by deptno; select * from emp distribute by deptno sort by deptno;

Note: division by department number is not necessarily a fixed value, but can be divided into departments 20 and 30

Go inside the next section.