1, Introduction

Hive has three types of UDFs: (normal) UDF, user-defined aggregate function (UDAF), and user-defined table generating function (UDTF).

- UDF: the operation acts on a single data row and produces a data row as output. Most functions, such as mathematical and string functions, fall into this category.

- UDAF: accepts multiple input data rows and generates one output data row. COUNT and MAX are examples.

- UDTF: acts on a single data row and generates multiple data rows (i.e. a table) as output. The EXPLODE function, typically used together with LATERAL VIEW, is an example.
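The three shapes can be pictured in plain Java without any Hive classes. This is only an analogy, assuming that a row is a single string; the class and method names (`FunctionShapes`, `upper`, `count`, `explode`) are made up for illustration and are not Hive APIs.

```java
import java.util.Arrays;
import java.util.List;

public class FunctionShapes {

    // UDF shape: one input row -> one output row.
    public static String upper(String row) {
        return row == null ? null : row.toUpperCase();
    }

    // UDAF shape: many input rows -> one output row.
    // Null rows are skipped, similar to COUNT(col).
    public static long count(List<String> rows) {
        return rows.stream().filter(r -> r != null).count();
    }

    // UDTF shape: one input row -> many output rows.
    public static List<String> explode(String row) {
        return Arrays.asList(row.split(","));
    }

    public static void main(String[] args) {
        System.out.println(upper("hive"));
        System.out.println(count(Arrays.asList("a", "b", "c")));
        System.out.println(explode("a,b,c"));
    }
}
```

The only difference between the three is the cardinality of input and output, which is also what decides which Hive base class a function has to extend.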

2, Write UDF

Before developing a Hive UDF, we need to add one jar as a dependency: hive-exec.

    <dependency>
        <groupId>org.apache.hive</groupId>
        <artifactId>hive-exec</artifactId>
        <version>2.1.1</version>
        <scope>provided</scope>
    </dependency>

The default Maven scope is compile, which means the jar is required on the classpath during the compilation, testing, and running phases of the project. The scope specified here is provided, which means the jar is only required during compilation and testing. Because Hive already has this jar at runtime, there is no need to ship it again.

Next, we need to implement the UDF itself. At present, Hive offers two base classes for this: UDF and GenericUDF.

- UDF: a relatively simple class. The inherited base class is org.apache.hadoop.hive.ql.exec.UDF.

- GenericUDF: it is relatively complex and has better control over type checking. The inherited base class is org.apache.hadoop.hive.ql.udf.generic.GenericUDF.

Next, let's look at a simple UDF implementation class, Strip:

    package com.scb.dss.udf;

    import org.apache.commons.lang.StringUtils;
    import org.apache.hadoop.hive.ql.exec.Description;
    import org.apache.hadoop.hive.ql.exec.UDF;

    @Description(name = "strip",
            value = "_FUNC_(str) - Removes the leading and trailing space characters from str.")
    public class Strip extends UDF {

        // Remove whitespace from the beginning and end of str
        public String evaluate(String str) {
            if (str == null) {
                return null;
            }
            return StringUtils.strip(str);
        }

        // Remove any characters contained in stripChars from the beginning and end of str
        public String evaluate(String str, String stripChars) {
            if (str == null) {
                return null;
            }
            return StringUtils.strip(str, stripChars);
        }
    }

The Strip class has two evaluate methods. The first removes whitespace from the beginning and end of str, while the second removes any characters contained in stripChars from the beginning and end of str.

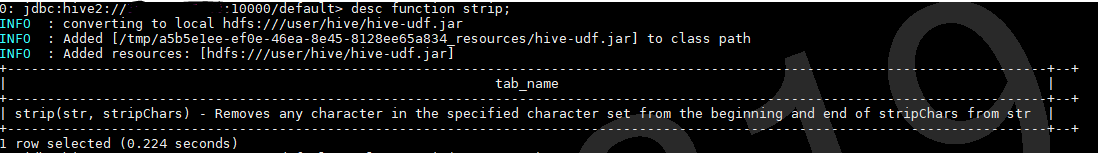
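To make the difference between the two overloads concrete, here is a minimal plain-Java sketch of the stripping behaviour. It is a hand-written stand-in for `StringUtils.strip`, not the commons-lang implementation, and the class name `StripSketch` and the helper `matches` are hypothetical.

```java
public class StripSketch {

    // One-argument form: strips whitespace from both ends.
    public static String strip(String str) {
        return strip(str, null);
    }

    // Two-argument form: strips any character that occurs in stripChars
    // from both ends. A null stripChars means "strip whitespace".
    public static String strip(String str, String stripChars) {
        if (str == null) {
            return null;
        }
        int start = 0;
        int end = str.length();
        // Advance past matching characters at the front.
        while (start < end && matches(str.charAt(start), stripChars)) {
            start++;
        }
        // Back up over matching characters at the end.
        while (end > start && matches(str.charAt(end - 1), stripChars)) {
            end--;
        }
        return str.substring(start, end);
    }

    // stripChars is treated as a set of characters, not as a substring.
    private static boolean matches(char c, String stripChars) {
        return stripChars == null
                ? Character.isWhitespace(c)
                : stripChars.indexOf(c) >= 0;
    }

    public static void main(String[] args) {
        System.out.println("[" + strip(" a b c ") + "]");
        System.out.println("[" + strip(" a b c a", "a") + "]");
        System.out.println("[" + strip(" a b c a", "a ") + "]");
    }
}
```

Note that stripChars is a set of characters: with "a " both the letter a and the space are stripped, in any order, until a character outside the set is reached.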

The @Description annotation documents the UDF; the text can be viewed later with the desc function <UDF> command. The annotation has three attributes: name, value, and extended.

- name: the name of the function

- value: describes the function. _FUNC_ is a placeholder that is replaced with the actual function name when the description is displayed by desc function

- extended: mainly used for usage examples, displayed by desc function extended <UDF>
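The placeholder substitution can be sketched in plain Java. The `Doc` annotation below is a hypothetical stand-in that mirrors the three attributes of Hive's @Description, and `describe` is an illustrative helper; neither is Hive's actual implementation.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

public class DescribeSketch {

    // Hypothetical stand-in for @Description with the same three attributes.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.TYPE)
    public @interface Doc {
        String name() default "";
        String value() default "";
        String extended() default "";
    }

    @Doc(name = "strip",
         value = "_FUNC_(str) - Removes the leading and trailing space characters from str.",
         extended = "Example:\n  > SELECT _FUNC_('  hive  ');")
    public static class Strip { }

    // Reads the annotation via reflection and substitutes the registered
    // function name for every _FUNC_ placeholder.
    public static String describe(Class<?> udfClass, String functionName, boolean extended) {
        Doc doc = udfClass.getAnnotation(Doc.class);
        if (doc == null) {
            return functionName + " is undocumented";
        }
        String text = doc.value();
        if (extended && !doc.extended().isEmpty()) {
            text = text + "\n" + doc.extended();
        }
        return text.replace("_FUNC_", functionName);
    }

    public static void main(String[] args) {
        System.out.println(describe(Strip.class, "strip", true));
    }
}
```

Because the name is substituted at display time, the same class can be registered under a different function name and its help text stays accurate.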

Other points needing attention:

- UDF names are case insensitive

- Hive supports Java's basic data types (as well as types such as java.util.Map and java.util.List) in a UDF.

- Hive also supports Hadoop basic data types, such as Text. Using Hadoop basic data types is recommended, because they allow object reuse, which reduces allocations and improves efficiency.
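The object-reuse idea can be sketched without Hadoop on the classpath. `MutableText` below is a hypothetical stand-in for a mutable Hadoop type such as Text, and `ReuseSketch` is an illustrative class rather than a real Hive UDF; the point is only that one result object is allocated once and overwritten for every row.

```java
public class ReuseSketch {

    // Hypothetical stand-in for a mutable Hadoop type such as Text.
    public static final class MutableText {
        private String value = "";

        public void set(String v) {
            value = v;
        }

        @Override
        public String toString() {
            return value;
        }
    }

    // Allocated once and overwritten on every call,
    // instead of creating a new result object per row.
    private final MutableText result = new MutableText();

    public MutableText evaluate(MutableText str) {
        if (str == null) {
            return null;
        }
        result.set(str.toString().trim());
        return result;
    }

    public static void main(String[] args) {
        ReuseSketch udf = new ReuseSketch();
        MutableText input = new MutableText();
        input.set("  a  ");
        MutableText first = udf.evaluate(input);
        input.set("  b  ");
        MutableText second = udf.evaluate(input);
        // Both references point at the same reused object.
        System.out.println(first == second);
    }
}
```

The trade-off is that the returned object is only valid until the next call, so a caller that needs to keep a value has to copy it first.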

Next, write a simple unit test:

    package com.scb.dss.udf;

    import org.junit.Assert;
    import org.junit.Test;

    public class StripTest {

        private Strip strip = new Strip();

        @Test
        public void evaluate() {
            System.out.println(strip.evaluate(" a b c "));
            Assert.assertEquals("a b c", strip.evaluate(" a b c "));

            System.out.println(strip.evaluate(" a b c a", "a"));
            Assert.assertEquals(" a b c ", strip.evaluate(" a b c a", "a"));

            System.out.println(strip.evaluate(" a b c a", "a "));
            Assert.assertEquals("b c", strip.evaluate(" a b c a", "a "));
        }
    }

3, Deploy UDF

1. Package

mvn clean package

2. Upload the jar to HDFS

hdfs dfs -put hive-udf.jar /user/hive/

3. Connect to Hive via beeline

beeline -u jdbc:hive2://host:10000/default -n username -p 'password'

4. Create function

create function strip as 'com.scb.dss.udf.Strip' using jar 'hdfs:///user/hive/hive-udf.jar';

5. Use

View the UDF description with desc function <UDF>.

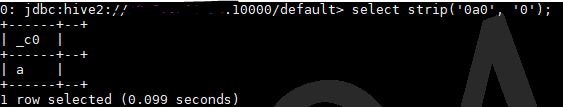

select strip('0a0', '0'); -- strips the leading and trailing 0, returning 'a'

4, Other

- Delete a function: drop function <UDF>

- Create a temporary function: create temporary function <UDF> as '<UDF class path>'