Learning is not so utilitarian. The second senior brother will take you to easily read the source code from a higher dimension ~

Earlier, we talked about how the Nacos client obtains the instance list, how to cache, and how to subscribe to the change of the instance list. After obtaining an instance list, have you ever thought about a question: if there are 100 instances in the instance list, how does the Nacos client choose one?

In this article, we will take you to analyze from the source code level how the Nacos client uses the algorithm to obtain an instance from the instance list for request. It can also be called the load balancing algorithm of Nacos client.

Single instance acquisition

NamingService not only provides a method to obtain the instance list, but also a method to obtain a single instance, such as:

Instance selectOneHealthyInstance(String serviceName, String groupName, List<String> clusters, boolean subscribe)

throws NacosException;This method will obtain a healthy instance from the instance list according to the predefined load algorithm. Other overloaded methods have similar functions and will eventually call this method. Let's take this method as an example to analyze the specific algorithm.

Specific implementation code:

@Override

public Instance selectOneHealthyInstance(String serviceName, String groupName, List<String> clusters,

boolean subscribe) throws NacosException {

String clusterString = StringUtils.join(clusters, ",");

if (subscribe) {

// Get ServiceInfo

ServiceInfo serviceInfo = serviceInfoHolder.getServiceInfo(serviceName, groupName, clusterString);

if (null == serviceInfo) {

serviceInfo = clientProxy.subscribe(serviceName, groupName, clusterString);

}

// One instance is obtained through the load balancing algorithm

return Balancer.RandomByWeight.selectHost(serviceInfo);

} else {

// Get ServiceInfo

ServiceInfo serviceInfo = clientProxy

.queryInstancesOfService(serviceName, groupName, clusterString, 0, false);

// One instance is obtained through the load balancing algorithm

return Balancer.RandomByWeight.selectHost(serviceInfo);

}

}The logic of the selectOneHealthyInstance method is very simple. Call the method we mentioned earlier to obtain the ServiceInfo object, and then pass it to the load balancing algorithm as a parameter. The load balancing algorithm calculates which Instance to use in the end.

Algorithm parameter encapsulation

First track the code implementation, non core business logic, and just mention it briefly.

As can be seen from the above code, the selectHost method of the Balancer internal class RandomByWeight is called:

public static Instance selectHost(ServiceInfo dom) {

// Get the instance list in ServiceInfo

List<Instance> hosts = selectAll(dom);

// ...

return getHostByRandomWeight(hosts);

}The core logic of the selectHost method is to get the list of instances from ServiceInfo and then call the getHostByRandomWeight method:

protected static Instance getHostByRandomWeight(List<Instance> hosts) {

// ... judgment logic

// Reorganize data formats

List<Pair<Instance>> hostsWithWeight = new ArrayList<Pair<Instance>>();

for (Instance host : hosts) {

if (host.isHealthy()) {

hostsWithWeight.add(new Pair<Instance>(host, host.getWeight()));

}

}

// Random weight load balancing algorithm is realized through Chooser

Chooser<String, Instance> vipChooser = new Chooser<String, Instance>("www.taobao.com");

vipChooser.refresh(hostsWithWeight);

return vipChooser.randomWithWeight();

}The first half of getHostByRandomWeight is to convert the Instance list and its weight data into a Pair, that is, to establish a pairwise relationship. Only healthy nodes are used in this process.

The real algorithm is implemented through the Chooser class. According to the name, we basically know that the implementation strategy is a random algorithm based on weight.

Implementation of load balancing algorithm

All load balancing algorithms are implemented in the Chooser class, which provides two methods: refresh and randomWithWeight.

The refresh method is used to filter data, check the validity of data and establish the data model required by the algorithm.

randomWithWeight method performs random algorithm processing based on the previous data.

First look at the refresh method:

public void refresh(List<Pair<T>> itemsWithWeight) {

Ref<T> newRef = new Ref<T>(itemsWithWeight);

// Prepare data, check data

newRef.refresh();

// After the above data is refreshed, a GenericPoller is reinitialized here

newRef.poller = this.ref.poller.refresh(newRef.items);

this.ref = newRef;

}Basic steps:

- Create Ref class, which is the internal class of Chooser;

- Call the refresh method of Ref to prepare data, check data, etc;

- After data filtering, calling the poller#refresh method essentially creates a GenericPoller object;

- Re assignment of member variables;

Here we focus on the Ref#refresh method:

/**

* Get the list of instances participating in the calculation, calculate the sum of the number of incremental arrays, and check

*/

public void refresh() {

// Sum of instance weights

Double originWeightSum = (double) 0;

// Sum all health weights

for (Pair<T> item : itemsWithWeight) {

double weight = item.weight();

//ignore item which weight is zero.see test_randomWithWeight_weight0 in ChooserTest

// If the weight is less than or equal to 0, it will not participate in the calculation

if (weight <= 0) {

continue;

}

// Put valid instances in the list

items.add(item.item());

// If the value is infinite

if (Double.isInfinite(weight)) {

weight = 10000.0D;

}

// If the value is non numeric

if (Double.isNaN(weight)) {

weight = 1.0D;

}

// Weight value accumulation

originWeightSum += weight;

}

double[] exactWeights = new double[items.size()];

int index = 0;

// Calculate the weight proportion of each node and put it into the array

for (Pair<T> item : itemsWithWeight) {

double singleWeight = item.weight();

//ignore item which weight is zero.see test_randomWithWeight_weight0 in ChooserTest

if (singleWeight <= 0) {

continue;

}

// Calculate the weight proportion of each node

exactWeights[index++] = singleWeight / originWeightSum;

}

// Initialize incremental array

weights = new double[items.size()];

double randomRange = 0D;

for (int i = 0; i < index; i++) {

// The value of the i-th item of the incremental array is the sum of the first I values of items

weights[i] = randomRange + exactWeights[i];

randomRange += exactWeights[i];

}

double doublePrecisionDelta = 0.0001;

// Return after index traversal;

// Or weights, if the error of the last bit value is less than 0.0001 compared with 1, return

if (index == 0 || (Math.abs(weights[index - 1] - 1) < doublePrecisionDelta)) {

return;

}

throw new IllegalStateException(

"Cumulative Weight calculate wrong , the sum of probabilities does not equals 1.");

}It can be understood in combination with the comments in the above code. The core steps include the following:

- Traverse itemsWithWeight to calculate the weight sum data; Non healthy nodes will be eliminated;

- Calculate the proportion of the weight value of each node in the total weight value and store it in the exactWeights array;

- Reconstruct the values in the exactWeights array to form an incremental array weights (each value is the sum of the exactWeights coordinate values), which is used for the random algorithm;

- Judge whether the cycle is completed or the error is within the specified range (0.0001), and return if it is qualified.

After all data are prepared, call the random algorithm method randomWithWeight:

public T randomWithWeight() {

Ref<T> ref = this.ref;

// Generate random numbers between 0-1

double random = ThreadLocalRandom.current().nextDouble(0, 1);

// Use the dichotomy to find the specified value in the array. If it does not exist, it returns (- (insertion point) - 1). The insertion point is the position where the random number will be inserted into the array, that is, the first element index greater than this key.

int index = Arrays.binarySearch(ref.weights, random);

// If no query is found (return - 1 or "- insertion point")

if (index < 0) {

index = -index - 1;

} else {

// Direct return result on hit

return ref.items.get(index);

}

// Judge that the coordinates are not out of bounds

if (index < ref.weights.length) {

// If the random number is less than the value of the specified coordinate, the coordinate value is returned

if (random < ref.weights[index]) {

return ref.items.get(index);

}

}

// This should not happen, but if it does, the value of the last position is returned

/* This should never happen, but it ensures we will return a correct

* object in case there is some floating point inequality problem

* wrt the cumulative probabilities. */

return ref.items.get(ref.items.size() - 1);

}The basic operation of this method is as follows:

- Generate a random number of 0-1;

- Use Arrays#binarySearch to find in the array, that is, binary search. This method will return the value containing the key. If not, it will return "- 1" or "- insertion point". The insertion point is the position where the random number will be inserted into the array, that is, the first element index greater than this key.

- If it hits, it returns directly; if it misses, it reverses the return value by 1 to obtain the index value;

- Judge the index value, and return the result if it meets the conditions;

So far, the code level tracking of the load balancing algorithm obtained by the Nacos client instance has been completed.

Algorithm example demonstration

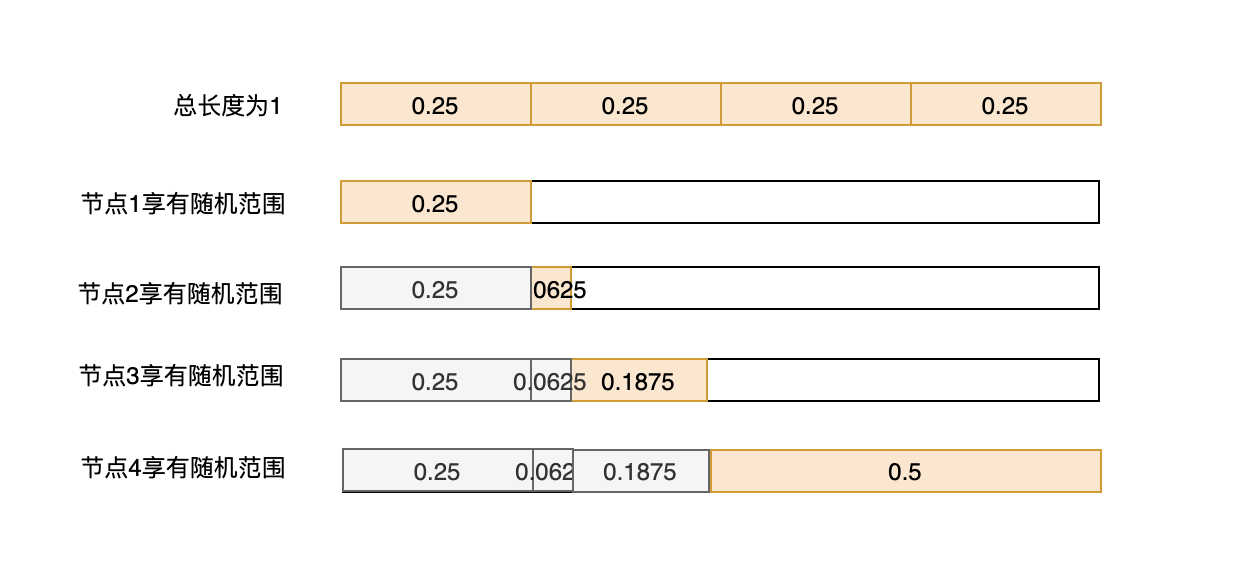

Next, an example is used to demonstrate the data changes involved in the algorithm. In order to make the data beautiful, four groups of data are used, and each group of data comes in to ensure that it can be divided;

Node and weight data (front node and back weight) are as follows:

1 100 2 25 3 75 4 200

Step 1: calculate the weight synthesis:

originWeightSum = 100 + 25 + 75 + 200 = 400

Step 2: calculate the weight ratio of each node:

exactWeights = {0.25, 0.0625, 0.1875, 0.5}Step 3: calculate the incremental array weights:

weights = {0.25, 0.3125, 0.5, 1}Step 4: generate a random number of 0-1:

random = 0.3049980013493817

Step 5: call Arrays#binarySearch to search for random from weights:

index = -2

The Arrays#binarySearch(double[] a, double key) method is explained here. If the passed in key happens to be in the array, such as 1, the returned index is 3; if the key is the above random value, find the insertion point first, take the inverse and subtract one.

The insertion point is the index of the first element greater than this key. Then the value of the first element greater than 0.3049980013493817 above is 0.3125, and the insertion point value is 1;

Then, according to the formula, the index returned by Arrays#binarySearch is:

index = - ( 1 ) - 1 = -2

Step 6, that is, the case of no hit:

index = -( -2 ) - 1 = 1

Then judge whether the index is out of bounds. Obviously, 1 < 4. If it is not out of bounds, the value with coordinate 1 will be returned.

The core of the algorithm

The above demonstrates the algorithm, but can this algorithm really load by weight? Let's analyze this problem.

The focus of this problem is not on the random value, which is basically random. How to ensure that nodes with significant weight get more opportunities?

Here, the incremental array weights is represented in another form:

As can be seen from the above algorithm, weights and exactWeights are arrays with the same size. For the same coordinate (index), the value of weights is the sum of exactWeights including the current coordinate and all previous coordinate values.

If weights are understood as a line, the value of the corresponding node is each point on the line, which is reflected in the figure (Figure 2 to figure 5) colored (gray + Orange) part.

The insertion point of Arrays#binarySearch algorithm obtains the first coordinate greater than key (i.e. random), that is, each node has different random range. Their range is determined by the interval between the current point and the previous point, and this interval is exactly the weight ratio.

Nodes with large weight ratio occupy more intervals. For example, node 1 accounts for 1 / 4 and node 4 accounts for 1 / 2. In this way, if the random number is evenly distributed, the nodes with a large range are more likely to be favored. It will achieve the opportunity to be called according to the weight.

Summary

This article traces the source code of Nacos client and analyzes the algorithm to obtain one of the instances from the instance list, that is, the random weight load balancing algorithm. The overall business logic is relatively simple. Obtain the instance list from ServiceInfo, filter all the way, select the target instance, then conduct secondary processing according to their weight, encapsulate the data structure, and finally obtain the corresponding instance based on the binary search method provided by Arrays#binarySearch.

The focus we need to pay attention to and learn is the idea and specific implementation of the weight acquisition algorithm, which can be used in practice.

About the blogger: the author of the technical book "inside of SpringBoot technology", loves to study technology and write technical dry goods articles.