What is a lease

Redis lets you set an expiration time on a key with the EXPIRE command, which gives cached entries a TTL. etcd has a similar feature for setting an expiration time on keys: the Lease. The two are used in different scenarios, however. etcd leases are widely used for service registration and keep-alive, whereas Redis expiration is mainly used to evict cached data. This article introduces etcd's lease mechanism step by step: first how it is used, then how it is implemented.

Usage

Let's start with a short example that shows how a lease is used.

```go
package main

import (
	"context"
	"log"
	"os"
	"os/signal"
	"syscall"
	"time"

	clientv3 "go.etcd.io/etcd/client/v3"
)

func main() {
	key := "linugo-lease"
	cli, err := clientv3.New(clientv3.Config{
		Endpoints:   []string{"127.0.0.1:23790"},
		DialTimeout: time.Second,
	})
	if err != nil {
		log.Fatal("new client err: ", err)
	}
	defer cli.Close()

	// Create a lease with Grant and a TTL of 20 seconds. If it is not renewed,
	// the lease expires after 20 seconds.
	ls := clientv3.NewLease(cli)
	grantResp, err := ls.Grant(context.TODO(), 20)
	if err != nil {
		log.Fatal("grant err: ", err)
	}
	log.Printf("grant id: %x\n", grantResp.ID)

	// Put a key-value pair bound to the lease; it is deleted when the lease expires.
	putResp, err := cli.Put(context.TODO(), key, "value", clientv3.WithLease(grantResp.ID))
	if err != nil {
		log.Fatal("put err: ", err)
	}
	log.Printf("create revision: %v\n", putResp.Header.Revision)

	// Renew the lease with KeepAliveOnce every 5 seconds, which resets its
	// remaining time to the full 20 seconds.
	go func() {
		for {
			time.Sleep(5 * time.Second)
			resp, err := ls.KeepAliveOnce(context.TODO(), grantResp.ID)
			if err != nil {
				log.Println("keep alive once err: ", err)
				break
			}
			log.Println("keep alive: ", resp.TTL)
		}
	}()

	// Block until the process is interrupted or terminated.
	sigC := make(chan os.Signal, 1)
	signal.Notify(sigC, os.Interrupt, syscall.SIGTERM)
	s := <-sigC
	log.Println("exit with: ", s.String())
}
```

This pattern can keep a service registration alive. Register the node's address in etcd, bind it to a lease with a suitable TTL, and renew the lease periodically. If the node goes down and stops renewing for longer than the TTL, its entry is removed from etcd, so the service is deregistered automatically. Combined with etcd's watch feature, other components can learn about such changes in near real time.
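The timing in the example can be checked with a small simulation. The sketch below is a hypothetical model, not etcd code. It uses a fake clock measured in whole seconds, and `simLease`, `renew`, and `simulate` are names invented for illustration. It shows that renewing a 20-second lease every 5 seconds keeps it alive indefinitely. It also shows that a node which stops renewing loses its lease exactly one TTL after its last successful renewal.

```go
package main

import "fmt"

// simLease is a toy lease on a fake clock (whole seconds); not etcd's type.
type simLease struct {
	ttl    int64 // TTL granted at creation, in seconds
	expiry int64 // absolute second at which the lease expires
}

// renew resets the expiry to now+ttl, like a successful KeepAliveOnce call.
func (l *simLease) renew(now int64) { l.expiry = now + l.ttl }

// expiredAt reports whether the lease has expired at time now.
func (l *simLease) expiredAt(now int64) bool { return now >= l.expiry }

// simulate grants a lease at t=0, renews it every interval seconds as long as
// now <= stopRenewAt, and returns the first second at which the lease is seen
// as expired, or -1 if it is still alive at horizon.
func simulate(ttl, interval, stopRenewAt, horizon int64) int64 {
	l := &simLease{ttl: ttl}
	l.renew(0)
	for now := int64(1); now <= horizon; now++ {
		if l.expiredAt(now) {
			return now
		}
		if now%interval == 0 && now <= stopRenewAt {
			l.renew(now)
		}
	}
	return -1
}

func main() {
	// Renew every 5s for the whole run: the 20s lease never expires.
	fmt.Println(simulate(20, 5, 1000, 100))
	// The node "dies" after its renewal at t=30: the lease expires at t=50.
	fmt.Println(simulate(20, 5, 30, 100))
}
```

With these parameters, the first call returns -1 (never expired) and the second returns 50. Renewing well within the TTL (here at a quarter of it) leaves room for a few delayed or failed renewals before the key disappears.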

Besides Grant and KeepAliveOnce, the v3 client provides other important lease methods, such as Revoke, which deletes a lease, and TimeToLive, which reports a lease's remaining time.

On the server side, the LeaseServer interface defines five RPCs that back these client methods. This article focuses on how the server implements them.

```go
type LeaseServer interface {
	// Creates a lease; serves the client's Grant method
	LeaseGrant(context.Context, *LeaseGrantRequest) (*LeaseGrantResponse, error)
	// Revokes (deletes) a lease
	LeaseRevoke(context.Context, *LeaseRevokeRequest) (*LeaseRevokeResponse, error)
	// Renews a lease; keep-alive requests arrive on a stream
	LeaseKeepAlive(Lease_LeaseKeepAliveServer) error
	// Reports a lease's remaining time
	LeaseTimeToLive(context.Context, *LeaseTimeToLiveRequest) (*LeaseTimeToLiveResponse, error)
	// Lists all leases
	LeaseLeases(context.Context, *LeaseLeasesRequest) (*LeaseLeasesResponse, error)
}
```

Initialization

When etcd starts, it initializes a lessor. The lessor stores all lease information, including each lease's ID, its expiration time, and the keys bound to it. The lessor also implements the lease operations themselves, such as grant, revoke, and renew.

type lessor struct {

mu sync.RWMutex

demotec chan struct{}

//Store all valid lease information. The key is leaseID, and the value includes the ID of the lease, ttl, key bound by lease and other information

leaseMap map[LeaseID]*Lease

//Easy to find a data structure of lease. Based on the minimum heap implementation, you can put the lease that is about to expire at the head of the queue. When checking whether it expires, you only need to check the head of the queue

leaseExpiredNotifier *LeaseExpiredNotifier

//Remaining time for real-time update of lease

leaseCheckpointHeap LeaseQueue

//The binding relationship between the key stored by the user and lease. The lease can be found through the key

itemMap map[LeaseItem]LeaseID

......

//Expired lease s will be placed in the chan and cleaned up by consumers

expiredC chan []*Lease

......

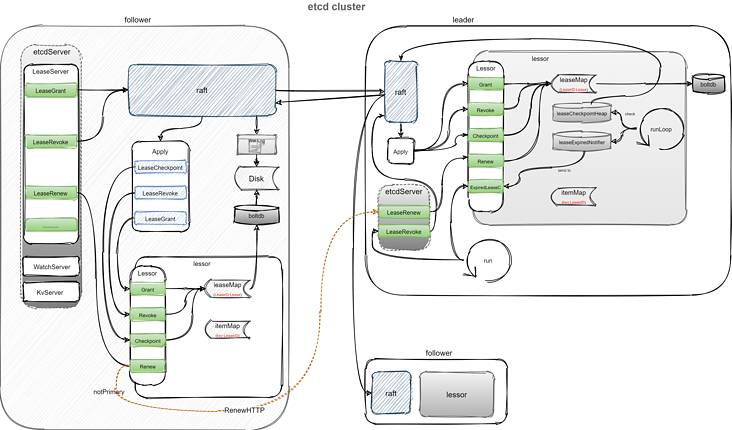

}After lessor is initialized, a goroutine will be started at the same time, which is used to frequently check whether there is expired lease and update the remaining time of lease. These checks of lease are done by the leader node of the cluster, including updating the remaining time, maintaining the minimum heap of lease, and revoking lease when it expires. The follower node is only used to respond to the leader node's storage, update or revoke the lead request.
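The leaseExpiredNotifier in the struct above is what keeps the leader's periodic check cheap. Leases are ordered by expiry, so the check only has to look at the head of the heap. A minimal sketch of that idea using Go's `container/heap` follows. The types and methods (`expiryNotifier`, `registerOrUpdate`, `popExpired`) are hypothetical simplifications, and etcd's real notifier differs in details. A map from lease ID to heap entry lets a renewal move an existing entry with `heap.Fix` instead of pushing a duplicate.

```go
package main

import (
	"container/heap"
	"fmt"
)

// leaseWithTime pairs a lease ID with its expiry on a fake clock (seconds).
type leaseWithTime struct {
	id     int64
	expiry int64
	index  int // position in the heap, kept up to date by Swap and Push
}

// leaseQueue is a min-heap ordered by expiry; it implements heap.Interface.
type leaseQueue []*leaseWithTime

func (q leaseQueue) Len() int           { return len(q) }
func (q leaseQueue) Less(i, j int) bool { return q[i].expiry < q[j].expiry }
func (q leaseQueue) Swap(i, j int) {
	q[i], q[j] = q[j], q[i]
	q[i].index = i
	q[j].index = j
}
func (q *leaseQueue) Push(x any) {
	it := x.(*leaseWithTime)
	it.index = len(*q)
	*q = append(*q, it)
}
func (q *leaseQueue) Pop() any {
	old := *q
	it := old[len(old)-1]
	old[len(old)-1] = nil
	*q = old[:len(old)-1]
	return it
}

// expiryNotifier keeps at most one heap entry per lease ID.
type expiryNotifier struct {
	q    leaseQueue
	byID map[int64]*leaseWithTime
}

func newExpiryNotifier() *expiryNotifier {
	return &expiryNotifier{byID: map[int64]*leaseWithTime{}}
}

// registerOrUpdate adds a lease, or moves an existing one to its new expiry.
func (n *expiryNotifier) registerOrUpdate(id, expiry int64) {
	if it, ok := n.byID[id]; ok {
		it.expiry = expiry
		heap.Fix(&n.q, it.index)
		return
	}
	it := &leaseWithTime{id: id, expiry: expiry}
	heap.Push(&n.q, it)
	n.byID[id] = it
}

// popExpired removes and returns up to limit leases with expiry <= now.
// Only the head of the heap is ever inspected.
func (n *expiryNotifier) popExpired(now int64, limit int) []int64 {
	var ids []int64
	for n.q.Len() > 0 && len(ids) < limit && n.q[0].expiry <= now {
		it := heap.Pop(&n.q).(*leaseWithTime)
		delete(n.byID, it.id)
		ids = append(ids, it.id)
	}
	return ids
}

func main() {
	n := newExpiryNotifier()
	n.registerOrUpdate(1, 20)
	n.registerOrUpdate(2, 10)
	n.registerOrUpdate(3, 15)
	n.registerOrUpdate(2, 30)         // lease 2 is renewed and now expires last
	fmt.Println(n.popExpired(25, 10)) // prints [3 1]
}
```

The `limit` parameter plays the same role as the rate limit in the revocation code discussed later: each check revokes only a bounded batch, and the remaining expired leases are picked up on the next round.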

```go
func (le *lessor) runLoop() {
	defer close(le.doneC)
	for {
		// Revoke leases that have expired
		le.revokeExpiredLeases()
		// Checkpoint mechanism: sync the remaining TTL of scheduled leases
		le.checkpointScheduledLeases()
		// Run the checks every 500 milliseconds
		select {
		case <-time.After(500 * time.Millisecond):
		case <-le.stopC:
			return
		}
	}
}
```

To cover most cases, assume a three-node etcd cluster, and suppose the example code above sends its requests to one of the followers.

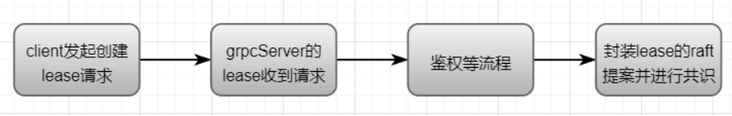

Creation

When the v3 client calls Grant, the server handles it in LeaseServer's LeaseGrant method. After some intermediate steps (authentication and so on), the request reaches EtcdServer's LeaseGrant method. That method wraps the request in a lease proposal and hands it to the raft module for replication. Because the node receiving the request is a follower here, it forwards the proposal to the leader. The leader appends the proposal to its log and replicates it to the followers, which acknowledge it. Once the entry is committed, each node applies it.

When a node applies the entry, the apply module recognizes it as a lease-creation request and calls the corresponding wrapper method.

```go
func (a *applierV3backend) Apply(r *pb.InternalRaftRequest, shouldApplyV3 membership.ShouldApplyV3) *applyResult {
	op := "unknown"
	ar := &applyResult{}
	// ...
	// call into a.s.applyV3.F instead of a.F so upper appliers can check individual calls
	switch {
	// ...
	case r.LeaseGrant != nil:
		op = "LeaseGrant"
		ar.resp, ar.err = a.s.applyV3.LeaseGrant(r.LeaseGrant)
	// ...
	default:
		a.s.lg.Panic("not implemented apply", zap.Stringer("raft-request", r))
	}
	return ar
}
```

applierV3backend's LeaseGrant, in turn, is a thin wrapper around the lessor's Grant method.

```go
func (a *applierV3backend) LeaseGrant(lc *pb.LeaseGrantRequest) (*pb.LeaseGrantResponse, error) {
	l, err := a.s.lessor.Grant(lease.LeaseID(lc.ID), lc.TTL)
	resp := &pb.LeaseGrantResponse{}
	if err == nil {
		resp.ID = int64(l.ID)
		resp.TTL = l.TTL()
		resp.Header = newHeader(a.s)
	}
	return resp, err
}
```

The lessor's Grant method wraps the lease in a Lease struct, stores it in its leaseMap, and persists it to boltdb.

```go
func (le *lessor) Grant(id LeaseID, ttl int64) (*Lease, error) {
	// ...
	// Build the Lease
	l := &Lease{
		ID:  id,
		ttl: ttl,
		// Keys bound to the lease; they are deleted when the lease expires
		itemSet: make(map[LeaseItem]struct{}),
		revokec: make(chan struct{}),
	}
	// ...
	if le.isPrimary() {
		// On the leader, set the lease's expiry time
		l.refresh(0)
	} else {
		// Followers store the lease with no expiry time
		l.forever()
	}
	le.leaseMap[id] = l
	// Persist the lease to the backend (boltdb)
	l.persistTo(le.b)
	// On the leader, add the lease to the expiry min-heap and schedule a checkpoint if needed
	if le.isPrimary() {
		item := &LeaseWithTime{id: l.ID, time: l.expiry}
		le.leaseExpiredNotifier.RegisterOrUpdate(item)
		le.scheduleCheckpointIfNeeded(l)
	}
	return l, nil
}
```

Binding

Once the lease exists, a key can be written with put and bound to it. The KeyValue record that put writes carries the lease ID in its Lease field, and that field is stored in boltDB with the rest of the record. As a result, if etcd crashes, the mapping between leases and keys can be rebuilt from persistent storage.
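Each persisted KeyValue record carries its lease ID, so the bindings can be rebuilt after a restart by scanning the stored records and attaching each leased key again. A hypothetical sketch of that recovery follows. `storedKV`, `index`, and `rebuild` are illustrative names, and 0 stands for "no lease" in this sketch. The index keeps both directions of the binding (`itemMap` from key to lease, `itemSet` from lease to keys), which mirrors what Attach maintains.

```go
package main

import (
	"fmt"
	"sort"
)

// storedKV mimics a persisted record that carries its lease ID; 0 means no lease.
type storedKV struct {
	key   string
	lease int64
}

// index holds both directions of the binding (simplified sketch).
type index struct {
	itemMap map[string]int64              // key -> lease ID
	itemSet map[int64]map[string]struct{} // lease ID -> bound keys
}

// attach binds keys to a lease in both maps.
func (ix *index) attach(id int64, keys ...string) {
	set, ok := ix.itemSet[id]
	if !ok {
		set = map[string]struct{}{}
		ix.itemSet[id] = set
	}
	for _, k := range keys {
		set[k] = struct{}{}
		ix.itemMap[k] = id
	}
}

// rebuild reconstructs the bindings after a restart by scanning persisted records.
func rebuild(kvs []storedKV) *index {
	ix := &index{itemMap: map[string]int64{}, itemSet: map[int64]map[string]struct{}{}}
	for _, kv := range kvs {
		if kv.lease != 0 {
			ix.attach(kv.lease, kv.key)
		}
	}
	return ix
}

// keysOf returns the keys bound to a lease, sorted.
func (ix *index) keysOf(id int64) []string {
	var ks []string
	for k := range ix.itemSet[id] {
		ks = append(ks, k)
	}
	sort.Strings(ks)
	return ks
}

func main() {
	ix := rebuild([]storedKV{{"svc/a", 42}, {"svc/b", 42}, {"config", 0}})
	fmt.Println(ix.keysOf(42), ix.itemMap["svc/a"]) // prints [svc/a svc/b] 42
}
```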

```go
func (tw *storeTxnWrite) put(key, value []byte, leaseID lease.LeaseID) {
	// ...
	kv := mvccpb.KeyValue{
		Key:            key,
		Value:          value,
		CreateRevision: c,
		ModRevision:    rev,
		Version:        ver,
		// The lease ID is stored in the KeyValue record itself
		Lease: int64(leaseID),
	}
	// ... persistence and other operations
	// Attach the key to its lease
	if leaseID != lease.NoLease {
		if tw.s.le == nil {
			panic("no lessor to attach lease")
		}
		err = tw.s.le.Attach(leaseID, []lease.LeaseItem{{Key: string(key)}})
		if err != nil {
			panic("unexpected error from lease Attach")
		}
	}
	tw.trace.Step("attach lease to kv pair")
}
```

The lessor's Attach method records the binding in two places: the lessor's itemMap and the lease's own itemSet.

```go
func (le *lessor) Attach(id LeaseID, items []LeaseItem) error {
	// ...
	l := le.leaseMap[id]
	l.mu.Lock()
	for _, it := range items {
		// Record the key in the lease's itemSet
		l.itemSet[it] = struct{}{}
		// Record the key -> lease mapping in the lessor's itemMap
		le.itemMap[it] = id
	}
	l.mu.Unlock()
	return nil
}
```

Keep alive

The client's keep-alive methods renew a lease: each call resets the lease's remaining time to the TTL it was granted with. Because lease checks and maintenance are handled by the leader, a renewal sent to a follower is forwarded directly to the leader.
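The follower-to-leader forwarding in LeaseRenew below reduces to one rule: try the local lessor, and if it answers ErrNotPrimary, ask the leader instead. A simplified sketch with in-memory nodes follows. `node`, `leaseRenew`, and the error values are hypothetical. The real code's HTTP call, its wait for a leader, and its retry loop are all replaced by a direct method call.

```go
package main

import (
	"errors"
	"fmt"
)

var (
	errNotPrimary    = errors.New("not primary")
	errLeaseNotFound = errors.New("lease not found")
)

// node is a toy cluster member; only the primary can renew leases.
type node struct {
	primary bool
	ttls    map[int64]int64 // lease ID -> granted TTL in seconds
}

// renew mimics lessor.Renew: followers refuse with errNotPrimary.
func (n *node) renew(id int64) (int64, error) {
	if !n.primary {
		return -1, errNotPrimary
	}
	ttl, ok := n.ttls[id]
	if !ok {
		return -1, errLeaseNotFound
	}
	return ttl, nil
}

// leaseRenew tries locally and forwards to the leader on errNotPrimary.
// In etcd the forwarded call goes over HTTP; here it is a direct call.
func leaseRenew(self, leader *node, id int64) (int64, error) {
	ttl, err := self.renew(id)
	if err != errNotPrimary {
		return ttl, err
	}
	return leader.renew(id)
}

func main() {
	leader := &node{primary: true, ttls: map[int64]int64{7: 20}}
	follower := &node{ttls: map[int64]int64{7: 20}}
	fmt.Println(leaseRenew(follower, leader, 7)) // prints 20 <nil>
	fmt.Println(leaseRenew(follower, leader, 8)) // prints -1 lease not found
}
```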

```go
func (s *EtcdServer) LeaseRenew(ctx context.Context, id lease.LeaseID) (int64, error) {
	// On a follower, Renew returns ErrNotPrimary
	ttl, err := s.lessor.Renew(id)
	if err == nil { // already requested to primary lessor(leader)
		return ttl, nil
	}
	// ...
	for cctx.Err() == nil && err != nil {
		// Wait for and get the current leader
		leader, lerr := s.waitLeader(cctx)
		if lerr != nil {
			return -1, lerr
		}
		for _, url := range leader.PeerURLs {
			lurl := url + leasehttp.LeasePrefix
			// Ask the leader to renew the lease over its peer HTTP endpoint
			ttl, err = leasehttp.RenewHTTP(cctx, id, lurl, s.peerRt)
			if err == nil || err == lease.ErrLeaseNotFound {
				return ttl, err
			}
		}
		time.Sleep(50 * time.Millisecond)
	}
	// ...
	return -1, ErrCanceled
}
```

Once the request reaches the leader, Renew updates the lease's remaining time and expiry time, and updates its entry in the min-heap.

```go
func (le *lessor) Renew(id LeaseID) (int64, error) {
	le.mu.RLock()
	if !le.isPrimary() {
		le.mu.RUnlock()
		return -1, ErrNotPrimary
	}
	demotec := le.demotec
	l := le.leaseMap[id]
	if l == nil {
		le.mu.RUnlock()
		return -1, ErrLeaseNotFound
	}
	// True when a checkpointer (cp, which syncs data through raft) is configured
	// and the lease still carries a checkpointed remaining TTL
	clearRemainingTTL := le.cp != nil && l.remainingTTL > 0
	le.mu.RUnlock()

	// The lease has already expired
	if l.expired() {
		select {
		case <-l.revokec: // the lease has been revoked
			return -1, ErrLeaseNotFound
		// The expired lease might fail to be revoked if the primary changes.
		// The caller will retry on ErrNotPrimary.
		case <-demotec:
			return -1, ErrNotPrimary
		case <-le.stopC:
			return -1, ErrNotPrimary
		}
	}
	if clearRemainingTTL {
		// Clear the checkpointed remaining TTL on every node through a raft checkpoint
		le.cp(context.Background(), &pb.LeaseCheckpointRequest{Checkpoints: []*pb.LeaseCheckpoint{{ID: int64(l.ID), Remaining_TTL: 0}}})
	}
	le.mu.Lock()
	l.refresh(0)
	item := &LeaseWithTime{id: l.ID, time: l.expiry}
	// Update the lease's entry in the min-heap
	le.leaseExpiredNotifier.RegisterOrUpdate(item)
	le.mu.Unlock()
	return l.ttl, nil
}
```

Revocation

Revocation can be triggered in two ways: passively, when a client calls Revoke directly, or actively, when the leader detects that a lease has expired. The passive path is relatively simple. The node that receives the request (a follower in our scenario) hands it to the raft module for replication. Once the request is applied, each node's lessor revokes the lease. It deletes the keys bound to the lease, removes the lease from leaseMap and from boltdb, and closes the lease's revokec channel to notify anyone waiting on it.
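Whatever triggers it, revocation comes down to deleting every key bound to the lease and then forgetting the lease itself. The toy store below models only that bookkeeping. `store` and `revoke` are hypothetical names. etcd deletes the keys through a write transaction, whereas this sketch simply removes map entries. Keys are deleted in sorted order, mirroring the sort in the excerpt below.

```go
package main

import (
	"fmt"
	"sort"
)

// store is a toy stand-in for the key space plus lessor bookkeeping.
type store struct {
	kv       map[string]string             // user data
	itemMap  map[string]int64              // key -> lease ID
	leaseMap map[int64]map[string]struct{} // lease ID -> bound keys (its itemSet)
}

// revoke deletes every key bound to the lease, then the lease itself,
// and returns the deleted keys. Revoking an unknown lease is a no-op.
func (s *store) revoke(id int64) []string {
	set, ok := s.leaseMap[id]
	if !ok {
		return nil
	}
	keys := make([]string, 0, len(set))
	for k := range set {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	for _, k := range keys {
		delete(s.kv, k)
		delete(s.itemMap, k)
	}
	delete(s.leaseMap, id)
	return keys
}

func main() {
	s := &store{
		kv:       map[string]string{"svc/a": "1", "svc/b": "2", "config": "x"},
		itemMap:  map[string]int64{"svc/a": 42, "svc/b": 42},
		leaseMap: map[int64]map[string]struct{}{42: {"svc/a": {}, "svc/b": {}}},
	}
	fmt.Println(s.revoke(42), len(s.kv)) // prints [svc/a svc/b] 1
}
```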

```go
func (le *lessor) Revoke(id LeaseID) error {
	le.mu.Lock()
	l := le.leaseMap[id]
	if l == nil {
		le.mu.Unlock()
		return ErrLeaseNotFound
	}
	// Close revokec when Revoke returns, notifying anyone waiting on this lease
	defer close(l.revokec)
	le.mu.Unlock()
	if le.rd == nil {
		return nil
	}
	txn := le.rd()
	// Keys returns the keys in the lease's itemSet
	keys := l.Keys()
	sort.StringSlice(keys).Sort()
	// Delete every key bound to the lease
	for _, key := range keys {
		txn.DeleteRange([]byte(key), nil)
	}
	le.mu.Lock()
	defer le.mu.Unlock()
	delete(le.leaseMap, l.ID)
	// Delete the persisted lease from boltdb
	le.b.BatchTx().UnsafeDelete(buckets.Lease, int64ToBytes(int64(l.ID)))
	txn.End()
	return nil
}
```

For active revocation, the runLoop goroutine started when the lessor is created calls revokeExpiredLeases every 500 ms to check for expired leases.

```go
func (le *lessor) revokeExpiredLeases() {
	var ls []*Lease
	// rate limit
	revokeLimit := leaseRevokeRate / 2
	le.mu.RLock()
	// Only the leader looks for expired leases
	if le.isPrimary() {
		// Take up to revokeLimit expired leases from the leaseExpiredNotifier min-heap
		ls = le.findExpiredLeases(revokeLimit)
	}
	le.mu.RUnlock()
	if len(ls) != 0 {
		select {
		case <-le.stopC:
			return
		case le.expiredC <- ls: // hand the expired leases to expiredC
		default: // expiredC cannot take them right now; skip this round
		}
	}
}
```

When etcd starts, EtcdServer also launches its run goroutine, which receives from expiredC. For each expired lease it receives, it proposes a LeaseRevoke, which is replicated through raft like any other revoke request.

```go
// ExpiredLeasesC exposes the lessor's expiredC to the server
func (le *lessor) ExpiredLeasesC() <-chan []*Lease {
	return le.expiredC
}

// The run goroutine started by EtcdServer
func (s *EtcdServer) run() {
	// ...
	var expiredLeaseC <-chan []*lease.Lease
	if s.lessor != nil {
		expiredLeaseC = s.lessor.ExpiredLeasesC()
	}
	for {
		select {
		case leases := <-expiredLeaseC: // a batch of expired leases arrived
			s.GoAttach(func() {
				for _, lease := range leases {
					// ...
					lid := lease.ID
					s.GoAttach(func() {
						ctx := s.authStore.WithRoot(s.ctx)
						// Propose a LeaseRevoke through raft
						_, lerr := s.LeaseRevoke(ctx, &pb.LeaseRevokeRequest{ID: int64(lid)})
						// ...
						<-c
					})
				}
			})
		// ...
		// other cases
		}
	}
}
```

Summary

To keep data consistent, creating, revoking, and checkpointing a lease all go through the raft module. Renewals, by contrast, are sent directly to the leader over HTTP. All maintenance and checking happens on the leader node, as roughly illustrated in the following figure. Because my understanding of the raft module is not yet deep enough, I have only touched on it briefly here.

Reference

- etcd v3.5.0 source code: https://github.com/etcd-io/et...

- How etcd realizes lease hook education, etcd principle and Practice