1. AWS serverless services

In recent years AWS has strongly promoted the serverless model. Since AWS Lambda was released in 2014, serverless computing has become increasingly popular. AWS Fargate followed in 2017 as a serverless compute engine for AWS's own container platform, Amazon ECS, and in 2019 Amazon EKS added support for AWS Fargate as well.

More and more users now build applications on serverless computing, which AWS designed so that users do not have to provision or manage the underlying infrastructure. Developers can package their code as a serverless container with AWS Fargate or as a serverless function with AWS Lambda. The low operational overhead of serverless computing will continue to play a key role in the future of computing.

As serverless adoption grew, AWS recognized that existing virtualization technology had not evolved to optimize for workloads that are event-driven and often short-lived. AWS concluded that it needed virtualization technology built specifically for serverless computing: technology that provides the security boundary of hardware-virtualization-based virtual machines while keeping the smaller packaging model and agility of containers and functions.

2. Firecracker technology

2.1 Introduction

In today's technology landscape, containers offer fast startup times and high density, while VMs virtualize the hardware and provide stronger security and better workload isolation. So far, these two sets of properties have been hard to get from a single technology.

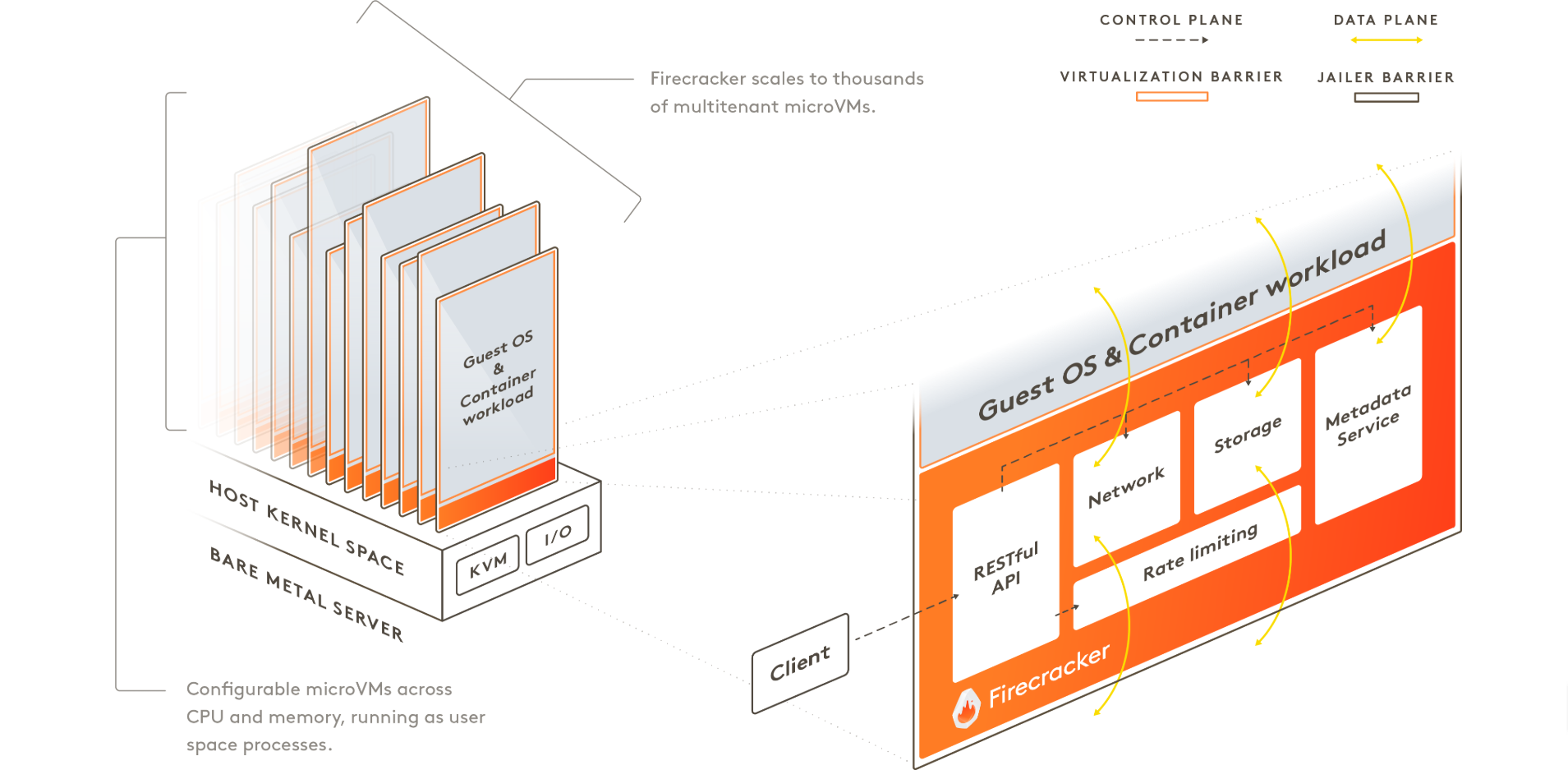

AWS open-sourced Firecracker, a new virtualization technology built on KVM and purpose-built for creating and managing secure, multi-tenant container and function-based services. It lets you launch lightweight microVMs in a fraction of a second in non-virtualized environments (for example, on bare-metal hosts), combining the security and workload isolation of traditional virtual machines with the resource efficiency of containers.

Firecracker is an open-source virtual machine monitor (VMM) built on the Linux Kernel-based Virtual Machine (KVM). It lets you create micro virtual machines, or microVMs. Firecracker follows a minimalist design principle and includes only the components needed to run secure, lightweight virtual machines. At every step of the design process, AWS optimized Firecracker for security, speed, and efficiency. For example, it boots only relatively recent Linux kernels, and only kernels compiled with a specific set of configuration options (the Linux kernel has more than 1,000 compile-time configuration options). It also does not support any kind of graphics card or accelerator, does not support hardware passthrough, and does not support most legacy devices.

Firecracker boots with a minimal kernel configuration, does not rely on an emulated BIOS, and does not implement a full device model. The only devices are a paravirtualized network card, a paravirtualized disk, and a one-button keyboard (the reset pin, used because there is no power management device). This minimalist device model not only shortens boot time (a microVM with the default shape boots in under 125 ms on an i3.metal instance) but also shrinks the attack surface, which improves security. See the Firecracker project for more on its goal of running container and serverless workloads with minimal overhead.

In autumn 2017, AWS decided to write Firecracker in Rust, a modern programming language that provides thread-safety and memory-safety guarantees and prevents buffer overflows and many other classes of memory-safety errors that can lead to security vulnerabilities. See the Firecracker design document to learn more about Firecracker's VMM features and architecture.

Thanks to its very simple device model and equally simple kernel loading process, Firecracker achieves startup times under 125 ms and a small memory footprint. At launch, Firecracker supported only Intel CPUs; support for AMD and Arm was planned for 2019, along with integration with popular container runtimes such as containerd. Firecracker supports Linux host and guest operating systems with kernel version 4.14 or later.

Firecracker microVMs improve efficiency and utilization with a very low memory overhead of less than 5 MiB per microVM. This means users can pack thousands of microVMs onto a single machine. An in-process rate limiter gives fine-grained control over how network and storage resources are shared, even across thousands of microVMs. All hardware compute resources can be safely oversubscribed to maximize the number of workloads that can run on a host.
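To put the per-microVM overhead in perspective, here is a rough worked estimate. It assumes the upper bound of 5 MiB per microVM (written $m_{\text{VMM}}$) and an illustrative count of $N = 1000$ microVMs on one host:

$$
N \times m_{\text{VMM}} = 1000 \times 5\ \text{MiB} = 5000\ \text{MiB} \approx 4.9\ \text{GiB}
$$

On an i3.metal host with 512 GiB of memory, that is under 1% of host memory ($4.9 / 512 \approx 0.95\%$). The estimate covers only Firecracker's own overhead; each guest's configured memory comes on top of it.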

2.2 Advantages of Firecracker

AWS developed Firecracker according to the following guiding tenets:

- Built-in security: Firecracker provides compute security barriers that enable multi-tenant workloads and cannot be mistakenly disabled by customers. Customer workloads are considered both sacred (they must not be interfered with) and malicious (they must be defended against).

- High performance: a microVM can be launched in as little as 125 ms (with further improvements planned for 2019), making Firecracker a good fit for many workload types, including transient or short-lived workloads.

- Lightweight virtualization: Firecracker focuses on transient or stateless workloads rather than long-running or persistent ones, and its hardware resource overhead is known and guaranteed.

- Battle-tested: Firecracker has been extensively tested and already powers several high-volume AWS services, including AWS Lambda and AWS Fargate.

- Low overhead: Firecracker consumes about 5 MiB of memory per microVM. You can run thousands of secure VMs with different vCPU and memory configurations on the same instance.

- Minimalist in features: features that are not clearly required for Firecracker's mission are not built, and only one implementation is maintained per capability.

- Compute oversubscription: all of the hardware compute resources that Firecracker exposes to guests can be safely oversubscribed.

- Open source: Firecracker is an open-source project, and AWS is ready to review and accept pull requests.

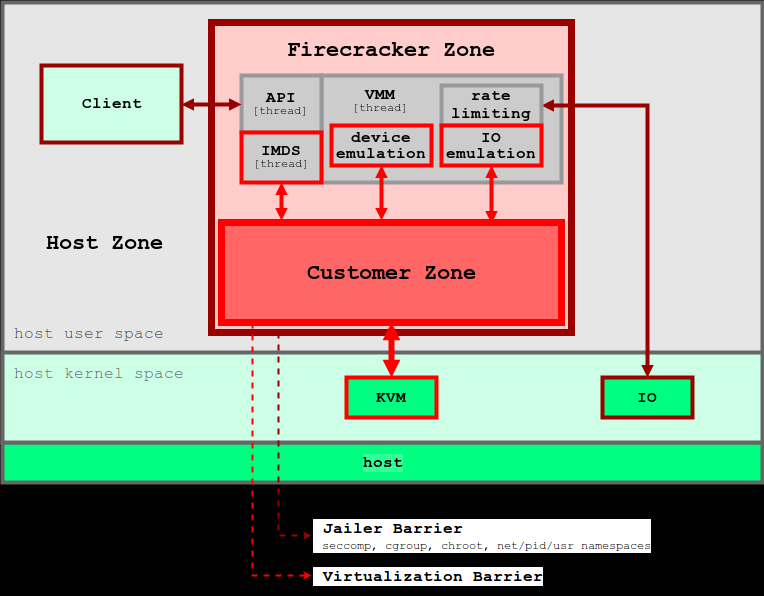

2.3 Security of Firecracker

- Simple guest model: Firecracker guests are presented with a very simple virtualized device model to minimize the attack surface: only a network device, a block I/O device, a programmable interval timer, the KVM clock, a serial console, and a partial keyboard (just enough to let the VM reset).

- Process jail: the Firecracker process is jailed using cgroups and seccomp BPF and has access to a small, tightly controlled list of system calls.

- Static linking: the Firecracker process is statically linked and can be launched from within the jailer, keeping the host environment as safe and clean as possible.

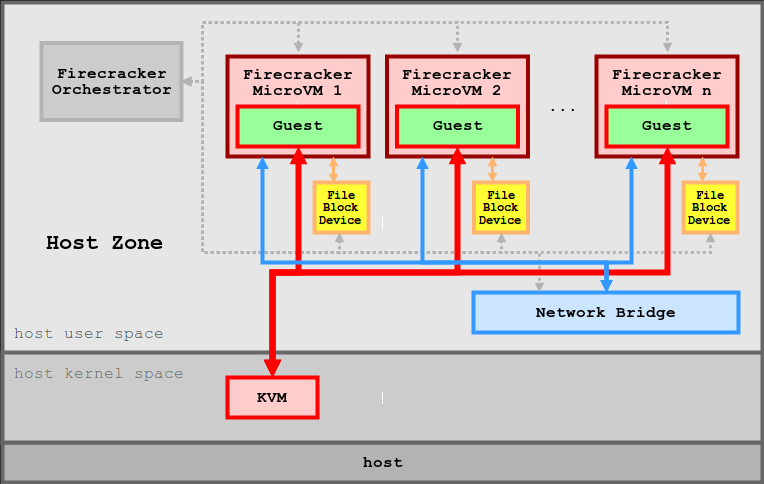

2.4 Firecracker's operating model

2.4.1 Relationship with the host

Firecracker runs on a Linux host with kernel 4.14 or newer and runs Linux guest operating systems (called "guests" from here on). After starting the Firecracker process, the user configures the microVM through the Firecracker API and then issues the InstanceStart command.

2.4.2 Internal architecture of Firecracker

Each Firecracker process encapsulates one and only one microVM. The process runs the following threads: API, VMM, and vCPU(s). The API thread is responsible for Firecracker's API server and the associated control plane; it is never on the virtual machine's fast path. The VMM thread exposes the machine model, a minimal legacy device model, the microVM metadata service (MMDS), and the VirtIO devices that emulate the network and block devices, complete with I/O rate limiting. In addition, there are one or more vCPU threads (one per guest CPU core). They are created through KVM and run the `KVM_RUN` main loop, executing synchronous I/O and memory-mapped I/O operations on the device models.
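One way to observe this one-process, several-threads layout from the host is to list the threads of a running Firecracker process. A minimal sketch, assuming a Linux host with procfs; `list_threads` is a hypothetical helper, and the thread names it prints come from Firecracker itself and may differ between versions, so none are assumed here:

```shell
#!/usr/bin/env bash
# Print one line per thread of process $1 as "<thread id> <thread name>",
# using the per-thread entries the kernel exposes under /proc/<pid>/task.
list_threads() {
  local pid="$1" task
  for task in /proc/"$pid"/task/*; do
    printf '%s %s\n' "${task##*/}" "$(cat "$task/comm")"
  done
}

# Example: PID of a running Firecracker process (3501 in section 3.2).
# list_threads 3501
```

Each thread gets its own ID, so the API, VMM, and vCPU roles described above show up as separate entries.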

2.4.3 How Firecracker works

Firecracker runs in user space and uses the Linux Kernel-based Virtual Machine (KVM) to create microVMs. The fast startup time and low memory overhead of each microVM let you pack thousands of microVMs onto the same machine. This means every function or container group can be encapsulated behind a virtual machine barrier, so workloads from different users can run on the same machine without trading off security against efficiency. Firecracker is an alternative to QEMU, an established VMM with a general-purpose, broad feature set that can host a wide variety of guest operating systems.

The Firecracker process is controlled through a RESTful API that exposes common operations such as configuring the number of vCPUs or starting the machine. Firecracker provides built-in rate limiters that give fine-grained control over the network and storage resources used by thousands of microVMs on the same machine. Rate limiters are created and configured through the Firecracker API and can be defined flexibly, either to allow for bursts or to enforce specific bandwidth or operation limits. Firecracker also provides a metadata service for securely sharing configuration information between the host and the guest operating system; the metadata service is likewise configured through the Firecracker API.
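As a sketch of what a rate-limiter configuration can look like, the function below builds the JSON body for attaching a network interface with a bandwidth limit in each direction. The field names (`rx_rate_limiter`, `tx_rate_limiter`, `bandwidth`, `size`, and `refill_time` in milliseconds) are assumed to match the Firecracker API specification for releases around v0.21; check the Swagger file shipped with your Firecracker version before relying on them. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Build a network-interface body that caps each direction at <bytes_per_sec>:
# a token bucket holding <bytes_per_sec> bytes, refilled every 1000 ms.
# Usage: rate_limited_iface_json <iface_id> <host_tap> <guest_mac> <bytes_per_sec>
rate_limited_iface_json() {
  local iface="$1" tap="$2" mac="$3" bps="$4"
  local bucket
  bucket=$(printf '{"bandwidth":{"size":%d,"refill_time":1000}}' "$bps")
  printf '{"iface_id":"%s","guest_mac":"%s","host_dev_name":"%s","rx_rate_limiter":%s,"tx_rate_limiter":%s}\n' \
    "$iface" "$mac" "$tap" "$bucket" "$bucket"
}

# Example: limit eth0 (backed by tap0) to 1 MiB/s each way, sent before InstanceStart.
# curl --unix-socket /tmp/firecracker.sock -i -X PUT 'http://localhost/network-interfaces/eth0' \
#   -H 'Content-Type: application/json' \
#   -d "$(rate_limited_iface_json eth0 tap0 AA:FC:00:00:00:01 1048576)"
```

Expressing the limit as a bucket size refilled once per second keeps the number readable as bytes per second; a shorter `refill_time` with a proportionally smaller `size` would give the same average rate with smaller bursts.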

At the time of writing, Firecracker could not yet be used with Kubernetes, Docker, or Kata Containers. Kata Containers is an OCI-compliant container runtime that runs containers inside QEMU-based virtual machines. Firecracker is a cloud-native alternative to QEMU, purpose-built for running containers safely and efficiently. That is the difference between Firecracker, Kata Containers, and QEMU.

2.5 Firecracker in AWS Lambda and AWS Fargate

AWS Lambda uses Firecracker as the foundation for provisioning and running the sandboxes in which AWS executes customer code. Because Firecracker provisions secure microVMs quickly and with a minimal footprint, it delivers excellent performance without compromising security. This lets AWS drive high utilization of the physical hardware, including optimizing how workloads are allocated and run for Lambda and mixing workloads based on factors such as active/idle time and memory usage.

Previously, Fargate tasks consisted of one or more Docker containers running inside a dedicated EC2 VM, which kept tasks isolated from one another. These tasks can now run on Firecracker microVMs, which lets AWS provision the Fargate runtime layer faster and more efficiently on EC2 bare-metal instances and increase workload density without weakening the kernel-level isolation between tasks. Over time, this will also let AWS keep innovating in the runtime layer, giving customers better performance while maintaining a high level of security and lowering the overall cost of running serverless container architectures.

At launch, Firecracker ran on Intel processors, with support for AMD and Arm processors planned for 2019.

You can run Firecracker on AWS .metal instances as well as on any other bare-metal server, including on-premises environments and developer laptops.

Firecracker will also enable popular container runtimes such as containerd to manage containers as microVMs. As a result, Docker and container orchestration frameworks such as Kubernetes will be able to use Firecracker.

3. Getting started with Firecracker

3.1 Prerequisites

The "Getting started with Firecracker" guide provides detailed instructions on downloading the Firecracker binary, launching Firecracker with different options, building from source, and running the integration tests. In production environments, run Firecracker through the Firecracker jailer.

Let's walk through getting started with Firecracker on AWS (the same steps work on any bare-metal machine):

Launch an i3.metal instance running Ubuntu 18.04.1.

Firecracker is built on KVM and needs read/write access to /dev/kvm. Log in to the host in a terminal and set the access permissions:

```shell
sudo chmod 777 /dev/kvm
```
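`chmod 777` makes /dev/kvm readable and writable by every user on the host. A narrower option, also described in the Firecracker getting-started guide, is an ACL entry for only your user: `sudo setfacl -m u:${USER}:rw /dev/kvm` (this needs the `acl` package). On distributions where /dev/kvm belongs to the `kvm` group, adding your user to that group is another common approach. Whichever you choose, a small check like the following sketch confirms the result; `check_kvm_access` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Return 0 if the current user can both read and write the given device node
# (defaults to /dev/kvm), which Firecracker needs in order to create microVMs.
check_kvm_access() {
  local dev="${1:-/dev/kvm}"
  [ -r "$dev" ] && [ -w "$dev" ]
}

if check_kvm_access; then
  echo "OK: /dev/kvm is readable and writable"
else
  echo "FAIL: no read/write access to /dev/kvm" >&2
fi
```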

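3.2 Download the binary

You can download the latest binary from the Firecracker releases page on GitHub and run it on an x86_64 or aarch64 Linux machine. The commands below download the x86_64 build of v0.21.0 and start it with its API socket at /tmp/firecracker.sock: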

```shell
wget https://github.com/firecracker-microvm/firecracker/releases/download/v0.21.0/firecracker-v0.21.0-x86_64
chmod +x firecracker-v0.21.0-x86_64
./firecracker-v0.21.0-x86_64 --api-sock /tmp/firecracker.sock
```

Running `ps -ef` shows that the Firecracker process has PID 3501. Before the microVM is started, the process uses only 4 kB of resident memory:

```
# cat /proc/3501/status | grep VmRSS
VmRSS: 4 kB
```
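To track this number while you configure and boot the microVM, a small helper can read the same `VmRSS` field. A minimal sketch; `vm_rss_kb` is a hypothetical name, and 3501 is simply the PID from the example above:

```shell
#!/usr/bin/env bash
# Print the resident set size (VmRSS, in kB) of process $1,
# read from the same /proc/<pid>/status file used above.
vm_rss_kb() {
  awk '/^VmRSS:/ { print $2 }' "/proc/$1/status"
}

# Example: compare the Firecracker process before and after InstanceStart.
# vm_rss_kb 3501
```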

3.3 Running Firecracker

3.3.1 Modify the vCPU count and memory size

Firecracker is now running and serves its REST API on the Unix socket passed with `--api-sock`; each microVM is managed through its own API socket. Query the microVM configuration from another terminal:

```
# curl --unix-socket /tmp/firecracker.sock "http://localhost/machine-config"
{ "vcpu_count": 1, "mem_size_mib": 128, "ht_enabled": false, "cpu_template": "Uninitialized" }
```

At this point the Firecracker process is a VMM waiting for the microVM to be configured. By default, each microVM is allocated one vCPU and 128 MiB of memory. To change the vCPU count or memory size, send the following request to the API:

```shell
curl --unix-socket /tmp/firecracker.sock -i \
  -X PUT 'http://localhost/machine-config' \
  -H 'Accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
        "vcpu_count": 2,
        "mem_size_mib": 4096,
        "ht_enabled": false
      }'
```

3.3.2 Set the boot kernel and root file system

Next, the microVM needs to be configured with an uncompressed Linux kernel binary and an ext4 file system image to use as its root file system.

Download the sample kernel and rootfs:

```shell
curl -fsSL -o hello-vmlinux.bin https://s3.amazonaws.com/spec.ccfc.min/img/hello/kernel/hello-vmlinux.bin
curl -fsSL -o hello-rootfs.ext4 https://s3.amazonaws.com/spec.ccfc.min/img/hello/fsfiles/hello-rootfs.ext4
```

Set the guest kernel:

```shell
curl --unix-socket /tmp/firecracker.sock -i \
  -X PUT 'http://localhost/boot-source' \
  -H 'Accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
        "kernel_image_path": "./hello-vmlinux.bin",
        "boot_args": "console=ttyS0 reboot=k panic=1 pci=off"
      }'
```

The API responds with:

```
HTTP/1.1 204
Server: Firecracker API
Connection: keep-alive
```
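A 204 No Content status means Firecracker accepted the configuration. When scripting these steps, it helps to stop as soon as any request returns something else. A minimal sketch, assuming bash and curl; `fc_put` is a hypothetical helper that relies on curl's `-w '%{http_code}'` output:

```shell
#!/usr/bin/env bash
# Send a PUT request with a JSON body to a Firecracker API socket and fail unless
# the VMM answers with a 2xx status. curl reports 000 if it cannot connect.
# Usage: fc_put <socket> <path> <json-body>
fc_put() {
  local sock="$1" path="$2" body="$3" status
  status=$(curl --unix-socket "$sock" -s -o /dev/null -w '%{http_code}' \
    -X PUT "http://localhost${path}" \
    -H 'Accept: application/json' \
    -H 'Content-Type: application/json' \
    -d "$body")
  case "$status" in
    2??) return 0 ;;
    *) echo "PUT ${path} failed with HTTP ${status}" >&2; return 1 ;;
  esac
}

# Example: the boot-source request from above.
# fc_put /tmp/firecracker.sock /boot-source \
#   '{"kernel_image_path": "./hello-vmlinux.bin", "boot_args": "console=ttyS0 reboot=k panic=1 pci=off"}'
```

Discarding the response body keeps the helper short; on failure you can rerun the same request with `curl -i` to see Firecracker's error message.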

Then set the root file system:

```shell
curl --unix-socket /tmp/firecracker.sock -i \
  -X PUT 'http://localhost/drives/rootfs' \
  -H 'Accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
        "drive_id": "rootfs",
        "path_on_host": "./hello-rootfs.ext4",
        "is_root_device": true,
        "is_read_only": false
      }'
```

3.3.3 Start the microVM

With the kernel and root file system configured, start the guest virtual machine:

```shell
curl --unix-socket /tmp/firecracker.sock -i \
  -X PUT 'http://localhost/actions' \
  -H 'Accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
        "action_type": "InstanceStart"
      }'
```

The first terminal now shows a serial TTY that prompts you to log in to the guest. Switching to that terminal, we can follow the microVM's entire boot sequence, which completes in about 150 ms. The full output is reproduced below:

```
[ 0.000000] Linux version 4.14.55-84.37.amzn2.x86_64 (mockbuild@ip-10-0-1-79) (gcc version 7.3.1 20180303 (Red Hat 7.3.1-5) (GCC)) #1 SMP Wed Jul 25 18:47:15 UTC 2018
[ 0.000000] Command line: console=ttyS0 reboot=k panic=1 pci=off root=/dev/vda rw virtio_mmio.device=4K@0xd0000000:5
[ 0.000000] x86/fpu: Supporting XSAVE feature 0x001: 'x87 floating point registers'
[ 0.000000] x86/fpu: Supporting XSAVE feature 0x002: 'SSE registers'
[ 0.000000] x86/fpu: Supporting XSAVE feature 0x004: 'AVX registers'
[ 0.000000] x86/fpu: xstate_offset[2]: 576, xstate_sizes[2]: 256
[ 0.000000] x86/fpu: Enabled xstate features 0x7, context size is 832 bytes, using 'standard' format.
[ 0.000000] e820: BIOS-provided physical RAM map:
[ 0.000000] BIOS-e820: [mem 0x0000000000000000-0x000000000009fbff] usable
[ 0.000000] BIOS-e820: [mem 0x0000000000100000-0x0000000007ffffff] usable
[ 0.000000] NX (Execute Disable) protection: active
[ 0.000000] DMI not present or invalid.
[ 0.000000] Hypervisor detected: KVM
[ 0.000000] tsc: Using PIT calibration value
[ 0.000000] e820: last_pfn = 0x8000 max_arch_pfn = 0x400000000
[ 0.000000] MTRR: Disabled
[ 0.000000] x86/PAT: MTRRs disabled, skipping PAT initialization too.
[ 0.000000] CPU MTRRs all blank - virtualized system.
[ 0.000000] x86/PAT: Configuration [0-7]: WB WT UC- UC WB WT UC- UC
[ 0.000000] found SMP MP-table at [mem 0x0009fc00-0x0009fc0f] mapped at [ffffffffff200c00]
[ 0.000000] Scanning 1 areas for low memory corruption
[ 0.000000] Using GB pages for direct mapping
[ 0.000000] No NUMA configuration found
[ 0.000000] Faking a node at [mem 0x0000000000000000-0x0000000007ffffff]
[ 0.000000] NODE_DATA(0) allocated [mem 0x07fde000-0x07ffffff]
[ 0.000000] kvm-clock: Using msrs 4b564d01 and 4b564d00
[ 0.000000] kvm-clock: cpu 0, msr 0:7fdc001, primary cpu clock
[ 0.000000] kvm-clock: using sched offset of 125681660769 cycles
[ 0.000000] clocksource: kvm-clock: mask: 0xffffffffffffffff max_cycles: 0x1cd42e4dffb, max_idle_ns: 881590591483 ns
[ 0.000000] Zone ranges:
[ 0.000000] DMA [mem 0x0000000000001000-0x0000000000ffffff]
[ 0.000000] DMA32 [mem 0x0000000001000000-0x0000000007ffffff]
[ 0.000000] Normal empty
[ 0.000000] Movable zone start for each node
[ 0.000000] Early memory node ranges
[ 0.000000] node 0: [mem 0x0000000000001000-0x000000000009efff]
[ 0.000000] node 0: [mem 0x0000000000100000-0x0000000007ffffff]
[ 0.000000] Initmem setup node 0 [mem 0x0000000000001000-0x0000000007ffffff]
[ 0.000000] Intel MultiProcessor Specification v1.4
[ 0.000000] MPTABLE: OEM ID: FC
[ 0.000000] MPTABLE: Product ID: 000000000000
[ 0.000000] MPTABLE: APIC at: 0xFEE00000
[ 0.000000] Processor #0 (Bootup-CPU)
[ 0.000000] IOAPIC[0]: apic_id 2, version 17, address 0xfec00000, GSI 0-23
[ 0.000000] Processors: 1
[ 0.000000] smpboot: Allowing 1 CPUs, 0 hotplug CPUs
[ 0.000000] PM: Registered nosave memory: [mem 0x00000000-0x00000fff]
[ 0.000000] PM: Registered nosave memory: [mem 0x0009f000-0x000fffff]
[ 0.000000] e820: [mem 0x08000000-0xffffffff] available for PCI devices
[ 0.000000] Booting paravirtualized kernel on KVM
[ 0.000000] clocksource: refined-jiffies: mask: 0xffffffff max_cycles: 0xffffffff, max_idle_ns: 7645519600211568 ns
[ 0.000000] random: get_random_bytes called from start_kernel+0x94/0x486 with crng_init=0
[ 0.000000] setup_percpu: NR_CPUS:128 nr_cpumask_bits:128 nr_cpu_ids:1 nr_node_ids:1
[ 0.000000] percpu: Embedded 41 pages/cpu @ffff880007c00000 s128728 r8192 d31016 u2097152
[ 0.000000] KVM setup async PF for cpu 0
[ 0.000000] kvm-stealtime: cpu 0, msr 7c15040
[ 0.000000] PV qspinlock hash table entries: 256 (order: 0, 4096 bytes)
[ 0.000000] Built 1 zonelists, mobility grouping on. Total pages: 32137
[ 0.000000] Policy zone: DMA32
[ 0.000000] Kernel command line: console=ttyS0 reboot=k panic=1 pci=off root=/dev/vda rw virtio_mmio.device=4K@0xd0000000:5
[ 0.000000] PID hash table entries: 512 (order: 0, 4096 bytes)
[ 0.000000] Memory: 111064K/130680K available (8204K kernel code, 622K rwdata, 1464K rodata, 1268K init, 2820K bss, 19616K reserved, 0K cma-reserved)
[ 0.000000] SLUB: HWalign=64, Order=0-3, MinObjects=0, CPUs=1, Nodes=1
[ 0.000000] Kernel/User page tables isolation: enabled
[ 0.004000] Hierarchical RCU implementation.
[ 0.004000] RCU restricting CPUs from NR_CPUS=128 to nr_cpu_ids=1.
[ 0.004000] RCU: Adjusting geometry for rcu_fanout_leaf=16, nr_cpu_ids=1
[ 0.004000] NR_IRQS: 4352, nr_irqs: 48, preallocated irqs: 16
[ 0.004000] Console: colour dummy device 80x25
[ 0.004000] console [ttyS0] enabled
[ 0.004000] tsc: Detected 2299.998 MHz processor
[ 0.004000] Calibrating delay loop (skipped) preset value.. 4599.99 BogoMIPS (lpj=9199992)
[ 0.004000] pid_max: default: 32768 minimum: 301
[ 0.004000] Security Framework initialized
[ 0.004000] SELinux: Initializing.
[ 0.004187] Dentry cache hash table entries: 16384 (order: 5, 131072 bytes)
[ 0.005499] Inode-cache hash table entries: 8192 (order: 4, 65536 bytes)
[ 0.006697] Mount-cache hash table entries: 512 (order: 0, 4096 bytes)
[ 0.008013] Mountpoint-cache hash table entries: 512 (order: 0, 4096 bytes)
[ 0.009671] Last level iTLB entries: 4KB 64, 2MB 8, 4MB 8
[ 0.010636] Last level dTLB entries: 4KB 64, 2MB 0, 4MB 0, 1GB 4
[ 0.012005] Spectre V2 : Mitigation: Full generic retpoline
[ 0.012987] Speculative Store Bypass: Vulnerable
[ 0.025015] Freeing SMP alternatives memory: 28K
[ 0.026799] smpboot: Max logical packages: 1
[ 0.027795] x2apic enabled
[ 0.028005] Switched APIC routing to physical x2apic.
[ 0.030291] ..TIMER: vector=0x30 apic1=0 pin1=0 apic2=-1 pin2=-1
[ 0.031291] smpboot: CPU0: Intel(R) Xeon(R) Processor @ 2.30GHz (family: 0x6, model: 0x4f, stepping: 0x1)
[ 0.032000] Performance Events: unsupported p6 CPU model 79 no PMU driver, software events only.
[ 0.032000] Hierarchical SRCU implementation.
[ 0.032093] smp: Bringing up secondary CPUs ...
[ 0.032817] smp: Brought up 1 node, 1 CPU
[ 0.033456] smpboot: Total of 1 processors activated (4599.99 BogoMIPS)
[ 0.034834] devtmpfs: initialized
[ 0.035417] x86/mm: Memory block size: 128MB
[ 0.036178] clocksource: jiffies: mask: 0xffffffff max_cycles: 0xffffffff, max_idle_ns: 7645041785100000 ns
[ 0.037685] futex hash table entries: 256 (order: 2, 16384 bytes)
[ 0.038868] NET: Registered protocol family 16
[ 0.039717] cpuidle: using governor ladder
[ 0.040006] cpuidle: using governor menu
[ 0.044665] HugeTLB registered 1.00 GiB page size, pre-allocated 0 pages
[ 0.045744] HugeTLB registered 2.00 MiB page size, pre-allocated 0 pages
[ 0.046973] dmi: Firmware registration failed.
[ 0.047770] NetLabel: Initializing
[ 0.048026] NetLabel: domain hash size = 128
[ 0.048731] NetLabel: protocols = UNLABELED CIPSOv4 CALIPSO
[ 0.049639] NetLabel: unlabeled traffic allowed by default
[ 0.050631] clocksource: Switched to clocksource kvm-clock
[ 0.051521] VFS: Disk quotas dquot_6.6.0
[ 0.051521] VFS: Dquot-cache hash table entries: 512 (order 0, 4096 bytes)
[ 0.053231] NET: Registered protocol family 2
[ 0.054036] TCP established hash table entries: 1024 (order: 1, 8192 bytes)
[ 0.055137] TCP bind hash table entries: 1024 (order: 2, 16384 bytes)
[ 0.056156] TCP: Hash tables configured (established 1024 bind 1024)
[ 0.057164] UDP hash table entries: 256 (order: 1, 8192 bytes)
[ 0.058077] UDP-Lite hash table entries: 256 (order: 1, 8192 bytes)
[ 0.059067] NET: Registered protocol family 1
[ 0.060338] virtio-mmio: Registering device virtio-mmio.0 at 0xd0000000-0xd0000fff, IRQ 5.
[ 0.061666] platform rtc_cmos: registered platform RTC device (no PNP device found)
[ 0.063021] Scanning for low memory corruption every 60 seconds
[ 0.064162] audit: initializing netlink subsys (disabled)
[ 0.065238] Initialise system trusted keyrings
[ 0.065946] Key type blacklist registered
[ 0.066623] audit: type=2000 audit(1582381251.667:1): state=initialized audit_enabled=0 res=1
[ 0.067999] workingset: timestamp_bits=36 max_order=15 bucket_order=0
[ 0.070284] squashfs: version 4.0 (2009/01/31) Phillip Lougher
[ 0.073661] Key type asymmetric registered
[ 0.074318] Asymmetric key parser 'x509' registered
[ 0.075091] Block layer SCSI generic (bsg) driver version 0.4 loaded (major 254)
[ 0.076319] io scheduler noop registered (default)
[ 0.077122] io scheduler cfq registered
[ 0.077799] virtio-mmio virtio-mmio.0: Failed to enable 64-bit or 32-bit DMA. Trying to continue, but this might not work.
[ 0.079660] Serial: 8250/16550 driver, 1 ports, IRQ sharing disabled
[ 0.102677] serial8250: ttyS0 at I/O 0x3f8 (irq = 4, base_baud = 115200) is a U6_16550A
[ 0.105548] loop: module loaded
[ 0.106732] tun: Universal TUN/TAP device driver, 1.6
[ 0.107583] hidraw: raw HID events driver (C) Jiri Kosina
[ 0.108489] nf_conntrack version 0.5.0 (1024 buckets, 4096 max)
[ 0.109523] ip_tables: (C) 2000-2006 Netfilter Core Team
[ 0.110405] Initializing XFRM netlink socket
[ 0.111154] NET: Registered protocol family 10
[ 0.112326] Segment Routing with IPv6
[ 0.112931] NET: Registered protocol family 17
[ 0.113638] Bridge firewalling registered
[ 0.114325] sched_clock: Marking stable (112005721, 0)->(211417276, -99411555)
[ 0.115605] registered taskstats version 1
[ 0.116270] Loading compiled-in X.509 certificates
[ 0.117814] Loaded X.509 cert 'Build time autogenerated kernel key: 3472798b31ba23b86c1c5c7236c9c91723ae5ee9'
[ 0.119392] zswap: default zpool zbud not available
[ 0.120179] zswap: pool creation failed
[ 0.120924] Key type encrypted registered
[ 0.123818] EXT4-fs (vda): recovery complete
[ 0.124608] EXT4-fs (vda): mounted filesystem with ordered data mode. Opts: (null)
[ 0.125761] VFS: Mounted root (ext4 filesystem) on device 254:0.
[ 0.126874] devtmpfs: mounted
[ 0.128116] Freeing unused kernel memory: 1268K
[ 0.136083] Write protecting the kernel read-only data: 12288k
[ 0.138147] Freeing unused kernel memory: 2016K
[ 0.140430] Freeing unused kernel memory: 584K
OpenRC init version 0.35.5.87b1ff59c1 starting
Starting sysinit runlevel
OpenRC 0.35.5.87b1ff59c1 is starting up Linux 4.14.55-84.37.amzn2.x86_64 (x86_64)
 * Mounting /proc ... [ ok ]
 * Mounting /run ...
 * /run/openrc: creating directory
 * /run/lock: creating directory
 * /run/lock: correcting owner
 * Caching service dependencies ... Service `hwdrivers' needs non existent service `dev'
 [ ok ]
Starting boot runlevel
 * Remounting devtmpfs on /dev ... [ ok ]
 * Mounting /dev/mqueue ... [ ok ]
 * Mounting /dev/pts ... [ ok ]
 * Mounting /dev/shm ... [ ok ]
 * Setting hostname ... [ ok ]
 * Checking local filesystems ... [ ok ]
 * Remounting filesystems ... [ ok[ 0.292620] random: fast init done ]
 * Mounting local filesystems ... [ ok ]
 * Loading modules ... modprobe: can't change directory to '/lib/modules': No such file or directory
modprobe: can't change directory to '/lib/modules': No such file or directory
 [ ok ]
 * Mounting misc binary format filesystem ... [ ok ]
 * Mounting /sys ... [ ok ]
 * Mounting security filesystem ... [ ok ]
 * Mounting debug filesystem ... [ ok ]
 * Mounting SELinux filesystem ... [ ok ]
 * Mounting persistent storage (pstore) filesystem ... [ ok ]
Starting default runlevel
[ 1.088040] clocksource: tsc: mask: 0xffffffffffffffff max_cycles: 0x212733415c7, max_idle_ns: 440795236380 ns

Welcome to Alpine Linux 3.8
Kernel 4.14.55-84.37.amzn2.x86_64 on an x86_64 (ttyS0)

localhost login:
```
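The figure of roughly 150 ms can be read off the kernel timestamps: the last "Freeing unused kernel memory" line (0.140430 s here) is printed shortly before the kernel hands control to the init process (OpenRC). A small sketch that extracts that timestamp from a saved copy of the serial console output; how you capture the console to a file (for example by redirecting Firecracker's standard output) and the function name are assumptions:

```shell
#!/usr/bin/env bash
# Print the kernel timestamp (in seconds) of the last "Freeing unused kernel memory"
# message in a saved guest console log; the kernel starts init shortly after it.
# Usage: kernel_to_init_seconds <console-log-file>
kernel_to_init_seconds() {
  grep 'Freeing unused kernel memory' "$1" \
    | tail -n 1 \
    | sed -E 's/^\[ *([0-9]+\.[0-9]+)\].*/\1/'
}

# Example, with the console output saved to a hypothetical console.log:
# kernel_to_init_seconds console.log
```

The `sed` pattern tolerates the space padding the kernel normally puts inside the brackets (`[    0.140430]`) as well as the single-space form shown above.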

3.3.4 Log in to the microVM

Log in as root with the password root to get a shell in the guest virtual machine:

```
Welcome to Alpine Linux 3.8
Kernel 4.14.55-84.37.amzn2.x86_64 on an x86_64 (ttyS0)

localhost login: root
Password:
Welcome to Alpine!

The Alpine Wiki contains a large amount of how-to guides and general
information about administrating Alpine systems.
See <http://wiki.alpinelinux.org>.

You can setup the system with the command: setup-alpine

You may change this message by editing /etc/motd.

login[855]: root login on 'ttyS0'
localhost:~#
```

You can use `ls /` to view the file system:

```
localhost:~# ls /
bin home media root srv usr dev lib mnt run sys var etc lost+found proc sbin tmp
```

At this point, the resident memory of the Firecracker process on the host has grown to about 36 MB:

```
# cat /proc/3501/status | grep VmRSS
VmRSS: 36996 kB
```

Use the `reboot` command to shut down the microVM. To keep things simple and efficient, Firecracker does not implement guest power management; instead, `reboot` triggers a keyboard reset, which Firecracker treats as a shutdown signal.

After creating the basic microVM, you can add network interfaces, more drives, and continue to configure the microVM.

Need to create thousands of microVMs on your bare metal instance?

```shell
for ((i=0; i<1000; i++)); do
  ./firecracker-v0.21.0-x86_64 --api-sock "/tmp/firecracker-$i.sock" &
done
```

Multiple microVMs can be configured with the same shared root file system, and each microVM can then be given its own read/write share.
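When many Firecracker processes are started in the background as in the loop above, each API socket appears only once its process is up, so a script that configures them immediately can race ahead of the sockets. A minimal polling sketch, assuming bash and a `sleep` that accepts fractional seconds (as GNU coreutils does); the helper name and the 5-second default are arbitrary choices:

```shell
#!/usr/bin/env bash
# Wait until the Unix socket $1 exists, polling every 0.1 s for at most $2 seconds
# (default 5). Returns 0 once the socket is present and 1 on timeout.
wait_for_socket() {
  local sock="$1" timeout="${2:-5}" tries=0
  until [ -S "$sock" ]; do
    if [ "$tries" -ge $(( timeout * 10 )) ]; then
      return 1
    fi
    sleep 0.1
    tries=$(( tries + 1 ))
  done
}

# Example: wait for every socket from the loop above before configuring it.
# for ((i=0; i<1000; i++)); do
#   wait_for_socket "/tmp/firecracker-$i.sock" || echo "socket $i not ready" >&2
# done
```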

3.4 Configure networking for the microVM

3.4.1 Create a tap device

The microVM created so far has no network access or any other I/O. To set up networking, first add a tap device named tap0 on the host:

```shell
sudo ip tuntap add tap0 mode tap
```

For the microVM to reach the public internet, we use NAT configured with iptables. If the host already has iptables rules that could interfere, clear them first. Then assign an address to tap0, bring it up, enable IP forwarding, and add the NAT and forwarding rules. The host's network interface in this example is enp4s0:

```shell
sudo ip addr add 172.16.0.1/24 dev tap0
sudo ip link set tap0 up
sudo sh -c "echo 1 > /proc/sys/net/ipv4/ip_forward"
sudo iptables -t nat -A POSTROUTING -o enp4s0 -j MASQUERADE
sudo iptables -A FORWARD -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
sudo iptables -A FORWARD -i tap0 -o enp4s0 -j ACCEPT
```

Now we can view the created tap0:

```
root@ip-172-31-20-74:~# ifconfig tap0
tap0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 172.16.0.1  netmask 255.255.255.0  broadcast 0.0.0.0
        ether fe:2d:e3:ba:09:ae  txqueuelen 1000  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0  overruns 0  carrier 0  collisions 0
```

To run several microVMs on one physical machine, create a tap device for each microVM and add the corresponding iptables rules for each tap device.
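To script this for many microVMs, each one needs its own tap name, addresses, and MAC address. Below is a sketch of one possible numbering scheme (hypothetical, not something Firecracker prescribes): microVM `i` gets `tap<i>`, its own /30 subnet carved out of 172.16.0.0/16 (host end `.1`, guest end `.2` of the block), and a MAC derived from `i + 1`. MicroVM 0 therefore gets the same tap0, 172.16.0.1, 172.16.0.2, and AA:FC:00:00:00:01 values used in this section, with a /30 mask instead of /24. The /16 holds 16,384 such blocks.

```shell
#!/usr/bin/env bash
# Print "<tap> <host_ip> <guest_ip> <guest_mac>" for microVM number $1 (0-based).
# Each microVM gets its own /30 block inside 172.16.0.0/16 (hypothetical scheme).
vm_net_plan() {
  local i="$1"
  if [ "$i" -lt 0 ] || [ "$i" -gt 16383 ]; then
    echo "index out of range: $i" >&2
    return 1
  fi
  local base=$(( i * 4 ))                 # offset of this VM's /30 block in the /16
  local third=$(( base / 256 )) fourth=$(( base % 256 ))
  local mac_id=$(( i + 1 ))               # MAC suffix starts at 00:01
  printf 'tap%d 172.16.%d.%d 172.16.%d.%d AA:FC:00:00:%02X:%02X\n' \
    "$i" "$third" $(( fourth + 1 )) "$third" $(( fourth + 2 )) \
    $(( mac_id / 256 )) $(( mac_id % 256 ))
}

# Host-side setup for microVM $i (as root; mirrors the commands in 3.4.1):
# read -r tap host_ip guest_ip mac <<< "$(vm_net_plan "$i")"
# ip tuntap add "$tap" mode tap
# ip addr add "$host_ip/30" dev "$tap"
# ip link set "$tap" up
# iptables -A FORWARD -i "$tap" -o enp4s0 -j ACCEPT
# Inside the guest: ip addr add "$guest_ip/30" dev eth0; ip route add default via "$host_ip" dev eth0
```

The MASQUERADE and RELATED,ESTABLISHED rules from 3.4.1 apply to all tap devices, so only the per-tap FORWARD rule has to be repeated.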

3.4.2 Attach the tap device to the microVM

Before the microVM starts, configure its network interface through the microVM API:

```shell
curl --unix-socket /tmp/firecracker.sock -i \
  -X PUT 'http://localhost/network-interfaces/eth0' \
  -H 'Accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
        "iface_id": "eth0",
        "guest_mac": "AA:FC:00:00:00:01",
        "host_dev_name": "tap0"
      }'
```

3.4.3 Configure an IP address inside the microVM

Log in to the microVM and configure an IP address and a default route for its eth0 interface:

```shell
ip addr add 172.16.0.2/24 dev eth0
ip link set eth0 up
ip route add default via 172.16.0.1 dev eth0
```

Check the network configuration and test connectivity:

```
localhost:~# ifconfig
eth0      Link encap:Ethernet  HWaddr AA:FC:00:00:00:01
          inet addr:172.16.0.2  Bcast:0.0.0.0  Mask:255.255.255.0
          inet6 addr: fe80::a8fc:ff:fe00:1/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:6 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:0 (0.0 B)  TX bytes:516 (516.0 B)

localhost:~# ping 8.8.8.8
PING 8.8.8.8 (8.8.8.8): 56 data bytes
64 bytes from 8.8.8.8: seq=0 ttl=47 time=1.491 ms
64 bytes from 8.8.8.8: seq=1 ttl=47 time=1.118 ms
64 bytes from 8.8.8.8: seq=2 ttl=47 time=1.136 ms
```

3.4.4 Clean up the network

When you delete a microVM, you can also delete its network devices:

```shell
sudo ip link del tap0
sudo iptables -F
sudo sh -c "echo 0 > /proc/sys/net/ipv4/ip_forward"
```
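Note that `iptables -F` without `-t nat` flushes only the default filter table. The MASQUERADE rule added to the nat table in 3.4.1 therefore stays in place, while every other filter rule on the host (not only the ones added for Firecracker) is removed. A more targeted sketch deletes exactly the rules that were added, using `iptables -D` with the same arguments as the earlier `-A` commands. The function names are hypothetical, and enp4s0 is the host interface from 3.4.1:

```shell
#!/usr/bin/env bash
# Remove the per-microVM pieces added in 3.4.1: the FORWARD rule for this tap
# device and the tap device itself. Usage: cleanup_vm_net <tap> <host_iface>
cleanup_vm_net() {
  local tap="$1" host_if="$2"
  sudo iptables -D FORWARD -i "$tap" -o "$host_if" -j ACCEPT
  sudo ip link del "$tap"
}

# Remove the shared rules once the last microVM is gone, deleting only the rules
# added in 3.4.1 (-D with the same arguments as -A). Usage: cleanup_nat_shared <host_iface>
cleanup_nat_shared() {
  local host_if="$1"
  sudo iptables -D FORWARD -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
  sudo iptables -t nat -D POSTROUTING -o "$host_if" -j MASQUERADE
  sudo sh -c "echo 0 > /proc/sys/net/ipv4/ip_forward"
}

# Example for the single microVM in this section:
# cleanup_vm_net tap0 enp4s0
# cleanup_nat_shared enp4s0
```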

This has been only a brief introduction to using Firecracker. For production use, read the recommendations in the official GitHub documentation carefully.

Reference documents:

https://github.com/firecracker-microvm/firecracker/blob/master/docs/jailer.md

https://firecracker-microvm.github.io/
