Preface

This post summarizes Java multithreading based on what I have learned so far. It is only a record of my personal learning process; I would be honored if it helps other readers.

This post briefly introduces the concept of thread safety and several ways to achieve it, focusing on common locking mechanisms. It will be supplemented and revised as I learn more.

Risks in multithreaded environment

As mentioned earlier, multithreading greatly improves the efficiency of task execution, but it also introduces some risks.

Consider the following scenario:

```java
// Use multiple threads to perform ++ on the same static variable
public class Test {
    public static int COUNT = 0;

    public static void main(String[] args) throws InterruptedException {
        Thread[] threads = new Thread[20]; // array that stores the threads created below
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(new Runnable() { // create each thread with an anonymous inner class
                @Override
                public void run() {
                    for (int j = 0; j < 1000; j++) {
                        COUNT++; // unsynchronized read-modify-write on shared state
                    }
                }
            });
        }
        for (Thread t : threads) { // start the 20 threads in turn
            t.start();
        }
        for (Thread t : threads) { // wait until every thread has finished
            t.join();
        }
        System.out.println(COUNT);
    }
}
```

The code above performs COUNT++ on the same static variable from multiple threads.

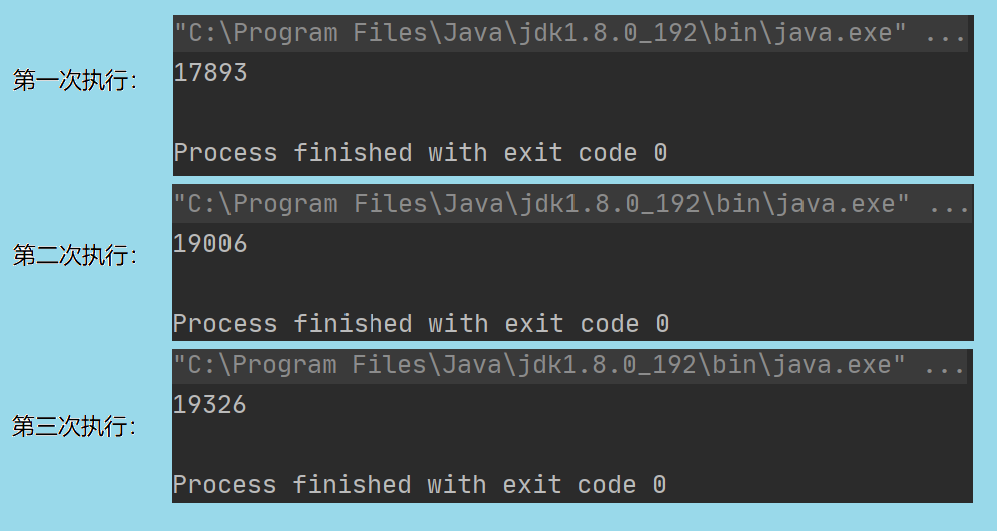

A total of 20 threads are created, and each executes COUNT++ 1000 times, so in theory COUNT should be 20000 once all 20 threads have finished.

In practice, after running it three times, COUNT never reaches 20000, and the value differs on each run. Why?

Analyzing the cause of the thread-safety problem

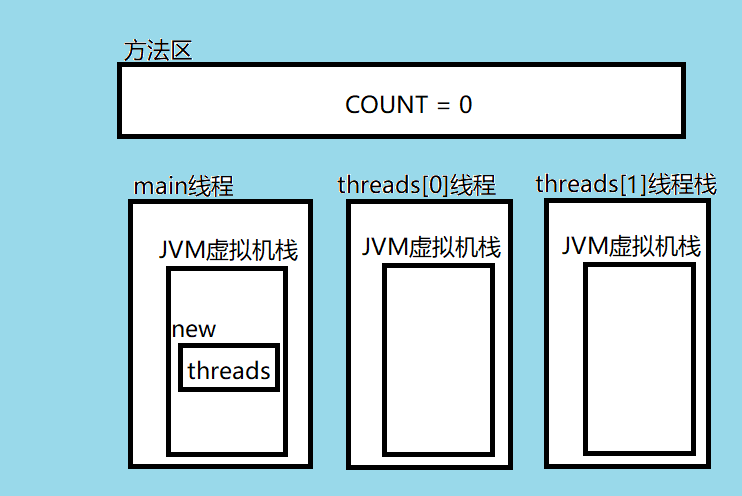

After startup, the code above goes through the following process:

Start -> launch the java.exe process -> initialize JVM parameters -> create the JVM -> start background threads -> start the Java-level main thread (which begins executing the main method)

When a thread executes COUNT++ in its run method, the operation is broken down into three steps:

- Read COUNT from main memory (static fields are shared by all threads) into the thread's working memory

- Compute COUNT + 1 in working memory

- Write the new value of COUNT back to main memory

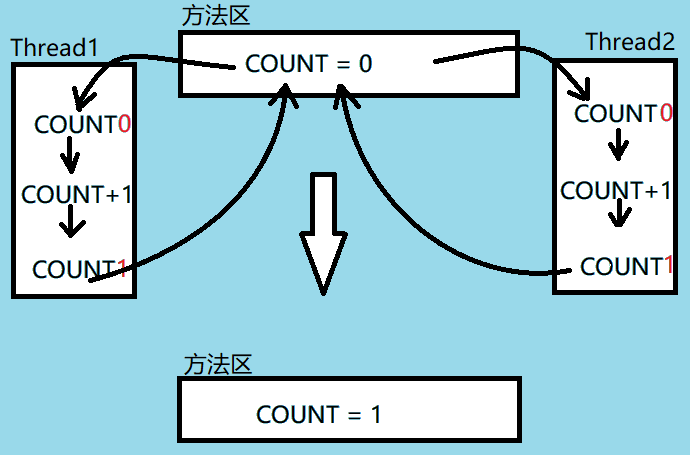

Risk: because 20 threads run at the same time, two threads may read COUNT into their working memory at the same moment (that is, both read the same value). After both finish and write back to main memory, COUNT has increased by only 1 instead of the expected 2. This is a thread-safety problem.
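To make the interleaving concrete, here is a deterministic sketch (the class LostUpdateDemo is hypothetical) that replays one bad schedule by hand on a single thread, with the locals t1 and t2 standing in for each thread's working memory. At the bytecode level, javap -c shows a static COUNT++ as getstatic, iconst_1, iadd, putstatic, which matches the read / add / write-back steps above.

```java
class LostUpdateDemo {
    static int COUNT = 0;

    // Replays one unlucky interleaving of two COUNT++ operations (no real threads, so it is repeatable)
    static int replay() {
        COUNT = 0;
        int t1 = COUNT; // thread 1, step 1: reads 0 into its working memory
        int t2 = COUNT; // thread 2, step 1: also reads 0 before thread 1 writes back
        t1 = t1 + 1;    // thread 1, step 2: 0 + 1
        t2 = t2 + 1;    // thread 2, step 2: 0 + 1
        COUNT = t1;     // thread 1, step 3: writes 1
        COUNT = t2;     // thread 2, step 3: writes 1 again, overwriting thread 1's update
        return COUNT;   // one increment was lost
    }
}
```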

The root cause of the thread-safety problem: multiple threads read and modify the same memory concurrently without coordination, so one thread's write can overwrite another's and the update is lost.

Three causes of thread-unsafe behavior

- Atomicity: when an operation consists of several dependent instructions, no instruction from another thread that affects its result may be interleaved among them

- Visibility: when one thread modifies a shared variable, other threads should see the change immediately; without synchronization, a thread may keep working with a stale value

- Ordering: the program should appear to execute in code order, but the compiler and processor may reorder instructions
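The visibility point can be sketched with a stop flag. In this hypothetical VisibilityDemo the flag is volatile, so the worker is guaranteed to see the main thread's write and leave its loop; without volatile the JIT may hoist the read out of the loop, and whether the loop then ends is not guaranteed either way.

```java
class VisibilityDemo {
    // volatile: a write by one thread is visible to later reads in other threads
    private static volatile boolean running = true;

    // Starts a busy-looping worker, asks it to stop, and reports whether it actually stopped
    static boolean run() {
        Thread worker = new Thread(() -> {
            while (running) {
                // busy loop; without volatile the JIT could hoist the read of running out of the loop
            }
        });
        worker.setDaemon(true); // never keep the JVM alive if the worker fails to stop
        worker.start();
        try {
            Thread.sleep(100);  // let the worker spin for a while
            running = false;    // request a stop from the main thread
            worker.join(2000);  // with volatile, the worker sees the new value and exits
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }
}
```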

Methods to solve thread safety problems

synchronized keyword

In Java, the synchronized keyword is used to synchronize threads: in a multithreaded environment, a synchronized method or code block can be executed by only one thread at a time for a given lock object.

Before JDK 1.6, the built-in synchronized lock was always a heavyweight lock based on the monitor mechanism, without the lock inflation path biased lock -> lightweight lock -> heavyweight lock.

Lock inflation was introduced in JDK 1.6 to improve the synchronization performance of Java threads.

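How synchronized is used

When synchronized is applied to a static method, it locks the Class object of the class. When it is applied to an instance method, it locks the current instance (this). A synchronized code block locks whichever object is named in its parentheses, for example Singleton.class in the singleton code that follows.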
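The three forms can be compared side by side. This is a sketch with a hypothetical SyncForms class: each counter is guarded by a different lock (the Class object, this, and a private lock object), and Thread.holdsLock confirms which object a synchronized method actually locks.

```java
class SyncForms {
    private static int staticCount = 0;
    private int instanceCount = 0;
    private int blockCount = 0;
    private final Object lock = new Object();

    // static synchronized method: the lock is the Class object SyncForms.class
    static synchronized void incStatic() { staticCount++; }

    // instance synchronized method: the lock is this
    synchronized void incInstance() { instanceCount++; }

    // synchronized block: the lock is the object in the parentheses
    void incBlock() {
        synchronized (lock) {
            blockCount++;
        }
    }

    static synchronized boolean staticHoldsClassLock() {
        return Thread.holdsLock(SyncForms.class);
    }

    synchronized boolean instanceHoldsThis() {
        return Thread.holdsLock(this);
    }

    // 4 threads x 10_000 calls of each method; returns {static, instance, block} totals
    static int[] run() {
        SyncForms shared = new SyncForms();
        Thread[] threads = new Thread[4];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(() -> {
                for (int j = 0; j < 10_000; j++) {
                    incStatic();
                    shared.incInstance();
                    shared.incBlock();
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return new int[] {staticCount, shared.instanceCount, shared.blockCount};
    }
}
```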

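```java
public class Singleton {
    // volatile prevents other threads from seeing a reference to a partially constructed object
    private volatile static Singleton instance;

    private Singleton() {
    }

    public static Singleton getInstance() {
        if (instance == null) {                 // first check: skip locking once initialized
            synchronized (Singleton.class) {    // lock the Class object
                if (instance == null) {         // second check: another thread may have created it meanwhile
                    instance = new Singleton();
                }
            }
        }
        return instance;
    }
}
```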

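In this double-checked-locking singleton, declaring instance as volatile is also essential. new Singleton() involves three steps: allocate memory, initialize the object, and assign the reference to instance. Without volatile, the assignment may be reordered before initialization, so another thread could see a non-null instance that is not yet fully initialized; volatile forbids this reordering.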

Underlying implementation of synchronized

Goal: synchronization and mutual exclusion between threads (at any point in time, a given piece of code can be executed by only one thread).

When a synchronized code block is compiled to bytecode, a monitorenter instruction is placed before the block and a monitorexit instruction after it; they mark acquiring and releasing the lock, respectively. (A synchronized method is instead marked with the ACC_SYNCHRONIZED access flag, and the JVM acquires and releases the monitor implicitly.)

When monitorenter executes, the current thread tries to acquire ownership of the monitor associated with the lock object. If the monitor's counter is 0, the thread acquires the monitor, sets the counter to 1, and now holds the lock. If the current thread already owns the monitor, it can re-enter it, and the counter is incremented by 1. If another thread owns the monitor, the current thread blocks until that thread releases it. Each monitorexit decrements the counter by 1; when the counter reaches 0, the lock is released and blocked threads can compete for it again.

Why there are two monitorexit instructions

If monitorexit appeared only on the normal exit path, a block that exits by throwing an exception would never release the lock, and threads waiting for it would wait forever. The compiler therefore also emits a monitorexit in an exception handler that releases the lock and rethrows the exception. However the block exits, monitorexit runs and the lock is released.
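The effect of that exception-path monitorexit can be observed from Java code. In this sketch (the class ExceptionReleaseDemo is hypothetical), the main thread leaves a synchronized block by throwing, and a second thread then enters the same monitor; if the lock had not been released, the second thread would block and the check would fail.

```java
class ExceptionReleaseDemo {
    private static final Object LOCK = new Object();

    // Throws out of a synchronized block, then checks that the monitor was released anyway
    static boolean lockFreeAfterException() {
        try {
            synchronized (LOCK) {
                throw new IllegalStateException("simulated failure inside the block");
            }
        } catch (IllegalStateException expected) {
            // javac's exception handler already ran monitorexit before the exception reached here
        }
        boolean stillHeld = Thread.holdsLock(LOCK); // expected false
        final boolean[] entered = {false};
        Thread other = new Thread(() -> {
            synchronized (LOCK) { // would block forever if the monitor had not been released
                entered[0] = true;
            }
        });
        other.setDaemon(true);
        other.start();
        try {
            other.join(1000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !stillHeld && entered[0];
    }
}
```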

How synchronized reentrancy works

A reentrant lock allows the same thread to acquire a lock it already holds. Underneath it is a counter: +1 on each acquisition and -1 on each release; the lock is free again only when the counter returns to 0.
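A minimal sketch of reentrancy with a hypothetical ReentrancyDemo class: outer() holds the monitor of this and calls inner(), which needs the same monitor. Because synchronized is reentrant, the nested call succeeds instead of waiting for itself.

```java
class ReentrancyDemo {
    // outer() already holds the monitor of this, then calls inner(), which needs the same monitor
    synchronized int outer() {
        return inner() + 1;
    }

    // Succeeds immediately because the current thread already owns the monitor (counter 1 -> 2);
    // with a non-reentrant lock this call would wait for itself forever
    synchronized int inner() {
        return Thread.holdsLock(this) ? 1 : 0;
    }
}
```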

Spinning

In practice, many synchronized blocks finish very quickly. Blocking every waiting thread would require switching between user mode and kernel mode, which is expensive. Spinning was therefore introduced: a thread that fails to get the lock loops and keeps retrying for a while instead of blocking, which improves efficiency when locks are held only briefly.
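The spinning idea can be sketched at the Java level with a CAS loop. The SpinLock class below is hypothetical and for illustration only: it is non-reentrant and spins without ever blocking, whereas the JVM's adaptive spinning inside synchronized eventually falls back to blocking.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical SpinLock: non-reentrant, busy-waits instead of blocking
class SpinLock {
    private final AtomicReference<Thread> owner = new AtomicReference<>();

    void lock() {
        Thread current = Thread.currentThread();
        // Keep retrying the CAS (null -> current) until it succeeds, staying in user mode
        while (!owner.compareAndSet(null, current)) {
            // spin
        }
    }

    void unlock() {
        // Only the owning thread may release the lock
        if (!owner.compareAndSet(Thread.currentThread(), null)) {
            throw new IllegalMonitorStateException("current thread does not hold the lock");
        }
    }

    private static int count = 0;

    // 4 threads x 1000 increments guarded by one SpinLock
    static int demo() {
        SpinLock lock = new SpinLock();
        Thread[] threads = new Thread[4];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(() -> {
                for (int j = 0; j < 1000; j++) {
                    lock.lock();
                    try {
                        count++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return count;
    }
}
```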

How synchronized lock upgrading works

The object header (Mark Word) of the lock object can record a thread ID. On first access this field is empty; the JVM gives the thread a biased lock and records the thread's ID. When a thread enters again, the JVM checks whether the recorded ID matches its own: if it does, the thread uses the object directly without further synchronization; if not, the lock is upgraded to a lightweight lock, which is acquired by spinning a number of times. If the lock still has not been acquired after spinning for a while, it is upgraded to a heavyweight lock. (Note that biased locking was deprecated and disabled by default in JDK 15.)

CAS

Concept

CAS: Compare-And-Swap

CAS involves three operands: the memory location (V), the expected value (A), and the new value to be written (B).

Step 1: compare the value at memory location V with the expected value A

Step 2: if they are equal, write the new value B to memory location V

Step 3: return a boolean indicating whether the update succeeded

The processor performs the compare and the write as one atomic operation, so no other thread can change V in between.

When multiple threads perform CAS on the same variable at the same time, only one of them succeeds and gets true; the others fail without blocking and can retry (spin) or give up. CAS is the typical building block of optimistic locking.
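Here is a sketch of an optimistic CAS retry loop using AtomicInteger.compareAndSet; the class CasCounter is hypothetical. It reruns the earlier 20 threads x 1000 increments workload without any lock, and a failed CAS simply loops again.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CasCounter {
    private final AtomicInteger value = new AtomicInteger();

    // Optimistic increment: read, compute, then CAS; on failure another thread won, so retry
    int increment() {
        while (true) {
            int expected = value.get(); // A: the value we expect at memory location V
            int next = expected + 1;    // B: the new value to write
            if (value.compareAndSet(expected, next)) {
                return next;            // CAS succeeded
            }
            // CAS failed without blocking; loop and try again
        }
    }

    int get() {
        return value.get();
    }

    // Same workload as the COUNT++ example: 20 threads x 1000 increments, no lock
    static int demo() {
        CasCounter counter = new CasCounter();
        Thread[] threads = new Thread[20];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(() -> {
                for (int j = 0; j < 1000; j++) {
                    counter.increment();
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter.get();
    }
}
```

In everyday code, AtomicInteger.incrementAndGet() does the same job in one call; the explicit loop is only there to show the compare, write, retry cycle.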

What are the problems with CAS?

1. ABA problem

The ABA problem: the value at V changes from A to B and then back to A. CAS only compares values, so it concludes that nothing has changed in the meantime, even though other threads modified the variable.

Solution: attach a version number (or timestamp) that changes on every update, and compare it together with the value.
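The version-number fix is available as AtomicStampedReference. In this sketch (class AbaDemo is hypothetical), sequential calls simulate two threads: "thread 1" reads the value, "thread 2" changes it A -> B -> A, and then "thread 1" attempts its CAS. The plain CAS succeeds, while the stamped CAS fails because the version moved on.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicStampedReference;

class AbaDemo {
    // Plain CAS: the A -> B -> A change goes unnoticed
    static boolean plainCasSucceeds() {
        AtomicInteger v = new AtomicInteger(1);
        int expected = v.get();              // thread 1 reads A (= 1)
        v.compareAndSet(1, 2);               // thread 2: A -> B
        v.compareAndSet(2, 1);               // thread 2: B -> A
        return v.compareAndSet(expected, 3); // thread 1's CAS still succeeds
    }

    // Stamped CAS: the version number exposes the intermediate change.
    // Values stay small so autoboxing reuses cached Integer objects, because
    // AtomicStampedReference compares references with ==.
    static boolean stampedCasSucceeds() {
        AtomicStampedReference<Integer> ref = new AtomicStampedReference<>(1, 0);
        Integer expected = ref.getReference();
        int stamp = ref.getStamp();          // thread 1 reads A with version 0
        ref.compareAndSet(1, 2, 0, 1);       // thread 2: A -> B, version 1
        ref.compareAndSet(2, 1, 1, 2);       // thread 2: B -> A, version 2
        return ref.compareAndSet(expected, 3, stamp, stamp + 1); // fails: version is 2, not 0
    }
}
```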

2. High spin overhead

When many threads compete for the same variable, CAS fails often and threads spin for a long time, wasting a lot of CPU. Under heavy contention this can be less efficient than synchronized.

3. Atomicity is guaranteed for only one shared variable

CAS can make operations on a single shared variable atomic, but it does not cover operations that must update several shared variables together. In that case, use a lock.
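Besides locking, one common workaround is to bundle the related variables into one immutable object and CAS the reference to it with AtomicReference, so both fields change together. The Bounds and Range classes below are a hypothetical sketch of that idea, not something from the original text.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical example: keep two related values consistent by CAS-ing one immutable object
class Bounds {
    // Immutable pair; every update creates a new instance
    static final class Range {
        final int lower;
        final int upper;

        Range(int lower, int upper) {
            this.lower = lower;
            this.upper = upper;
        }
    }

    private final AtomicReference<Range> range = new AtomicReference<>(new Range(0, 10));

    // Atomically sets the lower bound, rejecting values above the current upper bound
    boolean setLower(int newLower) {
        while (true) {
            Range current = range.get();
            if (newLower > current.upper) {
                return false;
            }
            Range next = new Range(newLower, current.upper);
            if (range.compareAndSet(current, next)) {
                return true; // both fields were replaced together
            }
            // another thread replaced the range first; re-read and retry
        }
    }

    Range get() {
        return range.get();
    }
}
```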

Lock

Lock interface

Lock implementations provide more extensive locking operations than synchronized methods and blocks. They allow more flexible structuring, may have quite different properties, and can support multiple associated Condition objects.

Its advantages:

- Locks can be fair (for example, new ReentrantLock(true) favors granting the lock to the longest-waiting thread)

- A thread waiting for a lock can respond to interruption

- A thread that cannot get the lock can return immediately or wait only for a limited time

- Locks can be acquired and released in different scopes and in different orders

In general, Lock is an extended and more powerful version of synchronized. It provides unconditional, polled, timed, interruptible, and multi-condition lock operations.

Lock interface API

1. void lock(); // acquire the lock, blocking until it is available

2. void lockInterruptibly() throws InterruptedException; // acquire the lock, but respond to interruption while waiting

3. boolean tryLock(); // non-blocking: return immediately, true if the lock was acquired, otherwise false

4. boolean tryLock(long time, TimeUnit unit) throws InterruptedException; // wait up to the timeout: true if the lock was acquired in time, false if the timeout elapsed; also responds to interruption

5. void unlock(); // release the lock

6. Condition newCondition(); // return a wait/notify component bound to this lock: the thread must hold the lock before calling await(), await() releases the lock, and the thread must reacquire it before returning from await()
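A small sketch of the two tryLock variants from the list, using ReentrantLock; the class TryLockDemo is hypothetical. tryLock() on a free lock succeeds at once, and while one thread holds the lock, another thread's timed tryLock gives up after 100 ms instead of blocking forever.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

class TryLockDemo {
    // Non-blocking tryLock() on a free lock succeeds immediately
    static boolean tryLockWhenFree() {
        ReentrantLock lock = new ReentrantLock();
        if (lock.tryLock()) {
            try {
                return true;
            } finally {
                lock.unlock();
            }
        }
        return false;
    }

    // While the calling thread holds the lock, another thread's timed tryLock times out
    static boolean otherThreadGotLock() {
        ReentrantLock lock = new ReentrantLock();
        boolean[] acquired = {false};
        lock.lock();
        try {
            Thread other = new Thread(() -> {
                try {
                    if (lock.tryLock(100, TimeUnit.MILLISECONDS)) { // wait at most 100 ms
                        try {
                            acquired[0] = true;
                        } finally {
                            lock.unlock();
                        }
                    }
                } catch (InterruptedException e) {
                    // tryLock(time, unit) responds to interruption while waiting
                    Thread.currentThread().interrupt();
                }
            });
            other.start();
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            lock.unlock();
        }
        return acquired[0];
    }
}
```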

A typical use of an explicit lock looks like this (lock() before try, unlock() in finally so the lock is always released):

```java
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class Test {
    public volatile static int COUNT = 0;

    public static void main(String[] args) throws InterruptedException {
        Lock lock = new ReentrantLock();       // one lock shared by all threads
        Thread[] threads = new Thread[20];     // array that stores the threads created below
        for (int i = 0; i < threads.length; i++) {
            Runnable r = new Runnable() {      // task defined with an anonymous inner class
                @Override
                public void run() {
                    for (int j = 0; j < 1000; j++) {
                        lock.lock();           // acquire before touching COUNT
                        try {
                            COUNT++;
                        } finally {
                            lock.unlock();     // always release, even if an exception is thrown
                        }
                    }
                }
            };
            threads[i] = new Thread(r);
        }
        for (Thread t : threads) {             // start the 20 threads in turn
            t.start();
        }
        for (Thread t : threads) {             // wait for all of them to finish
            t.join();
        }
        System.out.println(COUNT);             // prints 20000
    }
}
```

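Lock-related types in java.util.concurrent.locks

- ReadWriteLock: an interface that pairs a read lock with a write lock (it does not extend Lock)

- ReentrantLock: implements Lock

- ReentrantReadWriteLock: implements ReadWriteLock

- StampedLock: does not implement Lock itself, but offers Lock views through asReadLock() and asWriteLock()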

AQS concept

AQS (AbstractQueuedSynchronizer) is a framework for building locks and synchronizers. With AQS, many widely used synchronizers can be built simply and efficiently: ReentrantLock, Semaphore, CountDownLatch and ReentrantReadWriteLock are all based on AQS. (Older JDKs also built SynchronousQueue and FutureTask on AQS; current versions implement them differently.)

AQS manages the synchronization state, queues blocked threads, and handles waiting and notification.

How AQS works

The core idea of AQS: if the requested shared resource is free, the requesting thread becomes the active owner and the resource is marked as locked. If the resource is occupied, a mechanism is needed to block waiting threads and to hand over the lock when they are woken. AQS implements this with a CLH queue (a virtual doubly linked queue): threads that cannot get the lock for now are added to the queue. The synchronization state itself is a volatile int field (state) that AQS updates with CAS.

AQS resource-sharing modes

AQS defines two resource-sharing modes:

- Exclusive: only one thread can hold the resource at a time, e.g. ReentrantLock, which can be fair or unfair:
  - Fair lock: threads obtain the resource in queue order; the first to join the queue is served first
  - Unfair lock: a thread that wants the resource ignores the queue order and tries to grab the lock directly

- Share: multiple threads can hold the resource at the same time, e.g. Semaphore and CountDownLatch
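To see the exclusive mode in action, here is a sketch loosely modeled on the Mutex example in the AbstractQueuedSynchronizer Javadoc; the class SimpleMutex is hypothetical. The subclass only defines how to CAS the state (0 = free, 1 = held); queuing, parking and waking threads are all done by AQS. Note that this lock always barges, so it behaves as an unfair lock.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical SimpleMutex: a non-reentrant, unfair exclusive lock built on AQS
class SimpleMutex {
    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int arg) {
            if (compareAndSetState(0, 1)) { // CAS on the volatile AQS state
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false; // AQS then enqueues the thread and parks it
        }

        @Override
        protected boolean tryRelease(int arg) {
            if (getExclusiveOwnerThread() != Thread.currentThread()) {
                throw new IllegalMonitorStateException();
            }
            setExclusiveOwnerThread(null);
            setState(0);
            return true; // AQS then wakes the next queued thread
        }

        @Override
        protected boolean isHeldExclusively() {
            return getState() == 1;
        }
    }

    private final Sync sync = new Sync();

    void lock() {
        sync.acquire(1);
    }

    void unlock() {
        sync.release(1);
    }

    private static int count = 0;

    // 4 threads x 10_000 increments guarded by one SimpleMutex
    static int demo() {
        SimpleMutex mutex = new SimpleMutex();
        Thread[] threads = new Thread[4];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(() -> {
                for (int j = 0; j < 10_000; j++) {
                    mutex.lock();
                    try {
                        count++;
                    } finally {
                        mutex.unlock();
                    }
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return count;
    }
}
```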

ReentrantLock

ReentrantLock is a class that implements the Lock interface. It supports reentrancy: a thread that already holds the lock can acquire it again without blocking.

The synchronized keyword also supports reentrancy, implicitly, by incrementing the monitor's counter.

Implementation principle:

- When a thread tries to acquire the lock and the lock is already held by the current thread, the acquisition succeeds immediately and the hold count is incremented

- If the lock has been acquired n times, it must be released n times before it is actually freed
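The hold count described above can be observed with ReentrantLock.getHoldCount() and isLocked(). In this hypothetical HoldCountDemo, one thread locks three times and the lock is only freed after the third unlock.

```java
import java.util.concurrent.locks.ReentrantLock;

class HoldCountDemo {
    // Returns "holdCount,lockedAfterTwoUnlocks,lockedAfterThreeUnlocks"
    static String run() {
        ReentrantLock lock = new ReentrantLock();
        StringBuilder log = new StringBuilder();
        lock.lock();
        lock.lock();
        lock.lock();                               // same thread acquires 3 times without blocking
        log.append(lock.getHoldCount());           // hold count is now 3
        lock.unlock();
        lock.unlock();
        log.append(',').append(lock.isLocked());   // still locked: 1 hold remains
        lock.unlock();
        log.append(',').append(lock.isLocked());   // freed after the third unlock
        return log.toString();
    }
}
```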

ReadWriteLock

ReadWriteLock is the read-write lock interface. Separating reads from writes is a technique for improving the performance of concurrent programs. ReentrantReadWriteLock is the concrete implementation of ReadWriteLock: the read lock is shared, so several readers can hold it at once, while the write lock is exclusive.

Read-write locks have three important characteristics:

- Fairness choice: both fair and unfair acquisition are supported; unfair acquisition (the default) generally has higher throughput than fair

- Reentrancy: both the read lock and the write lock are reentrant

- Lock downgrading: by acquiring the write lock, then the read lock, and then releasing the write lock, a thread can downgrade a write lock to a read lock (upgrading from a read lock to a write lock is not supported)
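The downgrade sequence can be sketched with ReentrantReadWriteLock; the class DowngradeDemo is hypothetical. After step 2 the thread holds only the read lock: isWriteLocked() is false and getReadHoldCount() is 1, so other readers may enter while writers stay excluded.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical DowngradeDemo: a value guarded by a ReentrantReadWriteLock
class DowngradeDemo {
    private final ReentrantReadWriteLock rw = new ReentrantReadWriteLock(); // unfair by default
    private int value = 0;

    // Readers share the read lock, so many threads can read at once
    int read() {
        rw.readLock().lock();
        try {
            return value;
        } finally {
            rw.readLock().unlock();
        }
    }

    // Writes under the write lock, then downgrades to the read lock.
    // Returns "value,writeLocked,readHolds" as observed right after the downgrade.
    String updateAndDowngrade(int newValue) {
        rw.writeLock().lock();
        try {
            value = newValue;
            rw.readLock().lock();    // 1. take the read lock while still holding the write lock
        } finally {
            rw.writeLock().unlock(); // 2. release the write lock: only the read lock remains
        }
        try {
            // 3. other readers may enter now; writers wait until the read lock is released
            return value + "," + rw.isWriteLocked() + "," + rw.getReadHoldCount();
        } finally {
            rw.readLock().unlock();
        }
    }
}
```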

ThreadLocal

ThreadLocal provides thread-local variables: in a multithreaded environment, each thread that accesses a ThreadLocal gets its own independent copy of the value, so threads do not affect each other. When a ThreadLocal is used from several threads, each thread only reads and writes its own value, and the values of different threads are unrelated. In this way ThreadLocal keeps data independent in a multithreaded environment.

ThreadLocal example

```java
public class Test {
    private static String commStr;                                            // ordinary static field shared by all threads
    private static ThreadLocal<String> threadStr = new ThreadLocal<String>(); // each thread sees its own value

    public static void main(String[] args) {
        commStr = "main";
        threadStr.set("main");
        Thread thread = new Thread(new Runnable() {
            @Override
            public void run() {
                commStr = "thread";        // overwrites the shared field
                threadStr.set("thread");   // changes only this thread's value
                System.out.println("thread " + Thread.currentThread().getName() + ":");
                System.out.println("commStr:" + commStr);
                System.out.println("threadStr:" + threadStr.get());
            }
        });
        thread.start();
        try {
            thread.join();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.out.println("thread " + Thread.currentThread().getName() + ":");
        System.out.println("commStr:" + commStr);
        System.out.println("threadStr:" + threadStr.get());
    }
}
```

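Execution result: the child thread prints commStr:thread and threadStr:thread. The main thread then prints commStr:thread, because the child thread overwrote the ordinary static field, but threadStr:main, because the child's set() changed only its own ThreadLocal value.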

Underlying principle of ThreadLocal

In essence, ThreadLocal works because each thread maintains its own ThreadLocalMap holding that thread's values, so the threads stay independent.

- The Thread class has a field threadLocals of type ThreadLocal.ThreadLocalMap, which stores the thread-local values of that Thread object, keyed by the ThreadLocal instance

- get(), set() and remove() first obtain the current thread and then its ThreadLocalMap. If the map does not exist yet, get() and set() create a new ThreadLocalMap for the current thread

- Every operation on a ThreadLocal variable therefore goes through the current thread's own map. Each map, and the values it holds, belongs to a single thread, so a ThreadLocal variable is independent in every thread and threads operating on it at the same time do not affect each other
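Because the map belongs to the thread, a value stays there as long as the thread lives, which matters when threads are reused. In this sketch (class ThreadLocalReuseDemo and the value "alice" are hypothetical), two tasks run on the same single pooled thread: without remove(), the second task still sees the first task's value.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class ThreadLocalReuseDemo {
    private static final ThreadLocal<String> USER = new ThreadLocal<>();

    // Runs two tasks on the same pooled thread and returns what the second task sees
    static String secondTaskSees(boolean removeAfterUse) {
        ExecutorService pool = Executors.newSingleThreadExecutor(); // one reused worker thread
        try {
            pool.submit(() -> {
                USER.set("alice"); // stored in the worker thread's ThreadLocalMap
                try {
                    System.out.println("task 1 sees " + USER.get());
                } finally {
                    if (removeAfterUse) {
                        USER.remove(); // clear the entry before the thread goes back to the pool
                    }
                }
            }).get();
            return pool.submit(() -> USER.get()).get(); // same thread, so same map
        } catch (InterruptedException | ExecutionException e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();
        }
    }
}
```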

Thread pool

A thread pool is similar in spirit to the constant pool in the JVM: a certain number of threads are created in advance and kept in the pool. When the program needs a thread to run a task, the pool assigns one of its threads to execute it.

A thread pool avoids the cost of creating and destroying a thread every time a task runs, reducing the overhead of starting and tearing down threads.

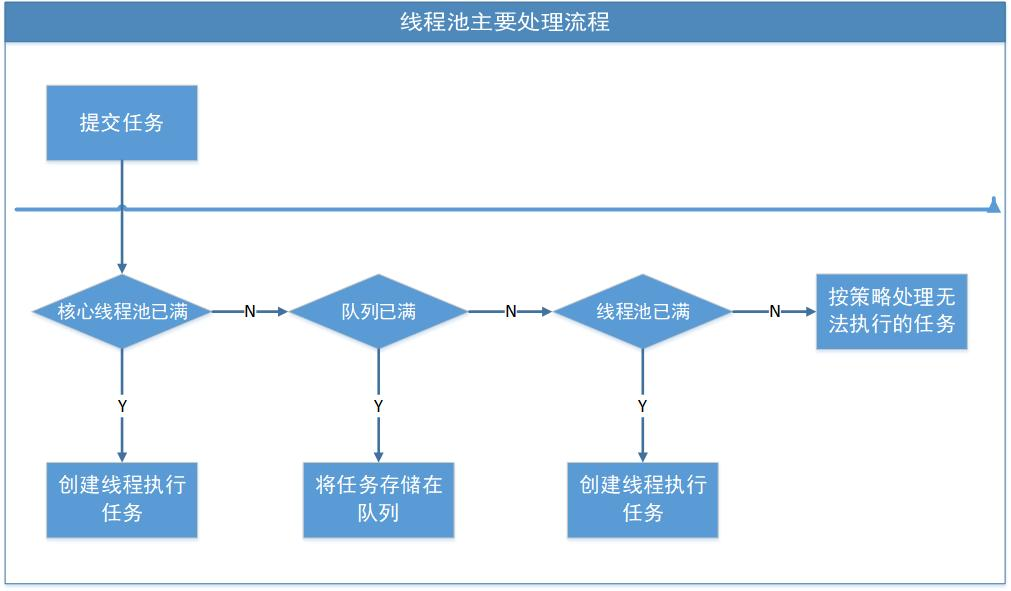

Main execution flow of a thread pool

- If fewer than corePoolSize threads are running, the pool creates a new worker thread to run the task (even if other core threads are idle). Otherwise, go to the next step

- If the work queue is not full, the new task is stored in the work queue. Otherwise, go to the next step

- If fewer than maximumPoolSize threads are running, the pool creates a new (non-core) thread to run the task. Otherwise, the task is handed to the saturation (rejection) policy
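The three steps can be made visible with a deliberately tiny pool. In this sketch (class PoolFlowDemo is hypothetical), corePoolSize is 1, maximumPoolSize is 2 and the queue holds 1 task; every task blocks on a latch so the pool stays busy, and the pool size and queue length are recorded after each submission.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo: corePoolSize=1, maximumPoolSize=2, queue capacity=1
class PoolFlowDemo {
    private static String snapshot(ThreadPoolExecutor pool) {
        return "threads=" + pool.getPoolSize() + ",queued=" + pool.getQueue().size() + ";";
    }

    static String run() {
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await(); // keep the worker busy until the demo releases it
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 2, 60, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(1),
                new ThreadPoolExecutor.AbortPolicy());
        StringBuilder log = new StringBuilder();
        try {
            pool.execute(blocker); // step 1: fewer than corePoolSize threads -> new core thread
            log.append(snapshot(pool));
            pool.execute(blocker); // step 2: core thread busy, queue has room -> task is queued
            log.append(snapshot(pool));
            pool.execute(blocker); // step 3: queue full, below maximumPoolSize -> new non-core thread
            log.append(snapshot(pool));
            try {
                pool.execute(blocker); // queue full and maximumPoolSize reached -> rejection policy
            } catch (RejectedExecutionException e) {
                log.append("rejected"); // AbortPolicy throws
            }
        } finally {
            release.countDown(); // let all blocked tasks finish
            pool.shutdown();
        }
        return log.toString();
    }
}
```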

Four common thread pools created by the Executors class

The utility class Executors provides static factory methods for several common thread pools:

- newSingleThreadExecutor: creates a pool with a single worker thread, so all tasks are executed serially, one after another

- newFixedThreadPool: creates a pool with a fixed number of threads. Each submitted task creates a new thread until the pool reaches that size; after that the size stays constant and extra tasks wait in the queue

- newCachedThreadPool: creates a cacheable pool. Threads that have been idle for 60 seconds are reclaimed, and new threads are added as the number of tasks grows. The pool does not cap the number of threads (its maximumPoolSize is Integer.MAX_VALUE), so its size is limited only by how many threads the operating system and JVM can create

- newScheduledThreadPool: creates a pool with the given number of core threads that supports running tasks after a delay or periodically
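As a usage sketch of one of these factories (the class FixedPoolDemo and the summing task are hypothetical), a fixed pool of 4 threads sums 1..100 in four chunks. invokeAll waits until every task has finished, and shutdown() lets the pool's threads exit afterwards.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class FixedPoolDemo {
    // Sums 1..100 by splitting it into 4 chunks processed by a fixed pool of 4 threads
    static long sum() {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            List<Callable<Long>> tasks = new ArrayList<>();
            for (int chunk = 0; chunk < 4; chunk++) {
                final int from = chunk * 25 + 1;
                final int to = from + 24;
                tasks.add(() -> {
                    long s = 0;
                    for (int i = from; i <= to; i++) {
                        s += i;
                    }
                    return s;
                });
            }
            long total = 0;
            for (Future<Long> f : pool.invokeAll(tasks)) { // invokeAll blocks until all tasks are done
                total += f.get();
            }
            return total;
        } catch (InterruptedException | ExecutionException e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown(); // stop accepting tasks; idle threads then exit
        }
    }
}
```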

Creating a thread pool with ThreadPoolExecutor

With ThreadPoolExecutor, you create the pool directly through its constructor and specify every parameter yourself:

```java
// Requires: import java.util.concurrent.*;
ThreadPoolExecutor pool = new ThreadPoolExecutor(
        4,                              // corePoolSize: number of core threads
        10,                             // maximumPoolSize: maximum number of threads (core + temporary threads)
        60,                             // keepAliveTime: how long a temporary (non-core) thread may stay idle before it is destroyed
        TimeUnit.SECONDS,               // unit: time unit of keepAliveTime
        new ArrayBlockingQueue<>(1000), // workQueue: bounded blocking queue holding tasks waiting to run
        new ThreadFactory() {           // threadFactory: creates the pool's threads (anonymous inner class)
            @Override
            public Thread newThread(Runnable r) {
                return new Thread(r);
            }
        },
        // handler: rejection policy, used when the queue is full and maximumPoolSize is reached
        // 1. new ThreadPoolExecutor.AbortPolicy()         - throws RejectedExecutionException (the default)
        // 2. new ThreadPoolExecutor.CallerRunsPolicy()    - runs the task in the thread that called execute()
        // 3. new ThreadPoolExecutor.DiscardOldestPolicy() - discards the oldest task in the queue, then retries
        // 4. new ThreadPoolExecutor.DiscardPolicy()       - silently discards the new task
        new ThreadPoolExecutor.DiscardPolicy());
```

Core parameters:

- corePoolSize: the number of core threads. Until this many threads exist, each new task creates a new thread; core threads are kept alive even when idle (unless allowCoreThreadTimeOut(true) is set)

- maximumPoolSize: the maximum number of worker threads allowed in the pool

- workQueue: the container that holds tasks waiting to run. When a new task arrives and corePoolSize threads are already running, the task is placed in the queue until a free thread takes it out

Thread pool usage example

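```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class Test {
    public static int COUNT;

    public static void main(String[] args) throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                10,
                30,
                60,
                TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(1000),
                new ThreadFactory() {
                    @Override
                    public Thread newThread(Runnable r) {
                        return new Thread(r);
                    }
                },
                new ThreadPoolExecutor.DiscardPolicy());

        Lock lock = new ReentrantLock();
        Runnable r = new Runnable() {
            @Override
            public void run() {
                for (int i = 0; i < 1000; i++) {
                    lock.lock();
                    try {
                        COUNT++;
                    } finally {
                        lock.unlock();
                    }
                }
            }
        };

        for (int i = 0; i < 20; i++) {              // submit the same task 20 times
            pool.execute(r);
        }

        pool.shutdown();                            // accept no new tasks; submitted tasks still run
        pool.awaitTermination(1, TimeUnit.MINUTES); // wait until all submitted tasks have finished
        System.out.println(COUNT);
    }
}
```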

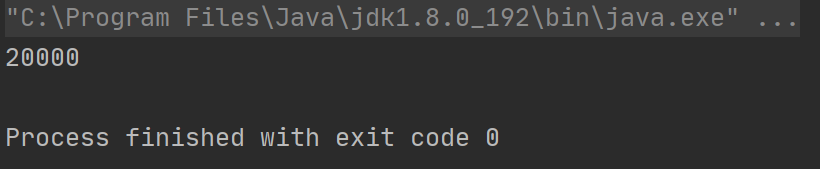

Execution result

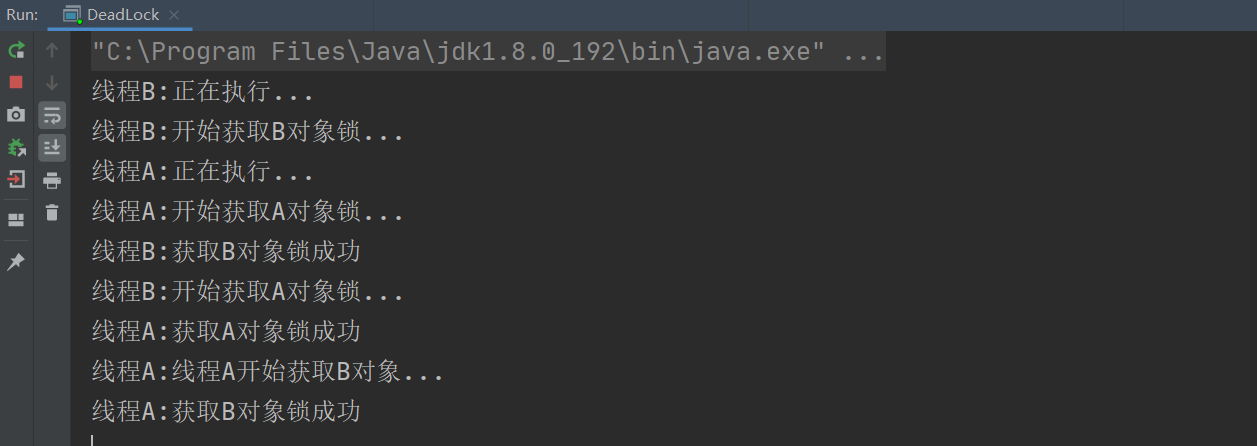

Deadlock

A thread deadlock occurs when two or more threads each hold a resource that another needs and none of them releases it, so they wait for each other forever. Threads (or processes) stuck in such a mutual wait are said to be deadlocked.

Deadlock example

```java
public class DeadLock {
    // Two dedicated lock objects. (Boxed Integer values such as 0 are cached and shared
    // across the JVM, so they are a poor choice for locks.)
    private static final Object A = new Object();
    private static final Object B = new Object();

    public static void main(String[] args) {
        deadLock();
    }

    private static void deadLock() {
        Thread threadA = new Thread(new Runnable() {
            @Override
            public void run() {
                System.out.println("thread A: executing...");
                System.out.println("thread A: trying to get lock A...");
                synchronized (A) {
                    System.out.println("thread A: got lock A");
                    try {
                        Thread.sleep(1000); // give thread B time to take lock B
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                    System.out.println("thread A: trying to get lock B...");
                    synchronized (B) { // blocks forever: B is held by thread B
                        System.out.println("thread A: got lock B");
                    }
                }
            }
        });

        Thread threadB = new Thread(new Runnable() {
            @Override
            public void run() {
                System.out.println("thread B: executing...");
                System.out.println("thread B: trying to get lock B...");
                synchronized (B) {
                    System.out.println("thread B: got lock B");
                    System.out.println("thread B: trying to get lock A...");
                    synchronized (A) { // blocks forever: A is held by thread A
                        System.out.println("thread B: got lock A");
                    }
                }
            }
        });

        threadA.start();
        threadB.start();
    }
}
```

Four necessary conditions for deadlock

- Mutual exclusion: a resource can be held by only one thread at a time, until that thread releases it

- Hold and wait: a thread that is blocked while requesting a resource keeps holding the resources it has already obtained

- No preemption: resources a thread has obtained cannot be forcibly taken away by other threads; they are released only when the thread is done with them

- Circular wait: the deadlocked threads form a cycle, each waiting for a resource held by the next

Methods to avoid deadlock

Breaking any one of the four conditions above prevents deadlock:

- Mutual exclusion: cannot be broken, because mutual exclusion is the very property a lock provides

- Hold and wait: request all needed resources at once, for example by encapsulating them in one class and locking only that object

- No preemption: when a thread holding some resources cannot obtain another one, it releases what it holds and requests again later

- Circular wait: acquire resources in a fixed order and release them in reverse order
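Applying the fixed-order rule to the earlier deadlock scenario: in this sketch (class LockOrderingDemo is hypothetical) both threads take A before B, keeping the same pause while holding A, so a cycle of waiting threads can no longer form and both finish.

```java
class LockOrderingDemo {
    private static final Object A = new Object();
    private static final Object B = new Object();

    // Every thread takes A first and then B, so no circular wait can form
    private static void work(String name, StringBuffer log) {
        synchronized (A) {
            try {
                Thread.sleep(100); // same kind of pause as the deadlock example; harmless here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            synchronized (B) {
                log.append(name);
            }
        }
    }

    // Returns true if both threads finished within the timeout
    static boolean run() {
        StringBuffer log = new StringBuffer(); // StringBuffer's methods are synchronized
        Thread t1 = new Thread(() -> work("A", log));
        Thread t2 = new Thread(() -> work("B", log));
        t1.setDaemon(true);
        t2.setDaemon(true);
        t1.start();
        t2.start();
        try {
            t1.join(2000);
            t2.join(2000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !t1.isAlive() && !t2.isAlive() && log.length() == 2;
    }
}
```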

Specific techniques:

- Use tryLock(long timeout, TimeUnit unit) (available on ReentrantLock and on the read and write locks of ReentrantReadWriteLock) so that a thread gives up after a timeout instead of waiting forever

- Prefer the classes in java.util.concurrent over hand-written locking

- Keep lock granularity small, and avoid using the same lock for several unrelated functions
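Here is a sketch of the timeout technique, which also breaks the no-preemption condition. The class TryLockBackoffDemo and its helper withBoth are hypothetical: two threads take two ReentrantLocks in opposite orders, which would risk deadlock with lock(). With timed tryLock, a thread that cannot get the second lock releases the first, pauses for a random moment (so the two threads do not retry in lockstep, which would be a livelock), and tries again.

```java
import java.util.concurrent.ThreadLocalRandom;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

class TryLockBackoffDemo {
    // Acquires both locks, or releases whatever it got and retries after a short random pause
    static void withBoth(ReentrantLock first, ReentrantLock second, Runnable action) throws InterruptedException {
        while (true) {
            if (first.tryLock(50, TimeUnit.MILLISECONDS)) {
                try {
                    if (second.tryLock(50, TimeUnit.MILLISECONDS)) {
                        try {
                            action.run();
                            return;
                        } finally {
                            second.unlock();
                        }
                    }
                } finally {
                    first.unlock(); // give up the first lock so the other thread can progress
                }
            }
            // random back-off reduces the chance of both threads retrying in lockstep
            Thread.sleep(ThreadLocalRandom.current().nextInt(1, 20));
        }
    }

    private static void attempt(ReentrantLock x, ReentrantLock y, AtomicInteger finished) {
        try {
            withBoth(x, y, finished::incrementAndGet);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Two threads take the locks in opposite orders; returns true if both finished
    static boolean run() {
        ReentrantLock a = new ReentrantLock();
        ReentrantLock b = new ReentrantLock();
        AtomicInteger finished = new AtomicInteger();
        Thread t1 = new Thread(() -> attempt(a, b, finished));
        Thread t2 = new Thread(() -> attempt(b, a, finished));
        t1.start();
        t2.start();
        try {
            t1.join(5000);
            t2.join(5000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return finished.get() == 2;
    }
}
```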

The difference between deadlock and livelock

Deadlock: two or more threads each hold a resource the other needs and none releases it, so all of them block forever.

Livelock: threads are not blocked, but because some condition is never satisfied they keep retrying (for example, repeatedly acquiring and releasing locks) without making progress.

Difference: in a livelock the threads' states keep changing, while in a deadlock they stay in a waiting state. A livelock may resolve itself, but a deadlock cannot.

That concludes this summary of thread safety in Java multithreading. I will keep supplementing and revising it as I learn more. I would be honored if it helps other readers, and corrections are welcome.