Some applications need sound detection, that is, the ability to recognize sounds such as knocking, a doorbell, or a car horn. For small and medium-sized developers, building this capability from scratch costs a lot of time and effort. The sound detection service SDK in Huawei machine learning service provides it on the device side with a simple integration.

Sound detection capability of Huawei machine learning service

The sound detection service detects sound events in online mode, that is, from real-time recording. Developers can trigger follow-up actions based on the detected events. 13 types of sound event are currently supported: laughter, baby or child crying, snoring, sneezing, shouting, cat meowing, dog barking, running water (including tap water, streams and waves), car horns, doorbells, knocking, fire alarm sounds (including fire alarms and smoke alarms), and siren sounds (including fire truck, ambulance, police car and air defense sirens).

Integration preparation

Development environment configuration

1. Create an application on Huawei Developer Alliance:

For details of this step, see: https://developer.huawei.com/consumer/cn/doc/development/AppGallery-connect-Guides/agc-get-started#createproject?ha__source=hms1

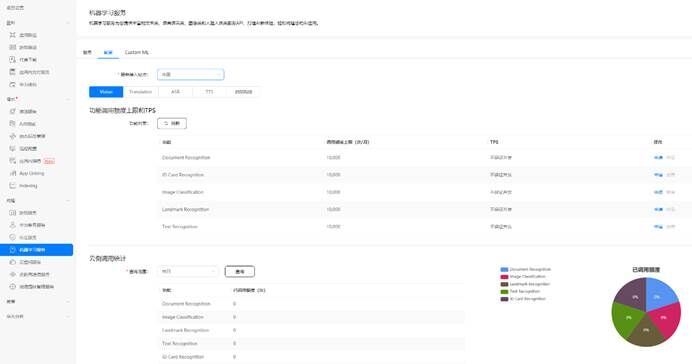

2. Enable the machine learning service:

For the specific steps, see: https://developer.huawei.com/consumer/cn/doc/development/HMSCore-Guides-V5/enable-service-0000001050038078-V5?ha__source=hms1

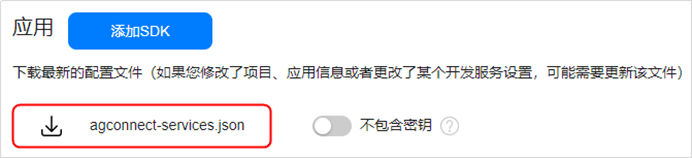

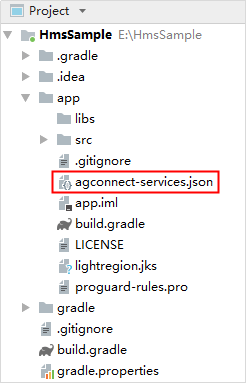

3. After the application is created, the agconnect-services.json file is generated automatically. Copy it manually to the application-level root directory.

4. Configure the Maven repository address of the HMS Core SDK.

For the Maven repository configuration, see: https://developer.huawei.com/consumer/cn/doc/development/HMSCore-Guides/config-maven-0000001050040031?ha__source=hms1

5. Integrate the sound detection service SDK

- Integrating the Full SDK is recommended. Configure the corresponding SDK in the build.gradle file:

// Import the sound detection packages

implementation 'com.huawei.hms:ml-speech-semantics-sounddect-sdk:2.1.0.300'

implementation 'com.huawei.hms:ml-speech-semantics-sounddect-model:2.1.0.300'

- Declare the AGC plug-in in one of the following two ways, depending on your project:

apply plugin: 'com.android.application'

apply plugin: 'com.huawei.agconnect'

or

plugins {

id 'com.android.application'

id 'com.huawei.agconnect'

}

- Automatically update the machine learning model

Add the following statement to the AndroidManifest.xml file. After a user installs your application from Huawei AppGallery, the machine learning model is automatically updated to the device:

<meta-data

android:name="com.huawei.hms.ml.DEPENDENCY"

android:value="sounddect" />

For more detailed steps, see: https://developer.huawei.com/consumer/cn/doc/development/HMSCore-Guides/sound-detection-sdk-0000001055602754?ha__source=hms1

Application development coding stage

1. Obtain the microphone permission. Without it, error 12203 is reported.

Declare the static permission (required)

<uses-permission android:name="android.permission.RECORD_AUDIO" />

Request the permission dynamically (required)

ActivityCompat.requestPermissions(

this, new String[]{Manifest.permission.RECORD_AUDIO}, 1);

2. Create the MLSoundDector object

private static final String TAG = "MLSoundDectorDemo";

// Sound detection object

private MLSoundDector mlSoundDector;

//Create the MLSoundDector object and set the callback method

private void initMLSoundDector(){

mlSoundDector = MLSoundDector.createSoundDector();

mlSoundDector.setSoundDectListener(listener);

}3. Voice recognition result callback, which is used to obtain the detection result and send the callback to the voice recognition instance.

//Create a voice recognition result callback to obtain the detection result and pass the callback into the voice recognition instance.

private MLSoundDectListener listener = new MLSoundDectListener() {

@Override

public void onSoundSuccessResult(Bundle result) {

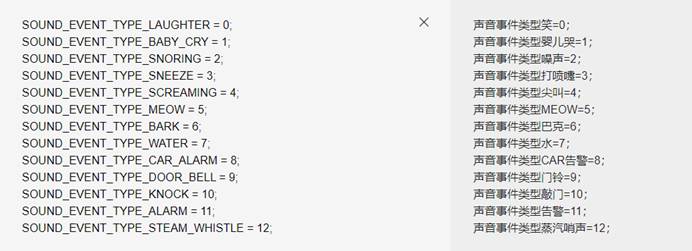

//Identify the successful processing logic, and the recognition results are: 0-12 (corresponding to the 13 sound types named beginning with SOUND_EVENT_TYPE defined in mlsounddecistants.java).

int soundType = result.getInt(MLSoundDector.RESULTS_RECOGNIZED);

Log.d(TAG,"Voice recognition successful:"+soundType);

}

@Override

public void onSoundFailResult(int errCode) {

//Recognition failed. There may be exceptions such as not granting microphone permission (Manifest.permission.RECORD_AUDIO).

Log.d(TAG,"Voice recognition failed:"+errCode);

}

};In this code, only the int type of the voice recognition result is printed. In the actual coding, the voice recognition result of int type can be converted into a type that can be recognized by the user.
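As a rough illustration of that conversion, the int result could be looked up in a table of labels. The sketch below is plain Java so it can run outside Android. The class name `SoundTypeLabels` and the method `labelFor` are hypothetical and not part of the SDK, and the label order assumes the 0-12 order of the 13 types listed in this article (with 10 being a knock at the door). In an Android app the labels would more likely come from a string-array resource.

```java
final class SoundTypeLabels {
    // Labels indexed by the int detection result (0-12).
    // Assumption: the order follows the 13 types as listed in this article.
    private static final String[] LABELS = {
        "Laughter", "Baby or child crying", "Snoring", "Sneezing", "Shouting",
        "Cat meowing", "Dog barking", "Running water", "Car horn", "Doorbell",
        "Knock at the door", "Fire alarm", "Siren"
    };

    private SoundTypeLabels() {}

    /** Returns a readable label, or a fallback text for values outside 0-12. */
    static String labelFor(int soundType) {
        if (soundType < 0 || soundType >= LABELS.length) {
            return "Unknown sound type (" + soundType + ")";
        }
        return LABELS[soundType];
    }

    public static void main(String[] args) {
        // Prints the label for the knock example used in this article.
        System.out.println(labelFor(10));
    }
}
```

Inside `onSoundSuccessResult`, the log line could then print `SoundTypeLabels.labelFor(soundType)` instead of the raw int. The bounds check keeps an unexpected value from throwing an exception inside the callback.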

Definition of the sound detection types:

<string-array name="sound_dect_voice_type">

<item>Laughter</item>

<item>Baby or child crying</item>

<item>Snoring</item>

<item>Sneezing</item>

<item>Shouting</item>

<item>Cat meowing</item>

<item>Dog barking</item>

<item>Sound of running water</item>

<item>Car horn</item>

<item>Doorbell</item>

<item>Knock at the door</item>

<item>Fire alarm sound</item>

<item>Siren</item>

</string-array>

4. Start and stop sound detection

@Override

public void onClick(View v) {

switch (v.getId()){

case R.id.btn_start_detect:

if (mlSoundDector != null){

boolean isStarted = mlSoundDector.start(this); // "this" is the Context

// isStarted is true if detection started successfully, and false if it failed to start (possibly because the microphone is occupied by the system or another third-party application).

if (isStarted){

Toast.makeText(this, "Sound detection started successfully", Toast.LENGTH_SHORT).show();

}

}

break;

case R.id.btn_stop_detect:

if (mlSoundDector != null){

mlSoundDector.stop();

}

break;

}

}

5. When the page is closed, call the destroy() method to release resources

@Override

protected void onDestroy() {

super.onDestroy();

if (mlSoundDector != null){

mlSoundDector.destroy();

}

}

Run test

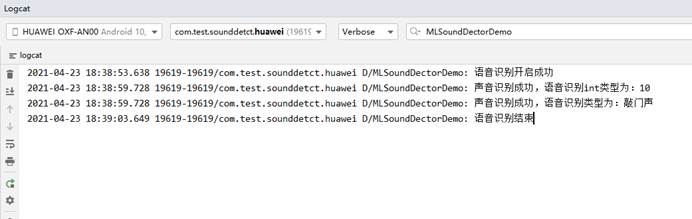

1. Taking a knock at the door as an example, the expected sound detection type in the output is 10.

2. Tap the button that starts sound detection and simulate a knock at the door. The following log appears in the Android Studio console, indicating that the integration succeeded.
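Once the expected type shows up in the log, the result can drive the follow-up actions mentioned in the overview. One possible arrangement, sketched in plain Java, is a small table that maps each int sound type to an action. `SoundEventDispatcher`, `register` and `dispatch` are hypothetical names introduced for illustration, not SDK APIs. The only value taken from this article is 10 for a knock at the door.

```java
import java.util.HashMap;
import java.util.Map;

final class SoundEventDispatcher {
    // Value this article gives for a knock at the door.
    static final int TYPE_KNOCK = 10;

    // Maps an int sound type to the action to run when it is detected.
    private final Map<Integer, Runnable> actions = new HashMap<>();

    /** Registers (or replaces) the action for the given sound type. */
    void register(int soundType, Runnable action) {
        actions.put(soundType, action);
    }

    /** Runs the registered action; returns false if none is registered. */
    boolean dispatch(int soundType) {
        Runnable action = actions.get(soundType);
        if (action == null) {
            return false;
        }
        action.run();
        return true;
    }

    public static void main(String[] args) {
        SoundEventDispatcher dispatcher = new SoundEventDispatcher();
        dispatcher.register(TYPE_KNOCK, () -> System.out.println("Someone is at the door"));
        dispatcher.dispatch(TYPE_KNOCK); // runs the knock action
        dispatcher.dispatch(3);          // no action registered, nothing happens
    }
}
```

In the app, `dispatch(soundType)` would be called from `onSoundSuccessResult`. This article does not state which thread the SDK uses to invoke the callback, so actions that touch the UI may need to be posted to the main thread.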

Other

1. The sound detection service is only a small part of Huawei machine learning service, which includes six modules: text, speech and language, image, face, natural language processing, and custom model.

2. This article only covers the sound detection service in the speech and language module.

3. Readers interested in other modules of Huawei machine learning service can consult the relevant integration documents provided by Huawei.

>>Visit the official website of Huawei machine learning service for more information

>>Obtain Huawei machine learning service development guidance document

>>Huawei HMS Core official forum

>>Huawei machine learning service open source repositories: GitHub, Gitee
