How to speed up the calculation when the model is large (I)

python common parallel Libraries

DASK

dask is a parallel computing library of python. It can dynamically schedule resources to provide parallel computing. Parallel data integration provides interfaces to numpy, pandas or python iterators. The Task Graph is very clear, so that developers and users can freely build complex algorithms, And deal with the confusion that the map / filter / group by paradigm is difficult to deal with in most data engineering frameworks.

Picture address: Official manual

dask example

Let's test the time of data calculation in numpy and dask.

import time import numpy as np import matplotlib.pyplot as plt import dask import dask.array as da data = np.random.normal(loc =10.0 , scale= 0.1,size = (20000,20000))

Generate a wave of array data with fixed expected variance, and use numpy to calculate the mean value of the dimension vector according to a certain dimension on the data.

def numpy_compute():

print(data.mean(axis=0))

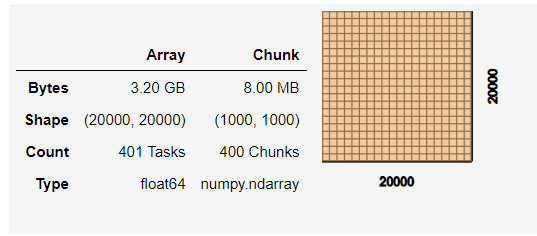

At the same time, dask is used to block the array. Here, each block is 1000 * 1000.

data_p=da.from_array(data,chunks=(1000,1000))

Calculate the vector mean value of a dimension in the dask array.

Calculate the vector mean value of a dimension in the dask array.

def dask_compute():

print(data_p.mean(axis=0).compute())

Time to compare the two:

def run_time(fun):

start_time = time.time()

fun()

end_time = time.time()

print("The running time of the program is:{} second".format(str(round((end_time - start_time), 1))))

return end_time - start_time

@run_time

def task():

numpy_compute()

@run_time

def task():

dask_compute()

The running time of courseware dask is faster.

The functions of dask are far from known, and there are many operations that can be parallelized.

multiprocessing

multiprocessing is a multi Process program written by python. It supports sub processes, communication and sharing data, and performs different forms of synchronization. It provides Process, Queue, Pipe, Lock and other components.

# parameter

multiprocessing.Process(group=None, target=None, name=None, args=(), kwargs={})

- group: Grouping is rarely used in practice

- target: Represents the calling object. You can pass in the name of the method

- name: Alias, which is equivalent to giving the process a name

- args: Represents the location parameter tuple of the called object, such as target It's a function a,He has two parameters m,n,that args Just pass in(m, n)that will do

- kwargs: Represents the dictionary of the calling object

Simple use

import math

import datetime

import multiprocessing as mp

def train_on_parameter(name, param):

result = 0

for num in param:

result += math.sqrt(num * math.tanh(num) / math.log2(num) / math.log10(num))

return {name: result}

if __name__ == '__main__':

start_t = datetime.datetime.now()

num_cores = int(mp.cpu_count())

print("The local computer has: " + str(num_cores) + " core")

pool = mp.Pool(num_cores)

param_dict = {'task1': list(range(10, 30000000)),

'task2': list(range(30000000, 60000000)),

'task3': list(range(60000000, 90000000)),

'task4': list(range(90000000, 120000000)),

'task5': list(range(120000000, 150000000)),

'task6': list(range(150000000, 180000000)),

'task7': list(range(180000000, 210000000)),

'task8': list(range(210000000, 240000000))}

results = [pool.apply_async(train_on_parameter, args=(name, param)) for name, param in param_dict.items()]

results = [p.get() for p in results]

end_t = datetime.datetime.now()

elapsed_sec = (end_t - start_t).total_seconds()

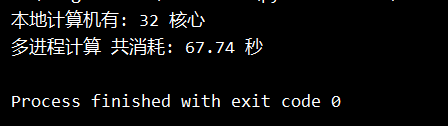

print("Multi process computing co consumption: " + "{:.2f}".format(elapsed_sec) + " second")

Operation results:

Thread pool

import random

from multiprocessing.pool import Pool

from time import sleep, time

import os

def run(name):

print("%s Child process start, process ID: %d" % (name, os.getpid()))

start = time()

sleep(random.choice([1, 2, 3, 4]))

end = time()

print("%s Child process end, process ID: %d. Time consuming 0.2%f" % (name, os.getpid(), end-start))

if __name__ == "__main__":

print("Parent process start")

# Create multiple processes, indicating the number of processes that can be executed at the same time. The default size is the number of cores of the CPU

p = Pool(8)

for i in range(10):

# Create processes and put them into the process pool for unified management

p.apply_async(run, args=(i,))

# If we use a process pool, we must close() before calling join(), and we can't add new processes to the process pool after close()

p.close()

# When the process pool object calls join, it will wait for all the child processes in the process to finish before ending the parent process

p.join()

print("The parent process ended.")

threading

Thread object data attributes include name, ident, daemon, etc. The main objects include start (), run() and join (). Start means to start executing the thread, run() defines the thread function, which is usually rewritten by the application developer in the subclass, and join (timeout=None) means to hang until the started thread terminates. Unless timeout seconds are given, it will be blocked.

import threading

import time

def read():

for x in range(5):

print('stay%s,Listening to music' % time.ctime())

time.sleep(1.5)

def write():

for x in range(5):

print('stay%s,I'm watching TV' % time.ctime())

time.sleep(1.5)

def main():

music_threads = [] # Used to store the list of threads executing the read function

TV_threads = [] # Used to store the list of threads executing the write function

for i in range(1,2): # Create a thread for read() and add it to read()_ Threads list

t = threading.Thread(target=read) # If the executed function needs to pass parameters, threading.Thread(target = function name, args = (parameters, separated by commas))

music_threads.append(t)

for i in range(1,2): # Create a thread to execute write() and add it to write_threads list

t = threading.Thread(target=write) # If the executed function needs to pass parameters, threading.Thread(target = function name, args = (parameters, separated by commas))

TV_threads.append(t)

for i in range(0,1): # Start and store in read_threads and write_threads in the threads list

music_threads[i].start()

TV_threads[i].start()

if __name__ == '__main__':

main()

This example refers to the blog

In order to better encapsulate threads, you can use the Thread class under the threading module to inherit this class, and then implement the run method. The Thread will automatically run the code in the run method.

import threading

import time

count = 0

class MyThread(threading.Thread):

def __init__(self , threadName):

super(MyThread,self).__init__(name=threadName)

"""Once this MyThread Class is called, and the following will run automatically run Method,

Because of this run To which the method belongs MyThread Class inherits threading.Thread"""

def run(self):

global count

for i in range(100):

count += 1

time.sleep(0.3)

print(self.getName() , count)

for i in range(2):

MyThread("MyThreadName:" + str(i)).start()